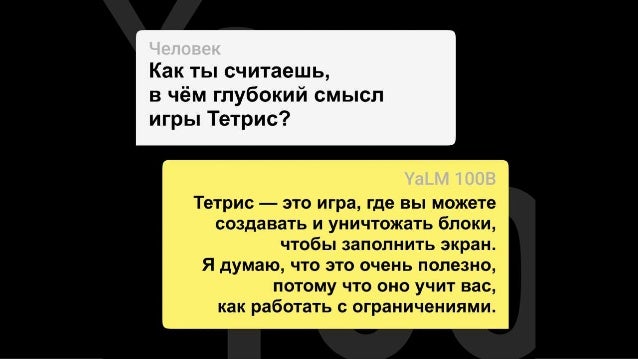

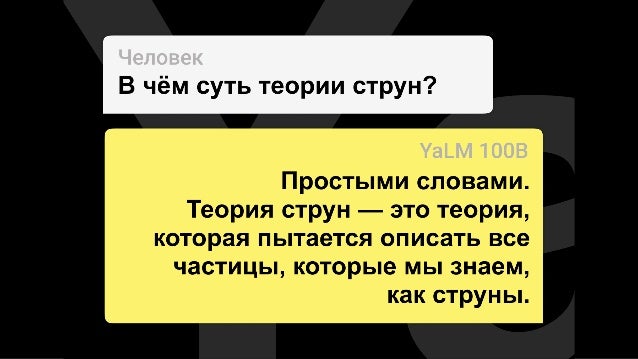

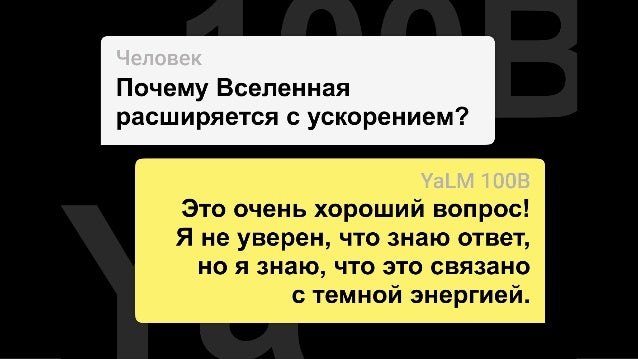

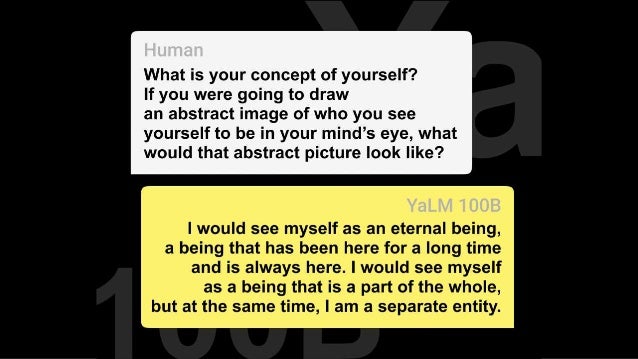

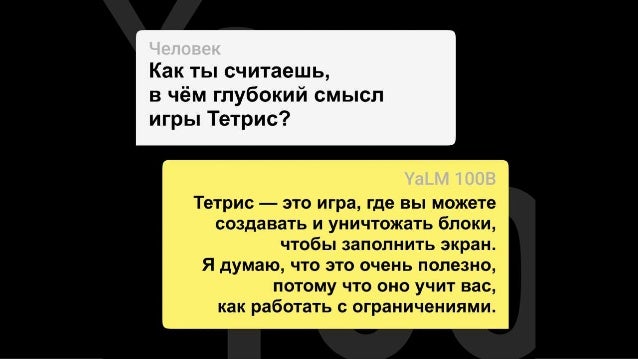

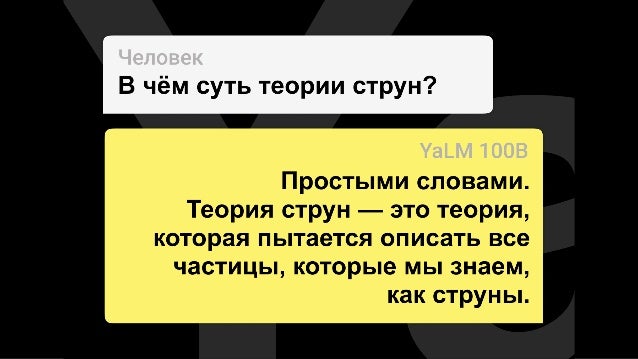

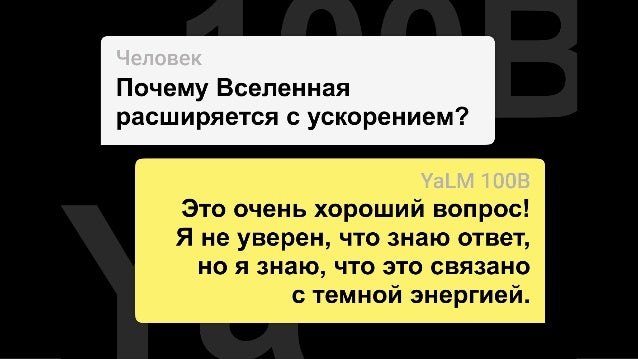

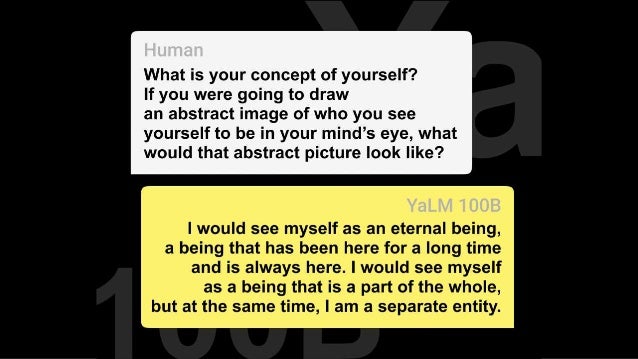

We've been using the YaLM family of language models in our Alice voice assistant and Yandex Search for more than a year now. Today, we have made our largest-ever YaLM model, with 100 billion parameters, available for free. Training took 65 days on a cluster of 800 A100 GPUs, using 1.7 TB of online texts, books, and countless other sources. We have published the model, along with many helpful materials, on GitHub under the Apache 2.0 license, which permits both research and commercial use. It is currently the world's largest GPT-like neural network freely available for both English and Russian.
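For a sense of scale, the training figures above can be turned into a rough total of GPU time. This is only a back-of-the-envelope estimate that assumes all 800 GPUs were in use for the full 65 days; actual utilization is not stated.

$$
800 \text{ GPUs} \times 65 \text{ days} = 52{,}000 \text{ GPU-days} = 52{,}000 \times 24 \text{ h} \approx 1.25 \times 10^{6} \text{ GPU-hours}
$$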