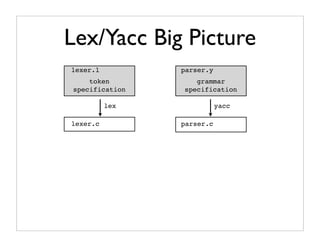

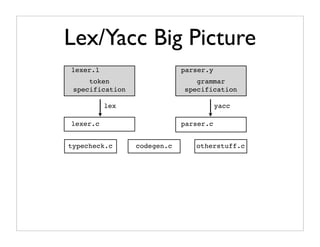

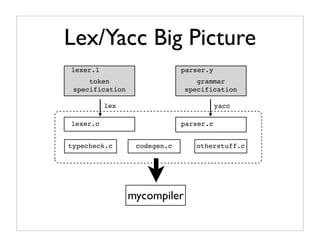

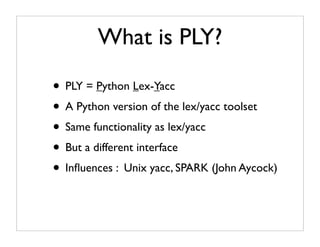

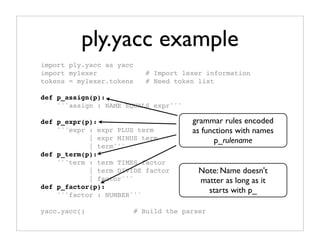

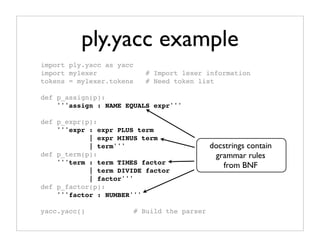

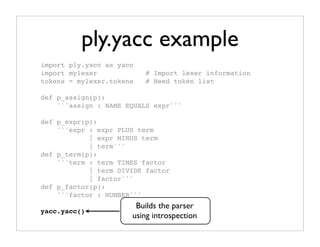

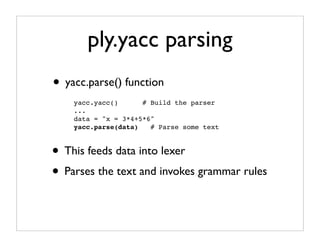

This document introduces PLY, a Python implementation of the lex and yacc parsing tools. PLY lets you write parsers and compilers in Python through two modules, ply.lex for lexical analysis and ply.yacc for parsing, which work much like the traditional lex and yacc tools. The document shows how to define tokens and grammar rules with PLY and discusses why PLY is useful for building parsers and compilers in Python.

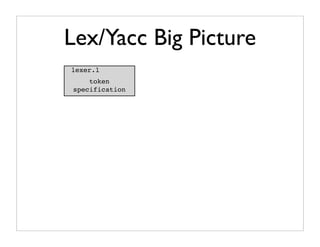

![Lex/Yacc Big Picture

lexer.l

token

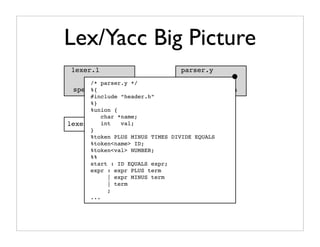

/* lexer.l */ grammar

specification

%{ specification

#include “header.h”

int lineno = 1;

%}

%%

[ t]* ; /* Ignore whitespace */

n { lineno++; }

[0-9]+ { yylval.val = atoi(yytext);

return NUMBER; }

[a-zA-Z_][a-zA-Z0-9_]* { yylval.name = strdup(yytext);

return ID; }

+ { return PLUS; }

- { return MINUS; }

* { return TIMES; }

/ { return DIVIDE; }

= { return EQUALS; }

%%](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-11-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-22-320.jpg)

![ply.lex example

import ply.lex as lex
# The tokens list specifies all of the possible tokens
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-23-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
# Each token has a matching declaration of the form t_TOKNAME
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-24-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
# These names must match the names in the tokens list
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-25-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
# Tokens are defined by regular expressions
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-26-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
# For simple tokens, strings are used
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-27-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
# Functions are used when special action code must execute
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-28-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    # The docstring holds the regular expression
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-29-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
# Specifies ignored characters between tokens (usually whitespace)
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-30-320.jpg)

![ply.lex example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
# Builds the lexer by creating a master regular expression
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-31-320.jpg)
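The idea of a master regular expression can be sketched with the standard `re` module alone. This is only an illustration of the general technique, not PLY's actual implementation: the `RULES` table, the `to_int` action, and the `tokenize` helper are hypothetical names, and the way the patterns are ordered and joined is an assumption.

```python
import re

def to_int(text):
    """Action for NUMBER: convert the matched text to an integer."""
    return int(text)

# (token name, pattern, optional action); names mirror the slide.
RULES = [
    ("NUMBER", r"\d+", to_int),
    ("NAME", r"[a-zA-Z_][a-zA-Z0-9_]*", None),
    ("PLUS", r"\+", None),
    ("MINUS", r"-", None),
    ("TIMES", r"\*", None),
    ("DIVIDE", r"/", None),
    ("EQUALS", r"=", None),
]
IGNORE = " \t"

# One alternation of named groups; match.lastgroup reports which rule fired.
MASTER = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat, _ in RULES))
ACTIONS = {name: action for name, _, action in RULES}

def tokenize(text):
    """Return a list of (type, value) pairs for the input text."""
    pos = 0
    tokens = []
    while pos < len(text):
        if text[pos] in IGNORE:        # skip ignored characters
            pos += 1
            continue
        m = MASTER.match(text, pos)
        if m is None:
            raise SyntaxError(f"illegal character {text[pos]!r} at {pos}")
        kind, value = m.lastgroup, m.group()
        if ACTIONS[kind] is not None:  # run the rule's action, if any
            value = ACTIONS[kind](value)
        tokens.append((kind, value))
        pos = m.end()
    return tokens

print(tokenize("x = 3"))  # [('NAME', 'x'), ('EQUALS', '='), ('NUMBER', 3)]
```

A single compiled pattern means each token costs one `match` call, regardless of how many rules are defined.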

![ply.lex example

# Introspection is used to examine the contents of the calling module
import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-32-320.jpg)

![ply.lex example

# Introspection is used to examine the contents of the calling module
import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'
def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer

__dict__ = {
    'tokens' : [ 'NAME' ...],
    't_ignore' : ' \t',
    't_PLUS' : '\\+',
    ...
    't_NUMBER' : <function ...
}](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-33-320.jpg)
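A rough sketch of what examining a module dictionary could look like is given below. The `collect_rules` helper and the hand-built `module_dict` are hypothetical stand-ins for the calling module's `__dict__`; how PLY itself locates and inspects the caller is not shown on the slides and is not reproduced here.

```python
import types

def collect_rules(namespace):
    """Split t_-prefixed entries into string rules and function rules."""
    string_rules = {}
    function_rules = {}
    for name, obj in namespace.items():
        if not name.startswith("t_") or name == "t_ignore":
            continue
        token = name[2:]                          # t_PLUS -> PLUS
        if isinstance(obj, str):
            string_rules[token] = obj             # the string is the pattern
        elif isinstance(obj, types.FunctionType):
            function_rules[token] = obj.__doc__   # the docstring is the pattern
    return string_rules, function_rules

def t_NUMBER(t):
    r'\d+'
    t.value = int(t.value)
    return t

# Hypothetical stand-in for the calling module's __dict__.
module_dict = {
    "tokens": ["NAME", "NUMBER", "PLUS"],
    "t_ignore": " \t",
    "t_PLUS": r"\+",
    "t_NAME": r"[a-zA-Z_][a-zA-Z0-9_]*",
    "t_NUMBER": t_NUMBER,
}

strings, functions = collect_rules(module_dict)
print(functions)  # {'NUMBER': '\\d+'}
```

Because the rules are ordinary module-level names, no separate specification file or code generation step is needed to find them.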

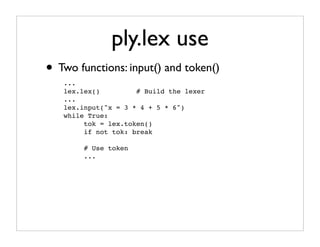

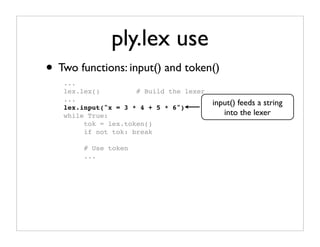

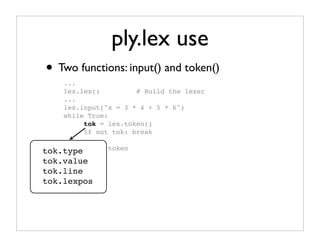

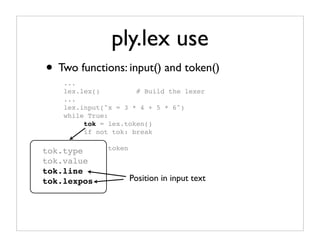

![ply.lex use

• Two functions: input() and token()

...
lex.lex() # Build the lexer
...
lex.input("x = 3 * 4 + 5 * 6")
while True:
    tok = lex.token()
    if not tok: break
    # Use token
    ...

Token attributes:
tok.type (taken from the rule name, as in t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*')
tok.value
tok.lineno
tok.lexpos](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-38-320.jpg)

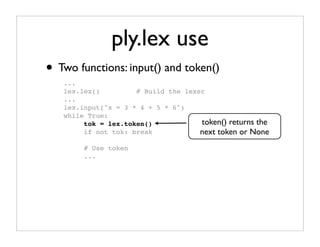

![ply.lex use

• Two functions: input() and token()

...
lex.lex() # Build the lexer
...
lex.input("x = 3 * 4 + 5 * 6")
while True:
    tok = lex.token()
    if not tok: break
    # Use token
    ...

Token attributes:
tok.type (taken from the rule name, as in t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*')
tok.value (the matching text)
tok.lineno
tok.lexpos](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-39-320.jpg)

![Rule Functions

• During reduction, rule functions are invoked

def p_factor(p):
    'factor : NUMBER'

• Parameter p contains grammar symbol values

def p_factor(p):
    'factor : NUMBER'

p[0] corresponds to factor, p[1] to NUMBER](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-52-320.jpg)
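To make the `p[0]` / `p[1]` convention concrete, here is a small simulation of a single reduction. It is a sketch under assumptions: `reduce_with` is a hypothetical helper, and a plain list stands in for the object that ply.yacc actually passes to rule functions.

```python
def p_factor(p):
    'factor : NUMBER'
    p[0] = p[1]

def p_expr_plus(p):
    'expr : expr PLUS term'
    p[0] = p[1] + p[3]

def reduce_with(rule, rhs_values):
    """Simulate one reduction and return (left-hand-side name, result)."""
    name, _, symbols = rule.__doc__.partition(":")
    expected = symbols.split()
    if len(expected) != len(rhs_values):
        raise ValueError(f"rule needs {len(expected)} values")
    p = [None] + list(rhs_values)  # p[0] is the result slot, p[1:] the symbol values
    rule(p)
    return name.strip(), p[0]

print(reduce_with(p_factor, [42]))              # ('factor', 42)
print(reduce_with(p_expr_plus, [12, "+", 30]))  # ('expr', 42)
```

The value a rule stores in `p[0]` becomes the value seen by whichever later rule has that nonterminal on its right-hand side.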

![Example: Calculator

def p_assign(p):
    '''assign : NAME EQUALS expr'''
    vars[p[1]] = p[3]

def p_expr_plus(p):
    '''expr : expr PLUS term'''
    p[0] = p[1] + p[3]

def p_term_mul(p):
    '''term : term TIMES factor'''
    p[0] = p[1] * p[3]

def p_term_factor(p):
    '''term : factor'''
    p[0] = p[1]

def p_factor(p):
    '''factor : NUMBER'''
    p[0] = p[1]](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-54-320.jpg)

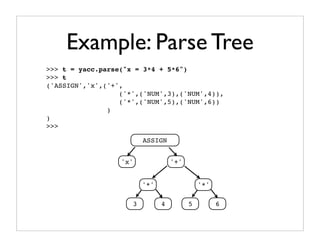

![Example: Parse Tree

def p_assign(p):
    '''assign : NAME EQUALS expr'''
    p[0] = ('ASSIGN',p[1],p[3])

def p_expr_plus(p):
    '''expr : expr PLUS term'''
    p[0] = ('+',p[1],p[3])

def p_term_mul(p):
    '''term : term TIMES factor'''
    p[0] = ('*',p[1],p[3])

def p_term_factor(p):
    '''term : factor'''
    p[0] = p[1]

def p_factor(p):
    '''factor : NUMBER'''
    p[0] = ('NUM',p[1])](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-55-320.jpg)
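Once rules build tuples instead of computing values, a separate pass can walk the tree. The sketch below assumes the tuple shapes from the parse-tree rules (`'ASSIGN'`, `'+'`, `'*'`, `'NUM'`); the `evaluate` function and the hand-written tree for `x = 3 * 4 + 5 * 6` are illustrative and not part of the slides.

```python
def evaluate(node, variables):
    """Recursively evaluate a tuple-based parse tree."""
    kind = node[0]
    if kind == "NUM":
        return node[1]
    if kind == "+":
        return evaluate(node[1], variables) + evaluate(node[2], variables)
    if kind == "*":
        return evaluate(node[1], variables) * evaluate(node[2], variables)
    if kind == "ASSIGN":
        variables[node[1]] = evaluate(node[2], variables)
        return variables[node[1]]
    raise ValueError(f"unknown node type {kind!r}")

# The tree those rules would plausibly build for: x = 3 * 4 + 5 * 6
tree = ("ASSIGN", "x",
        ("+",
         ("*", ("NUM", 3), ("NUM", 4)),
         ("*", ("NUM", 5), ("NUM", 6))))

variables = {}
evaluate(tree, variables)
print(variables)  # {'x': 42}
```

Separating tree construction from evaluation is what lets the same parser feed an interpreter, a type checker, or a code generator.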

![Debugging Output

Grammar

Rule 1 statement -> NAME = expression
Rule 2 statement -> expression
Rule 3 expression -> expression + expression
Rule 4 expression -> expression - expression
Rule 5 expression -> expression * expression
Rule 6 expression -> expression / expression
Rule 7 expression -> NUMBER

Terminals, with rules where they appear

* : 5
+ : 3
- : 4
/ : 6
= : 1
NAME : 1
NUMBER : 7
error :

Nonterminals, with rules where they appear

expression : 1 2 3 3 4 4 5 5 6 6
statement : 0

Parsing method: LALR

state 0

(0) S' -> . statement
(1) statement -> . NAME = expression
(2) statement -> . expression
(3) expression -> . expression + expression
(4) expression -> . expression - expression
(5) expression -> . expression * expression
(6) expression -> . expression / expression
(7) expression -> . NUMBER

NAME shift and go to state 1
NUMBER shift and go to state 2
expression shift and go to state 4
statement shift and go to state 3

state 1

(1) statement -> NAME . = expression

= shift and go to state 5

state 10

(1) statement -> NAME = expression .
(3) expression -> expression . + expression
(4) expression -> expression . - expression
(5) expression -> expression . * expression
(6) expression -> expression . / expression

$end reduce using rule 1 (statement -> NAME = expression .)
+ shift and go to state 7
- shift and go to state 6
* shift and go to state 8
/ shift and go to state 9

state 11

(4) expression -> expression - expression .
(3) expression -> expression . + expression
(4) expression -> expression . - expression
(5) expression -> expression . * expression
(6) expression -> expression . / expression

! shift/reduce conflict for + resolved as shift.
! shift/reduce conflict for - resolved as shift.
! shift/reduce conflict for * resolved as shift.
! shift/reduce conflict for / resolved as shift.
$end reduce using rule 4 (expression -> expression - expression .)
+ shift and go to state 7
- shift and go to state 6
* shift and go to state 8
/ shift and go to state 9

! + [ reduce using rule 4 (expression -> expression - expression .) ]
! - [ reduce using rule 4 (expression -> expression - expression .) ]
! * [ reduce using rule 4 (expression -> expression - expression .) ]
! / [ reduce using rule 4 (expression -> expression - expression .) ]](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-60-320.jpg)

![Debugging Output

...
state 11

(4) expression -> expression - expression .
(3) expression -> expression . + expression
(4) expression -> expression . - expression
(5) expression -> expression . * expression
(6) expression -> expression . / expression

! shift/reduce conflict for + resolved as shift.
! shift/reduce conflict for - resolved as shift.
! shift/reduce conflict for * resolved as shift.
! shift/reduce conflict for / resolved as shift.
$end reduce using rule 4 (expression -> expression - expression .)
+ shift and go to state 7
- shift and go to state 6
* shift and go to state 8
/ shift and go to state 9

! + [ reduce using rule 4 (expression -> expression - expression .) ]
! - [ reduce using rule 4 (expression -> expression - expression .) ]
! * [ reduce using rule 4 (expression -> expression - expression .) ]
! / [ reduce using rule 4 (expression -> expression - expression .) ]
...](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-61-320.jpg)

![Error Example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'

t_MINUS = r'-'
t_POWER = r'\^'

def t_NUMBER():
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer

Error reported for the second t_MINUS:
example.py:12: Rule t_MINUS redefined. Previously defined on line 6](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-63-320.jpg)

![Error Example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'

t_MINUS = r'-'
t_POWER = r'\^'

def t_NUMBER():
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer

Error reported for t_POWER:
lex: Rule 't_POWER' defined for an unspecified token POWER](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-64-320.jpg)

![Error Example

import ply.lex as lex
tokens = [ 'NAME','NUMBER','PLUS','MINUS','TIMES',
           'DIVIDE','EQUALS' ]
t_ignore = ' \t'
t_PLUS = r'\+'
t_MINUS = r'-'
t_TIMES = r'\*'
t_DIVIDE = r'/'
t_EQUALS = r'='
t_NAME = r'[a-zA-Z_][a-zA-Z0-9_]*'

t_MINUS = r'-'
t_POWER = r'\^'

def t_NUMBER():
    r'\d+'
    t.value = int(t.value)
    return t
lex.lex() # Build the lexer

Error reported for t_NUMBER:
example.py:15: Rule 't_NUMBER' requires an argument.](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-65-320.jpg)
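Two of the three checks shown in these error examples can be approximated from a namespace dictionary alone. The sketch below is an assumption about how such validation might be written, not PLY's code: `check_rules` and `spec` are hypothetical, and the messages are only modeled on the slides (without the file and line prefix). Detecting a redefined rule is left out, since a later assignment simply overwrites the earlier one in the dictionary and finding it would need inspection of the source text.

```python
import inspect

def check_rules(namespace):
    """Return a list of diagnostic messages for t_-prefixed rules."""
    messages = []
    tokens = set(namespace.get("tokens", []))
    for name, obj in namespace.items():
        if not name.startswith("t_") or name in ("t_ignore", "t_error"):
            continue
        token = name[2:]
        if token not in tokens:
            messages.append(
                f"Rule '{name}' defined for an unspecified token {token}")
        # Function rules must accept exactly one parameter (the token).
        if callable(obj) and len(inspect.signature(obj).parameters) != 1:
            messages.append(f"Rule '{name}' requires an argument.")
    return messages

def t_NUMBER():   # deliberately missing its parameter
    r'\d+'

# Hypothetical lexer specification containing two mistakes.
spec = {
    "tokens": ["NAME", "NUMBER", "MINUS"],
    "t_MINUS": r"-",
    "t_POWER": r"\^",
    "t_NUMBER": t_NUMBER,
}

messages = check_rules(spec)
for line in messages:
    print(line)
```

Running such checks when the lexer is built surfaces specification mistakes before any input is tokenized.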

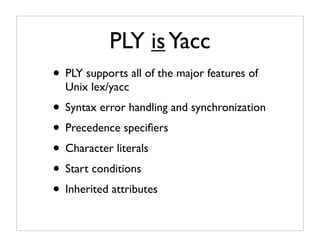

![Precedence Specifiers

• Yacc

%left PLUS MINUS
%left TIMES DIVIDE
%nonassoc UMINUS
...
expr : MINUS expr %prec UMINUS {
           $$ = -$2;
       }

• PLY

precedence = (
    ('left','PLUS','MINUS'),
    ('left','TIMES','DIVIDE'),
    ('nonassoc','UMINUS'),
)
def p_expr_uminus(p):
    'expr : MINUS expr %prec UMINUS'
    p[0] = -p[2]](https://image.slidesharecdn.com/ply2007-100925135319-phpapp02/85/Writing-Parsers-and-Compilers-with-PLY-68-320.jpg)
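What a table like this means for grouping can be shown with a small stand-alone evaluator. Note the swap in technique: this sketch uses precedence climbing in a hand-written recursive parser, whereas yacc and PLY use the table to resolve conflicts in an LALR parser. The `LEVELS`, `SYMBOLS`, `APPLY`, and `evaluate` names are hypothetical; only the shape of the `precedence` tuple comes from the slide.

```python
import re

# Same shape as the slide's table: later entries bind more tightly.
precedence = (
    ("left", "PLUS", "MINUS"),
    ("left", "TIMES", "DIVIDE"),
    ("nonassoc", "UMINUS"),
)

# Map each token name to (level, associativity); level 1 binds loosest.
LEVELS = {tok: (lvl, assoc)
          for lvl, (assoc, *toks) in enumerate(precedence, 1)
          for tok in toks}
SYMBOLS = {"+": "PLUS", "-": "MINUS", "*": "TIMES", "/": "DIVIDE"}
APPLY = {
    "PLUS": lambda a, b: a + b,
    "MINUS": lambda a, b: a - b,
    "TIMES": lambda a, b: a * b,
    "DIVIDE": lambda a, b: a / b,
}

def evaluate(text):
    """Evaluate an arithmetic string using the precedence table."""
    tokens = re.findall(r"\d+|[-+*/]", text)
    pos = 0

    def parse_operand():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok == "-":
            # Unary minus: its operand is parsed at the UMINUS level.
            return -parse_expr(LEVELS["UMINUS"][0])
        return int(tok)

    def parse_expr(min_level):
        nonlocal pos
        left = parse_operand()
        while pos < len(tokens):
            name = SYMBOLS[tokens[pos]]
            level, assoc = LEVELS[name]
            if level < min_level:
                break
            pos += 1
            # Left-associative operators require a tighter right operand.
            right = parse_expr(level + 1 if assoc == "left" else level)
            left = APPLY[name](left, right)
        return left

    return parse_expr(1)

print(evaluate("2 + 3 * 4"))   # 14
print(evaluate("10 - 4 - 3"))  # 3
print(evaluate("-2 * 3"))      # -6
```

Changing the order of the rows in `precedence` changes how the same input groups, which is the effect the `%left` and `%nonassoc` declarations have in a yacc grammar.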