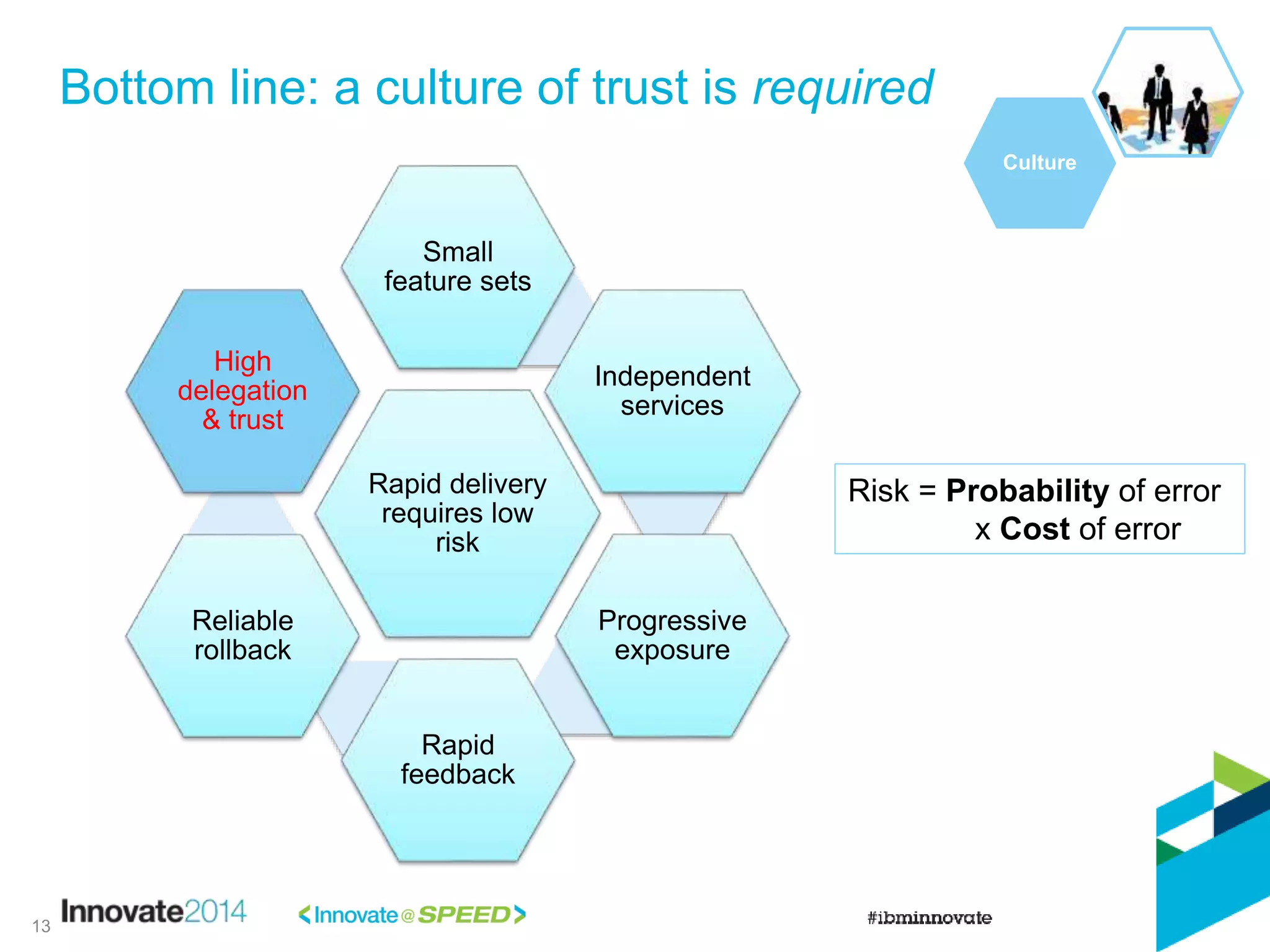

The presentation draws DevOps lessons from successful web-based companies such as Etsy and Netflix, focusing on their organizational culture, automation, practices, and measurement. It highlights essential traits such as high delegation and a culture of trust, which lead to rapid delivery at low risk. The key takeaway is that, although not every aspect can be replicated, enterprises can learn from these 'cool kids' to improve their own DevOps approaches.

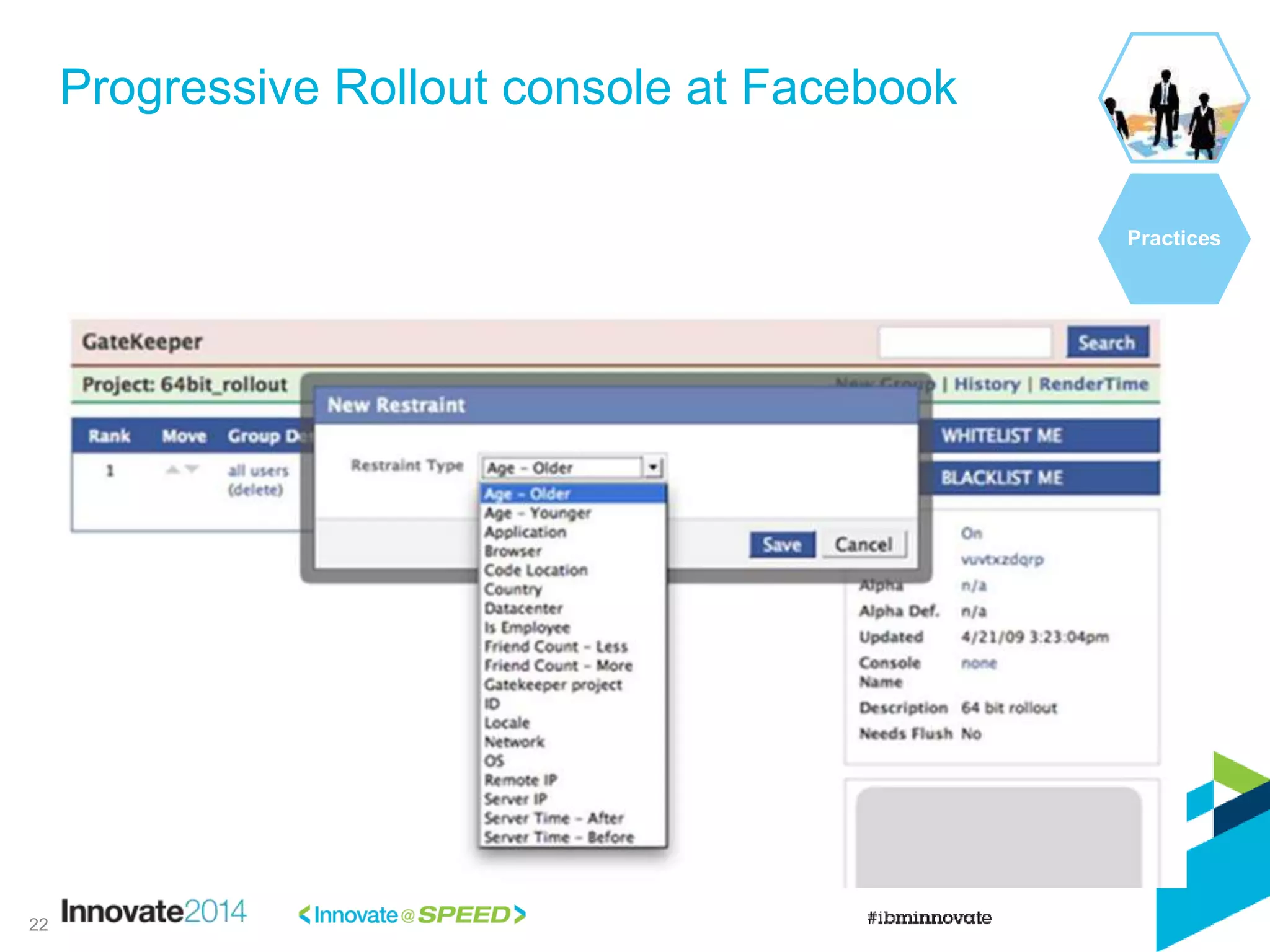

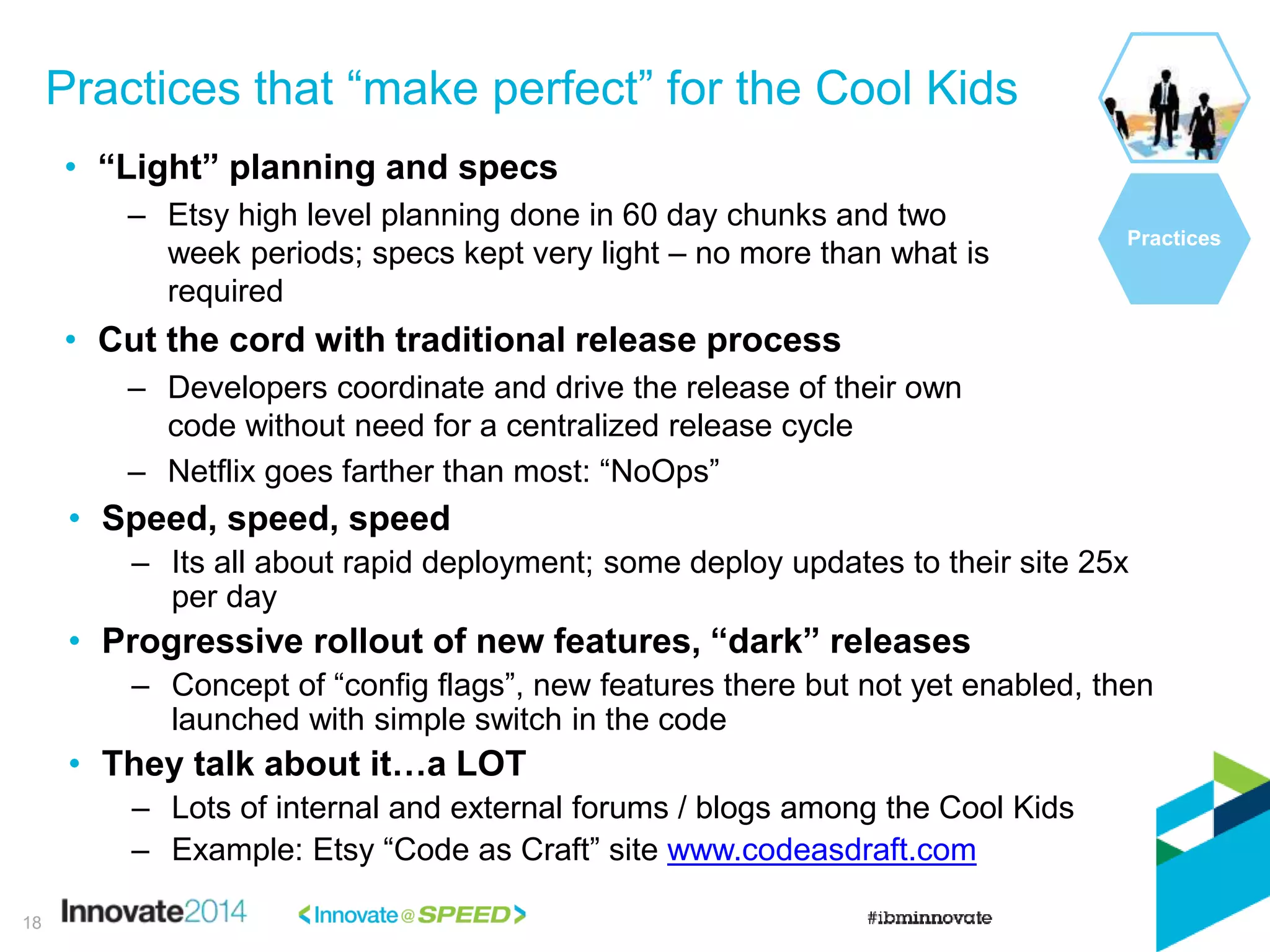

![Slide 21: Practices: Progressive Rollout](https://image.slidesharecdn.com/2427-whatthecoolkidsaredoingwithdevops-140612135802-phpapp01/75/What-do-the-Cool-Kids-know-about-DevOps-22-2048.jpg)

- Progressive rollout of new features, “dark” releases:
  - Deploy to one server with all features disabled to ensure no performance or resource regressions (also known as “canarying”)
  - Turn on features for a small population, and measure (“smoke test”)
  - Turn it on for up to 1% of users, and measure
  - Progressively roll out to all servers, continuing to measure
  - Config Flags (also known as feature flags or gatekeepers [LinkedIn]) control which users see which features
- In order to successfully do Progressive Rollout, you’ll need two more of our five essential elements:
  - Automation, both to progressively roll out and to roll back if a problem is discovered
  - Measurement (tied to Instrumentation), in order to be able to rapidly measure the impact
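The rollout steps on this slide can be sketched in a few lines of Python. This is a minimal illustration, not how Etsy, Netflix, or LinkedIn implement it: the names `bucket`, `is_enabled`, and `progressive_rollout`, the hash-based bucketing scheme, and the `measure` callback are all assumptions made for the example, and the sketch simplifies the slide's server-by-server rollout to a percentage of users.

```python
import hashlib
from typing import Callable, Sequence


def bucket(user_id: str, flag: str, buckets: int = 10_000) -> int:
    """Map a (flag, user) pair to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def is_enabled(user_id: str, flag: str, percent: float) -> bool:
    """Config flag check: True if the user is inside the first `percent` of buckets."""
    # With 10,000 buckets, 1% corresponds to buckets 0..99.
    return bucket(user_id, flag) < percent * 100


def progressive_rollout(
    stages: Sequence[float],
    measure: Callable[[float], float],
    max_error_rate: float,
) -> float:
    """Step through rollout percentages, measuring after each step.

    `measure` is a hypothetical hook that returns the observed error rate
    once the flag is enabled for the given percentage of users.
    Returns the percentage left enabled: the final stage on success,
    or 0.0 if a measurement triggered an automated rollback.
    """
    for percent in stages:
        error_rate = measure(percent)
        if error_rate > max_error_rate:
            return 0.0  # roll back: disable the feature for everyone
    return stages[-1] if stages else 0.0
```

A rollout following the slide might use stages such as `[0.0, 0.1, 1.0, 10.0, 100.0]`, where `0.0` stands for the "dark" deployment with the feature disabled. Because each user's bucket is derived from a hash rather than chosen at random per request, a user who sees the feature at 1% continues to see it as the percentage grows, which keeps measurements comparable between stages.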