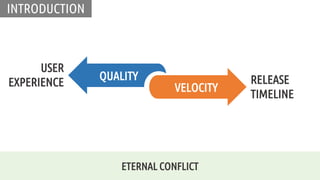

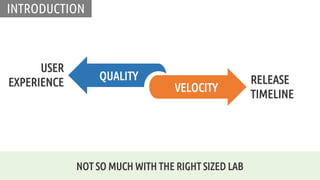

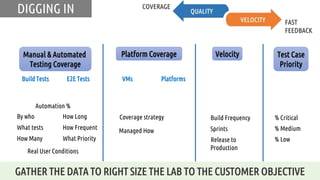

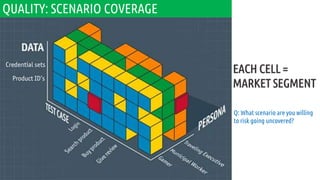

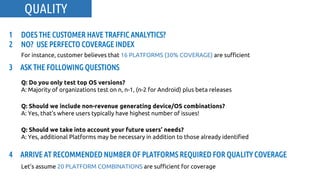

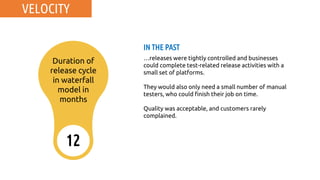

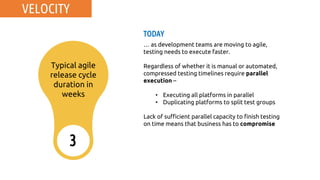

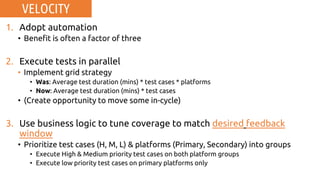

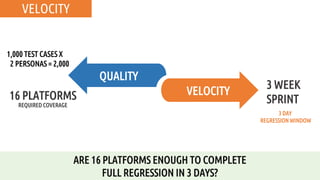

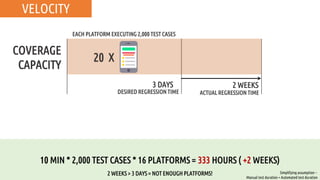

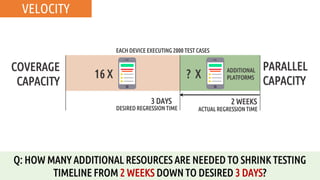

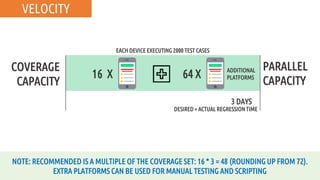

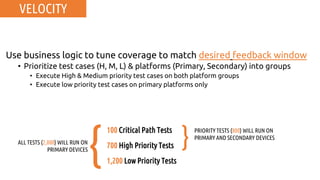

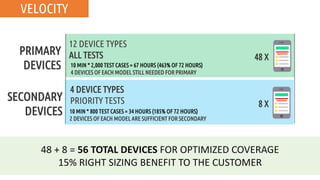

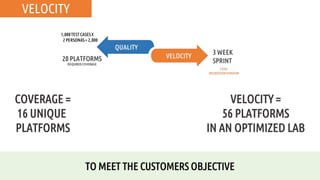

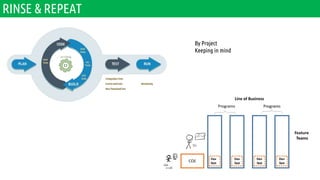

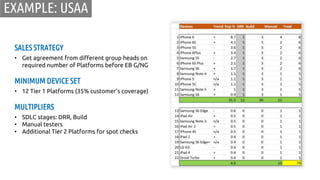

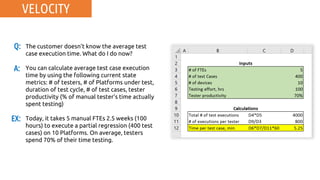

The document outlines a strategy for optimizing lab configurations to improve testing quality and velocity while keeping releases on schedule. It emphasizes parallel execution and automation as the way to keep pace with accelerated development cycles, and it addresses how much platform coverage is needed for testing to be comprehensive. It recommends adjusting resource allocation and testing priorities based on customer needs and historical performance metrics.