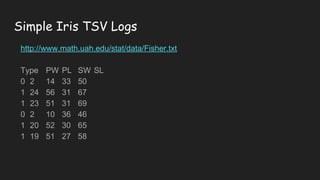

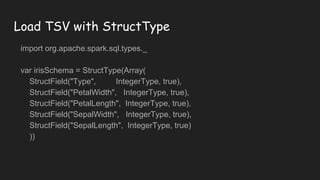

1) This document provides examples of how to use Spark DataFrames and SQL to load and analyze Iris flower data. It shows how to load data from files and Kafka, define schemas, select, filter, sort, group, and join dataframes.

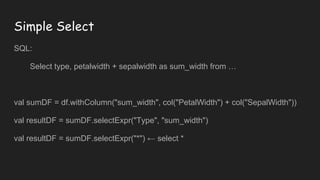

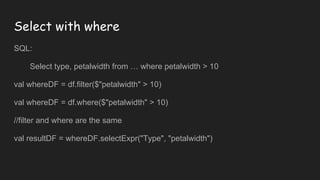

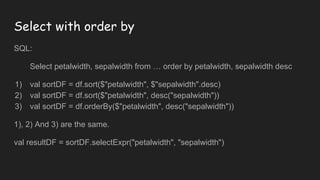

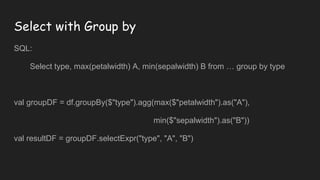

2) Methods like spark.read, dataframe.select(), dataframe.filter(), and dataframe.groupBy() are used to load and query the data. StructType and case classes define the schema. SQL statements can also be used via the sqlContext.

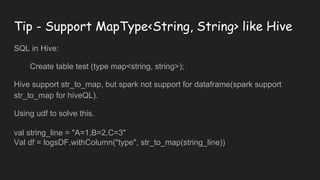

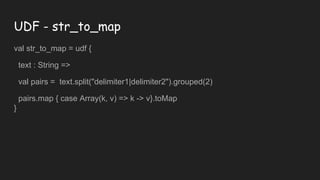

3) User defined functions (UDFs) are demonstrated to handle custom data types like maps. The examples provide an overview of basic Spark DataFrame and SQL functionality.

val logsDF = rdd.map { _.value }.toDF](https://image.slidesharecdn.com/usingsparkdataframeforsql-170714030904/85/Using-spark-data-frame-for-sql-3-320.jpg)

![Load TSV with Encoder #1

import org.apache.spark.sql.Encoders

case class IrisSchema(Type: Int, PetalWidth: Int, PetalLength: Int,

SepalWidth: Int, SepalLength: Int)

var irisSchema = Encoders.product[IrisSchema].schema](https://image.slidesharecdn.com/usingsparkdataframeforsql-170714030904/85/Using-spark-data-frame-for-sql-7-320.jpg)