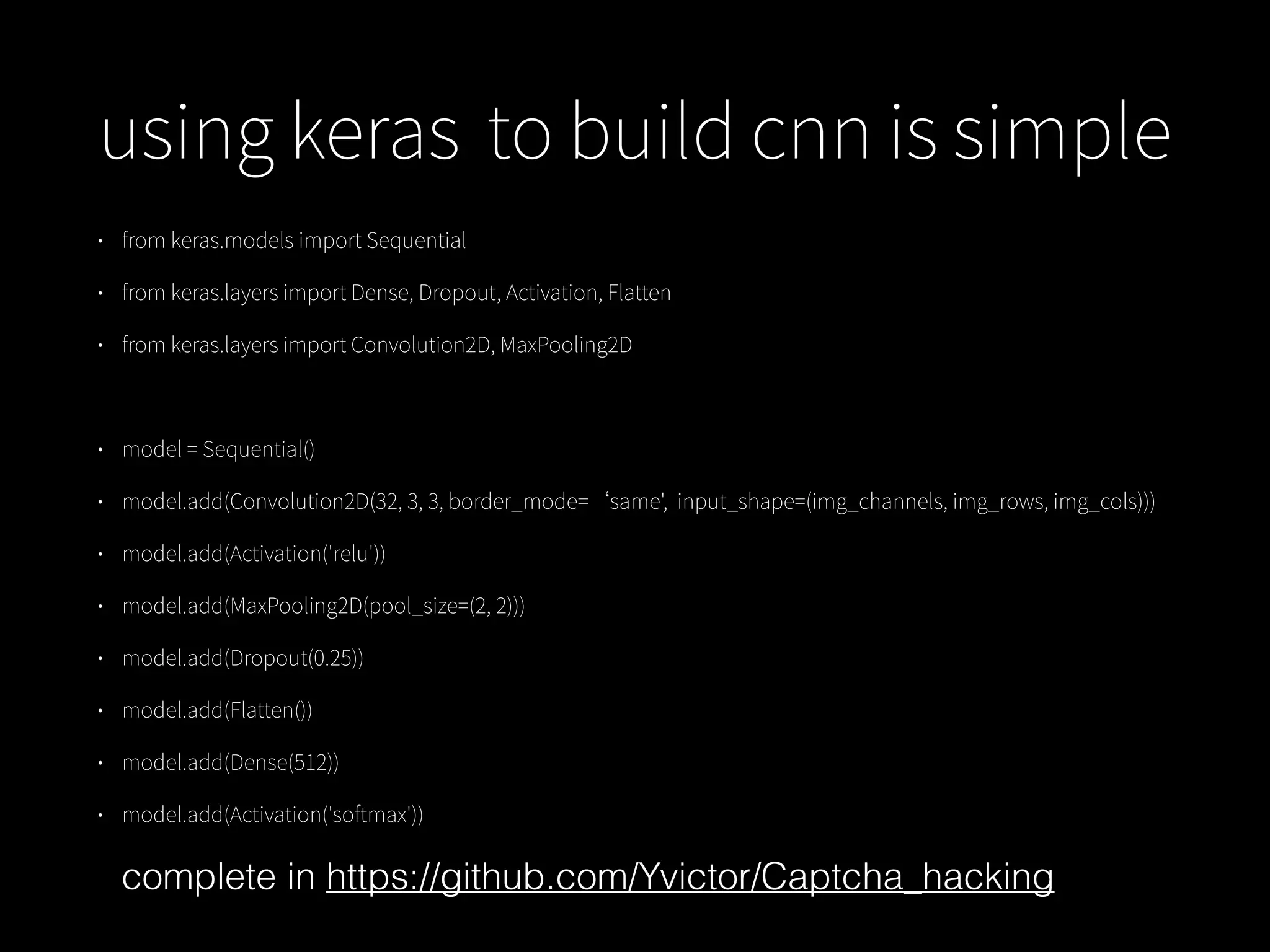

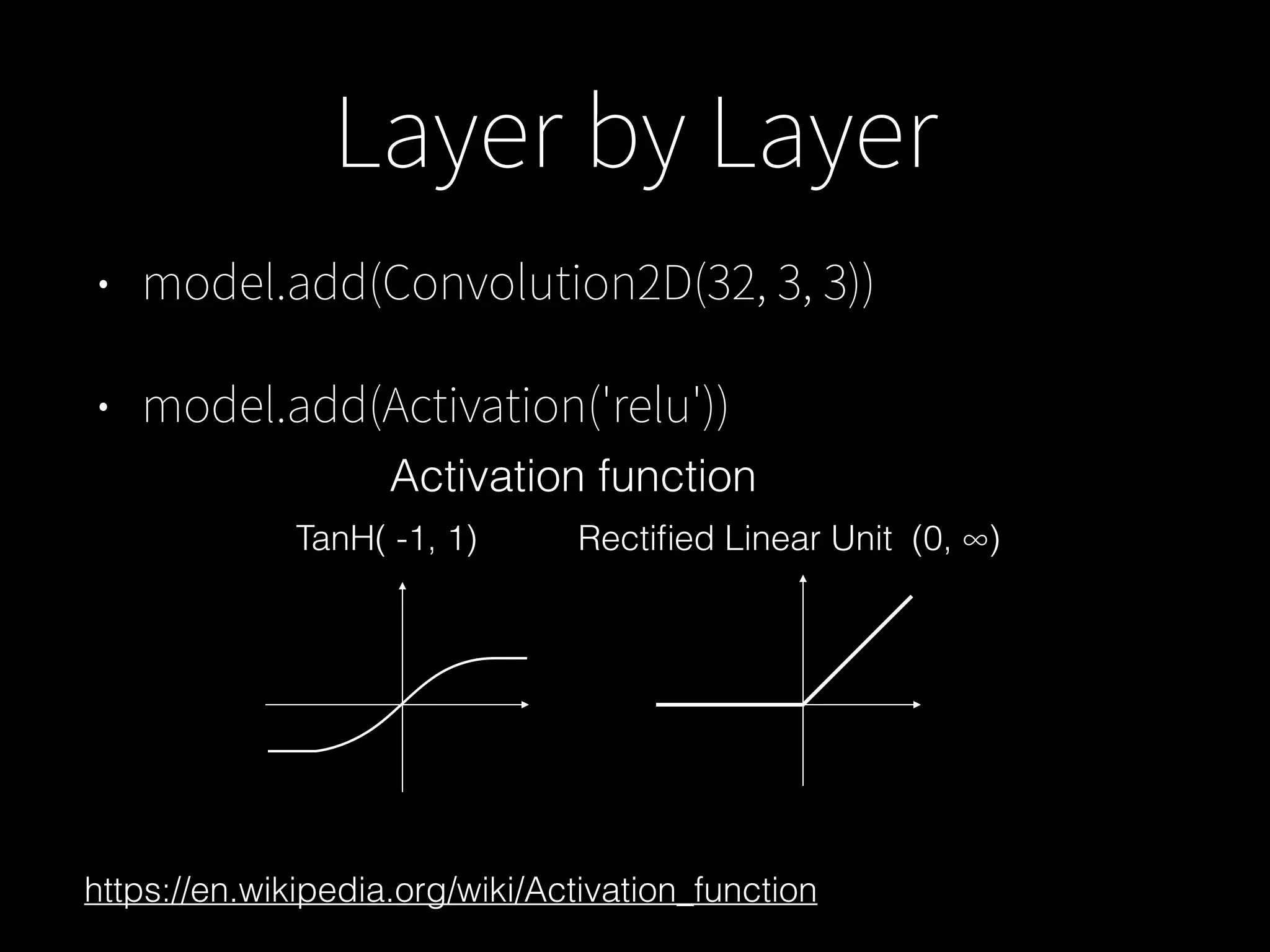

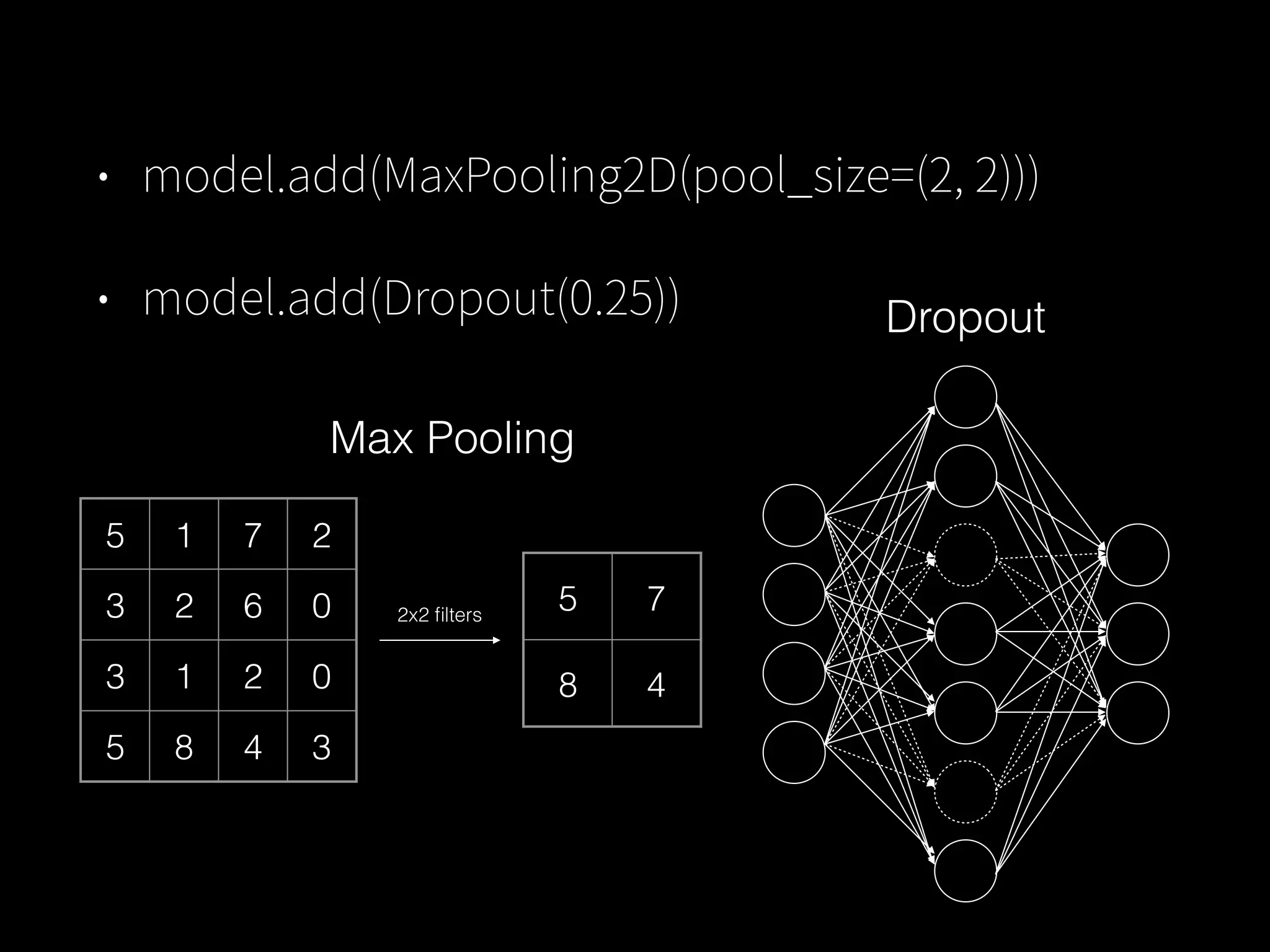

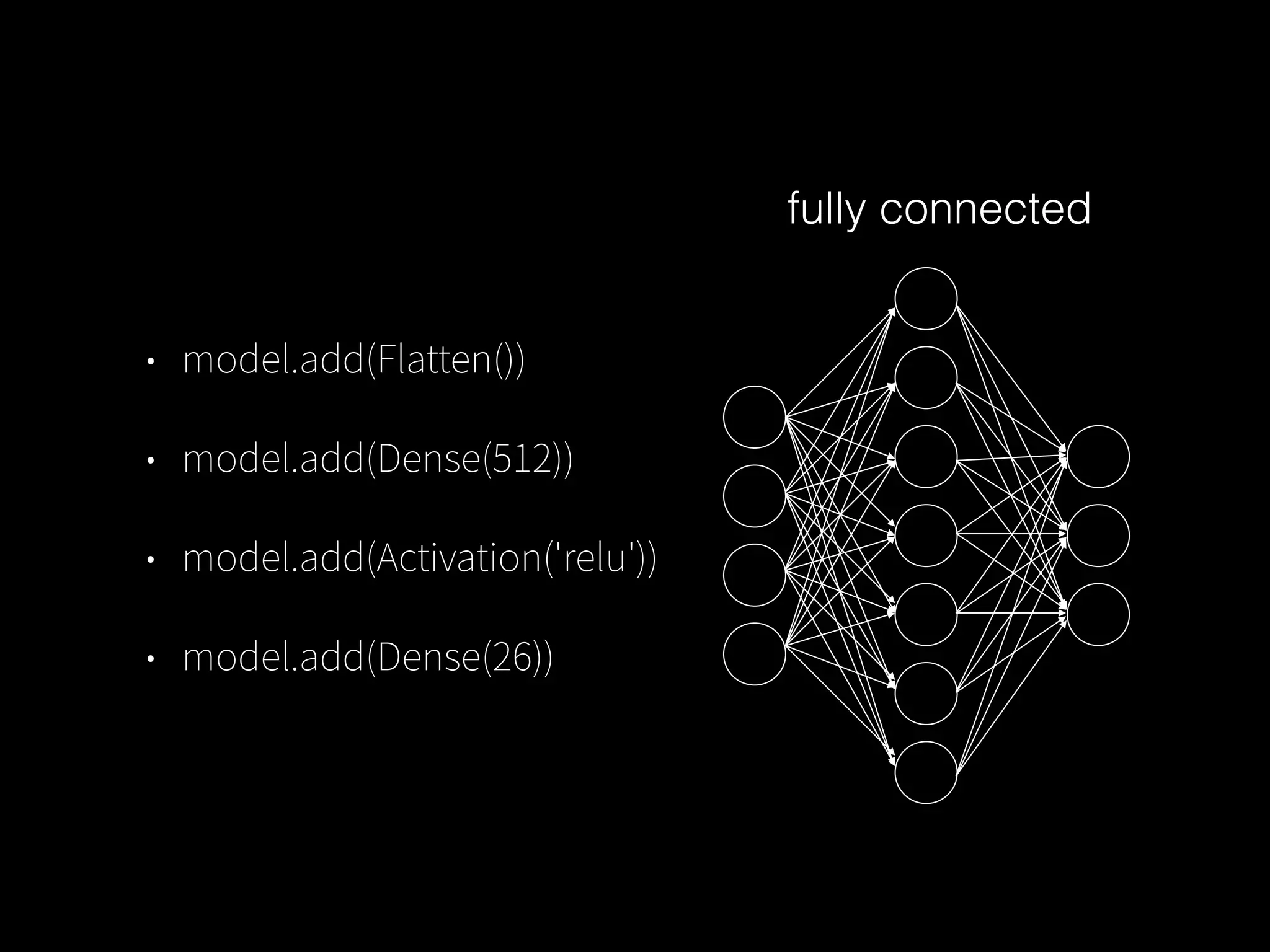

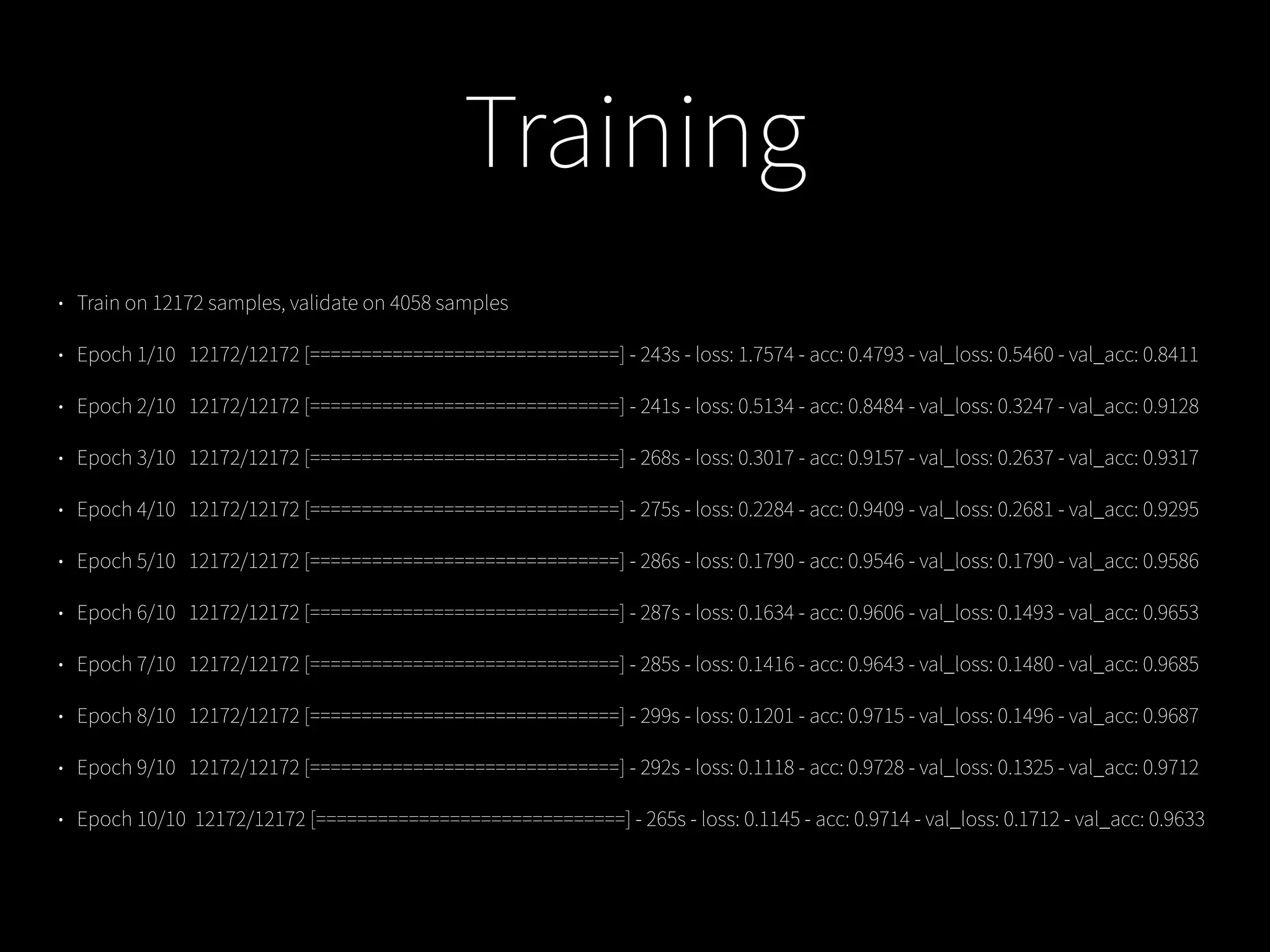

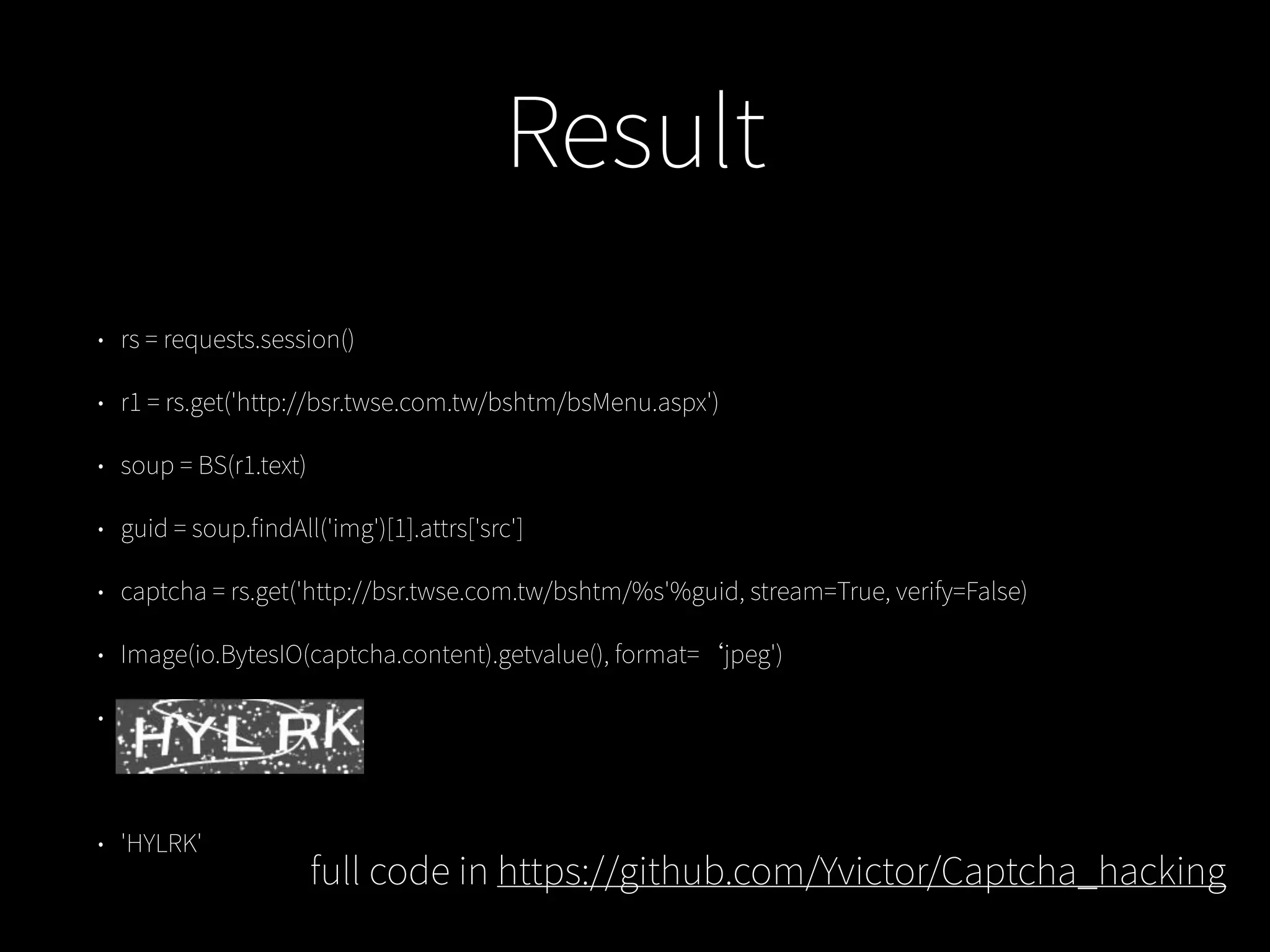

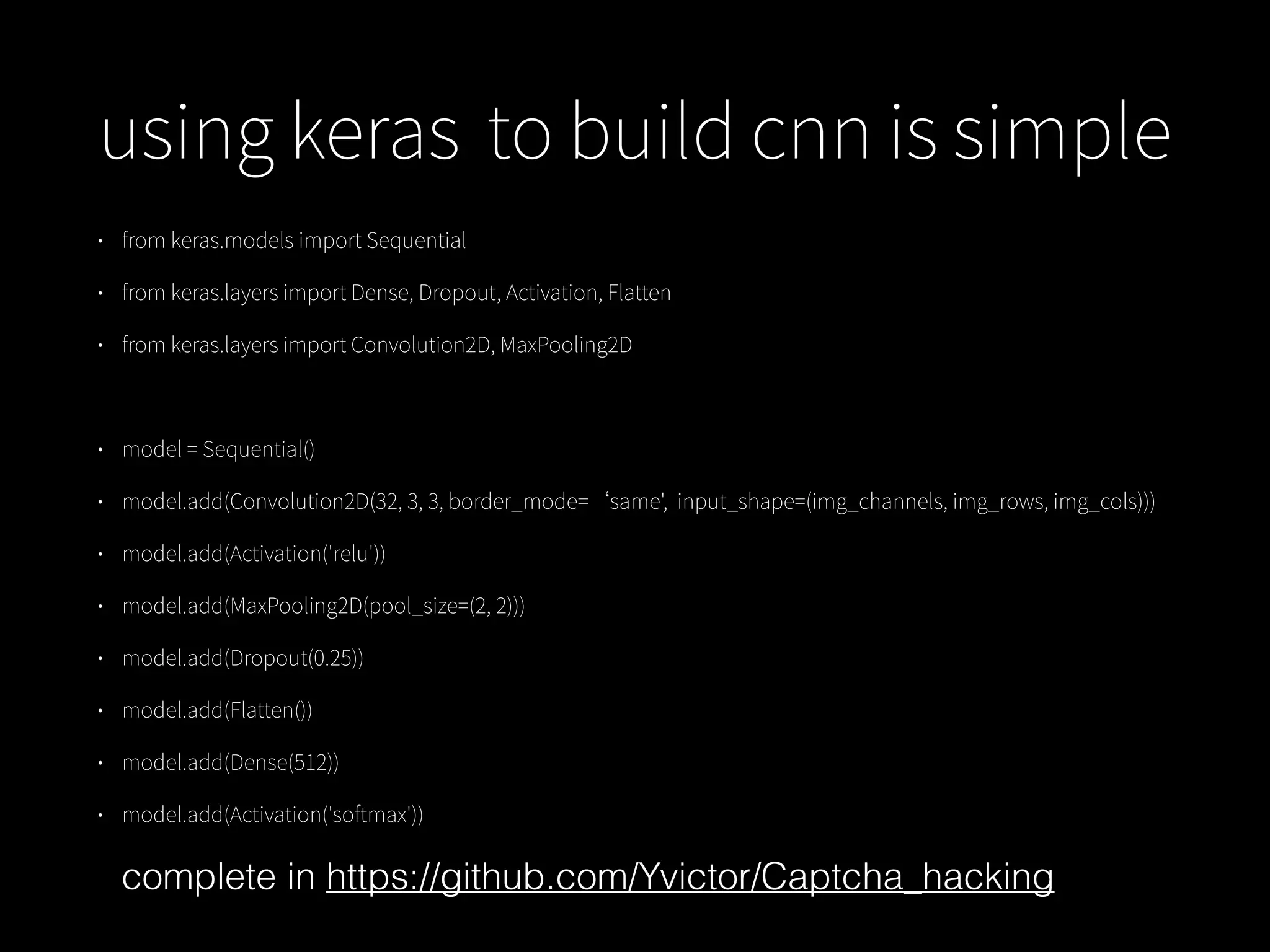

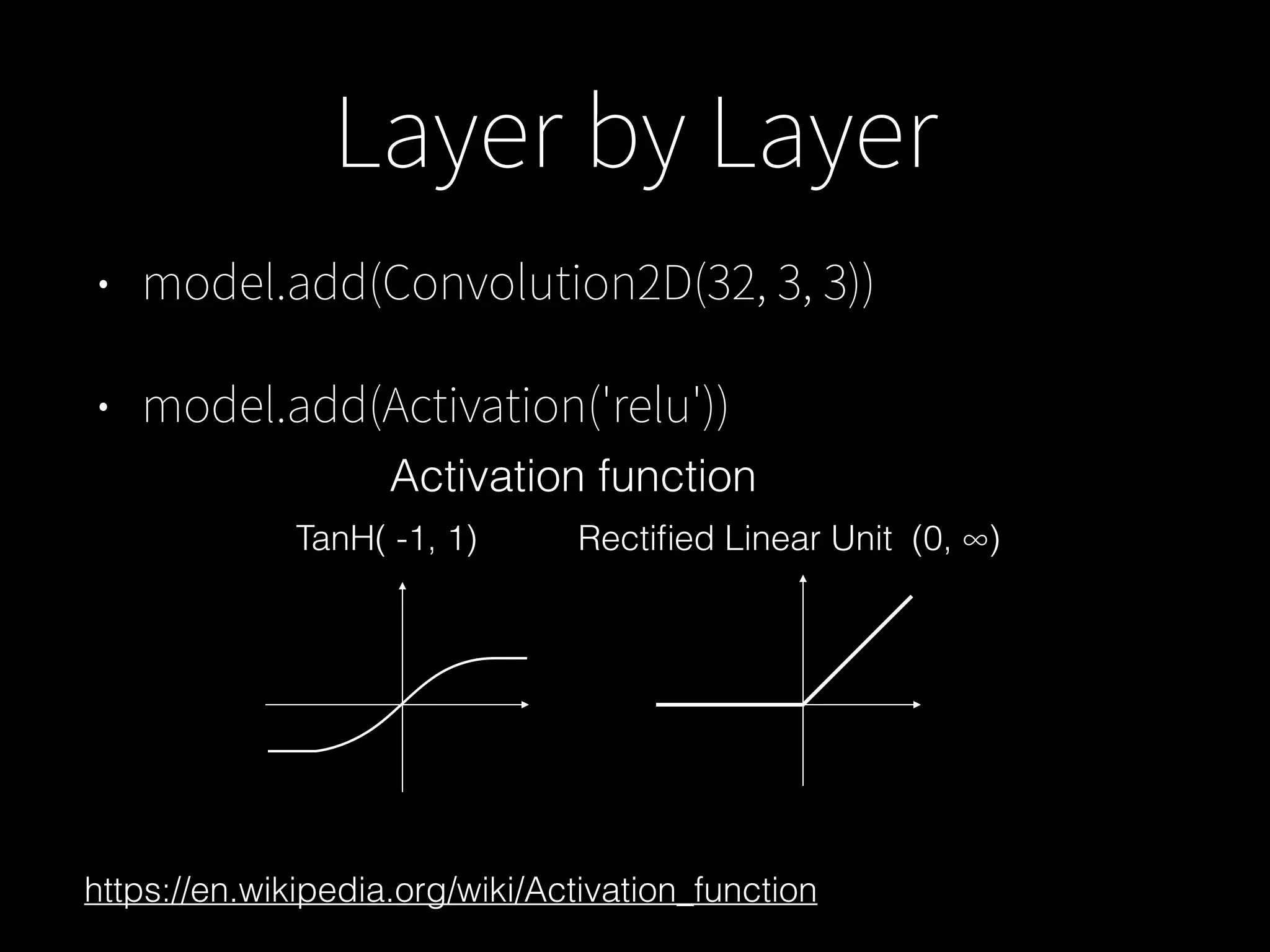

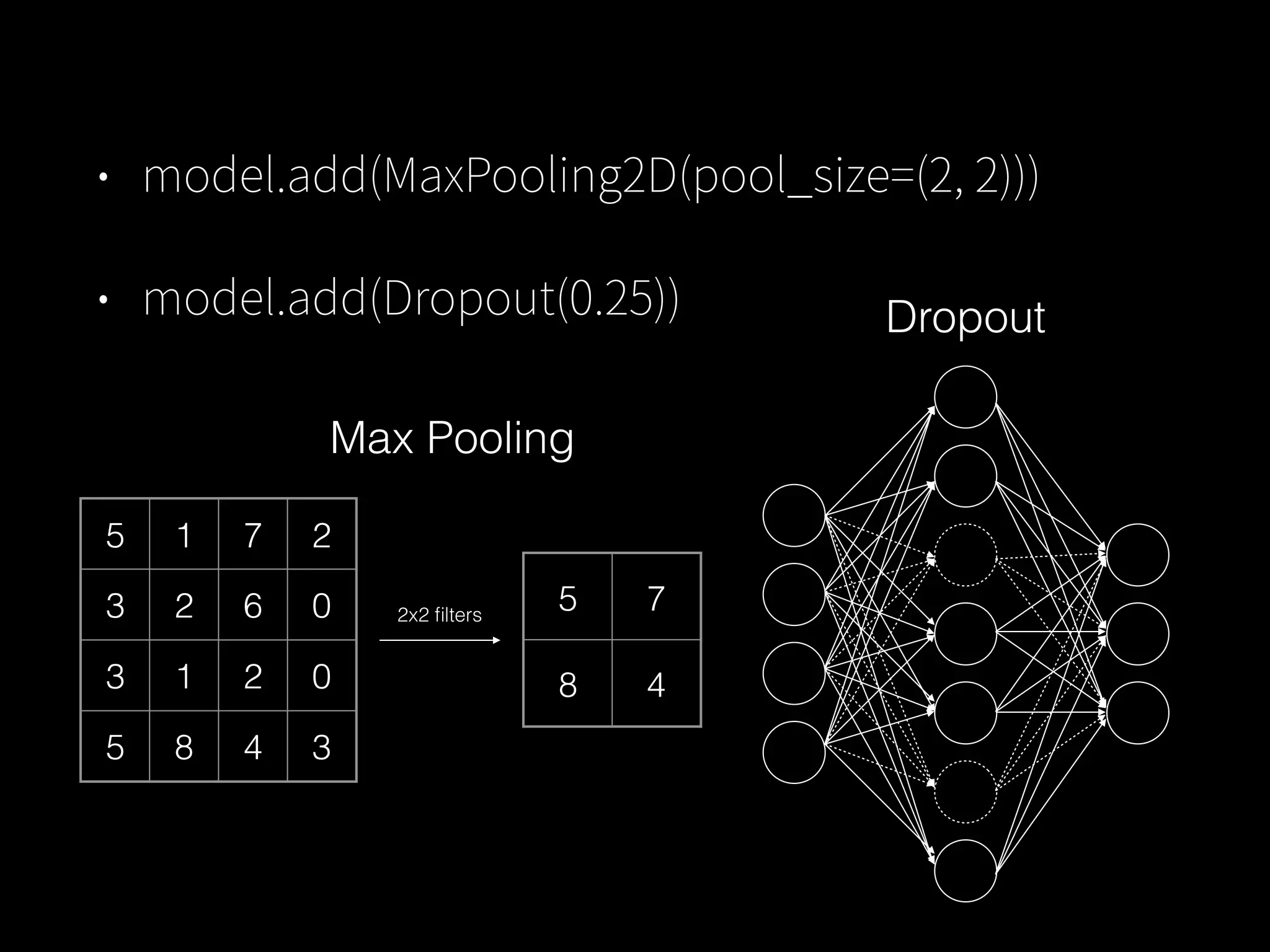

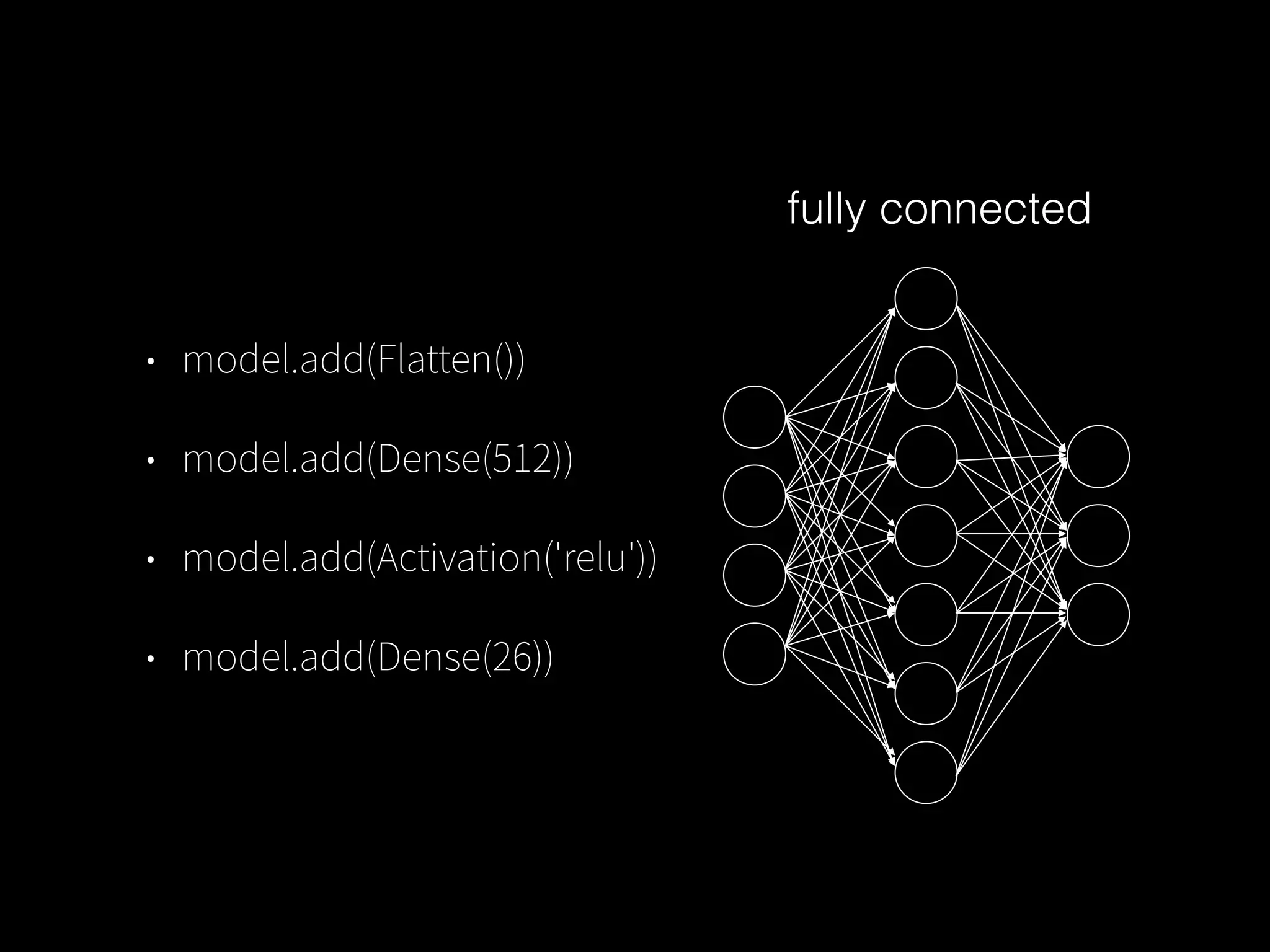

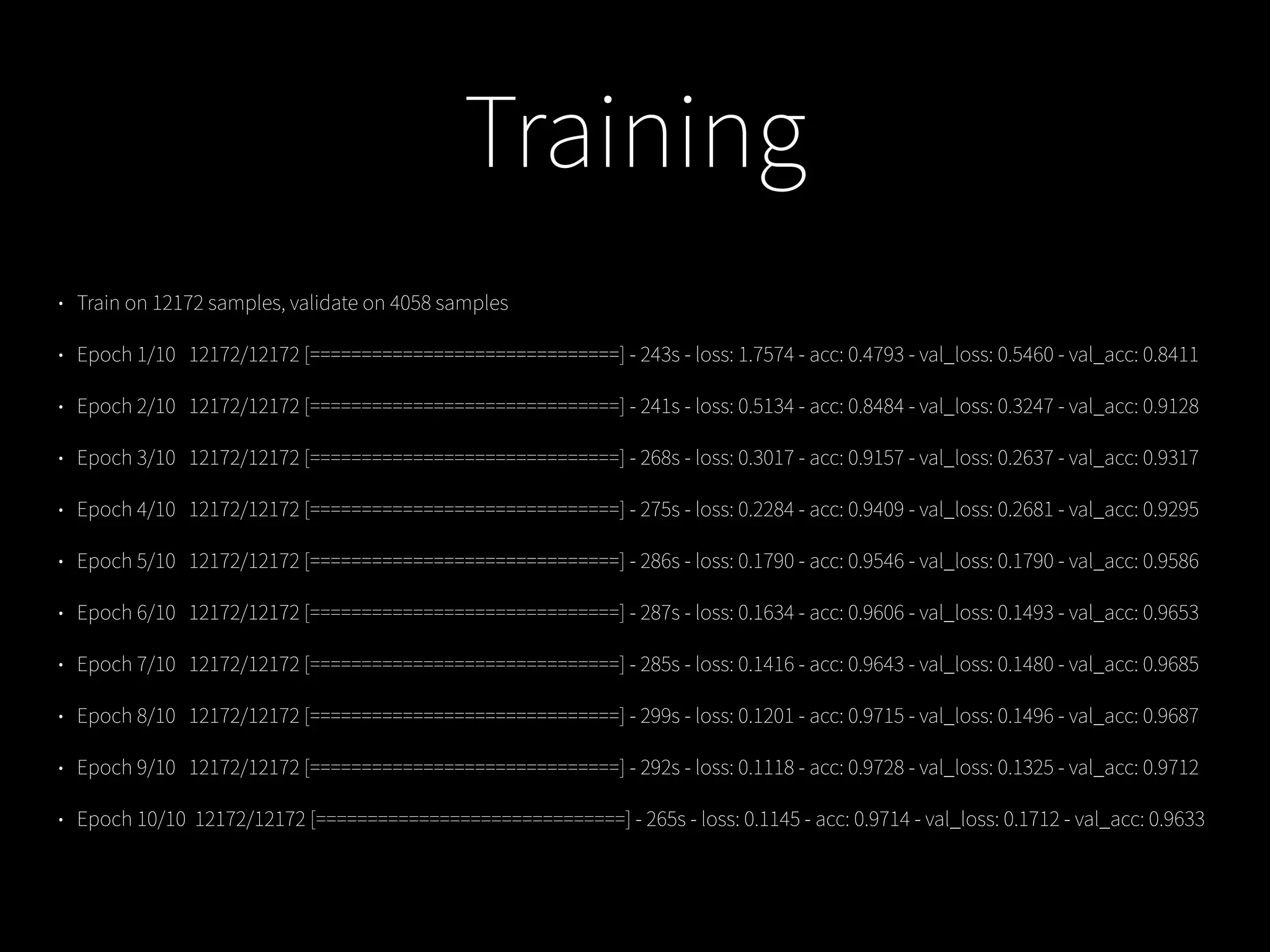

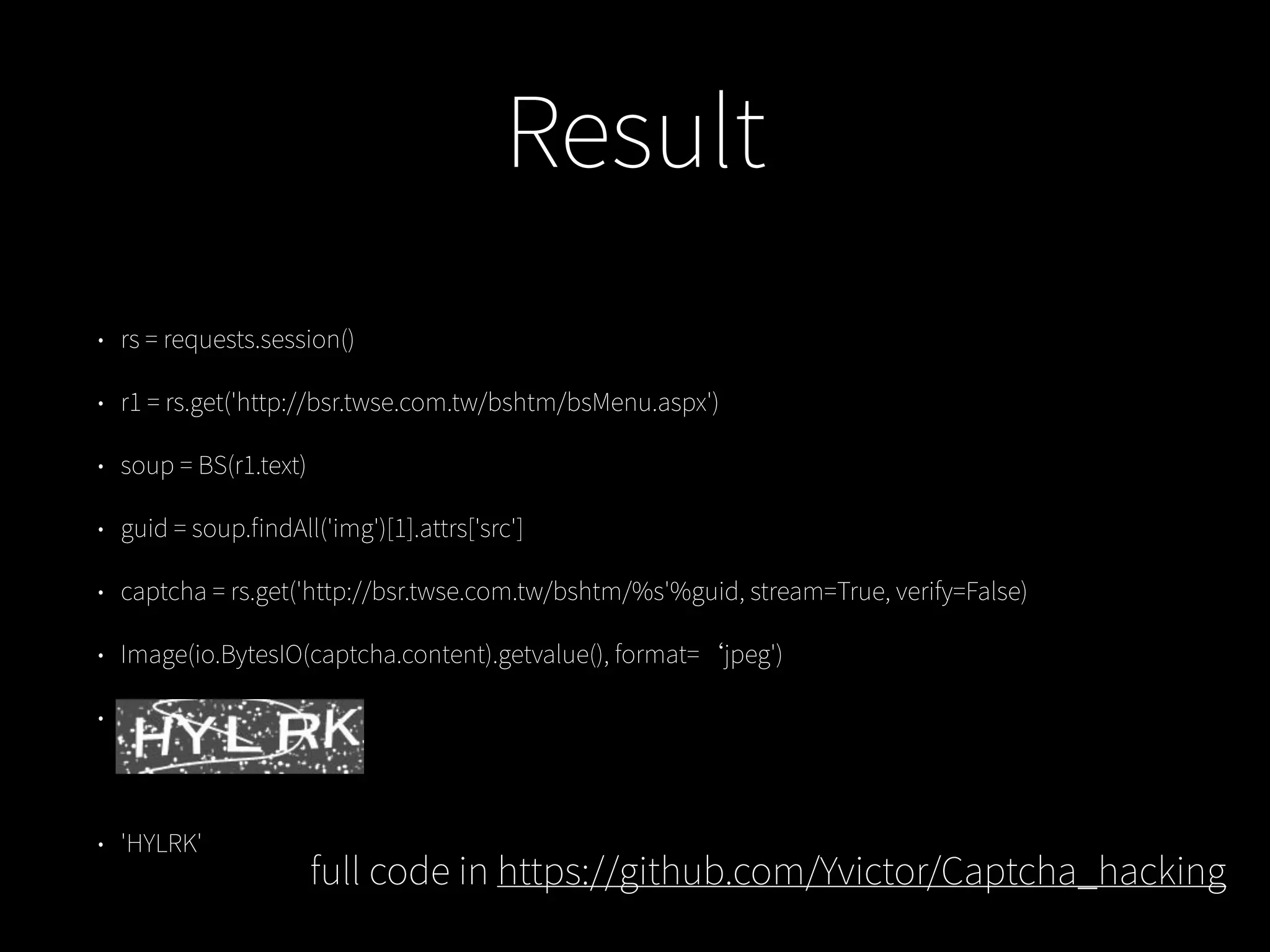

This document covers techniques used in neural networks: the tanh and ReLU activation functions, max pooling to reduce the spatial dimensions of feature maps, dropout to reduce overfitting, and fully connected layers. It also provides a link to a GitHub project that applies these techniques to breaking CAPTCHAs.
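As a rough illustration of the building blocks named above, here is a minimal pure-Python sketch. It is not taken from the slides or from the linked GitHub project; the function names (`tanh`, `relu`, `max_pool_2x2`, `dropout`, `fully_connected`), the toy feature map, and the weights are all hypothetical. The dropout shown is the "inverted" variant, which is one common choice and only an assumption about what the slides describe.

```python
import math
import random


def tanh(xs):
    """tanh activation: squashes each value into the range (-1, 1)."""
    return [math.tanh(x) for x in xs]


def relu(xs):
    """ReLU activation: negative values become 0, positive values pass through."""
    return [max(0.0, x) for x in xs]


def max_pool_2x2(grid):
    """Non-overlapping 2x2 max pooling; assumes even height and width."""
    return [
        [max(grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1])
         for c in range(0, len(grid[0]), 2)]
        for r in range(0, len(grid), 2)
    ]


def dropout(xs, rate, rng, training=True):
    """Inverted dropout (assumed variant): zero each value with probability
    `rate` and scale the survivors by 1 / (1 - rate). No-op at inference."""
    if not training or rate == 0.0:
        return list(xs)
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in xs]


def fully_connected(xs, weights, biases):
    """Dense layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, xs)) + b
            for row, b in zip(weights, biases)]


# Toy 4x4 feature map (made-up values) pushed through the pipeline.
feature_map = [
    [1.0, -2.0, 0.5, 3.0],
    [0.0, 4.0, -1.0, 2.0],
    [-3.0, 1.0, 2.0, 0.0],
    [2.0, -1.0, 1.0, 5.0],
]
activated = [relu(row) for row in feature_map]
pooled = max_pool_2x2(activated)            # 4x4 -> 2x2: [[4.0, 3.0], [2.0, 5.0]]
flat = [v for row in pooled for v in row]   # flatten before the dense layer
dropped = dropout(flat, rate=0.5, rng=random.Random(0))
logits = fully_connected(dropped, [[0.1] * 4, [-0.1] * 4], [0.0, 0.0])
```

Pooling halves each spatial dimension, so the dense layer sees 4 inputs instead of 16. Dropout is applied only during training; calling `dropout(..., training=False)` returns the input unchanged.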