The document describes a MinMax normalization estimator and its model in a Spark environment, detailing the transformation process for data normalization. It includes class definitions, methods for fitting the model, and test specifications using JUnit for verifying the functionality of the estimator. Additionally, it references links to external resources for further information on Spark's capabilities and best practices.

![class MinMaxNormEstimator(uid: String = UID[MinMaxNormEstimator])

extends UnaryEstimator[Real, Real](operationName = "minMaxNorm", uid = uid) {

def fitFn(dataset: Dataset[Real#Value]): UnaryModel[Real, Real] = {

val grouped = dataset.groupBy()

val max = grouped.max().first().getDouble(0)

val min = grouped.min().first().getDouble(0)

new MinMaxNormEstimatorModel(min = min, max = max, operationName = operationName, uid = uid)

}

}

class MinMaxNormEstimatorModel(val min: Double, val max: Double, operationName: String, uid: String)

extends UnaryModel[Real, Real](operationName = operationName, uid = uid) {

def transformFn: Real => Real = _.value.map(v => (v - min) / (max - min)).toReal

}](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-8-2048.jpg)

![@RunWith(classOf[JUnitRunner])

class MinMaxNormEstimatorTest extends FlatSpec with Matchers {

val conf = new SparkConf().setMaster("local[*]")

implicit val spark = SparkSession.builder().config(conf).getOrCreate()

import spark.implicits._

"MinMaxNormEstimator" should ”fit a min/max model" in {

val data = spark.createDataset(Seq(-1.0, 0.0, 5.0, 1.0).map(Option(_)))

val model = new MinMaxNormEstimator().fitFn(data)

model match {

case m: MinMaxNormEstimatorModel =>

m.min shouldBe -1.0

m.max shouldBe 5.0

case m => fail(s"Unexpected model type: ${m.getClass}")

}

}

}](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-9-2048.jpg)

![@RunWith(classOf[JUnitRunner])

class MinMaxNormEstimatorTest extends FlatSpec with Matchers with BeforeAndAfterAll {

val conf = new SparkConf().setMaster("local[*]")

implicit val spark = SparkSession.builder().config(conf).getOrCreate()

import spark.implicits._

override def afterAll(): Unit = {

super.afterAll()

spark.stop()

}

// …

}](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-10-2048.jpg)

![trait TestSpark extends BeforeAndAfterAll { self: Suite =>

val kryoClasses: Array[Class[_]] = Array()

val conf: SparkConf = new SparkConf()

.setMaster("local[*]")

.registerKryoClasses(kryoClasses)

.set("spark.serializer", classOf[org.apache.spark.serializer.KryoSerializer].getName)

.set("spark.ui.enabled", false.toString) // disable Spark Application UI for faster tests

implicit lazy val spark = SparkSession.builder().config(conf).getOrCreate()

override def afterAll(): Unit = {

super.afterAll()

spark.stop()

}

}

abstract class SparkFlatSpec extends FlatSpec with Matchers with TestSpark](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-11-2048.jpg)

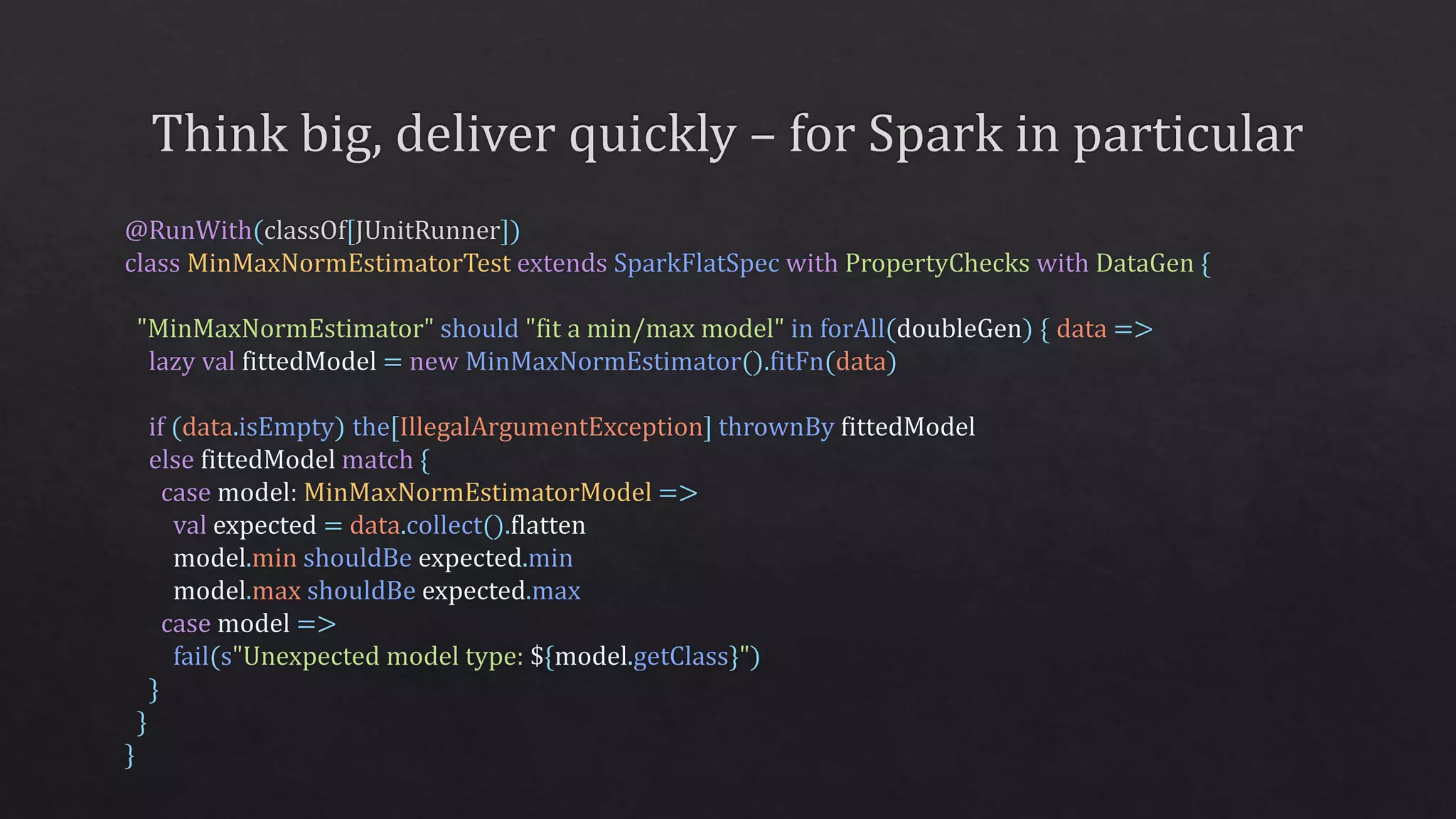

![@RunWith(classOf[JUnitRunner])

class MinMaxNormEstimatorTest extends SparkFlatSpec {

import spark.implicits._

val data = spark.createDataset(Seq(-1.0, 0.0, 5.0, 1.0).map(Option(_)))

"MinMaxNormEstimator" should "fit a min/max model" in {

new MinMaxNormEstimator().fitFn(data) match {

case model: MinMaxNormEstimatorModel =>

model.min shouldBe -1.0

model.max shouldBe 5.0

case model =>

fail(s"Unexpected model type: ${model.getClass}")

}

}

}

More here:

https://github.com/apache/spark/blob/master/core/src/test/scala/org/apache/spark/SparkFunSuite.scala](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-12-2048.jpg)

![package org.apache.spark.ml

abstract class Transformer extends PipelineStage

{

def transform(dataset: Dataset[_]): DataFrame

}

type DataFrame = Dataset[Row]

trait Row extends Serializable {

def get(i: Int): Any

}](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-20-2048.jpg)

![package org.apache.spark.util

case object ClosureUtils {

def checkSerializable(closure: AnyRef): Try[Unit] = scala.util.Try {

ClosureCleaner.clean(closure, checkSerializable = true, cleanTransitively = true)

}

}

package my.app

val x = new NonSerializable { def fun() = ??? }

ClosureUtils.checkSerializable(x.fun _) // make the check early at application start

udf { d: Double => x.fun(d) } // if we are here – it’s safe to use x](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-24-2048.jpg)

![val ds: Dataset[_] = ???

println("Dataset schema & plans:")

ds.printSchema()

ds.explain(extended = true)

https://databricks.com/blog/2015/04/13/deep-dive-into-spark-sqls-catalyst-optimizer.html

https://databricks.com/blog/2016/05/23/apache-spark-as-a-compiler-joining-a-billion-rows-per-second-on-a-laptop.html](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-26-2048.jpg)

![// Apply all the transformers one by one as org.apache.spark.ml.Pipeline does

transformers.zipWithIndex.foldLeft(data) { case (df, (transformer, i)) =>

val persist = i > 0 && i % persistEveryKStages == 0

val newDF = transformer.transform(df)

if (!persist) newDF

else {

// Converting to RDD and back here to break up Catalyst [SPARK-13346]

val persisted = newDF.rdd.persist()

lastPersisted.foreach(_.unpersist())

lastPersisted = Some(persisted)

spark.createDataFrame(persisted, newDF.schema)

}

}

https://github.com/salesforce/TransmogrifAI/blob/master/core/src/main/scala/com/salesforce/op/uti

ls/stages/FitStagesUtil.scala#L150](https://image.slidesharecdn.com/theruleof10000sparkjobs-learningfromexceptionsandserializingyourknowledge-190425041133/75/The-Rule-of-10-000-Spark-Jobs-Learning-from-Exceptions-and-Serializing-Your-Knowledge-28-2048.jpg)