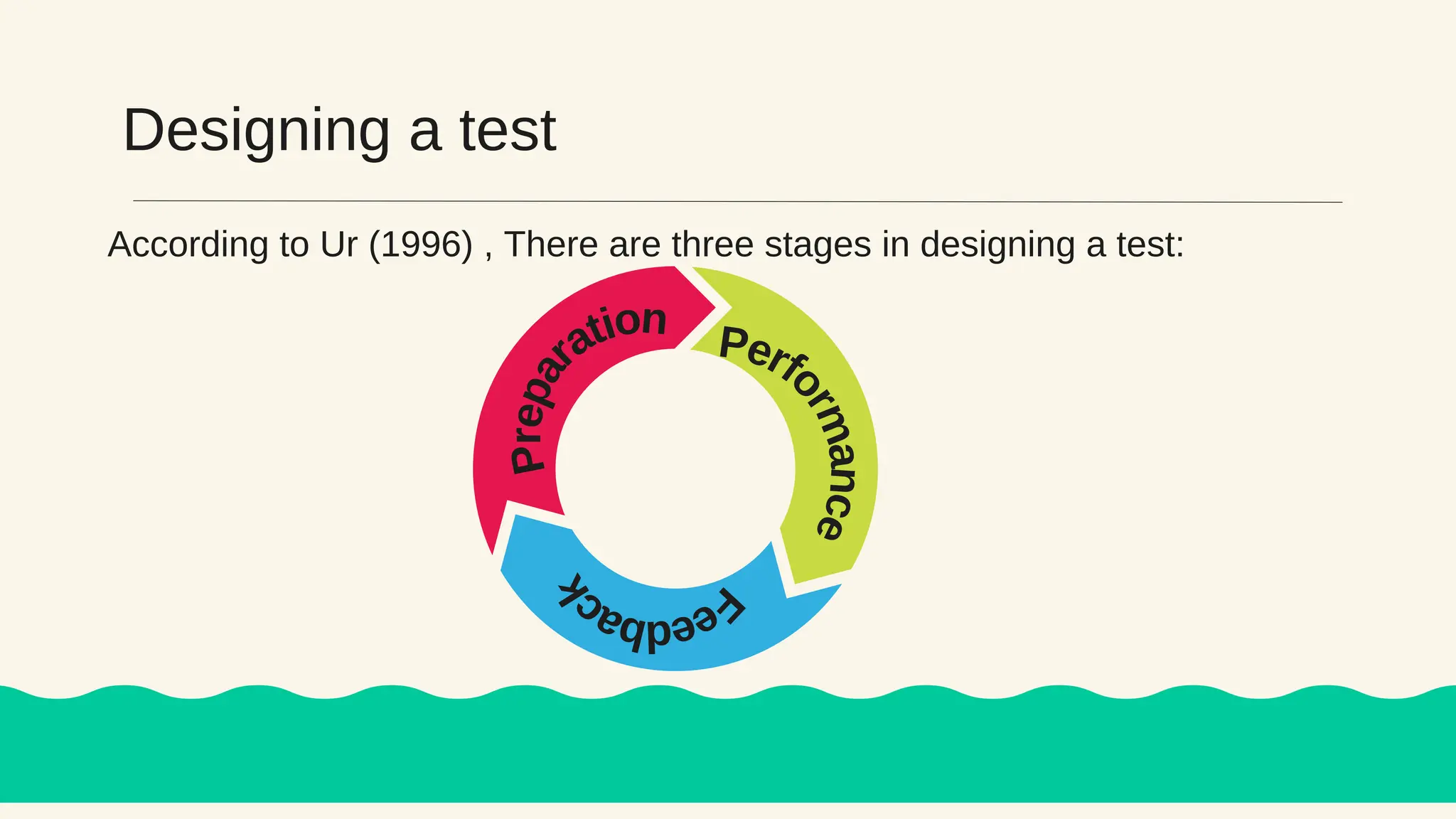

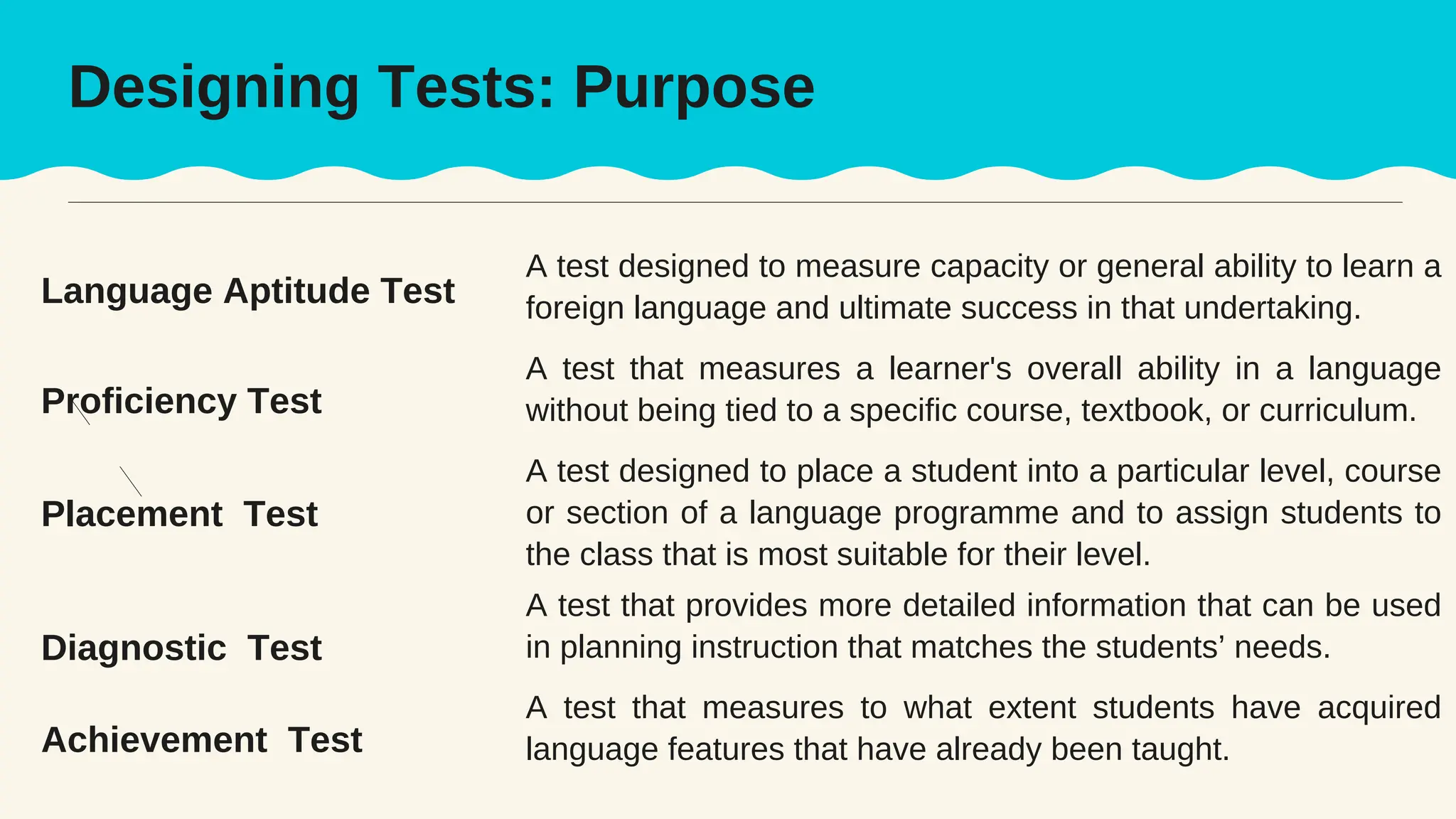

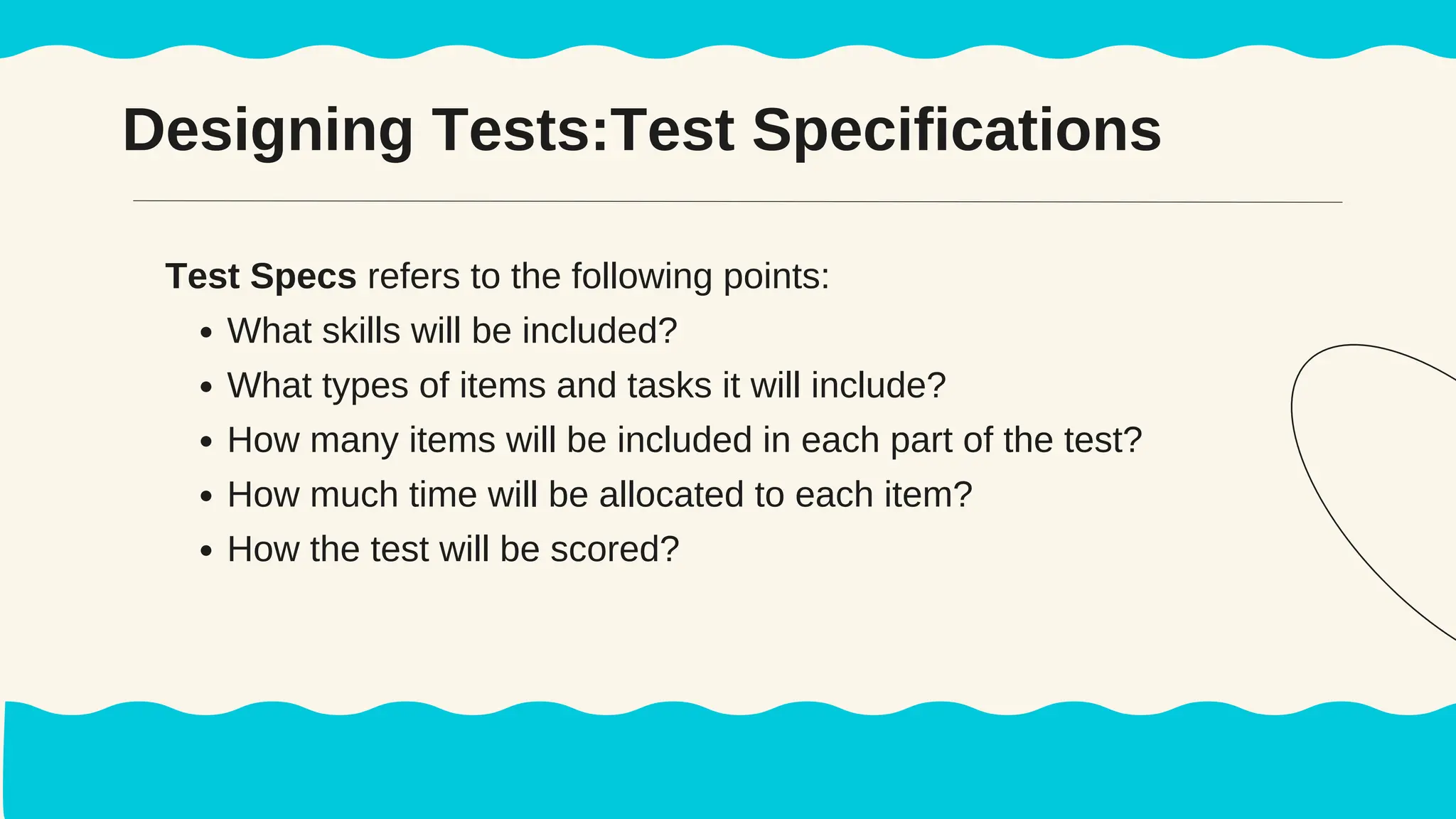

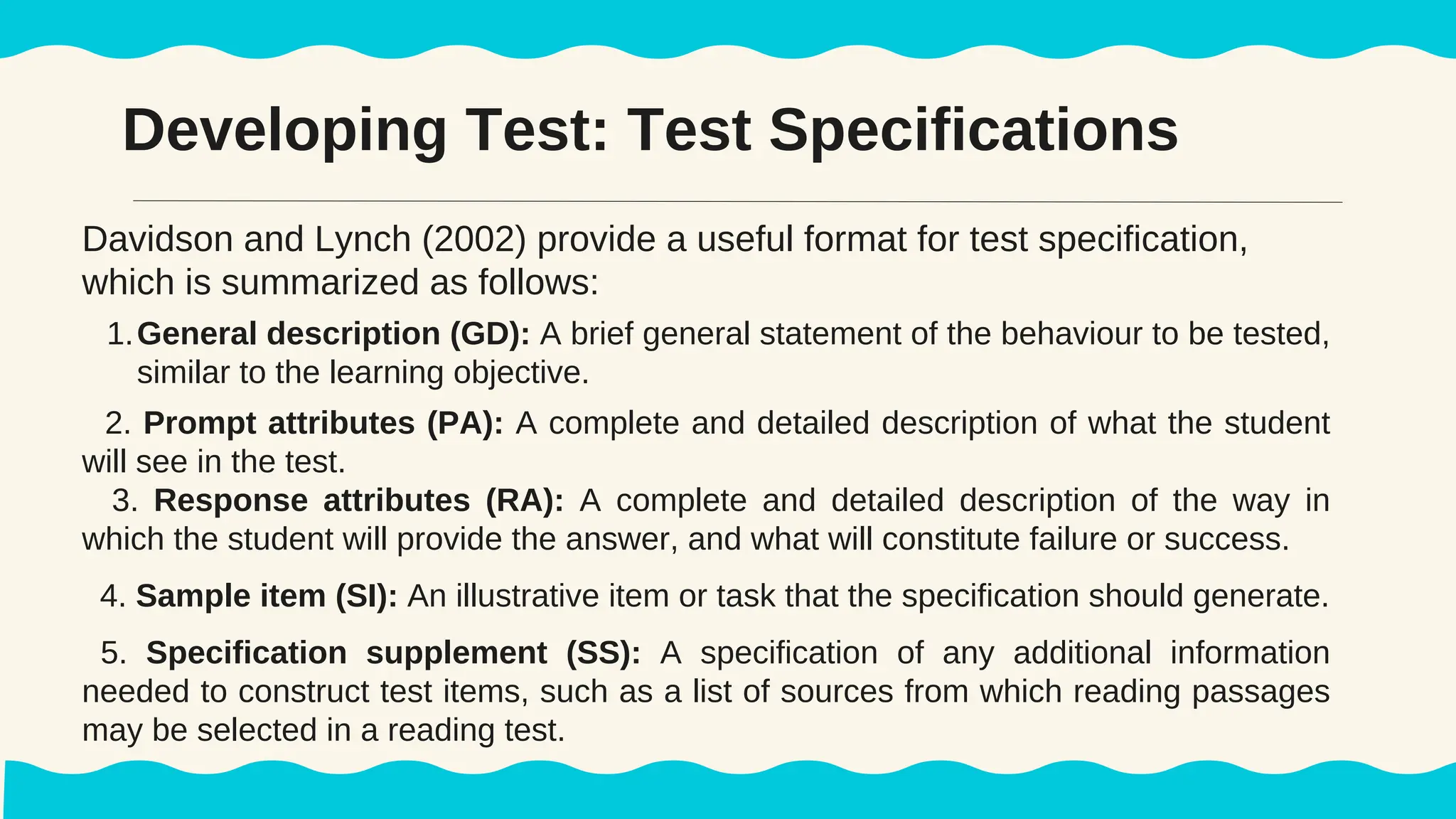

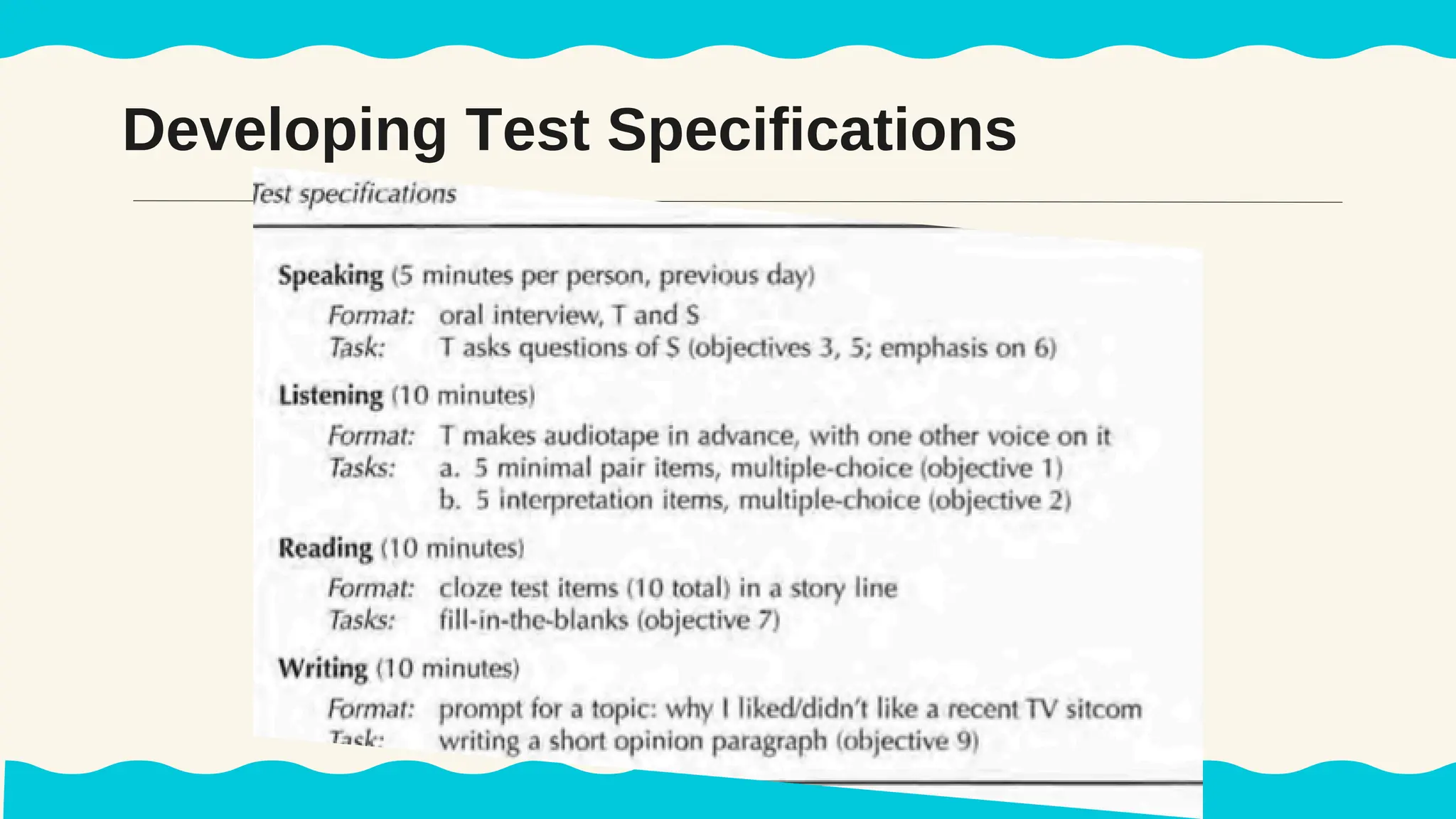

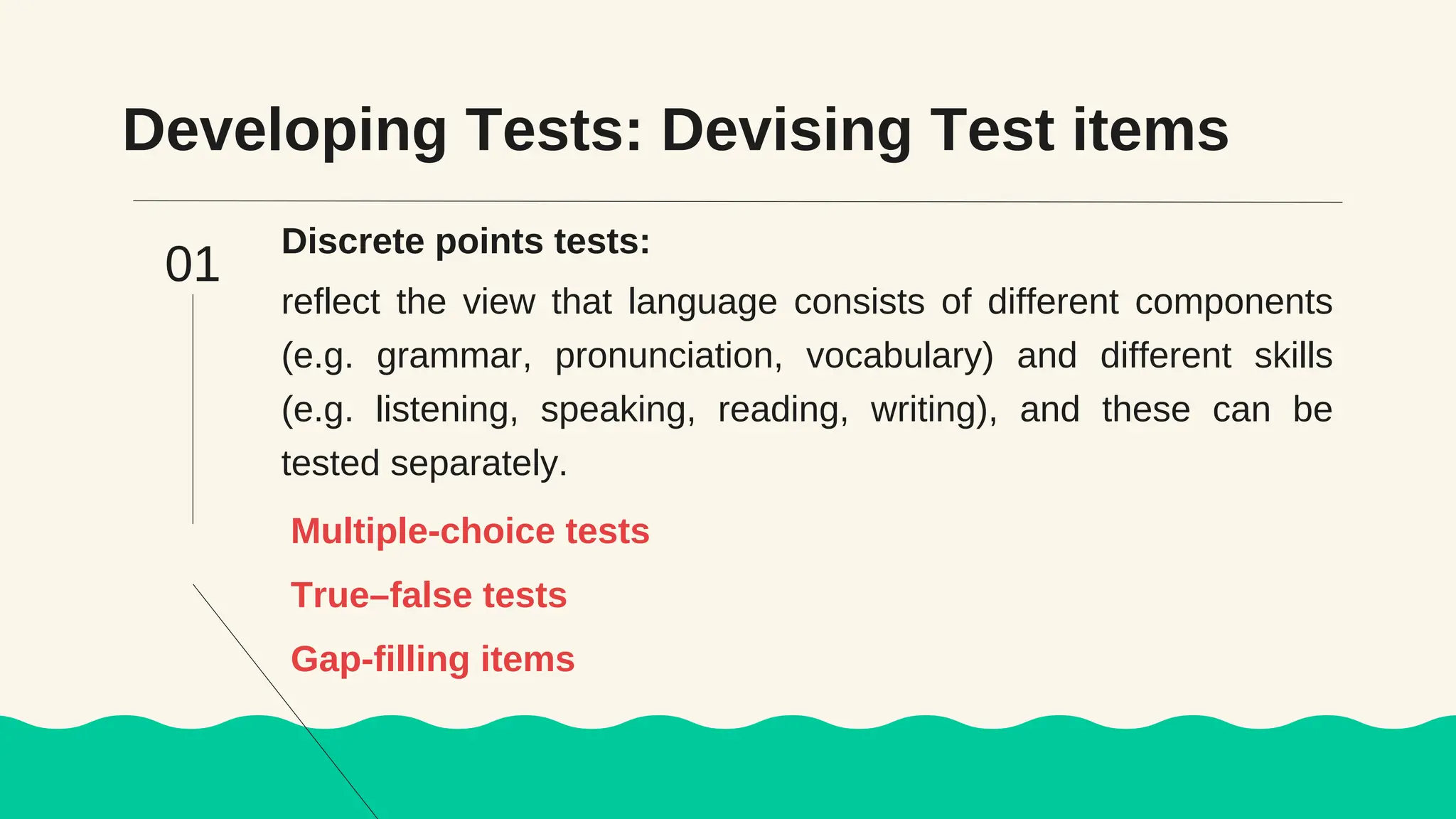

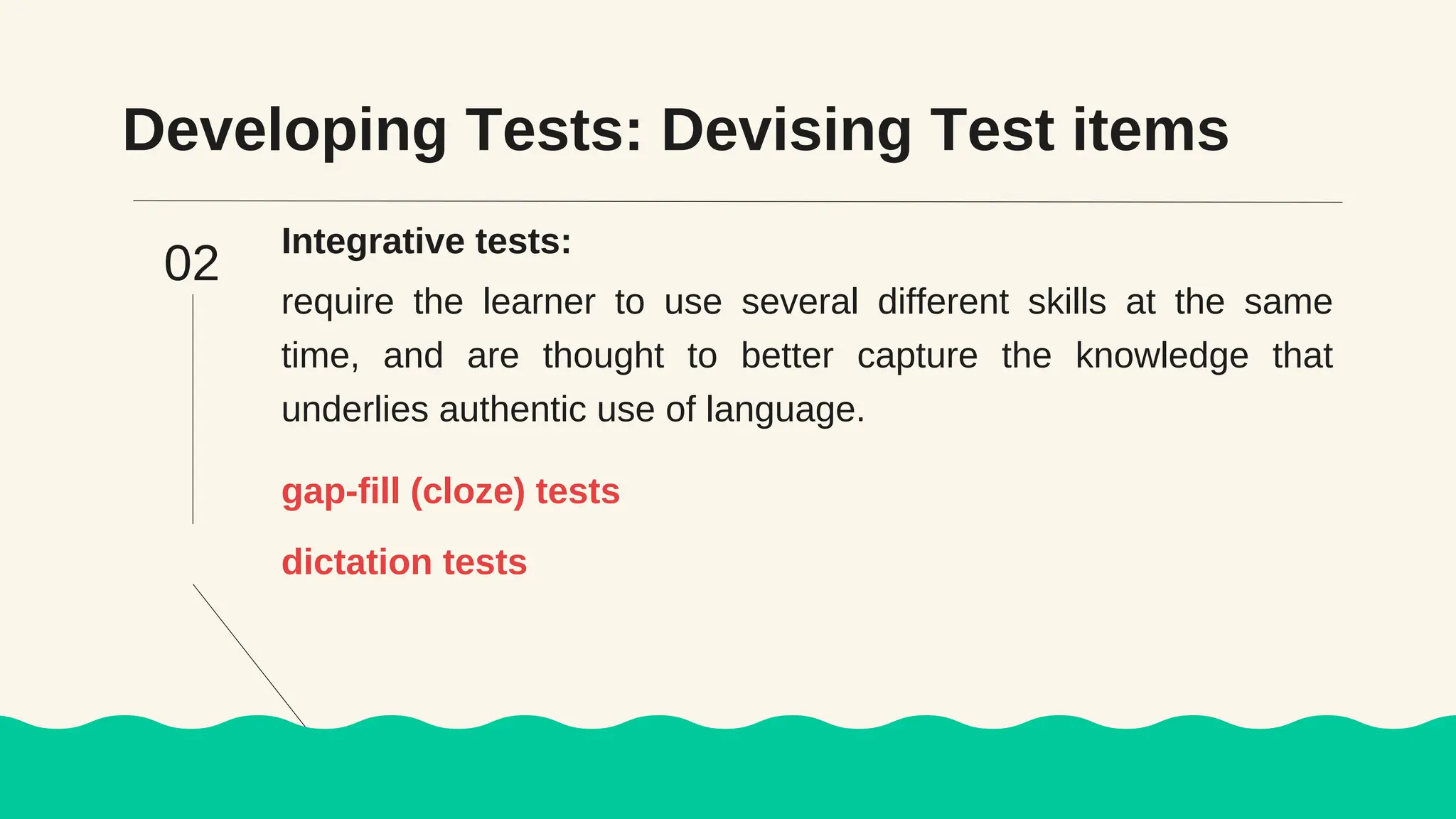

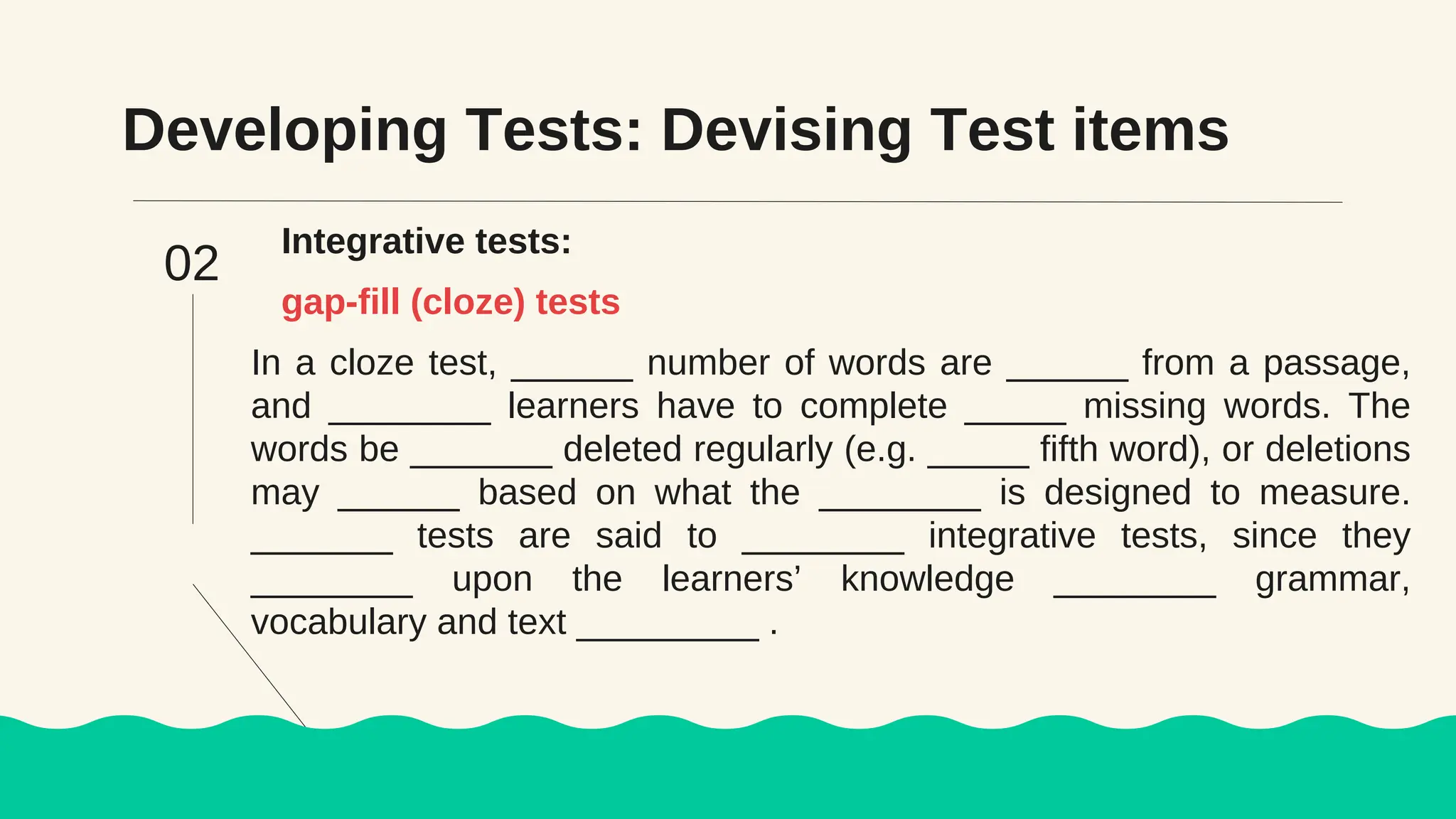

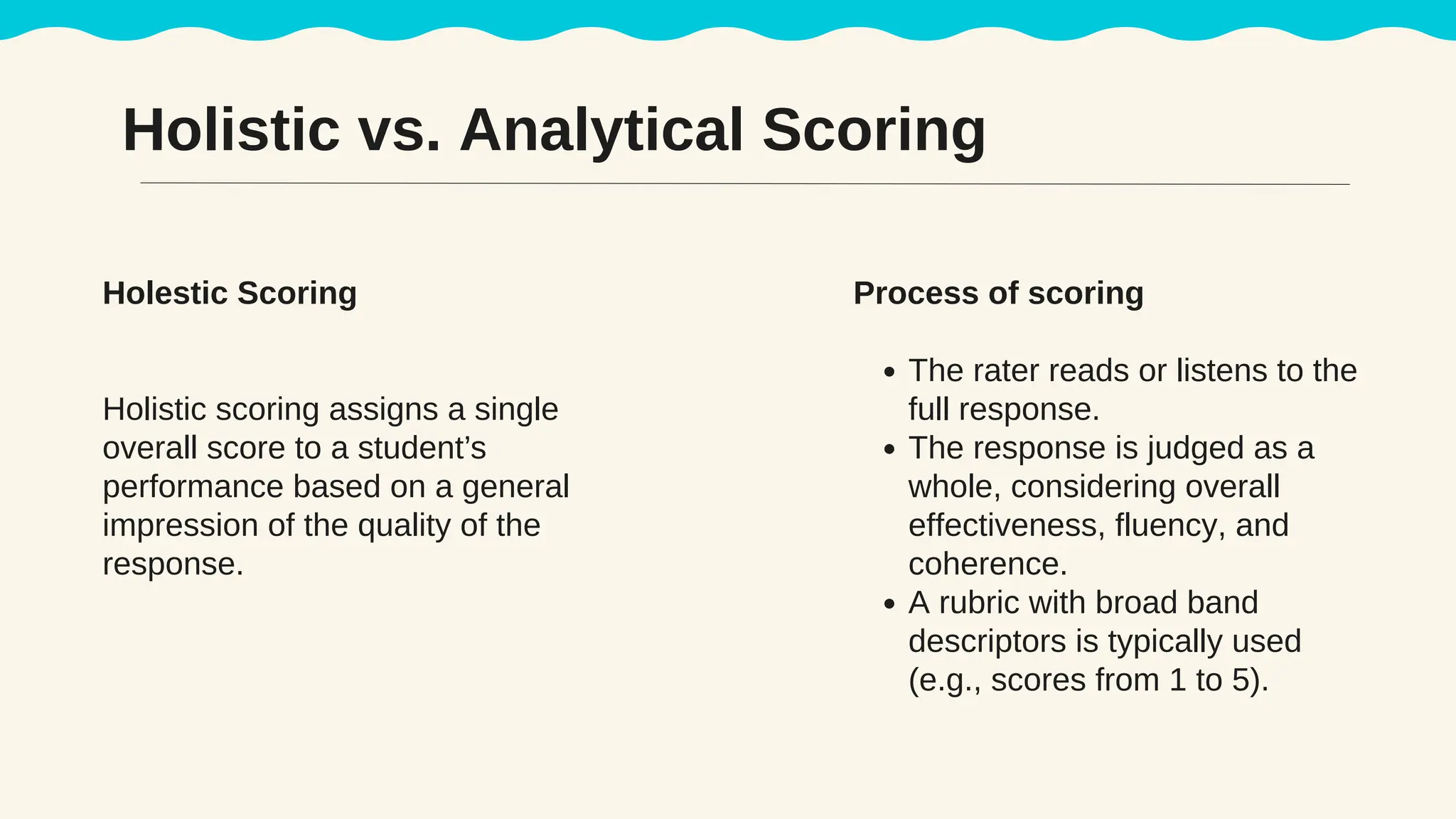

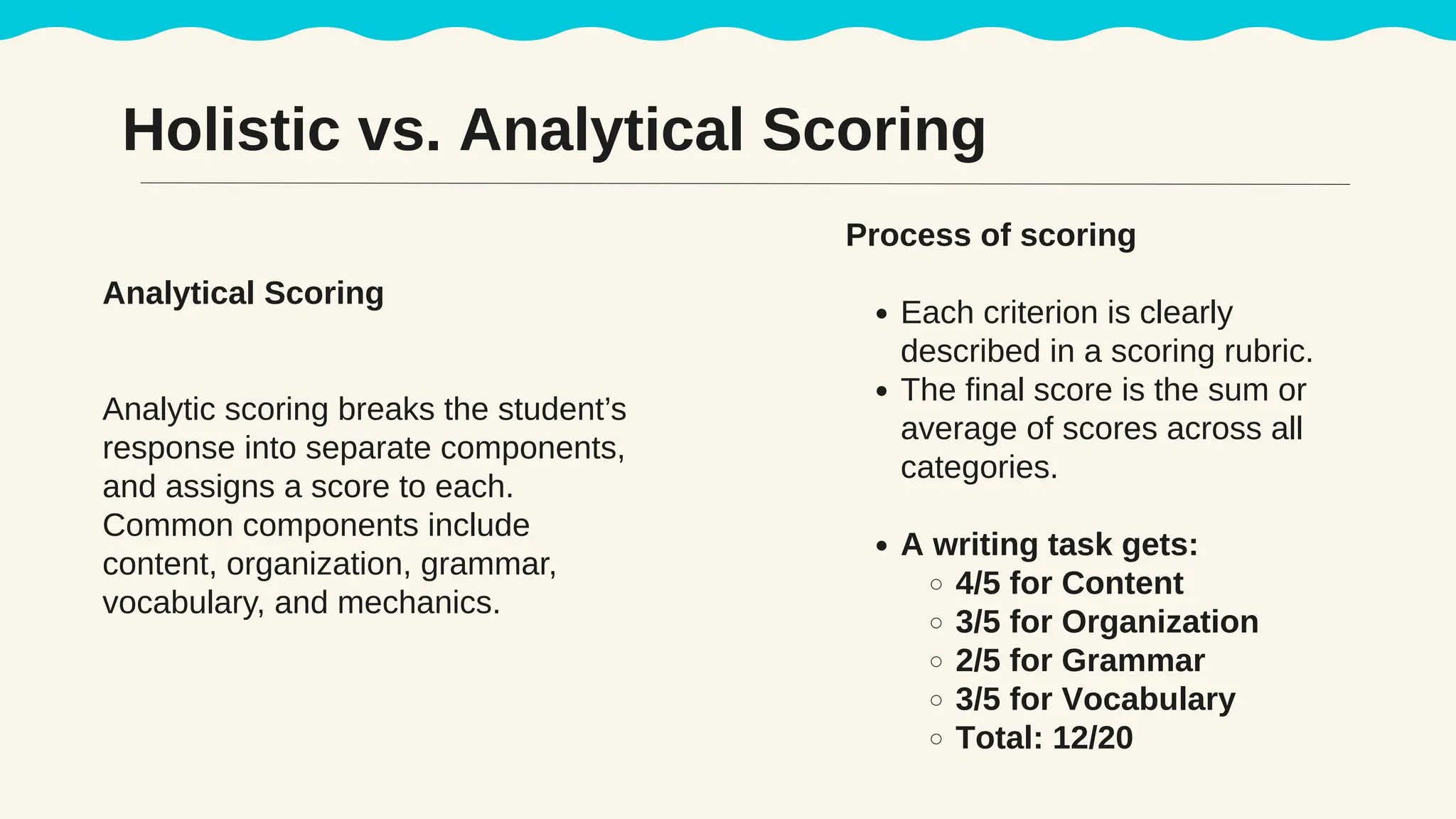

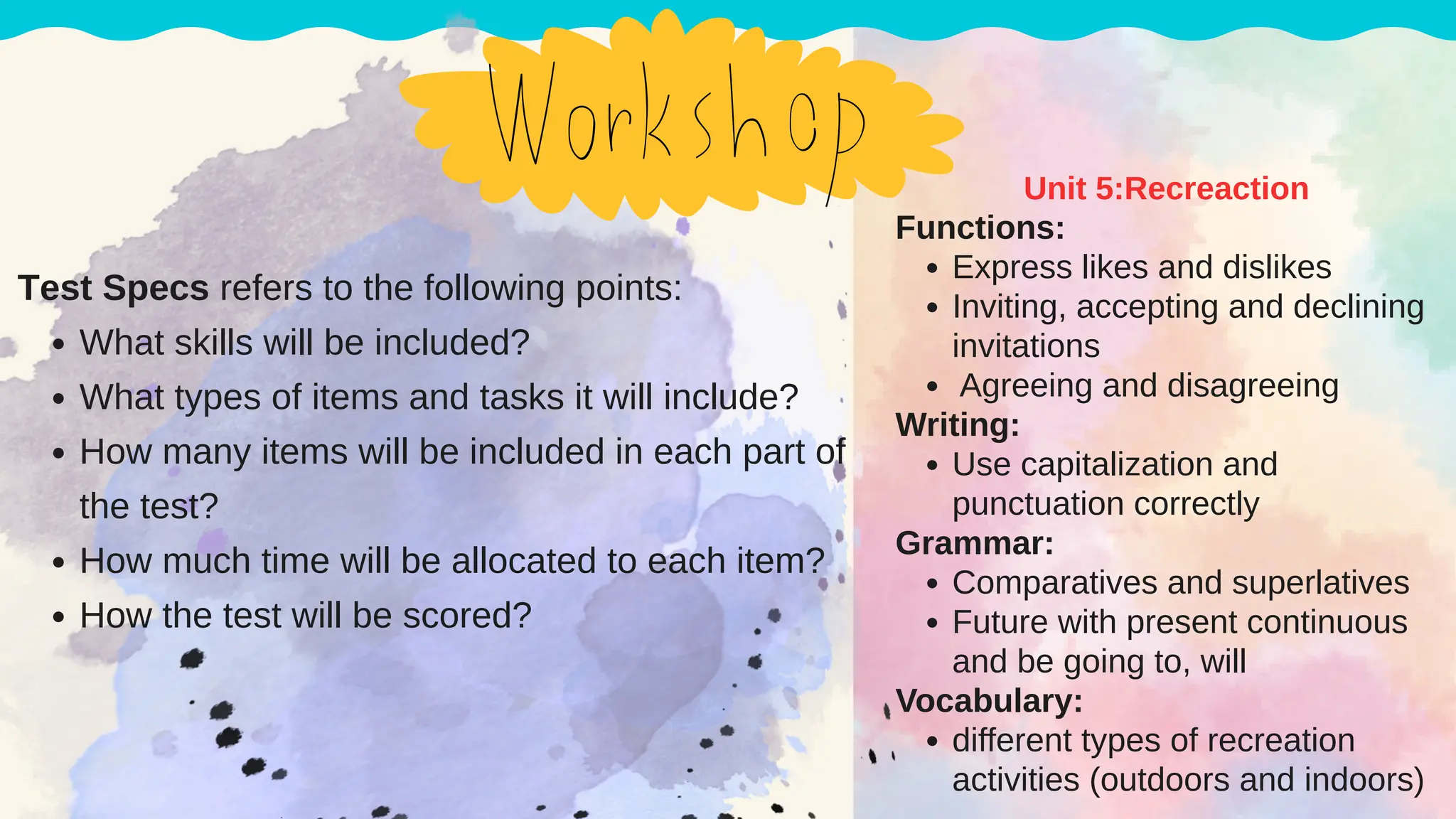

This presentation gives an overview of key concepts and best practices in test construction, administration, and scoring, particularly in English as a Foreign Language (EFL) and English as a Second Language (ESL) contexts. It covers the principles of effective test design, chiefly validity and reliability, and touches on practicality implicitly. The slides also outline the steps involved in developing fair and balanced assessments, offer tips for administering tests efficiently, and describe methods for scoring objectively and accurately. The resource is aimed at language teachers, trainee teachers, and researchers interested in language assessment and evaluation.