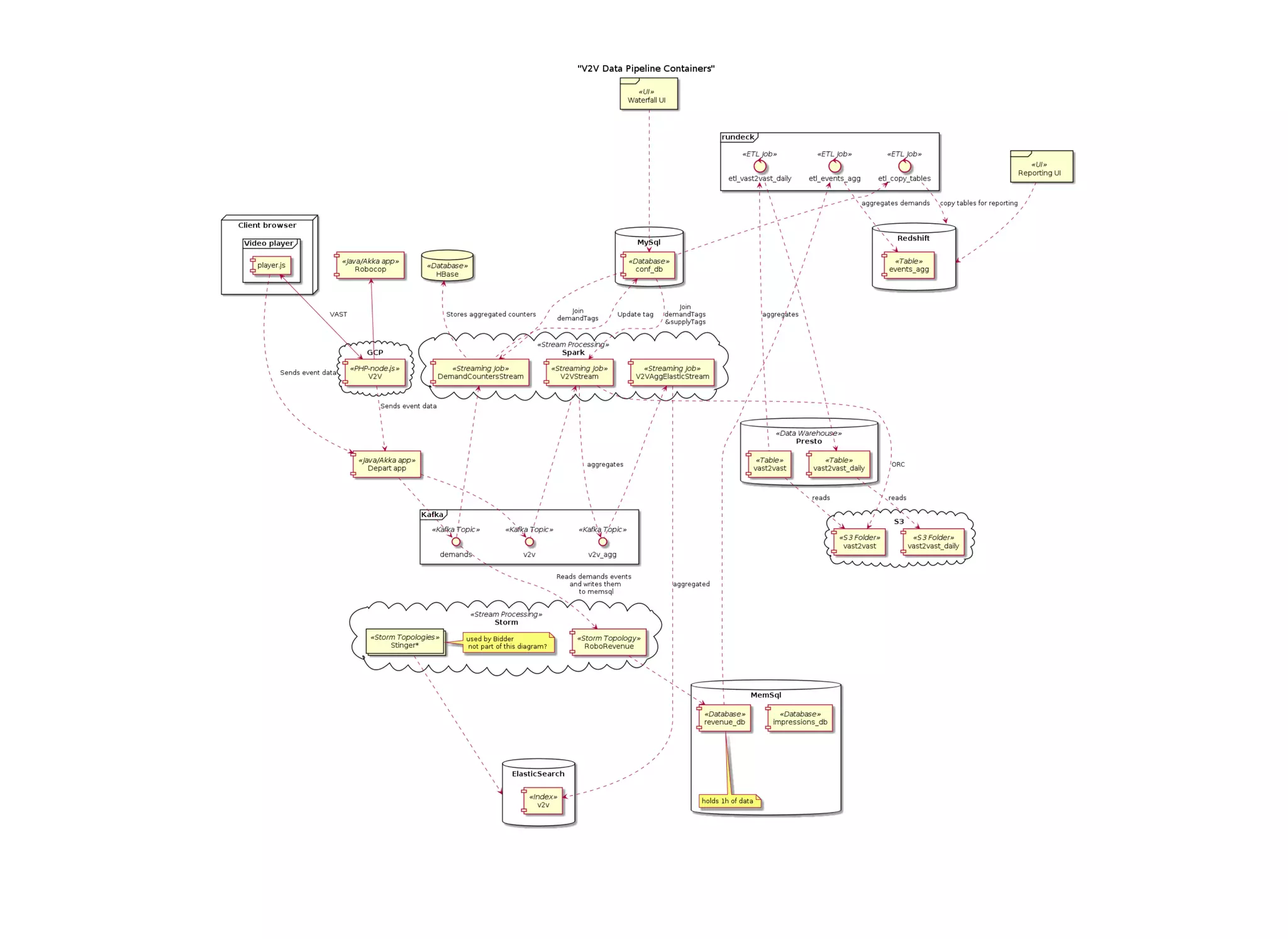

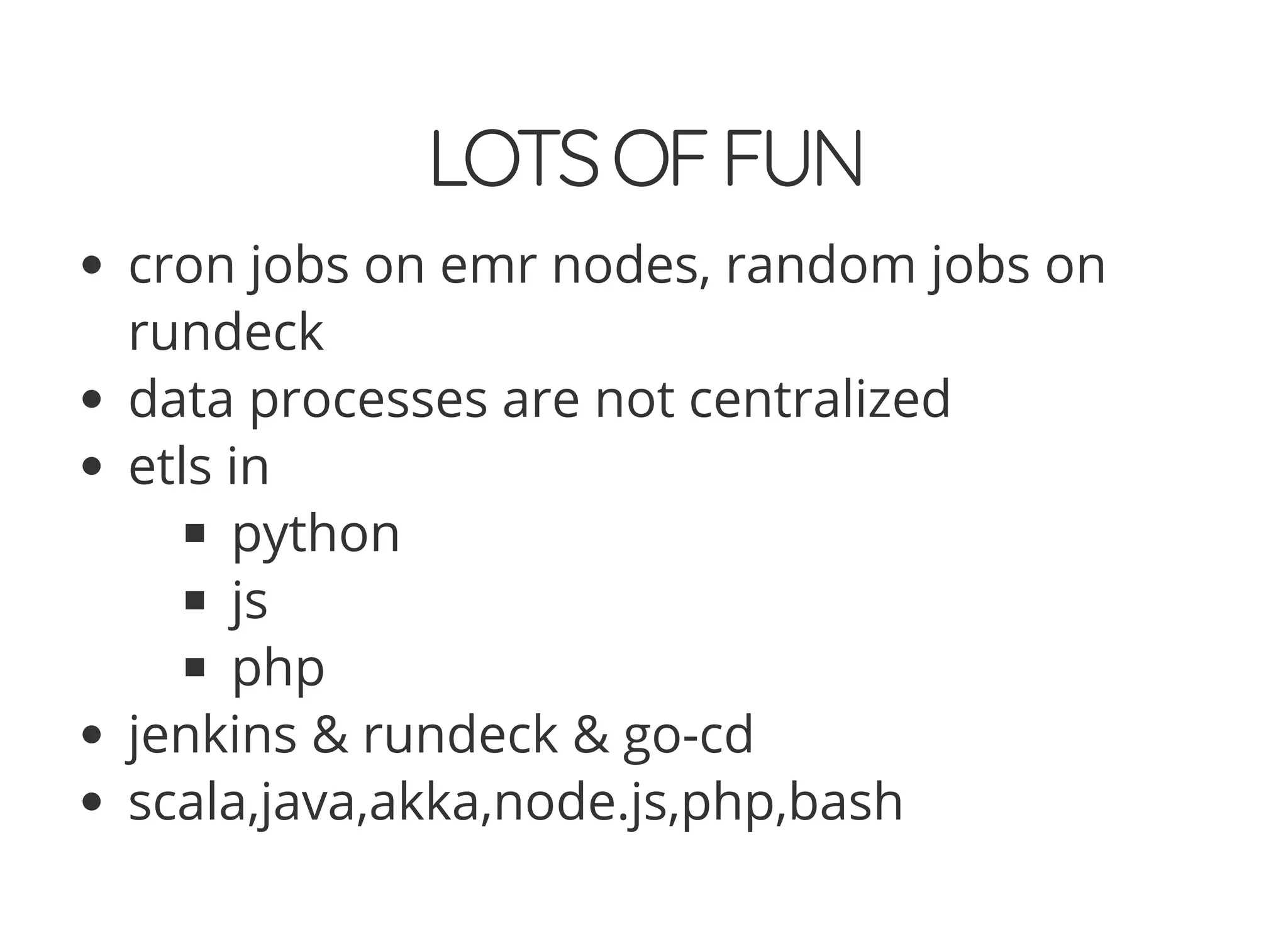

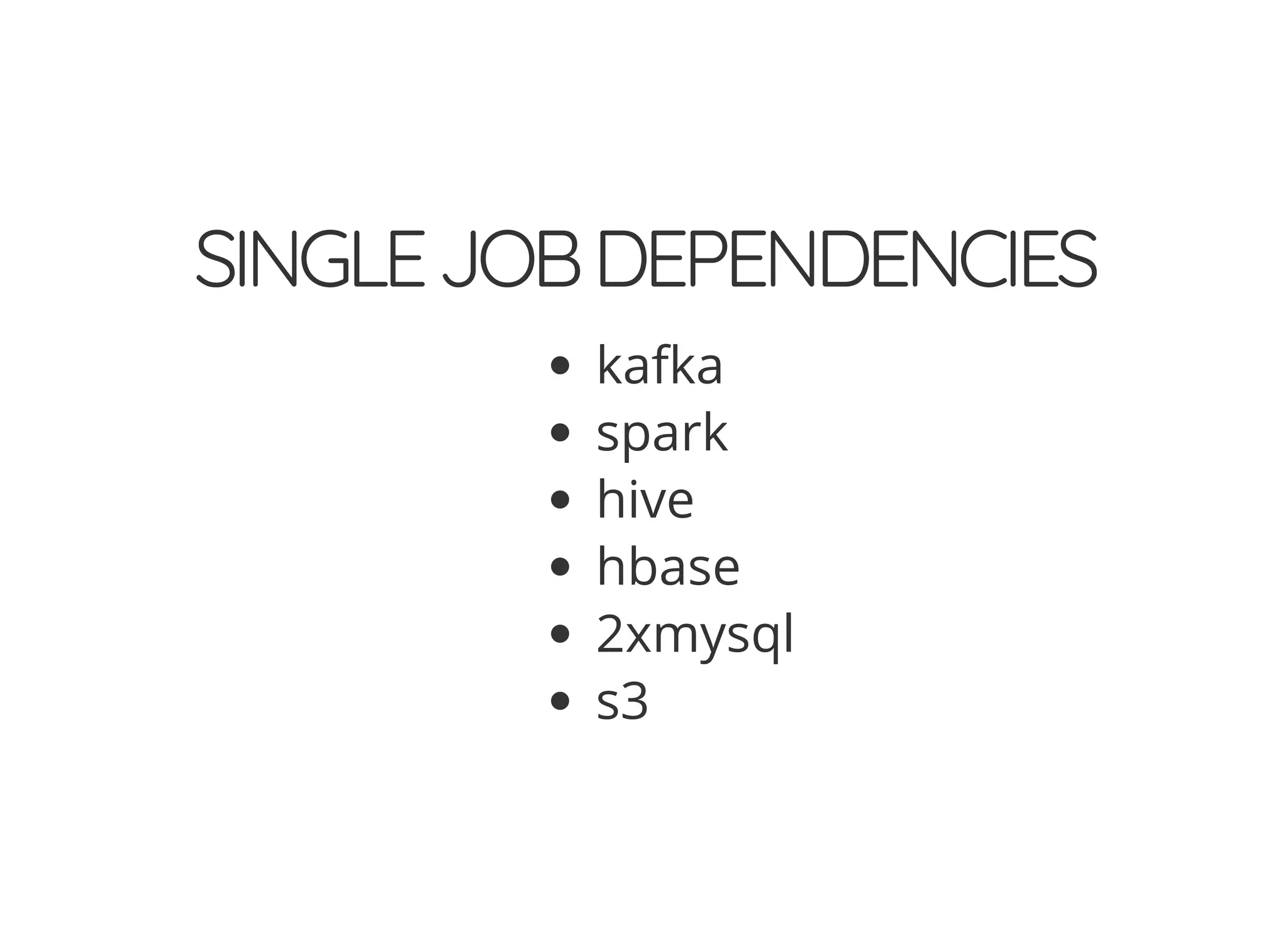

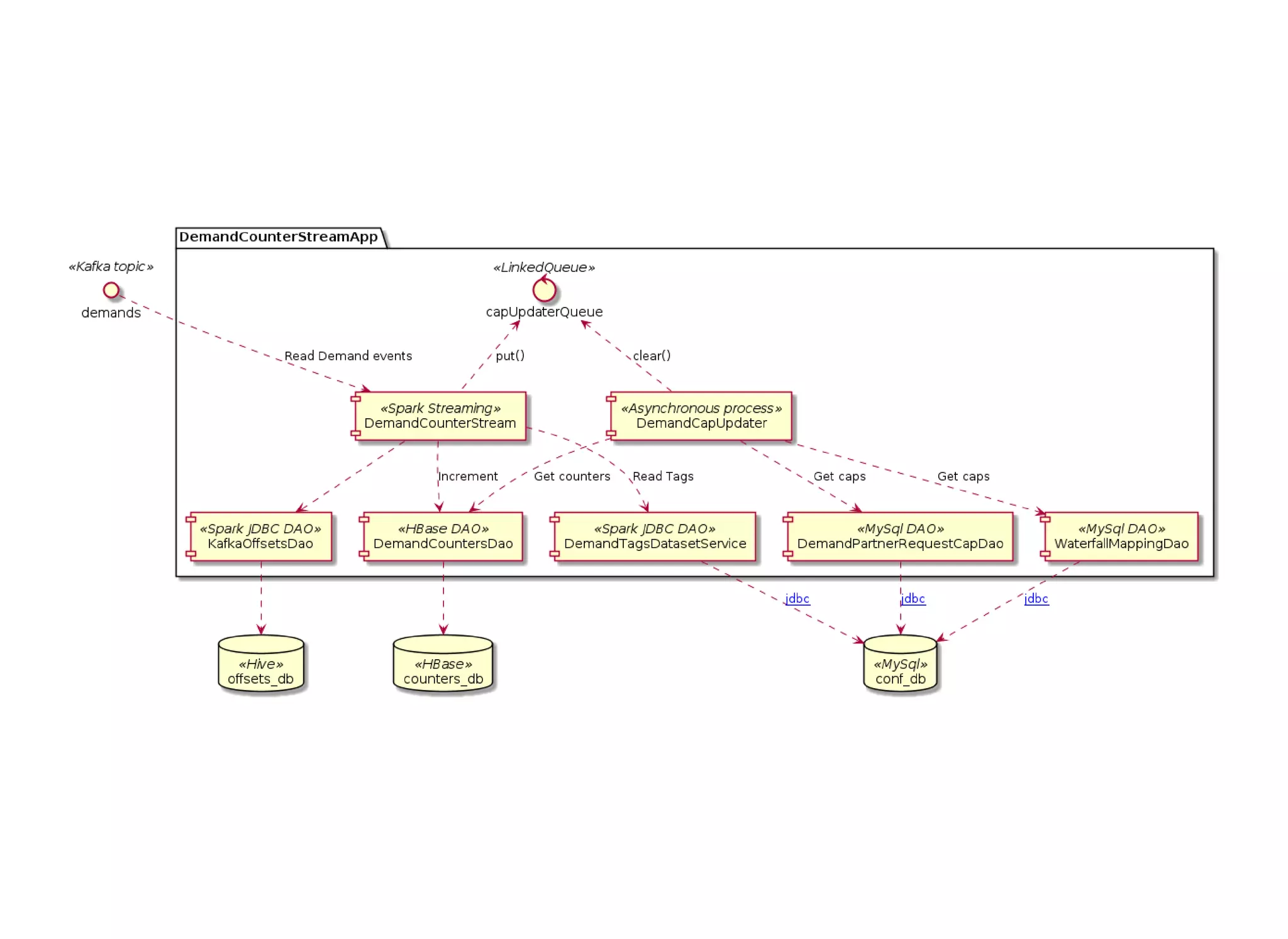

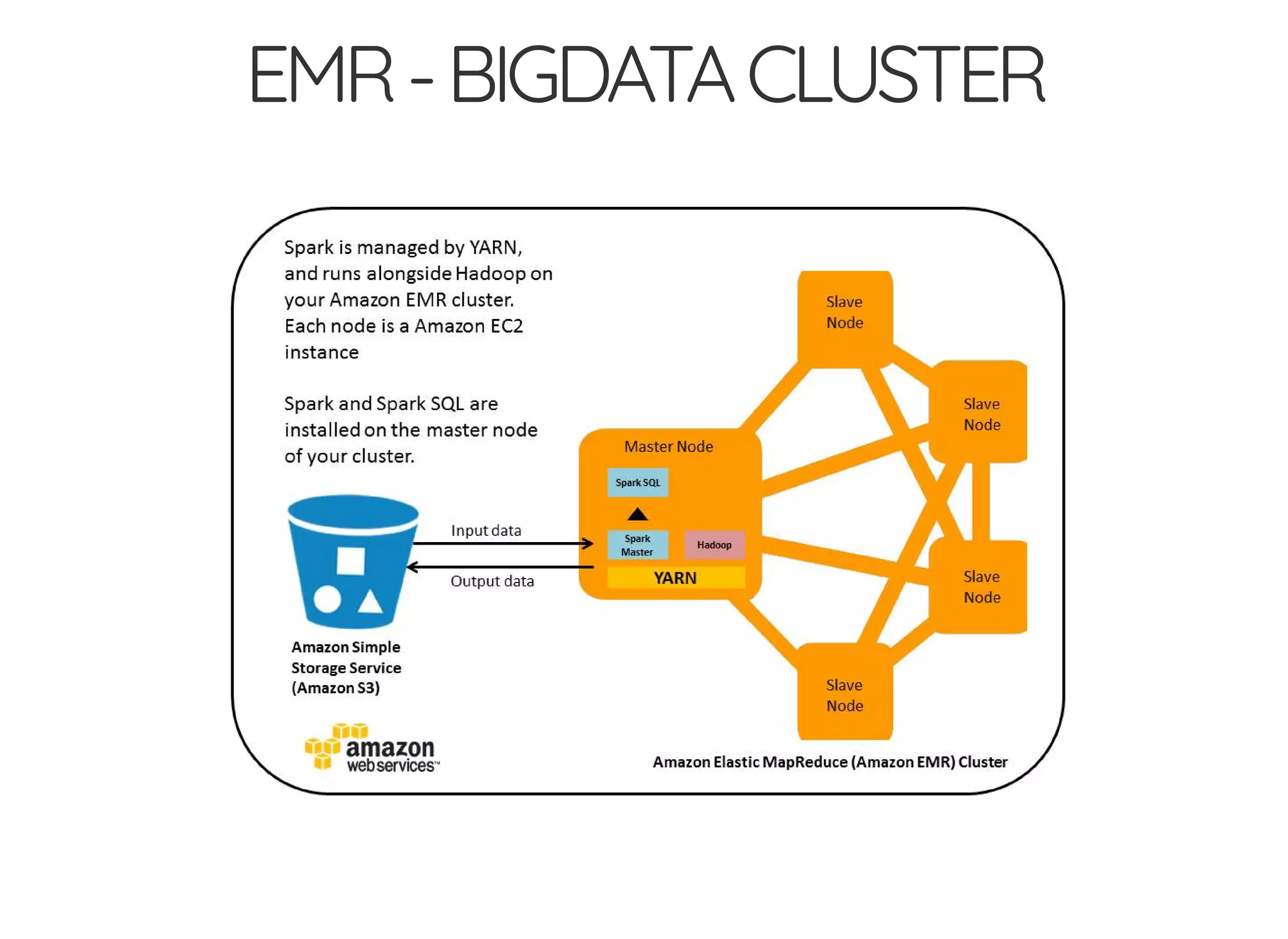

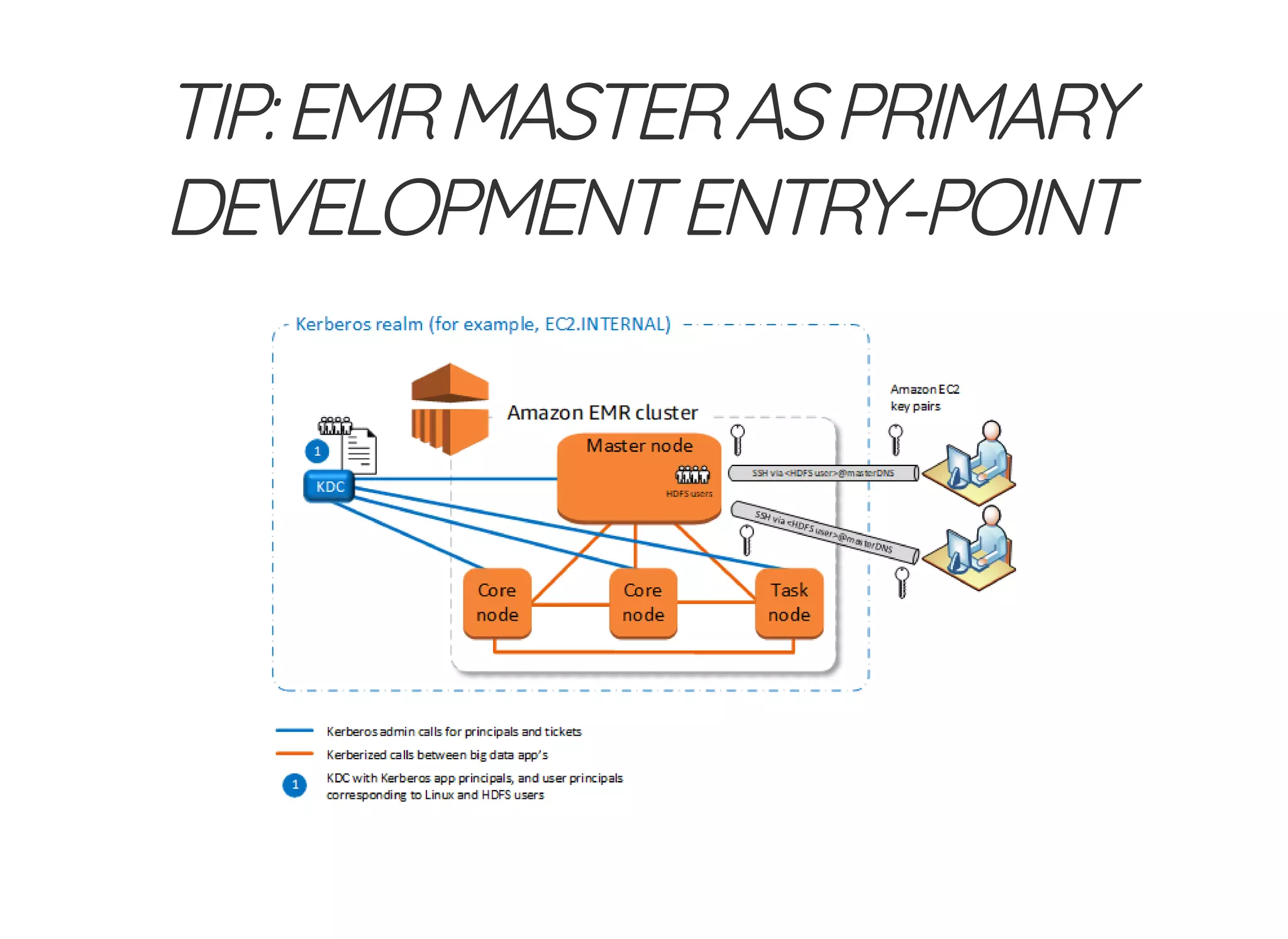

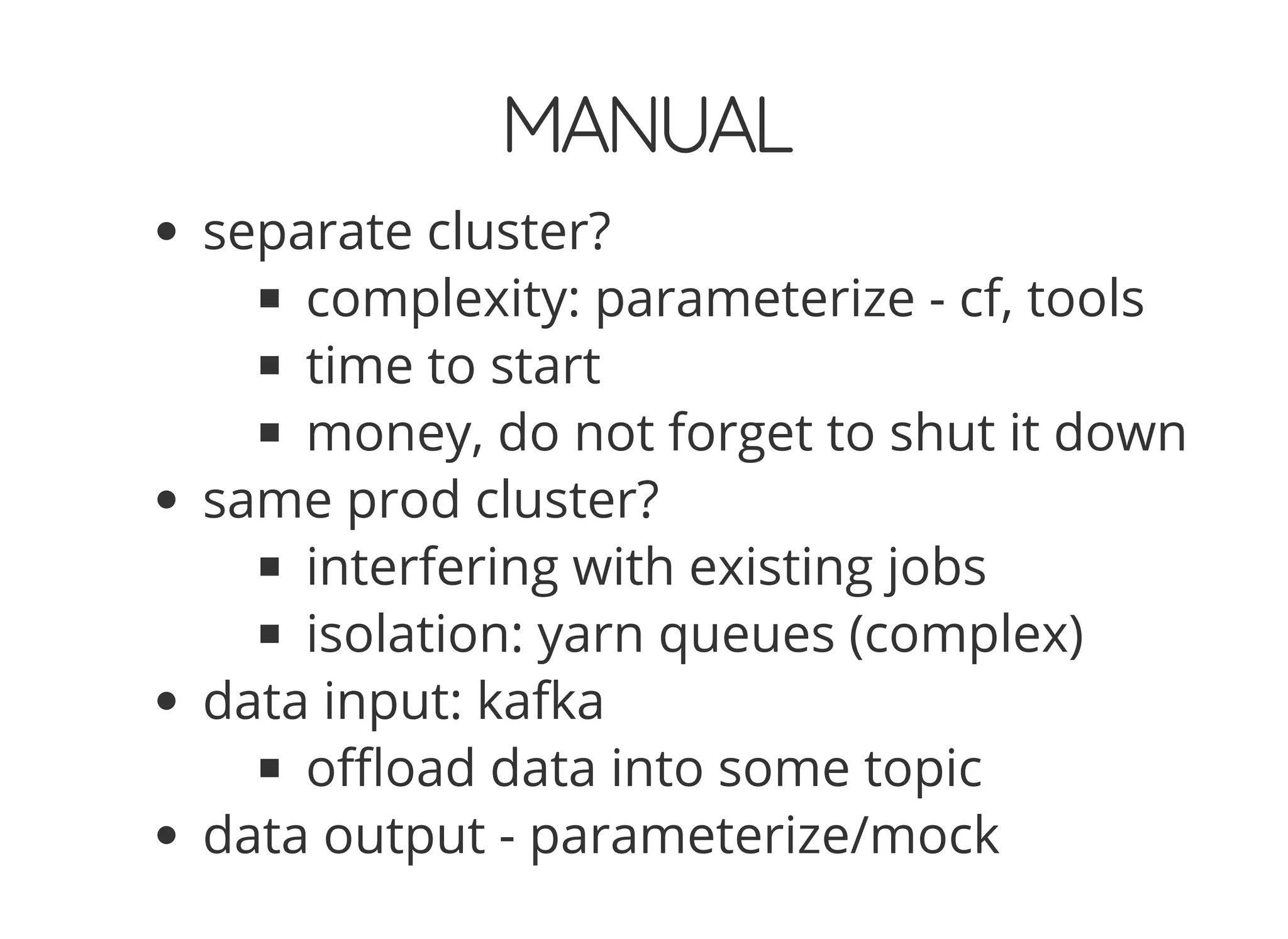

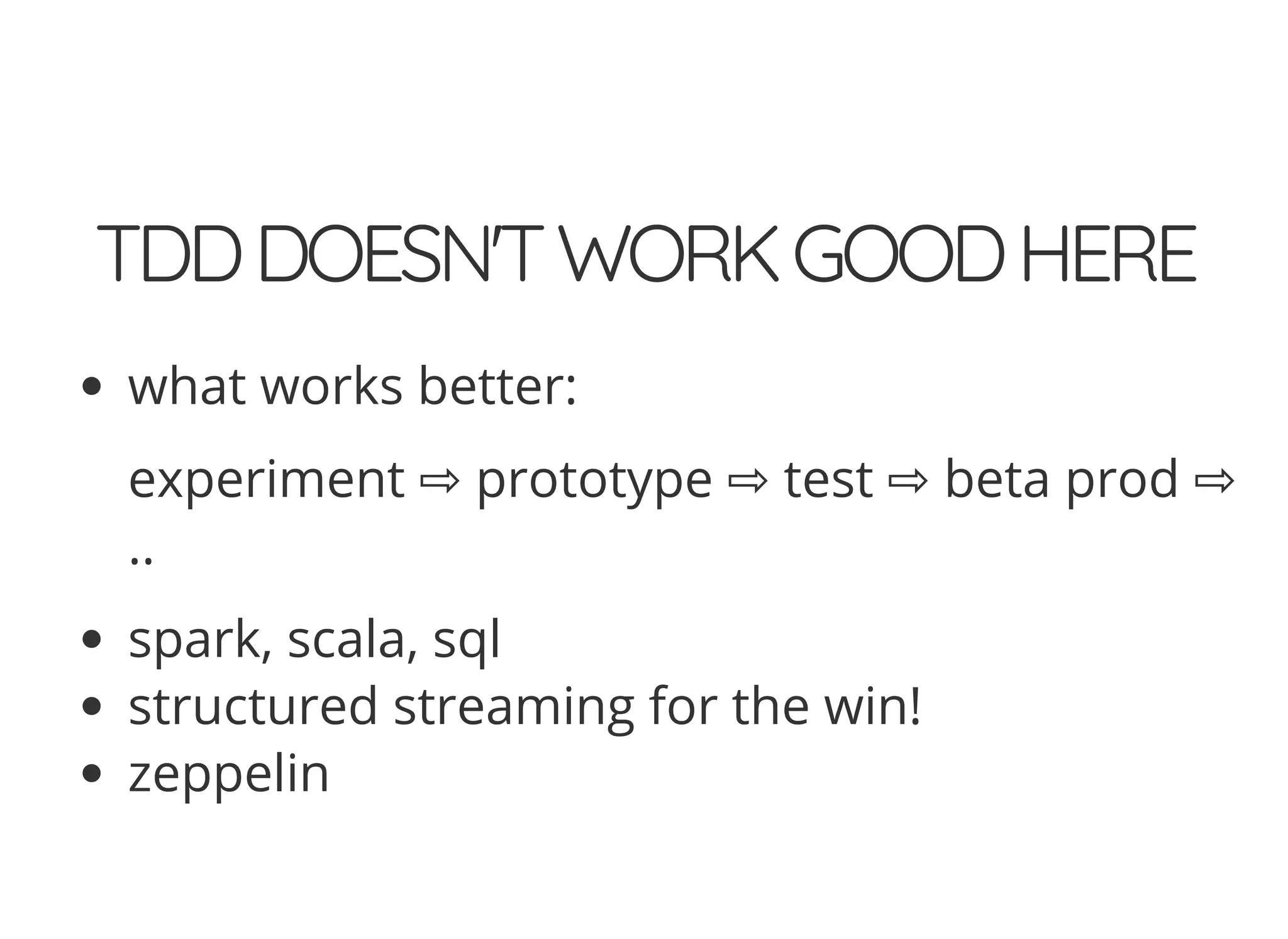

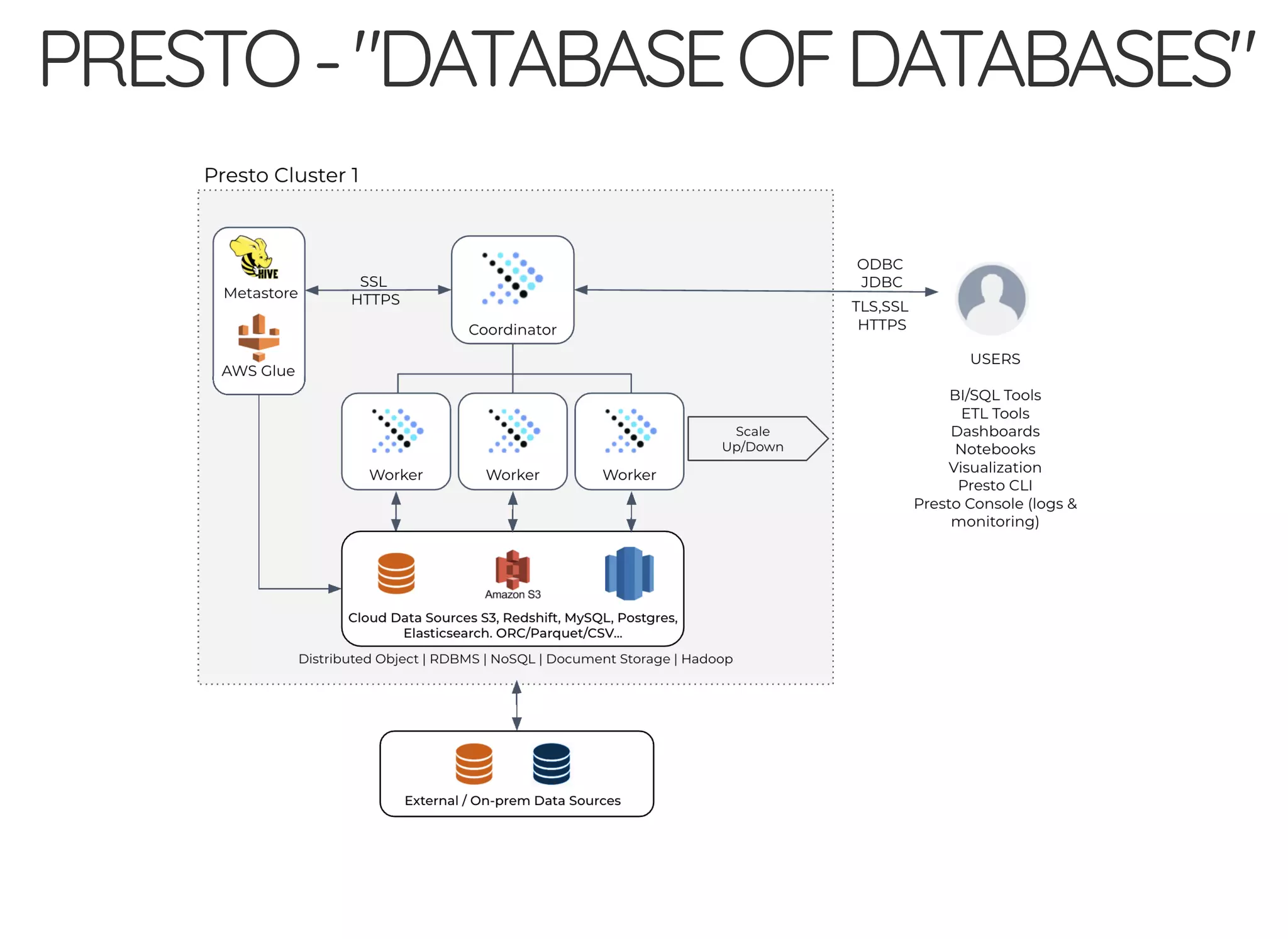

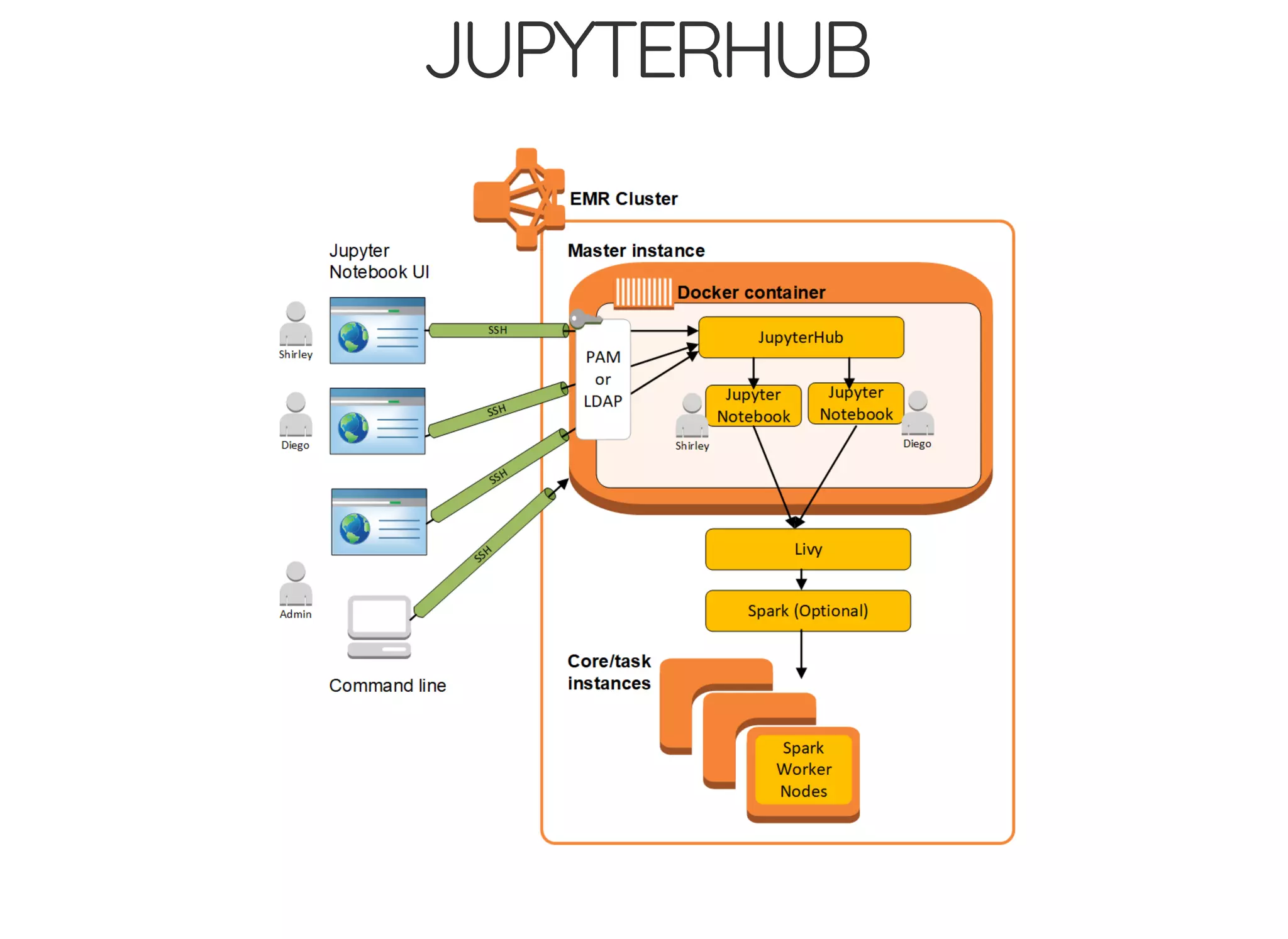

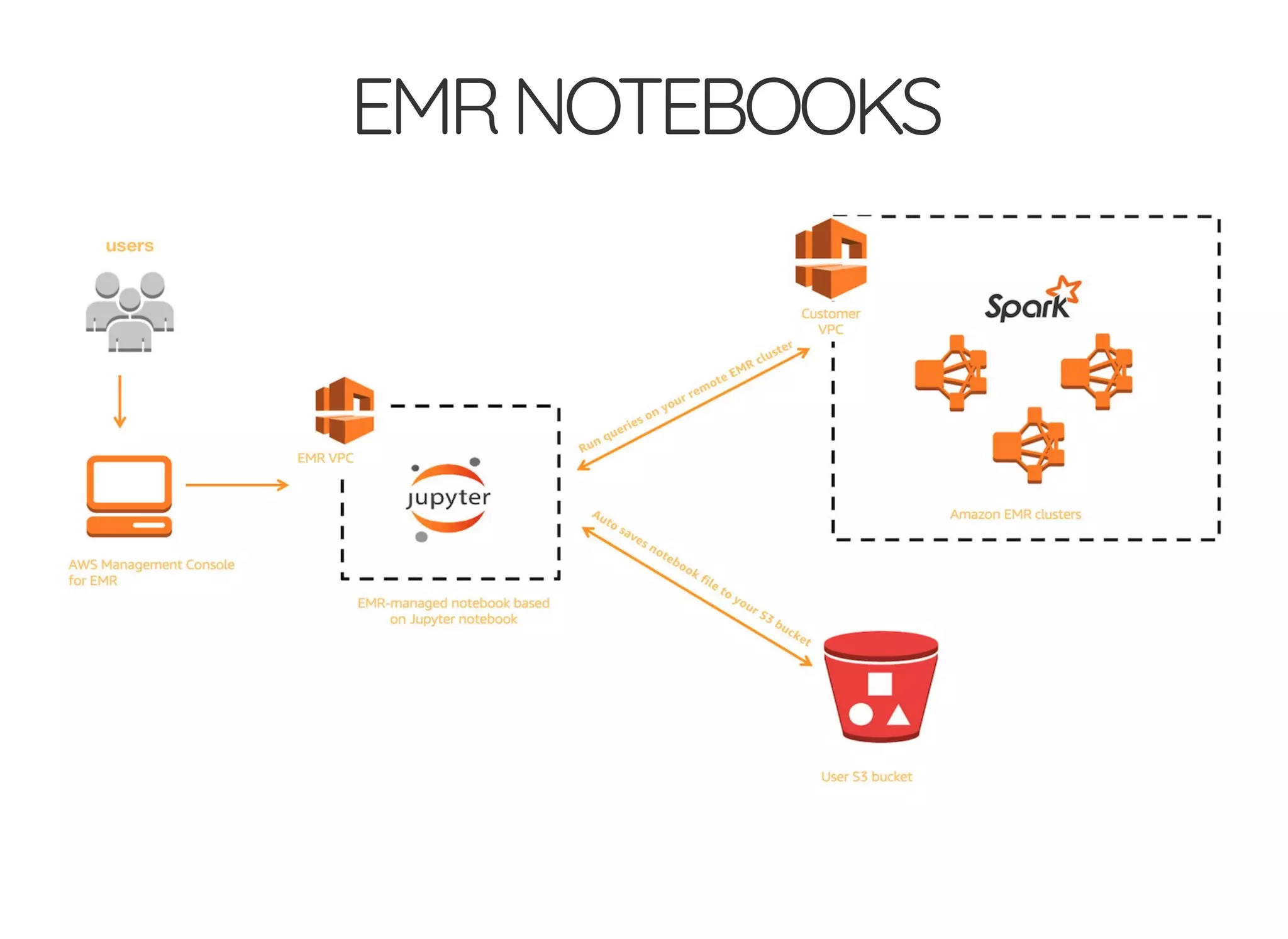

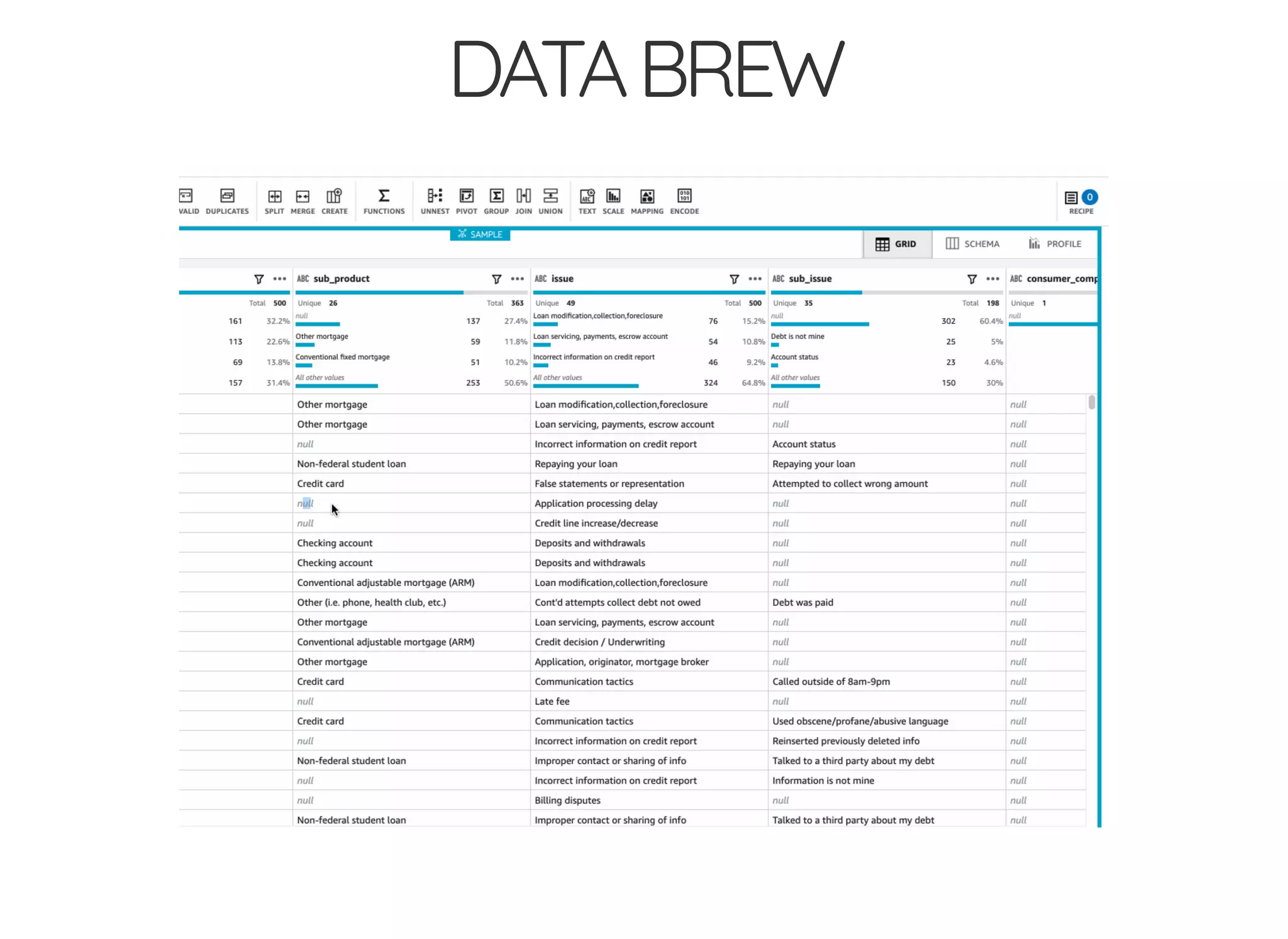

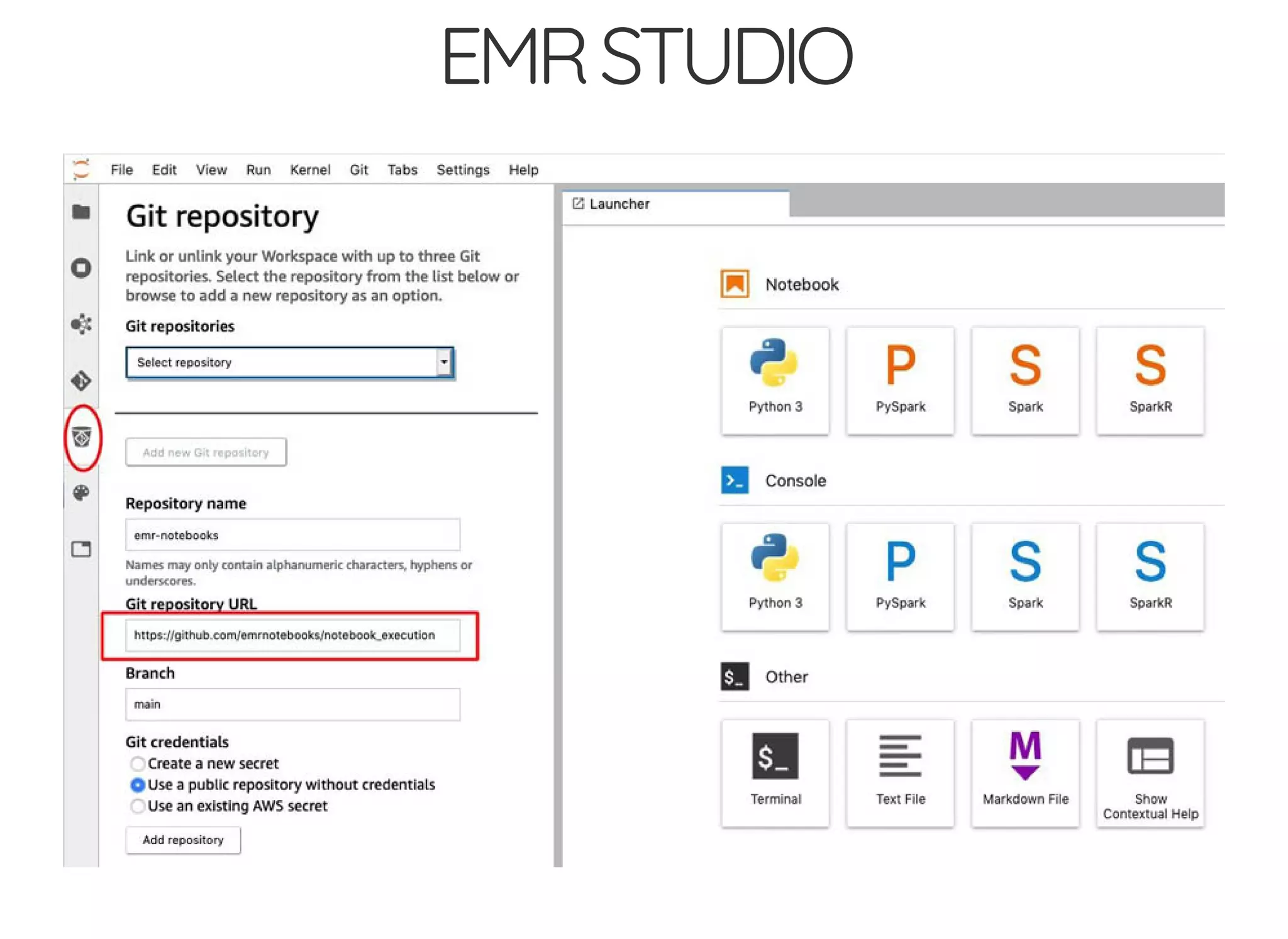

The document outlines the author's effort to make data processing workflows with Spark more efficient, highlighting key needs such as creating repeatable environments, automating deployment, and debugging. It details the complexities encountered with big data applications and real-time decision-making, and the need for effective data access and ad-hoc querying. The author emphasizes the importance of versioning, shared dependencies, and an interactive environment for both prototyping and production work in data analytics.