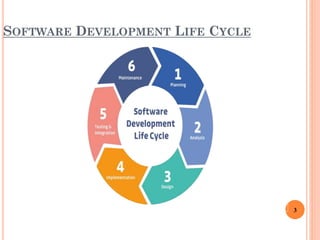

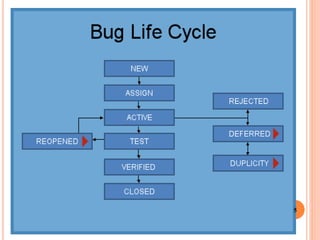

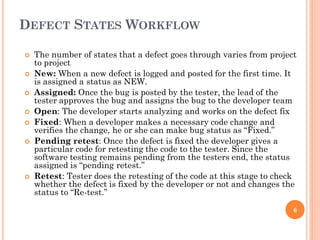

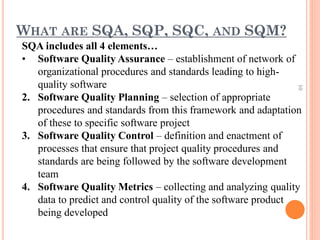

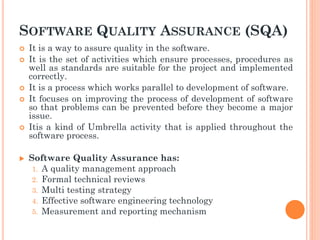

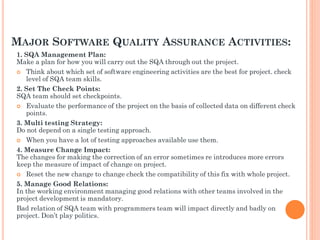

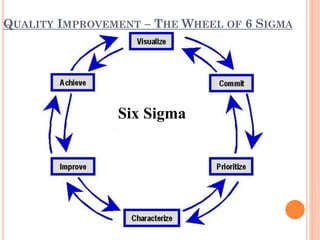

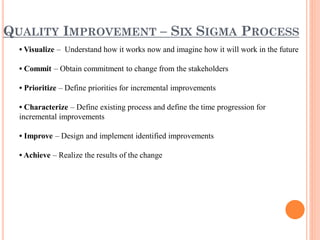

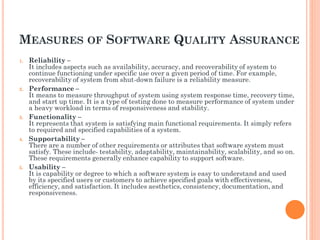

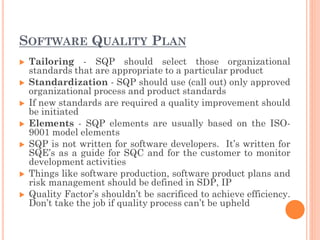

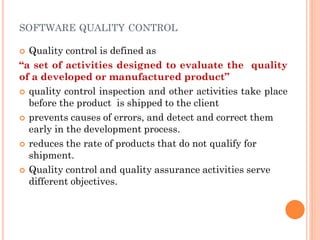

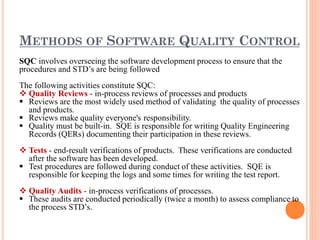

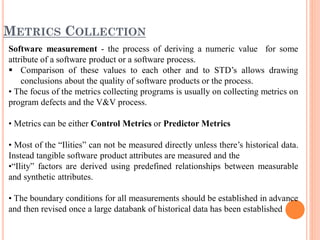

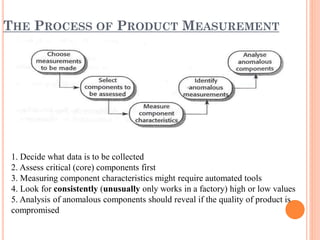

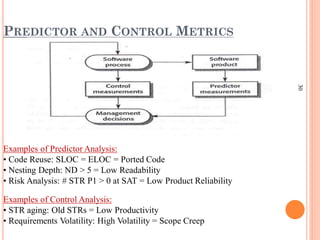

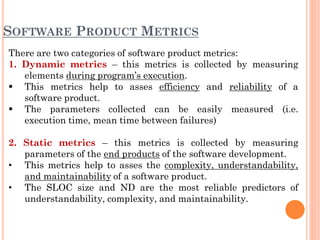

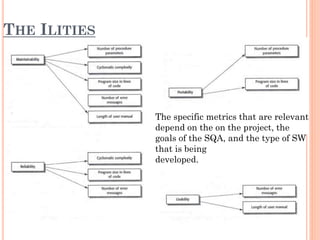

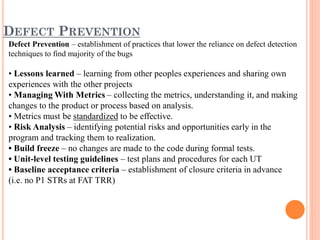

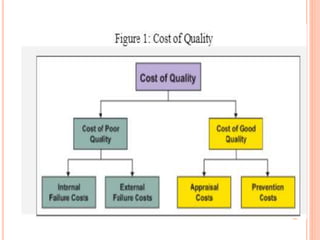

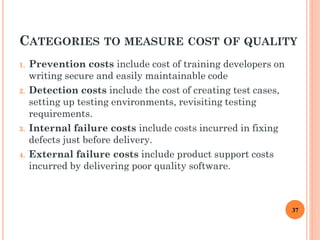

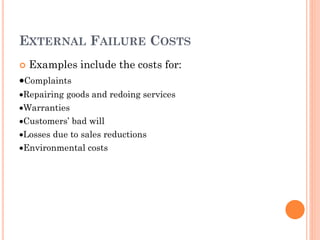

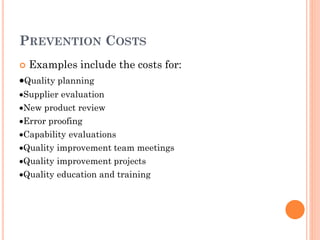

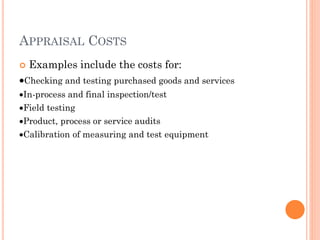

The document outlines the software quality assurance (SQA) process within the software development life cycle (SDLC) and stresses the importance of producing high-quality software efficiently. It covers the defect life cycle, methods of quality management, and components such as software quality planning, control, and metrics, along with the benefits and challenges of software quality. It also discusses the cost of quality, grouping costs into prevention, detection, and failure, to show the economic implications of maintaining software quality.
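
As a rough illustration of the cost-of-quality breakdown, the sketch below sums the three categories named in the summary and reports each one's share of the total. The class name `QualityCosts`, its field names, and the figures in the usage example are hypothetical and are not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class QualityCosts:
    """Hypothetical container for the three cost-of-quality categories."""
    prevention: float  # e.g. training, planning, reviews
    detection: float   # e.g. testing and inspection effort
    failure: float     # e.g. rework and fixing defects after they are found

    def total(self) -> float:
        # Cost of quality is taken here as the plain sum of the categories.
        return self.prevention + self.detection + self.failure

    def shares(self) -> dict[str, float]:
        # Fraction of the total spent in each category (all 0.0 if nothing was spent).
        total = self.total()
        parts = {
            "prevention": self.prevention,
            "detection": self.detection,
            "failure": self.failure,
        }
        if total == 0:
            return {name: 0.0 for name in parts}
        return {name: value / total for name, value in parts.items()}


if __name__ == "__main__":
    # Made-up figures, in arbitrary currency units, for illustration only.
    costs = QualityCosts(prevention=20.0, detection=30.0, failure=50.0)
    print(costs.total())
    print(costs.shares())
```

Tracking the shares over time is one way to see whether spending on prevention and detection is reducing the failure portion.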