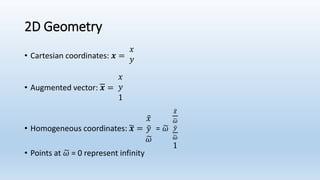

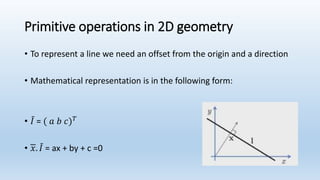

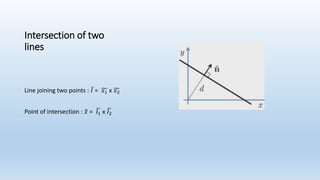

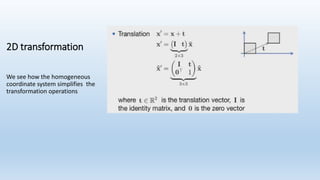

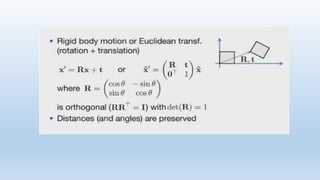

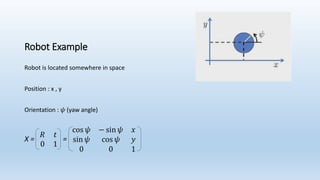

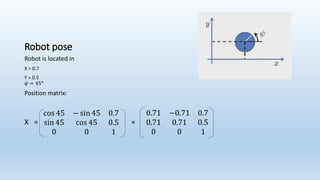

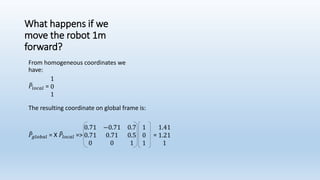

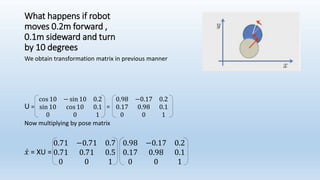

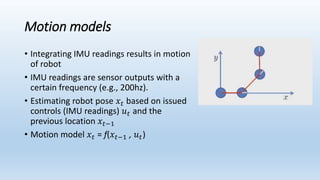

This document covers robot navigation and pose estimation. It opens with basic 2D geometry, including Cartesian and homogeneous coordinates, then covers primitive operations such as representing lines and finding the intersection of two lines. Next, it introduces 2D transformations and works through an example that computes a robot's pose and shows how that pose changes when the robot moves forward or rotates. Finally, it discusses estimating a robot's motion from odometry, control-based models, and velocity sensors by integrating its movements over time.
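The topics summarized above can be illustrated with a minimal Python sketch. It is not taken from the slides: the function names (`line_through`, `intersect`, `compose`, `integrate_velocity`), the pose convention `(x, y, theta)` with `theta` in radians, and the simple Euler integration step are all assumptions chosen for illustration.

```python
import math

def line_through(p, q):
    """Homogeneous line (a, b, c) with a*x + b*y + c = 0 through two Cartesian points.

    Computed as the cross product of the homogeneous points (x, y, 1).
    """
    (x1, y1), (x2, y2) = p, q
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)

def intersect(l1, l2):
    """Intersection of two homogeneous lines, or None if they are (nearly) parallel.

    The cross product of the lines gives a homogeneous point (x, y, w);
    dividing by w converts it back to Cartesian coordinates.
    """
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    x = b1 * c2 - b2 * c1
    y = a2 * c1 - a1 * c2
    w = a1 * b2 - a2 * b1
    if abs(w) < 1e-12:  # w == 0 means the point lies at infinity
        return None
    return (x / w, y / w)

def compose(pose, delta):
    """Apply a motion expressed in the robot frame to a pose in the world frame.

    pose  = (x, y, theta) in the world frame (assumed convention)
    delta = (dx, dy, dtheta) in the robot's own frame
    """
    x, y, th = pose
    dx, dy, dth = delta
    new_th = th + dth
    # Wrap the heading into (-pi, pi]
    new_th = math.atan2(math.sin(new_th), math.cos(new_th))
    return (
        x + math.cos(th) * dx - math.sin(th) * dy,
        y + math.sin(th) * dx + math.cos(th) * dy,
        new_th,
    )

def integrate_velocity(pose, v, omega, dt, steps):
    """Euler-integrate a forward speed v and turn rate omega over `steps` intervals of dt."""
    for _ in range(steps):
        pose = compose(pose, (v * dt, 0.0, omega * dt))
    return pose

if __name__ == "__main__":
    diagonal = line_through((0.0, 0.0), (1.0, 1.0))
    anti_diagonal = line_through((0.0, 2.0), (2.0, 0.0))
    print(intersect(diagonal, anti_diagonal))

    start = (1.0, 2.0, math.pi / 2)          # robot facing along +y
    print(compose(start, (1.0, 0.0, 0.0)))   # drive forward one unit
    print(integrate_velocity((0.0, 0.0, 0.0), v=1.0, omega=0.0, dt=0.1, steps=10))
```

Representing lines in homogeneous form keeps the intersection a single cross product and makes the parallel case explicit (the third component is zero). The Euler step in `integrate_velocity` is the simplest choice and accumulates error as `dt` grows; it stands in for whichever motion model the slides actually use.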