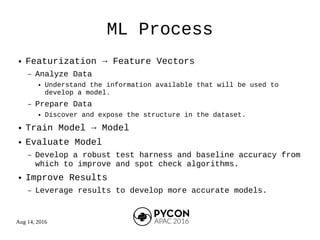

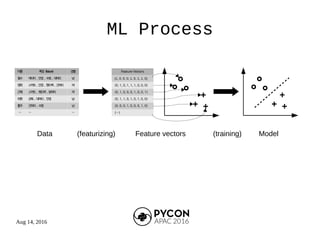

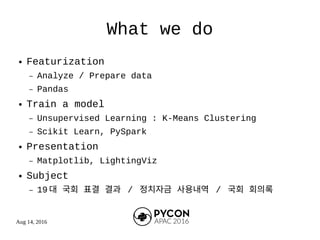

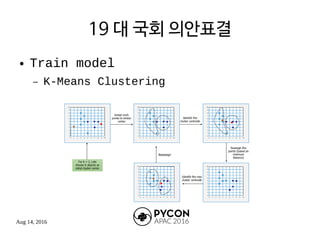

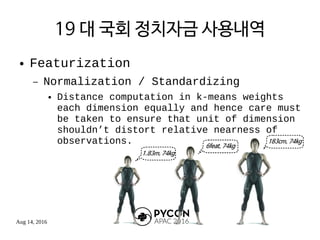

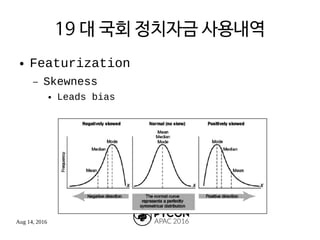

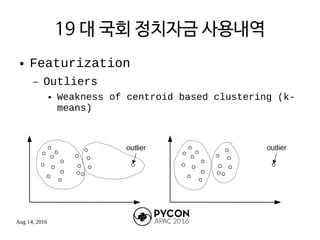

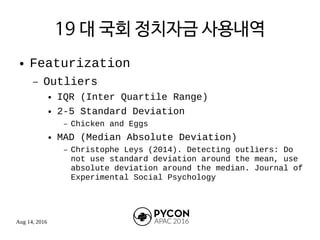

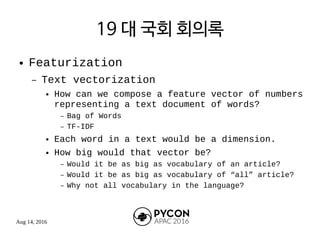

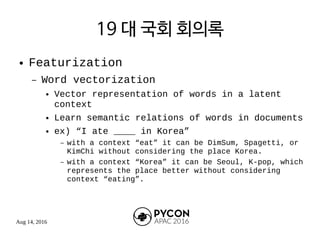

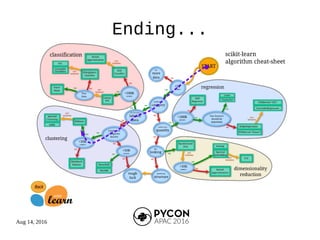

This talk outlines the machine learning process and introduces the speaker, a research engineer at a fintech startup who works on fraud detection with machine learning. It walks through three steps: featurization, model training, and model evaluation. It illustrates them with two projects, one on the voting results of the 19th National Assembly and one on the use of political funds. Throughout, the speaker stresses careful data handling and sound model training, and points to the relevant code repositories.
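The three steps named above (featurization, model training, model evaluation) can be sketched in a few lines. This is a minimal illustration only, not the speaker's code: the transaction records, the field names `amount`, `hour`, and `fraud`, the two features, and the nearest-centroid classifier are all assumptions chosen to keep the example self-contained.

```python
import math

# Hypothetical toy transactions; every field name and value is illustrative.
TRAIN = [
    {"amount": 20, "hour": 14, "fraud": 0},
    {"amount": 35, "hour": 10, "fraud": 0},
    {"amount": 50, "hour": 18, "fraud": 0},
    {"amount": 5000, "hour": 3, "fraud": 1},
    {"amount": 7500, "hour": 2, "fraud": 1},
    {"amount": 9000, "hour": 4, "fraud": 1},
]
TEST = [
    {"amount": 40, "hour": 12, "fraud": 0},
    {"amount": 8000, "hour": 1, "fraud": 1},
    {"amount": 30, "hour": 16, "fraud": 0},
    {"amount": 6000, "hour": 3, "fraud": 1},
]


def featurize(record):
    """Step 1: turn a raw record into a numeric feature vector."""
    # Log-scaled amount plus a flag for night-time activity (before 06:00).
    return [math.log1p(record["amount"]), 1.0 if record["hour"] < 6 else 0.0]


def train(X, y):
    """Step 2: fit a nearest-centroid model (mean feature vector per label)."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(model, x):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(
        model,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(x, model[label])),
    )


def evaluate(model, X, y, positive=1):
    """Step 3: precision and recall for the positive (fraud) class."""
    preds = [predict(model, x) for x in X]
    tp = sum(p == positive and t == positive for p, t in zip(preds, y))
    fp = sum(p == positive and t != positive for p, t in zip(preds, y))
    fn = sum(p != positive and t == positive for p, t in zip(preds, y))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Features and labels are built separately for the training and held-out sets.
X_train = [featurize(r) for r in TRAIN]
y_train = [r["fraud"] for r in TRAIN]
X_test = [featurize(r) for r in TEST]
y_test = [r["fraud"] for r in TEST]

model = train(X_train, y_train)
precision, recall = evaluate(model, X_test, y_test)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Evaluating on records that were not used for training is the point of the last step; precision and recall are reported here rather than accuracy because fraud labels are typically rare, so accuracy alone can look good for a model that never flags anything.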