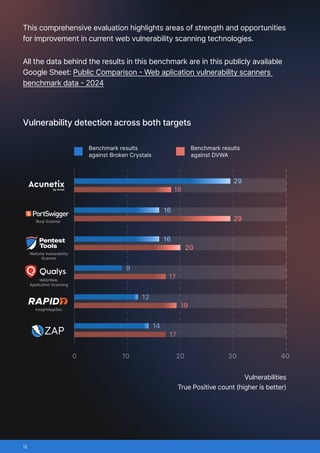

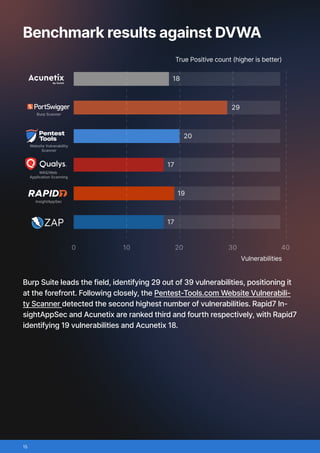

This benchmark compared six widely used web application vulnerability scanners: Acunetix, Burp Scanner, Pentest-Tools.com Website Vulnerability Scanner, Qualys, Rapid7 InsightAppSec, and ZAP (Zed Attack Proxy).

The scanners were tested against two deliberately vulnerable applications:

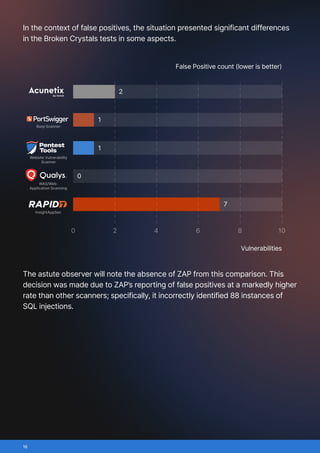

- Broken Crystals, chosen for its modern tech stack (React frontend, REST and GraphQL APIs) and wide variety of vulnerabilities, from SQL injection to JWT flaws.

- DVWA (Damn Vulnerable Web Application), an industry staple that mirrors traditional multi-page web apps still common across the internet.

Three evaluation metrics were used:

- True positives: vulnerabilities correctly identified

- False positives: incorrect vulnerability reports

- False negatives: missed real vulnerabilities
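The three counts above are commonly combined into precision (the share of reported findings that are real) and recall (the share of real vulnerabilities that were found). The sketch below shows that arithmetic. It assumes the benchmark's raw counts could be summarized this way, which the source does not state; the `ScanResult` class and the numbers in it are hypothetical and are not benchmark results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanResult:
    """Raw counts for one scanner against one target (hypothetical structure)."""

    true_positives: int   # vulnerabilities correctly identified
    false_positives: int  # incorrect vulnerability reports
    false_negatives: int  # real vulnerabilities the scanner missed

    def precision(self) -> float:
        """Fraction of reported findings that are real vulnerabilities."""
        reported = self.true_positives + self.false_positives
        return self.true_positives / reported if reported else 0.0

    def recall(self) -> float:
        """Fraction of real vulnerabilities that the scanner reported."""
        real = self.true_positives + self.false_negatives
        return self.true_positives / real if real else 0.0


# Made-up counts for illustration only; NOT figures from the benchmark.
example = ScanResult(true_positives=8, false_positives=2, false_negatives=4)
print(f"precision={example.precision():.2f} recall={example.recall():.2f}")
```

A scanner with few false positives scores high on precision even if it misses many issues, so the two ratios are best read together rather than in isolation.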

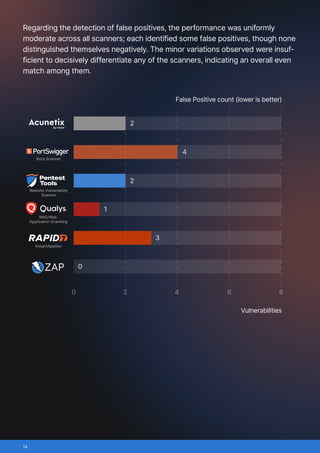

The benchmark indicates that most scanners are broadly effective, but results vary with the type of application being scanned. Commercial tools generally delivered more consistent accuracy, while strong open-source options like ZAP remained competitive in certain cases. Pentest-Tools.com distinguished itself with reliable results and a lower false-positive rate, making it a practical choice for security teams that want to balance accuracy and efficiency.