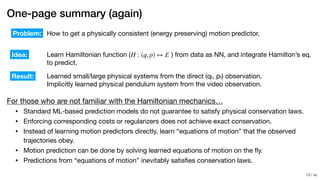

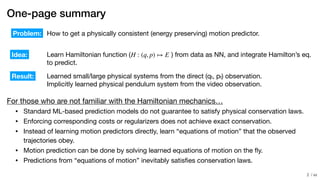

- Standard machine learning models for motion prediction offer no guarantee that their outputs satisfy physical conservation laws.

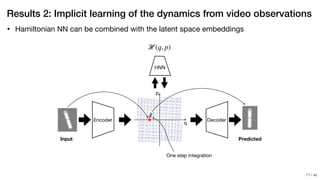

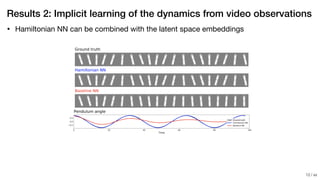

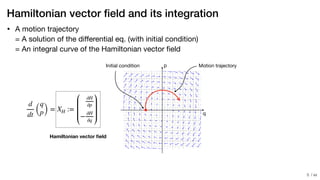

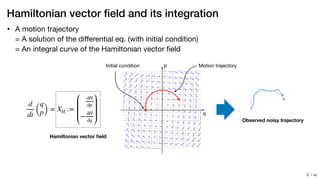

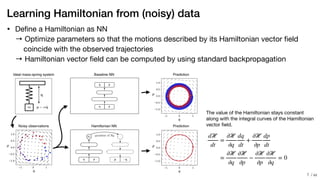

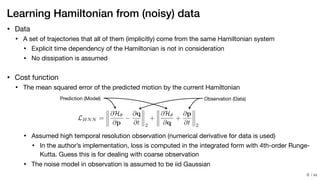

- The paper proposes using a neural network to learn the "equations of motion" in the form of a Hamiltonian function, so that predicted trajectories obey conservation laws.

- To generate predictions, the derivatives of the learned Hamiltonian function are integrated on the fly, so the predicted trajectories satisfy conservation of energy.
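The two bullets above can be illustrated with a minimal sketch. Hamilton's equations give the time derivatives as dq/dt = ∂H/∂p and dp/dt = −∂H/∂q, so any scalar function H(q, p) defines a vector field that can be integrated forward in time. Everything named here is an assumption made for illustration: `hamiltonian` is a hand-written ideal pendulum standing in for a trained network, the gradients use central finite differences rather than automatic differentiation, and the integrator is a fixed-step fourth-order Runge-Kutta scheme rather than whatever solver the paper uses.

```python
import math


def hamiltonian(q, p):
    """Hypothetical stand-in for a learned H(q, p): ideal pendulum, unit constants."""
    return 0.5 * p * p + (1.0 - math.cos(q))


def symplectic_gradient(H, q, p, eps=1e-5):
    """Hamilton's equations: dq/dt = dH/dp, dp/dt = -dH/dq.

    Central finite differences stand in for automatic differentiation.
    """
    dH_dq = (H(q + eps, p) - H(q - eps, p)) / (2.0 * eps)
    dH_dp = (H(q, p + eps) - H(q, p - eps)) / (2.0 * eps)
    return dH_dp, -dH_dq


def rk4_step(H, q, p, dt):
    """Advance (q, p) by one fixed step of classical fourth-order Runge-Kutta."""
    k1q, k1p = symplectic_gradient(H, q, p)
    k2q, k2p = symplectic_gradient(H, q + 0.5 * dt * k1q, p + 0.5 * dt * k1p)
    k3q, k3p = symplectic_gradient(H, q + 0.5 * dt * k2q, p + 0.5 * dt * k2p)
    k4q, k4p = symplectic_gradient(H, q + dt * k3q, p + dt * k3p)
    q_next = q + dt / 6.0 * (k1q + 2.0 * k2q + 2.0 * k3q + k4q)
    p_next = p + dt / 6.0 * (k1p + 2.0 * k2p + 2.0 * k3p + k4p)
    return q_next, p_next


def rollout(H, q0, p0, dt=0.01, steps=2000):
    """Integrate the vector field defined by H from (q0, p0)."""
    trajectory = [(q0, p0)]
    q, p = q0, p0
    for _ in range(steps):
        q, p = rk4_step(H, q, p, dt)
        trajectory.append((q, p))
    return trajectory


trajectory = rollout(hamiltonian, q0=1.0, p0=0.0)
energies = [hamiltonian(q, p) for q, p in trajectory]
# Spread of H along the rollout; it stays small because the dynamics come from H itself.
energy_drift = max(energies) - min(energies)
```

Because the dynamics are derived from a single scalar function, the value of that function stays nearly constant along the rollout, limited only by the integrator's numerical error. A model that predicts dq/dt and dp/dt directly has no such built-in constraint.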


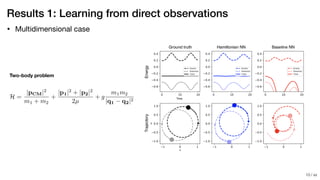

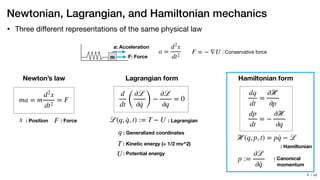

Results 1: Learning from direct observations

[Figure: panels for Ideal Harmonic Oscillator, Ideal Pendulum, and Real Pendulum (Schmidt & Lipson [35]); each compares the Learned Hamiltonian with the Ground Truth Hamiltonian.]

![9](https://image.slidesharecdn.com/hamiltoniannn-200131102421/85/Paper-reading-Hamiltonian-Neural-Networks-9-320.jpg)