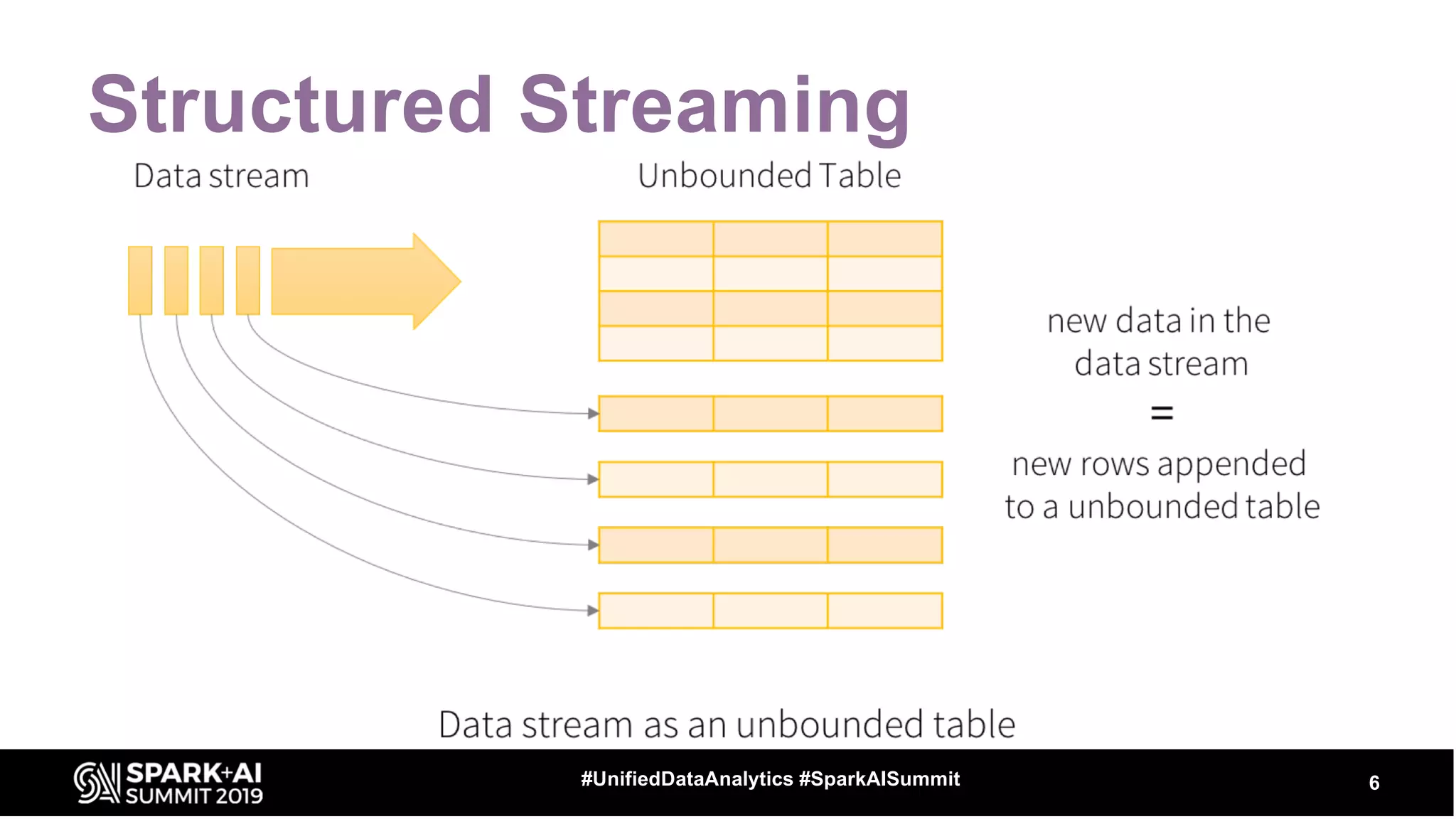

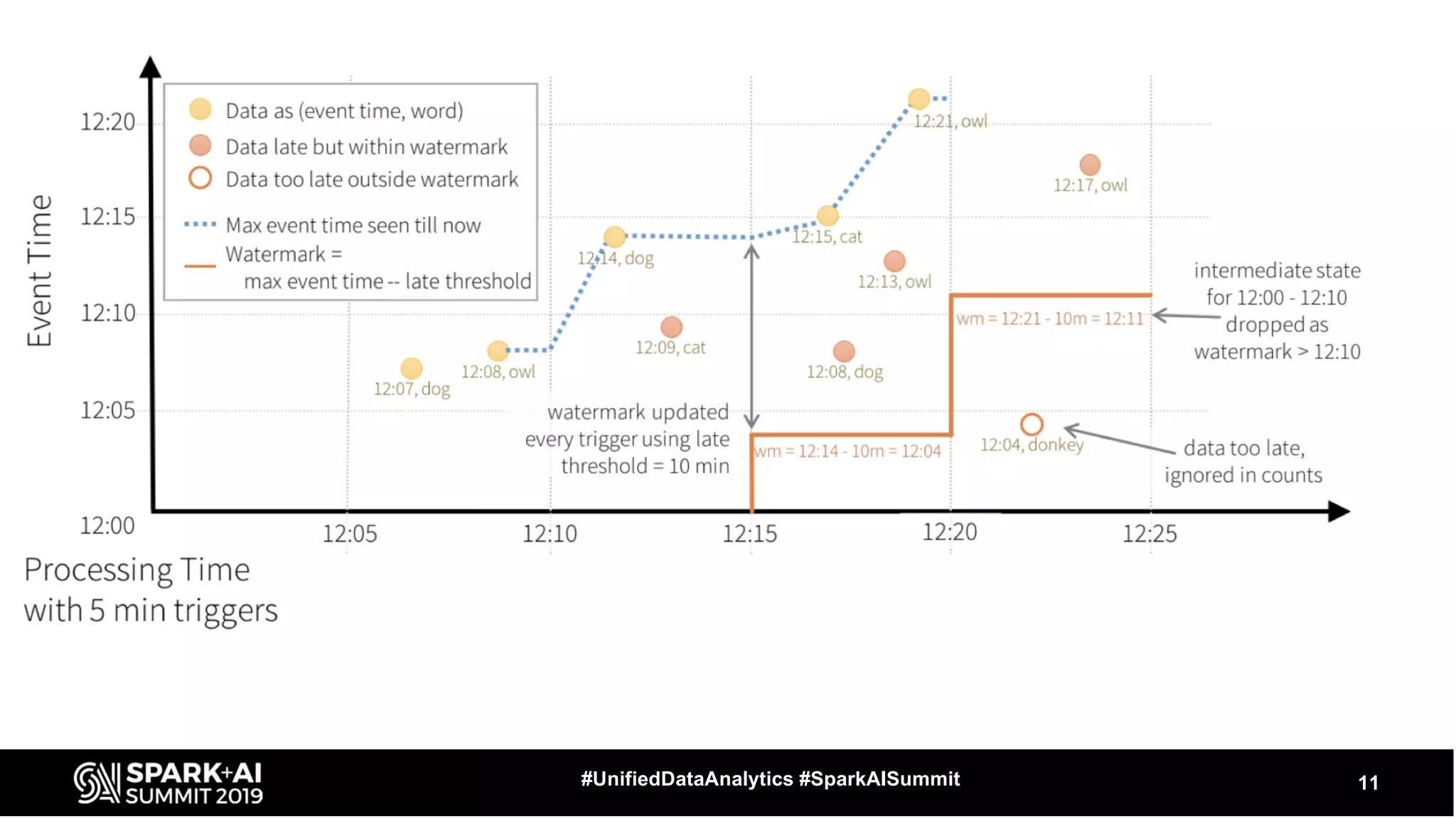

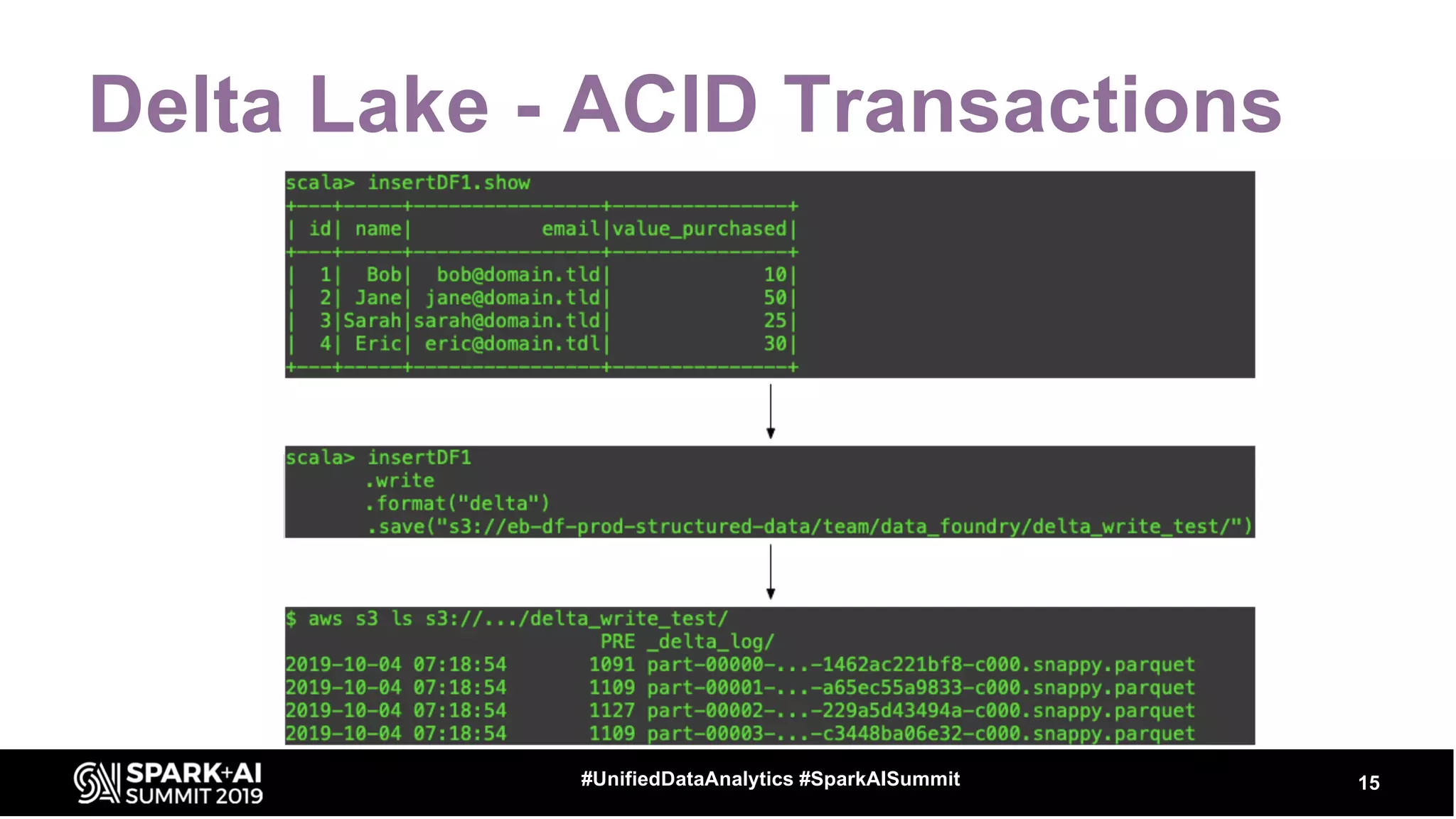

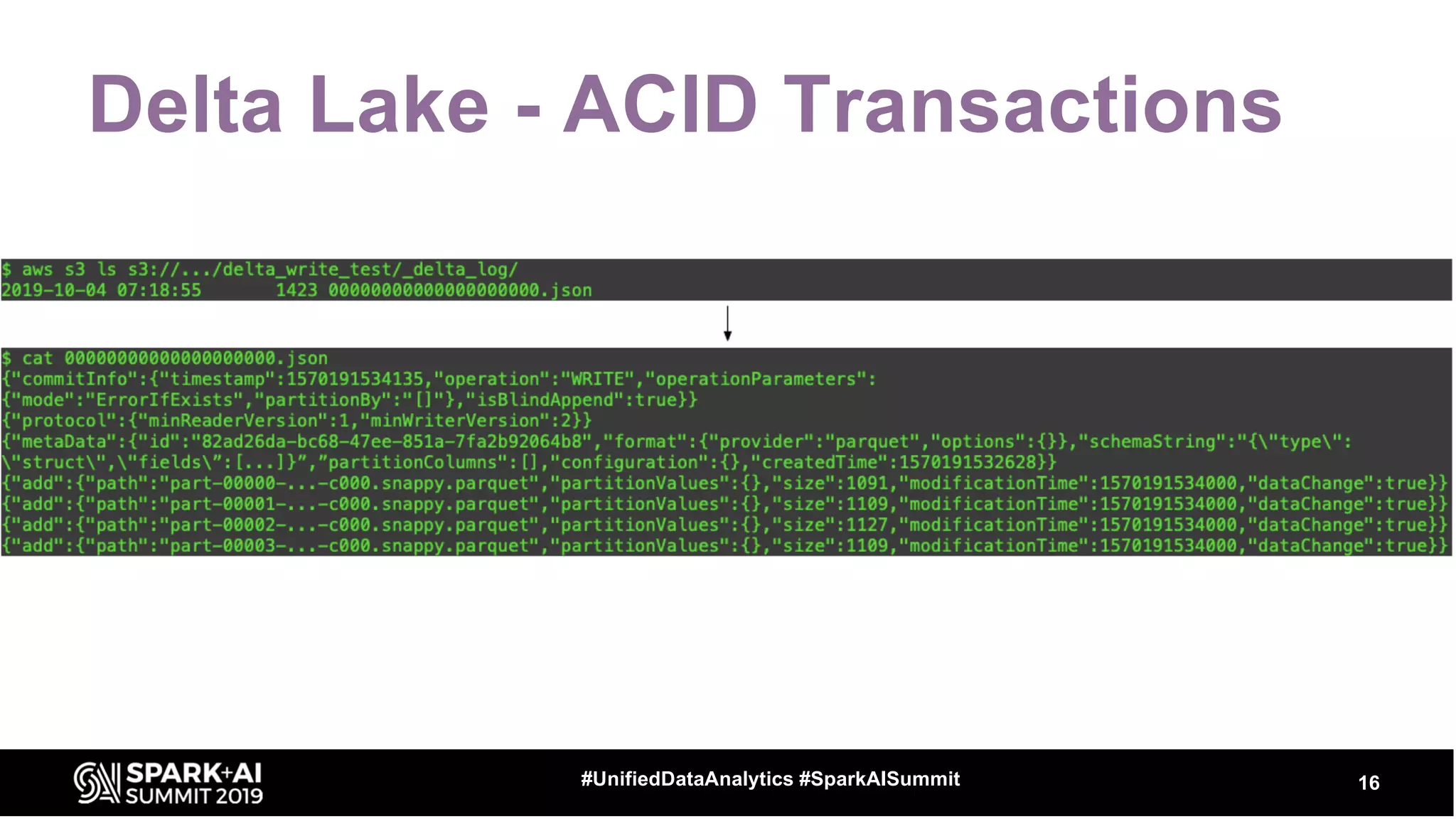

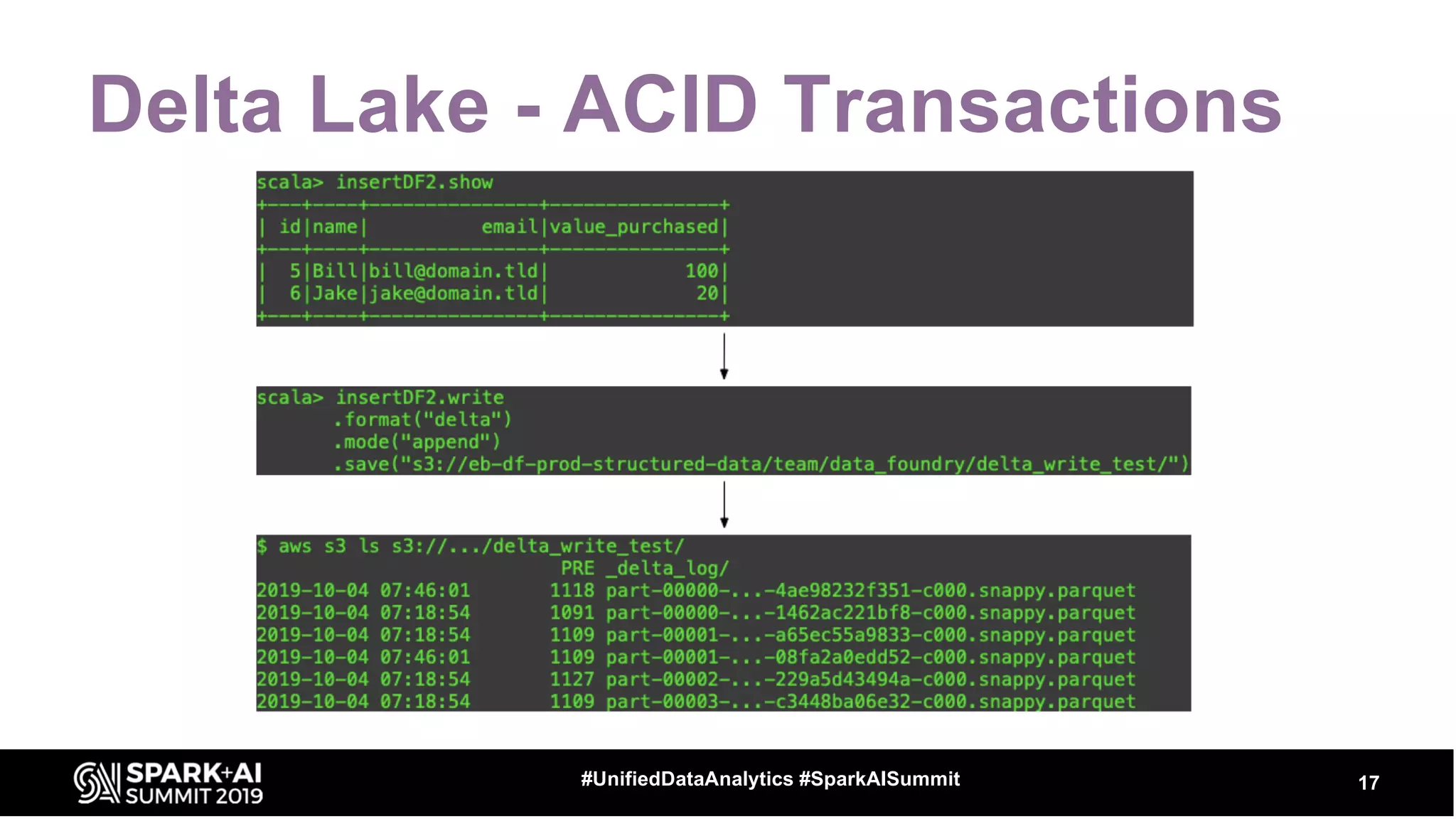

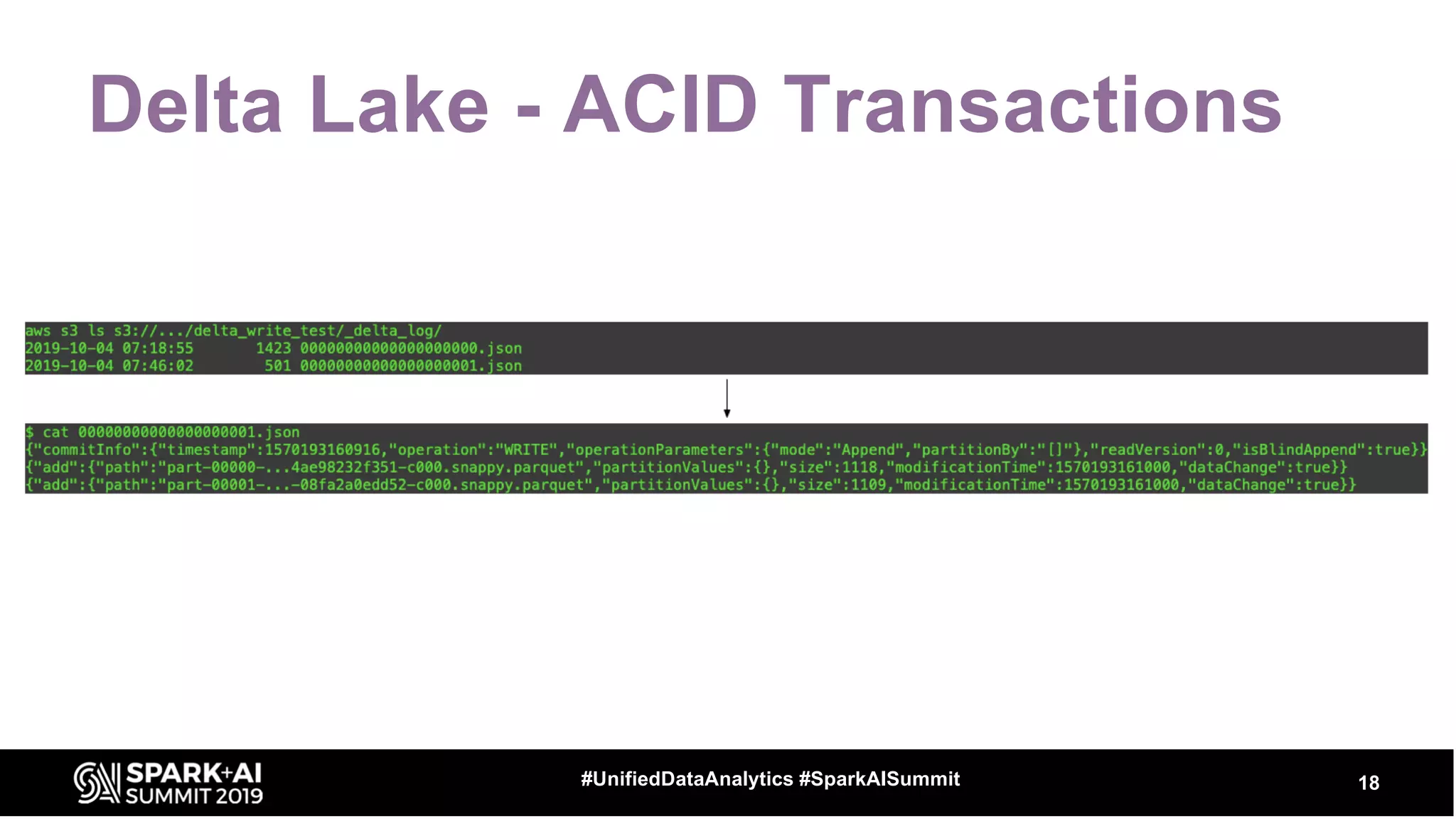

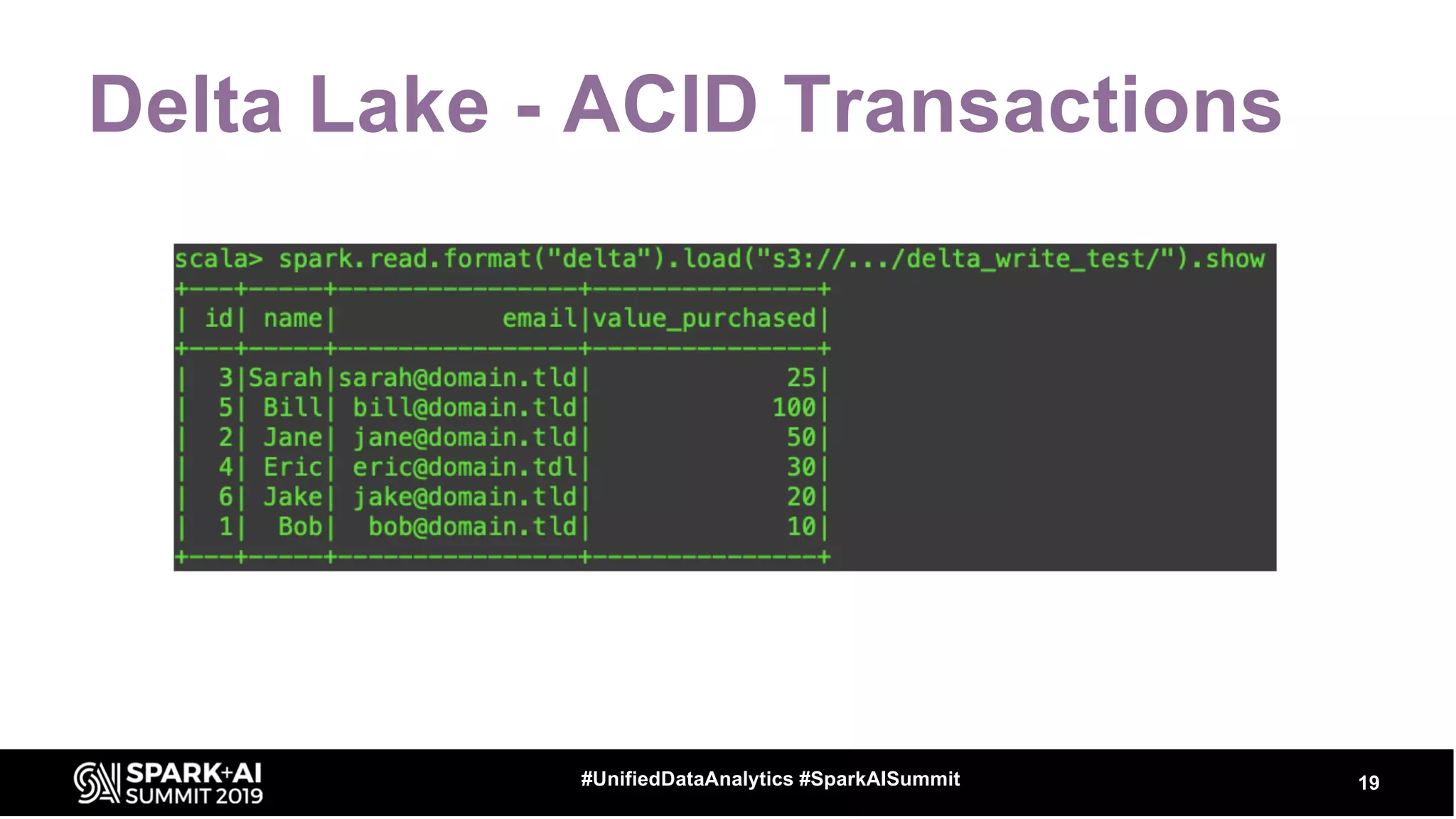

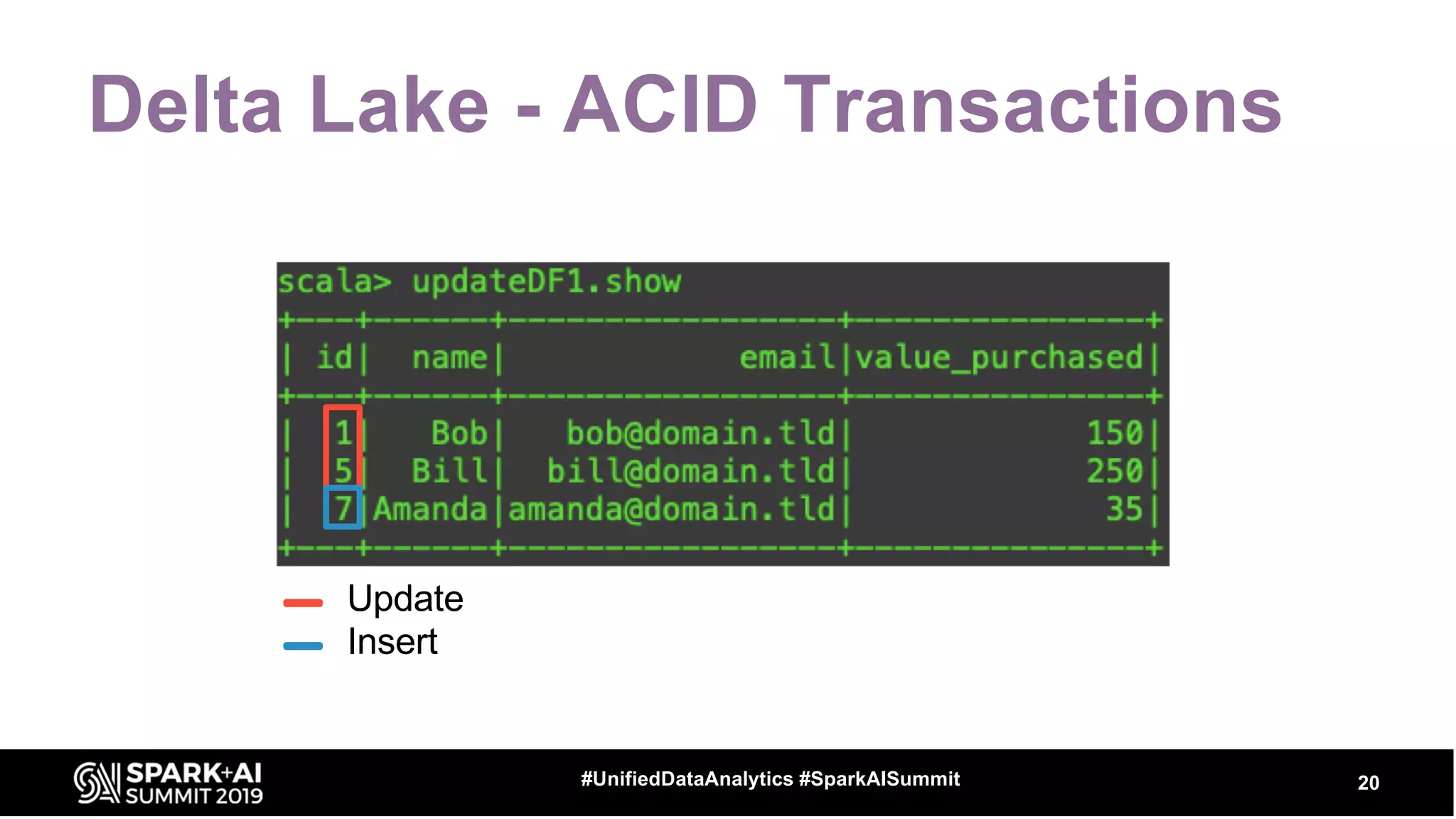

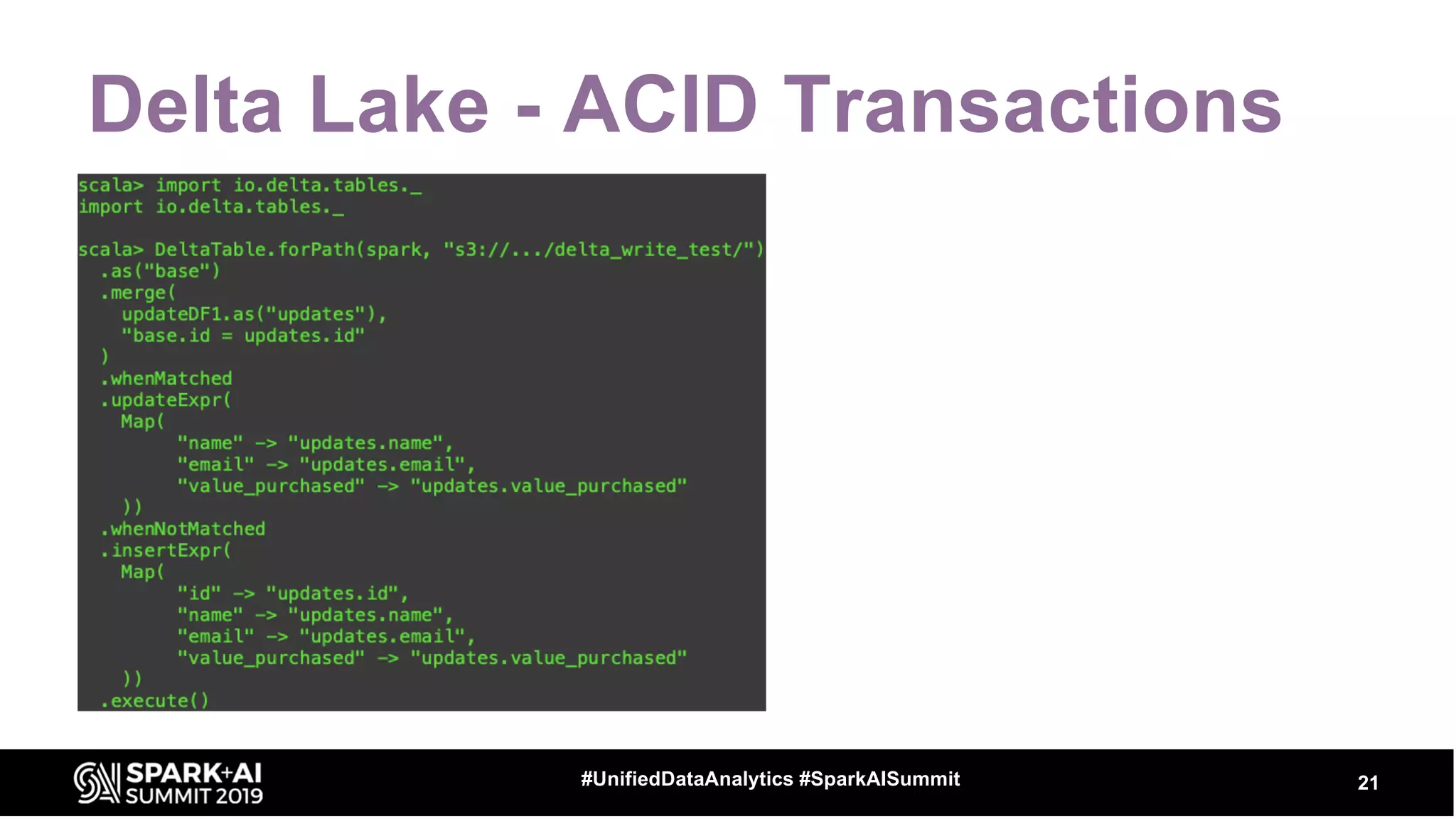

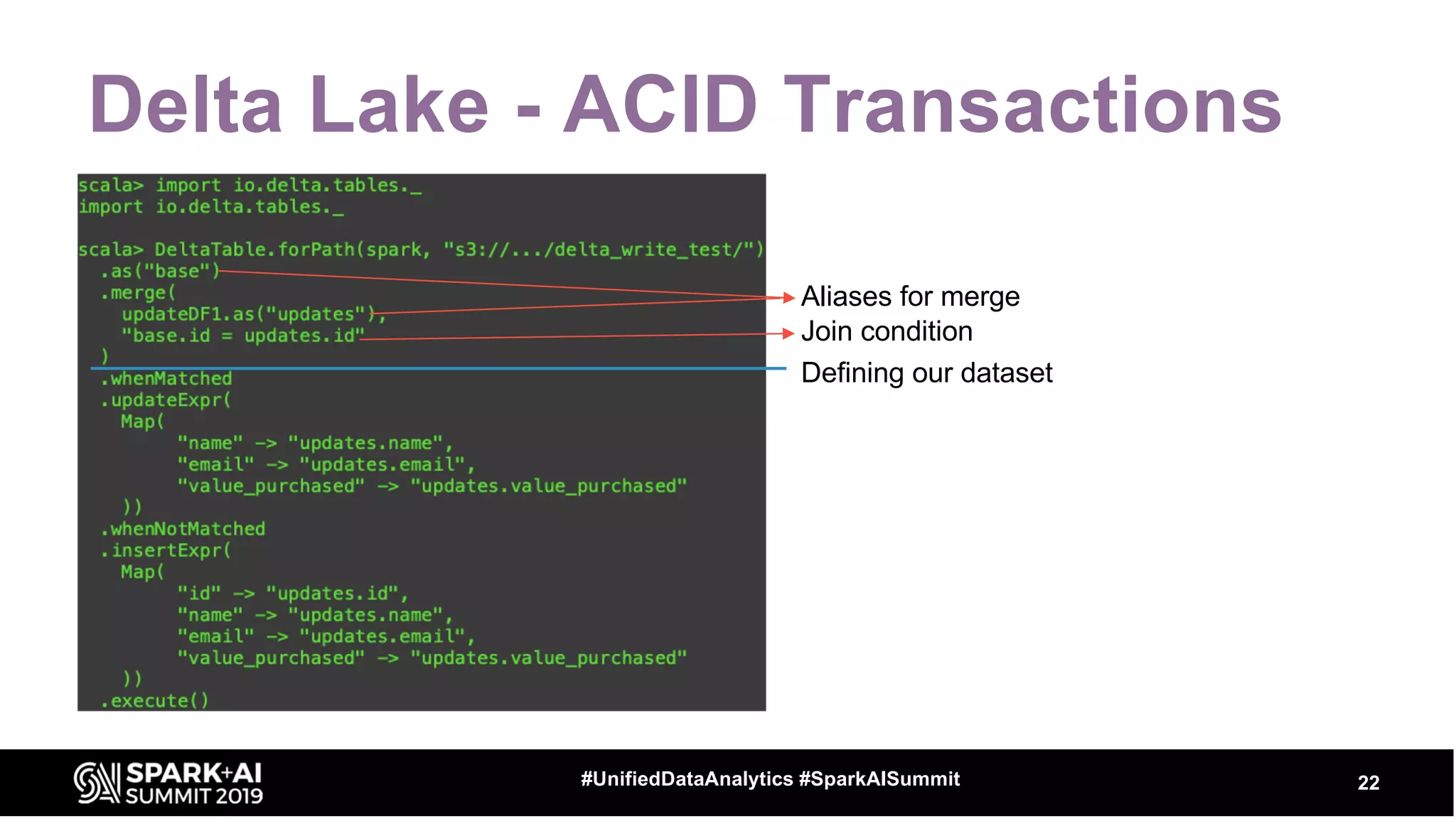

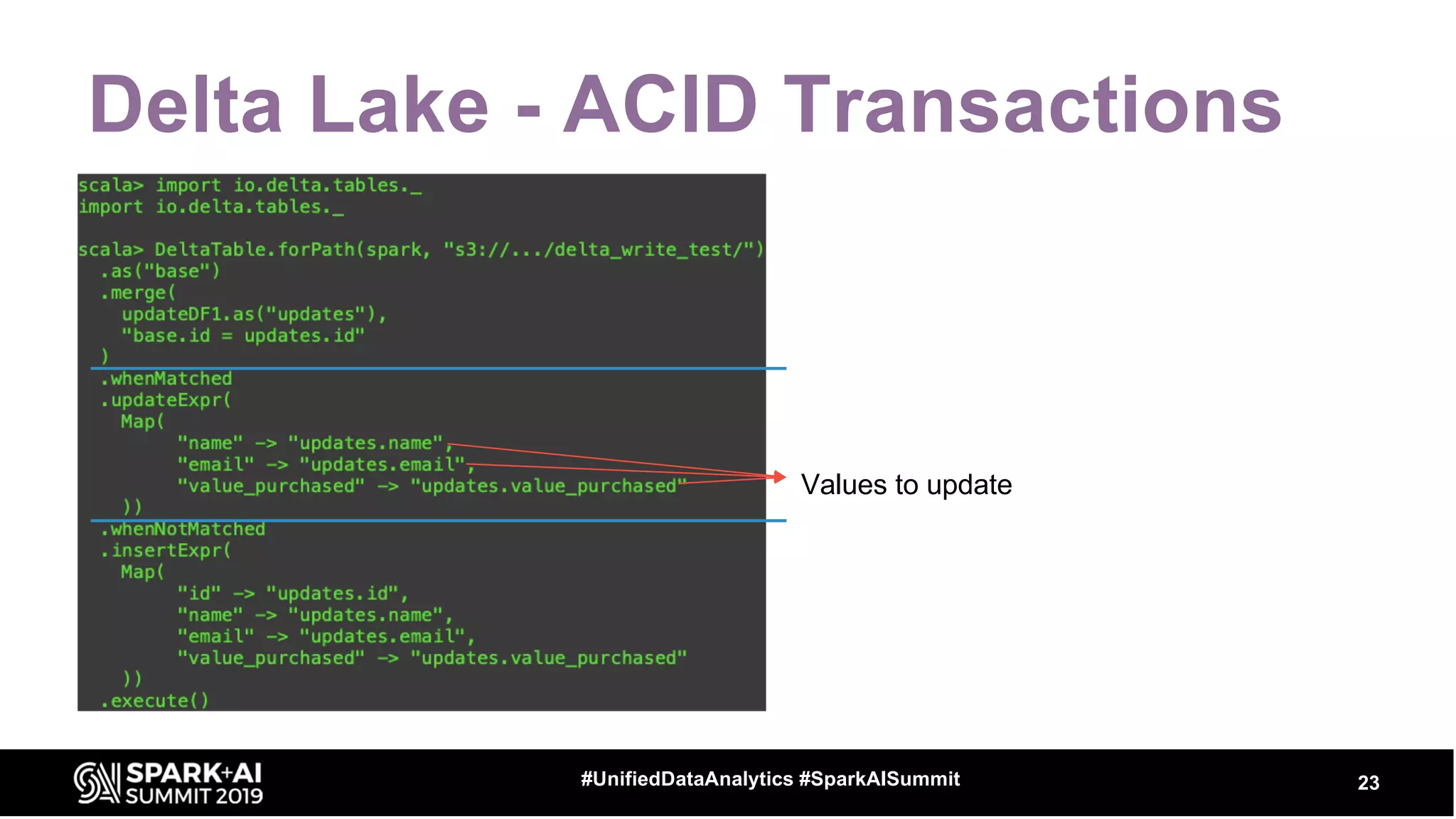

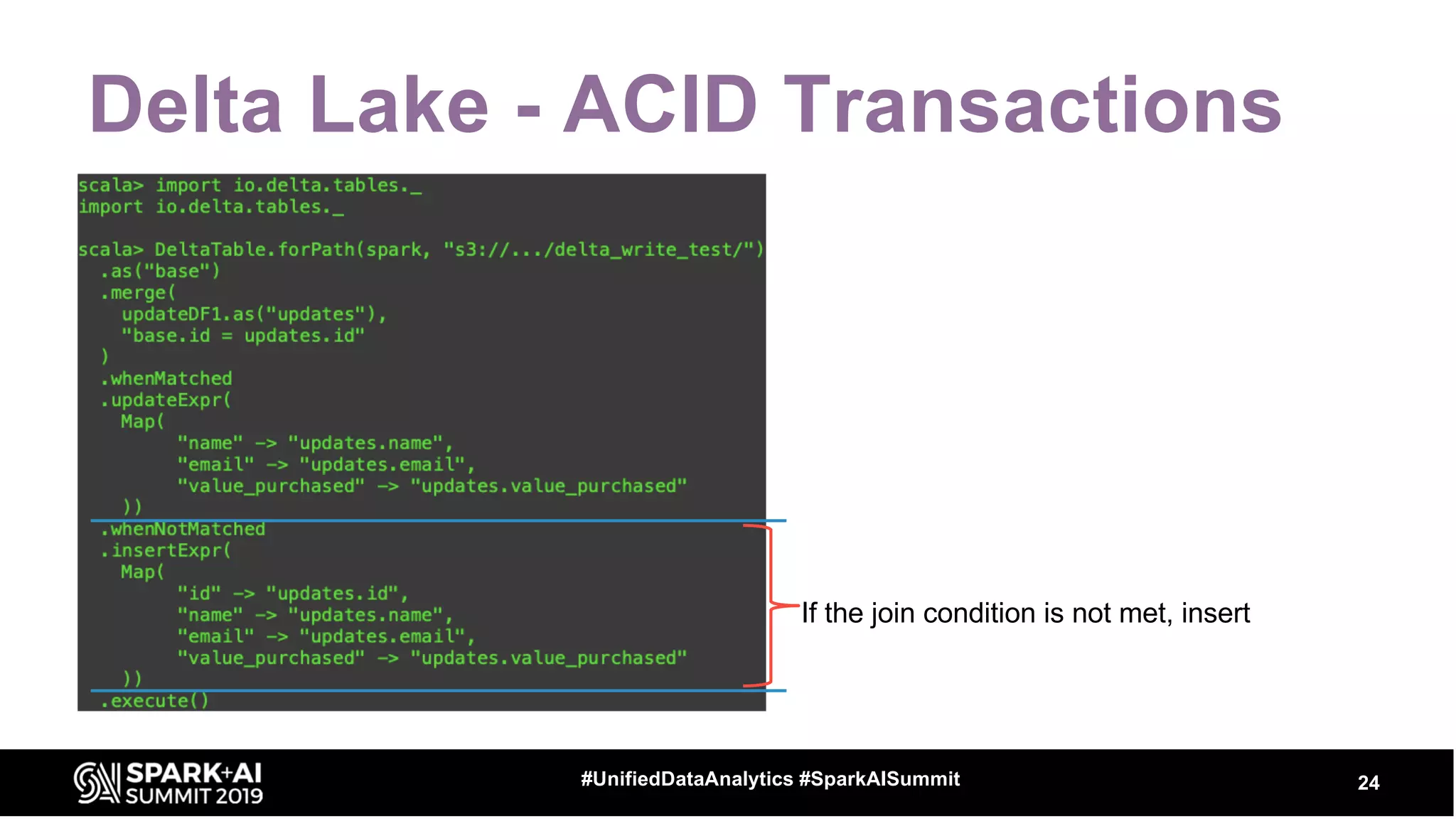

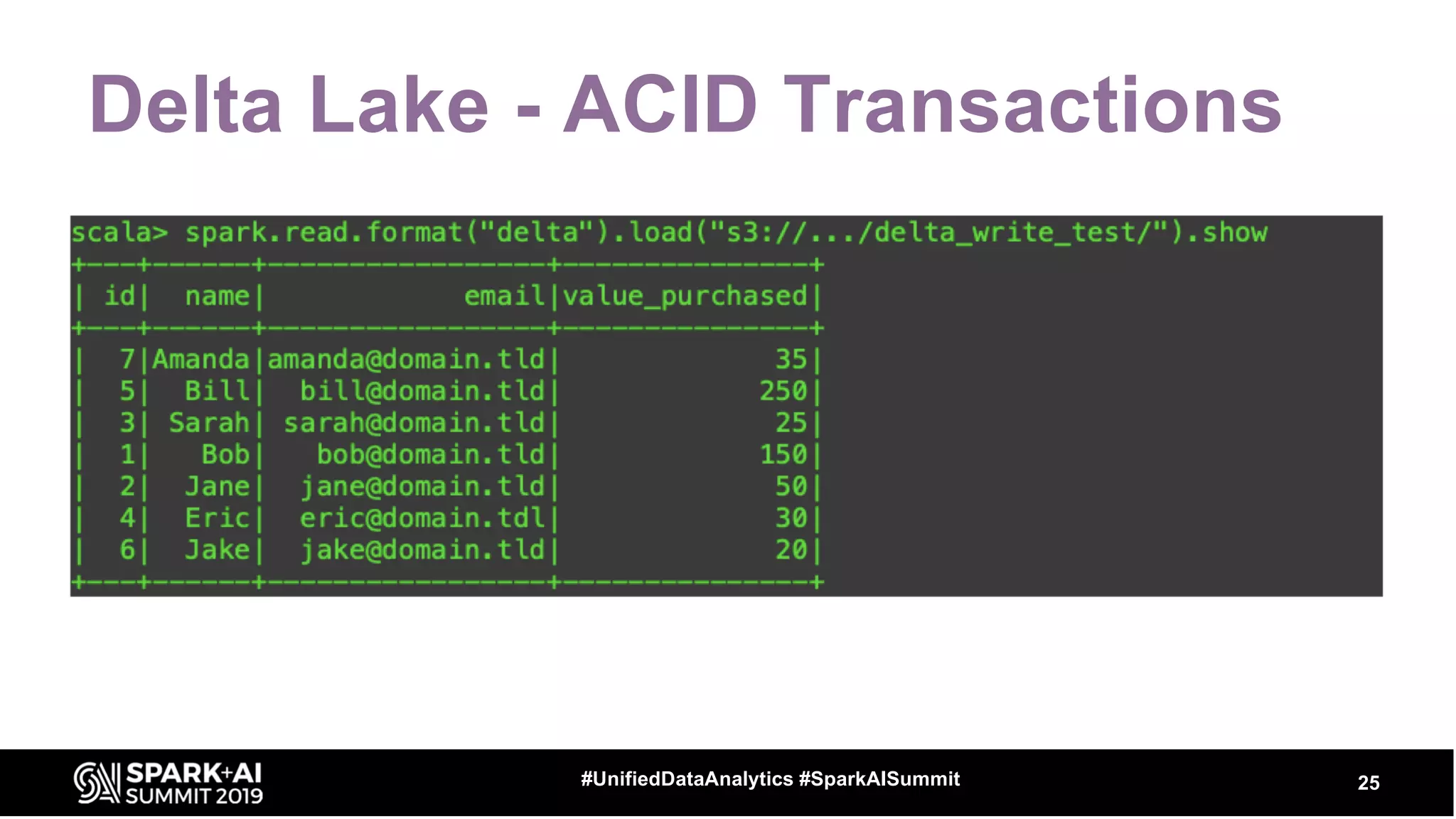

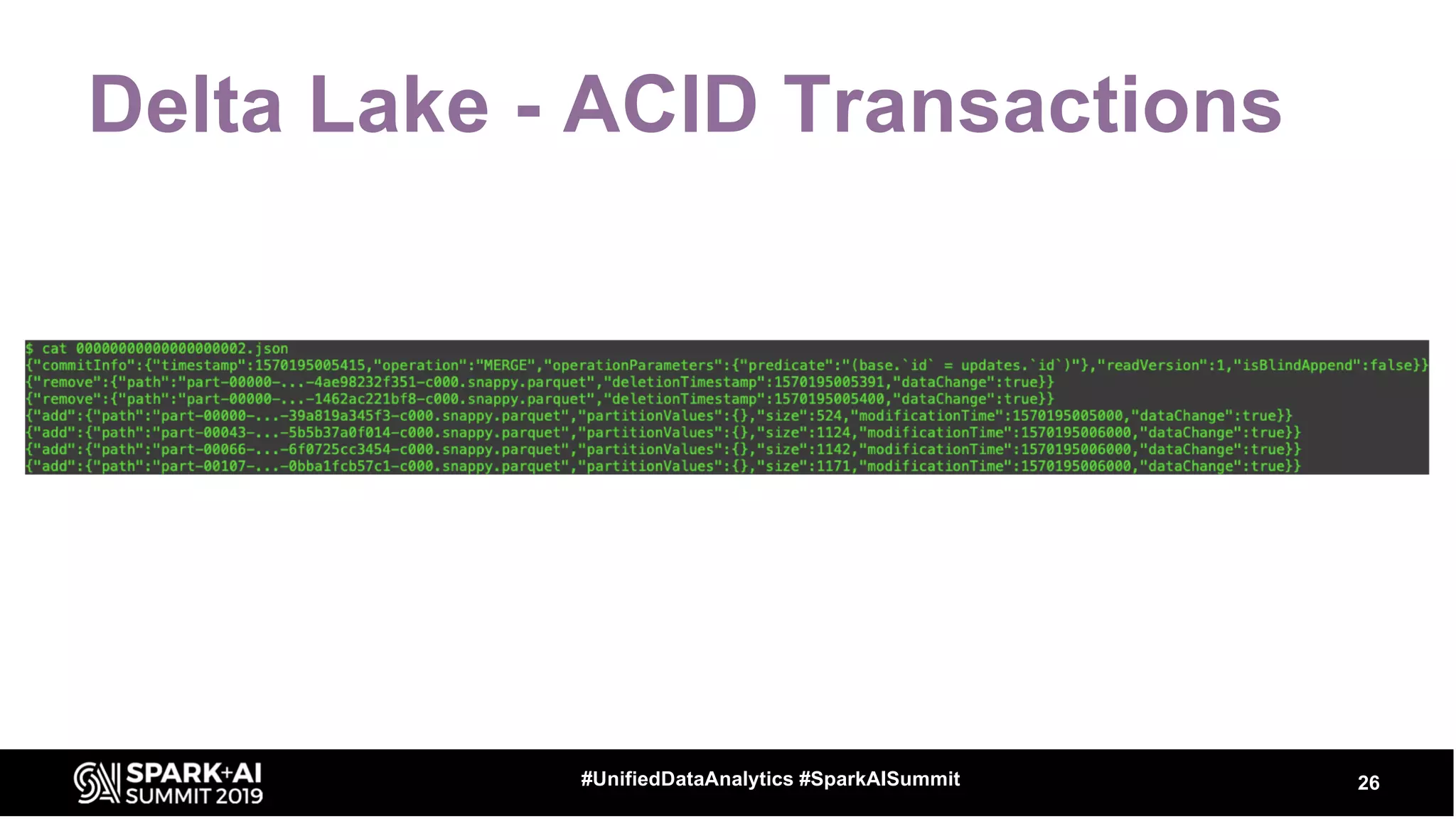

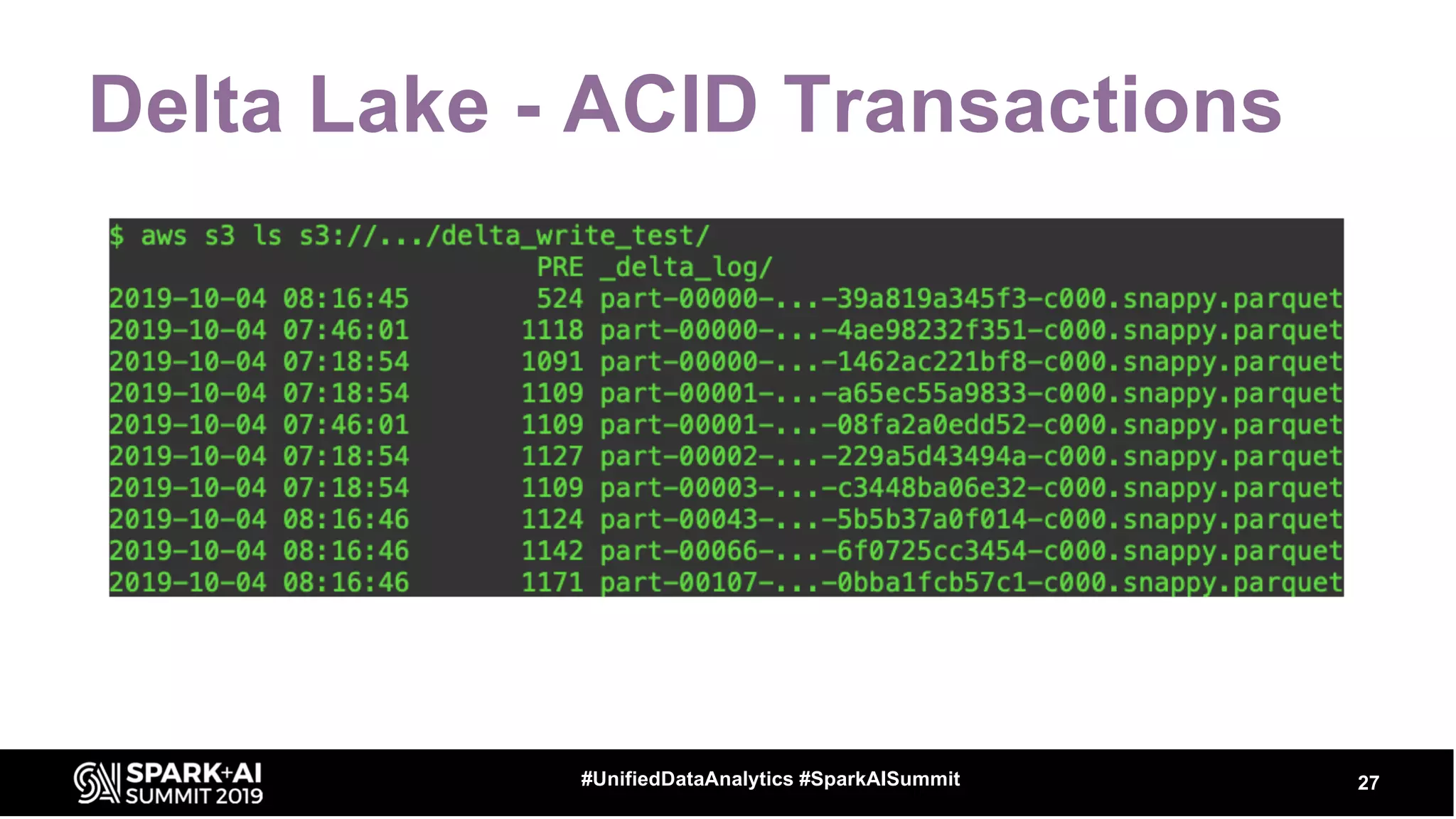

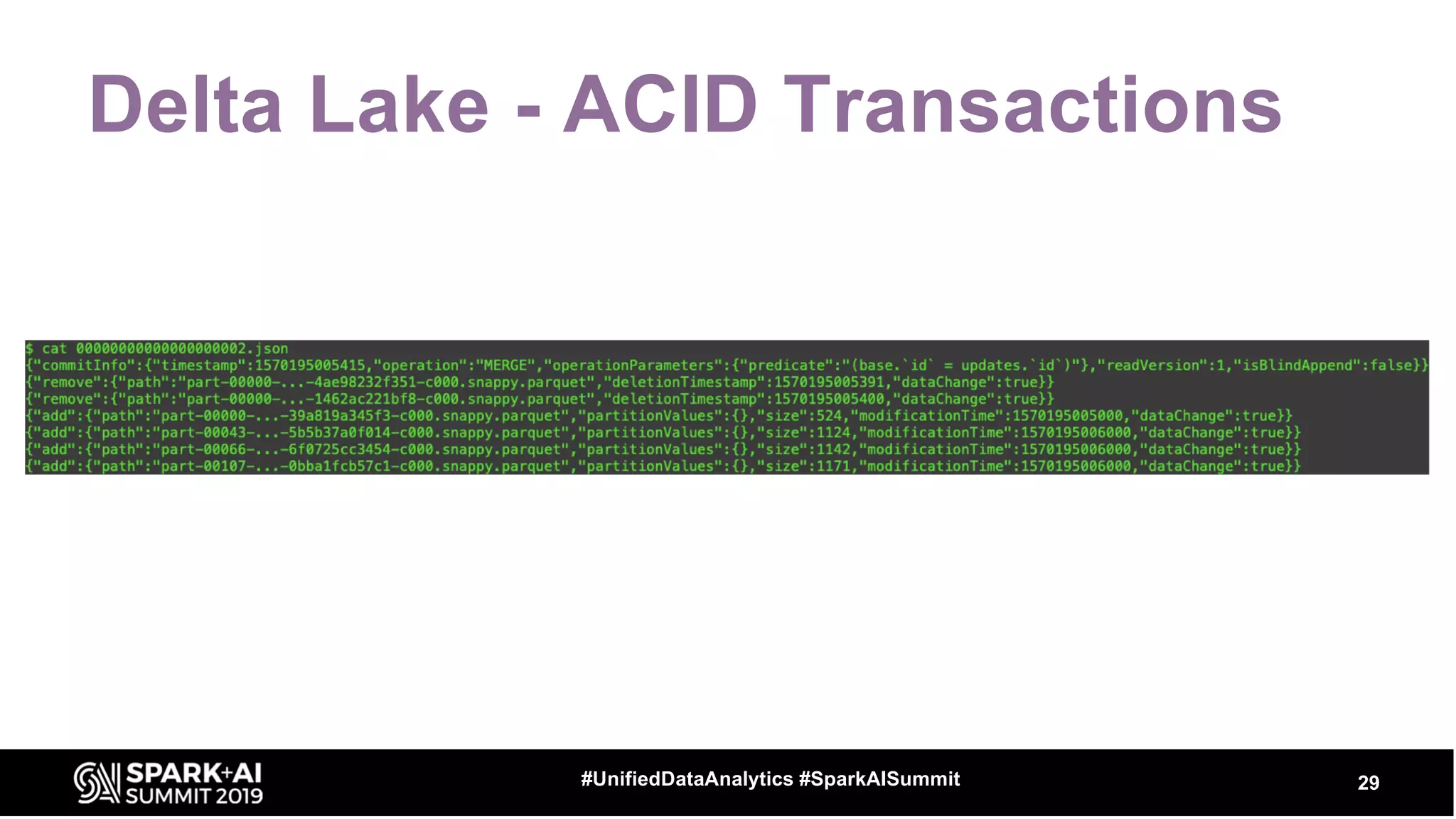

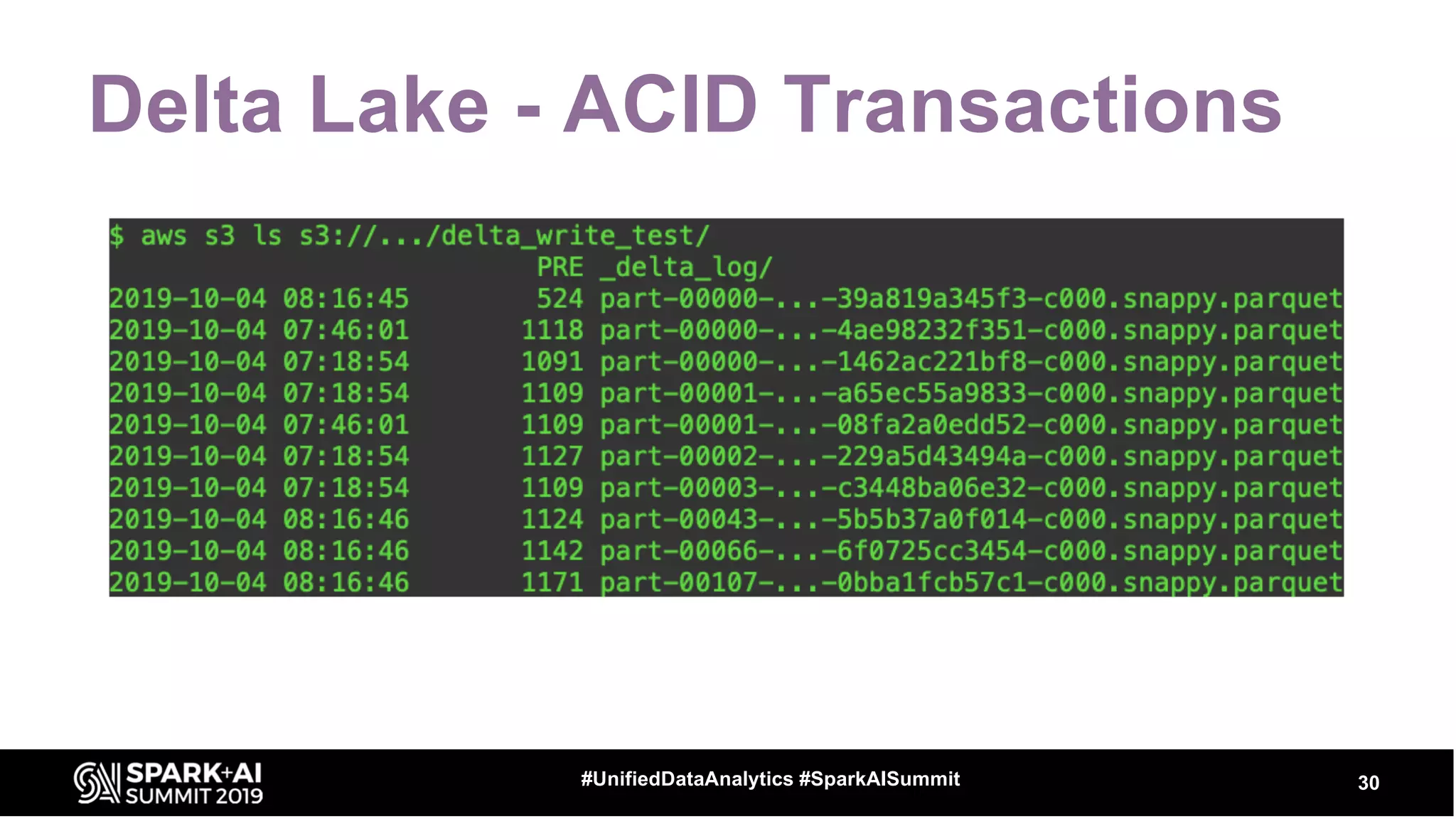

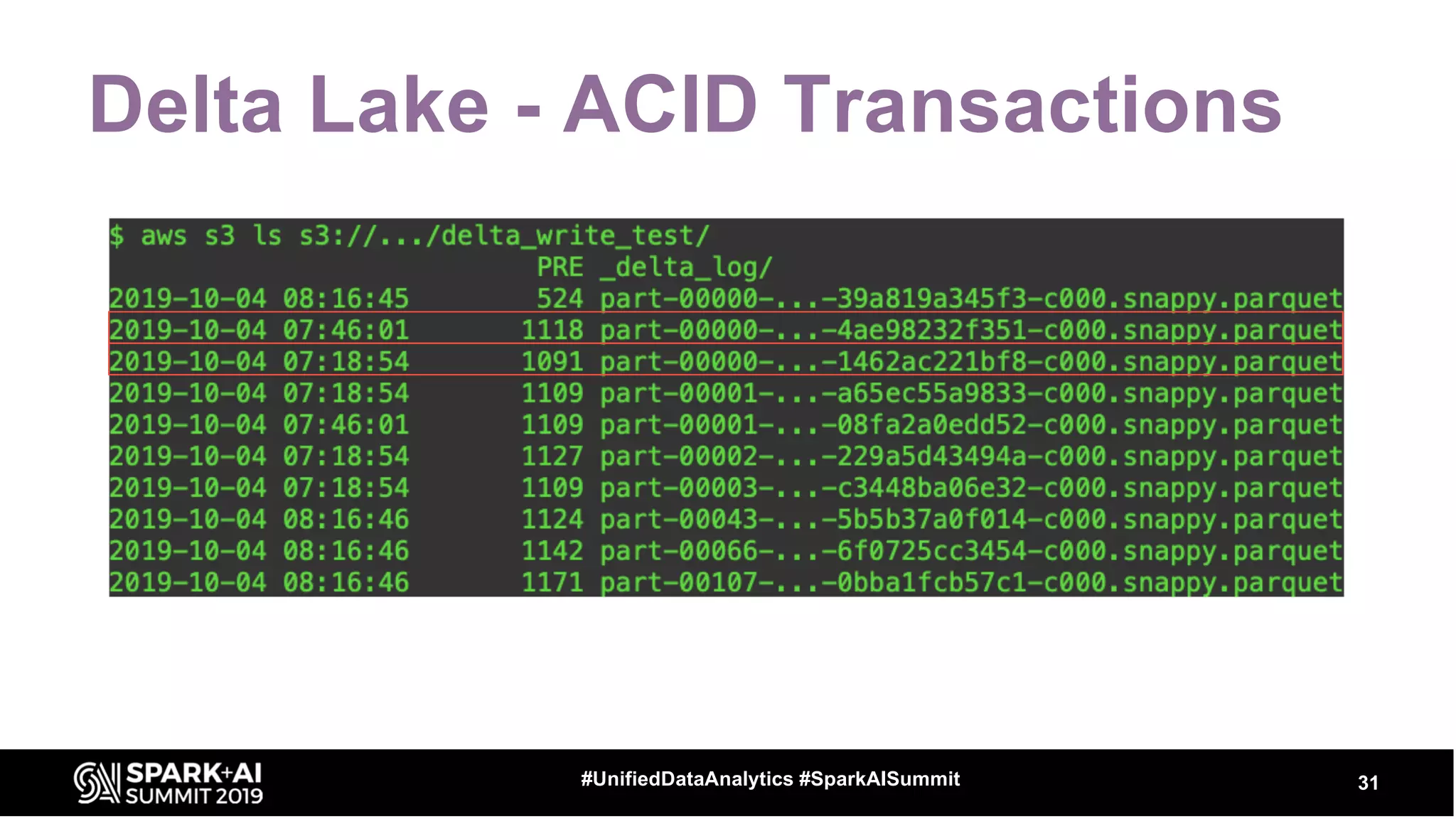

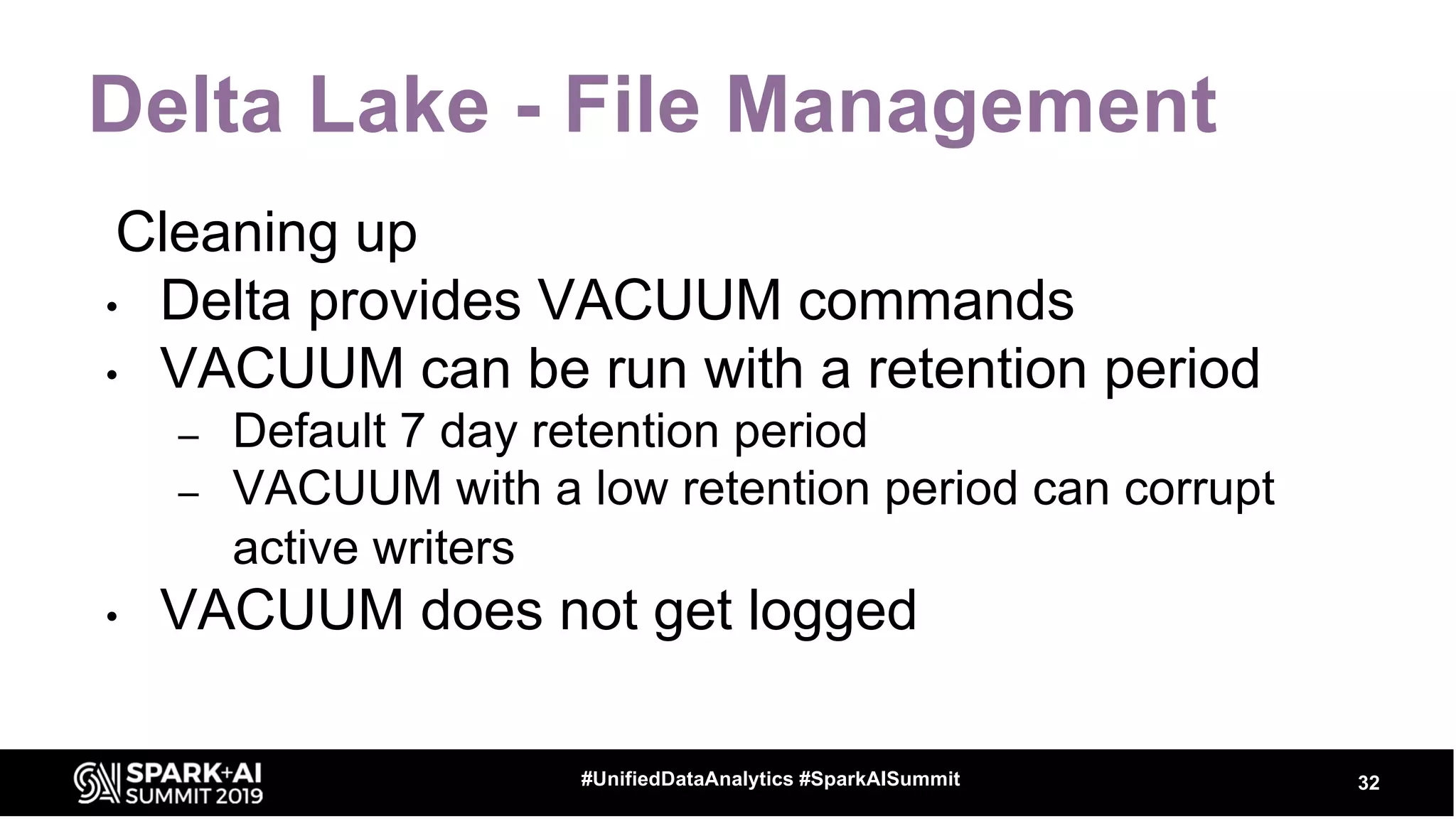

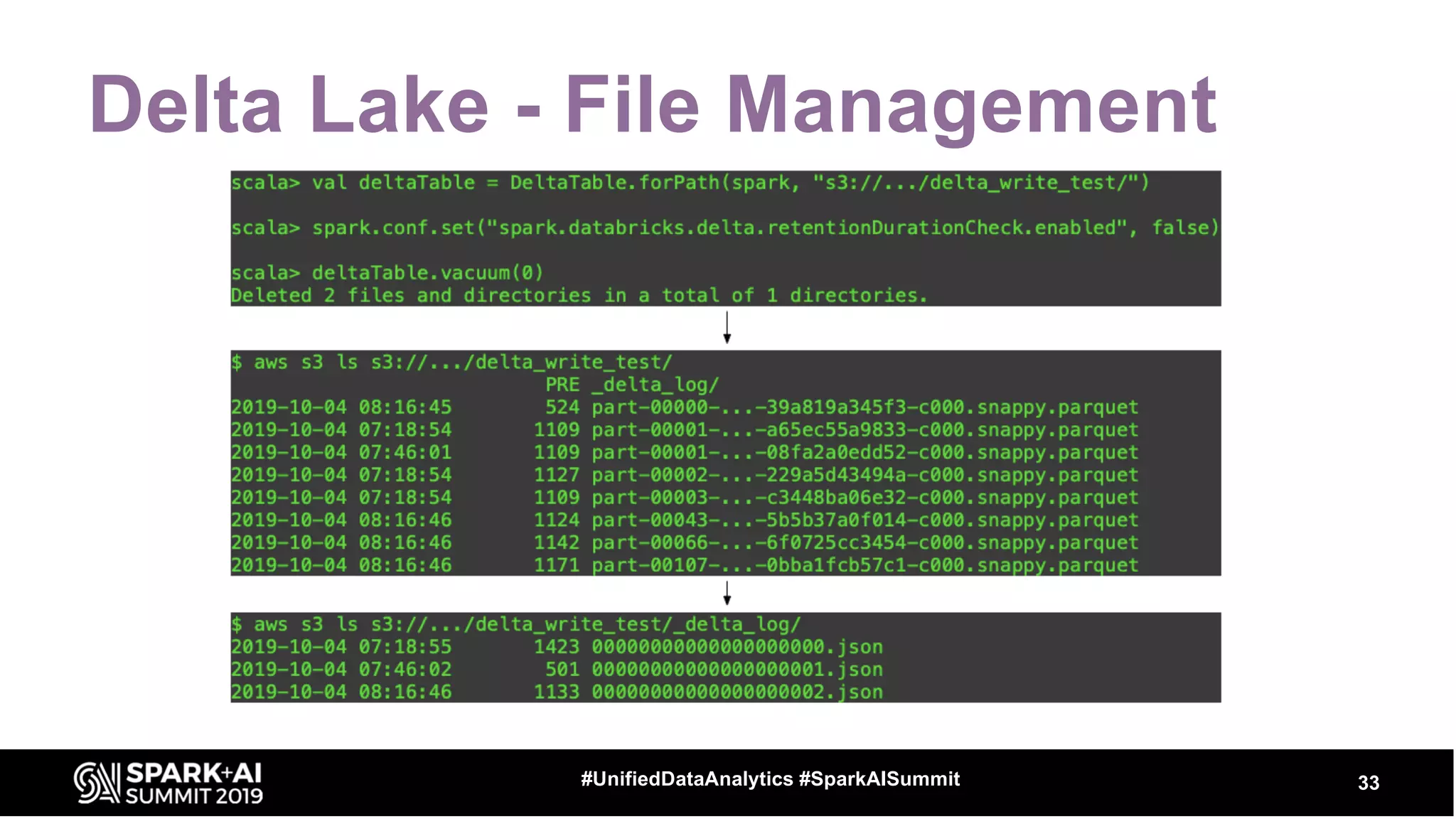

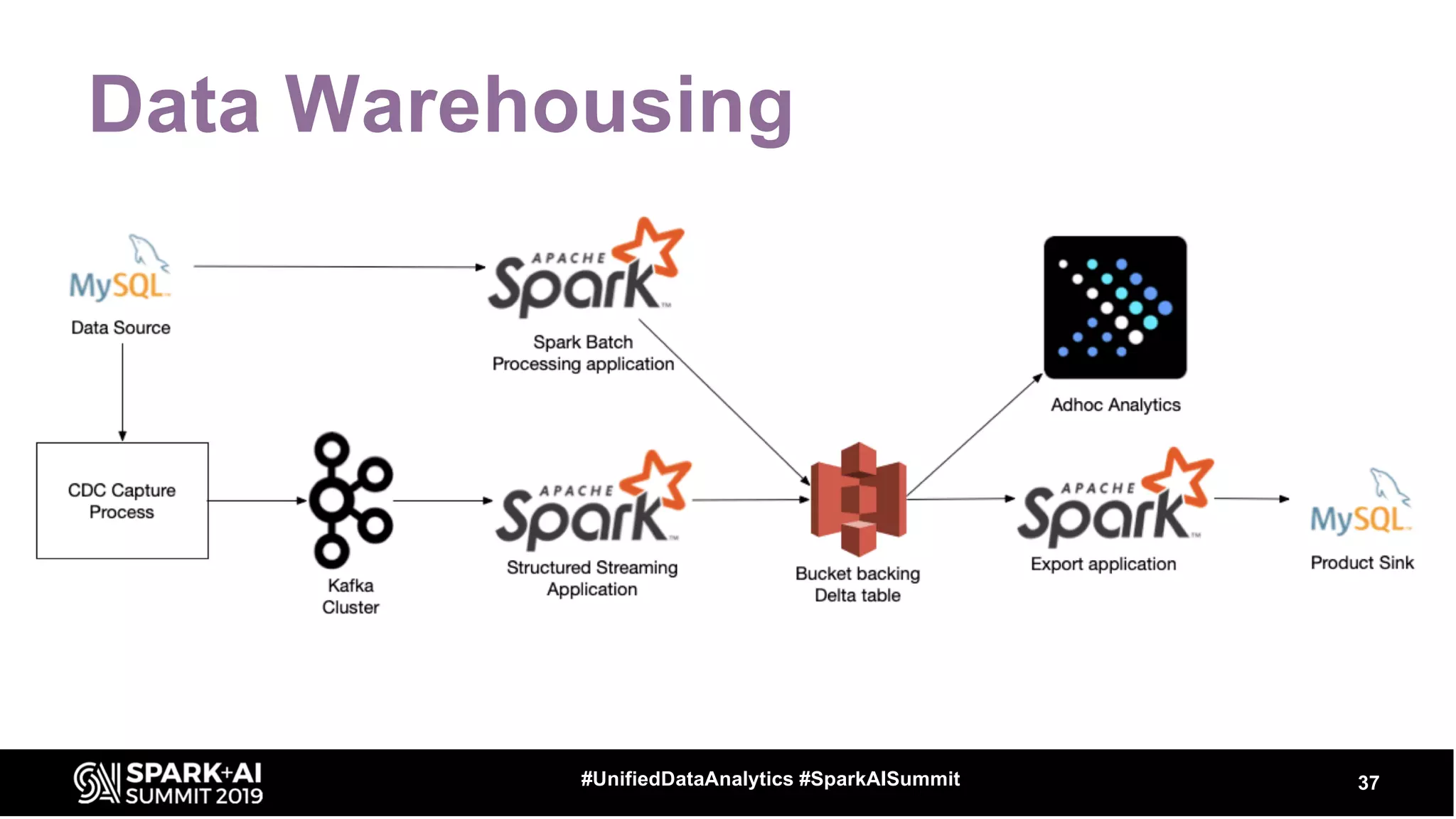

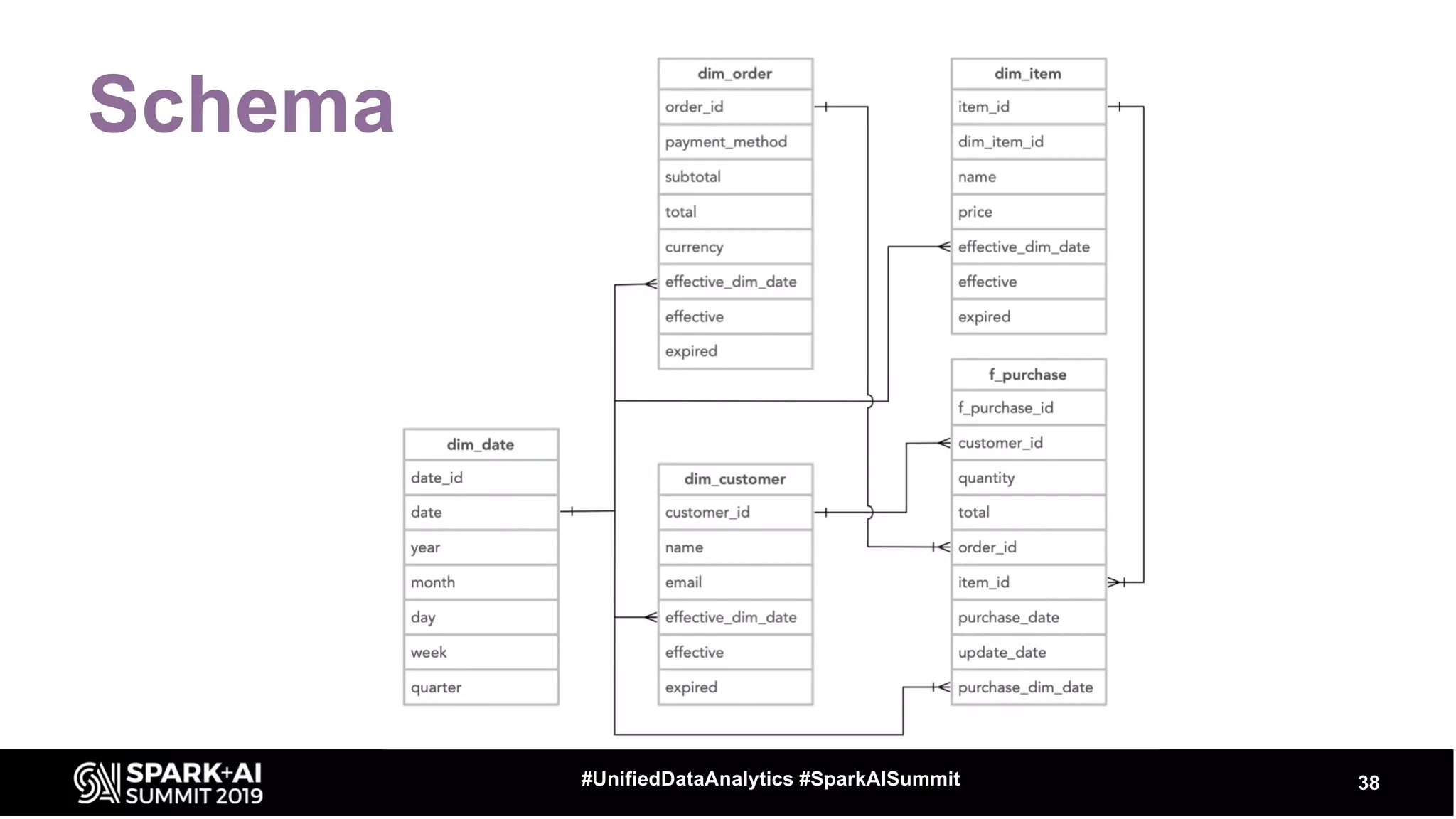

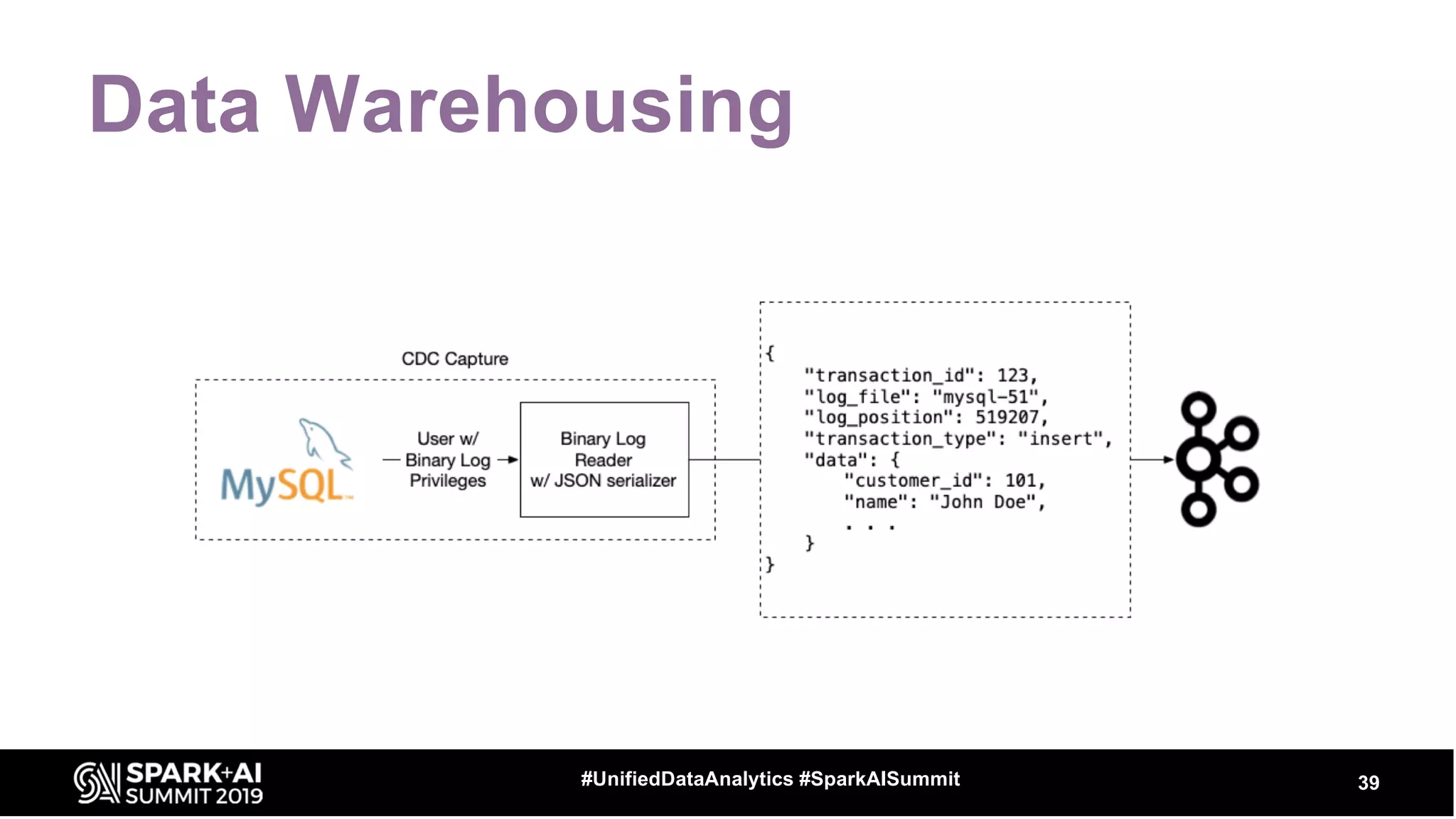

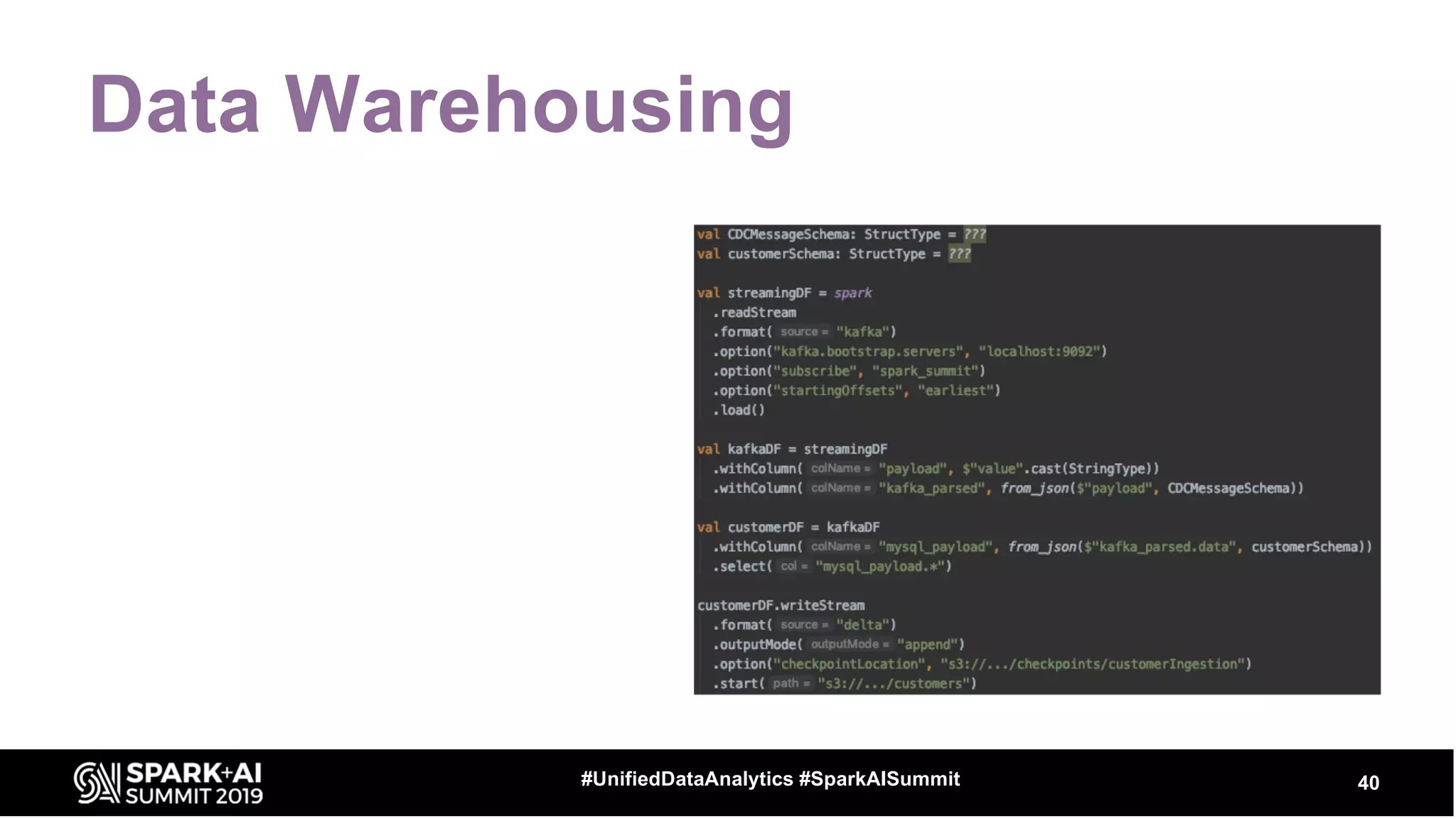

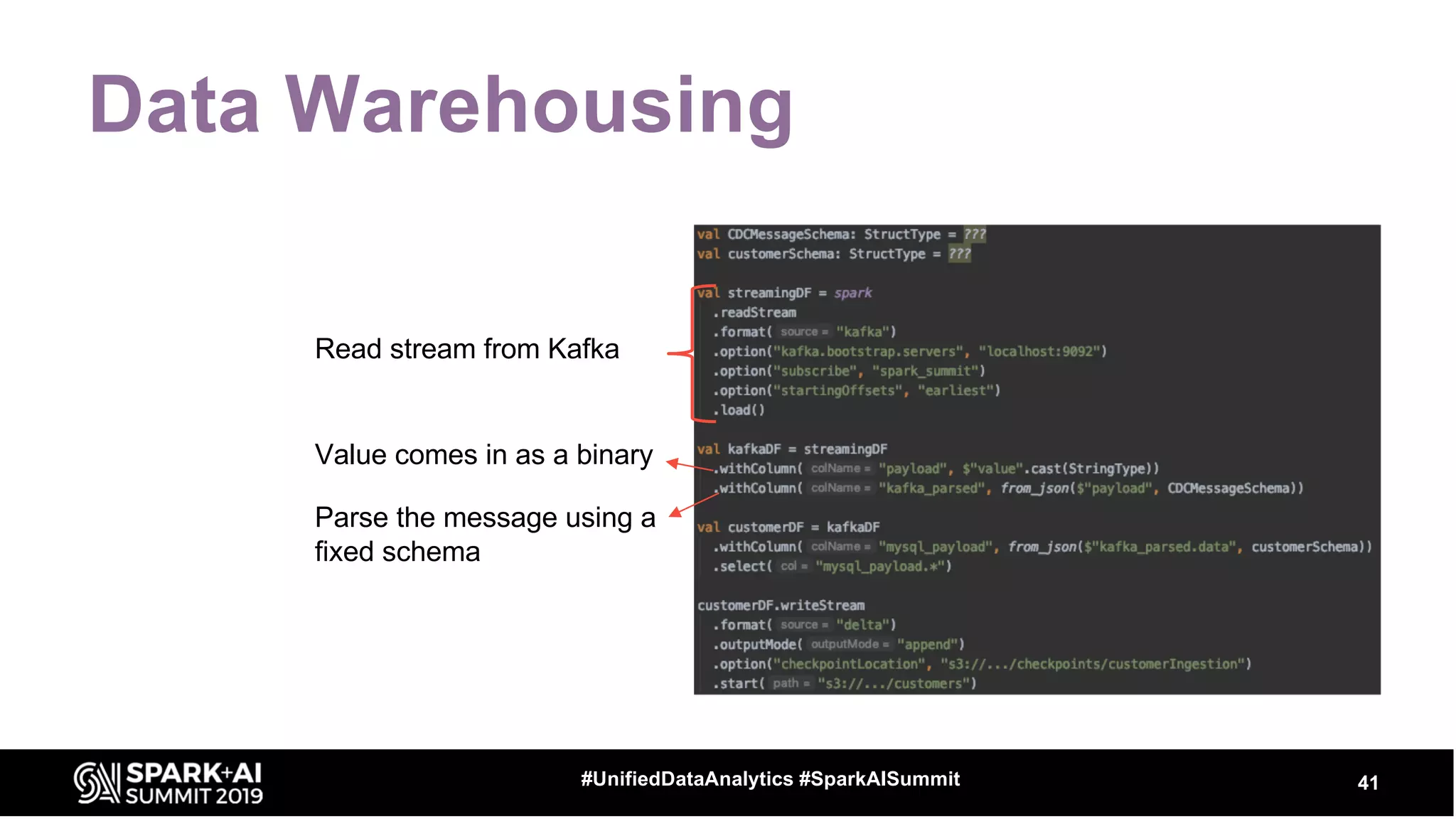

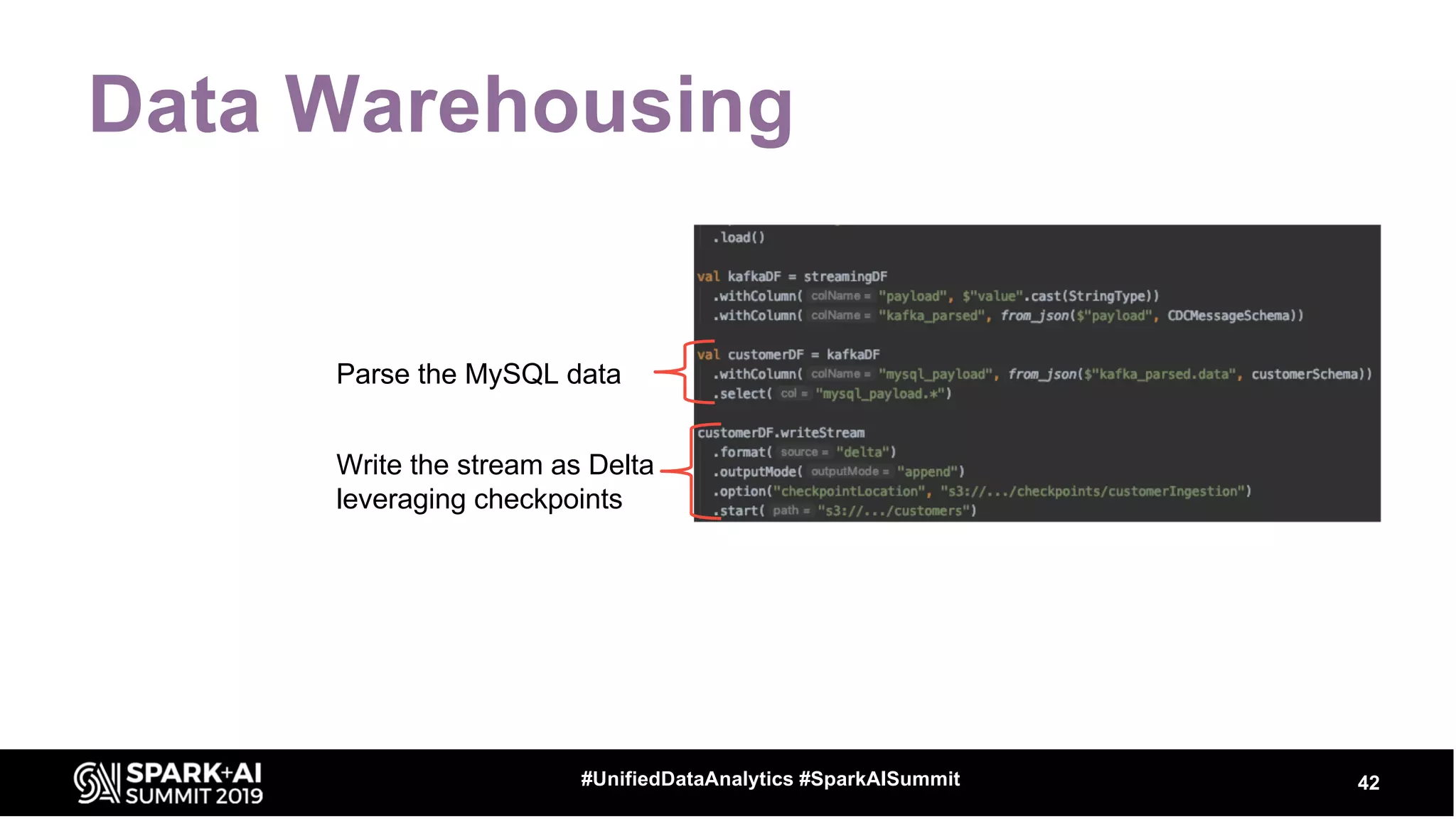

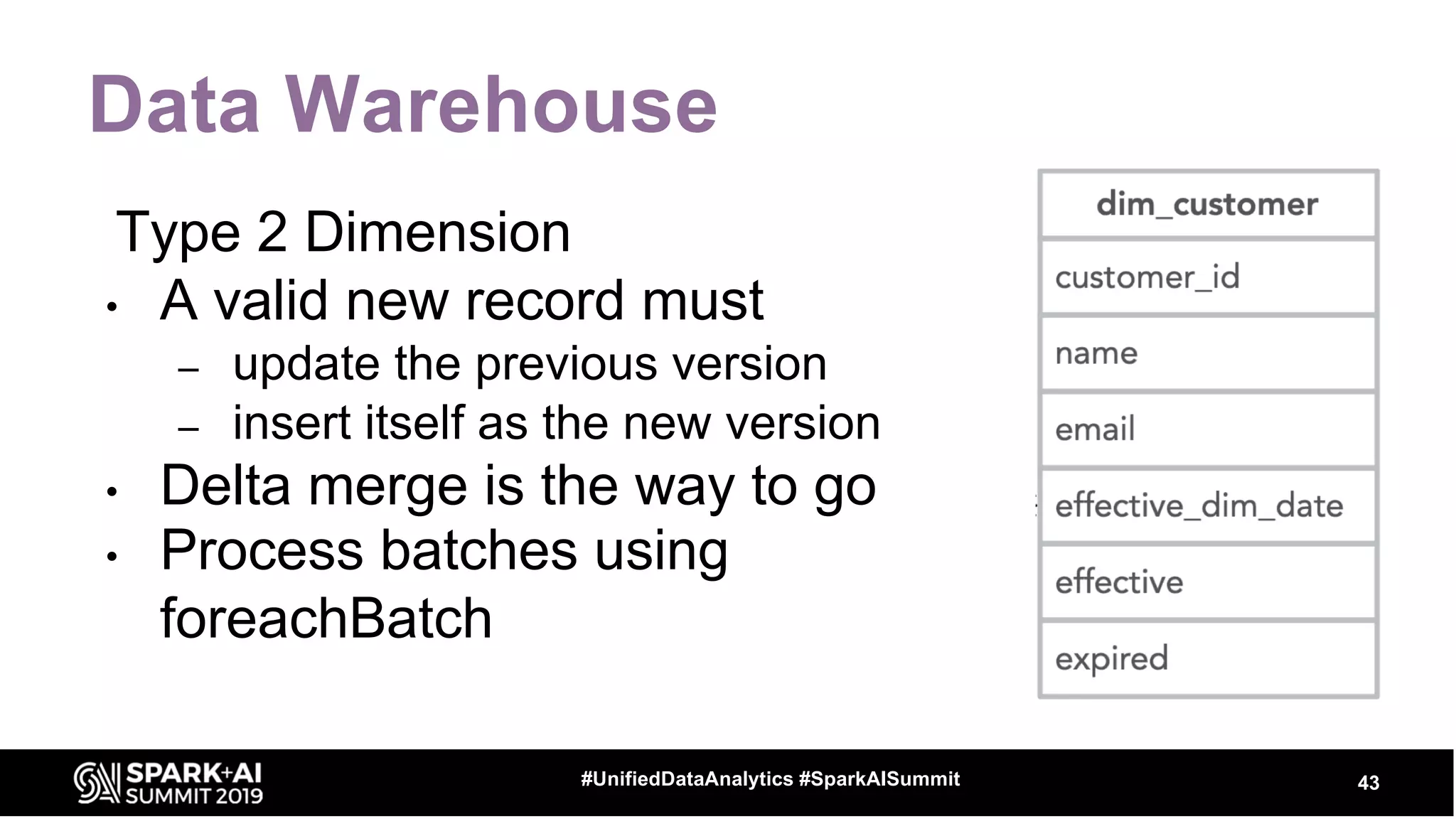

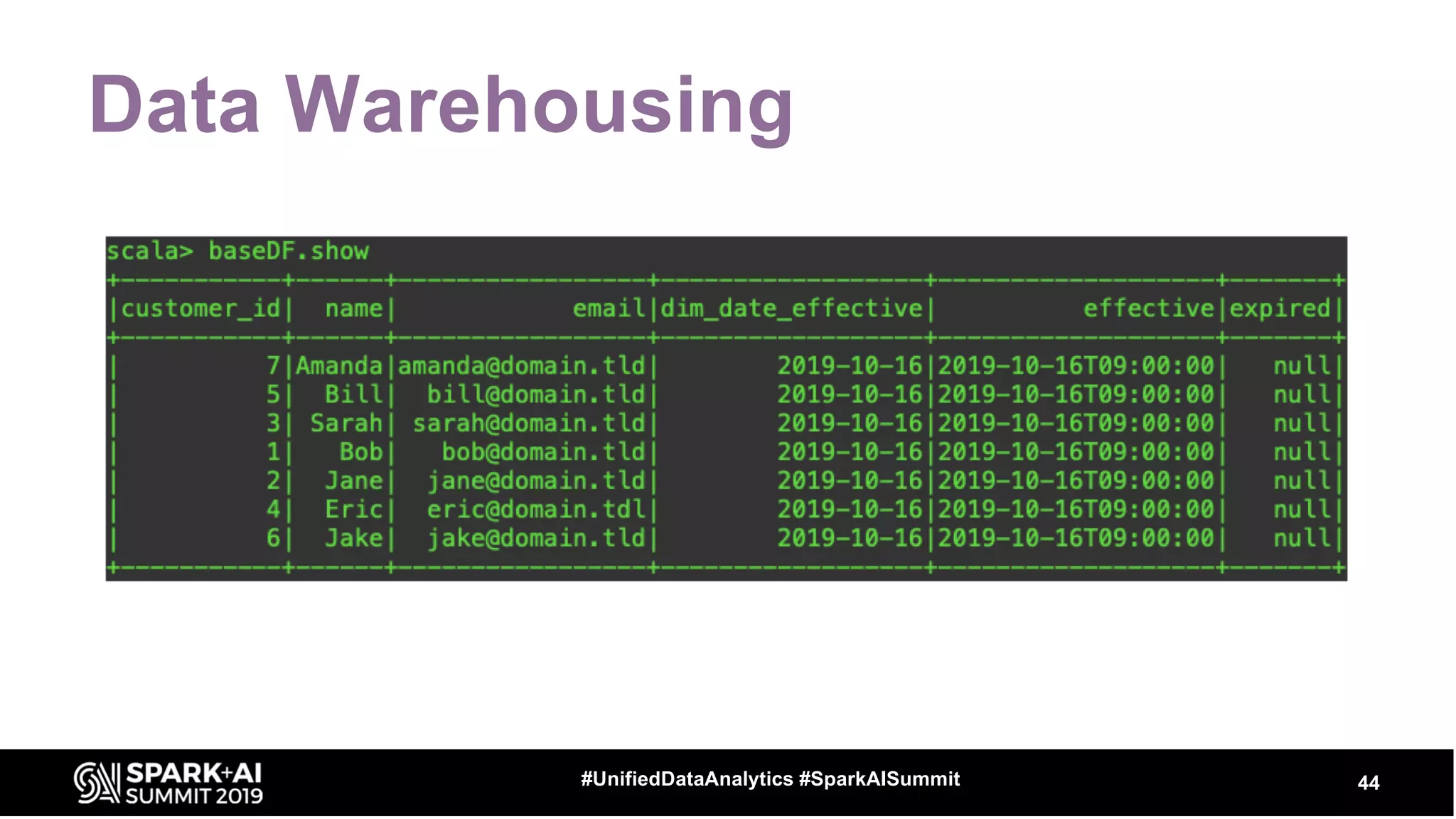

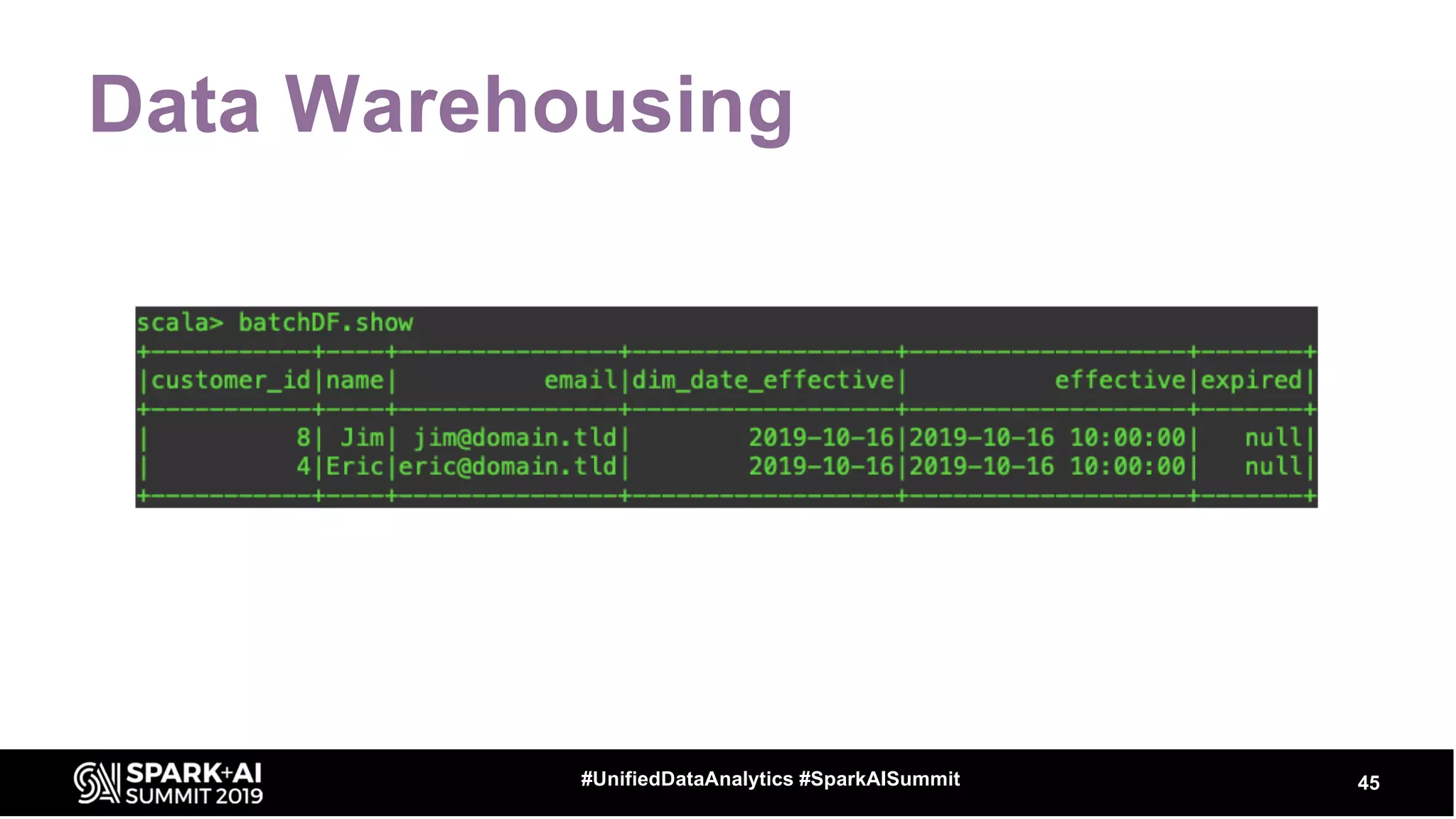

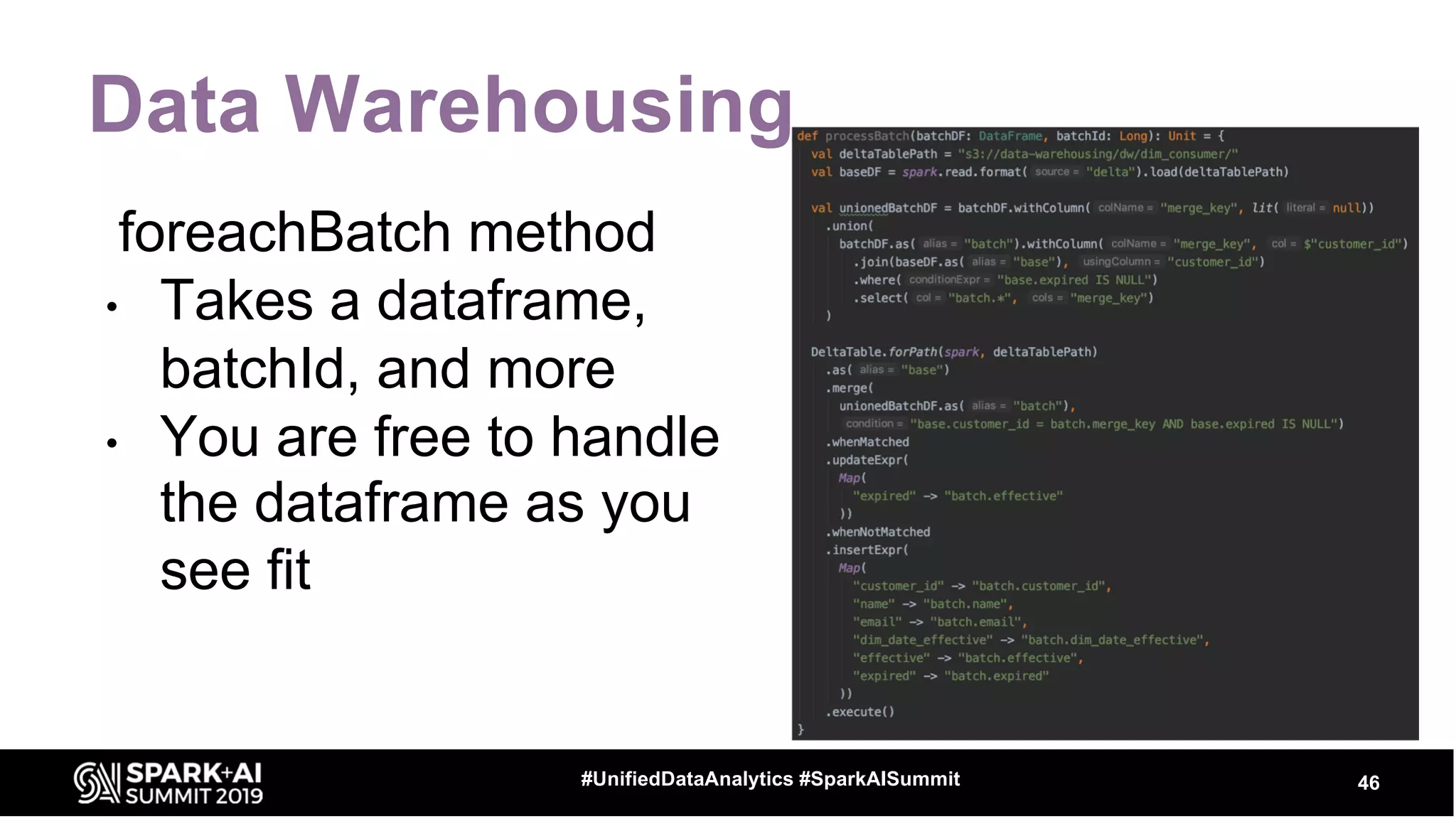

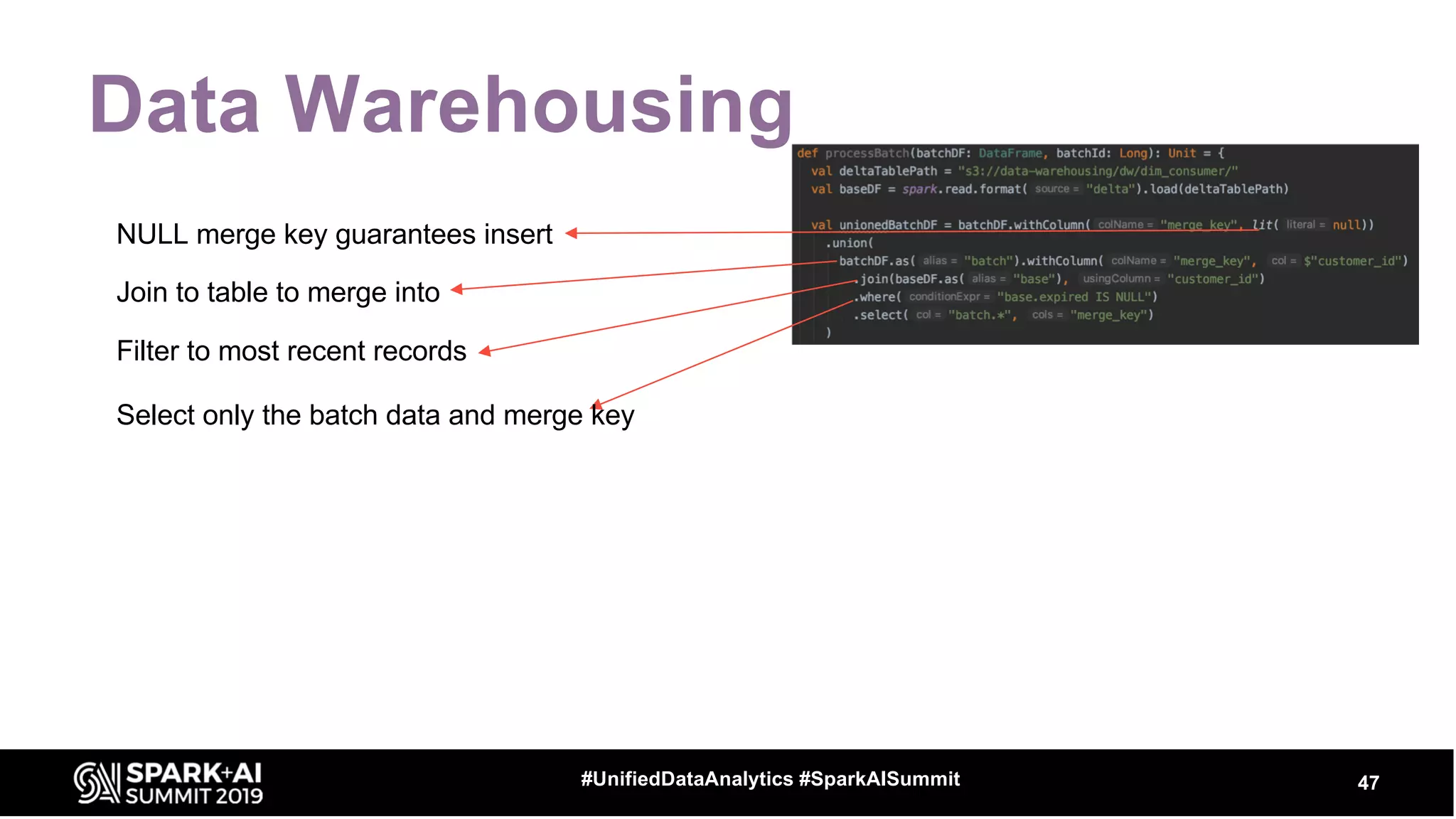

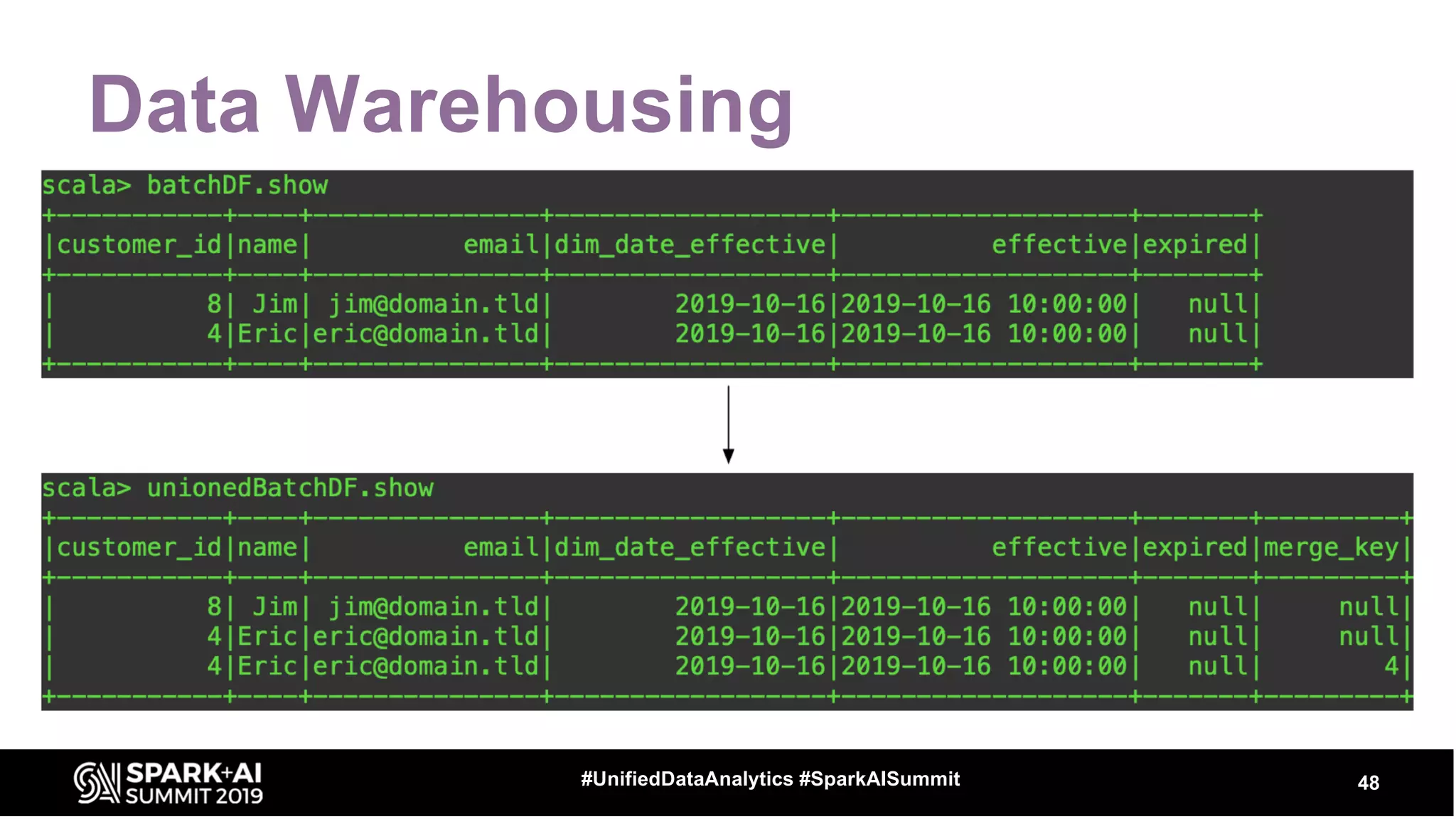

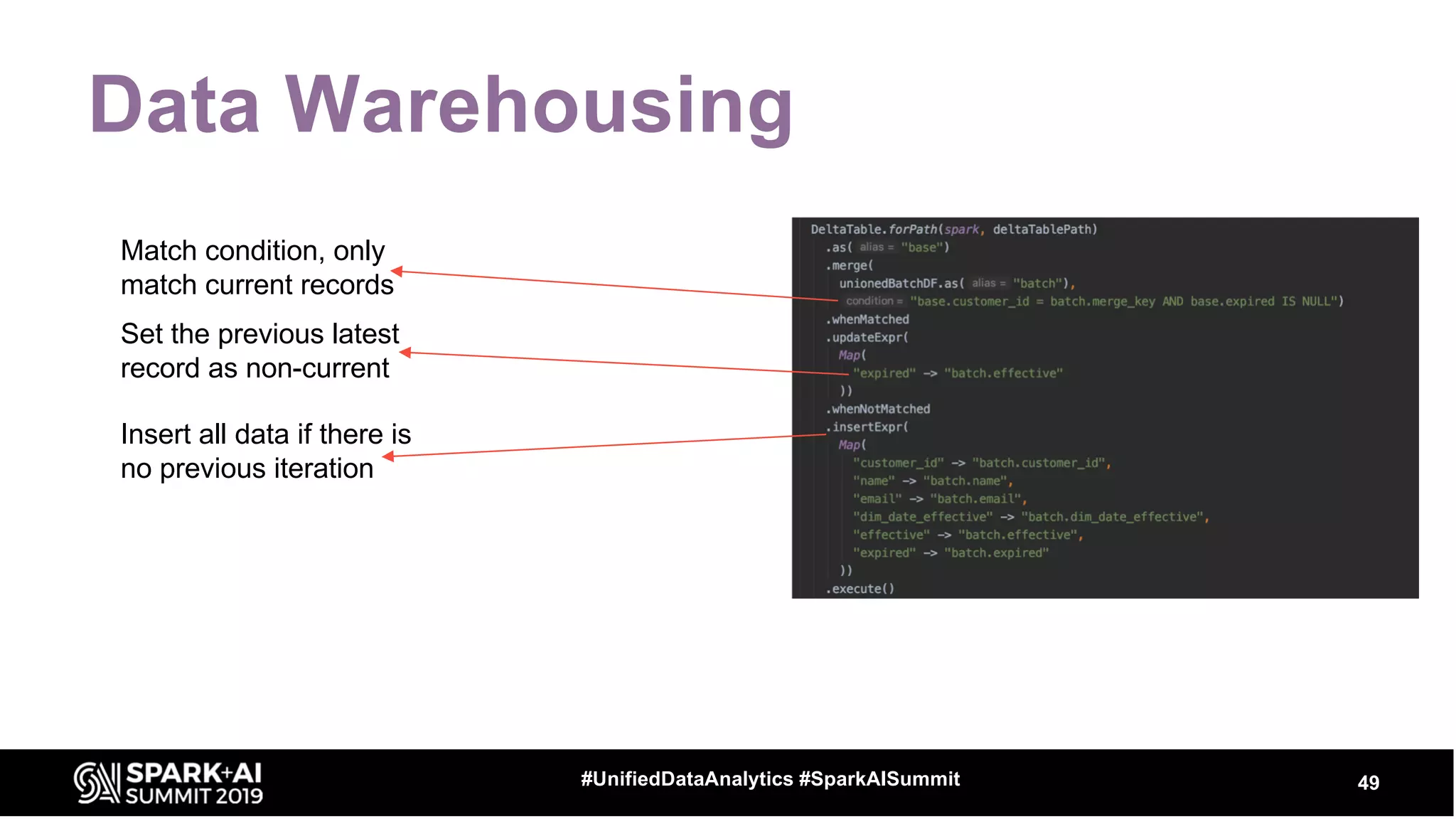

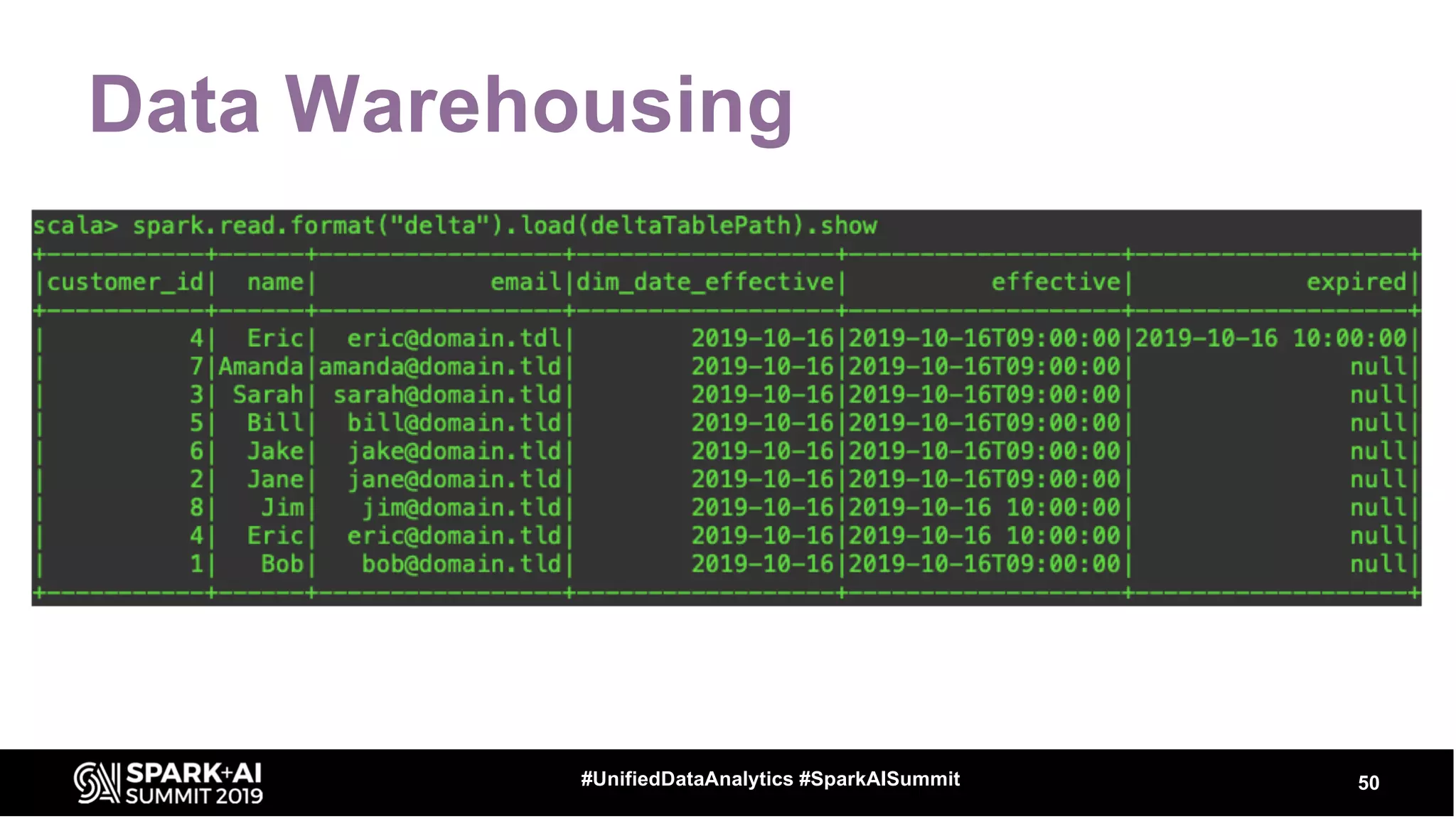

The document gives an overview of Structured Streaming and Delta Lake and their roles in data warehousing. It covers ACID transactions, checkpointing, and late-data handling, along with challenges in file management and streaming joins. The material targets data engineering applications in the event industry and includes practical examples and best practices.