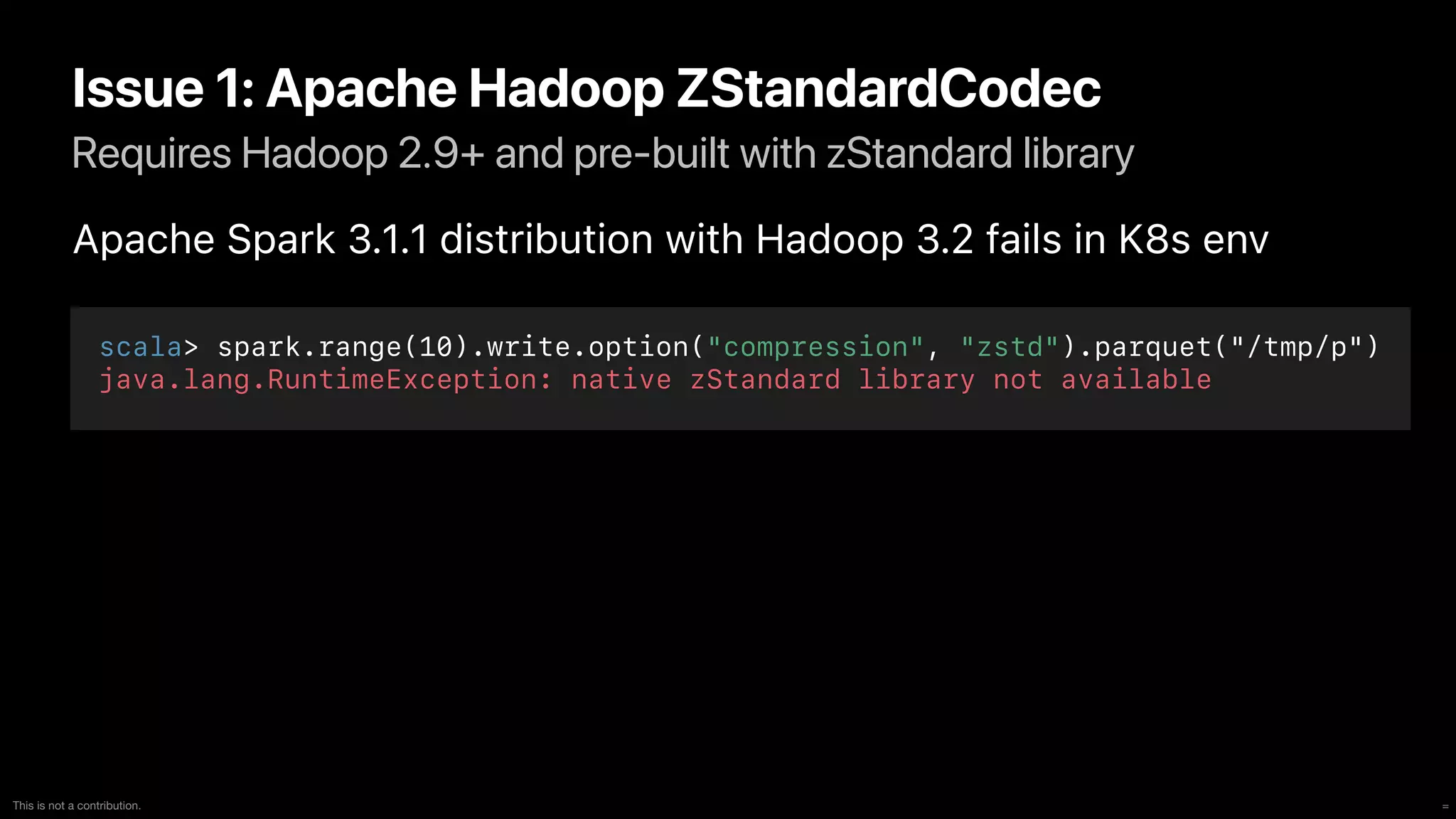

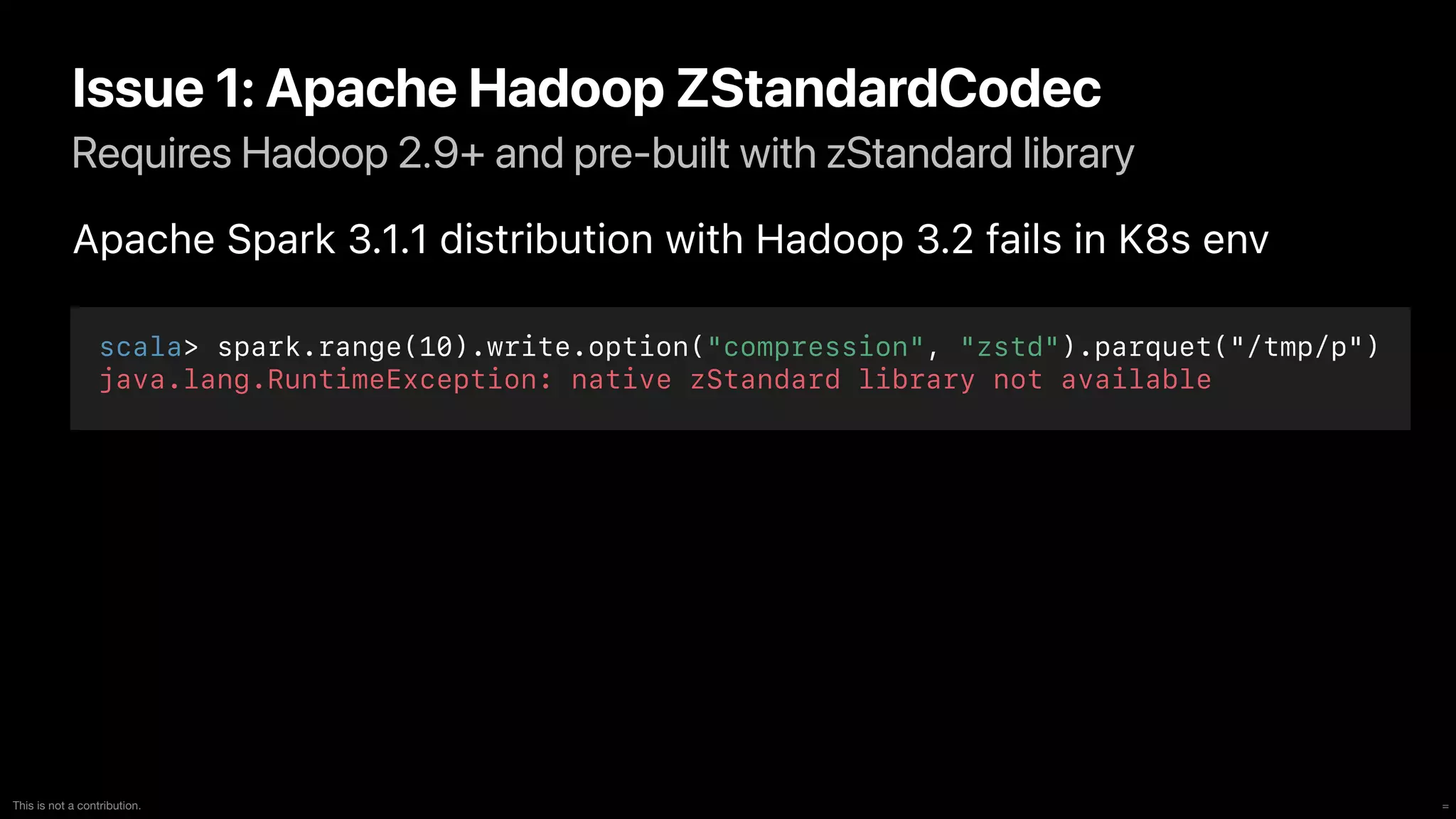

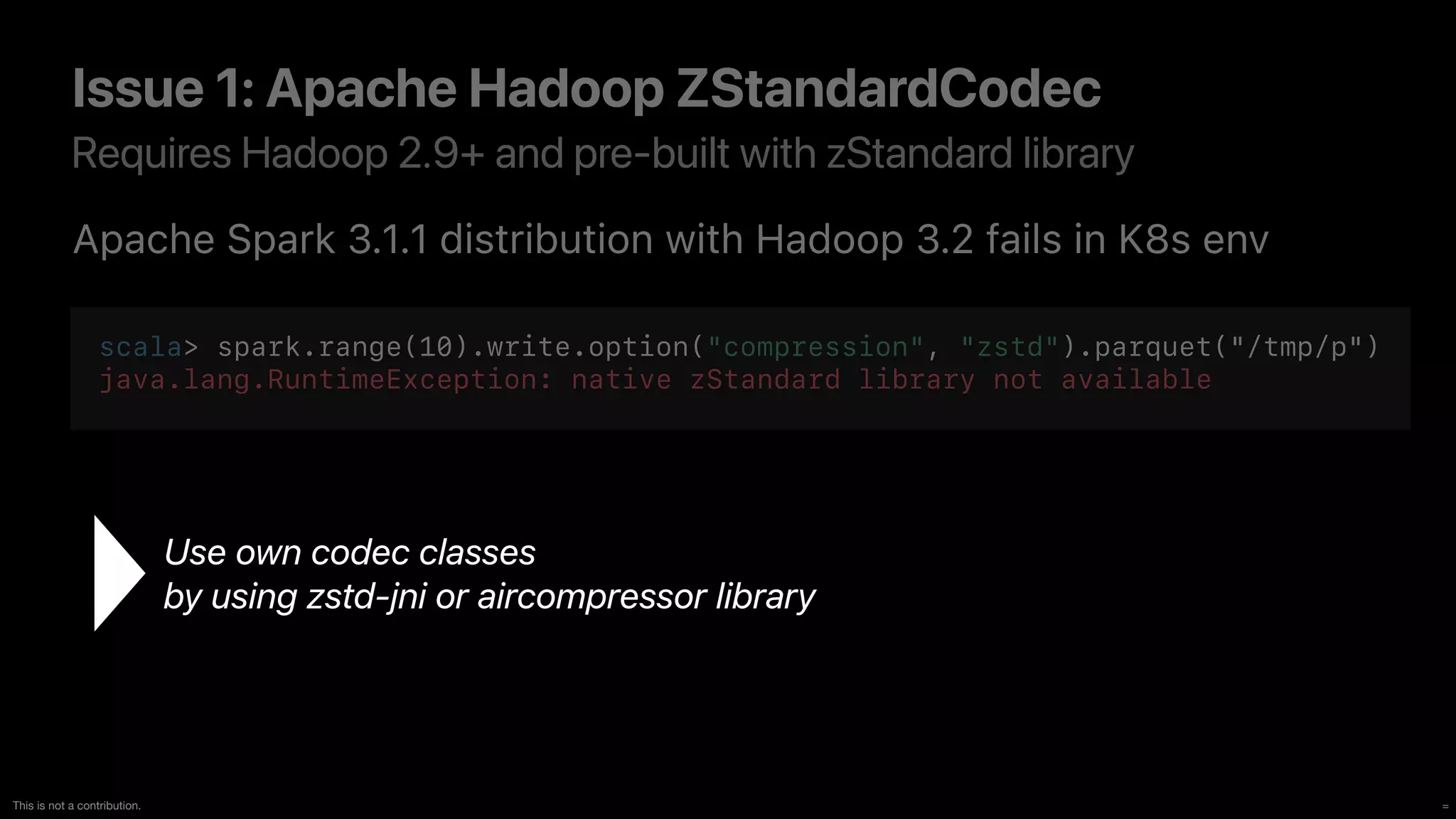

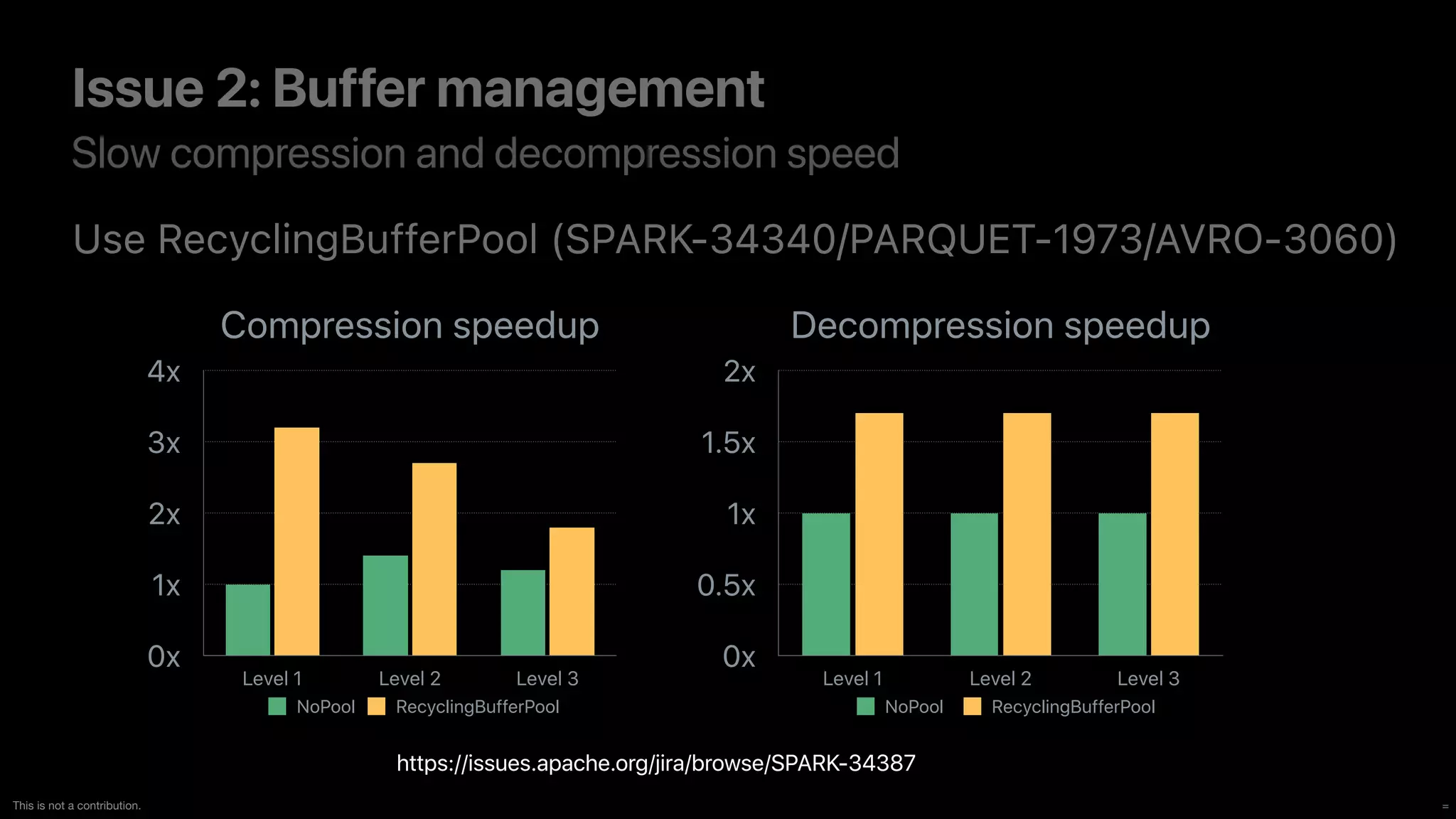

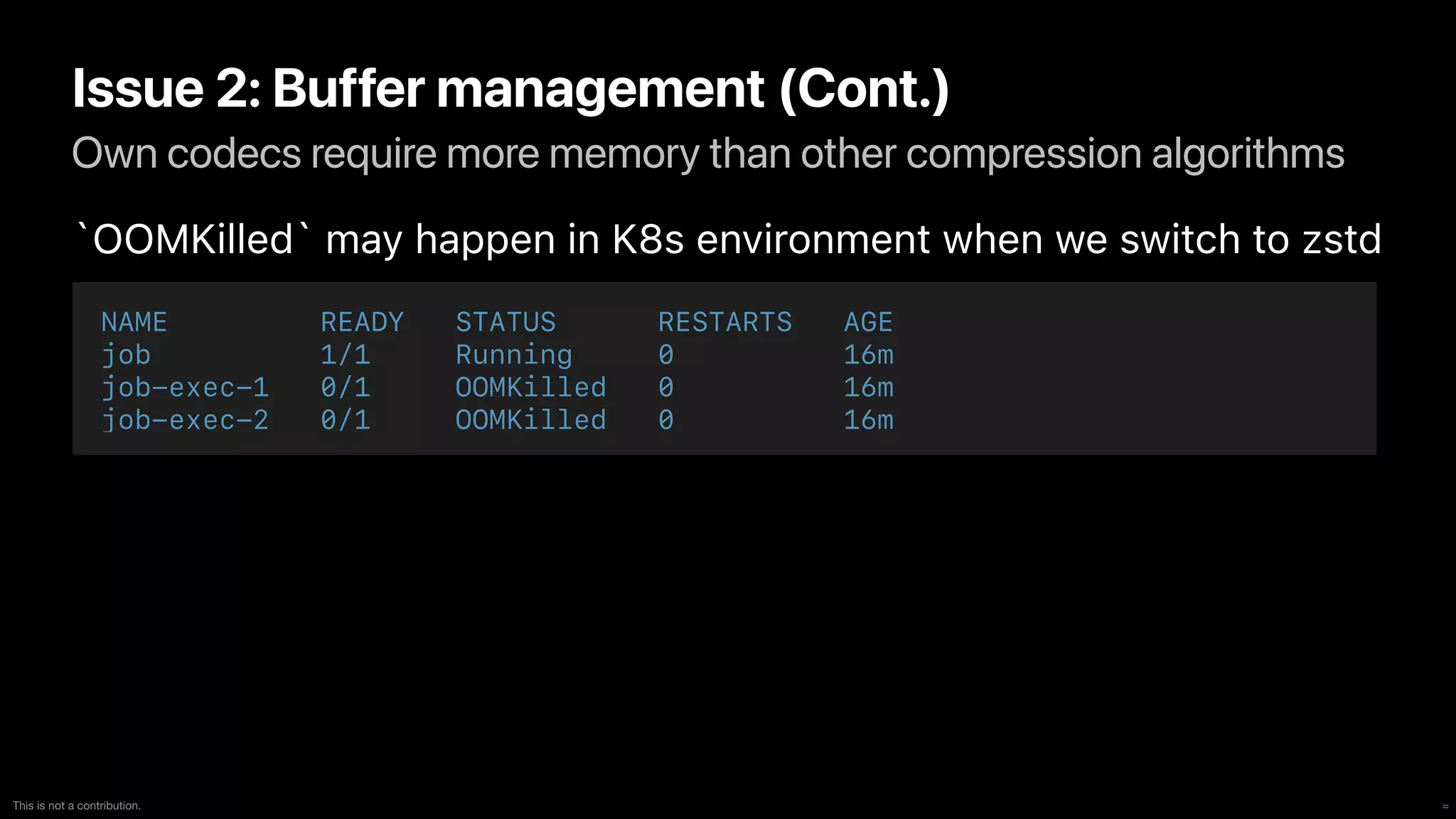

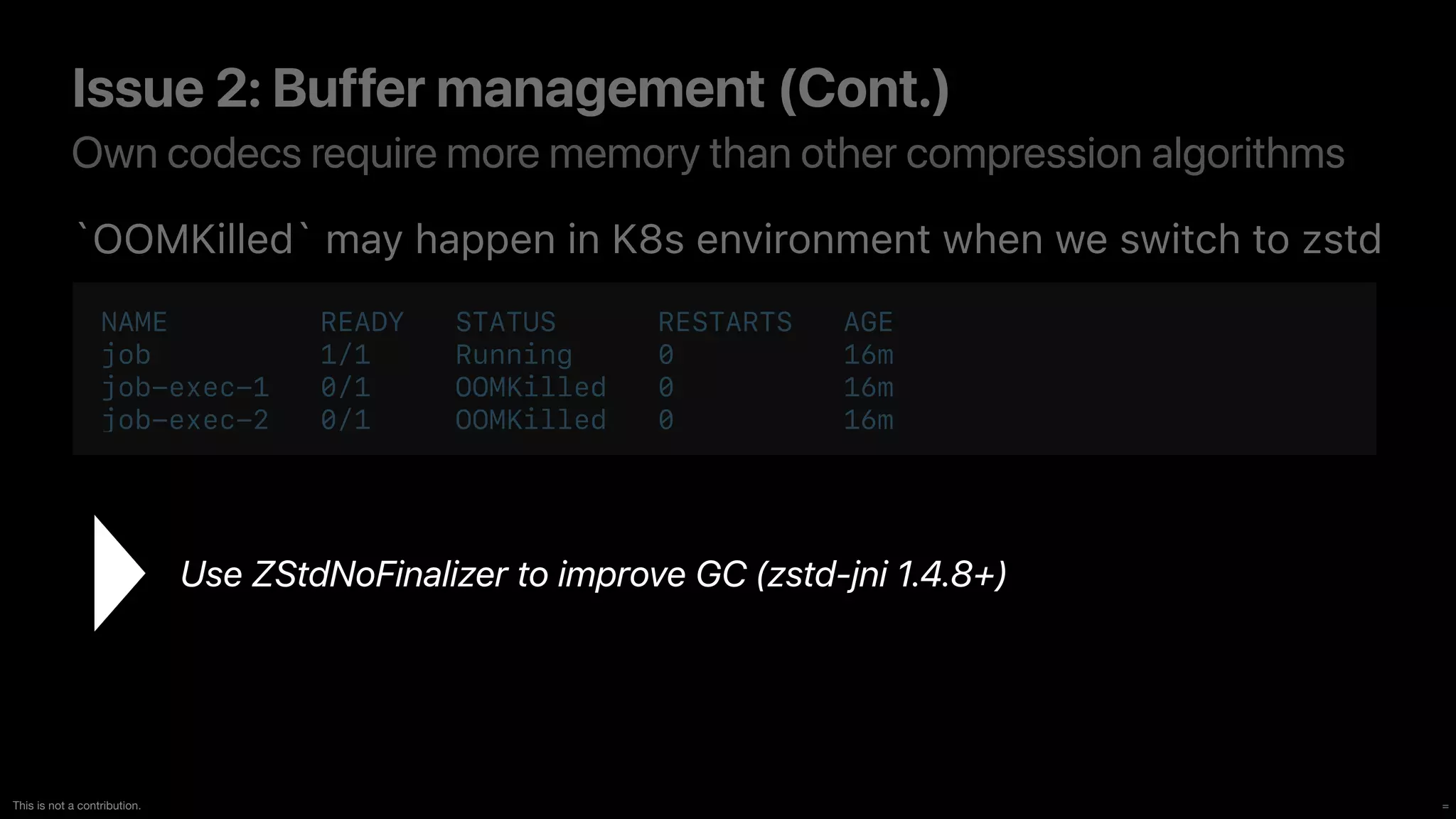

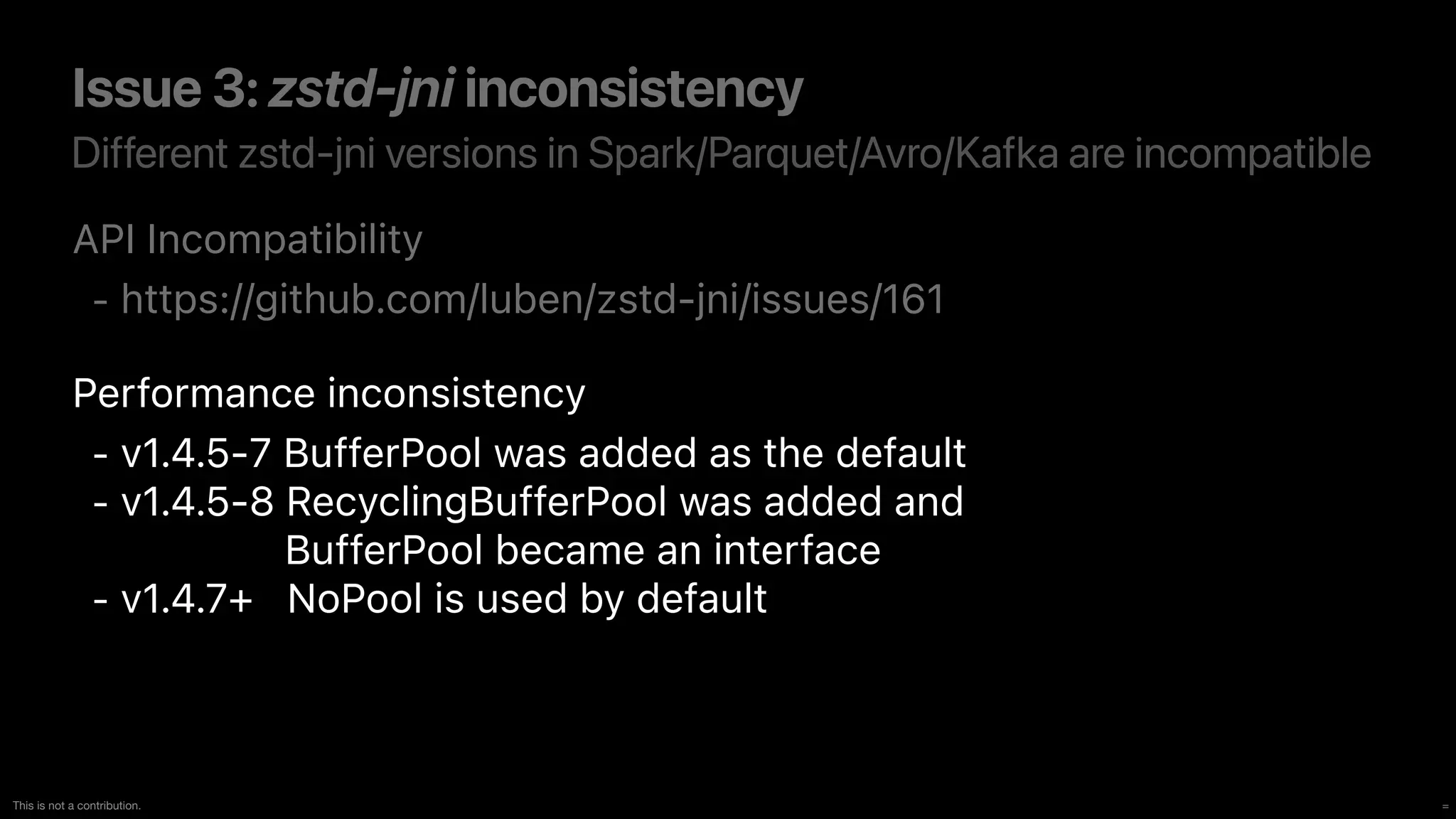

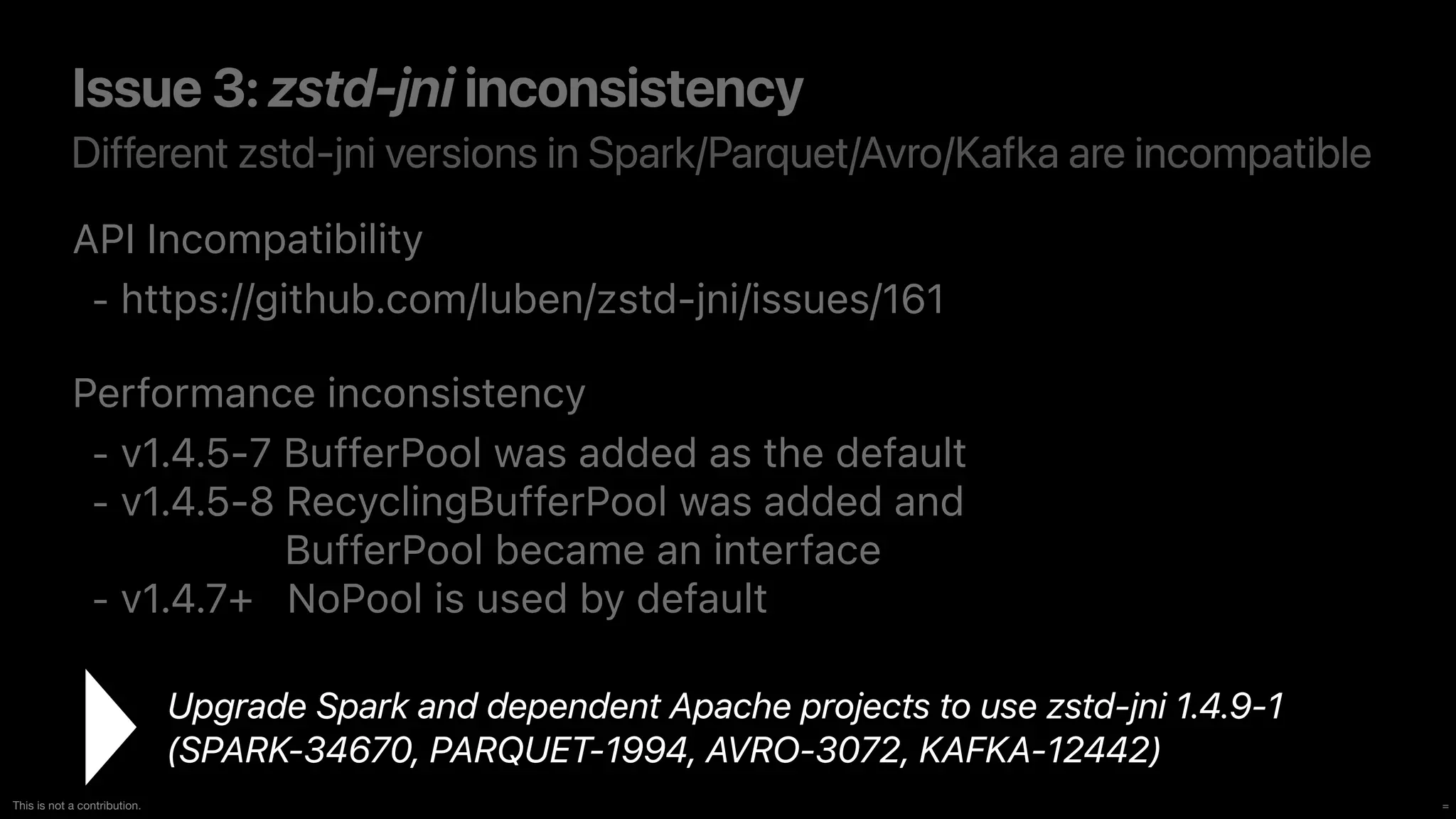

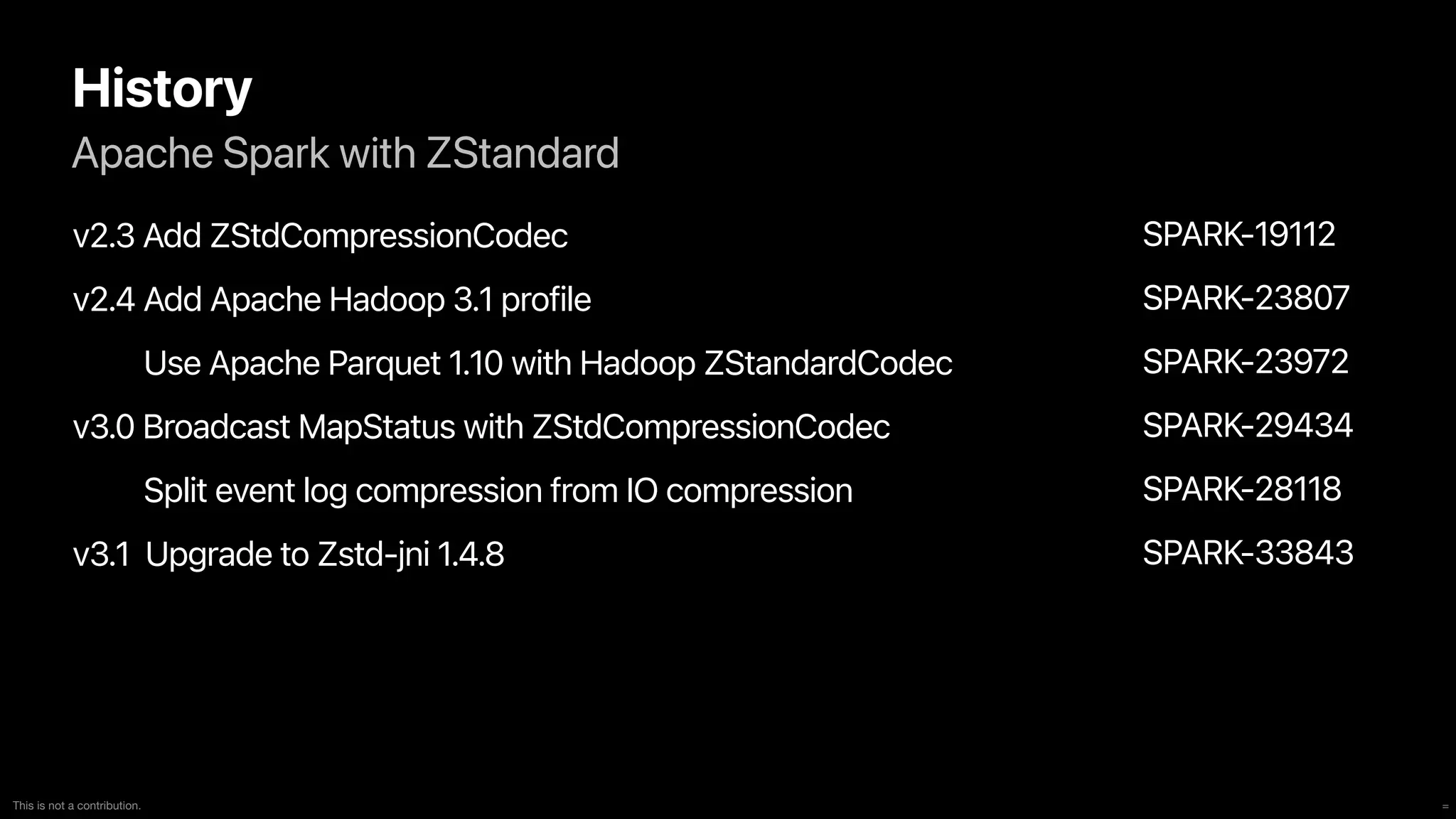

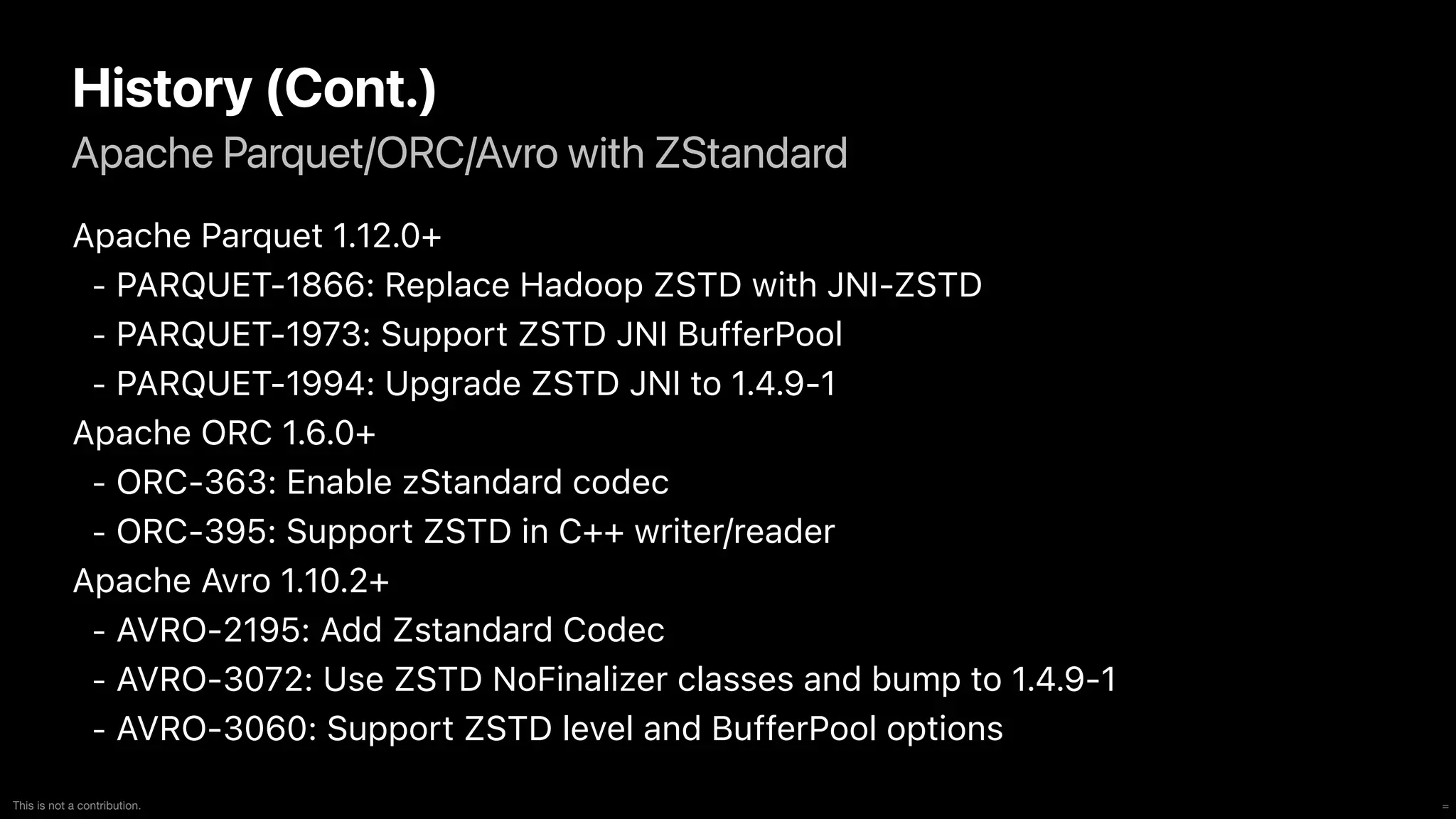

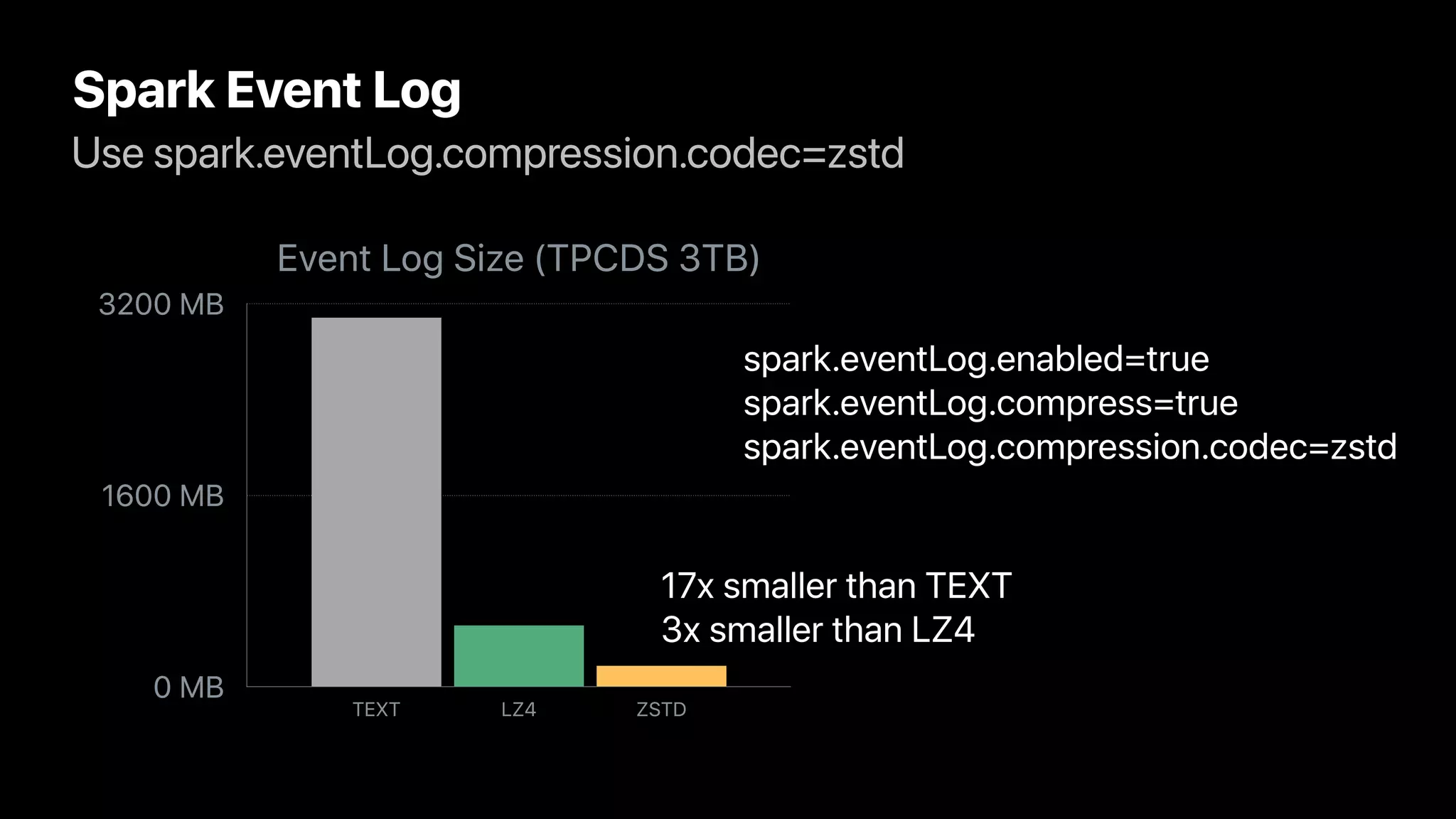

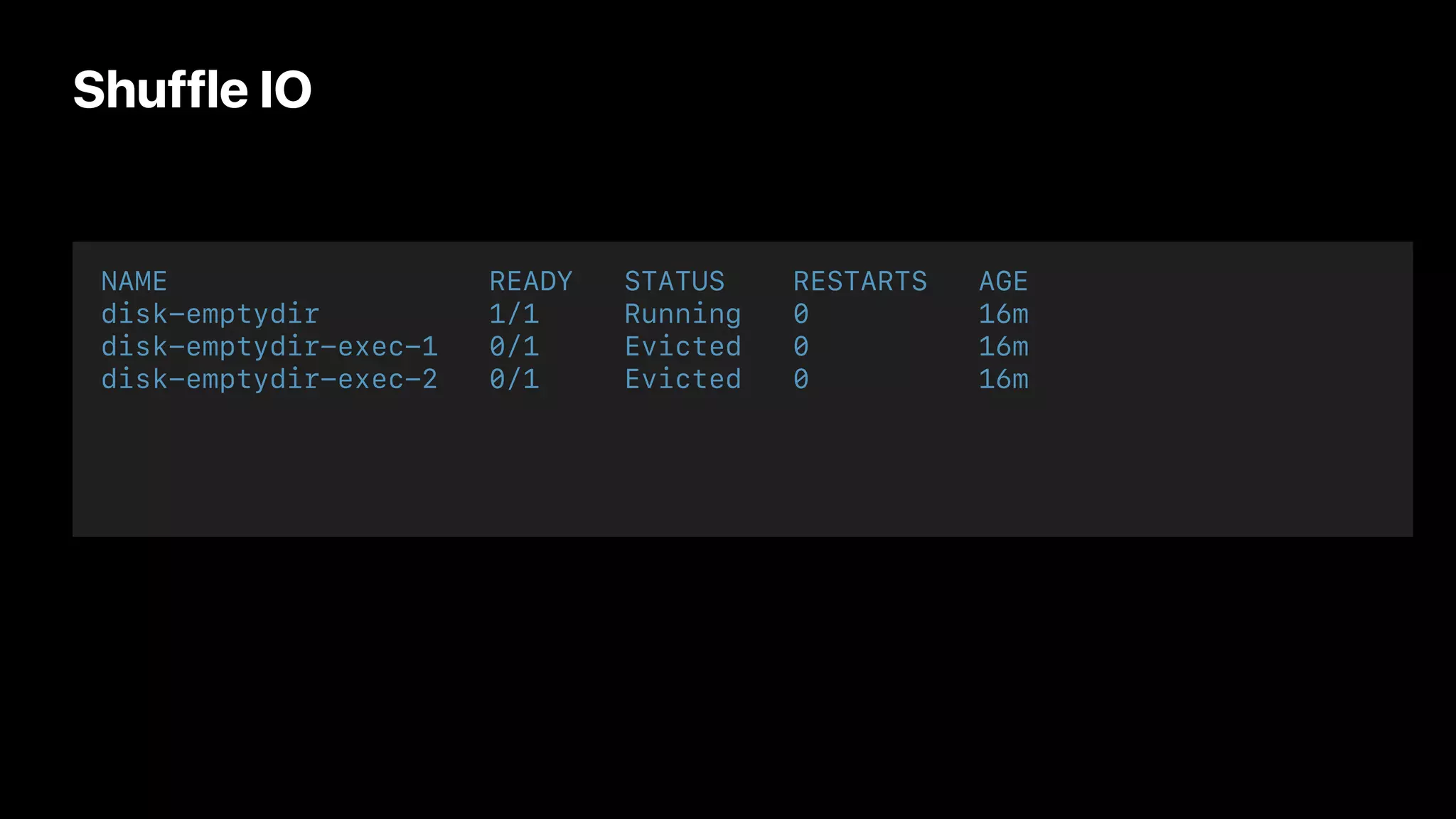

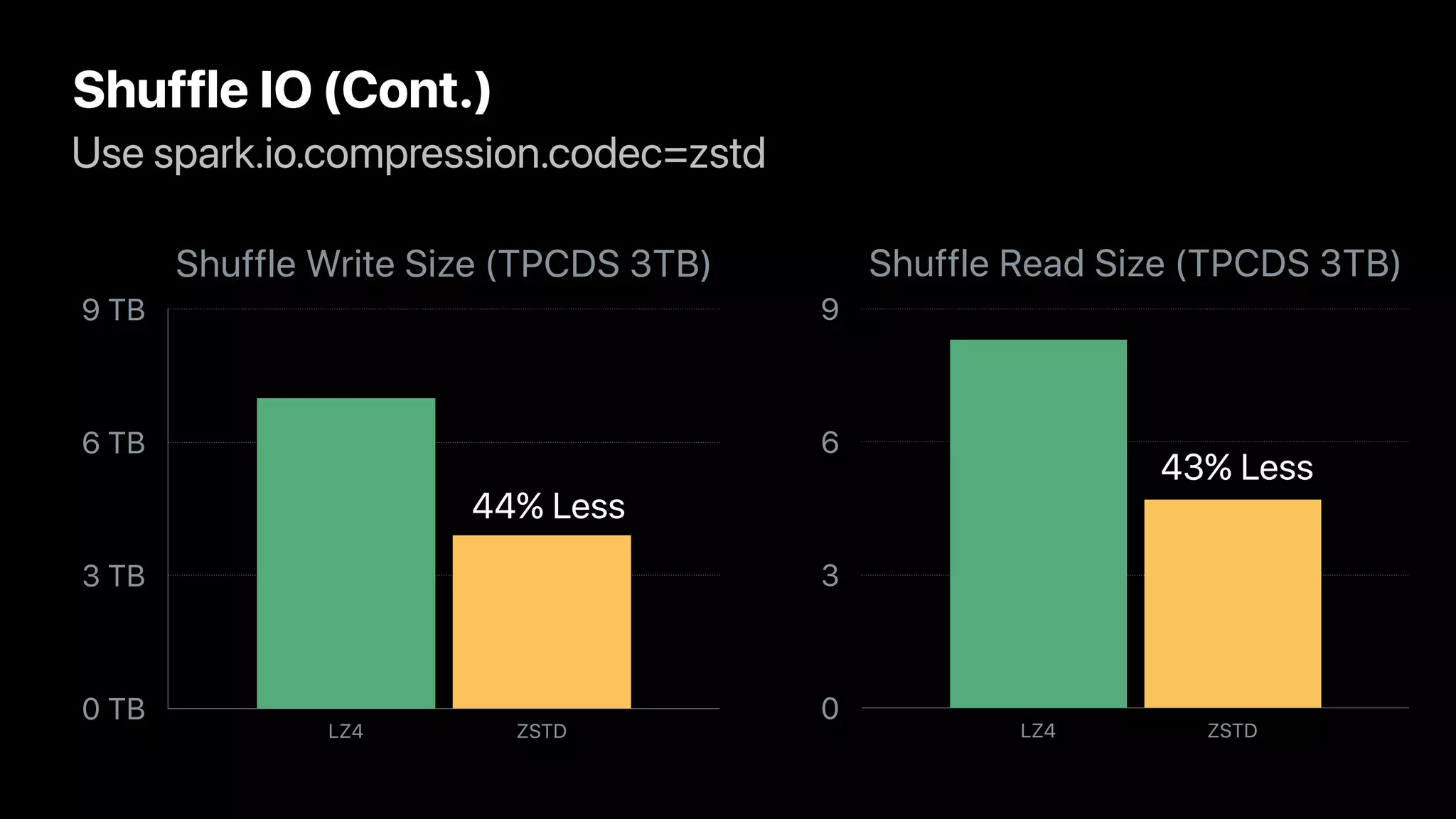

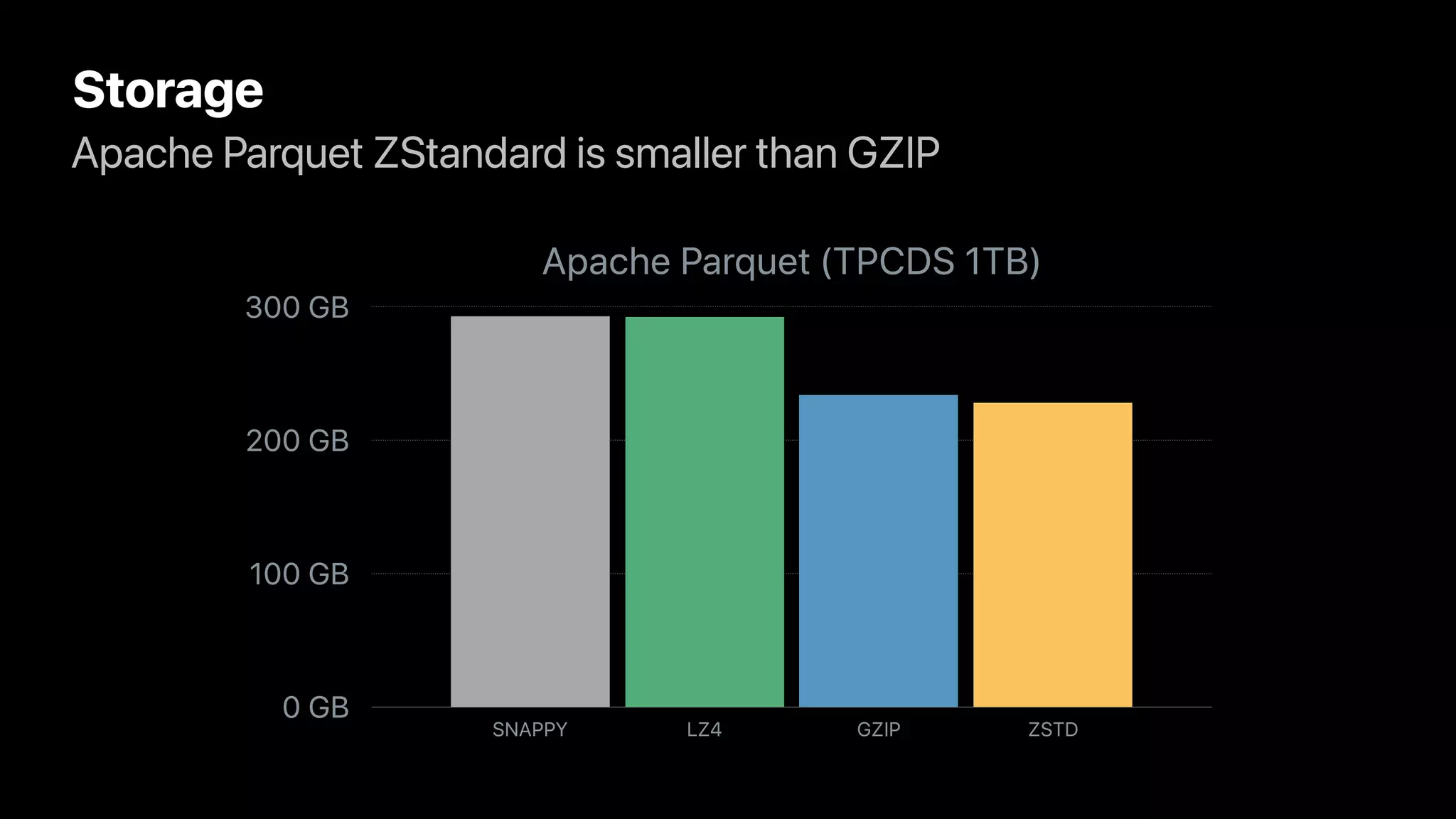

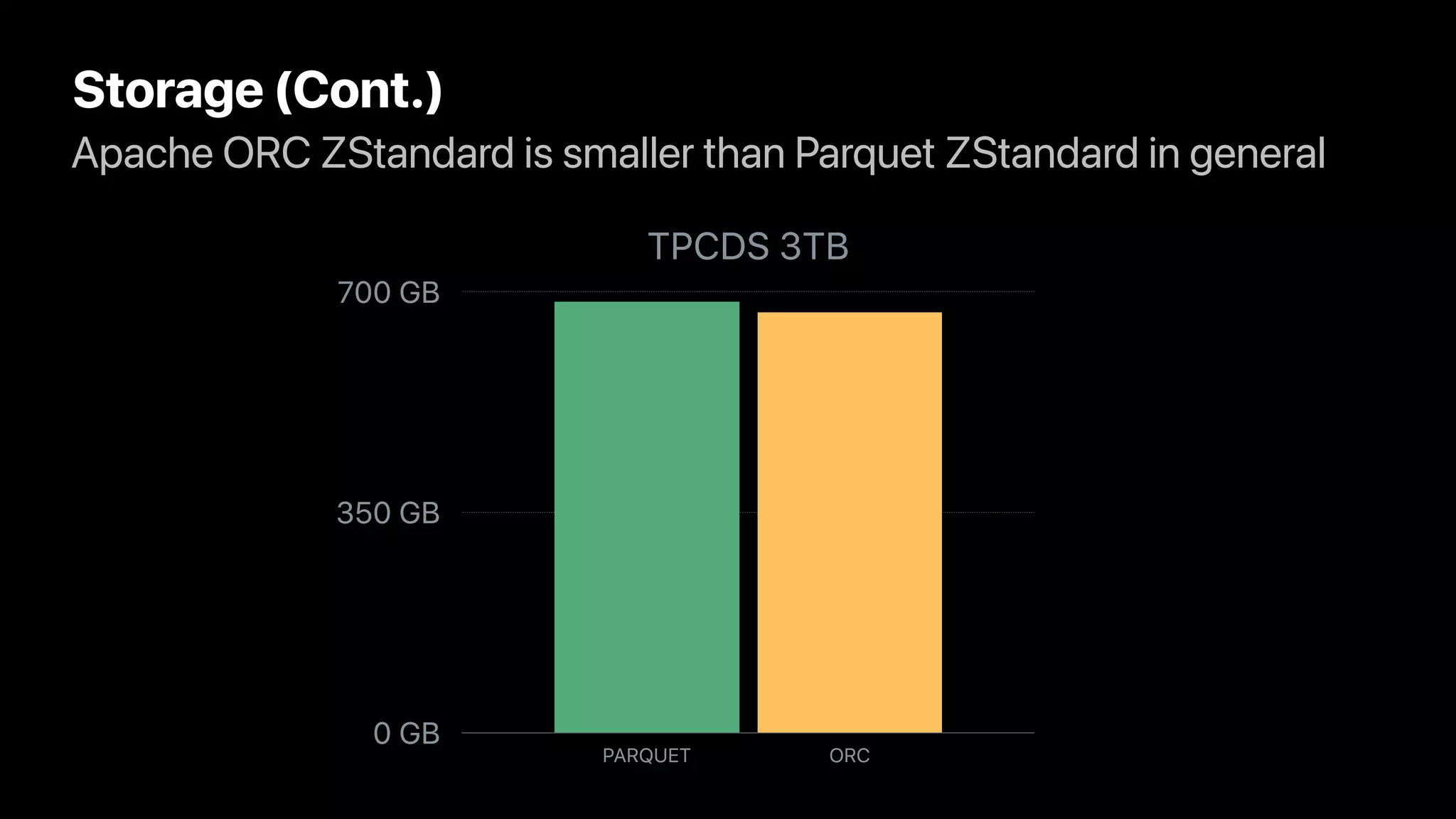

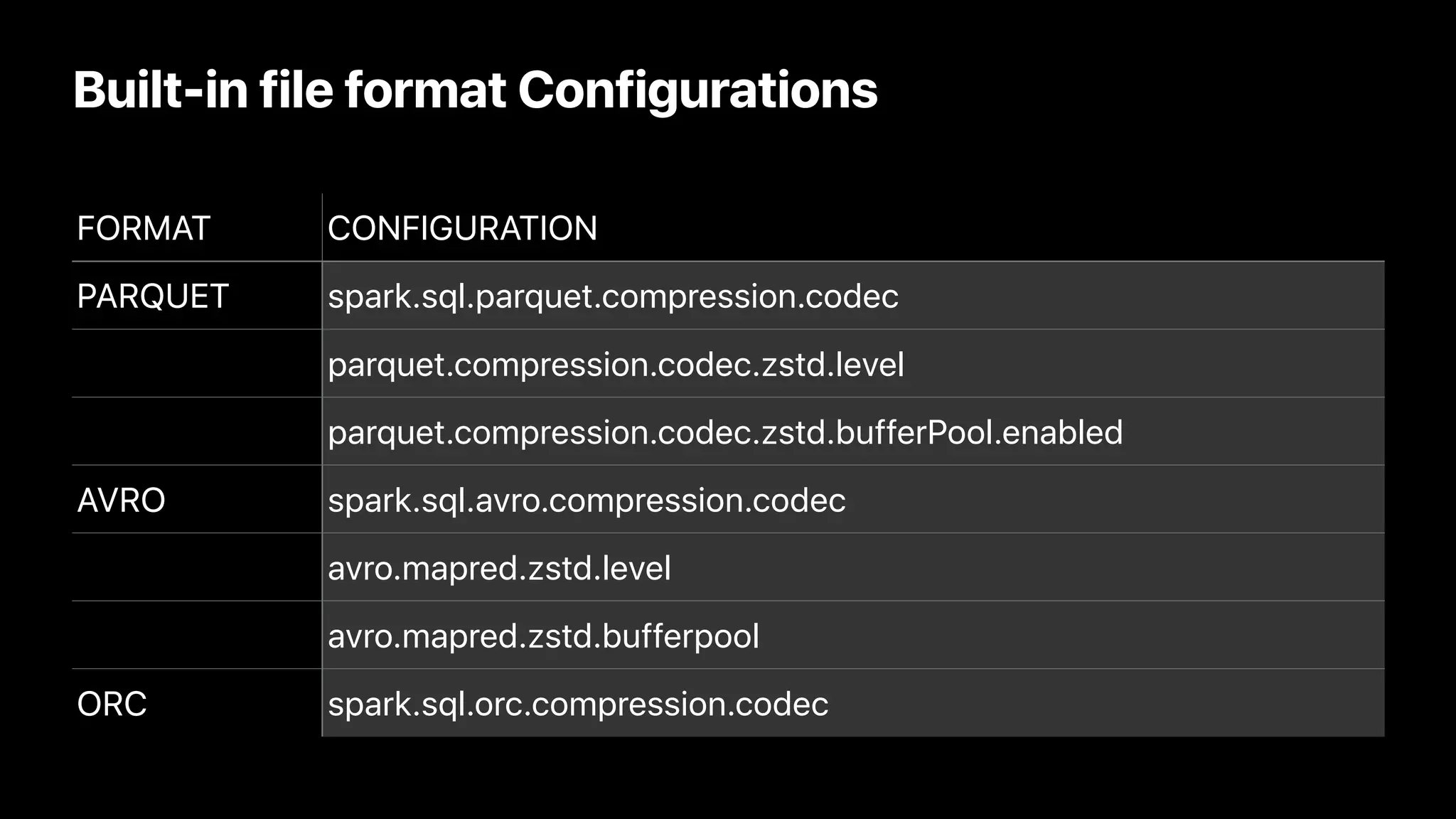

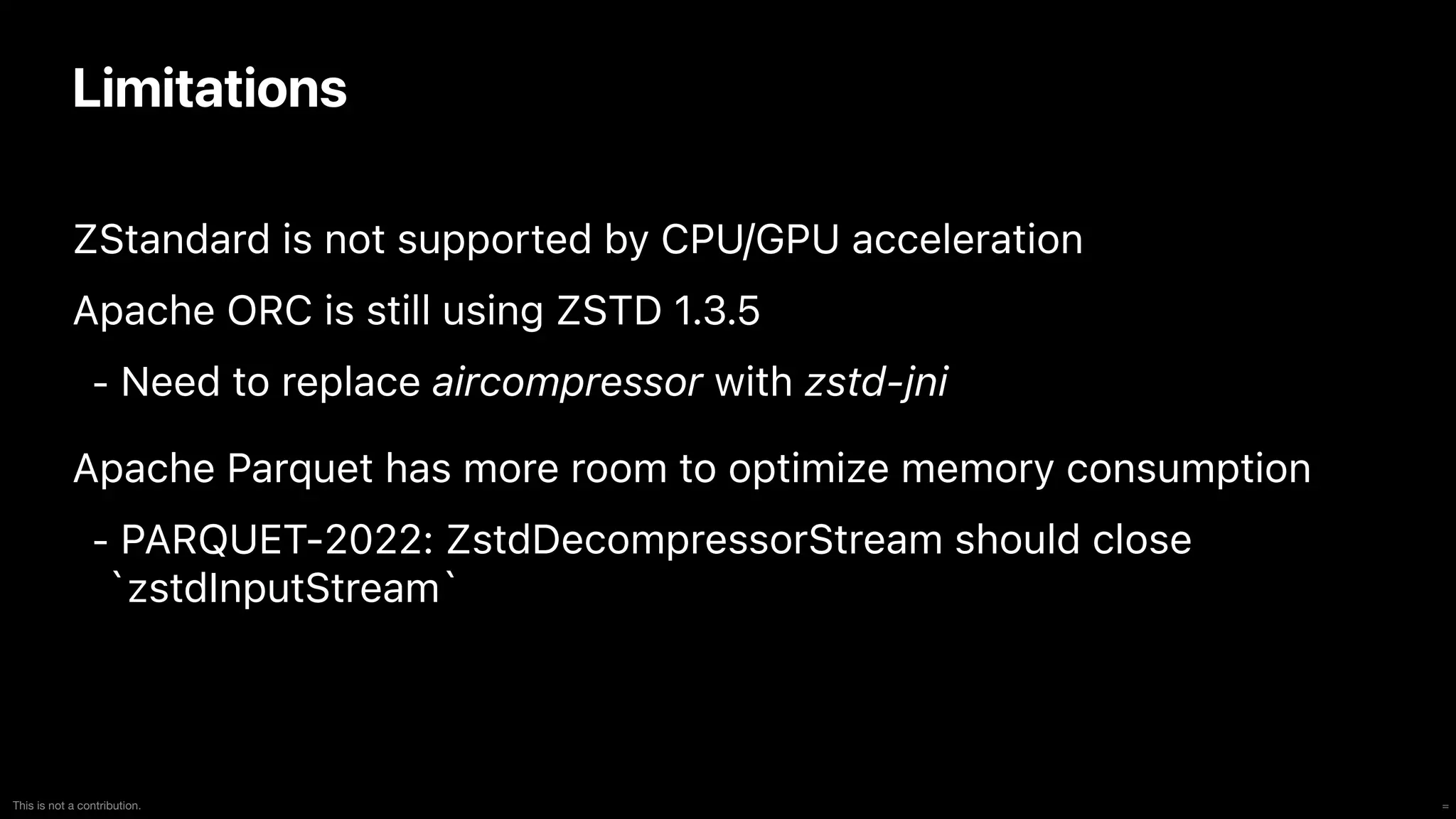

The document discusses the implementation and performance of the Zstandard (zstd) compression algorithm in Apache Spark and related projects. It covers issues such as compatibility, buffer management, and memory consumption. It highlights the gains Zstandard brings in compression ratio and speed, and it also describes the codec's limitations and the use cases where it handles data most efficiently. Finally, it gives historical context for how Zstandard was integrated into data formats such as Apache Parquet, ORC, and Avro.