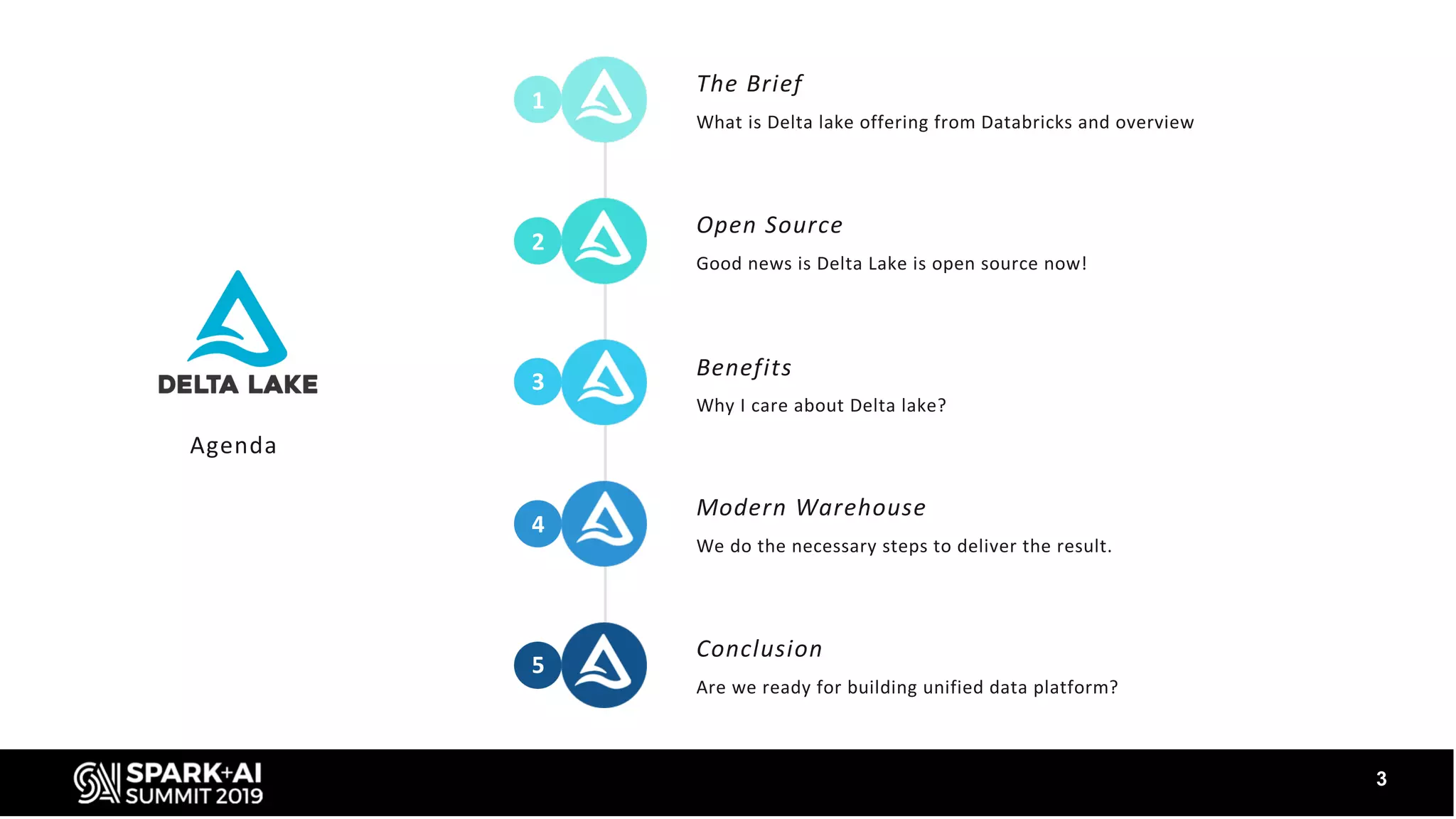

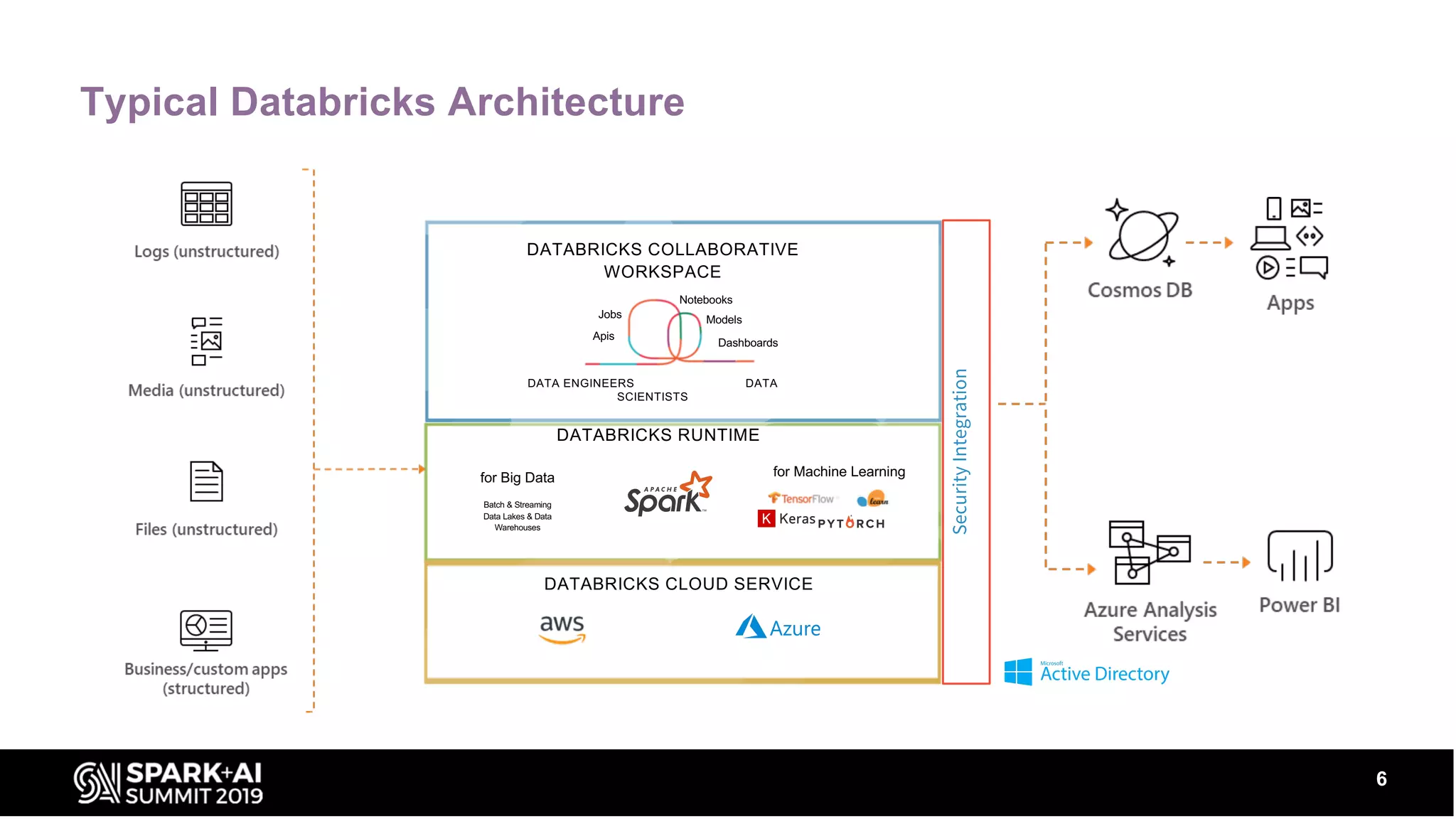

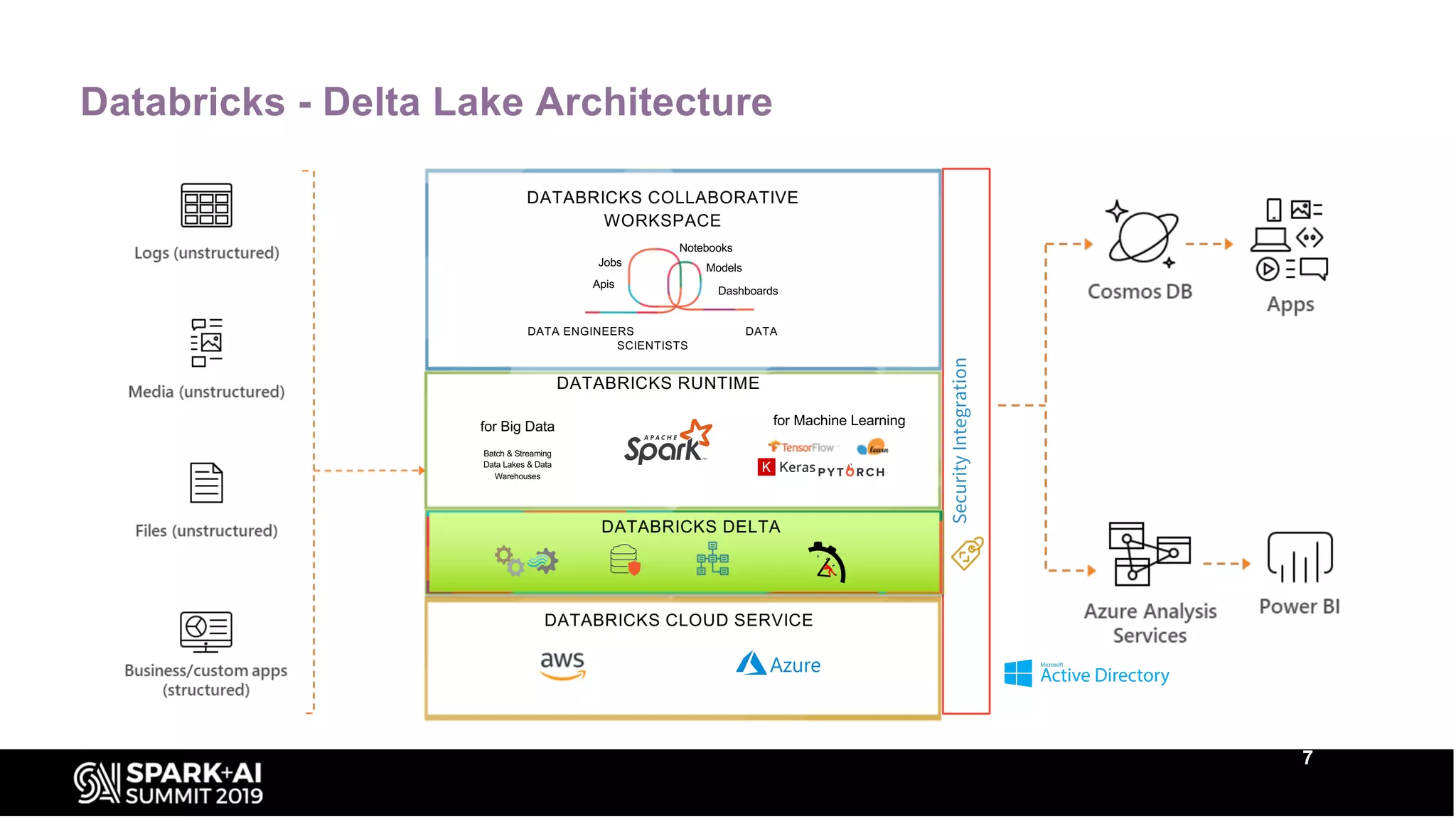

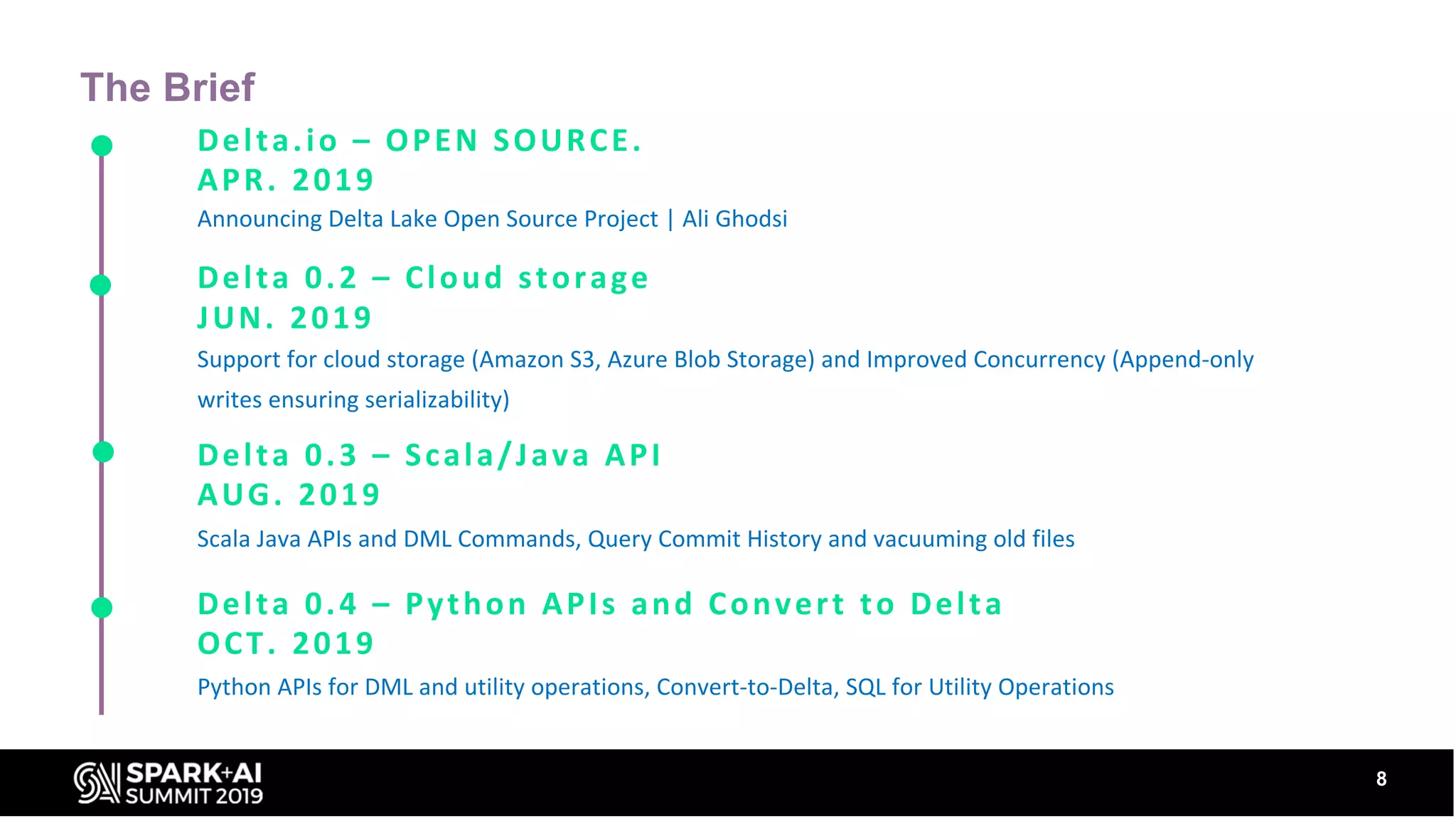

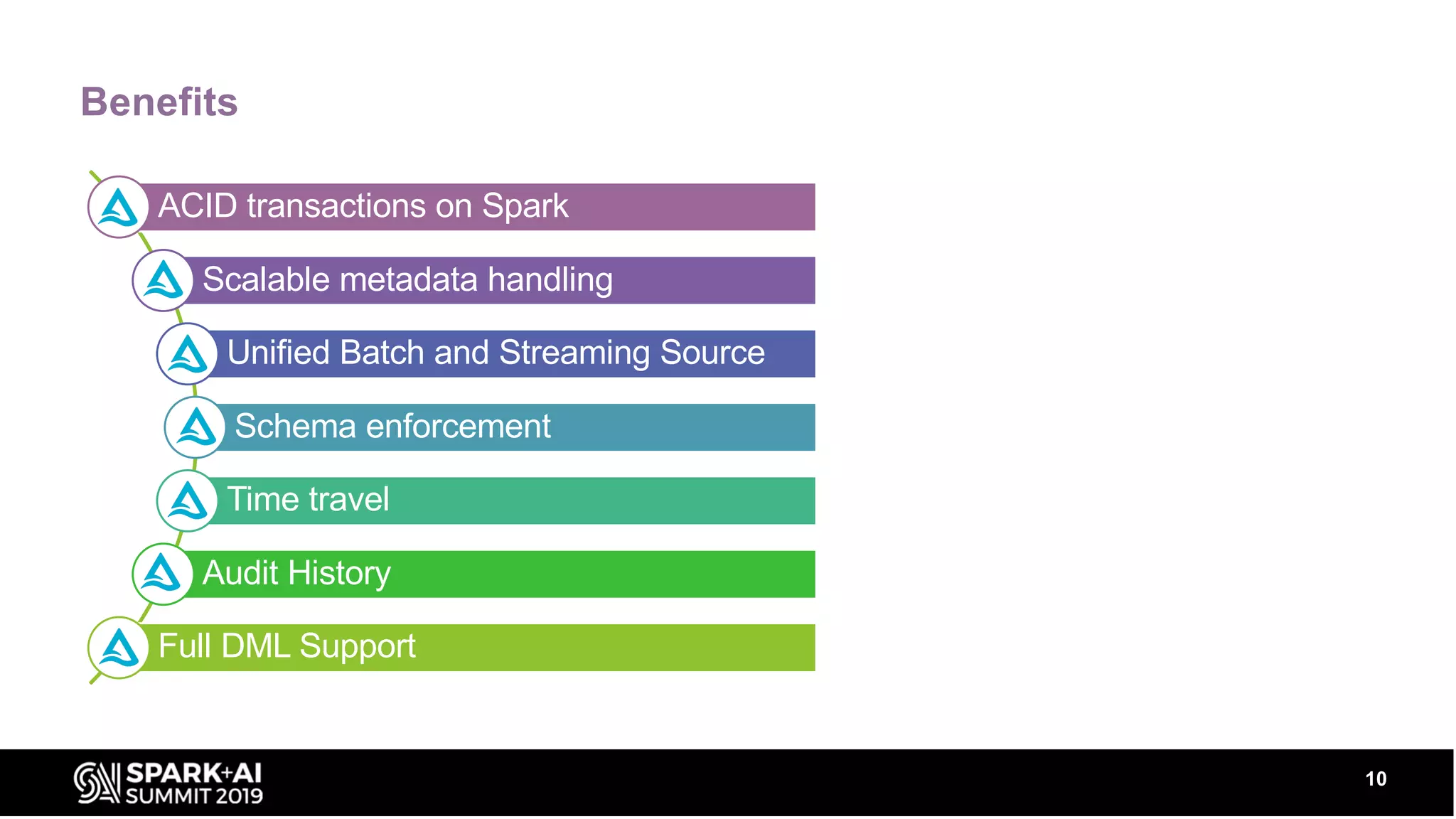

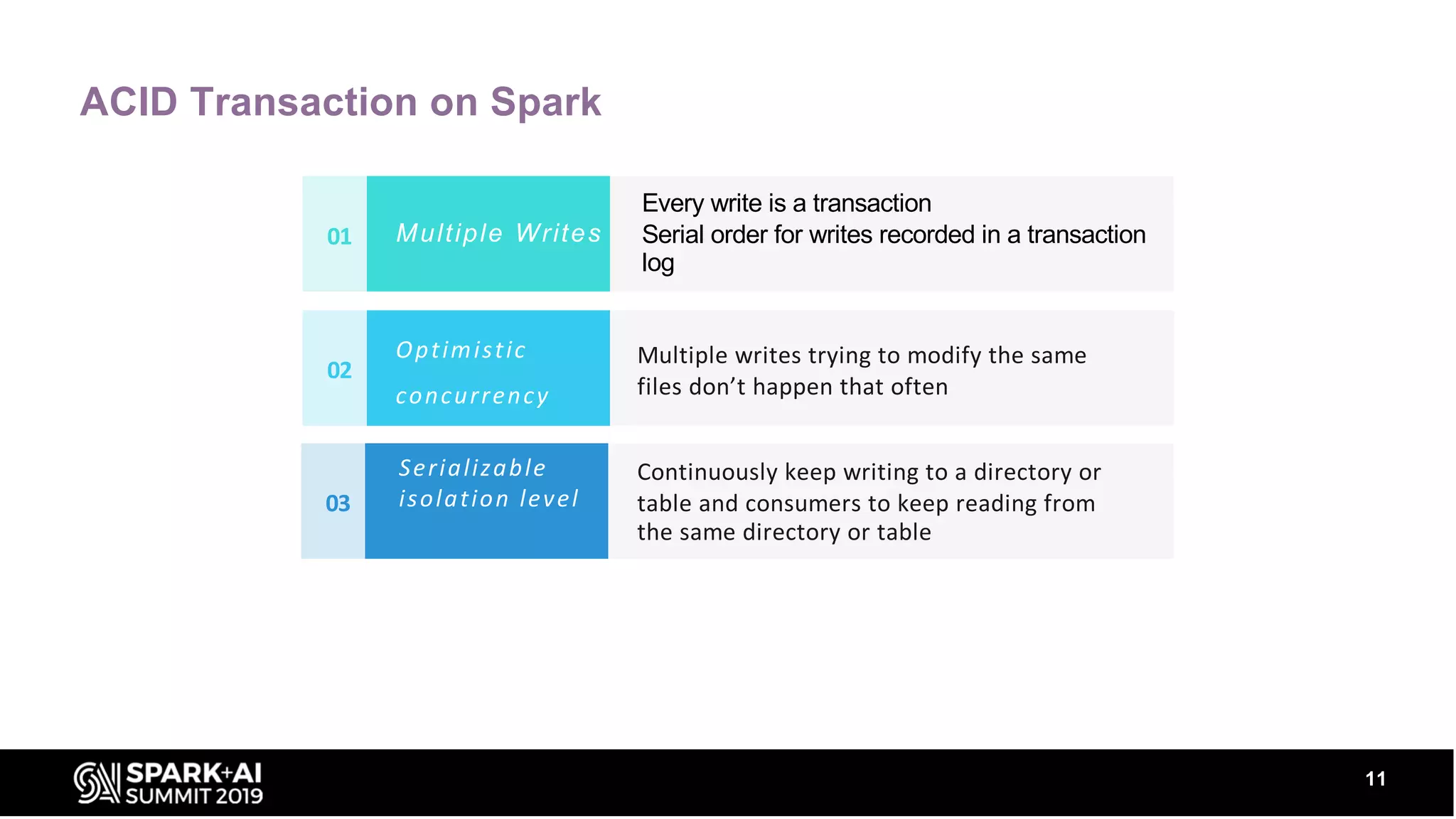

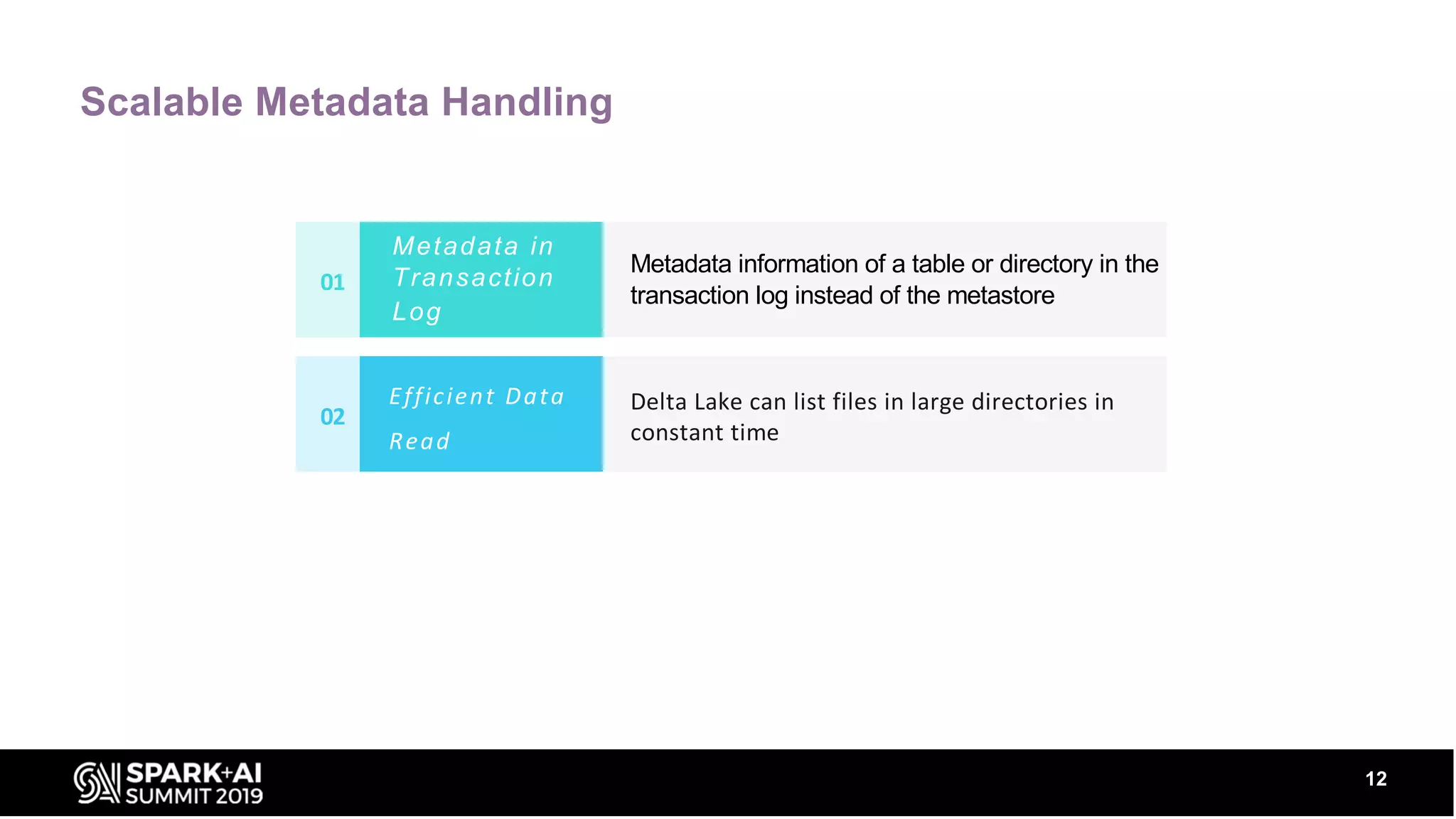

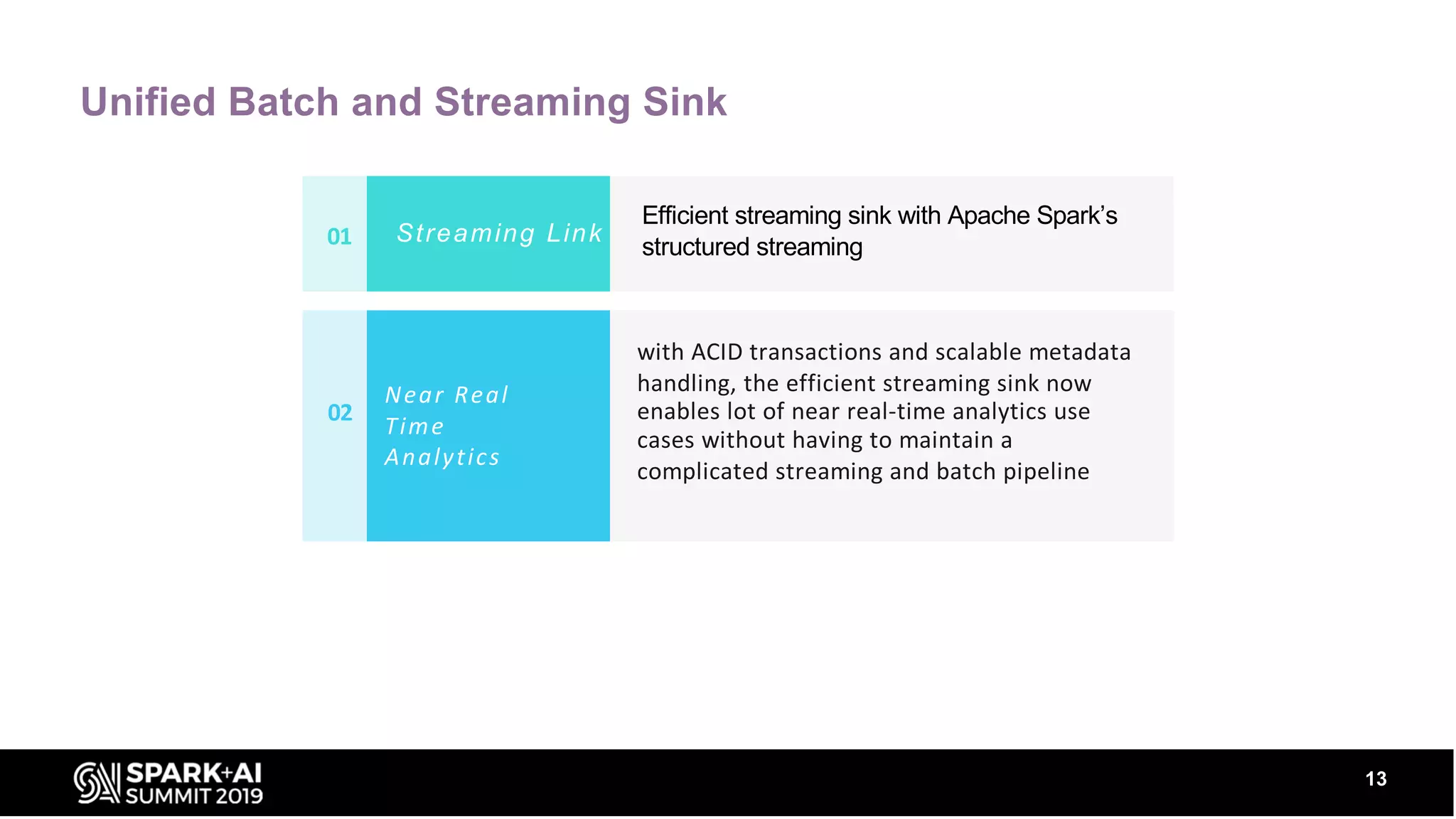

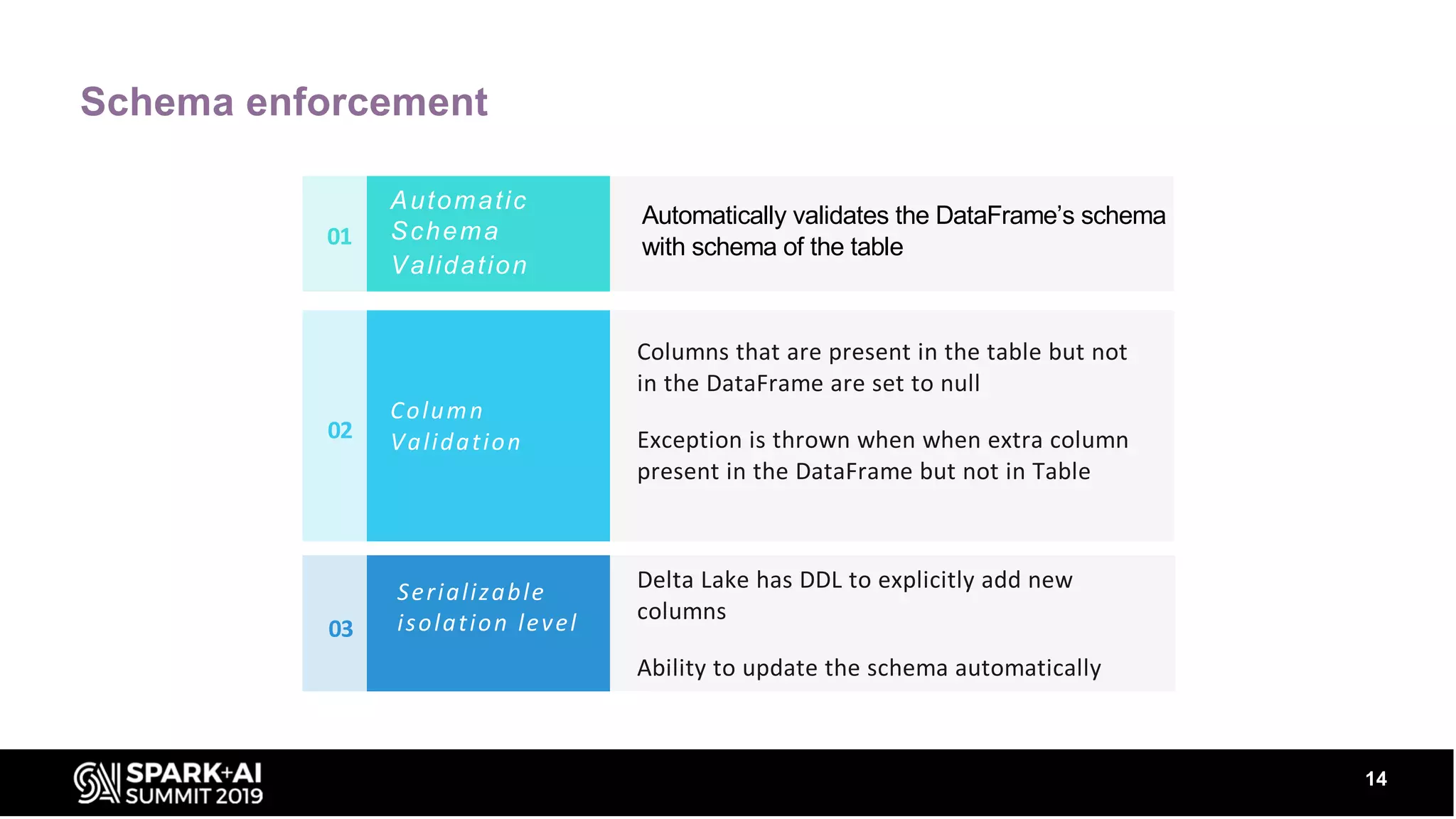

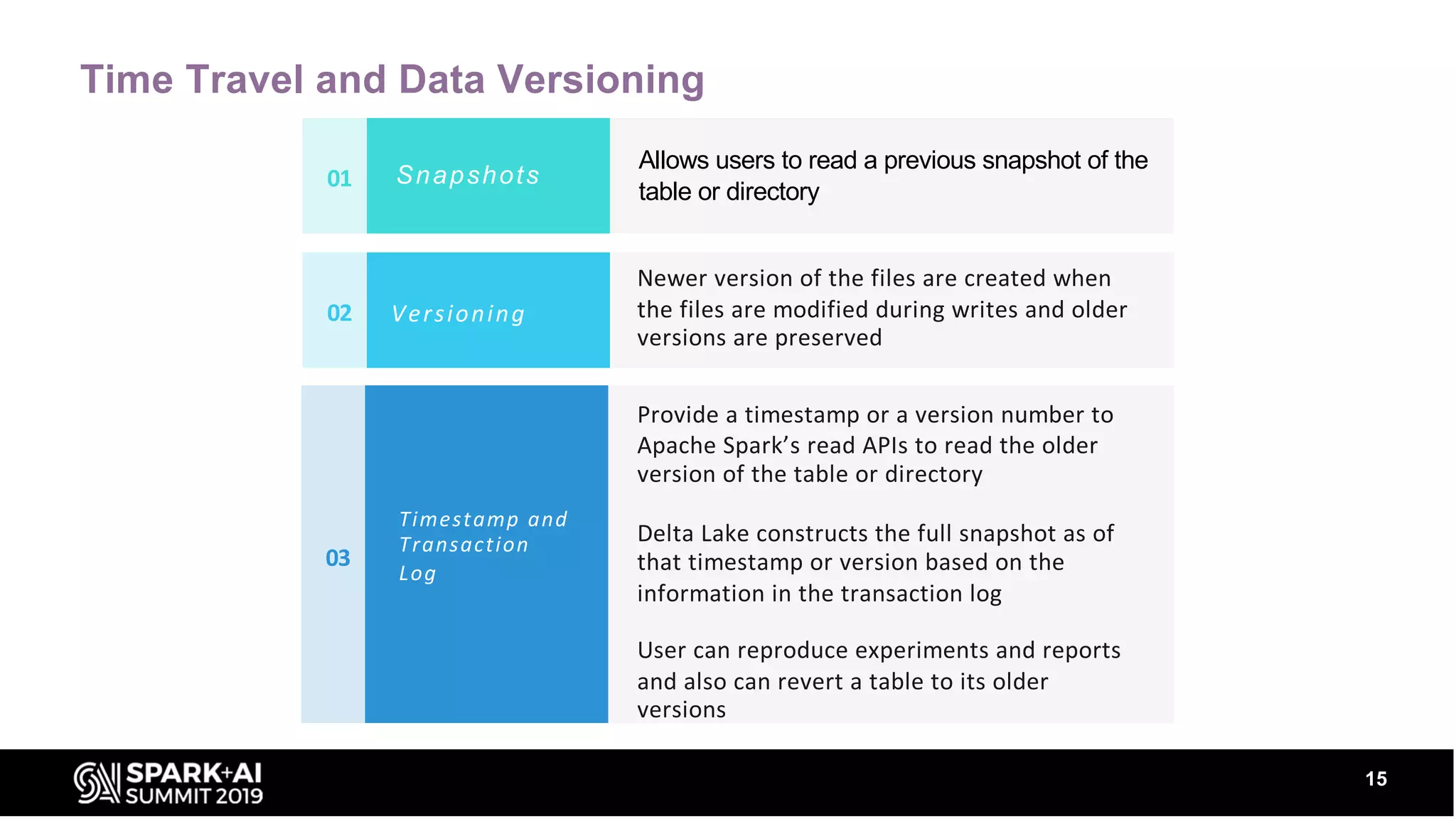

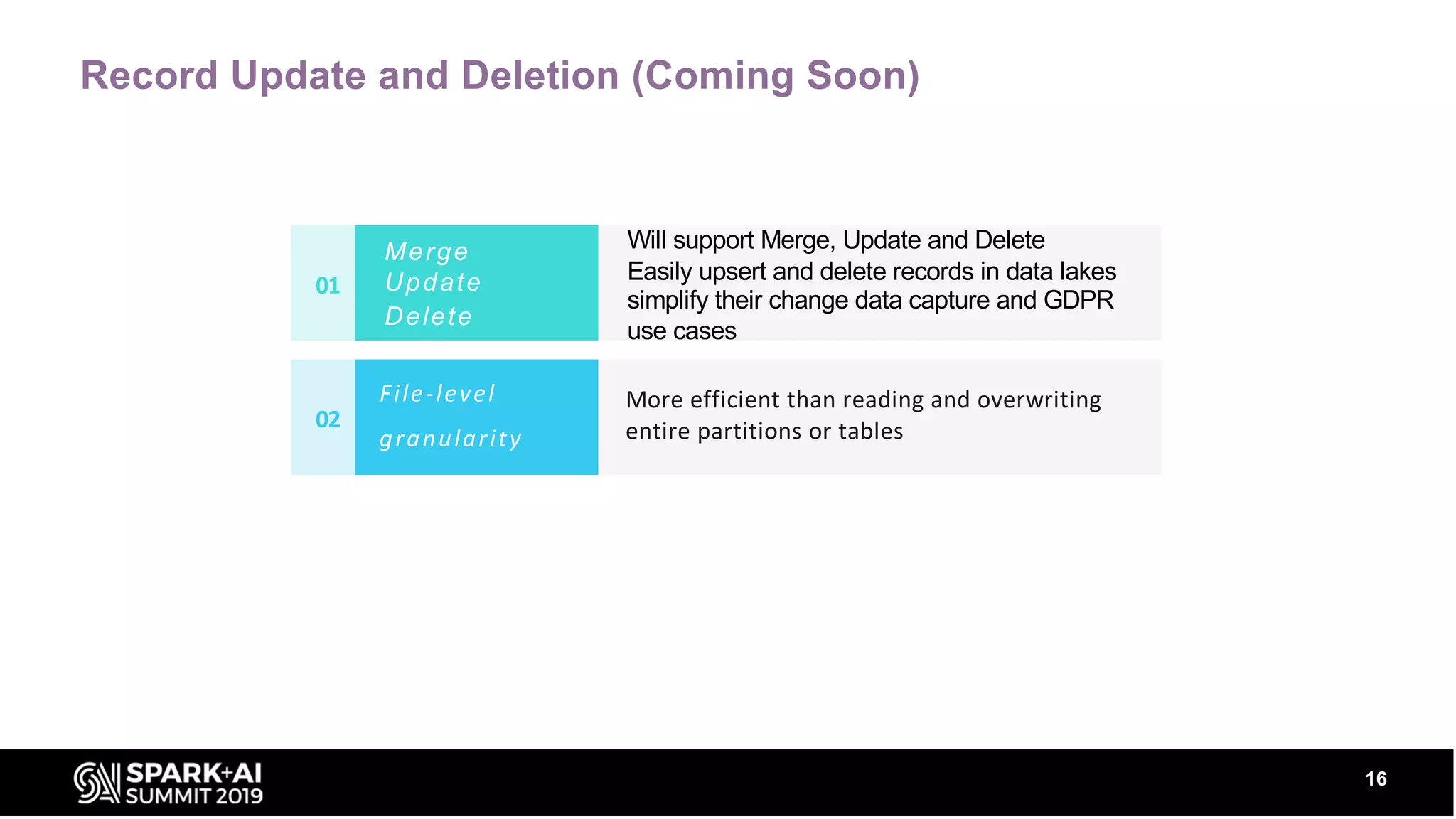

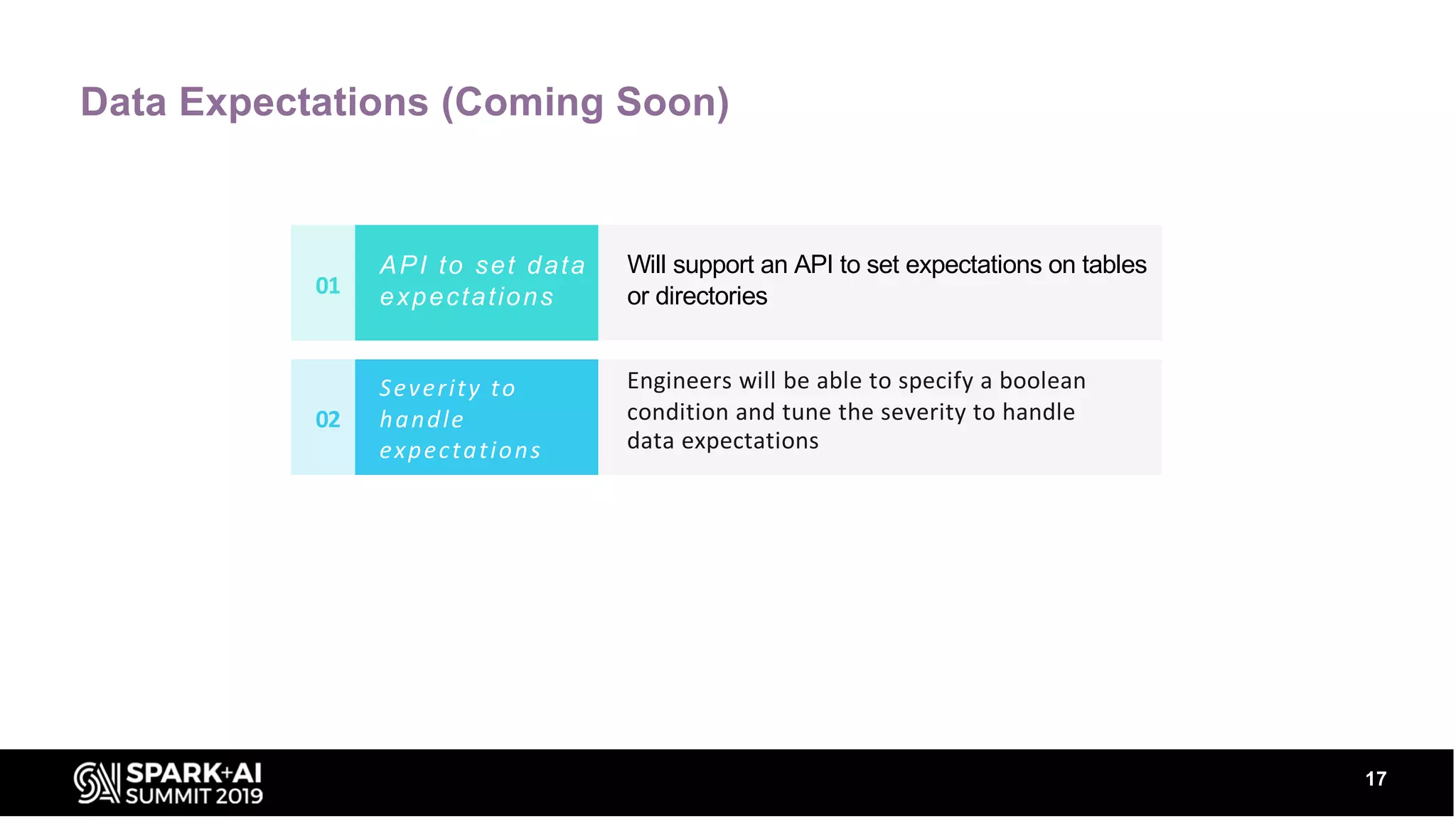

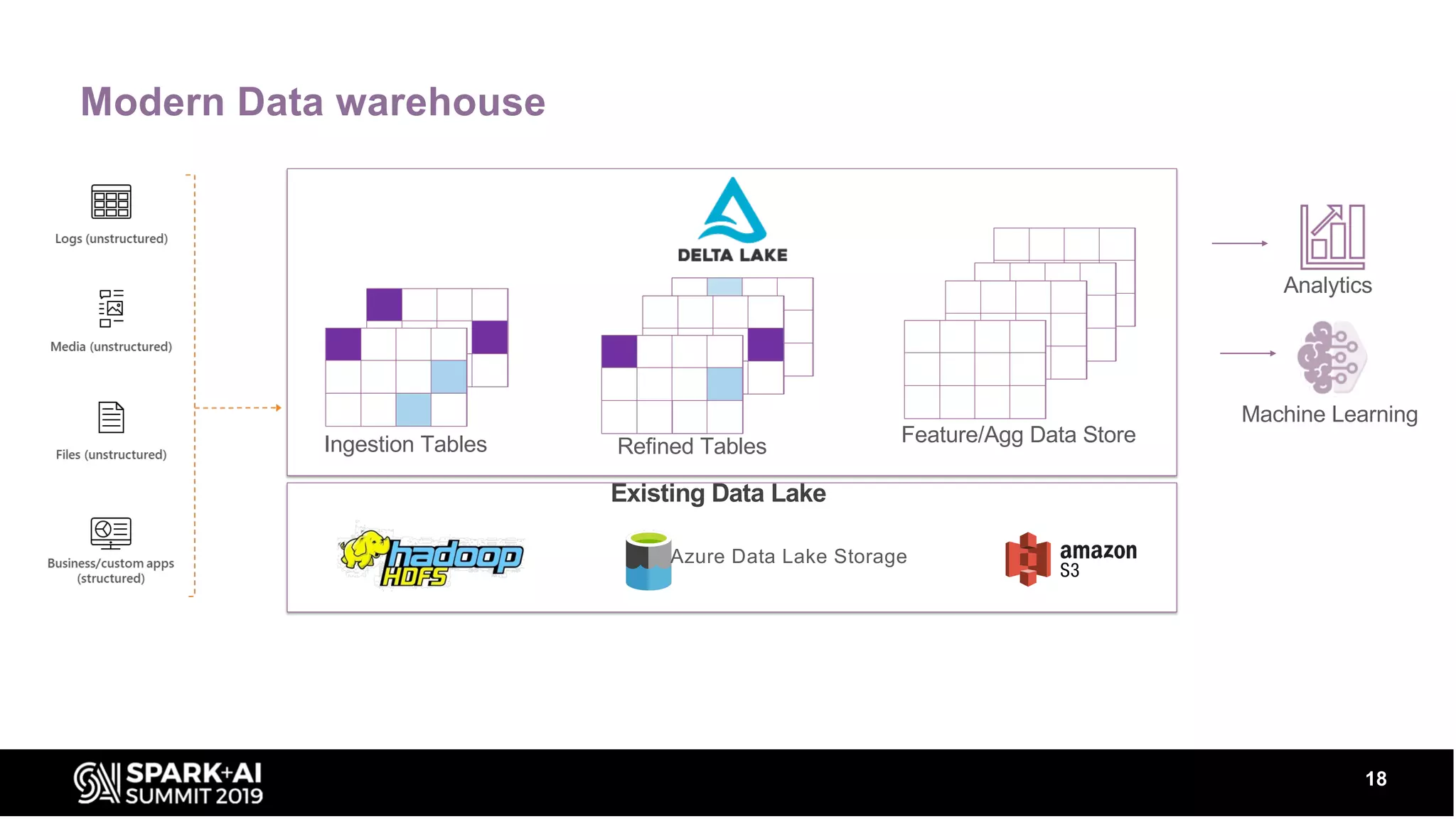

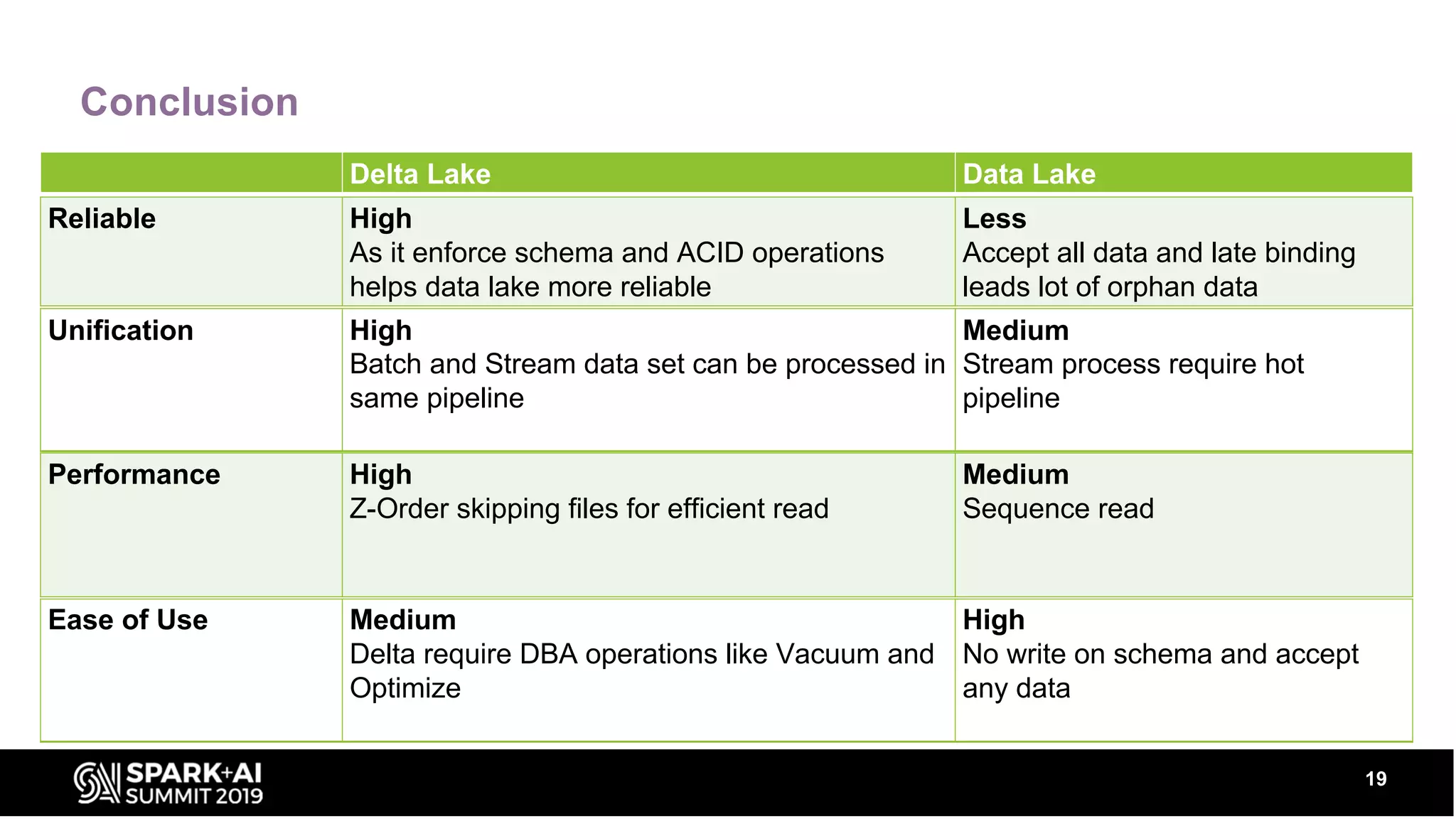

The document discusses Delta Lake, an open-source storage layer originally developed by Databricks that adds ACID transactions, schema enforcement, and time travel (reading earlier versions of a table) to data lakes. It highlights benefits such as improved metadata handling, support for both batch and streaming data in the same tables, and efficient data processing. The agenda includes insights from data professionals and addresses common challenges with traditional data lakes.
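To make the three named features concrete, here is a minimal in-memory sketch in plain Python. It is not Delta Lake's API or implementation. The class `ToyVersionedTable` and its methods are hypothetical names invented for illustration. The sketch assumes only the general idea behind the features: each write is validated against a declared schema, committed all-or-nothing as a new version in an append-only log, and older versions remain readable.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVersionedTable:
    """Toy in-memory table (hypothetical; NOT Delta Lake's API)."""
    schema: dict                               # column name -> Python type
    _log: list = field(default_factory=list)   # one entry per committed batch

    def append(self, rows):
        """Validate the whole batch, then commit it as one new version."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"schema mismatch: columns {sorted(row)}")
            for name, expected in self.schema.items():
                if not isinstance(row[name], expected):
                    raise ValueError(
                        f"schema mismatch: {name!r} is not {expected.__name__}"
                    )
        # Reached only if every row passed, so a bad batch leaves no partial data.
        self._log.append([dict(r) for r in rows])
        return len(self._log) - 1              # version number of this commit

    def read(self, version=None):
        """Return all rows as of `version` (the latest version if omitted)."""
        if version is None:
            version = len(self._log) - 1
        if not -1 <= version < len(self._log):
            raise IndexError(f"unknown version {version}")
        return [row for batch in self._log[: version + 1] for row in batch]


table = ToyVersionedTable(schema={"id": int, "name": str})
v0 = table.append([{"id": 1, "name": "a"}])
v1 = table.append([{"id": 2, "name": "b"}])

try:
    # The second row has the wrong type for "id", so the whole batch is rejected.
    table.append([{"id": 3, "name": "c"}, {"id": "four", "name": "d"}])
    rejected = False
except ValueError:
    rejected = True

latest = table.read()        # rows from both committed versions
as_of_v0 = table.read(v0)    # "time travel" back to the first commit
```

In this sketch the rejected batch leaves the table at version 1, and `table.read(v0)` still returns only the first row. A real Delta Lake table persists its log and data files to storage and handles concurrent writers, which this toy model does not attempt.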