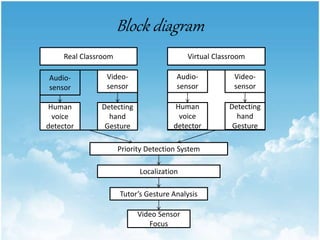

This document discusses a system for multi-speaker detection and tracking in an e-learning classroom that uses audio and video sensors together with gesture analysis. The system places microphones and cameras in both the real classroom and the virtual classrooms to detect when students raise their hands or speak. It determines which student interrupted first and points the PTZ (pan-tilt-zoom) camera at that student. Audio signals are used to detect voices, and video signals are used to detect hand gestures. The goal is to make virtual classrooms behave more like real classrooms by letting multiple students raise questions at the same time, with gesture analysis and camera focus used to handle them in turn.
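The arbitration step described above (detect a voice or a raised hand, serve whoever interrupted first, then steer the PTZ camera) can be sketched as follows. This is a minimal illustration under stated assumptions, not the presented system's implementation. A simple short-time-energy threshold stands in for voice detection, hand-raise events are assumed to arrive from a separate video module, and the names `is_speech`, `FloorQueue`, `camera_target`, the `threshold` value and the `PTZ_PRESETS` seat table are all hypothetical.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


def is_speech(samples: list[float], threshold: float = 0.01) -> bool:
    """Crude energy-based voice activity check on one audio frame.

    Assumption: a fixed mean-square energy threshold; a real deployment
    would need calibration or a proper VAD.
    """
    if not samples:
        return False
    energy = sum(s * s for s in samples) / len(samples)
    return energy > threshold


@dataclass(order=True)
class Request:
    timestamp: float                     # time of first detection (seconds)
    student_id: str = field(compare=False)
    source: str = field(compare=False)   # "audio" (voice) or "video" (hand raise)


class FloorQueue:
    """Hypothetical queue that grants the floor in order of first detection."""

    def __init__(self) -> None:
        self._heap: list[Request] = []
        self._pending: set[str] = set()

    def report(self, student_id: str, source: str, timestamp: float) -> None:
        # Only a student's first cue counts; a later cue (e.g. speaking
        # after raising a hand) does not create a second queue entry.
        if student_id in self._pending:
            return
        self._pending.add(student_id)
        heapq.heappush(self._heap, Request(timestamp, student_id, source))

    def next_speaker(self) -> Request | None:
        # Pop the earliest interruption, or None if nobody is waiting.
        if not self._heap:
            return None
        req = heapq.heappop(self._heap)
        self._pending.discard(req.student_id)
        return req


# Hypothetical PTZ presets per student seat: (pan deg, tilt deg, zoom factor).
PTZ_PRESETS = {
    "s1": (-30.0, -5.0, 2.0),
    "s2": (0.0, -5.0, 2.0),
    "s3": (30.0, -5.0, 2.0),
}


def camera_target(req: Request) -> tuple[float, float, float]:
    """Look up the preset the PTZ camera should move to for this student."""
    return PTZ_PRESETS[req.student_id]


if __name__ == "__main__":
    q = FloorQueue()
    # s3 raises a hand at t=1.2 s, s1 speaks at t=1.5 s, s3 then speaks at t=1.6 s.
    q.report("s3", "video", 1.2)
    if is_speech([0.2, -0.3, 0.25, -0.2]):
        q.report("s1", "audio", 1.5)
    q.report("s3", "audio", 1.6)
    first = q.next_speaker()
    print(first.student_id, first.source, camera_target(first))
```

Running the script prints `s3 video (30.0, -5.0, 2.0)`: the hand raise at 1.2 s wins over the voice at 1.5 s, and s3's later speech is not queued twice. Keying the heap on detection time alone keeps the "first interrupter wins" rule explicit; any other policy (for example preferring voice over gesture) would change only the ordering key.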
