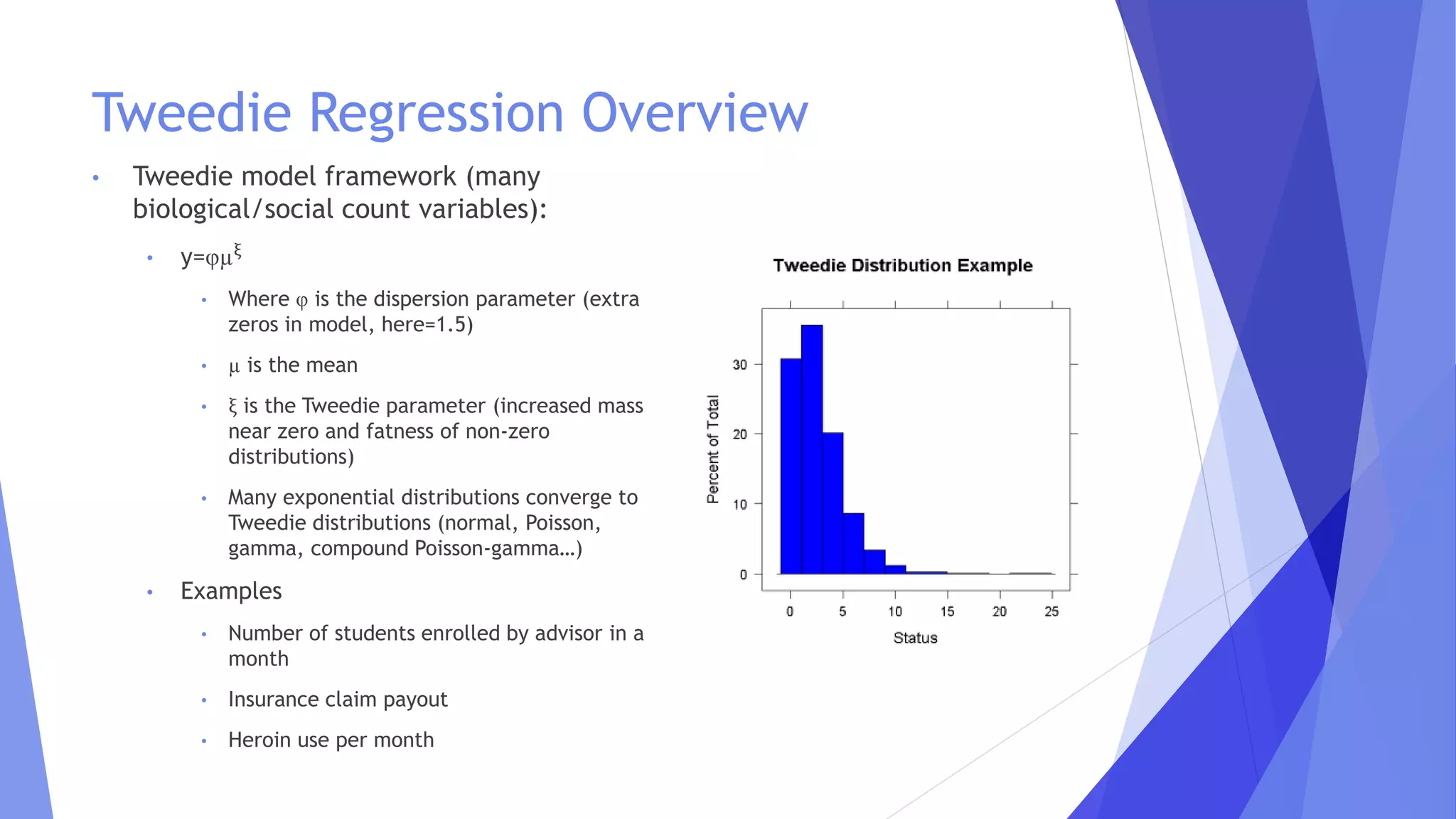

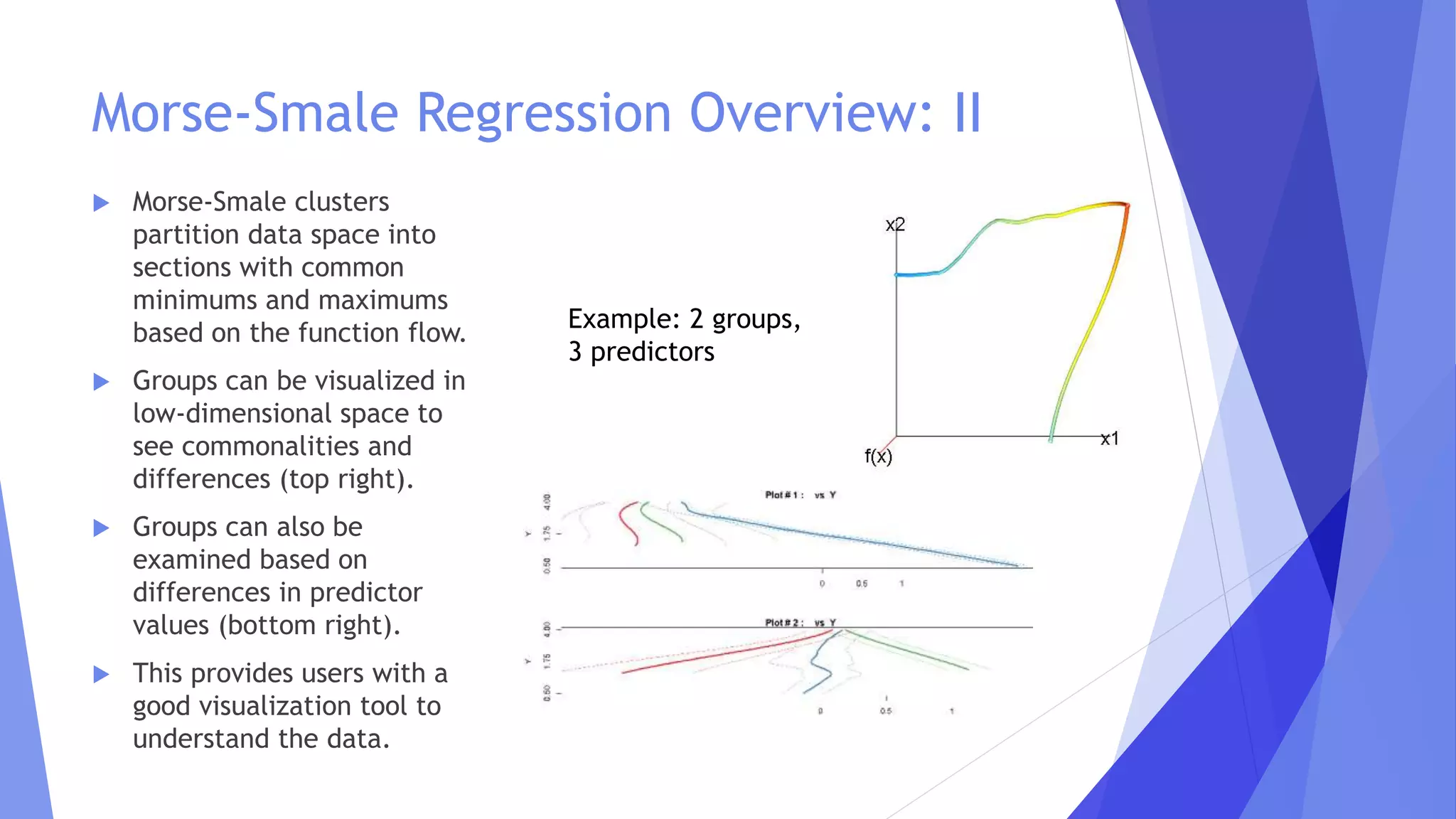

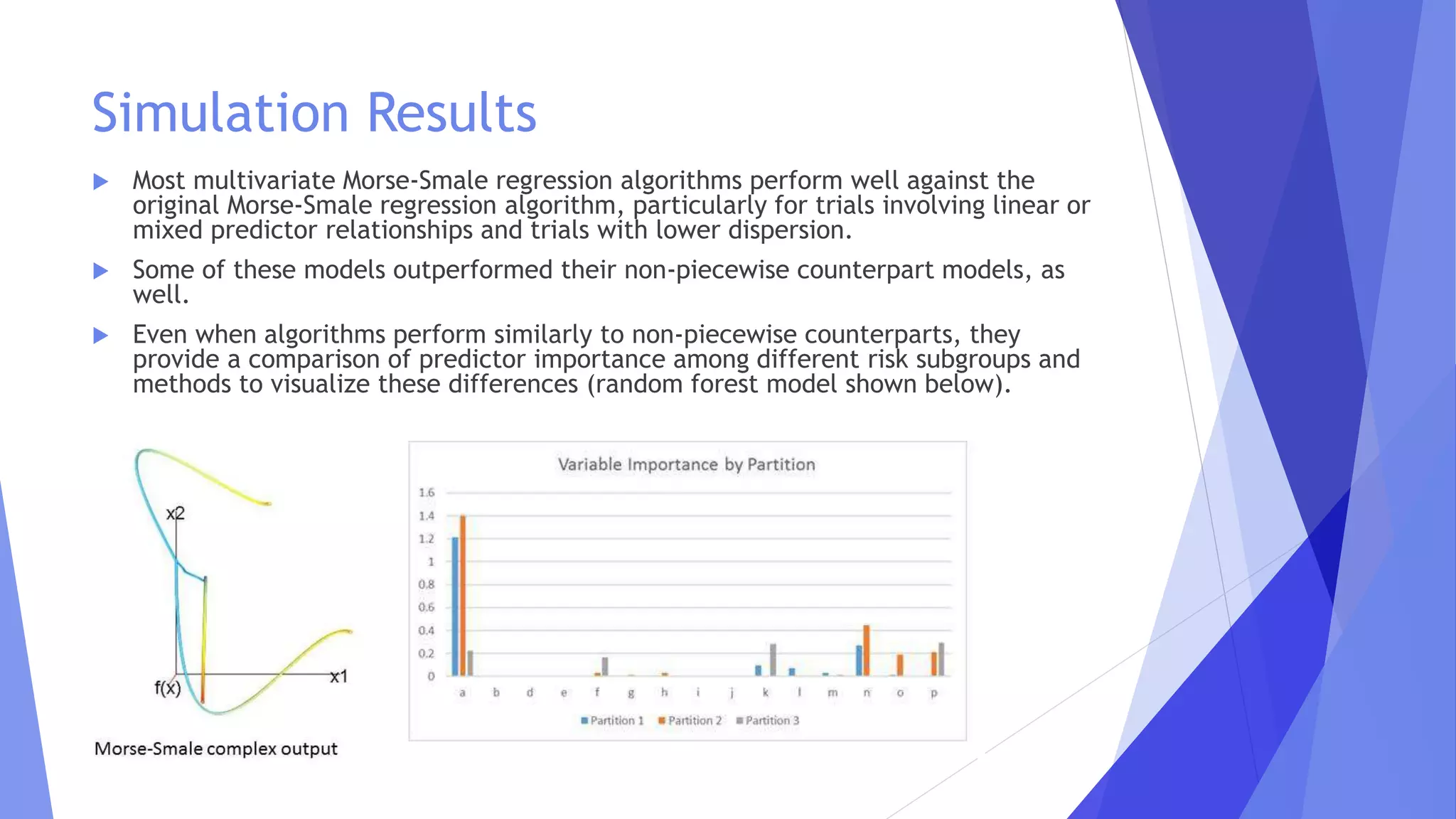

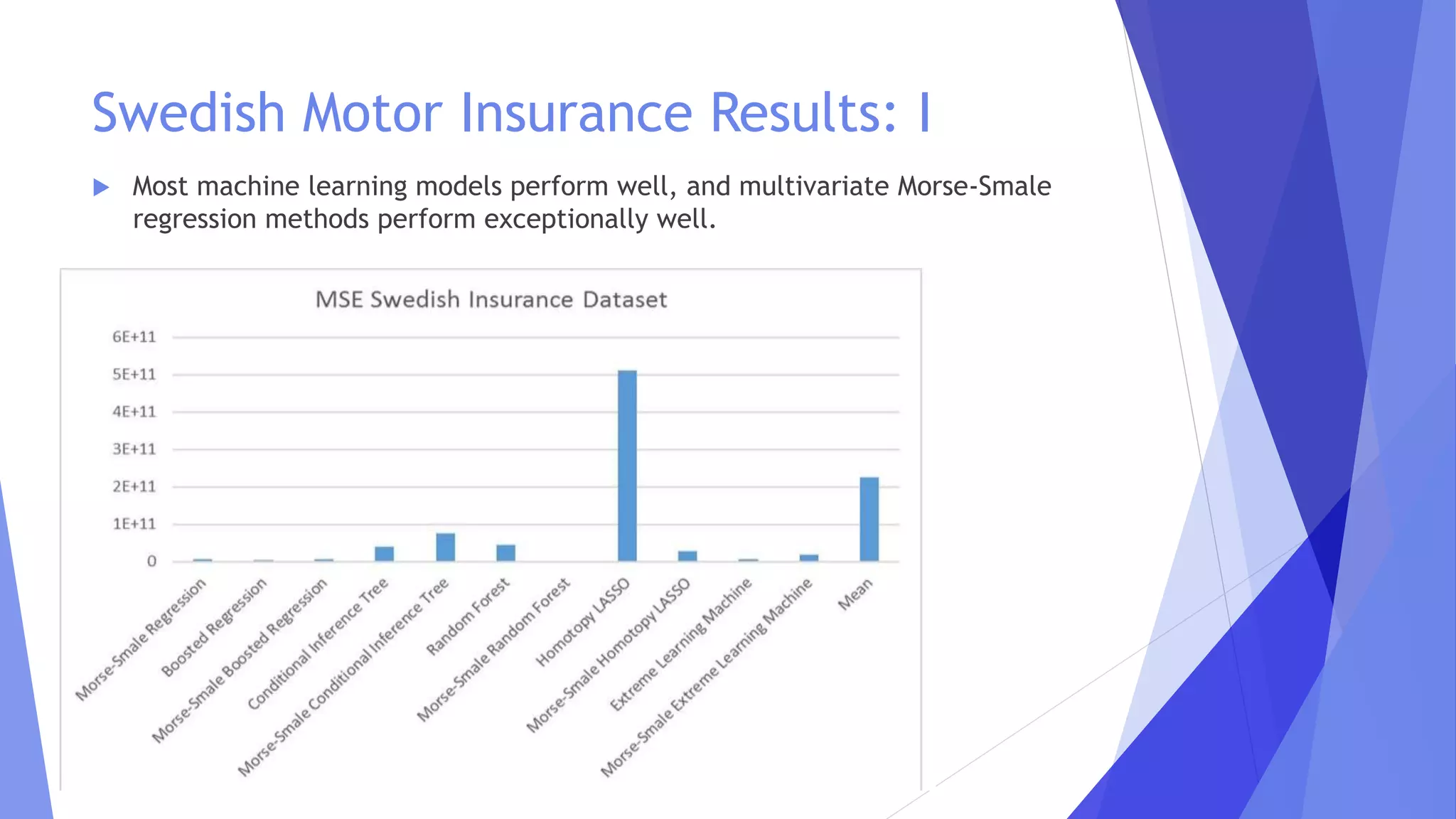

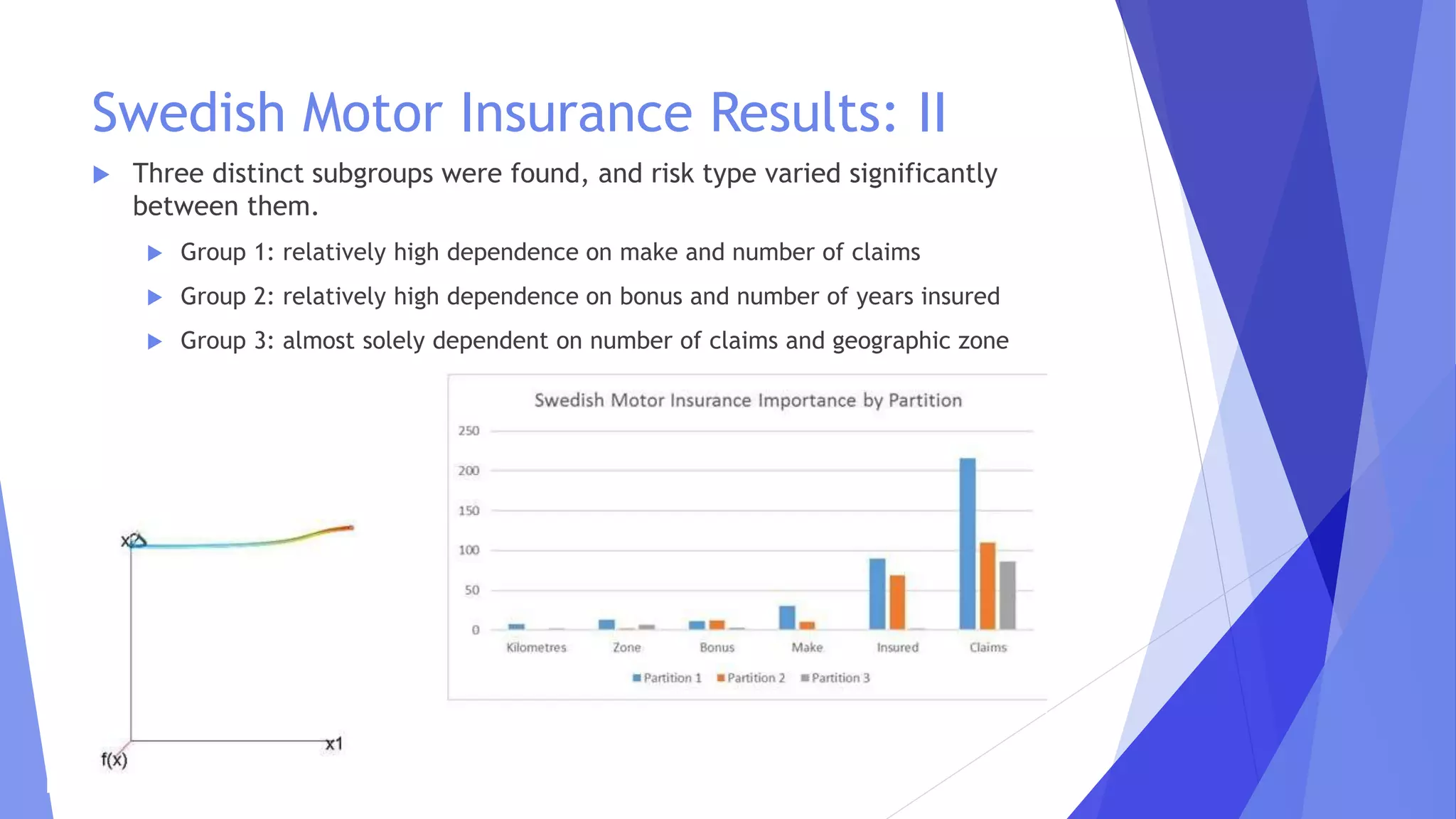

This document discusses extensions of Morse-Smale regression for risk modeling, emphasizing the method's ability to capture complex risk types and variation across subgroups. It explores machine learning methods and Tweedie regression, highlighting their advantages for modeling insurance claims and understanding risk structure. Results from simulations and an application to Swedish motor insurance data indicate that multivariate Morse-Smale regression models perform well and provide useful insight into risk differentiation.
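
To make the appeal of Tweedie regression for claims data concrete, here is a minimal sketch of the Tweedie unit deviance for a power parameter between 1 and 2 (the compound Poisson-gamma range), which stays finite for policies with zero claims. It is not taken from the document: the function names and the choice `p = 1.5` are illustrative assumptions, and the document's own models may be fitted differently.

```python
def tweedie_unit_deviance(y: float, mu: float, p: float = 1.5) -> float:
    """Tweedie unit deviance for 1 < p < 2 (p = 1.5 is an assumed default)."""
    if not 1.0 < p < 2.0:
        raise ValueError("this sketch only covers 1 < p < 2")
    if y < 0 or mu <= 0:
        raise ValueError("need y >= 0 and mu > 0")
    # The three terms of the standard deviance formula for 1 < p < 2.
    term_y = y ** (2 - p) / ((1 - p) * (2 - p))
    term_cross = y * mu ** (1 - p) / (1 - p)
    term_mu = mu ** (2 - p) / (2 - p)
    return 2.0 * (term_y - term_cross + term_mu)


def mean_tweedie_deviance(observed, predicted, p: float = 1.5) -> float:
    """Average unit deviance over paired observed and predicted claim costs."""
    pairs = list(zip(observed, predicted))
    return sum(tweedie_unit_deviance(y, mu, p) for y, mu in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical claim costs: most policies have no claim, a few are large.
    observed = [0.0, 0.0, 0.0, 1200.0, 0.0, 350.0]
    predicted = [200.0, 150.0, 300.0, 900.0, 100.0, 400.0]
    print(mean_tweedie_deviance(observed, predicted))
```

The deviance is zero when the prediction equals the observation and grows as they diverge, so a lower mean deviance indicates a better fit. Because exact zeros are handled directly, claim frequency and severity do not have to be modeled separately.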