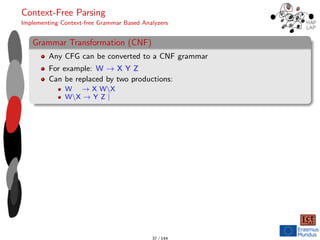

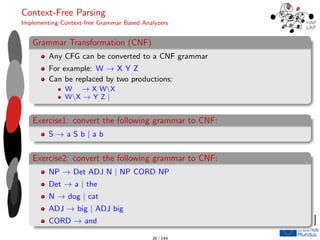

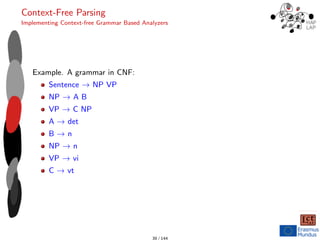

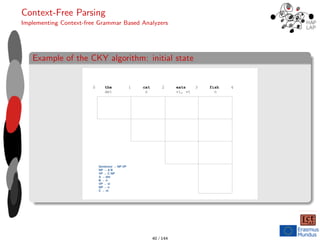

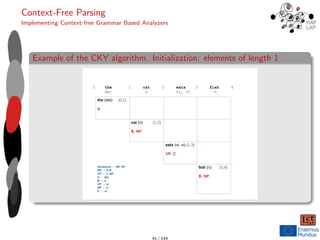

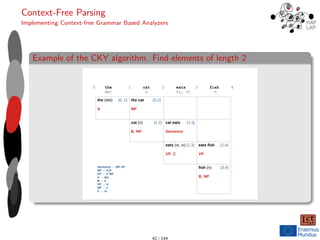

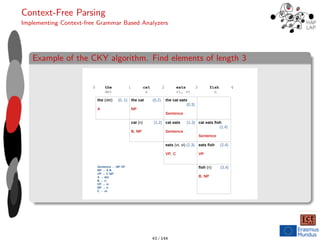

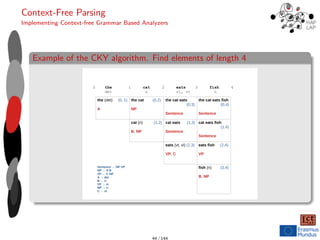

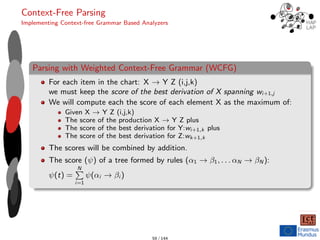

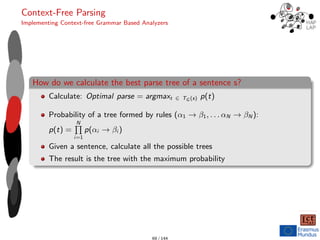

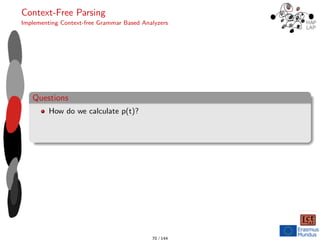

This document introduces context-free grammars (CFGs) and their application in natural language processing, including parsing strategies such as top-down and shift-reduce parsing. Key concepts include building syntactic structures with CFGs, probabilistic CFGs (PCFGs), and chart-based algorithms such as CKY for efficient parsing. It also includes exercises and examples that illustrate grammar rules and parsing techniques.

![Context-Free Parsing

Implementing Context-free Grammar Based Analyzers

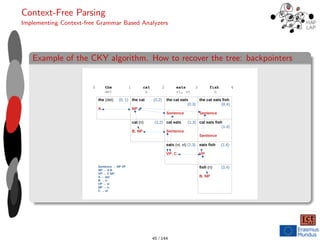

The CKY algorithm (taken from Eisenstein 2019)

228 CHAPTER 10. CONTEXT-FREE PARSING

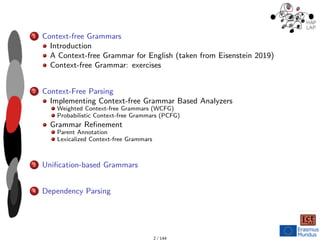

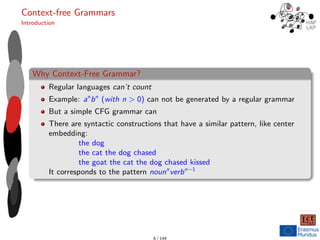

Algorithm 13 The CKY algorithm for parsing a sequence w ∈ Σ* in a context-free grammar G = (N, Σ, R, S), with non-terminals N, production rules R, and start symbol S. The grammar is assumed to be in Chomsky normal form (section 9.2.1). The function PICKFROM(b[i, j, X]) selects an element of the set b[i, j, X] arbitrarily. All values of t and b are initialized to ∅.

1: procedure CKY(w, G = (N, Σ, R, S))

2: for m ∈ {1, …, M} do

3: t[m − 1, m] ← {X : (X → w_m) ∈ R}

4: for ℓ ∈ {2, 3, …, M} do ▷ Iterate over constituent lengths

5: for m ∈ {0, 1, …, M − ℓ} do ▷ Iterate over left endpoints

6: for k ∈ {m + 1, m + 2, …, m + ℓ − 1} do ▷ Iterate over split points

7: for (X → Y Z) ∈ R do ▷ Iterate over rules

8: if Y ∈ t[m, k] ∧ Z ∈ t[k, m + ℓ] then

9: t[m, m + ℓ] ← t[m, m + ℓ] ∪ X ▷ Add non-terminal to table

10: b[m, m + ℓ, X] ← b[m, m + ℓ, X] ∪ (Y, Z, k) ▷ Add back-pointers

11: if S ∈ t[0, M] then

12: return TRACEBACK(S, 0, M, b)

13: else

14: return ∅

15: procedure TRACEBACK(X, i, j, b)

16: if j = i + 1 then

17: return X

18: else

19: (Y, Z, k) ← PICKFROM(b[i, j, X])

20: return X → (TRACEBACK(Y, i, k, b), TRACEBACK(Z, k, j, b))

• Productions with more than two elements on the right-hand side can be binarized by creating additional non-terminals, as described in section 9.2.1. For example, the production VP → V NP NP (for ditransitive verbs) can be converted to VP → VPditrans/NP NP, by adding the non-terminal VPditrans/NP and the production VPditrans/NP → V NP.

49 / 144](https://image.slidesharecdn.com/morphology-and-syntax-cfg-241119100957-31c5288d/85/Morphology-and-Syntax-CFG-for-another-random-place-49-320.jpg)
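The CKY listing above can be sketched in Python as follows. This is a minimal illustration, not the textbook's code: the function names, the tuple-based tree format, and the toy grammar are assumptions made for the example. The toy grammar includes the binarized ditransitive rule from the bullet above, and PICKFROM is implemented by simply taking the first stored back-pointer.

```python
from collections import defaultdict

def cky(words, lexical, binary, start="S"):
    """Return one parse tree for `words`, or None if the grammar rejects them.

    lexical: dict mapping a word to the set of non-terminals X with X -> word.
    binary:  list of (X, Y, Z) triples, one per rule X -> Y Z (Chomsky normal form).
    """
    M = len(words)
    t = defaultdict(set)   # t[i, j]: non-terminals that derive words[i:j]
    b = defaultdict(list)  # b[i, j, X]: back-pointers (Y, Z, k)
    for m in range(1, M + 1):
        t[m - 1, m] = set(lexical.get(words[m - 1], ()))
    for length in range(2, M + 1):               # constituent lengths
        for m in range(M - length + 1):          # left endpoints
            for k in range(m + 1, m + length):   # split points
                for X, Y, Z in binary:           # rules
                    if Y in t[m, k] and Z in t[k, m + length]:
                        t[m, m + length].add(X)
                        b[m, m + length, X].append((Y, Z, k))
    if start not in t[0, M]:
        return None
    return traceback(start, 0, M, b, words)

def traceback(X, i, j, b, words):
    """Rebuild a tree as nested tuples from the back-pointer table."""
    if j == i + 1:
        return (X, words[i])
    Y, Z, k = b[i, j, X][0]  # PICKFROM: take the first stored back-pointer
    return (X, traceback(Y, i, k, b, words), traceback(Z, k, j, b, words))

# Hypothetical toy grammar, including the binarized ditransitive rule.
LEXICAL = {"she": {"NP"}, "him": {"NP"}, "fish": {"NP"}, "gives": {"V"}}
BINARY = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VPditrans/NP", "NP"),
    ("VPditrans/NP", "V", "NP"),
]

print(cky("she gives him fish".split(), LEXICAL, BINARY))
```

The three nested loops over lengths, left endpoints, and split points give the cubic dependence on sentence length; the rule loop adds a factor proportional to the number of binary productions.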

![Context-Free Parsing

Implementing Context-free Grammar Based Analyzers

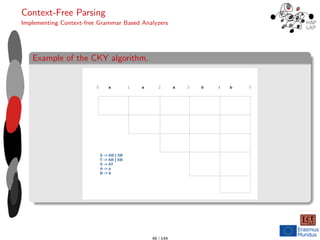

The CKY algorithm with a WCFG (Eisenstein 2019)

234 CHAPTER 10. CONTEXT-FREE PARSING

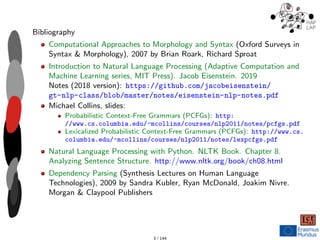

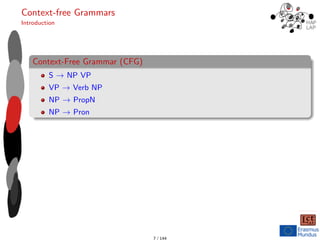

Algorithm 14 CKY algorithm for parsing a string w ∈ Σ* in a weighted context-free grammar (N, Σ, R, S), where N is the set of non-terminals and R is the set of weighted productions. The grammar is assumed to be in Chomsky normal form (section 9.2.1). The function TRACEBACK is defined in Algorithm 13.

procedure WCKY(w, G = (N, Σ, R, S))

for all i, j, X do ▷ Initialization

t[i, j, X] ← 0

b[i, j, X] ← ∅

for m ∈ {1, 2, …, M} do

for all X ∈ N do

t[m − 1, m, X] ← ψ(X → w_m, (m − 1, m, m))

for ℓ ∈ {2, 3, …, M} do

for m ∈ {0, 1, …, M − ℓ} do

for k ∈ {m + 1, m + 2, …, m + ℓ − 1} do

t[m, m + ℓ, X] ← max_{k,Y,Z} ψ(X → Y Z, (m, m + ℓ, k)) + t[m, k, Y] + t[k, m + ℓ, Z]

b[m, m + ℓ, X] ← argmax_{k,Y,Z} ψ(X → Y Z, (m, m + ℓ, k)) + t[m, k, Y] + t[k, m + ℓ, Z]

return TRACEBACK(S, 0, M, b)

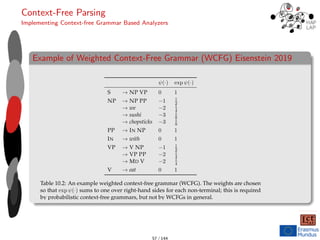

10.3.1 Parsing with weighted context-free grammars

The optimization problem in Equation 10.4 can be solved by modifying the CKY algorithm. In the deterministic CKY algorithm, each cell t[i, j] stored a set of non-terminals

60 / 144](https://image.slidesharecdn.com/morphology-and-syntax-cfg-241119100957-31c5288d/85/Morphology-and-Syntax-CFG-for-another-random-place-60-320.jpg)
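A weighted variant of CKY along the lines of Algorithm 14 might look like the sketch below. Several things here are assumptions of the example rather than part of the source: scores are log-probabilities that depend only on the rule (the listing lets ψ also depend on the anchor), unreachable cells are treated as −∞ instead of being stored explicitly, and the toy grammar and the names `weighted_cky`, `LEXICAL`, and `BINARY` are hypothetical.

```python
import math

def weighted_cky(words, lexical, binary, start="S"):
    """Return (best score, best tree) for `words`, or None if no parse exists.

    lexical: dict (X, word) -> score of X -> word.
    binary:  dict (X, Y, Z) -> score of X -> Y Z.
    Scores are added along a derivation, e.g. log-probabilities.
    """
    M = len(words)
    t = {}  # t[i, j, X]: best score of X over words[i:j]; missing means -inf
    b = {}  # b[i, j, X]: back-pointer (Y, Z, k) of the best analysis
    for m in range(1, M + 1):
        for (X, word), score in lexical.items():
            if word == words[m - 1] and score > t.get((m - 1, m, X), -math.inf):
                t[m - 1, m, X] = score
    for length in range(2, M + 1):
        for m in range(M - length + 1):
            j = m + length
            for k in range(m + 1, j):
                for (X, Y, Z), score in binary.items():
                    if (m, k, Y) in t and (k, j, Z) in t:
                        total = score + t[m, k, Y] + t[k, j, Z]
                        if total > t.get((m, j, X), -math.inf):
                            t[m, j, X] = total
                            b[m, j, X] = (Y, Z, k)
    if (0, M, start) not in t:
        return None
    return t[0, M, start], traceback(start, 0, M, b, words)

def traceback(X, i, j, b, words):
    """Follow the single best back-pointer stored for each cell."""
    if j == i + 1:
        return (X, words[i])
    Y, Z, k = b[i, j, X]
    return (X, traceback(Y, i, k, b, words), traceback(Z, k, j, b, words))

# Hypothetical toy PCFG, stored as log-probabilities.
LEXICAL = {(x, w): math.log(p) for x, w, p in [
    ("NP", "she", 0.3), ("NP", "fish", 0.3), ("NP", "forks", 0.2),
    ("V", "eats", 1.0), ("P", "with", 1.0),
]}
BINARY = {(x, y, z): math.log(p) for x, y, z, p in [
    ("S", "NP", "VP", 1.0), ("VP", "V", "NP", 0.6), ("VP", "VP", "PP", 0.4),
    ("NP", "NP", "PP", 0.2), ("PP", "P", "NP", 1.0),
]}

score, tree = weighted_cky("she eats fish with forks".split(), LEXICAL, BINARY)
print(math.exp(score), tree)
```

Because each cell keeps only its best-scoring back-pointer, the traceback no longer needs PICKFROM: it returns the single highest-scoring derivation.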

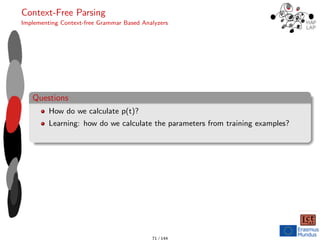

![Context-Free Parsing

Implementing Context-free Grammar Based Analyzers

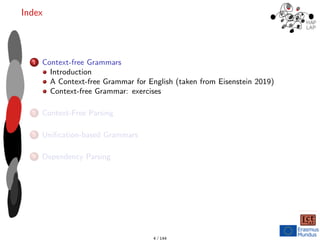

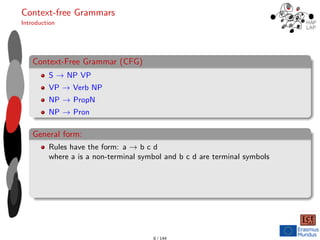

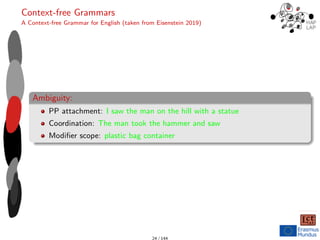

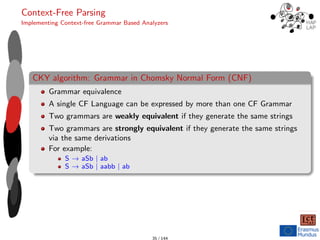

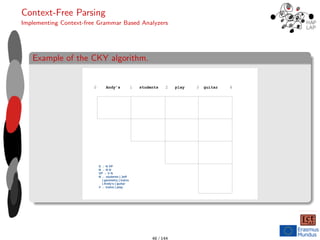

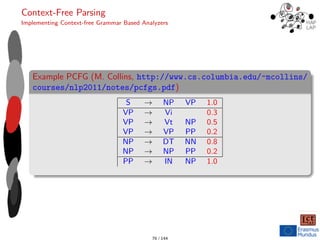

Exercise II. Given a PCFG:

S → NP VP [1.0]

NP → NP PP [0.2]

NP → NP NP [0.2]

PP → P NP [1.0]

VP → V NP [0.7]

VP → VP PP [0.3]

P → with [1.0]

V → saw [1.0]

NP → astronomers [0.1]

NP → ears [0.18]

NP → saw [0.02]

NP → stars [0.18]

NP → telescopes [0.1]

NP → astronomer’s [0.02]

Find all parse trees for the sentence “astronomers saw stars with telescopes”, compute the probability of each, and give the tree with the highest probability.

93 / 144](https://image.slidesharecdn.com/morphology-and-syntax-cfg-241119100957-31c5288d/85/Morphology-and-Syntax-CFG-for-another-random-place-93-320.jpg)
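One way to check an answer to Exercise II is to enumerate every parse with a CKY-style chart that stores all analyses per cell, each with the product of its rule probabilities. The sketch below is an illustration under stated assumptions: it uses S → NP VP as the start rule (the only reading under which the VP rules are reachable), and the function name `all_parses` and the tuple-based tree format are hypothetical.

```python
from collections import defaultdict

# Binary rules (X, Y, Z, p) and lexical rules (X, word, p) of the exercise
# grammar. The start rule S -> NP VP is an assumption of this sketch.
BINARY = [
    ("S", "NP", "VP", 1.0),
    ("NP", "NP", "PP", 0.2),
    ("NP", "NP", "NP", 0.2),
    ("PP", "P", "NP", 1.0),
    ("VP", "V", "NP", 0.7),
    ("VP", "VP", "PP", 0.3),
]
LEXICAL = [
    ("P", "with", 1.0), ("V", "saw", 1.0),
    ("NP", "astronomers", 0.1), ("NP", "ears", 0.18), ("NP", "saw", 0.02),
    ("NP", "stars", 0.18), ("NP", "telescopes", 0.1), ("NP", "astronomer's", 0.02),
]

def all_parses(words, start="S"):
    """Return every (probability, tree) analysis of `words`, best first."""
    n = len(words)
    # chart[i, j][X]: list of (probability, tree) analyses of words[i:j] as X
    chart = defaultdict(lambda: defaultdict(list))
    for i, word in enumerate(words):
        for lhs, terminal, p in LEXICAL:
            if terminal == word:
                chart[i, i + 1][lhs].append((p, (lhs, word)))
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for lhs, left, right, p in BINARY:
                    for pl, tl in chart[i, k][left]:
                        for pr, tr in chart[k, j][right]:
                            chart[i, j][lhs].append((p * pl * pr, (lhs, tl, tr)))
    return sorted(chart[0, n][start], key=lambda a: a[0], reverse=True)

for prob, tree in all_parses("astronomers saw stars with telescopes".split()):
    print(f"{prob:.6f}", tree)
```

Storing every analysis is exponential in the worst case, so this is only suitable for short exercise sentences; for longer inputs, keeping just the best analysis per cell (as in the weighted CKY algorithm) is the usual choice.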

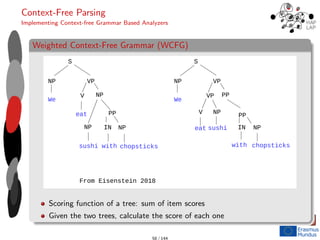

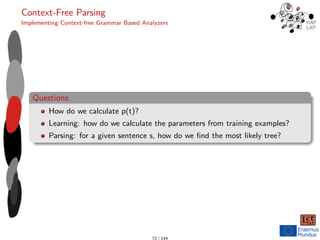

![Context-Free Parsing

Implementing Context-free Grammar Based Analyzers

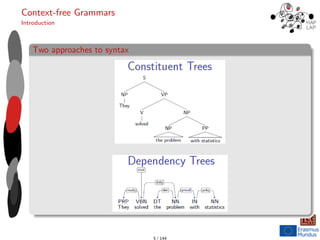

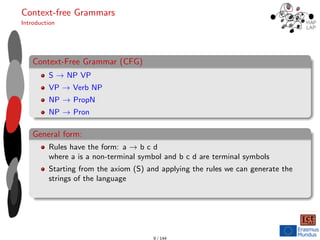

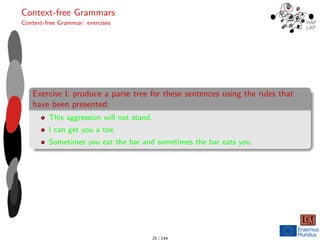

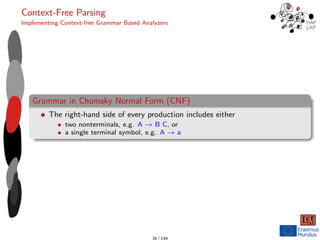

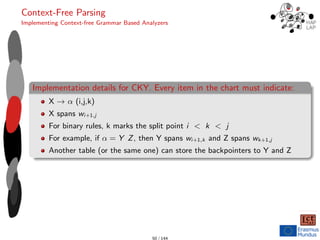

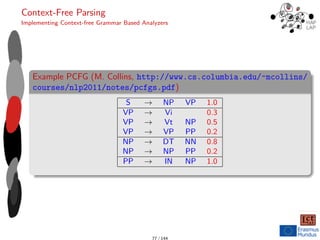

Exercise III. Given a PCFG:

S → V N [0.6]

S → NP V [0.4]

NP → D N [1.0]

D → a [0.2]

D → the [0.8]

V → support [0.6]

V → hate [0.4]

N → president [1.0]

List every sentence x with p(x) > 0, together with its probability.

94 / 144](https://image.slidesharecdn.com/morphology-and-syntax-cfg-241119100957-31c5288d/85/Morphology-and-Syntax-CFG-for-another-random-place-94-320.jpg)
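Since the grammar in Exercise III has no recursive rules, its language is finite and can be enumerated by expanding the start symbol exhaustively. The following sketch does that and sums the probability of each resulting word string; the dictionary layout and the names `expansions` and `sentence_probabilities` are assumptions of this example.

```python
from collections import defaultdict

# Exercise III grammar: non-terminal -> list of (right-hand side, probability).
RULES = {
    "S": [(("V", "N"), 0.6), (("NP", "V"), 0.4)],
    "NP": [(("D", "N"), 1.0)],
    "D": [(("a",), 0.2), (("the",), 0.8)],
    "V": [(("support",), 0.6), (("hate",), 0.4)],
    "N": [(("president",), 1.0)],
}

def expansions(symbol):
    """Yield (words, probability) for every derivation starting at `symbol`.

    Terminates only because the grammar is non-recursive.
    """
    if symbol not in RULES:  # terminal symbol: it derives itself
        yield (symbol,), 1.0
        return
    for rhs, p in RULES[symbol]:
        partial = [((), p)]
        for child in rhs:
            partial = [
                (words + child_words, q * child_q)
                for words, q in partial
                for child_words, child_q in expansions(child)
            ]
        yield from partial

def sentence_probabilities(start="S"):
    """Sum derivation probabilities per sentence (a sentence may have several)."""
    totals = defaultdict(float)
    for words, p in expansions(start):
        totals[" ".join(words)] += p
    return dict(totals)

for sentence, p in sorted(sentence_probabilities().items(), key=lambda kv: -kv[1]):
    print(f"{p:.3f}  {sentence}")
```

A useful sanity check on any hand-computed answer is that the probabilities of all sentences sum to 1, which holds here because every derivation terminates.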