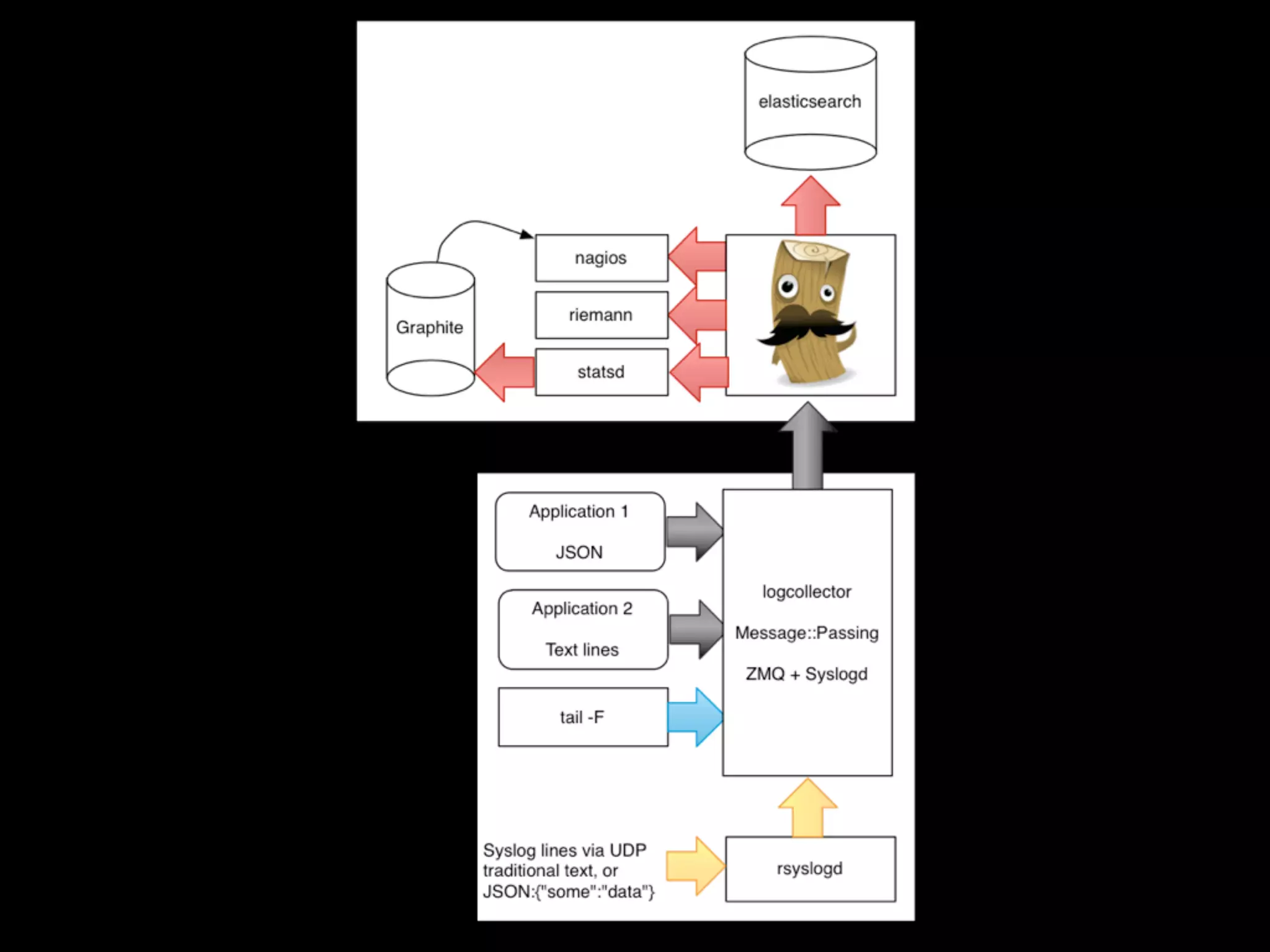

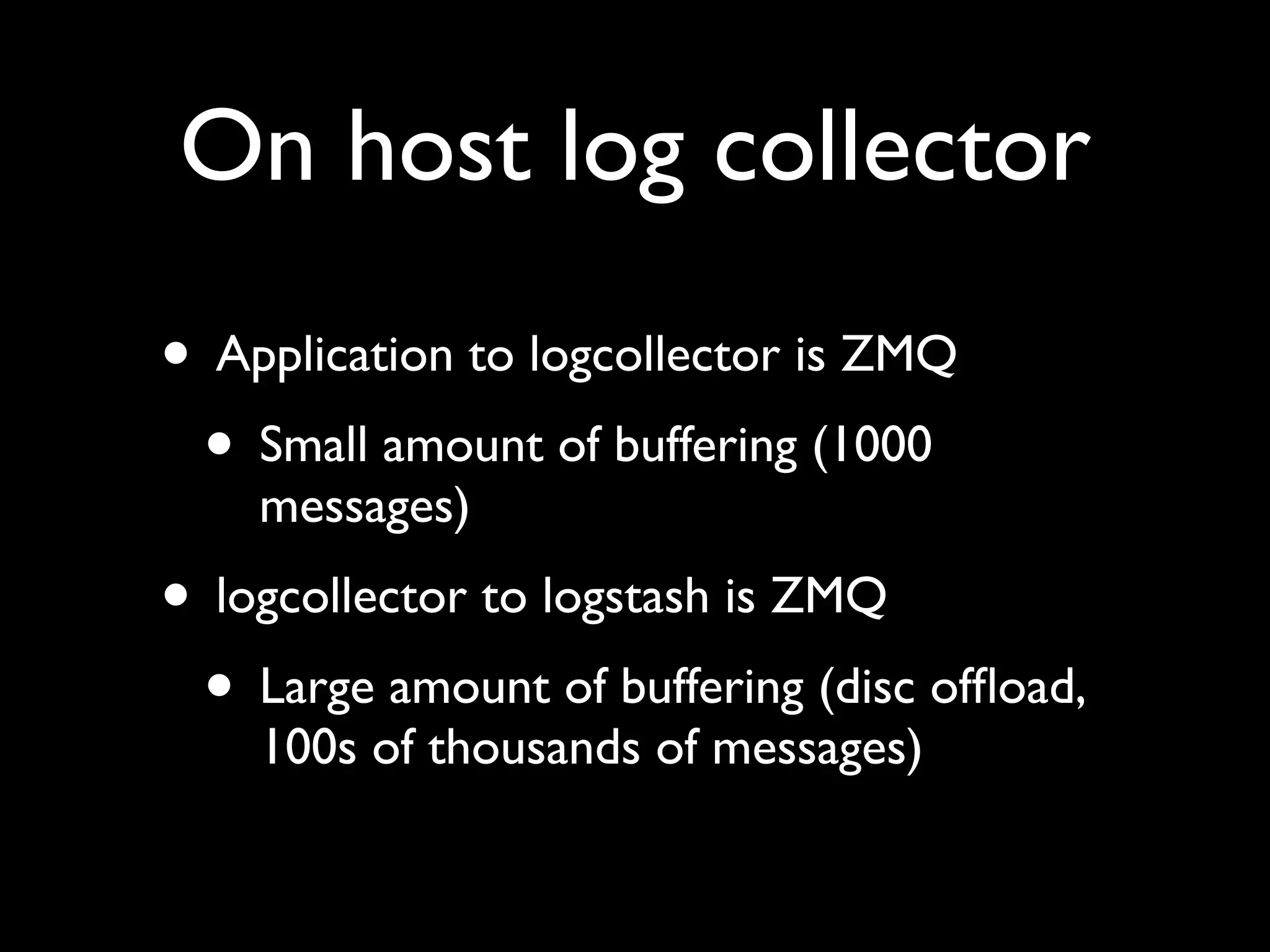

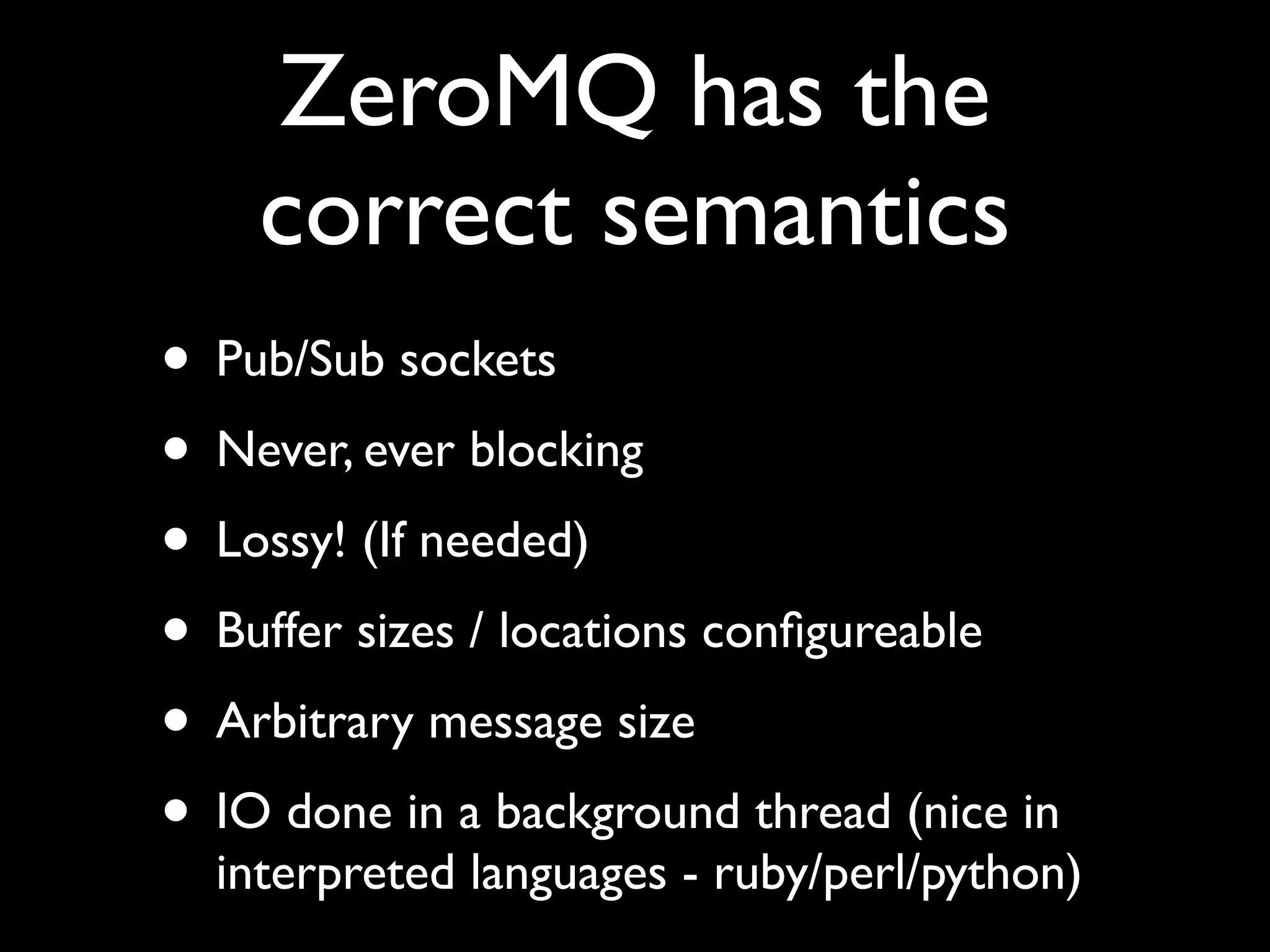

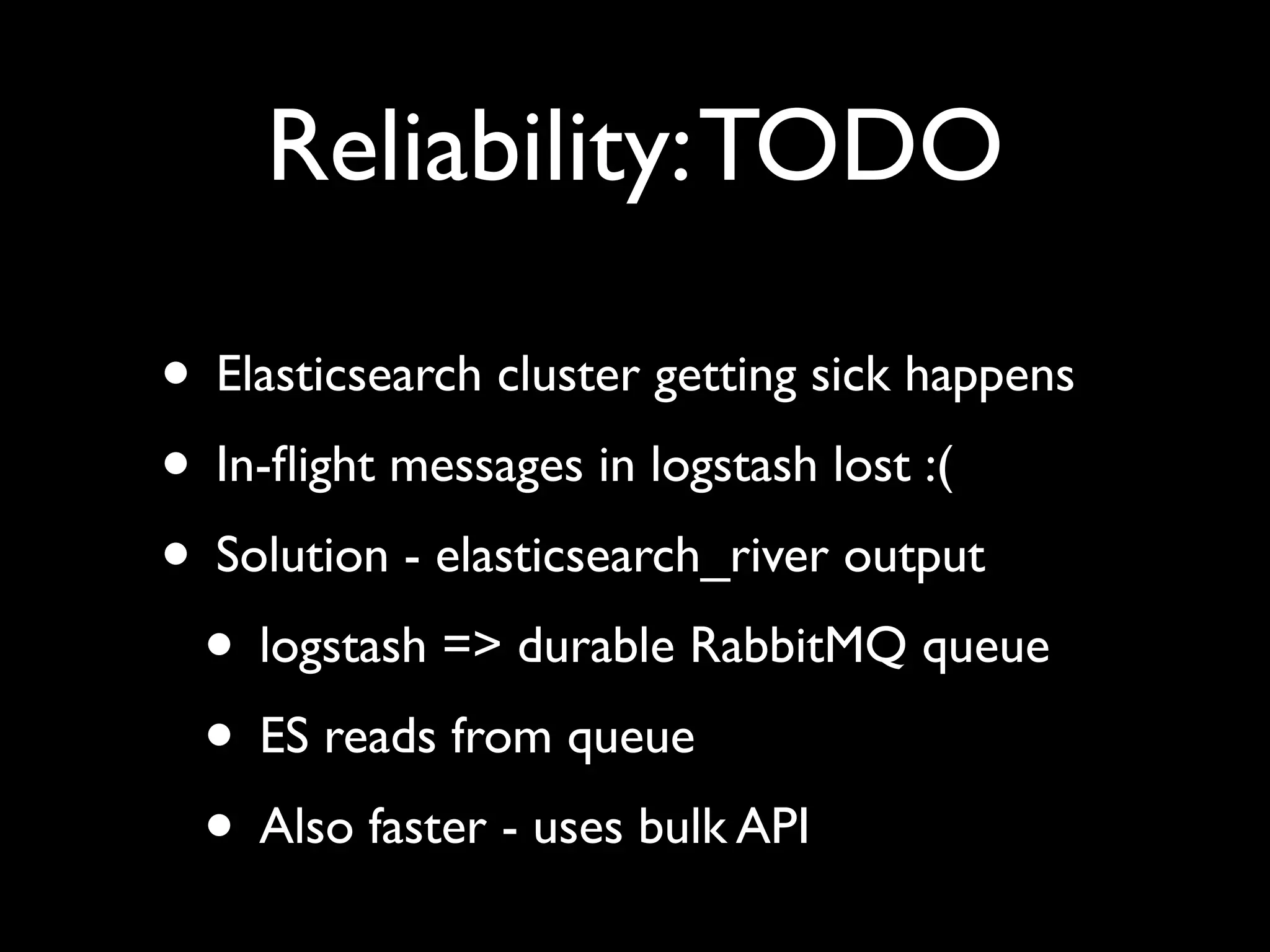

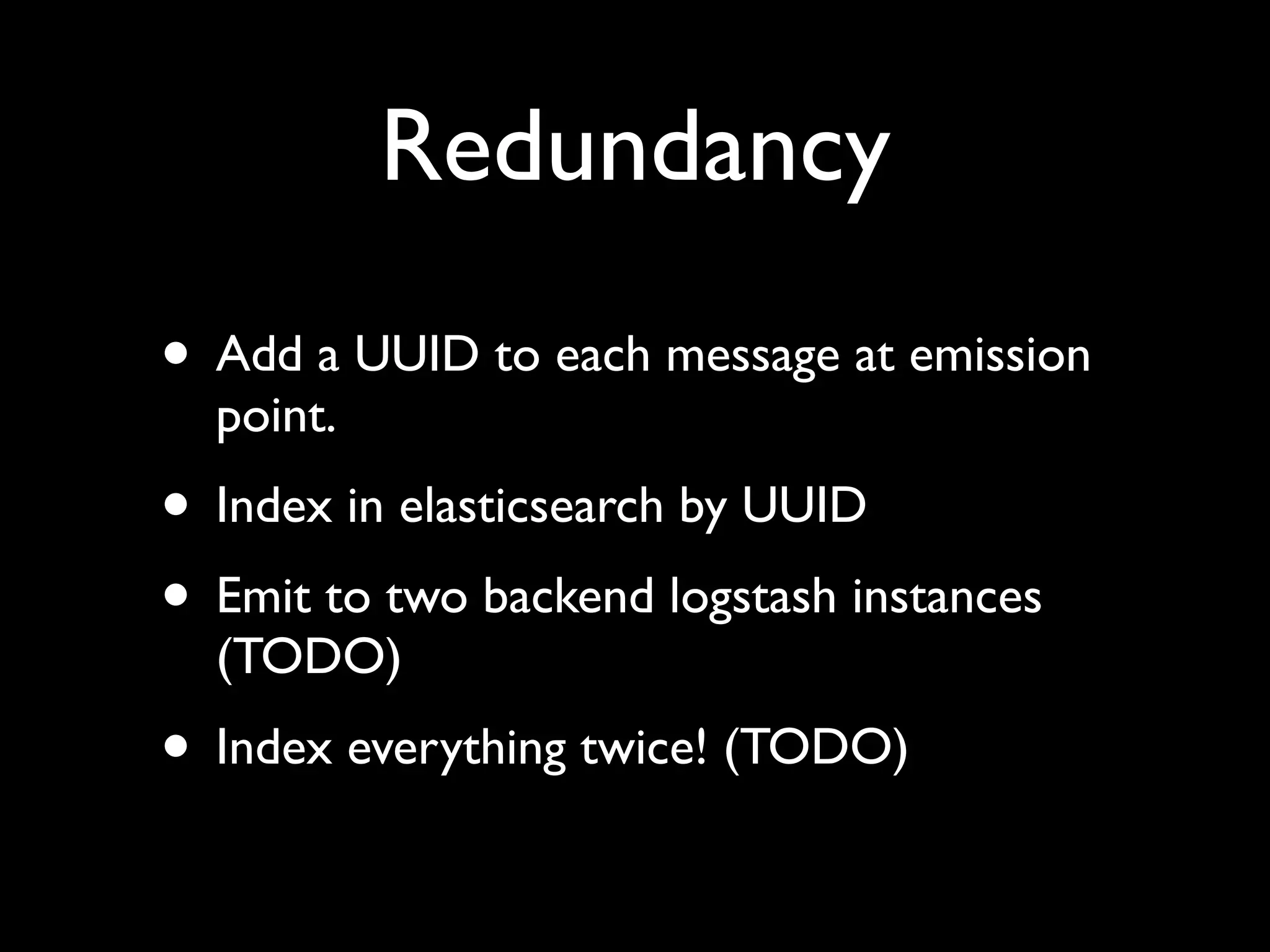

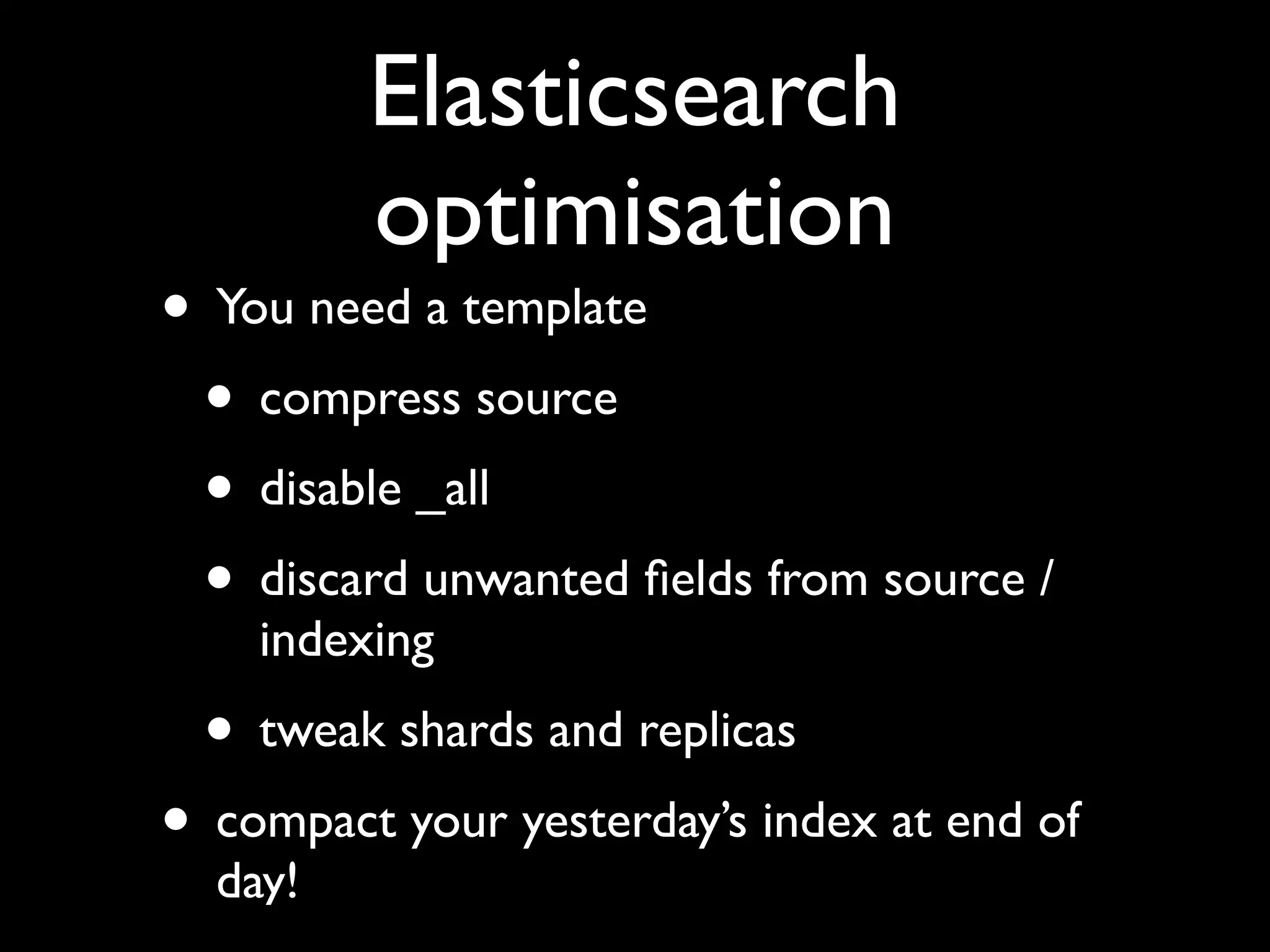

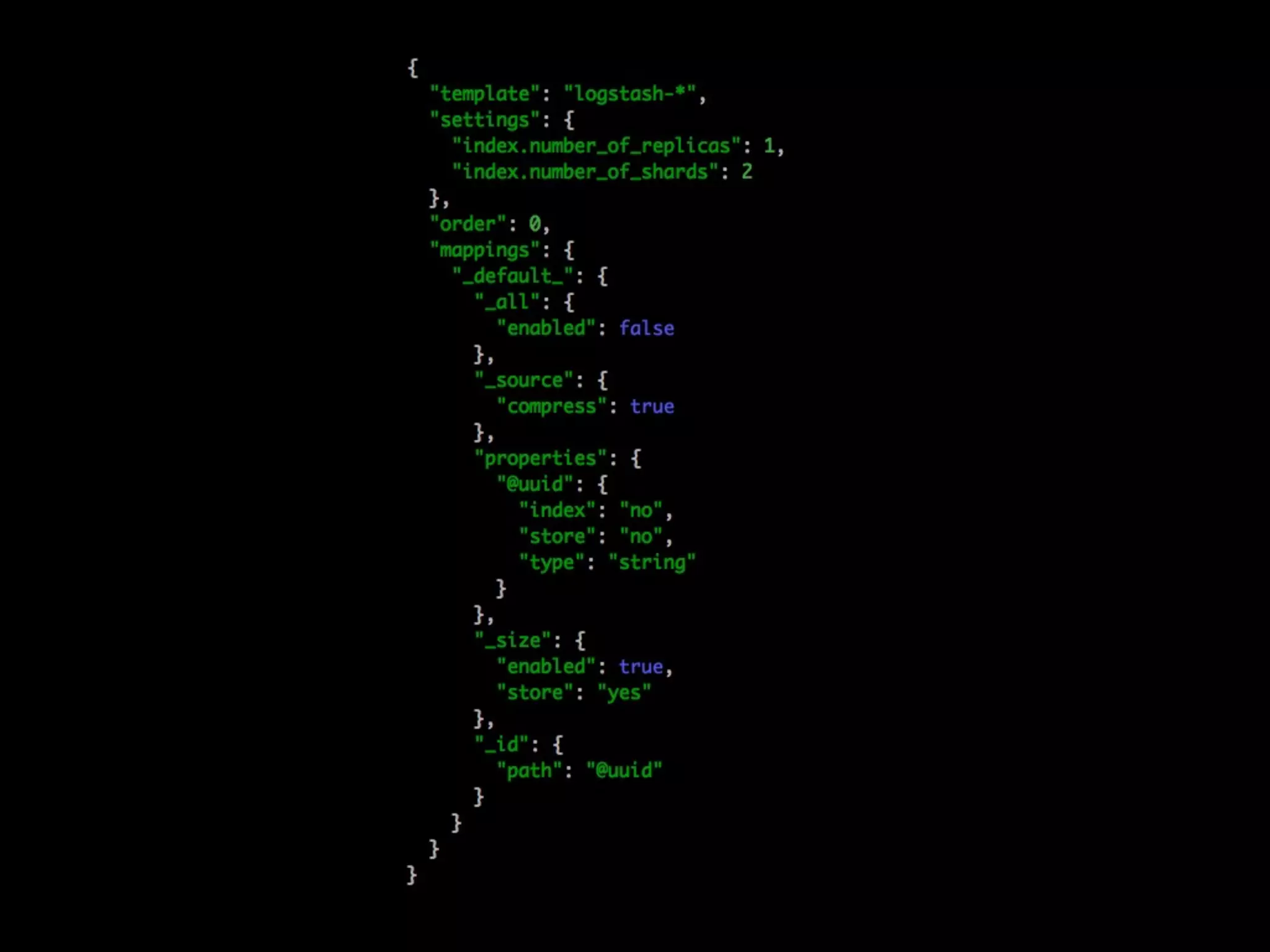

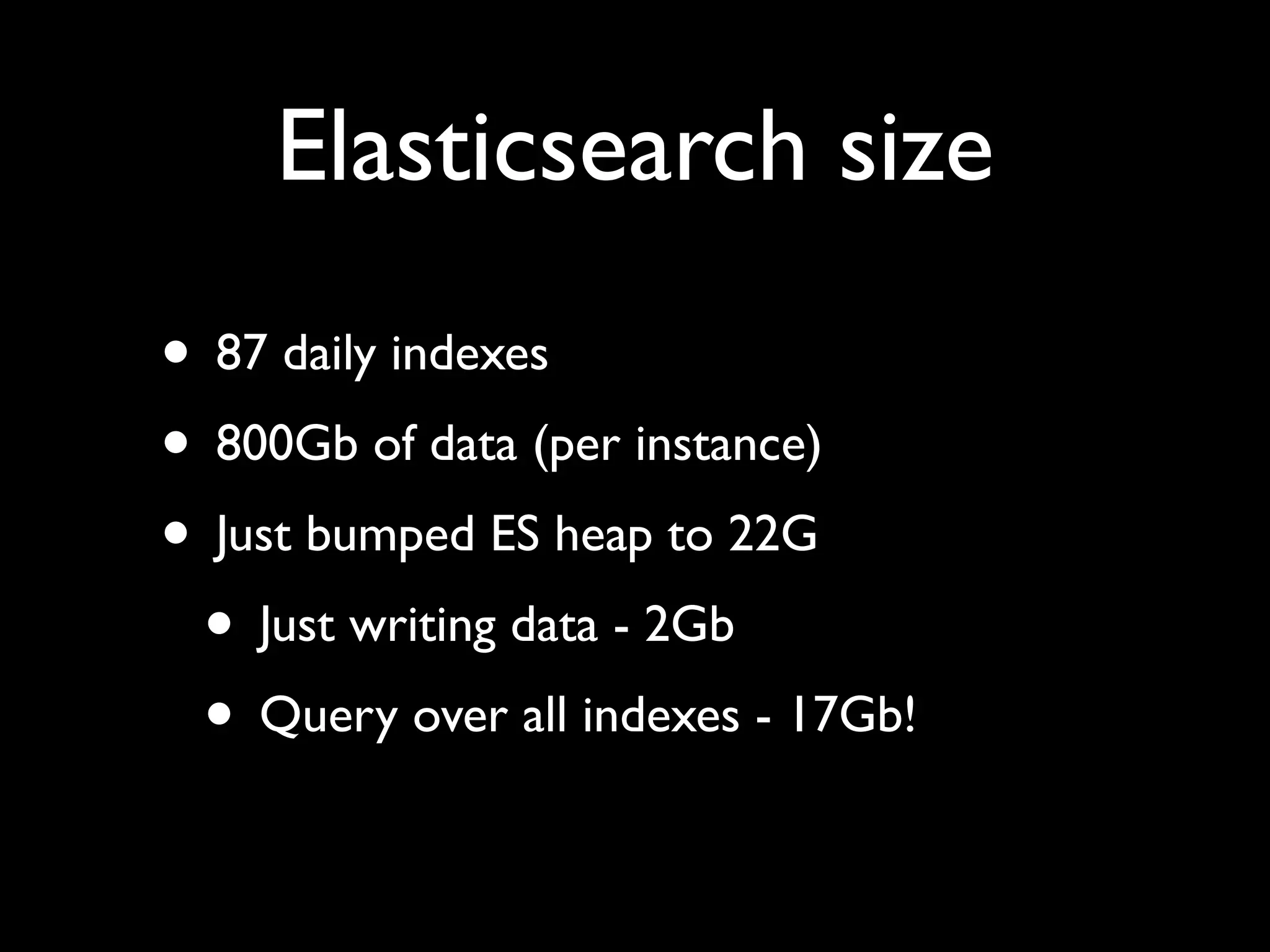

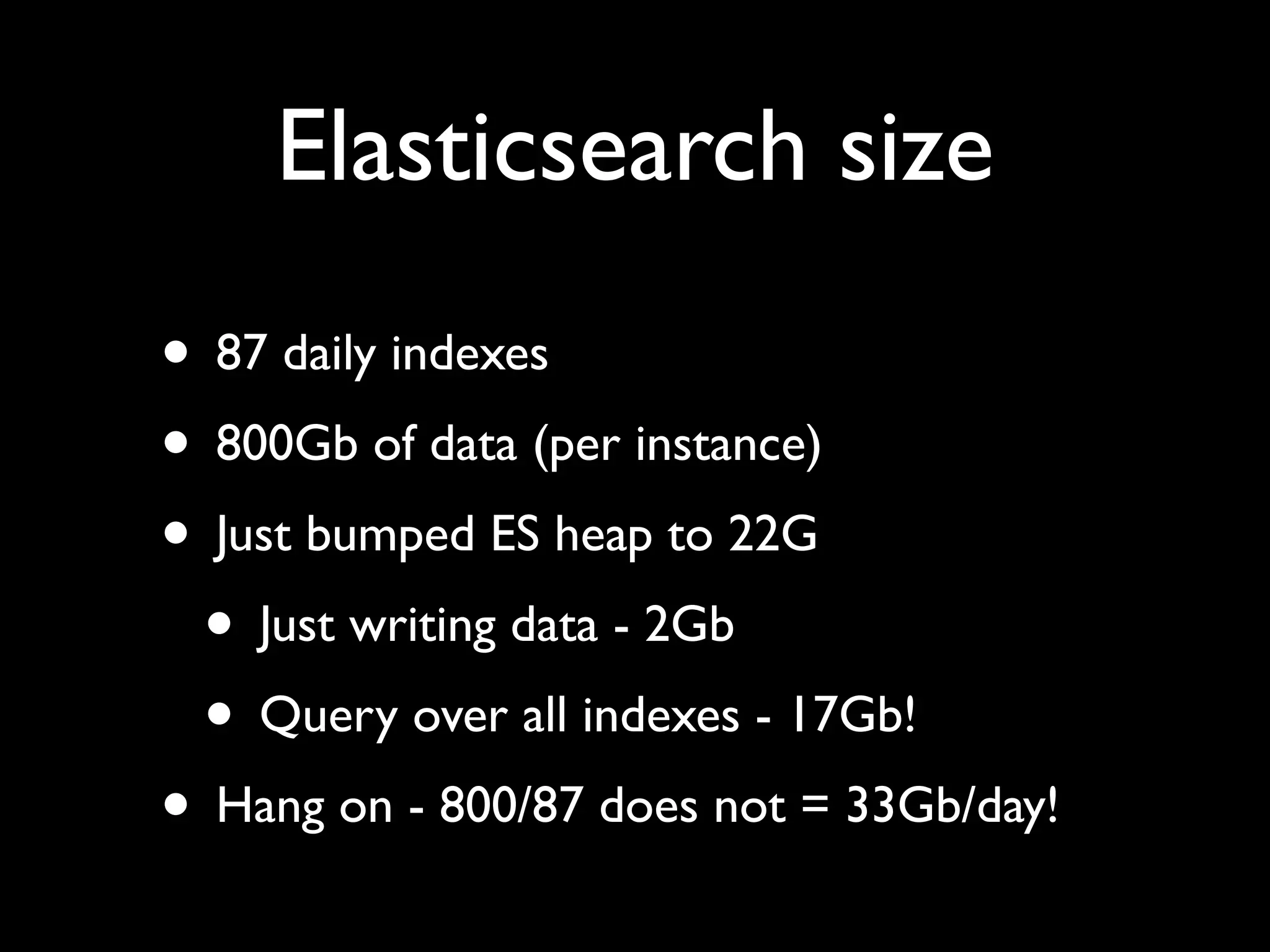

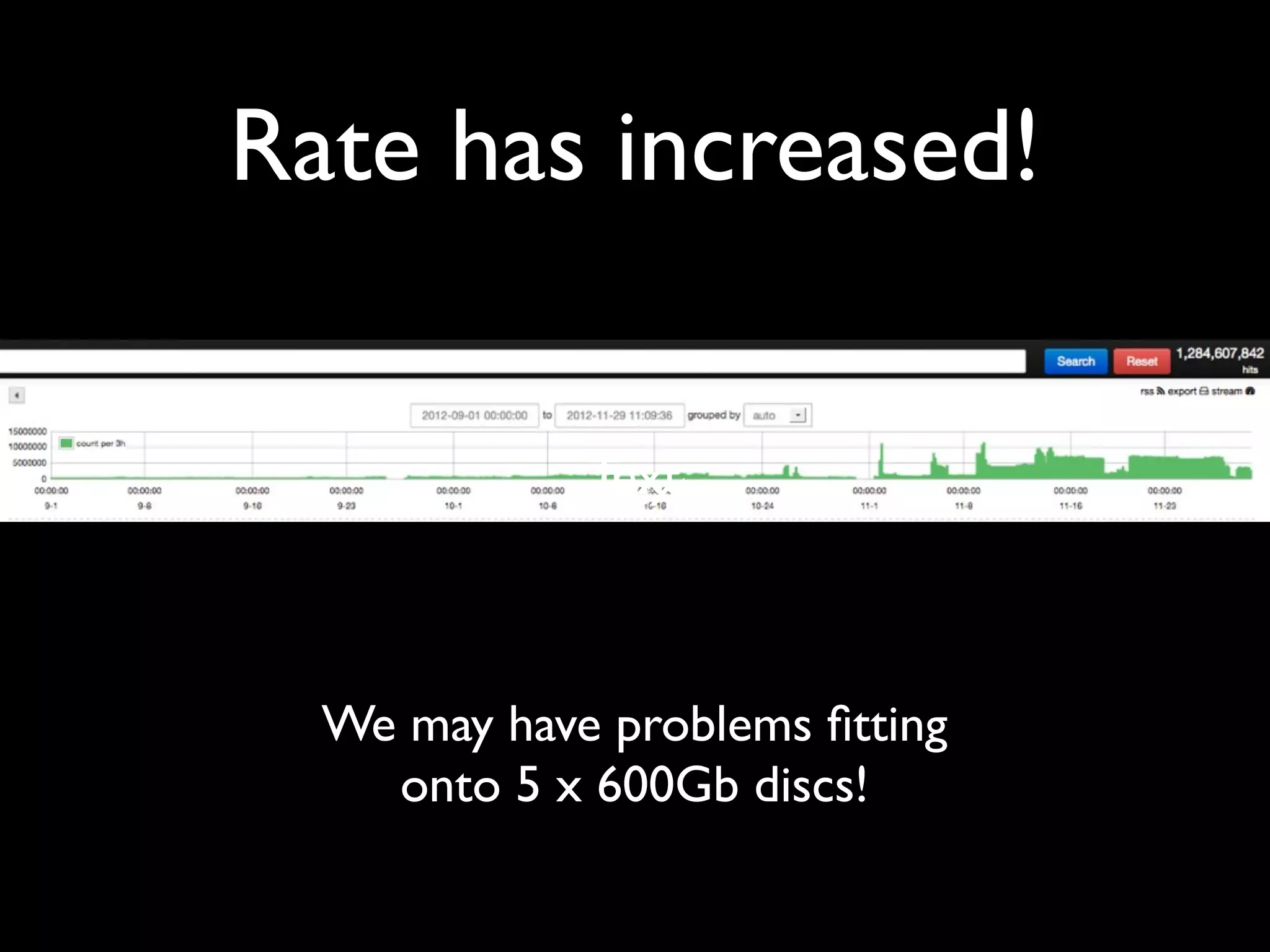

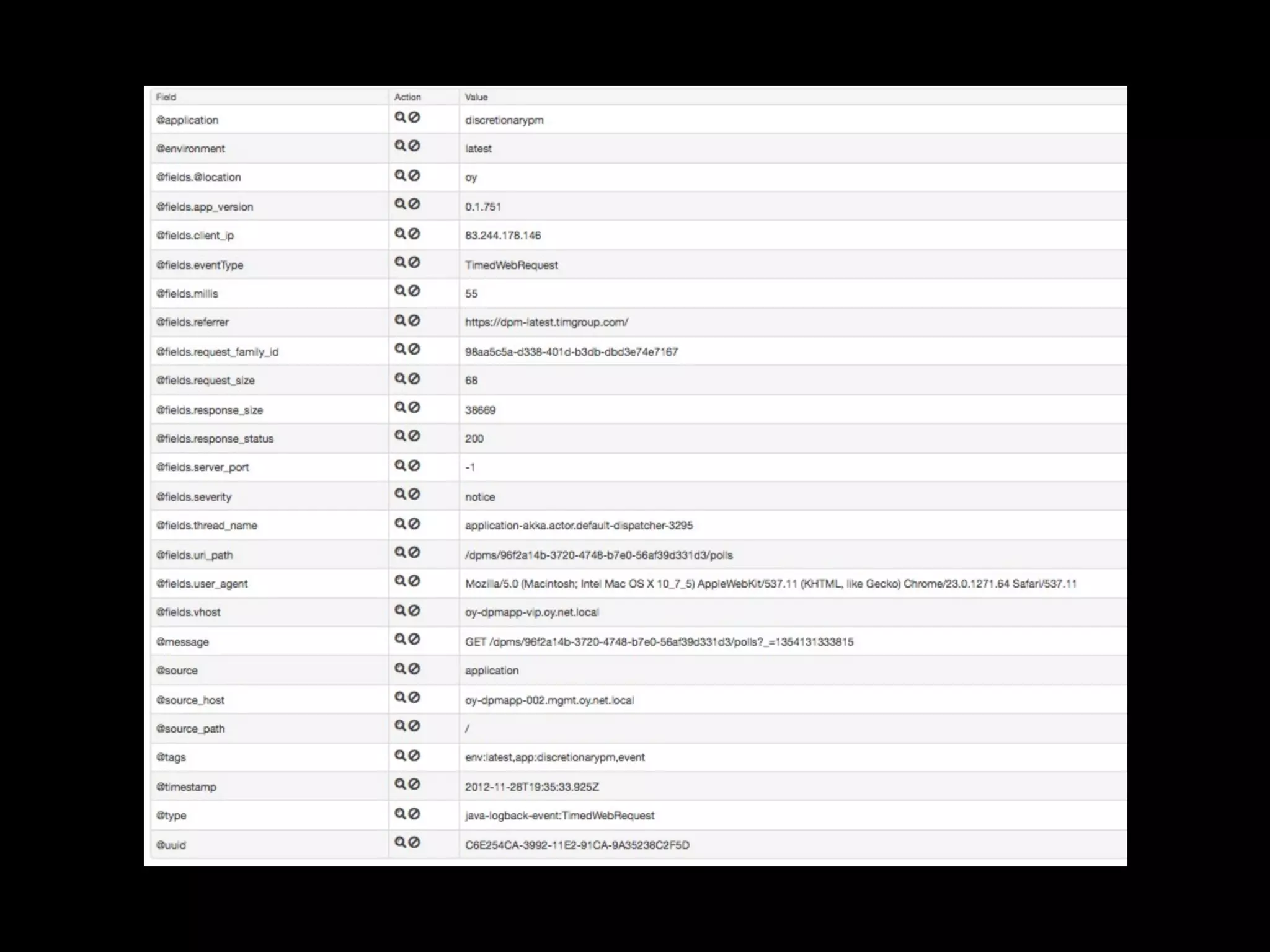

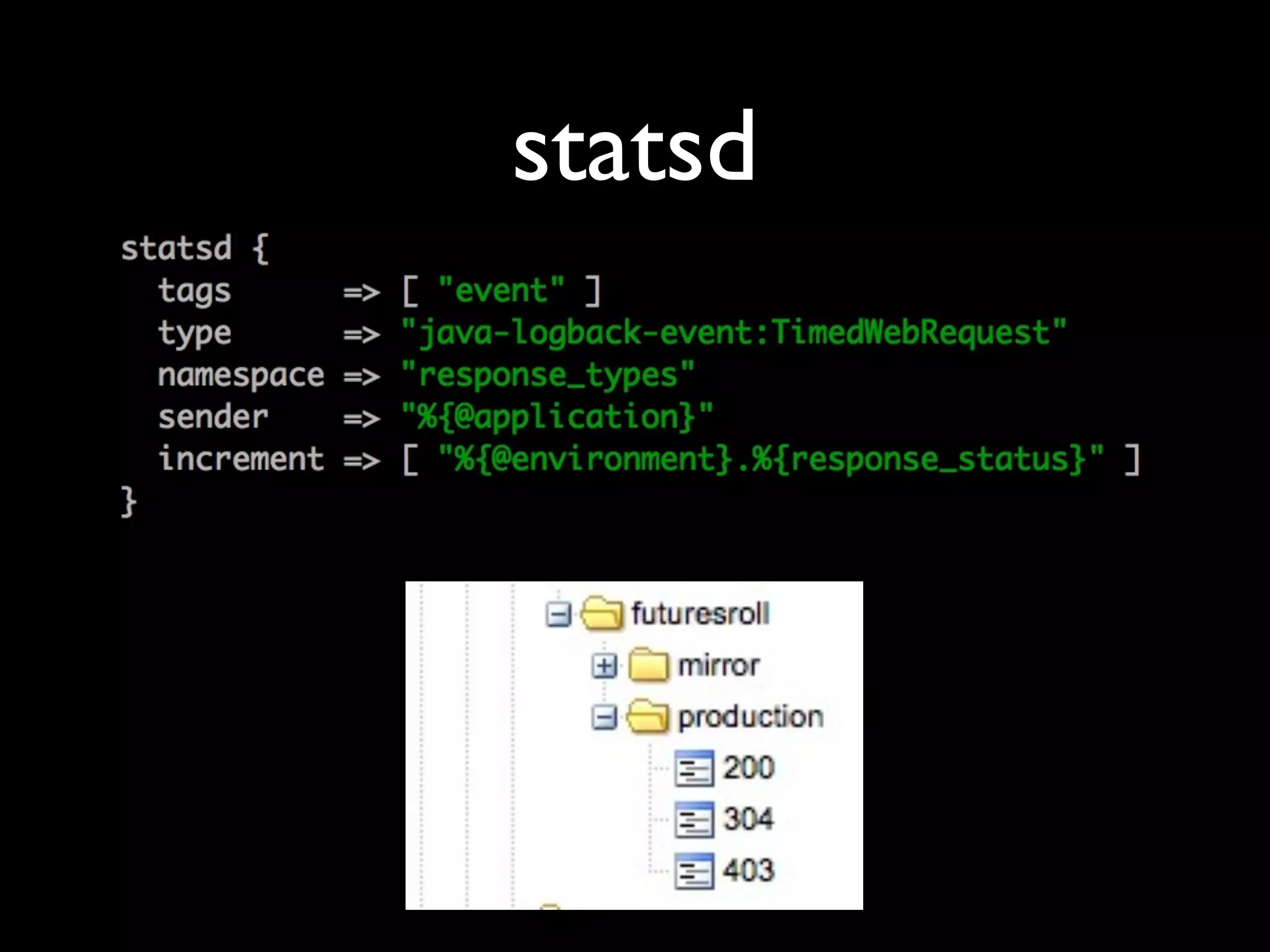

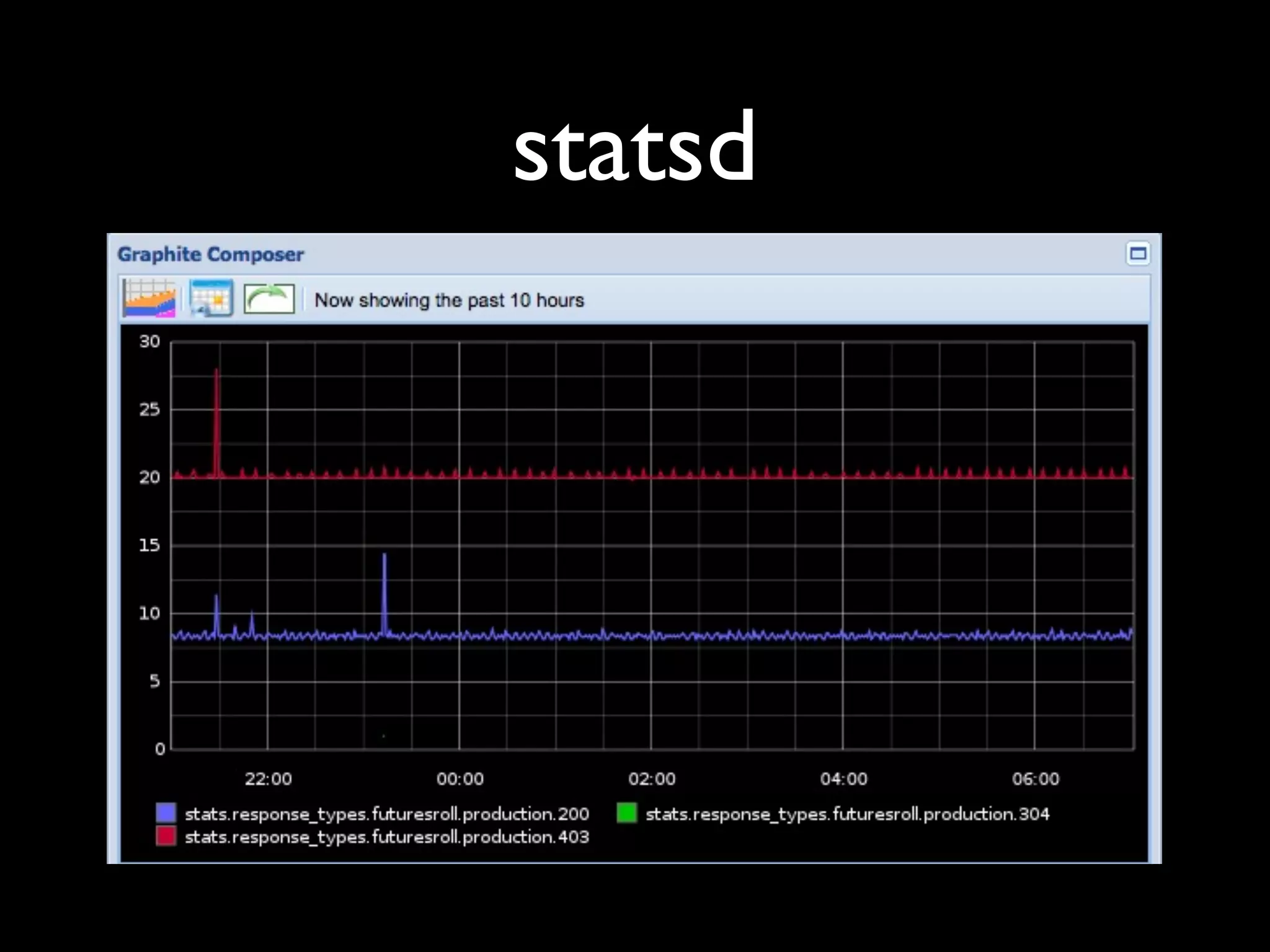

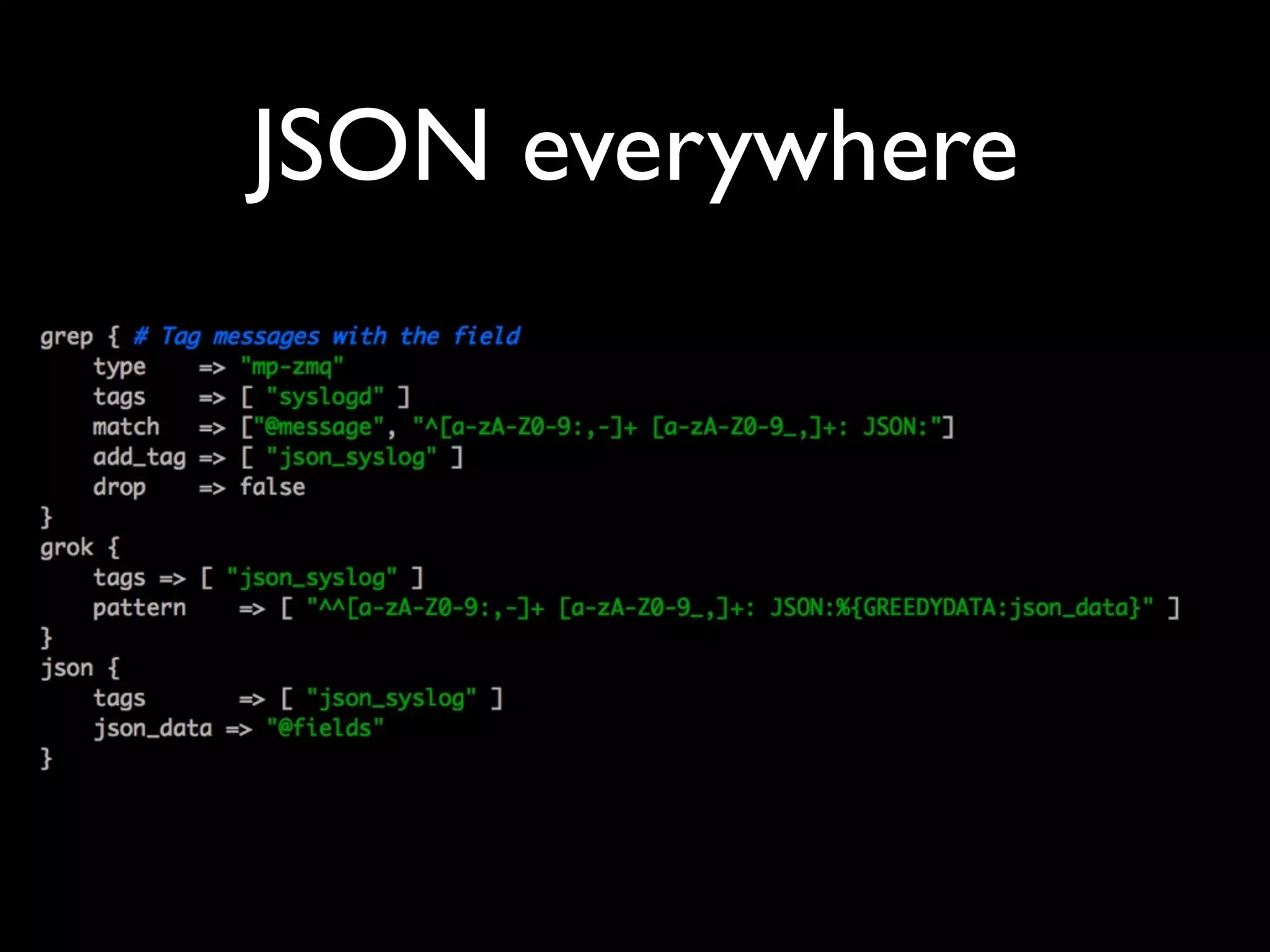

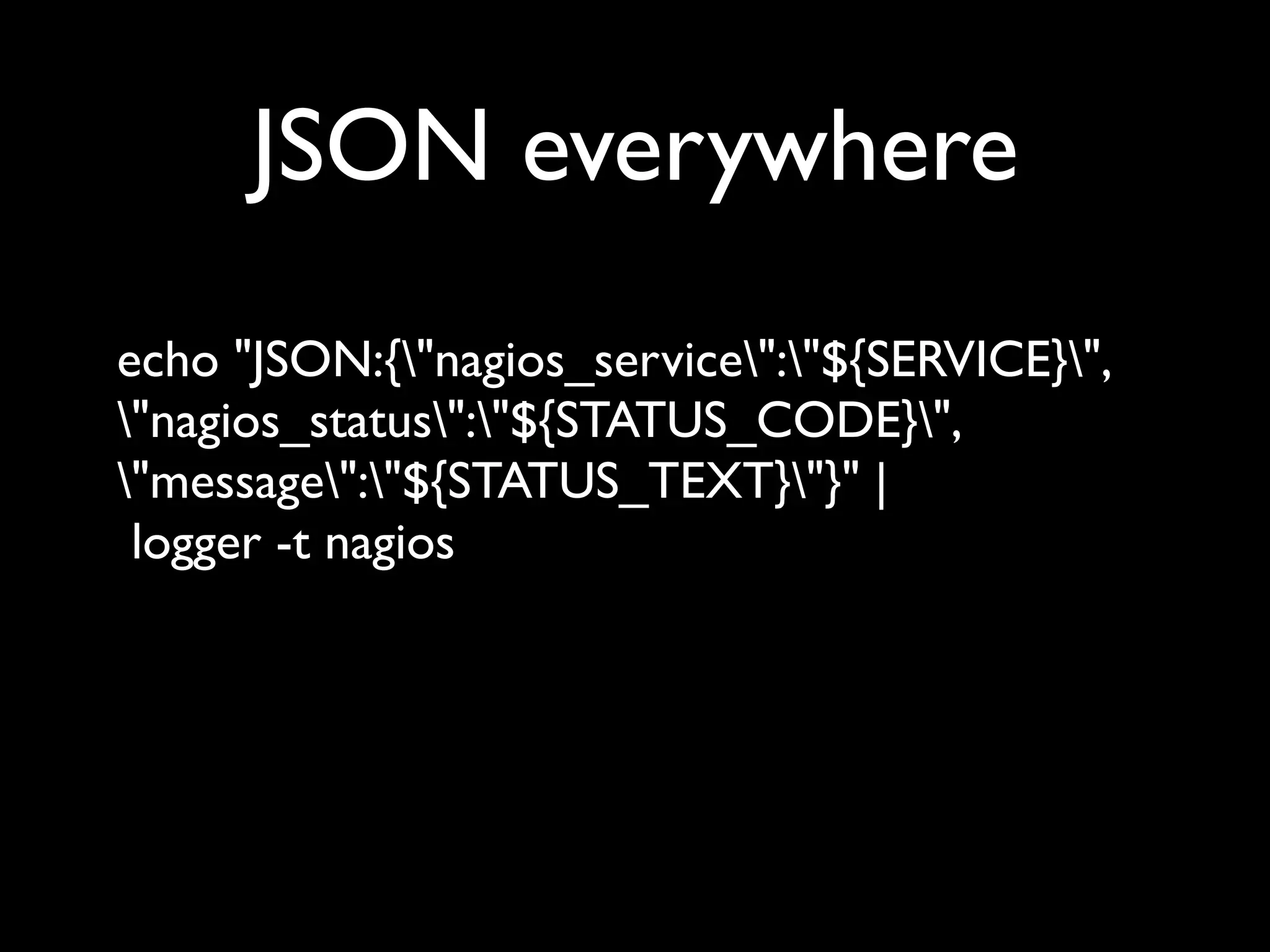

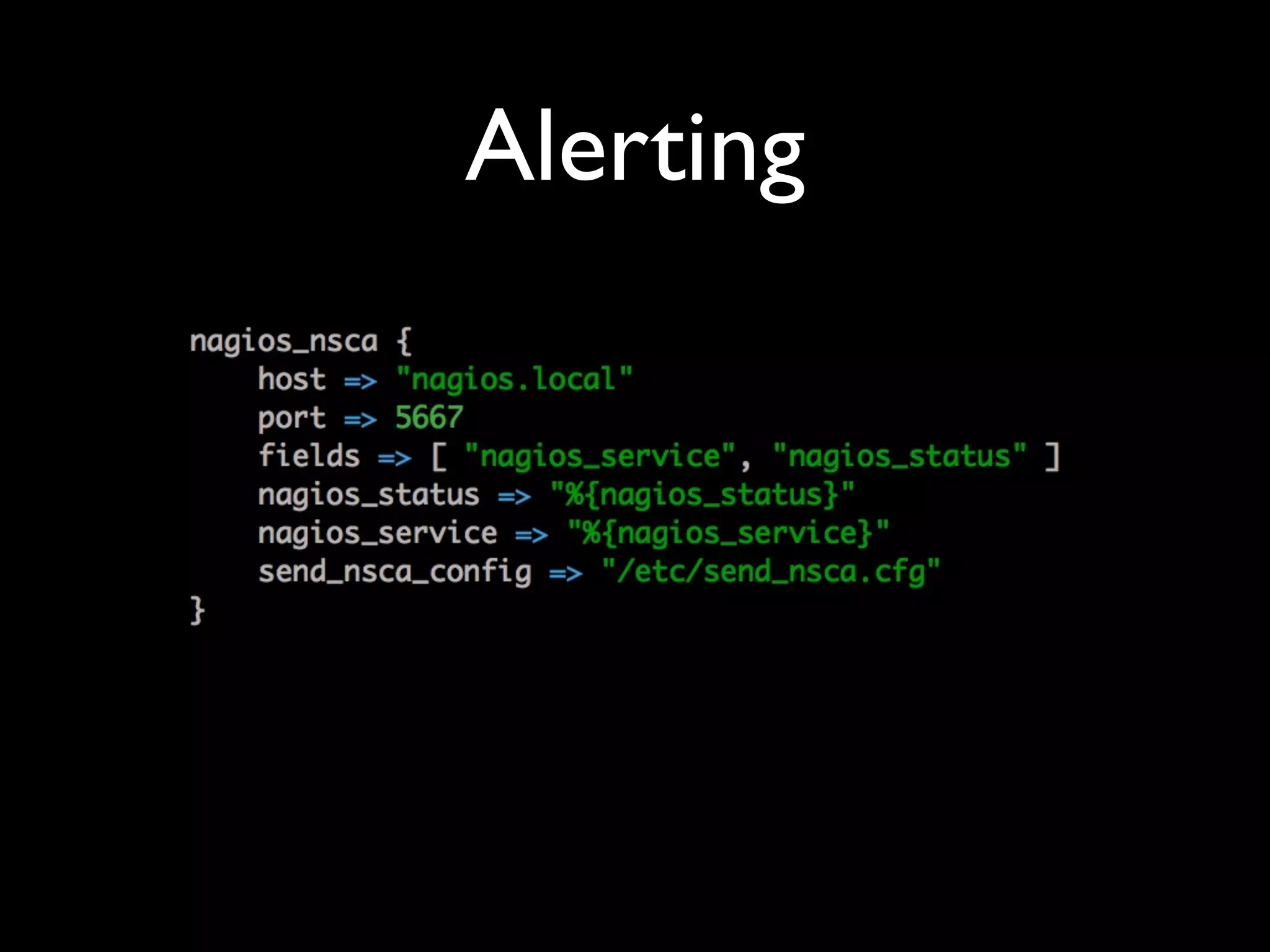

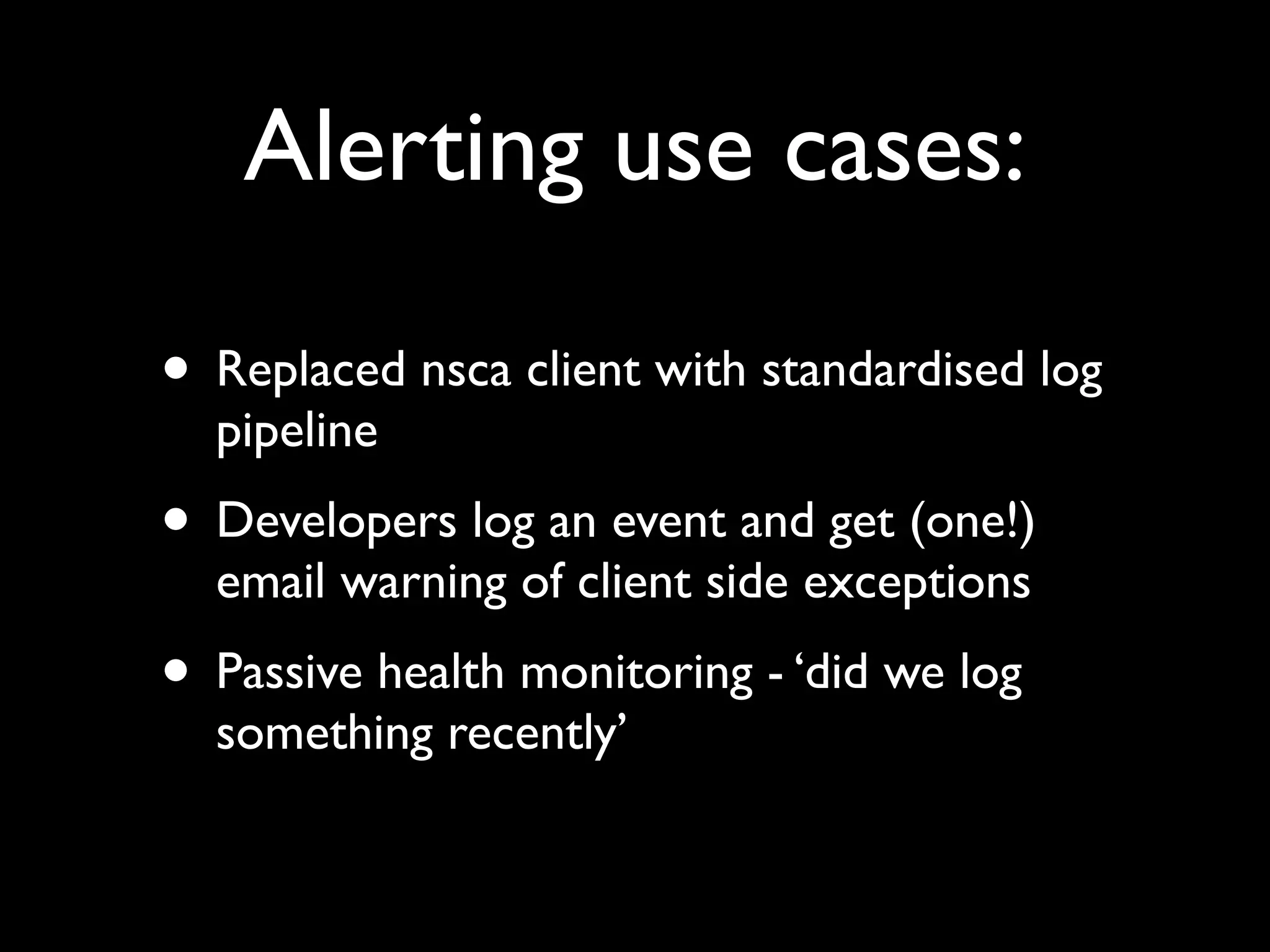

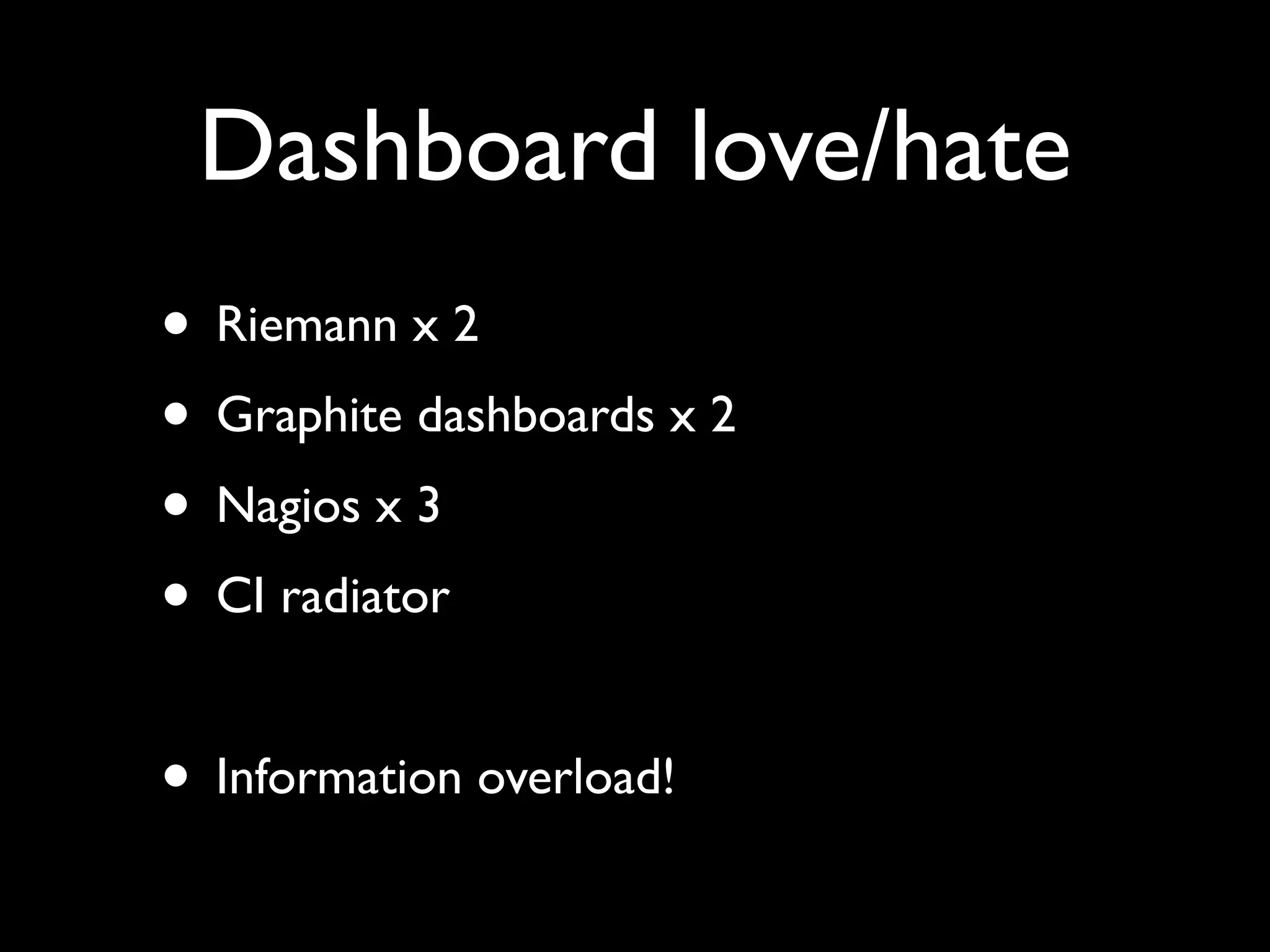

Tomas Doran presented on their implementation of Logstash at TIM Group to process over 55 million messages per day. Their applications are all Java/Scala/Clojure and they developed their own library to send structured log events as JSON to Logstash using ZeroMQ for reliability. They index data in Elasticsearch and use it for metrics, alerts and dashboards but face challenges with data growth.