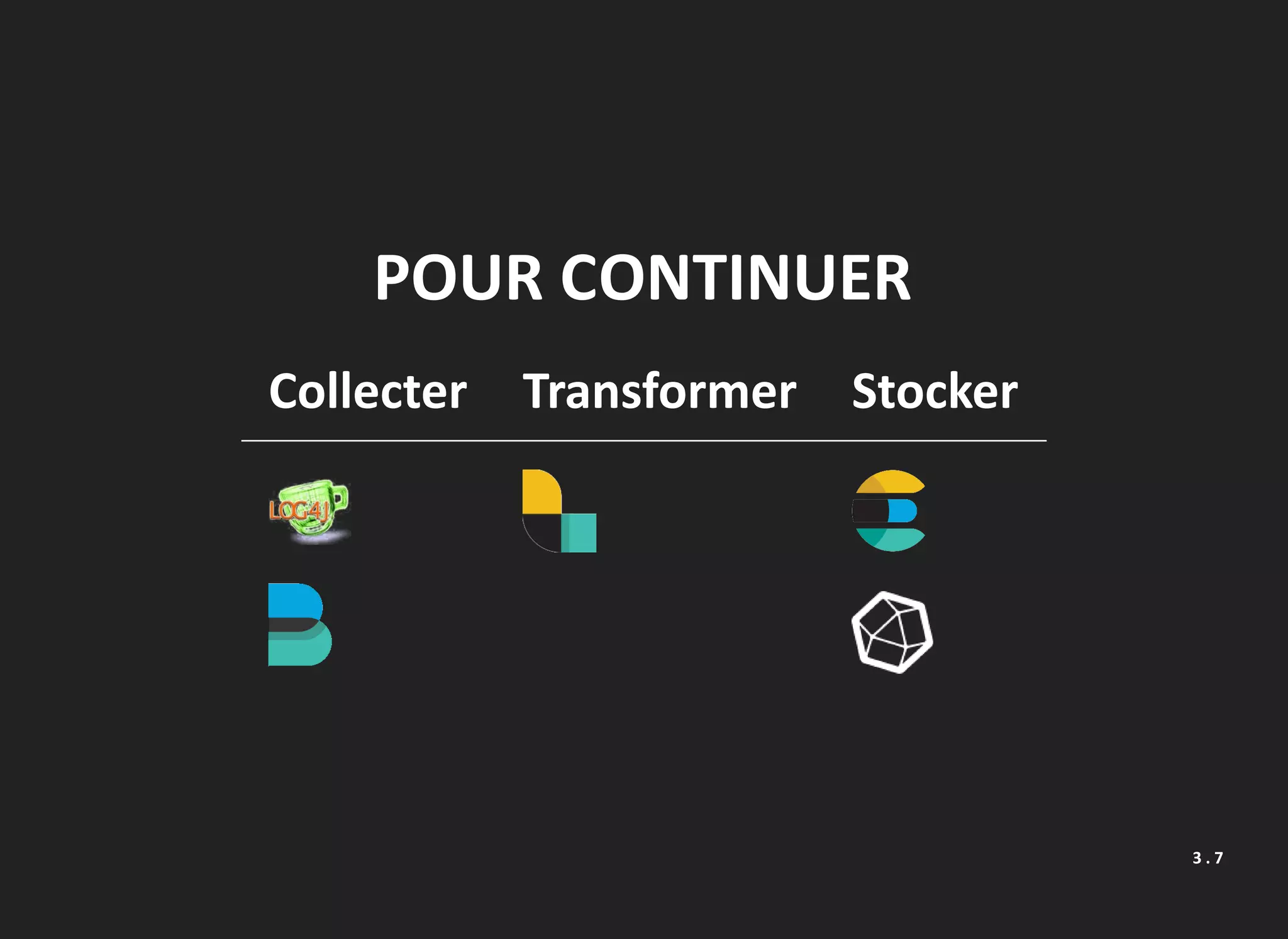

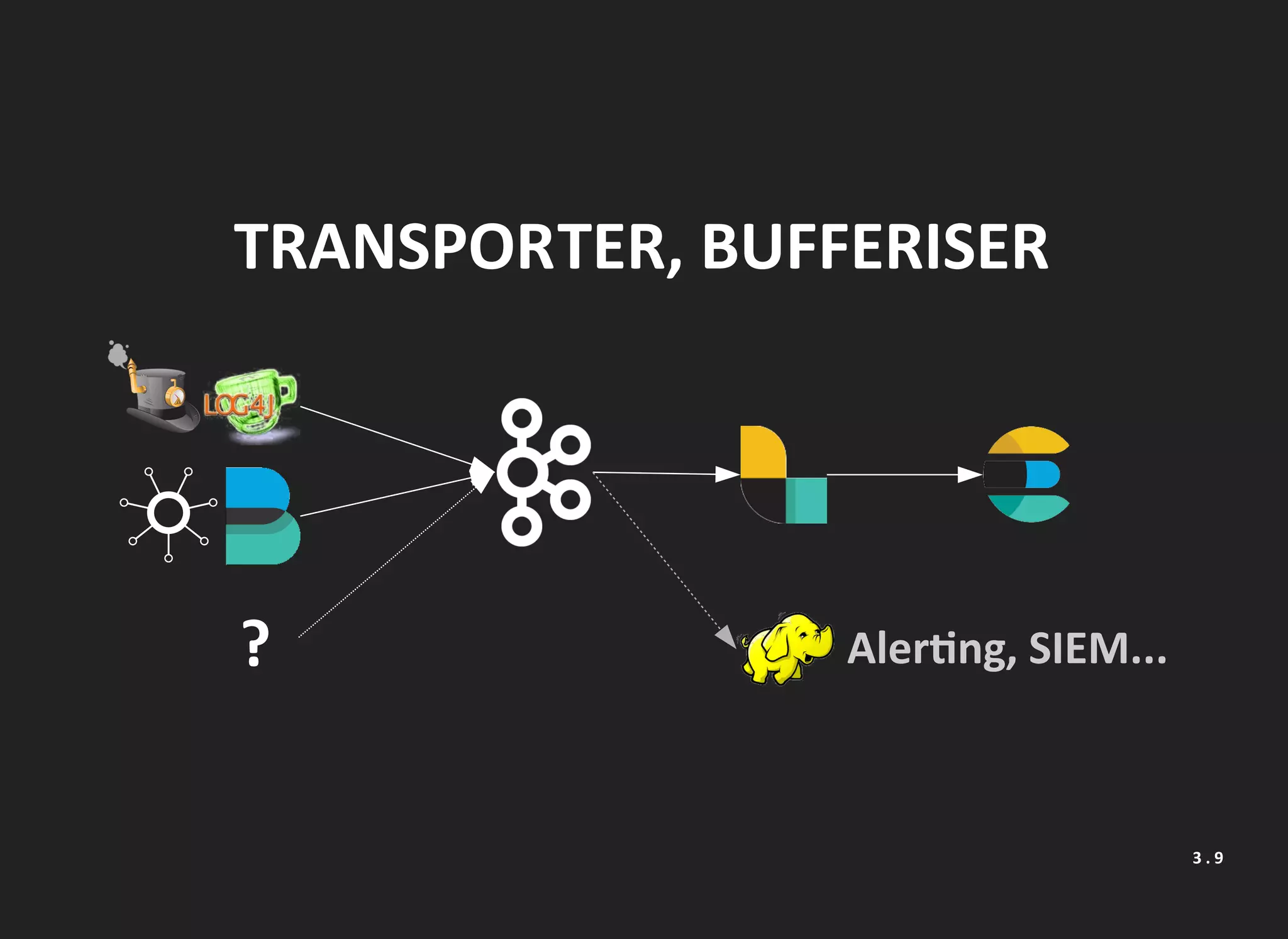

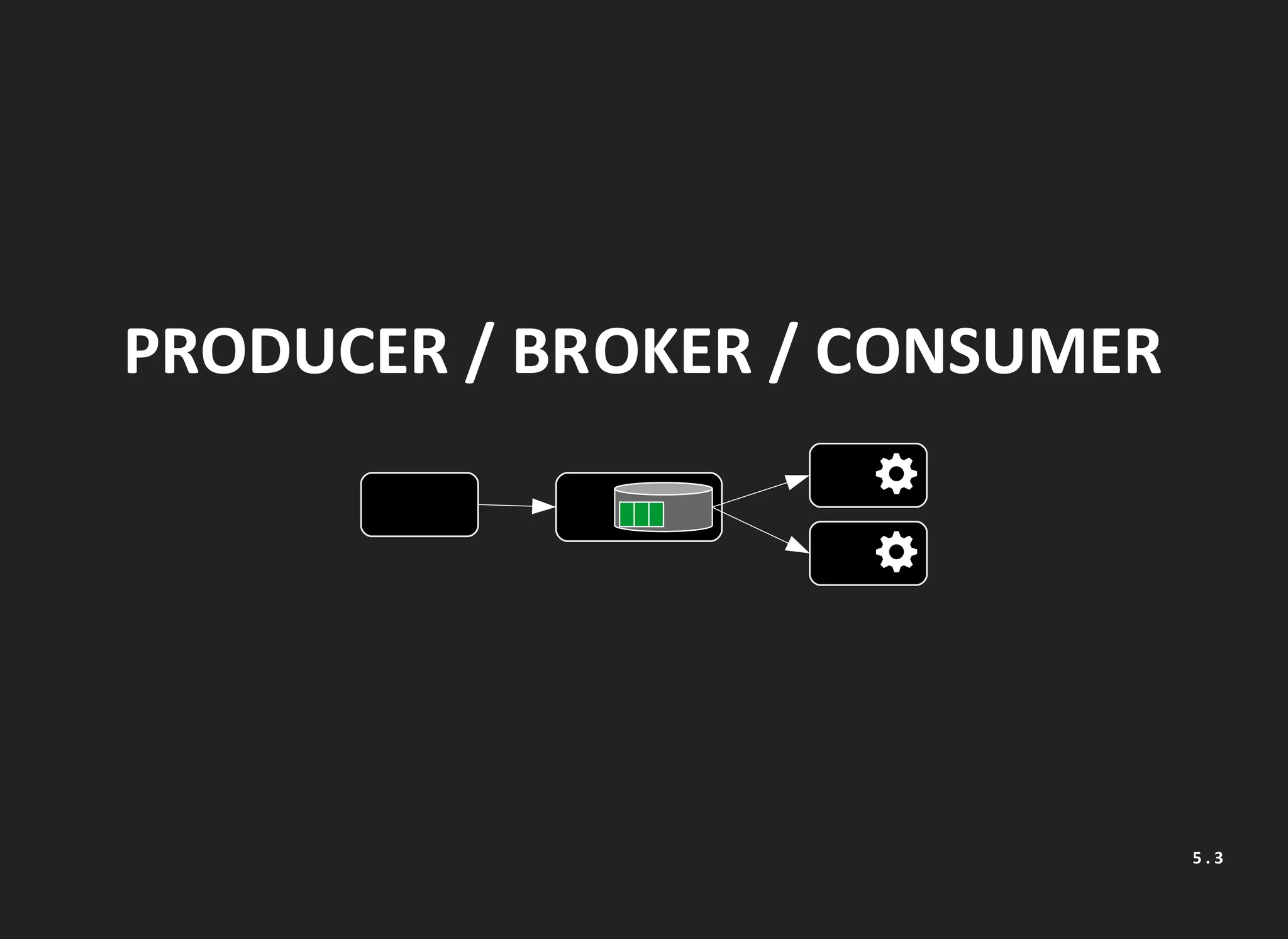

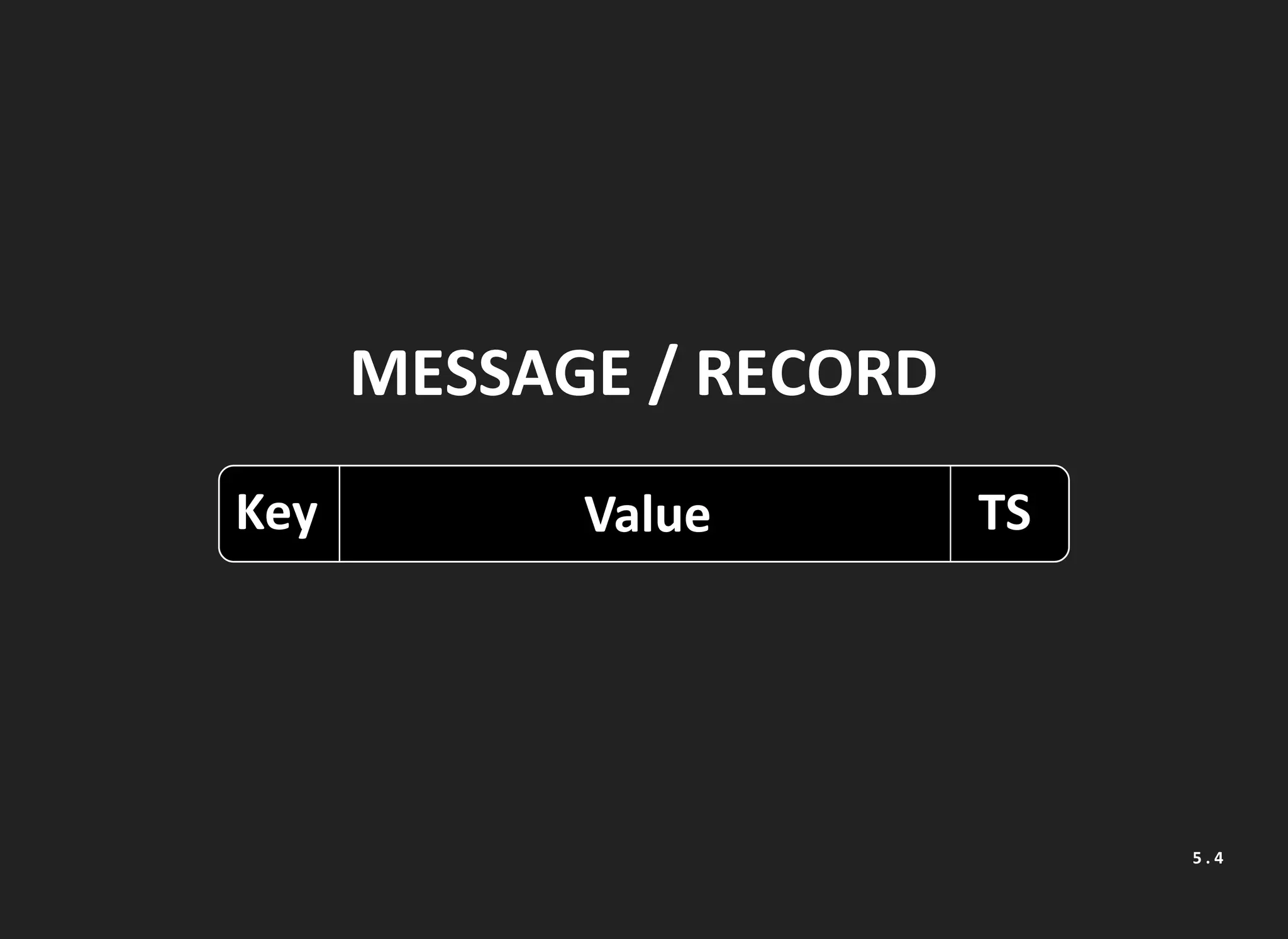

This deck follows a log line through its whole lifecycle, from the application that emits it to its storage in Elasticsearch. It covers shipping logs from the application with Beats, buffering them in Kafka, transforming them in Logstash pipelines, monitoring that processing, and storing the results in Elasticsearch. Along the way it looks at Kafka consumer groups and their rebalancing, and at data ingestion and indexing strategies.

![LOG

2017-03-20 22:42:03 [main] INFO Bonjour à tous

4 . 2](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-14-2048.jpg)
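The log line on this slide has a simple layout: timestamp, thread name in brackets, level, then the message. As a minimal sketch, assuming every line follows exactly that layout, it could be split into fields like this (the function and field names are illustrative, not taken from the deck):

```python
import re
from datetime import datetime

# Assumed layout: "<date> <time> [<thread>] <LEVEL> <message>", as on the slide.
LOG_LINE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"\[(?P<thread>[^\]]+)\] "
    r"(?P<level>[A-Z]+) "
    r"(?P<message>.*)$"
)


def parse_log_line(line):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_LINE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Turn the textual timestamp into a datetime (no timezone is present in the line).
    fields["timestamp"] = datetime.strptime(fields["timestamp"], "%Y-%m-%d %H:%M:%S")
    return fields


print(parse_log_line("2017-03-20 22:42:03 [main] INFO Bonjour à tous"))
```

Multi-line entries such as stack traces would not match this pattern, which is one reason to emit structured logs instead of parsing text.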

![BEATS & JSON

filebeat.prospectors:

- input_type: log

document_type: logback

paths:

- /var/log/log-odyssey/application.*.log

json:

keys_under_root: true

output.elasticsearch:

hosts: ["elasticsearch:9200"]

4 . 7](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-19-2048.jpg)
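The `json.keys_under_root: true` setting above controls where the decoded JSON fields end up in the event. The following is a simplified model of that behaviour, not Filebeat's implementation; the sample event and the `source` and `type` values are hypothetical:

```python
import json


def decode_json_line(line, base_event, keys_under_root=True):
    """Model how a JSON log line could be merged into a shipper event.

    base_event holds the fields the shipper adds itself (hypothetical values here).
    """
    decoded = json.loads(line)
    event = dict(base_event)
    if keys_under_root:
        # Decoded keys go to the top level; fields already set by the shipper
        # are kept on conflict (the behaviour without overwrite_keys).
        for key, value in decoded.items():
            event.setdefault(key, value)
    else:
        # Otherwise the decoded object is nested under a single "json" key.
        event["json"] = decoded
    return event


base = {"source": "/var/log/app.log", "type": "logback"}  # hypothetical shipper fields
line = '{"level": "INFO", "message": "Bonjour à tous", "type": "custom"}'
print(decode_json_line(line, base, keys_under_root=True))
print(decode_json_line(line, base, keys_under_root=False))
```

With the keys at the root, fields such as `level` can be queried directly, without a `json.` prefix.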

![BEATS & KAFKA

filebeat.prospectors:

- input_type: log

...

output.kafka:

hosts: ["kafka:9092"]

topic: logstash

4 . 8](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-20-2048.jpg)

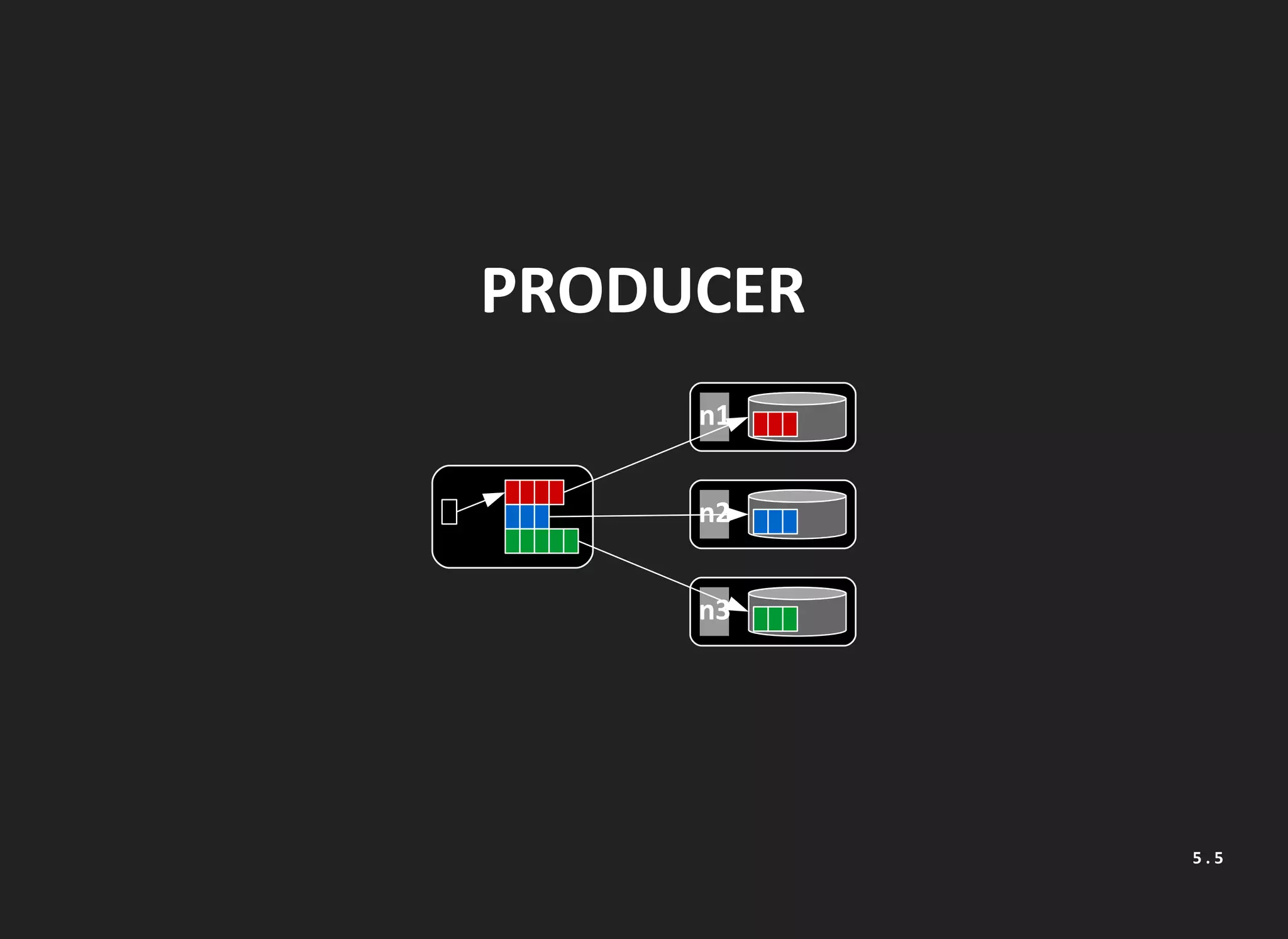

![BEATS & KAFKA

filebeat.prospectors:

- input_type: log

...

output.kafka:

hosts: ["kafka:9092"]

topic: logstash

partition.round_robin:

reachable_only: false

5 . 7](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-28-2048.jpg)
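The `partition.round_robin` strategy with `reachable_only: false` spreads events over all partitions of the topic, including those whose leader is currently unreachable. A small simulation of the selection logic, written as a sketch of the idea rather than Filebeat's actual code (the class and the partition numbers are hypothetical):

```python
class RoundRobinPartitioner:
    """Pick partitions in turn, optionally restricted to reachable ones."""

    def __init__(self, partitions, reachable_only=False):
        self.partitions = list(partitions)
        self.reachable_only = reachable_only
        self._next = 0

    def pick(self, reachable):
        """Return the partition for the next event, given the reachable set."""
        if self.reachable_only:
            candidates = [p for p in self.partitions if p in reachable]
        else:
            # All partitions stay candidates, even unreachable ones.
            candidates = self.partitions
        if not candidates:
            raise RuntimeError("no partition available")
        partition = candidates[self._next % len(candidates)]
        self._next += 1
        return partition


reachable = {0, 2}  # partition 1 is assumed to be temporarily unreachable
strict = RoundRobinPartitioner([0, 1, 2], reachable_only=False)
print([strict.pick(reachable) for _ in range(4)])
lenient = RoundRobinPartitioner([0, 1, 2], reachable_only=True)
print([lenient.pick(reachable) for _ in range(4)])
```

Keeping unreachable partitions in the rotation preserves an even distribution, at the cost of the producer having to wait for the missing partition to come back.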

![CONSUMER REBALANCING

<[... 14:27:40,752] ...: Preparing to restabilize group logstash with old generation 0>

<[... 14:27:40,753] ...: Stabilized group logstash generation 1>

<[... 14:27:40,773] ...: Assignment received from leader for group logstash for generation

<[... 14:27:48,243] ...: Preparing to restabilize group logstash with old generation 1>

<[... 14:27:49,837] ...: Stabilized group logstash generation 2>

<[... 14:27:49,845] ...: Assignment received from leader for group logstash for generation

<[... 14:27:54,969] ...: Preparing to restabilize group logstash with old generation 2>

<[... 14:27:56,621] ...: Stabilized group logstash generation 3>

5 . 10](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-31-2048.jpg)
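The broker log above shows the `logstash` group moving from generation 0 to 3: each time the membership changes, the group is rebalanced and partitions are reassigned. The toy model below illustrates that mechanism only. The member names, the partition count, and the round-robin assignment are assumptions made for the example, not Kafka's coordinator logic:

```python
class ConsumerGroup:
    """Toy consumer group: every membership change starts a new generation."""

    def __init__(self, name, partitions):
        self.name = name
        self.partitions = list(partitions)
        self.members = []
        self.generation = 0
        self.assignment = {}

    def join(self, member):
        """Add a consumer, then rebalance the whole group."""
        self.members.append(member)
        self._rebalance()

    def _rebalance(self):
        # A rebalance bumps the generation and recomputes every assignment.
        self.generation += 1
        members = sorted(self.members)
        self.assignment = {member: [] for member in members}
        for index, partition in enumerate(self.partitions):
            self.assignment[members[index % len(members)]].append(partition)


group = ConsumerGroup("logstash", partitions=range(4))
for consumer in ["logstash-1", "logstash-2", "logstash-3"]:  # hypothetical names
    group.join(consumer)
    print(group.generation, group.assignment)
```

Starting consumers one after another triggers one rebalance per arrival, which matches the three successive generations visible in the log.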

![CONFIGURATION

input {

kafka {

bootstrap_servers => "kafka:9092"

codec => json

topics => ["logstash"]

}

}

filter {

if [type] == "jetty" {

grok {

match => { "message" =>

"%{COMBINEDAPACHELOG} (?:%{NUMBER:latency:int}|-)" }

}

date {

match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]

6 . 3](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-37-2048.jpg)
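The `grok` and `date` filters above extract fields from a combined-format access log line followed by a latency value, then parse the timestamp. The sketch below approximates both steps with a hand-written regular expression. It is not the real `COMBINEDAPACHELOG` grok pattern, and the sample line is invented for the example:

```python
import re
from datetime import datetime, timezone

# Rough approximation of a combined access log line plus a trailing latency or "-".
ACCESS_LOG = re.compile(
    r'^(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)" '
    r'(?:(?P<latency>\d+)|-)$'
)


def parse_access_line(line):
    """Extract the fields of one access log line, or None if it does not match."""
    match = ACCESS_LOG.match(line)
    if match is None:
        return None
    event = match.groupdict()
    # Same idea as %{NUMBER:latency:int}: convert when present, leave None for "-".
    if event["latency"] is not None:
        event["latency"] = int(event["latency"])
    # Equivalent of the date pattern "dd/MMM/yyyy:HH:mm:ss Z" (English month names).
    parsed = datetime.strptime(event["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    event["@timestamp"] = parsed.astimezone(timezone.utc)
    return event


sample = ('127.0.0.1 - - [20/Mar/2017:22:42:03 +0100] '
          '"GET /hello HTTP/1.1" 200 512 "-" "curl/7.50.1" 42')  # invented line
print(parse_access_line(sample))
```

Storing `@timestamp` in UTC mirrors what the `date` filter does, so events from servers in different time zones sort consistently.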

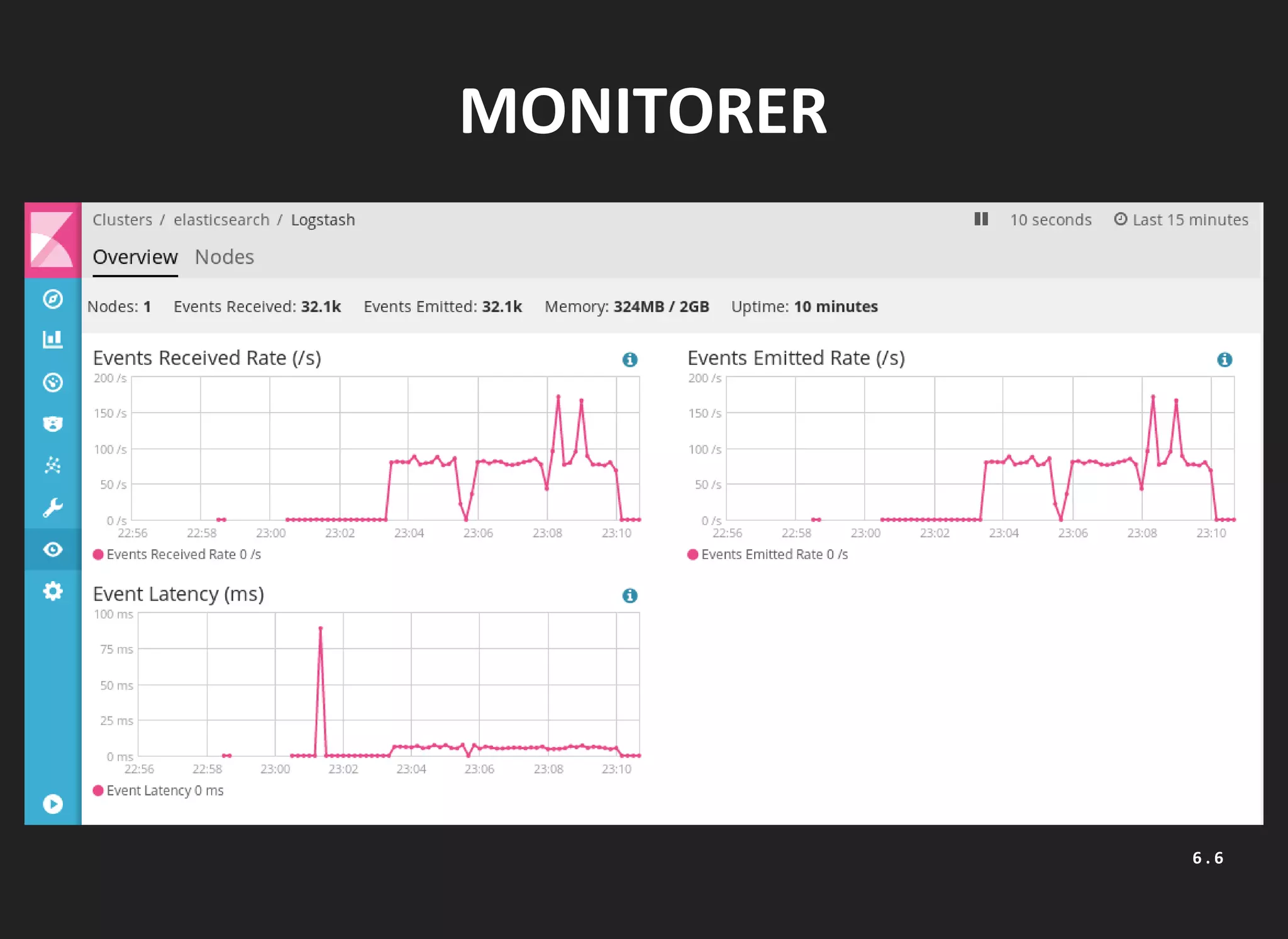

![MONITORING

filter {

ruby {

init => "require 'time'"

code => "start_time=Time.now.to_f*1000.0;

event.set('[@metadata][start_time]', start_time);"

}

# Filtering

#....

ruby {

init => "require 'time'"

code => "end_time=Time.now.to_f*1000.0;

start_time=event.get('[@metadata][start_time]');

event.set('[logstash_duration]', end_time - start_time)"

}

metrics

6 . 7](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-41-2048.jpg)
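The two `ruby` filters above bracket the filter chain: the first stores a start time in the event metadata, the second computes the elapsed time in milliseconds and stores it in `logstash_duration`. The same idea can be sketched outside Logstash. One deliberate difference: this version uses a monotonic clock instead of wall-clock time, so the duration cannot come out negative if the system clock is adjusted. The `process` function and the sample filter are hypothetical:

```python
import time


def now_ms():
    """Current monotonic time in milliseconds (not an epoch timestamp)."""
    return time.monotonic() * 1000.0


def process(event, filters):
    """Run filters over an event and record how long they took, in milliseconds."""
    # Start marker, kept in metadata so it is not part of the stored document.
    event.setdefault("@metadata", {})["start_time"] = now_ms()
    for apply_filter in filters:
        apply_filter(event)
    # End marker: elapsed time since the start marker.
    event["logstash_duration"] = now_ms() - event["@metadata"]["start_time"]
    return event


def uppercase_level(event):
    """Hypothetical filter standing in for the real filter chain."""
    event["level"] = event["level"].upper()


print(process({"level": "info", "message": "Bonjour à tous"}, [uppercase_level]))
```

Because the duration travels with each event, it can be aggregated later like any other numeric field.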

![PIPELINE ELASTICSEARCH

PUT _ingest/pipeline/jetty

{ "description": "Jetty Access Logs",

"processors": [

{ "grok": {

"field": "message",

"patterns": [

"%{COMBINEDAPACHELOG} (?:%{NUMBER:latency:int}|-)" ] } },

{ "date": {

"field": "timestamp",

"formats": [

"dd/MMM/yyyy:HH:mm:ss Z" ] } },

{ "date_index_name": {

"field": "@timestamp",

"index_name_prefix": "logs-",

"date_rounding" : "d" } }

6 . 9](https://image.slidesharecdn.com/lodyssedelalog-170425183516/75/L-odyssee-de-la-log-43-2048.jpg)
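The `date_index_name` processor at the end of this pipeline routes each document to an index named after its `@timestamp`, rounded to the day and prefixed with `logs-`. A minimal sketch of that naming rule, assuming the processor's default UTC time zone and default `yyyy-MM-dd` name format (the function name is illustrative):

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(timestamp, prefix="logs-"):
    """Return the daily index name for a timezone-aware timestamp."""
    # Round down to the start of the day in UTC ("date_rounding": "d").
    day = timestamp.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return prefix + day.strftime("%Y-%m-%d")


print(daily_index_name(datetime(2017, 3, 20, 22, 42, 3, tzinfo=timezone.utc)))

# Shortly after midnight at UTC+1 is still the previous day in UTC.
paris = timezone(timedelta(hours=1))
print(daily_index_name(datetime(2017, 3, 21, 0, 30, 0, tzinfo=paris)))
```

Daily indices make retention simple: dropping old data means deleting whole indices rather than individual documents.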