The document provides an overview of Linux clustering using Corosync and Pacemaker for high availability, detailing the architecture, installation, and configuration steps for creating a two-node cluster. It highlights key features of Corosync for node communication and Pacemaker for resource management, including failure detection and recovery. Additionally, the document outlines the process for adding and managing cluster resources through the CRM shell, ensuring efficient and reliable clustering solutions.

![Clustering Page 5

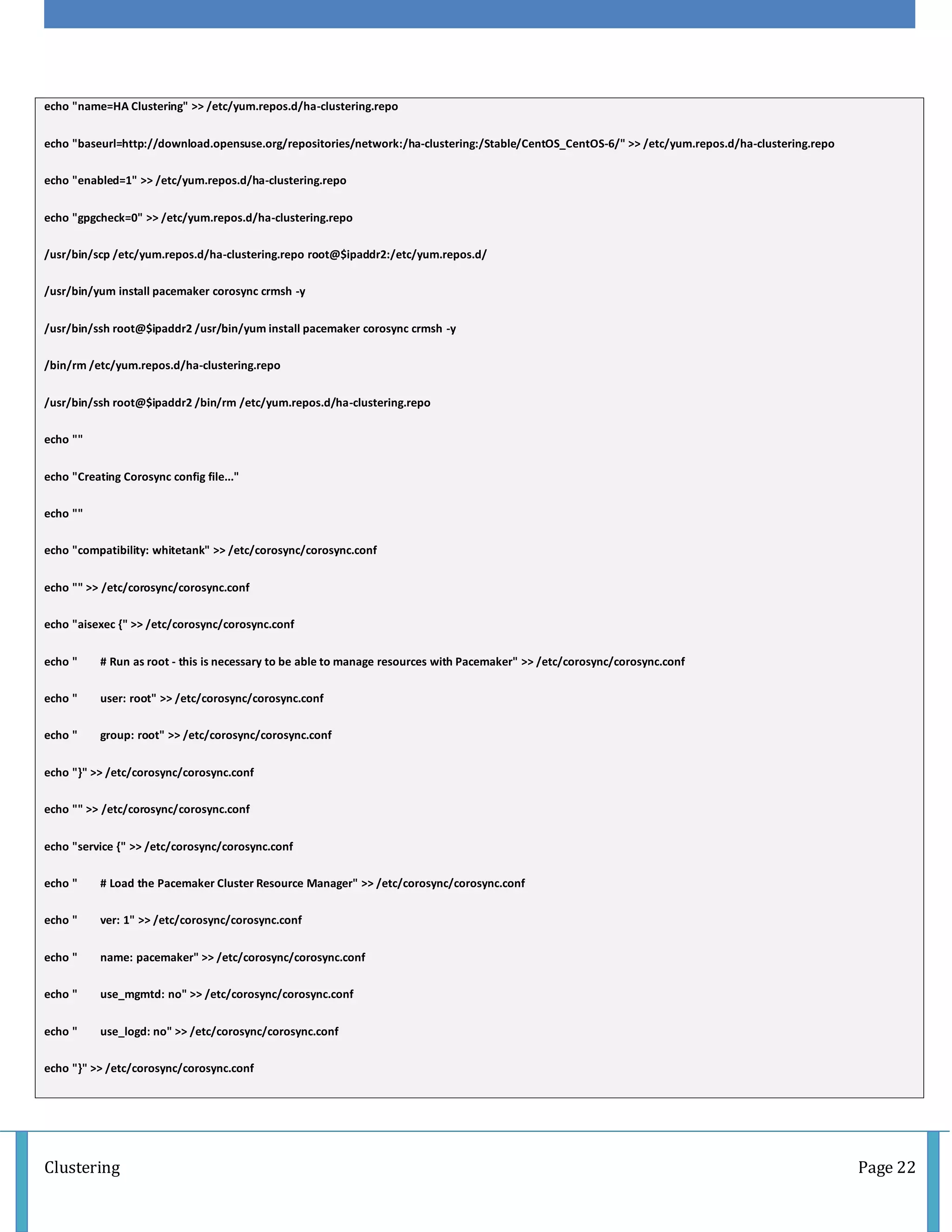

Install and Configure Linux Cluster

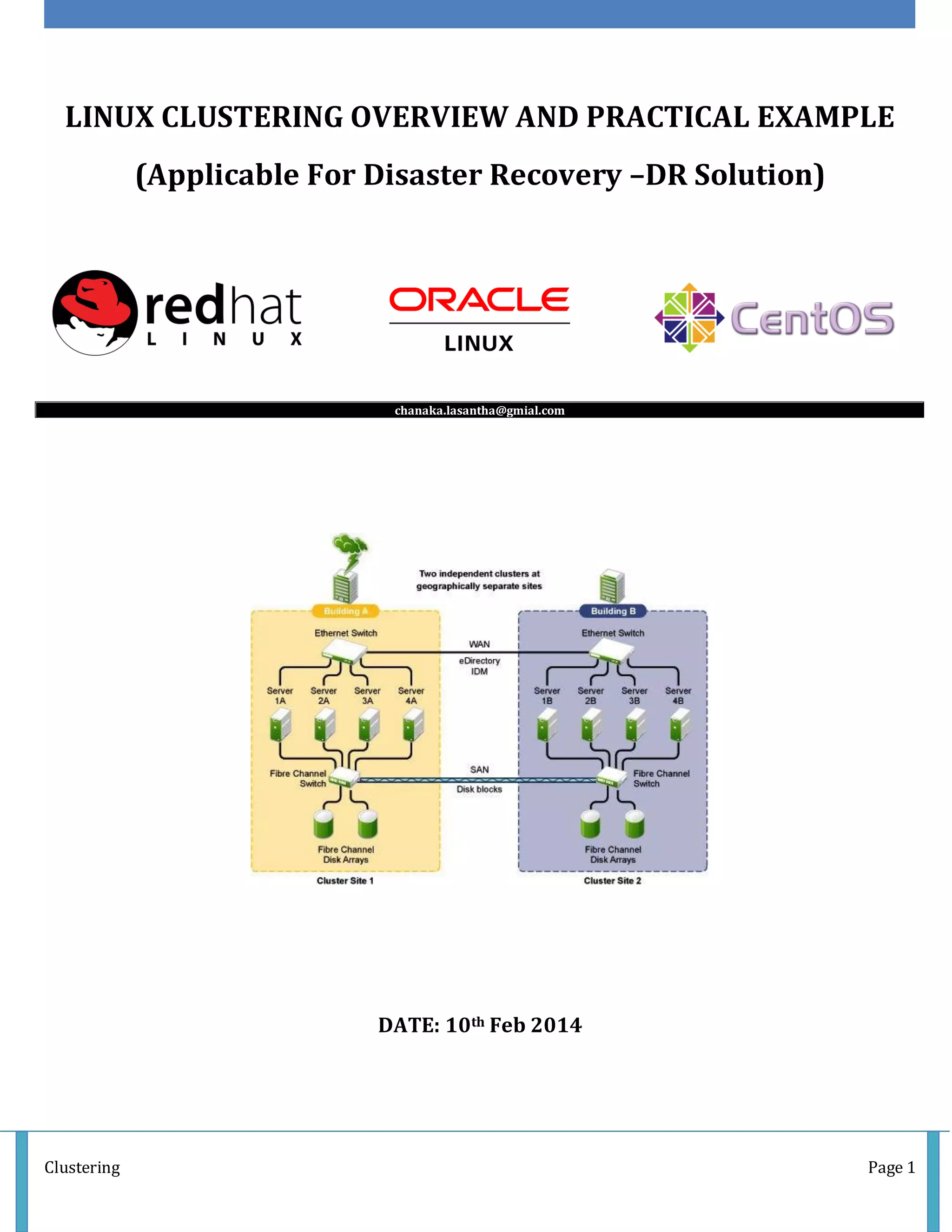

In the following steps we will configure a two-node Linux Cluster. Corosync and Pacemaker also support clusters with more than two nodes.

1. Make sure you have successfully set up DNS resolution and NTP time synchronization for both your Linux Cluster nodes.

2. Add the HA-Clustering repository from openSUSE on both nodes. You will need this repository to install CRM Shell, which is used to manage Pacemaker resources:

vim /etc/yum.repos.d/ha-clustering.repo

[haclustering]

name=HA Clustering

baseurl=http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/

enabled=1

gpgcheck=0

3. Install Corosync, Pacemaker and CRM Shell. Run this command on both Linux Cluster nodes:

/usr/bin/yum install pacemaker corosync crmsh -y

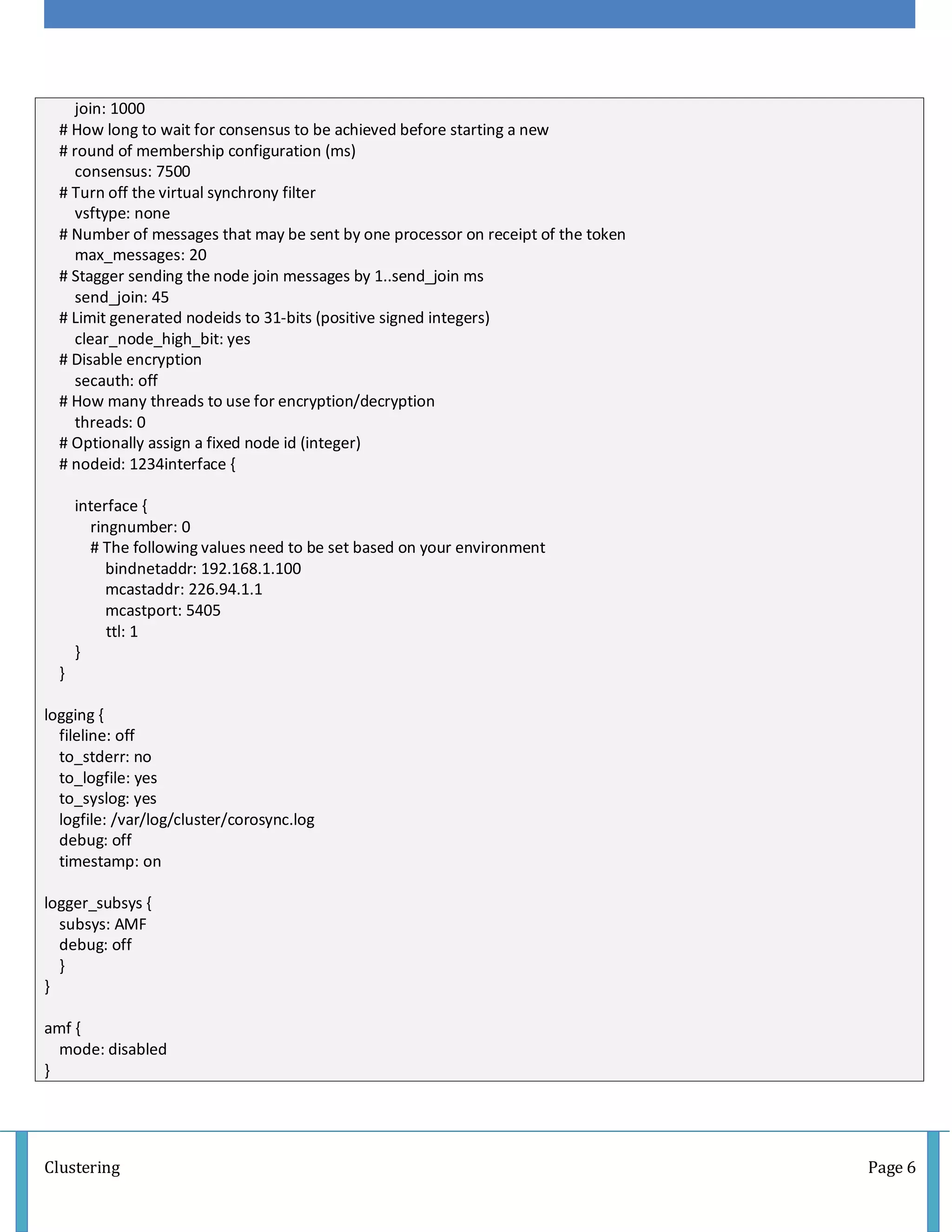

4. Create the Corosync configuration file, which must be located in the “/etc/corosync/” folder. You can copy and paste the following configuration and change “bindnetaddr: 192.168.1.100” to the IP address of your first Linux Cluster node:

vim /etc/corosync/corosync.conf

compatibility: whitetank

aisexec {

# Run as root - this is necessary to be able to manage resources with Pacemaker

user: root

group: root

}

service {

# Load the Pacemaker Cluster Resource Manager

ver: 1

name: pacemaker

use_mgmtd: no

use_logd: no

}

totem {

version: 2

#How long before declaring a token lost (ms)

token: 5000

# How many token retransmits before forming a new configuration

token_retransmits_before_loss_const: 10

# How long to wait for join messages in the membership protocol (ms)](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-5-2048.jpg)

![Clustering Page 7

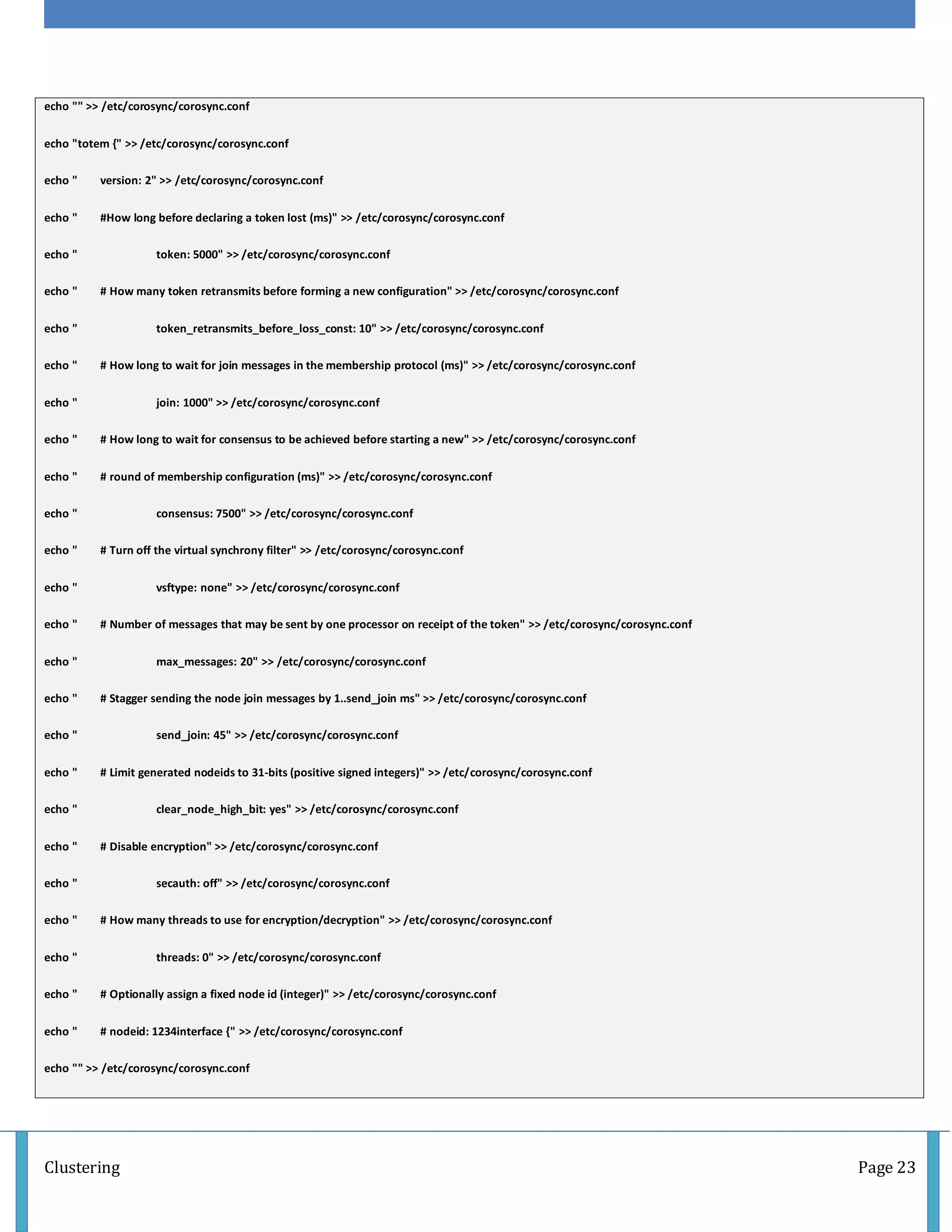

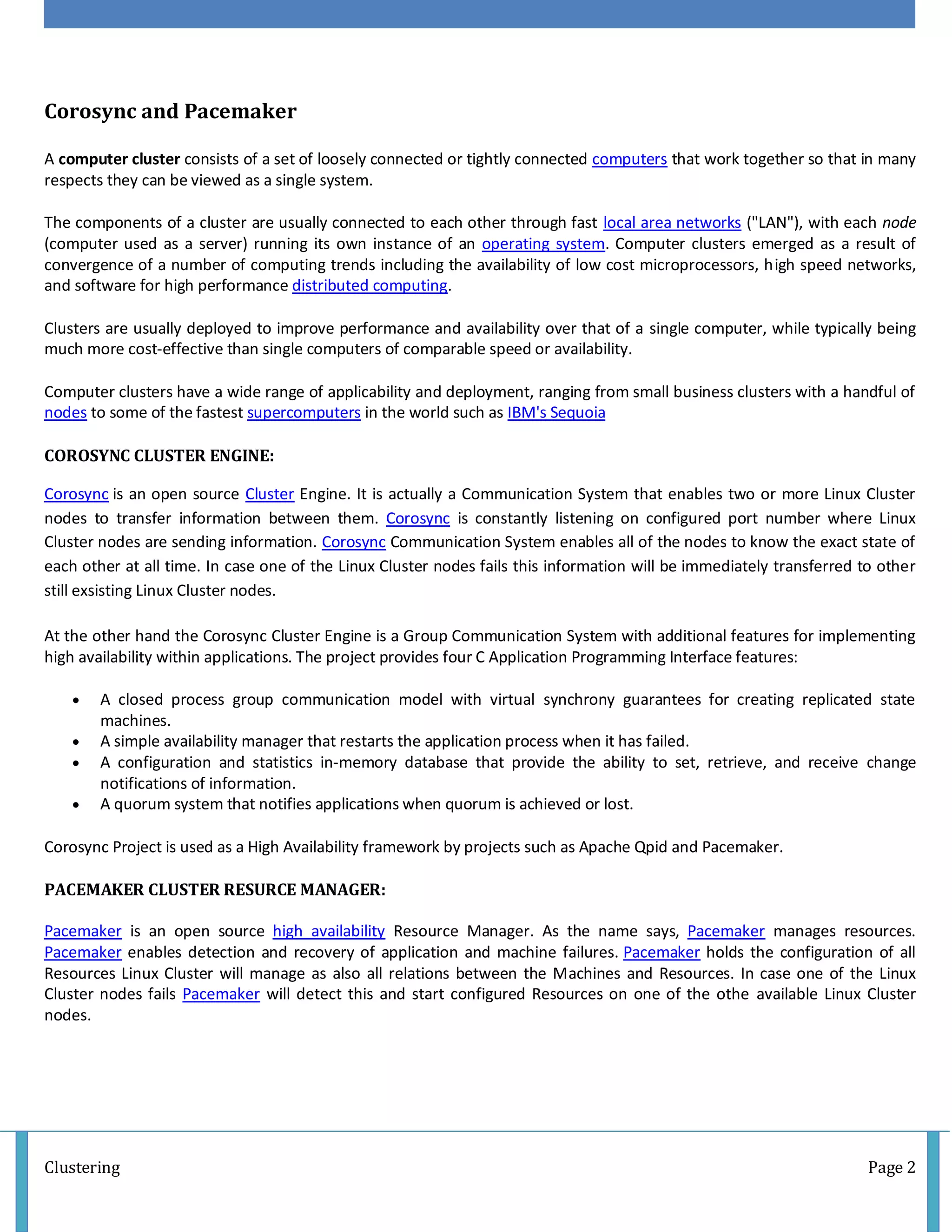

5. Copy the Corosync configuration file to the second Linux Cluster node and change “bindnetaddr: 192.168.1.100” to the IP address of your second Linux Cluster node.

6. Generate the Corosync authentication key by running “corosync-keygen”. This might take some time, because the key is gathered from /dev/random. The key is written to the “/etc/corosync” directory, in a file named “authkey”:

[root@foo1 /]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Press keys on your keyboard to generate entropy (bits = 176).

Press keys on your keyboard to generate entropy (bits = 240).

Press keys on your keyboard to generate entropy (bits = 304).

Press keys on your keyboard to generate entropy (bits = 368).

Press keys on your keyboard to generate entropy (bits = 432).

Press keys on your keyboard to generate entropy (bits = 496).

Press keys on your keyboard to generate entropy (bits = 560).

Press keys on your keyboard to generate entropy (bits = 624).

Press keys on your keyboard to generate entropy (bits = 688).

Press keys on your keyboard to generate entropy (bits = 752).

Press keys on your keyboard to generate entropy (bits = 816).

Press keys on your keyboard to generate entropy (bits = 880).

Press keys on your keyboard to generate entropy (bits = 944).

Press keys on your keyboard to generate entropy (bits = 1008).

Writing corosync key to /etc/corosync/authkey.

7. Transfer the “/etc/corosync/authkey” file to the second Linux Cluster node.

8. Start Corosync service on both nodes:

[root@foo1 /]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@foo2 /]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

9. Start Pacemaker service on both nodes:

[root@foo1 /]# service pacemaker start

Starting Pacemaker Cluster Manager: [ OK ]

[root@foo2 ~]# service pacemaker start

Starting Pacemaker Cluster Manager: [ OK ]](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-7-2048.jpg)
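Step 5 asks for the “bindnetaddr” value to be changed by hand on the second node. The same edit can be done from the shell. This is a minimal sketch, assuming a hypothetical helper name set_bindnetaddr and a configuration file with a single “bindnetaddr:” line:

```shell
#!/bin/sh
# Hypothetical helper (not part of Corosync): rewrite the bindnetaddr value.
# $1 = path to the Corosync configuration file, $2 = new address
set_bindnetaddr() {
    conf=$1
    addr=$2
    tmp="${conf}.tmp.$$"
    # Replace whatever follows "bindnetaddr:" and keep the original indentation.
    # A temporary file is used because "sed -i" is not available everywhere.
    sed "s/^\([[:space:]]*bindnetaddr:\)[[:space:]]*.*/\1 ${addr}/" "$conf" > "$tmp" &&
        mv "$tmp" "$conf"
}
```

On the second node, after the file has been copied over, it could be called as “set_bindnetaddr /etc/corosync/corosync.conf 192.168.1.101”. The address 192.168.1.101 is only a placeholder for the second node's own address.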

![Clustering Page 8

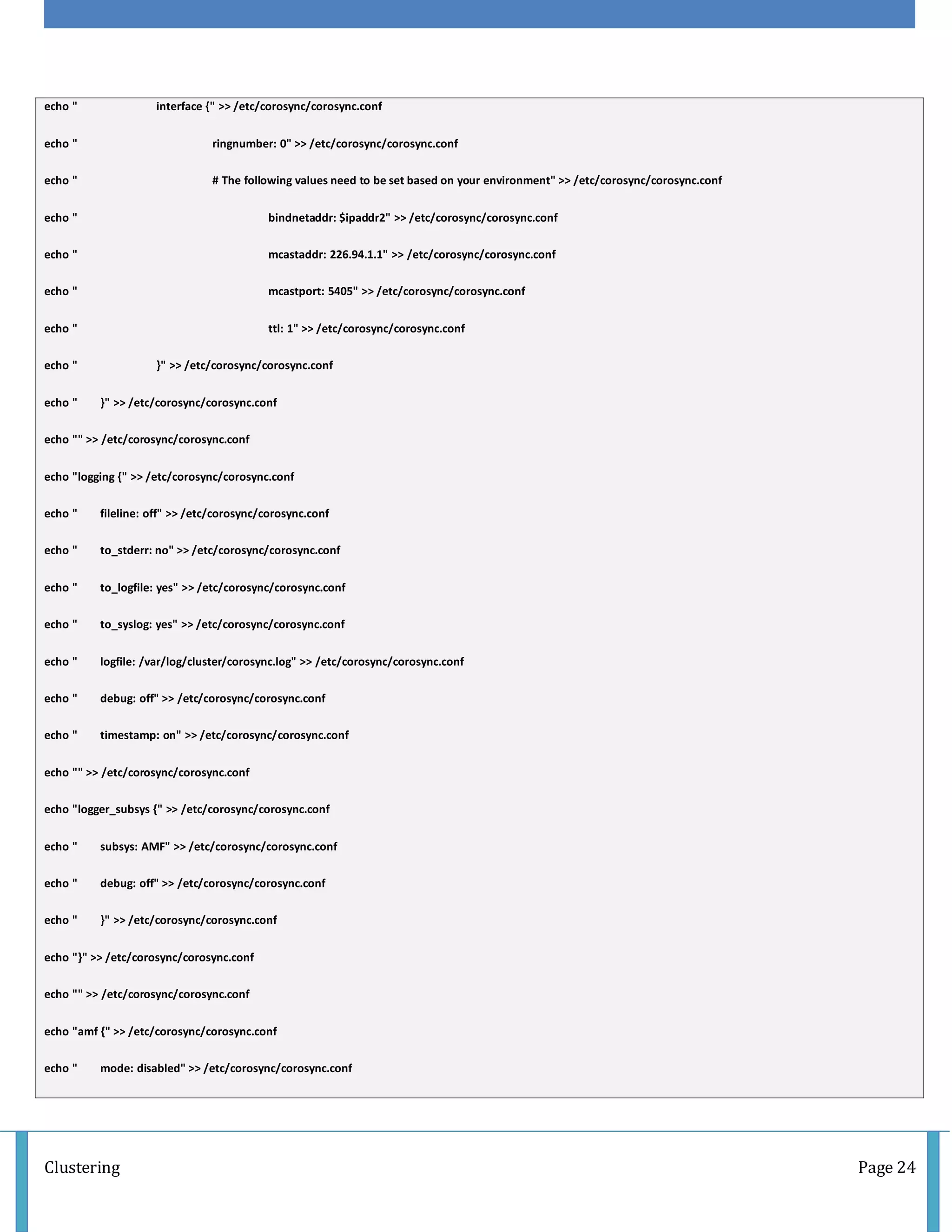

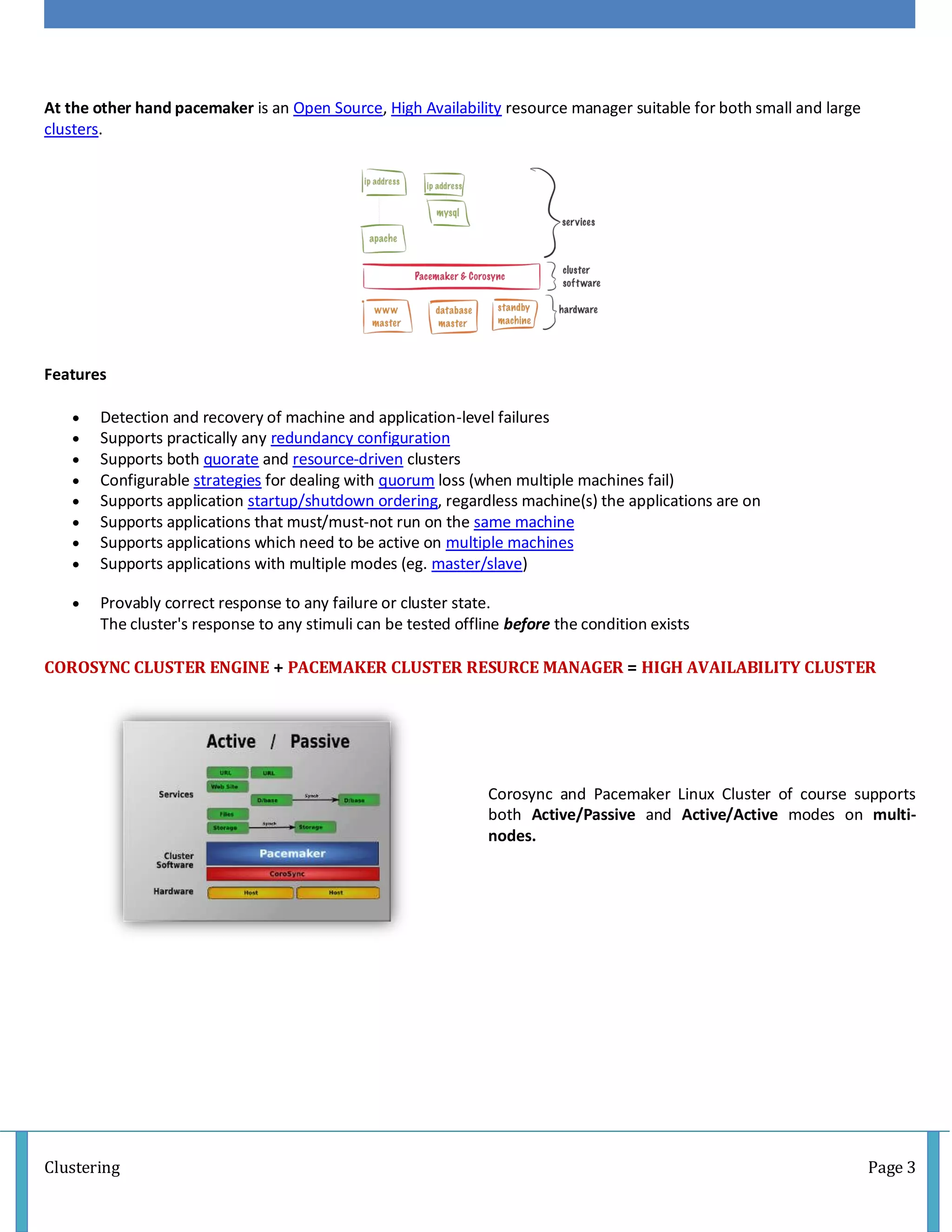

10. After a few seconds you can check your Linux Cluster status with the “crm status” command:

[root@foo1 /]# crm status

Last updated: Thu Sep 19 15:28:49 2013

Last change: Thu Sep 19 15:11:57 2013 via crmd on foo2.geekpeek.net

Stack: classic openais (with plugin)

Current DC: foo1.geekpeek.net - partition with quorum

Version: 1.1.9-2.2-2db99f1

2 Nodes configured, 2 expected votes

0 Resources configured.

Online: [ foo1.geekpeek.net foo2.geekpeek.net ]

As we can see, the status says 2 nodes are configured in this Linux Cluster: foo1.geekpeek.net and foo2.geekpeek.net. Both nodes are online. The current DC (Designated Controller) is foo1.geekpeek.net.
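The “Online:” line of the status output is the quickest way to confirm that both nodes have joined. This small sketch extracts it in a script, assuming the output format shown above. The function names online_nodes and count_online are made up for illustration:

```shell
#!/bin/sh
# Print the node names from the "Online: [ ... ]" line of `crm status`
# (read from standard input), one name per line.
online_nodes() {
    sed -n 's/^Online: \[ *\(.*[^ ]\) *\]$/\1/p' | tr ' ' '\n'
}

# Print how many nodes are listed as online.
count_online() {
    online_nodes | grep -c .
}
```

On a cluster node this could be used as “crm status | count_online” and compared against the expected number of nodes.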

The next step is to configure Pacemaker resources (applications, IP addresses, mount points) in the cluster.

Adding and Deleting Cluster Resources

1. CRM Shell

CRM Shell is a command line interface to configure and manage Pacemaker. It should be installed on all your nodes; you can install it from the HA-Clustering repository. Add the following lines to the “/etc/yum.repos.d/ha-clustering.repo” file:

[haclustering]

name=HA Clustering

baseurl=http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/

enabled=1

gpgcheck=0

Once installed, we can run the “crm” command from the Linux command line and manage our Pacemaker instance. Below is an example of running the “crm help” command. For help on a specific “crm” subcommand, run for example “crm cib help”:

[root@foo1 ~]# crm help

This is crm shell, a Pacemaker command line interface.

Available commands:

cib manage shadow CIBs

resource resources management

configure CRM cluster configuration

node nodes management

options user preferences

history CRM cluster history](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-8-2048.jpg)

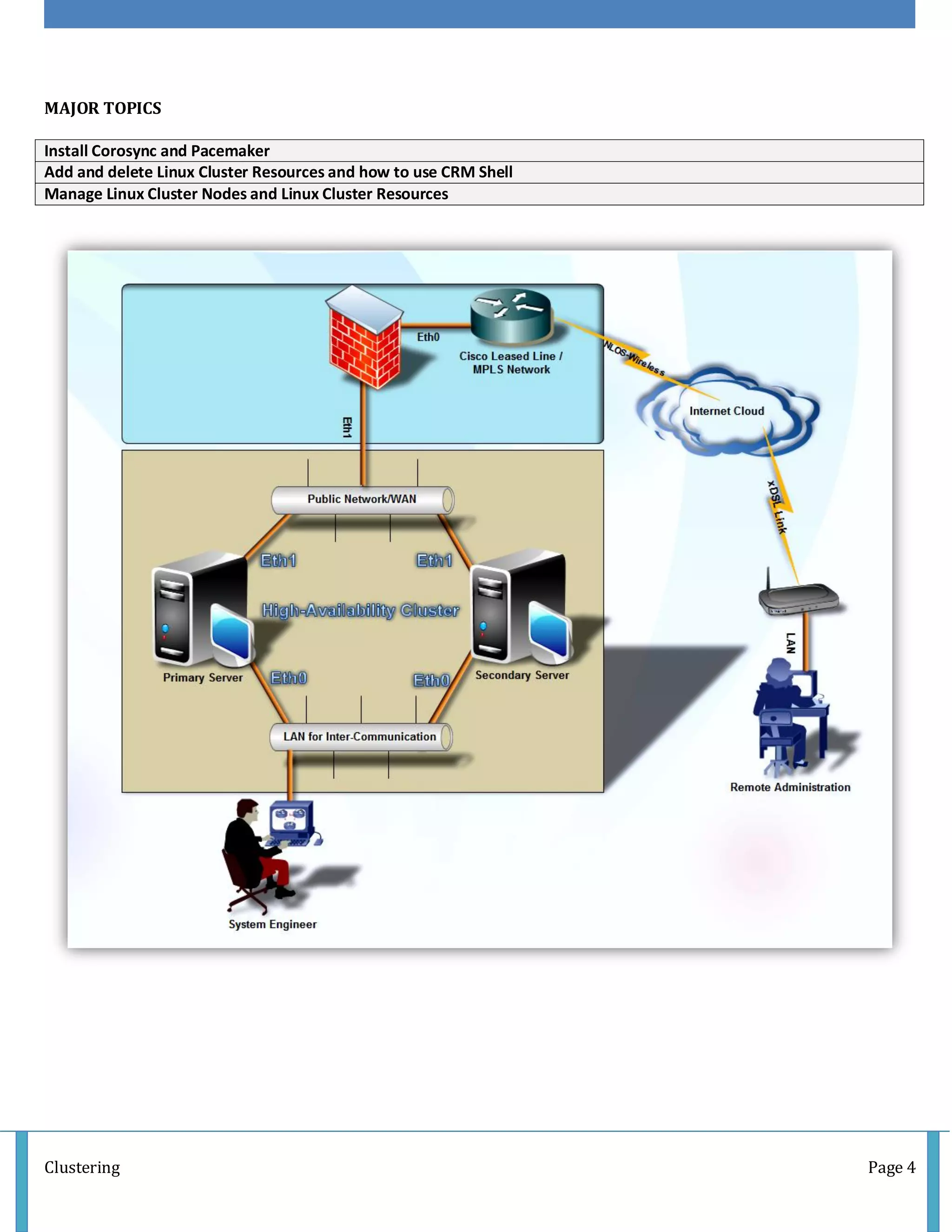

![Clustering Page 9

site Geo-cluster support

ra resource agents information center

status show cluster status

help,? show help (help topics for list of topics)

end,cd,up go back one level

quit,bye,exit exit the program

View Linux Cluster Status

[root@foo1 ~]# crm status

Last updated: Mon Oct 7 13:41:11 2013

Last change: Mon Oct 7 13:41:08 2013 via crm_attribute on foo1.geekpeek.net

Stack: classic openais (with plugin)

Current DC: foo1.geekpeek.net - partition with quorum

Version: 1.1.9-2.6-2db99f1

2 Nodes configured, 2 expected votes

0 Resources configured.

Online: [ foo1.geekpeek.net foo2.geekpeek.net ]

View Linux Cluster Configuration

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

Adding Cluster Resources

Every cluster resource is defined by a Resource Agent. Resource Agents must be able to report the complete status and availability of their resource to the Linux Cluster at any time. The most important and most used Resource Agent classes are:

LSB (Linux Standard Base) – These are the common init scripts found in the /etc/init.d directory, used as cluster resource agents.

OCF (Open Cluster Framework) – These are extended LSB-style resource agents that usually support additional parameters.

From this we can presume it is better to use OCF Resource Agents (if available) over LSB ones, since OCF agents support additional configuration parameters and are designed for cluster resources.](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-9-2048.jpg)
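Resource Agents are referred to later in this guide by names such as ocf:heartbeat:IPaddr2, where the first field is the class, the middle field is the provider and the last field is the agent type. The sketch below splits such a name into its parts. The function names are illustrative only, and “lsb:httpd” is used purely as an example of a name without a provider:

```shell
#!/bin/sh
# Split a resource agent name such as "ocf:heartbeat:IPaddr2" or "lsb:httpd".

# Class: everything before the first colon.
ra_class() { printf '%s\n' "${1%%:*}"; }

# Type: everything after the last colon.
ra_type() { printf '%s\n' "${1##*:}"; }

# Provider: the middle field, empty when the name has only two fields.
ra_provider() {
    rest=${1#*:}                              # drop the class
    case $rest in
        *:*) printf '%s\n' "${rest%%:*}" ;;   # three fields: class:provider:type
        *)   printf '\n' ;;                   # two fields: class:type
    esac
}
```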

![Clustering Page 10

We can check for available Resource Agents by running “crm ra list” followed by the desired Resource Agent class:

[root@foo1 ~]# crm ra list lsb

auditd blk-availability corosync corosync-notifyd crond halt ip6tables iptables iscsi iscsid

killall logd lvm2-lvmetad lvm2-monitor mdmonitor multipathd netconsole netfs network nfs

nfslock pacemaker postfix quota_nld rdisc restorecond rpcbind rpcgssd rpcidmapd rpcsvcgssd

rsyslog sandbox saslauthd single sshd udev-post winbind

[root@foo1 ~]# crm ra list ocf

ASEHAagent.sh AoEtarget AudibleAlarm CTDB ClusterMon Delay Dummy EvmsSCC Evmsd

Filesystem HealthCPU HealthSMART ICP IPaddr IPaddr2 IPsrcaddr IPv6addr LVM

LinuxSCSI MailTo ManageRAID ManageVE NodeUtilization Pure-FTPd Raid1 Route SAPDatabase

SAPInstance SendArp ServeRAID SphinxSearchDaemon Squid Stateful SysInfo SystemHealth VIPArip

VirtualDomain WAS WAS6 WinPopup Xen Xinetd anything apache apache.sh

asterisk clusterfs.sh conntrackd controld db2 dhcpd drbd drbd.sh eDir88

ethmonitor exportfs fio fs.sh iSCSILogicalUnit iSCSITarget ids ip.sh iscsi

jboss ldirectord lvm.sh lvm_by_lv.sh lvm_by_vg.sh lxc mysql mysql-proxy mysql.sh

named named.sh netfs.sh nfsclient.sh nfsexport.sh nfsserver nfsserver.sh nginx o2cb

ocf-shellfuncs openldap.sh oracle oracledb.sh orainstance.sh oralistener.sh oralsnr pgsql ping

pingd portblock postfix postgres-8.sh pound proftpd remote rsyncd rsyslog

samba.sh script.sh scsi2reservation service.sh sfex slapd smb.sh svclib_nfslock symlink

syslog-ng tomcat tomcat-5.sh tomcat-6.sh varnish vm.sh vmware zabbixserver

We configure cluster resources with the “crm configure primitive” command followed by a Resource Name, a Resource Agent and additional parameters (example):

crm configure primitive resourcename resourceagent parameters

We can see help and the additional Resource Agent parameters by running the “crm ra meta” command followed by a Resource Agent name (example):

[root@foo1 ~]# crm ra meta IPaddr2](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-10-2048.jpg)

![Clustering Page 11

Before we start adding Resources to our Cluster we need to disable STONITH (Shoot The Other Node In The Head), since we are not using it in our configuration:

[root@foo1 ~]# crm configure property stonith-enabled=false

We can check the Linux Cluster configuration by running “crm configure show” command:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

… to confirm STONITH was disabled.

Adding IP Address Resource

Let’s add an IP address resource to our Linux Cluster. The information we need to configure the IP address is:

Cluster Resource Name: ClusterIP

Resource Agent: ocf:heartbeat:IPaddr2 (get this info with “crm ra meta IPaddr2”)

IP address: 192.168.1.150

Netmask: 24

Monitor interval: 30 seconds (get this info with “crm ra meta IPaddr2”)

Run the following command on a Linux Cluster node to configure ClusterIP resource:

[root@foo1 ~]# crm configure primitive ClusterIP ocf:heartbeat:IPaddr2 params ip=192.168.1.150 cidr_netmask="24" op monitor interval="30s"
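When the same resource has to be created on several clusters, it can help to build the command from variables and check the netmask first. The sketch below only prints the command, so it can be reviewed before being run. The helper name ipaddr2_primitive_cmd is hypothetical and not part of CRM Shell:

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the crm command for an IPaddr2 resource.
# $1 = resource name, $2 = IP address, $3 = CIDR netmask, $4 = monitor interval
ipaddr2_primitive_cmd() {
    name=$1 ip=$2 mask=$3 interval=$4
    # Reject an empty or non-numeric netmask before comparing it.
    case $mask in
        ''|*[!0-9]*) echo "netmask must be a number" >&2; return 1 ;;
    esac
    if [ "$mask" -gt 32 ]; then
        echo "netmask must be between 0 and 32" >&2
        return 1
    fi
    printf 'crm configure primitive %s ocf:heartbeat:IPaddr2 params ip=%s cidr_netmask="%s" op monitor interval="%s"\n' \
        "$name" "$ip" "$mask" "$interval"
}
```

Calling “ipaddr2_primitive_cmd ClusterIP 192.168.1.150 24 30s” prints the same command that is used above.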

Check Cluster Configuration with:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-11-2048.jpg)

![Clustering Page 12

stonith-enabled="false"

last-lrm-refresh="1381240623"

Check Cluster Status with:

[root@foo1 ~]# crm status

Last updated: Tue Oct 8 15:59:19 2013

Last change: Tue Oct 8 15:58:11 2013 via cibadmin on foo1.geekpeek.net

Stack: classic openais (with plugin)

Current DC: foo1.geekpeek.net - partition with quorum

Version: 1.1.9-2.6-2db99f1

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ foo1.geekpeek.net foo2.geekpeek.net ]

ClusterIP (ocf::heartbeat:IPaddr2): Started foo1.geekpeek.net

As we can see, a new resource called ClusterIP is configured in the Cluster and started on the foo1.geekpeek.net node.

Adding Apache (httpd) Resource

The next resource is an Apache Web Server. Before configuring the Apache cluster resource, the httpd package must be installed and configured on both nodes. The information we need to configure the Apache Web Server is:

Cluster Resource Name: Apache

Resource Agent: ocf:heartbeat:apache (get this info with “crm ra meta apache”)

Configuration file location: /etc/httpd/conf/httpd.conf

Monitor interval: 30 seconds (get this info with “crm ra meta apache”)

Start timeout: 40 seconds (get this info with “crm ra meta apache”)

Stop timeout: 60 seconds (get this info with “crm ra meta apache”)

Run the following command on a Linux Cluster node to configure Apache resource:

[root@foo1 ~]# crm configure primitive Apache ocf:heartbeat:apache params configfile=/etc/httpd/conf/httpd.conf op monitor interval="30s" op start timeout="40s" op stop timeout="60s"

Check Cluster Configuration with:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

meta target-role="Started"](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-12-2048.jpg)

![Clustering Page 13

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1381240623"

Check Cluster Status with:

[root@foo1 ~]# crm status

Last updated: Thu Oct 10 11:13:59 2013

Last change: Thu Oct 10 11:07:38 2013 via cibadmin on foo1.geekpeek.net

Stack: classic openais (with plugin)

Current DC: foo1.geekpeek.net - partition with quorum

Version: 1.1.9-2.6-2db99f1

2 Nodes configured, 2 expected votes

2 Resources configured.

Online: [ foo1.geekpeek.net foo2.geekpeek.net ]

ClusterIP (ocf::heartbeat:IPaddr2): Started foo1.geekpeek.net

Apache (ocf::heartbeat:apache): Started foo2.geekpeek.net

As we can see, both Cluster Resources (Apache and ClusterIP) are configured and started: ClusterIP is started on the foo1.geekpeek.net node and Apache on the foo2.geekpeek.net node.

Apache and ClusterIP are at the moment running on different Cluster nodes, but we will fix this later by setting Resource Constraints such as colocation (which resources run together) and order (the order in which resources start and stop).

Deleting Cluster Resources

We can delete configured Cluster Resources with the “crm configure delete” command followed by the name of the Resource we want to delete (example):

crm configure delete resourcename

We must always stop the Cluster Resource prior to deleting it!

We can stop the Resource by running the “crm resource stop” command followed by the name of the Resource we want to stop.

We can check the Linux Cluster configuration by running the “crm configure show” command to see whether the Cluster Resource was successfully removed from the Cluster Configuration.
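The stop-then-delete sequence can be wrapped in one function so the stop is never forgotten. This is a minimal sketch: the function name stop_and_delete and the CRM variable are assumptions made for illustration. It does not wait for the resource to finish stopping, so a real script may need a short pause between the two commands:

```shell
#!/bin/sh
# CRM can be overridden for a dry run, e.g. CRM="echo crm" only prints the commands.
CRM=${CRM:-crm}

# Stop a resource, then delete it from the cluster configuration.
# $1 = resource name
stop_and_delete() {
    res=$1
    if [ -z "$res" ]; then
        echo "usage: stop_and_delete resourcename" >&2
        return 1
    fi
    # $CRM is left unquoted on purpose so that "echo crm" splits into two words.
    $CRM resource stop "$res" || return 1
    $CRM configure delete "$res"
}
```

With CRM="echo crm" set beforehand, “stop_and_delete Apache” prints the two commands instead of running them, which is a cheap way to review what would happen.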

Deleting Apache (httpd) Resource](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-13-2048.jpg)

![Clustering Page 14

Let’s stop and delete our Apache Cluster Resource configured in the steps above:

[root@foo1 ~]# crm resource stop Apache

[root@foo1 ~]# crm configure delete Apache

Check Cluster Configuration with:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1381240623"

… to confirm Apache resource was deleted from Cluster Configuration.

Deleting IP Address Resource

Next let’s stop and delete ClusterIP Resource:

[root@foo1 ~]# crm resource stop ClusterIP

[root@foo1 ~]# crm configure delete ClusterIP

Check Cluster Configuration with:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

property $id="cib-bootstrap-options"

dc-version="1.1.9-2.6-2db99f1"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1381240623"

… to confirm the ClusterIP Resource was deleted from our Cluster Configuration.

For the following sections, the ClusterIP and Apache resources are configured again on nodes foo1.geekpeek.net and foo2.geekpeek.net.](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-14-2048.jpg)

![Clustering Page 15

1. Cluster Node Management

CRM Shell is also used for Linux Cluster node management using “crm node” commands.

The following examples cover the basic Linux Cluster node management commands I usually use. Additional help is available by executing the “crm node help” command. Take note that all changes made with “crm node” commands are saved as Linux Cluster node attributes; to remove one we must run “crm node attribute nodename delete attribute”.

List Cluster Nodes – Lists the Linux Cluster nodes – “crm node list“

[root@foo1 ~]# crm node list

foo1.geekpeek.net: normal

foo2.geekpeek.net: normal

Maintenance Mode – Puts Linux Cluster node in maintenance mode – “crm node maintenance nodename“

[root@foo1 ~]# crm node maintenance foo1.geekpeek.net

[root@foo1 ~]# crm node status

<nodes>

<node id="foo1.geekpeek.net" uname="foo1.geekpeek.net">

<instance_attributes id="nodes-foo1.geekpeek.net">

<nvpair id="nodes-foo1.geekpeek.net-maintenance" name="maintenance" value="on"/>

</instance_attributes>

</node>

<node id="foo2.geekpeek.net" uname="foo2.geekpeek.net"/>

</nodes>

Once we put a Linux Cluster node into Maintenance Mode we need to run “crm node ready nodename” to get it back online!

Ready Mode – Returns Linux Cluster node from maintenance mode – “crm node ready nodename“

[root@foo1 ~]# crm node ready foo1.geekpeek.net

[root@foo1 ~]# crm node status

<nodes>

<node id="foo1.geekpeek.net" uname="foo1.geekpeek.net">

<instance_attributes id="nodes-foo1.geekpeek.net">

<nvpair id="nodes-foo1.geekpeek.net-maintenance" name="maintenance" value="off"/>

</instance_attributes>

</node>

<node id="foo2.geekpeek.net" uname="foo2.geekpeek.net"/>

</nodes>](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-15-2048.jpg)

![Clustering Page 16

Show/Delete/Set Node Attribute – Shows/Deletes/Sets the desired attribute on a Linux Cluster node – “crm node attribute nodename show/delete/set attribute”

[root@foo1 ~]# crm node attribute foo1.geekpeek.net delete maintenance

Deleted nodes attribute: id=nodes-foo1.geekpeek.net-maintenance name=maintenance

[root@foo1 ~]# crm node status

<nodes>

<node id="foo1.geekpeek.net" uname="foo1.geekpeek.net">

<instance_attributes id="nodes-foo1.geekpeek.net"/>

</node>

<node id="foo2.geekpeek.net" uname="foo2.geekpeek.net"/>

</nodes>

Standby Mode – Puts the Linux Cluster node into a Standby mode – “crm node standby nodename“

[root@foo1 ~]# crm node standby foo1.geekpeek.net

[root@foo1 ~]# crm node status

<nodes>

<node id="foo1.geekpeek.net" uname="foo1.geekpeek.net">

<instance_attributes id="nodes-foo1.geekpeek.net">

<nvpair id="nodes-foo1.geekpeek.net-standby" name="standby" value="on"/>

</instance_attributes>

</node>

<node id="foo2.geekpeek.net" uname="foo2.geekpeek.net"/>

</nodes>

Online Mode – Returns Linux Cluster node to Online mode from Standby – “crm node online nodename“

[root@foo1 ~]# crm node online foo1.geekpeek.net

[root@foo1 ~]# crm node status

<nodes>

<node id="foo1.geekpeek.net" uname="foo1.geekpeek.net">

<instance_attributes id="nodes-foo1.geekpeek.net">

<nvpair id="nodes-foo1.geekpeek.net-standby" name="standby" value="off"/>

</instance_attributes>

</node>

<node id="foo2.geekpeek.net" uname="foo2.geekpeek.net"/>

</nodes>
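Because “crm node status” prints XML, a script can pick out which nodes currently have an attribute such as “standby” or “maintenance” switched on. The sketch below relies on the nvpair id format seen in the outputs above (nodes-nodename-attribute). The function name nodes_with_attr_on is hypothetical:

```shell
#!/bin/sh
# Print the nodes whose attribute $1 has value "on".
# Reads `crm node status` XML from standard input.
# Relies on the nvpair id format nodes-<nodename>-<attribute>.
nodes_with_attr_on() {
    attr=$1
    sed -n "s/.*<nvpair id=\"nodes-\(.*\)-${attr}\" name=\"${attr}\" value=\"on\".*/\1/p"
}
```

For example, “crm node status | nodes_with_attr_on standby” would list the nodes that are in Standby mode.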

2. Cluster Resource Management

CRM Shell is used for Linux Cluster resource management. We can use “crm configure” with the “group, order, location, colocation, …” subcommands and “crm resource” with “start, stop, status, migrate, cleanup, …”.

The following examples cover the basic Linux Cluster resource management commands you might find useful. Additional help is available by executing the “crm configure help” or “crm resource help” command.](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-16-2048.jpg)

![Clustering Page 17

Our current Linux Cluster resource configuration is:

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

property $id="cib-bootstrap-options"

dc-version="1.1.10-1.el6_4.4-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1383902488"

Group Linux Cluster Resources – “crm configure group groupname resource1 resource2”

Group your Linux Cluster resources and start/stop and manage your resource group with one single command.

[root@foo1 ~]# crm configure group HTTP-GROUP ClusterIP Apache

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

group HTTP-GROUP ClusterIP Apache

property $id="cib-bootstrap-options"

dc-version="1.1.10-1.el6_4.4-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1383902488"](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-17-2048.jpg)

![Clustering Page 18

In this example we created a resource group called HTTP-GROUP with the ClusterIP and Apache resources. We can now manage all our grouped resources by starting, stopping and managing the HTTP-GROUP resource group.

Linux Cluster Resources Start/Stop Order – “crm configure order ordername inf: resource1 resource2:start”

With this command we can configure the start and stop order of our Linux Cluster resources.

[root@foo1 ~]# crm configure order ClusterIP-before-Apache inf: ClusterIP Apache:start

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

order ClusterIP-before-Apache inf: ClusterIP Apache:start

property $id="cib-bootstrap-options"

dc-version="1.1.10-1.el6_4.4-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1383902488"

In this example we configured the start and stop order of our ClusterIP and Apache resources. As configured, the ClusterIP resource starts first and only then can the Apache resource be started. When stopping, the Apache resource is stopped first and only then can the ClusterIP resource be stopped.

Linux Cluster Resources Colocation – “crm configure colocation colocationname inf: resource1 resource2”

We can configure colocation of Linux Cluster resources. Colocating resources makes sure they always run on the same node.

[root@foo1 ~]# crm configure colocation IP-with-APACHE inf: ClusterIP Apache

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-18-2048.jpg)

![Clustering Page 19

group HTTP-GROUP ClusterIP Apache

colocation IP-with-APACHE inf: ClusterIP Apache

order ClusterIP-before-Apache inf: ClusterIP Apache:start

property $id="cib-bootstrap-options"

dc-version="1.1.10-1.el6_4.4-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1384349363"

In this example, we configured colocation for the ClusterIP and Apache resources. ClusterIP and Apache will always be started and running together, on the same Linux Cluster node.

Linux Cluster Resources Preferred Location – “crm configure location locationname resource score: clusternode”

We can configure a preferred location for our Linux Cluster resources or resource groups. We must always set the location score: positive values indicate the resource should run on this node, negative values indicate the resource should not run on this node.

[root@foo1 ~]# crm configure location HTTP-GROUP-prefer-FOO1 HTTP-GROUP 50: foo1.geekpeek.net

[root@foo1 ~]# crm configure show

node foo1.geekpeek.net

node foo2.geekpeek.net

primitive Apache ocf:heartbeat:apache

params configfile="/etc/httpd/conf/httpd.conf"

op monitor interval="30s"

op start timeout="40s" interval="0"

op stop timeout="60s" interval="0"

primitive ClusterIP ocf:heartbeat:IPaddr2

params ip="192.168.1.150" cidr_netmask="24"

op monitor interval="30s"

group HTTP-GROUP ClusterIP Apache

location HTTP-GROUP-prefer-FOO1 HTTP-GROUP 50: foo1.geekpeek.net

colocation IP-with-APACHE inf: ClusterIP Apache

order ClusterIP-before-Apache inf: ClusterIP Apache:start

property $id="cib-bootstrap-options"

dc-version="1.1.10-1.el6_4.4-368c726"

cluster-infrastructure="classic openais (with plugin)"

expected-quorum-votes="2"

stonith-enabled="false"

last-lrm-refresh="1384349363"](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-19-2048.jpg)

![Clustering Page 20

In this example we configured the preferred location of the HTTP-GROUP resource group. With a score of 50, HTTP-GROUP prefers to run on the foo1.geekpeek.net node, but if foo1 fails it will still move to foo2.geekpeek.net. When foo1 recovers, HTTP-GROUP will move back to the preferred foo1.geekpeek.net.
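The failover and failback behaviour described above can be pictured with a toy model: among the nodes that are online, the one with the highest location score wins. The sketch below is only that simplified picture, not Pacemaker's actual placement logic. It handles numeric scores only and ignores other factors such as resource stickiness. The function name preferred_node is made up for illustration:

```shell
#!/bin/sh
# Toy model only: pick the online node with the highest location score.
# $1 = space-separated "node=score" pairs, $2 = space-separated online nodes
preferred_node() {
    best_node=
    best_score=
    for pair in $1; do
        node=${pair%%=*}
        score=${pair#*=}
        # Skip nodes that are not currently online.
        case " $2 " in
            *" $node "*) ;;
            *) continue ;;
        esac
        if [ -z "$best_node" ] || [ "$score" -gt "$best_score" ]; then
            best_node=$node
            best_score=$score
        fi
    done
    printf '%s\n' "$best_node"
}
```

With scores “foo1.geekpeek.net=50 foo2.geekpeek.net=0”, the model picks foo1 while both nodes are online, foo2 while only foo2 is online, and foo1 again once it returns.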

Checking the status of our Linux Cluster Nodes and Resources:

[root@foo1 ~]# crm status

Last updated: Wed Nov 13 15:30:45 2013

Last change: Wed Nov 13 15:01:06 2013 via cibadmin on foo1.geekpeek.net

Stack: classic openais (with plugin)

Current DC: foo2.geekpeek.net - partition with quorum

Version: 1.1.10-1.el6_4.4-368c726

2 Nodes configured, 2 expected votes

2 Resources configured

Online: [ foo1.geekpeek.net foo2.geekpeek.net ]

Resource Group: HTTP-GROUP

ClusterIP (ocf::heartbeat:IPaddr2): Started foo1.geekpeek.net

Apache (ocf::heartbeat:apache): Started foo1.geekpeek.net

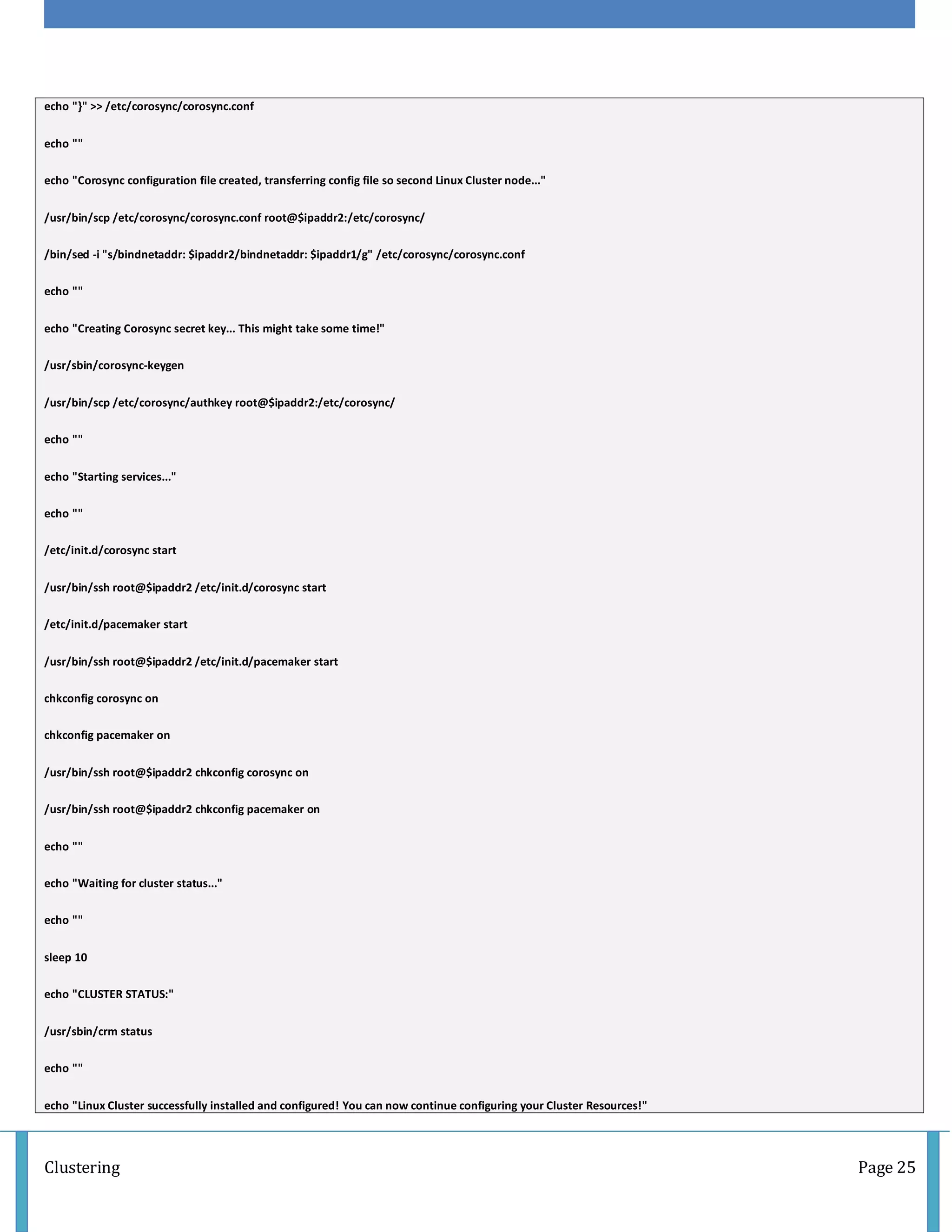

All in One with Script:

#!/bin/sh

#

# linux-cluster-install-v01.sh (19 September 2013)

# GeekPeek.Net scripts - Install and Configure Linux Cluster on CentOS 6

#

# INFO: This script was tested on CentOS 6.4 minimal installation. Script first configures SSH Key Authentication between nodes.

# The script installs Corosync, Pacemaker and CRM Shell for Pacemaker management packages on both nodes. It creates default

# Corosync configuration file and Authentication Key and transfers both to the second node. It configures Corosync and Pacemaker

# to start at boot and starts the services on both nodes. It shows the current status of the Linux Cluster.

#

# CODE:

echo "Root SSH must be permitted on the second Linux Cluster node. DNS resolution for Linux Cluster nodes must be properly configured!"

echo "NTP synchronization for both Linux Cluster nodes must also be configured!"

echo ""](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-20-2048.jpg)

![Clustering Page 21

echo "Are all of the above conditions satisfied? (y/n)"

read rootssh

case $rootssh in

y)

echo "Please enter the IP address of the first Linux Cluster node."

read ipaddr1

echo "Please enter the IP address of the second Linux Cluster node."

read ipaddr2

echo ""

echo "Generating SSH key..."

/usr/bin/ssh-keygen

echo ""

echo "Copying SSH key to the second Linux Cluster node..."

echo "Please enter the root password for the second Linux Cluster node."

/usr/bin/ssh-copy-id root@$ipaddr2

echo ""

echo "SSH Key Authentication successfully set up ... continuing Linux Cluster package installation..."

;;

n)

echo "Root access must be enabled on the second machine...exiting!"

exit 1

;;

*)

echo "Unknown choice ... exiting!"

exit 2

esac

echo "[haclustering]" >> /etc/yum.repos.d/ha-clustering.repo](https://image.slidesharecdn.com/linuxclusteringsolution-170110091329/75/Linux-clustering-solution-21-2048.jpg)
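A repository file like the one from step 2 can also be written in one go with a heredoc instead of line by line. This is a separate minimal sketch, not the remainder of the script above. The helper name write_ha_repo is hypothetical, and the file contents are copied from step 2:

```shell
#!/bin/sh
# Hypothetical helper: write the HA-Clustering repository definition to the file $1.
write_ha_repo() {
    # ">" overwrites the file, so running the helper twice does not duplicate lines.
    cat > "$1" <<'EOF'
[haclustering]
name=HA Clustering
baseurl=http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/
enabled=1
gpgcheck=0
EOF
}
```

It would be called as “write_ha_repo /etc/yum.repos.d/ha-clustering.repo” on each node. Overwriting rather than appending keeps the file correct when the script is run more than once.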