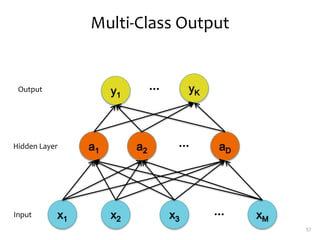

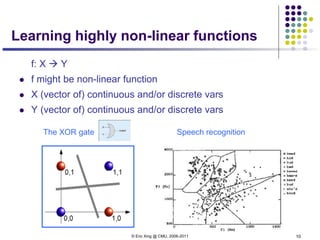

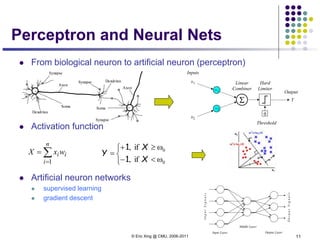

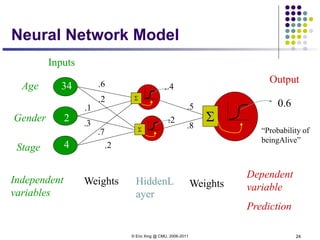

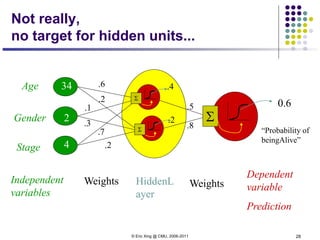

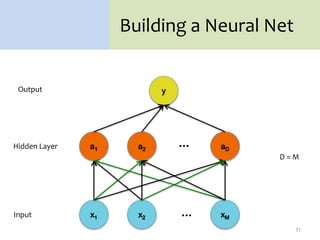

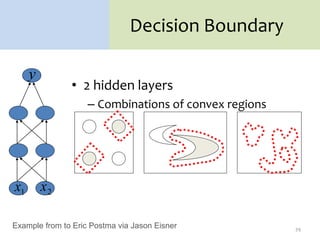

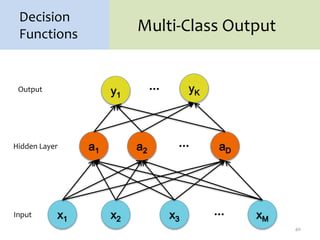

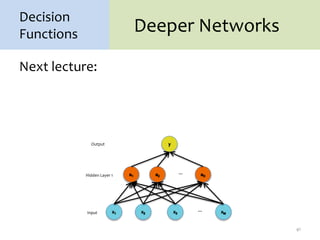

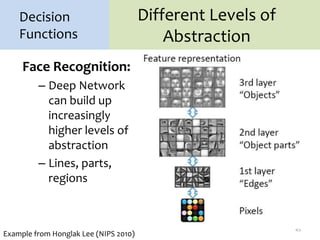

1. Neural networks are a type of machine learning model that can learn highly non-linear functions mapping inputs to outputs. They consist of interconnected layers of nodes, each loosely modeled on a biological neuron.
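To make the idea of layered nodes concrete, here is a minimal sketch of a forward pass in plain Python. The names `dense` and `forward`, the 2-2-1 layout, and the hand-picked weights are all assumptions chosen for illustration; they are not taken from the slides. Each node computes a weighted sum of its inputs plus a bias and passes the result through a non-linearity, which is what lets the stacked layers represent XOR, a function no single linear layer can express.

```python
import math


def sigmoid(z):
    """Logistic function, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer.

    Each node takes a weighted sum of all inputs, adds its bias,
    and applies the activation function.
    """
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed the input through every layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical hand-picked weights: the hidden nodes approximate OR and NAND,
# and the output node approximates AND of the two, which together give XOR.
layers = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0], sigmoid),
    ([[20.0, 20.0]], [-30.0], sigmoid),
]

if __name__ == "__main__":
    for a in (0.0, 1.0):
        for b in (0.0, 1.0):
            print(a, b, round(forward([a, b], layers)[0]))
```

In practice the weights are learned from data rather than set by hand; the fixed values here only show how non-linear behavior arises from composing simple nodes.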

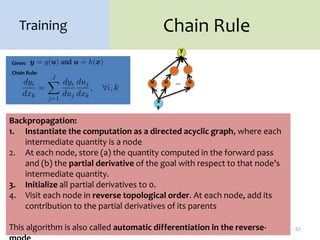

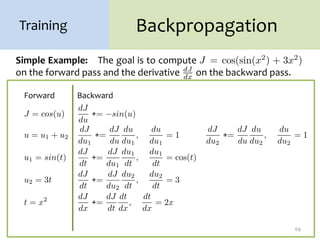

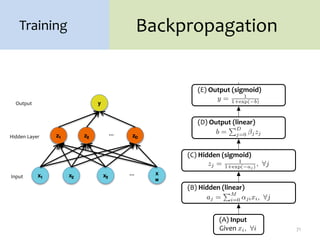

2. Backpropagation is an algorithm that allows neural networks to be trained using gradient descent by efficiently computing the gradient of the loss function with respect to the network parameters. It works by propagating gradients from the output layer back through the network using the chain rule.
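The chain-rule bookkeeping can be sketched on a deliberately tiny network: one input, one sigmoid hidden node, one linear output, and a squared-error loss. The function names, the network shape, and the parameter values below are assumptions made for illustration. The backward pass reuses values saved during the forward pass, and a finite-difference check offers a way to confirm the analytic gradients. The `train` helper uses plain gradient descent on a single example, not the stochastic variant.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(x, y, w1, b1, w2, b2):
    """Return the loss and its gradient w.r.t. (w1, b1, w2, b2)."""
    # Forward pass: keep intermediate values for the backward pass.
    z1 = w1 * x + b1
    h = sigmoid(z1)
    y_hat = w2 * h + b2
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: apply the chain rule from the output toward the input.
    d_yhat = y_hat - y            # dL/dy_hat
    d_w2 = d_yhat * h             # dL/dw2
    d_b2 = d_yhat                 # dL/db2
    d_h = d_yhat * w2             # dL/dh
    d_z1 = d_h * h * (1.0 - h)    # sigmoid'(z1) = h * (1 - h)
    d_w1 = d_z1 * x               # dL/dw1
    d_b1 = d_z1                   # dL/db1
    return loss, (d_w1, d_b1, d_w2, d_b2)


def numerical_grads(x, y, params, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        diff = loss_and_grads(x, y, *up)[0] - loss_and_grads(x, y, *down)[0]
        grads.append(diff / (2 * eps))
    return grads


def train(x, y, params, lr=0.1, steps=200):
    """Plain gradient descent on one example (not stochastic)."""
    params = list(params)
    for _ in range(steps):
        _, grads = loss_and_grads(x, y, *params)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


if __name__ == "__main__":
    start = (0.3, -0.1, 0.8, 0.2)  # hypothetical initial parameters
    print(loss_and_grads(1.0, 0.5, *start)[1])
    print(numerical_grads(1.0, 0.5, start))
```

The efficiency claim shows up even at this scale: `d_yhat` and `d_z1` are each computed once and shared by several parameter gradients, instead of differentiating the loss separately for every parameter.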

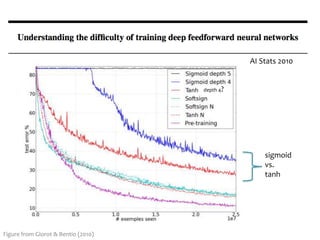

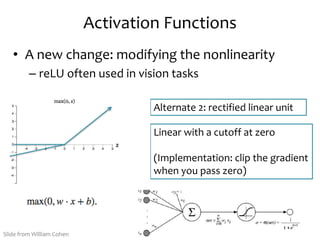

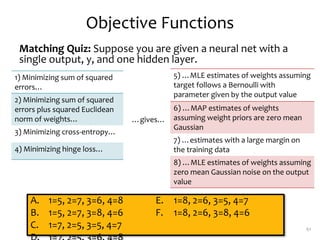

3. Building a neural network architecture involves many design decisions, such as the number of hidden layers and nodes, the choice of activation functions, the objective function, and the training algorithm (for example, stochastic gradient descent). Common activation functions are the sigmoid, tanh, and rectified linear unit (ReLU).

![Activation Functions. A new change: modifying the nonlinearity. The logistic is not widely used in modern ANNs. Alternate 1: tanh, like the logistic function but shifted to the range -1 to +1. Slide from William Cohen](https://image.slidesharecdn.com/lecture15-neural-nets2-220923142645-ef962b57/85/lecture15-neural-nets-2-pptx-52-320.jpg)
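The three activation functions named in item 3 can be written in a few lines each. The sketch below uses only the standard library, and the helper name `tanh_from_sigmoid` is an assumption introduced for illustration. It also spells out the relationship hinted at on the slide: tanh is the logistic function rescaled and shifted, since tanh(z) = 2·sigmoid(2z) − 1, so its outputs lie between -1 and +1 and are centered on zero.

```python
import math


def sigmoid(z):
    """Logistic function: output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Hyperbolic tangent: output in (-1, 1), centered on zero."""
    return math.tanh(z)


def relu(z):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, z)


def tanh_from_sigmoid(z):
    """tanh expressed as a rescaled, shifted logistic function."""
    return 2.0 * sigmoid(2.0 * z) - 1.0


if __name__ == "__main__":
    for z in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(z, sigmoid(z), tanh(z), relu(z), tanh_from_sigmoid(z))
```

Which function suits a given layer is one of the design decisions listed above; this sketch only shows their definitions and output ranges.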