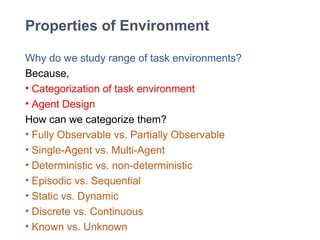

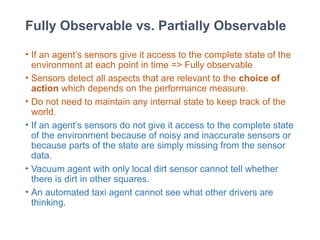

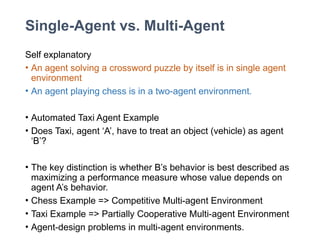

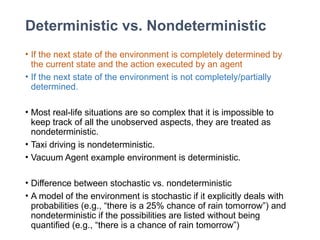

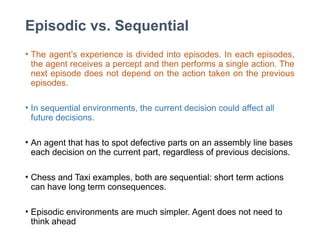

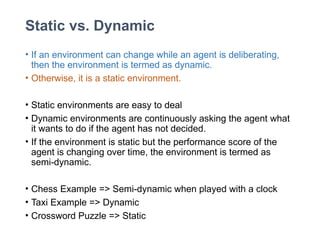

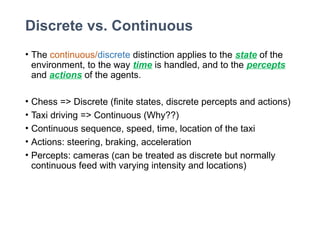

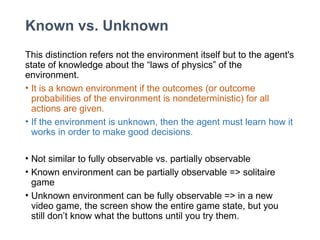

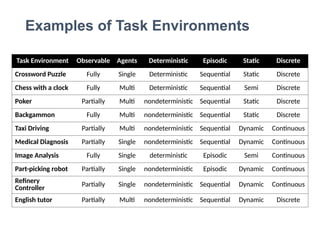

The document discusses the properties of task environments in artificial intelligence and classifies them along several dimensions: observability (fully vs. partially observable), number of agents (single-agent vs. multi-agent), determinism (deterministic vs. stochastic), episodic vs. sequential decision-making, static vs. dynamic, and discrete vs. continuous. Examples illustrate each category and show why knowing these properties matters when designing an agent for a given environment.
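As a minimal sketch, the dimensions summarized above can be captured as a small Python record with one field per property. The class `EnvironmentProfile`, its field names, and the three example classifications are illustrative assumptions that follow the commonly cited Russell and Norvig textbook examples; they are not necessarily the examples shown in the figures.

```python
from dataclasses import dataclass
from enum import Enum


class Observability(Enum):
    FULLY = "fully observable"
    PARTIALLY = "partially observable"


@dataclass(frozen=True)
class EnvironmentProfile:
    """Hypothetical record of where one task environment falls on each dimension."""
    name: str
    observability: Observability
    multi_agent: bool    # False means single-agent
    deterministic: bool  # False means stochastic
    episodic: bool       # False means sequential
    static: bool         # False means dynamic
    discrete: bool       # False means continuous

    def describe(self) -> str:
        """Return a one-line, human-readable classification."""
        parts = [
            self.observability.value,
            "multi-agent" if self.multi_agent else "single-agent",
            "deterministic" if self.deterministic else "stochastic",
            "episodic" if self.episodic else "sequential",
            "static" if self.static else "dynamic",
            "discrete" if self.discrete else "continuous",
        ]
        return f"{self.name}: " + ", ".join(parts)


# Assumed textbook-style classifications (the figures' own examples may differ).
CROSSWORD = EnvironmentProfile(
    name="crossword puzzle", observability=Observability.FULLY,
    multi_agent=False, deterministic=True, episodic=False,
    static=True, discrete=True,
)
TAXI = EnvironmentProfile(
    name="taxi driving", observability=Observability.PARTIALLY,
    multi_agent=True, deterministic=False, episodic=False,
    static=False, discrete=False,
)
PART_PICKING = EnvironmentProfile(
    name="part-picking robot", observability=Observability.PARTIALLY,
    multi_agent=False, deterministic=False, episodic=True,
    static=False, discrete=False,
)

if __name__ == "__main__":
    for env in (CROSSWORD, TAXI, PART_PICKING):
        print(env.describe())
```

Keeping each property in its own field reflects that the dimensions are independent of one another. For example, the part-picking robot is episodic yet dynamic, while the crossword puzzle is static yet sequential.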