1) Lucata has introduced version 1.0 of its GraphBLAS library, which is optimized for parallel graph analysis on Lucata's Pathfinder architecture.

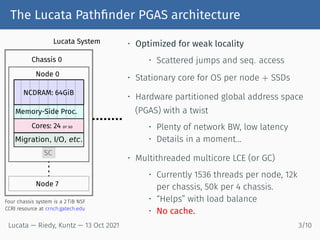

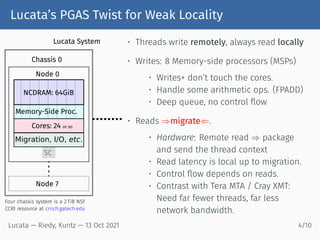

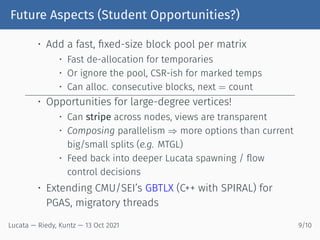
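To make the GraphBLAS idea concrete: GraphBLAS expresses graph algorithms as sparse linear algebra over semirings, so a breadth-first search becomes repeated "matrix-vector products" with a mask for already-visited vertices. The sketch below illustrates that formulation in plain Python. It does not use Lucata's library or the GraphBLAS C API; `bfs_levels` and the dictionary-of-sets adjacency layout are hypothetical names chosen for illustration.

```python
def bfs_levels(adj, source):
    """Return {vertex: BFS depth} for every vertex reachable from source.

    adj maps each vertex to the set of its out-neighbors, i.e. the
    nonzero columns of that vertex's row in a sparse adjacency matrix.
    (Illustrative layout, not a GraphBLAS data structure.)
    """
    levels = {source: 0}
    frontier = {source}
    depth = 0
    while frontier:
        depth += 1
        # Product of the frontier vector with the adjacency matrix over the
        # Boolean (OR, AND) semiring: a vertex is reached if ANY frontier
        # vertex has an edge to it.
        reached = set()
        for u in frontier:
            reached |= adj.get(u, set())
        # Apply the "not yet visited" mask to obtain the next frontier.
        frontier = {v for v in reached if v not in levels}
        for v in frontier:
            levels[v] = depth
    return levels
```

A small usage example: with `adj = {0: {1, 2}, 1: {3}, 2: {3}, 3: set()}`, calling `bfs_levels(adj, 0)` assigns depth 1 to vertices 1 and 2 and depth 2 to vertex 3.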

2) Lucata's Pathfinder uses a partitioned global address space (PGAS) model in which threads can write to remote memory but always read locally: a thread migrates to the node that holds the data it needs to read, which reduces network bandwidth needs.

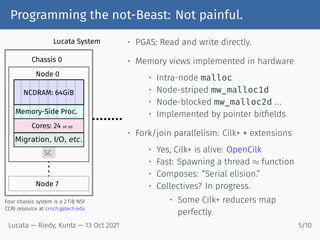

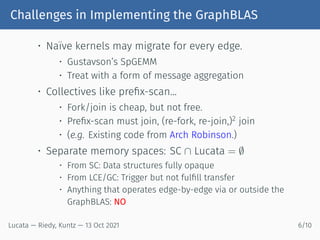
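The read-locally, write-remotely behavior can be pictured with a toy model. The sketch below is only a simulation under stated assumptions, not Lucata's runtime or API: `ToyPgas`, `ToyThread`, and the rule that address `a` lives on node `a % num_nodes` are all hypothetical. It counts how often a thread would migrate (on reads of non-local data) versus how often it would send a one-way remote write while staying in place.

```python
class ToyPgas:
    """Toy partitioned global address space (assumed layout: addr % num_nodes)."""

    def __init__(self, num_nodes, size):
        self.num_nodes = num_nodes
        self.memory = [0] * size
        self.migrations = 0      # thread moves triggered by non-local reads
        self.remote_writes = 0   # one-way writes sent to another node

    def owner(self, addr):
        # Hypothetical ownership rule: addresses are striped across nodes.
        return addr % self.num_nodes


class ToyThread:
    """A thread that tracks which node it is currently running on."""

    def __init__(self, machine, node=0):
        self.machine = machine
        self.node = node

    def read(self, addr):
        home = self.machine.owner(addr)
        if home != self.node:
            # Reads are always local, so the thread moves to the data.
            self.machine.migrations += 1
            self.node = home
        return self.machine.memory[addr]

    def write(self, addr, value):
        if self.machine.owner(addr) != self.node:
            # Writes may target remote memory; the thread stays where it is.
            self.machine.remote_writes += 1
        self.machine.memory[addr] = value
```

In this model, a thread on node 0 that writes to address 5 (owned by node 1 on a 4-node machine) stays on node 0, while a subsequent read of address 5 moves it to node 1.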

3) Programming the Lucata architecture for graph algorithms requires techniques such as message aggregation to reduce thread migration, while its fork/join parallelism model keeps thread-spawning costs low.
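As a rough illustration of why aggregation helps, the sketch below compares the number of migrations needed to touch a sequence of addresses in their original order against visiting each owning node once with a batch of work. It is a counting model only, under the assumption that address `a` lives on node `a % num_nodes`; the function names are hypothetical and do not come from Lucata's toolchain.

```python
from collections import defaultdict


def owner(addr, num_nodes):
    # Hypothetical ownership rule: addresses are striped across nodes.
    return addr % num_nodes


def migrations_unaggregated(addrs, num_nodes, start_node=0):
    """Count migrations when addresses are visited in the given order."""
    node, count = start_node, 0
    for a in addrs:
        home = owner(a, num_nodes)
        if home != node:
            count += 1
            node = home
    return count


def aggregate_by_owner(addrs, num_nodes):
    """Group addresses into one batch per owning node."""
    buckets = defaultdict(list)
    for a in addrs:
        buckets[owner(a, num_nodes)].append(a)
    return dict(buckets)


def migrations_aggregated(addrs, num_nodes, start_node=0):
    """Count migrations when each owning node is visited once with its batch."""
    buckets = aggregate_by_owner(addrs, num_nodes)
    return sum(1 for home in buckets if home != start_node)
```

For the alternating access pattern `[1, 2, 1, 2, 1, 2]` on four nodes, the unaggregated walk migrates on every access, whereas the aggregated version visits nodes 1 and 2 once each.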