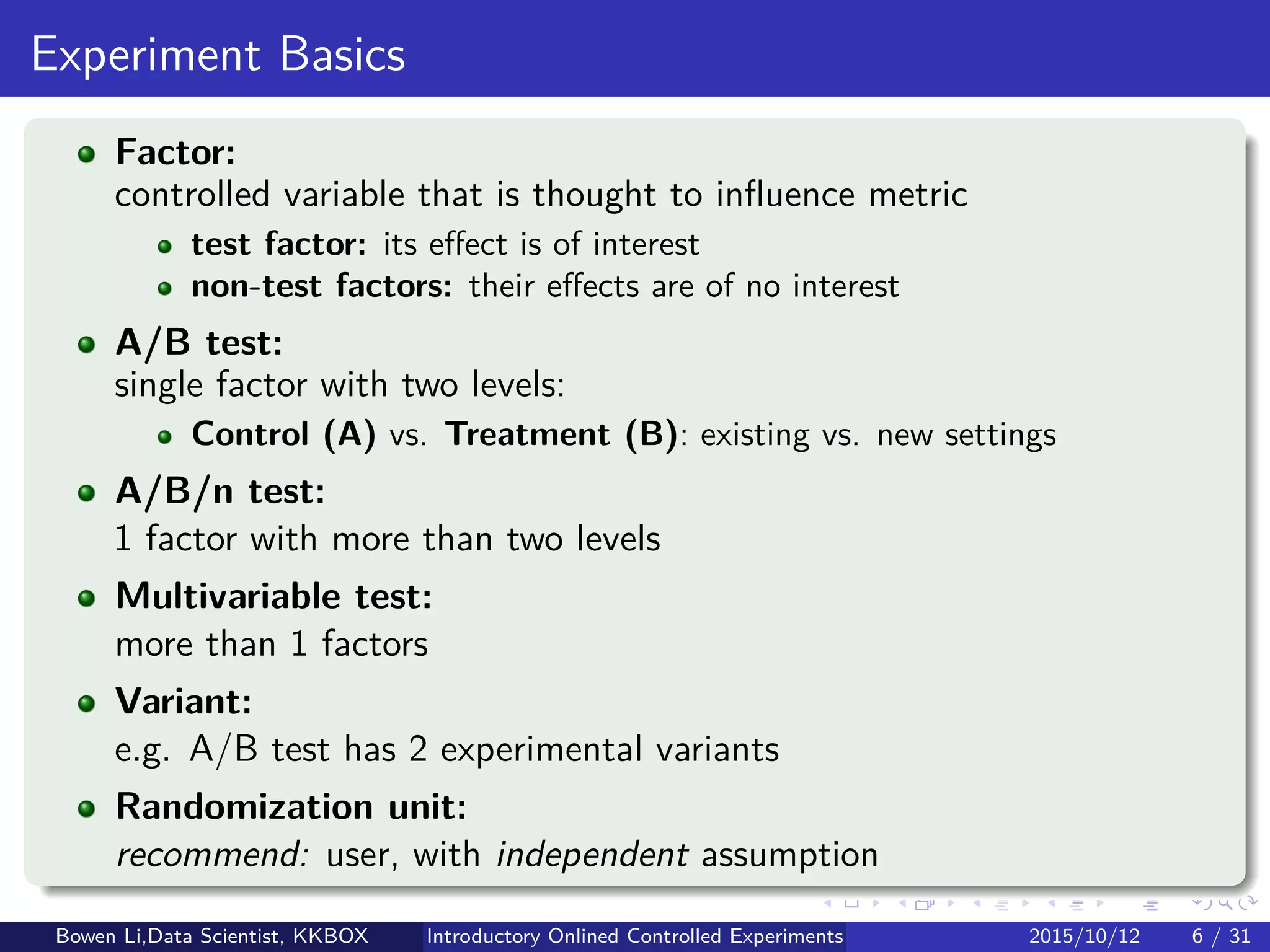

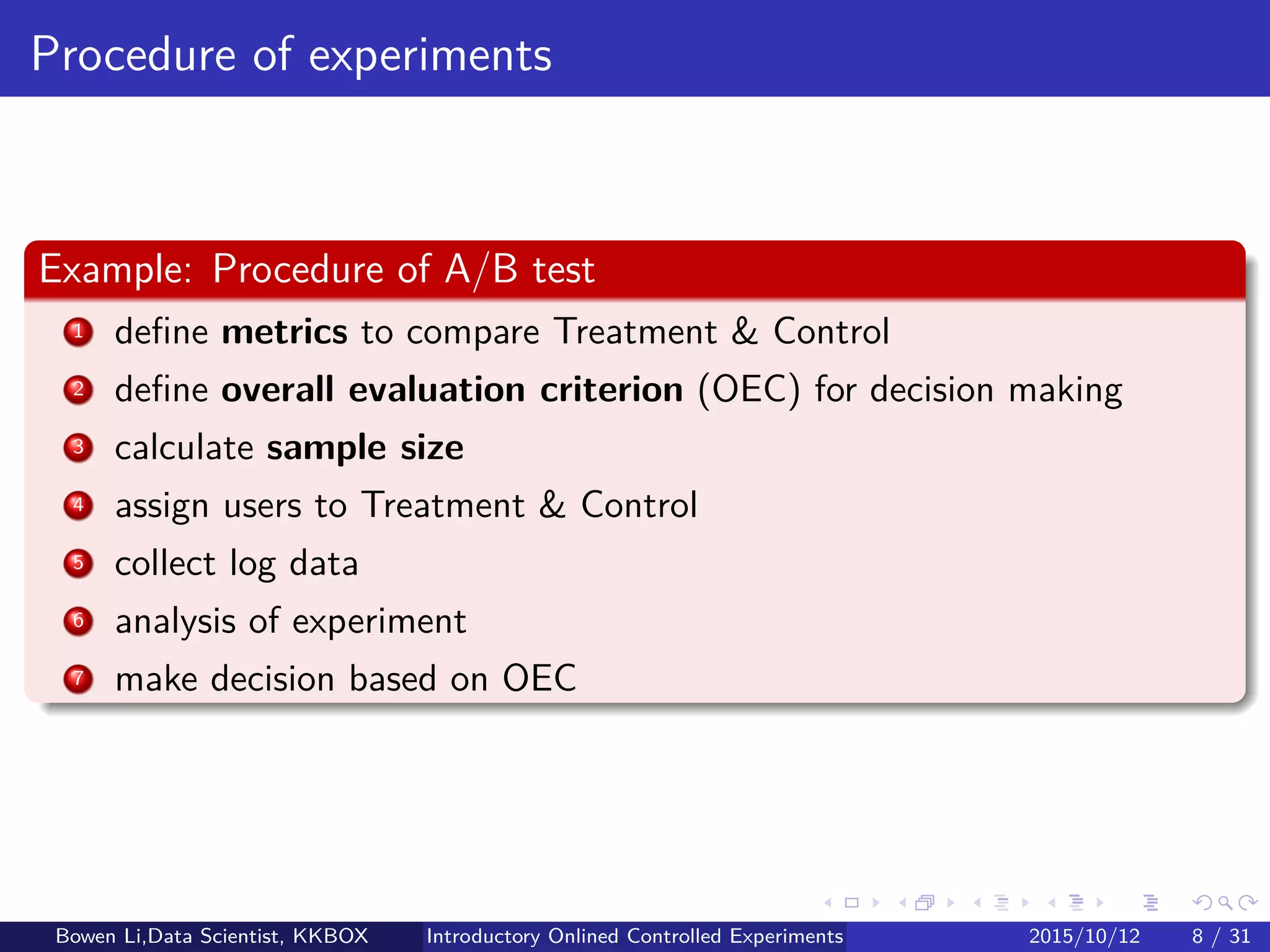

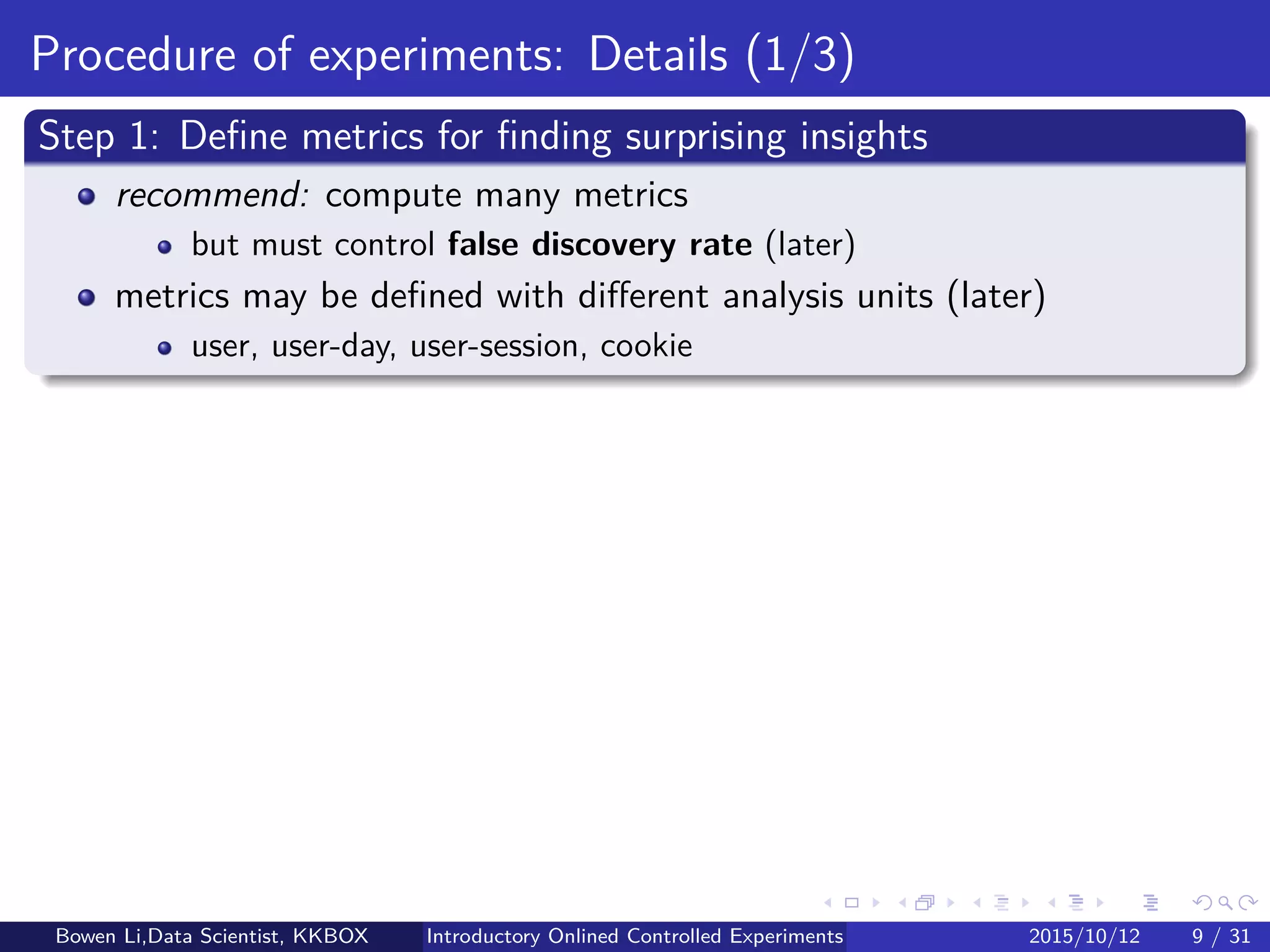

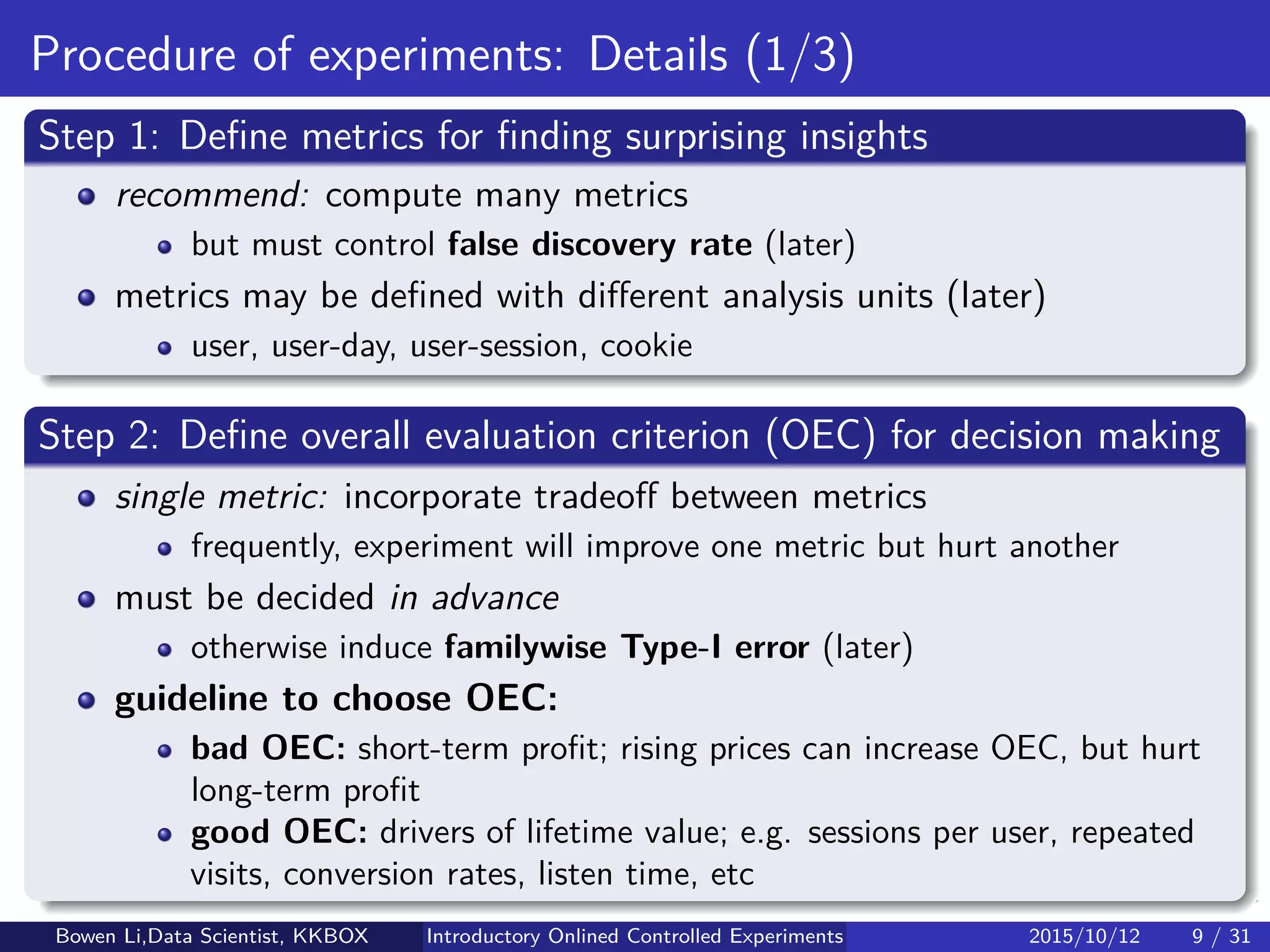

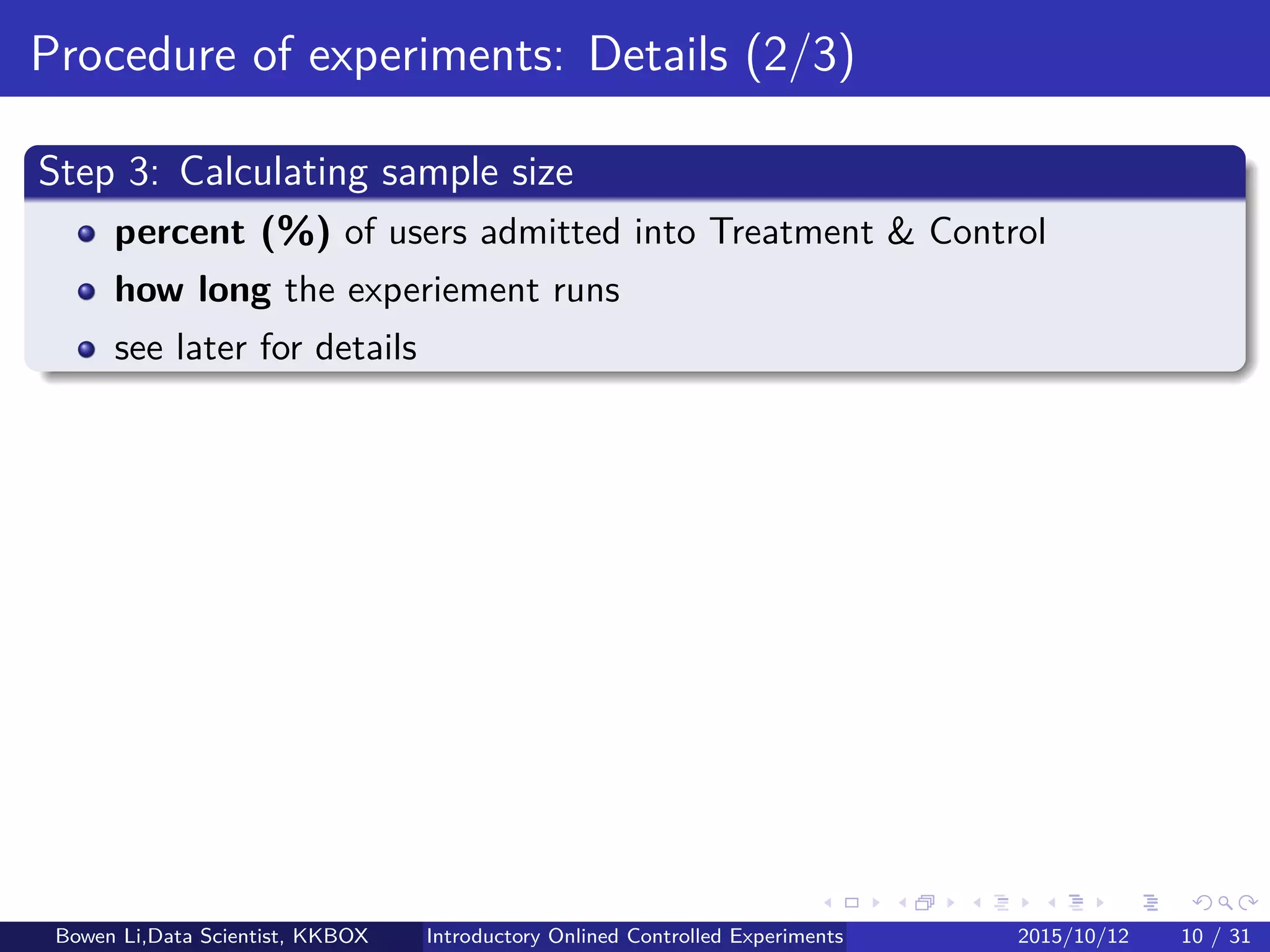

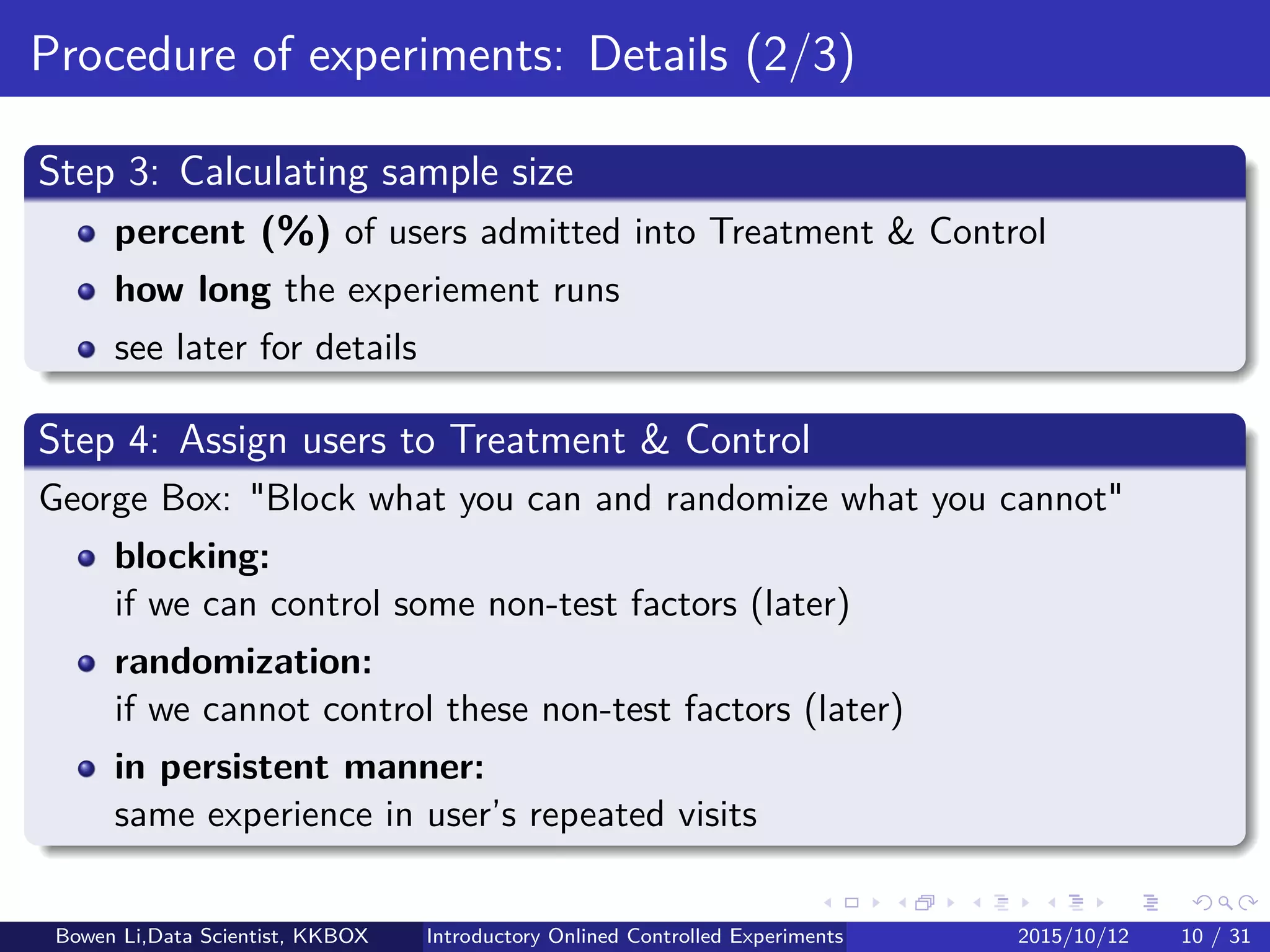

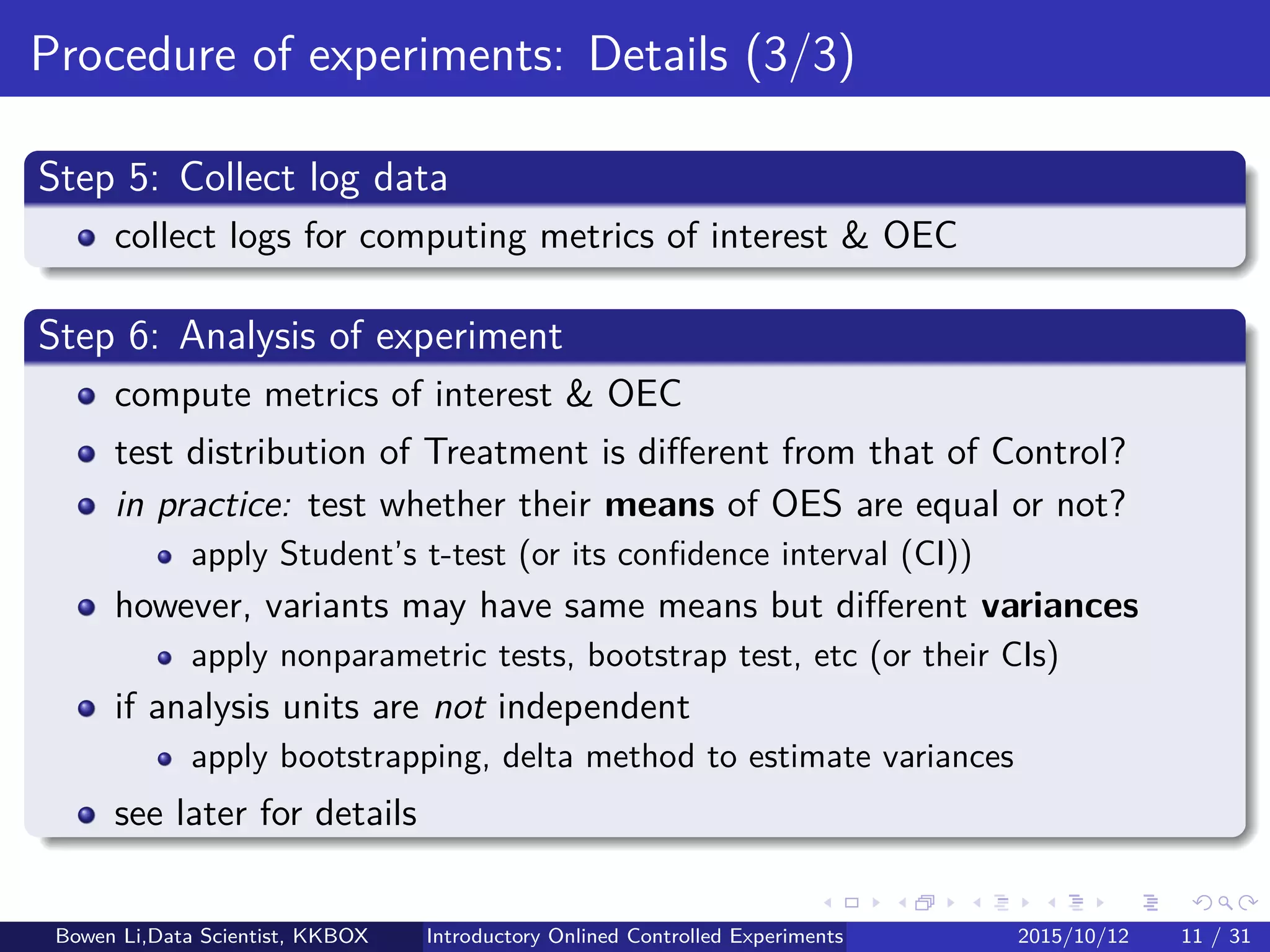

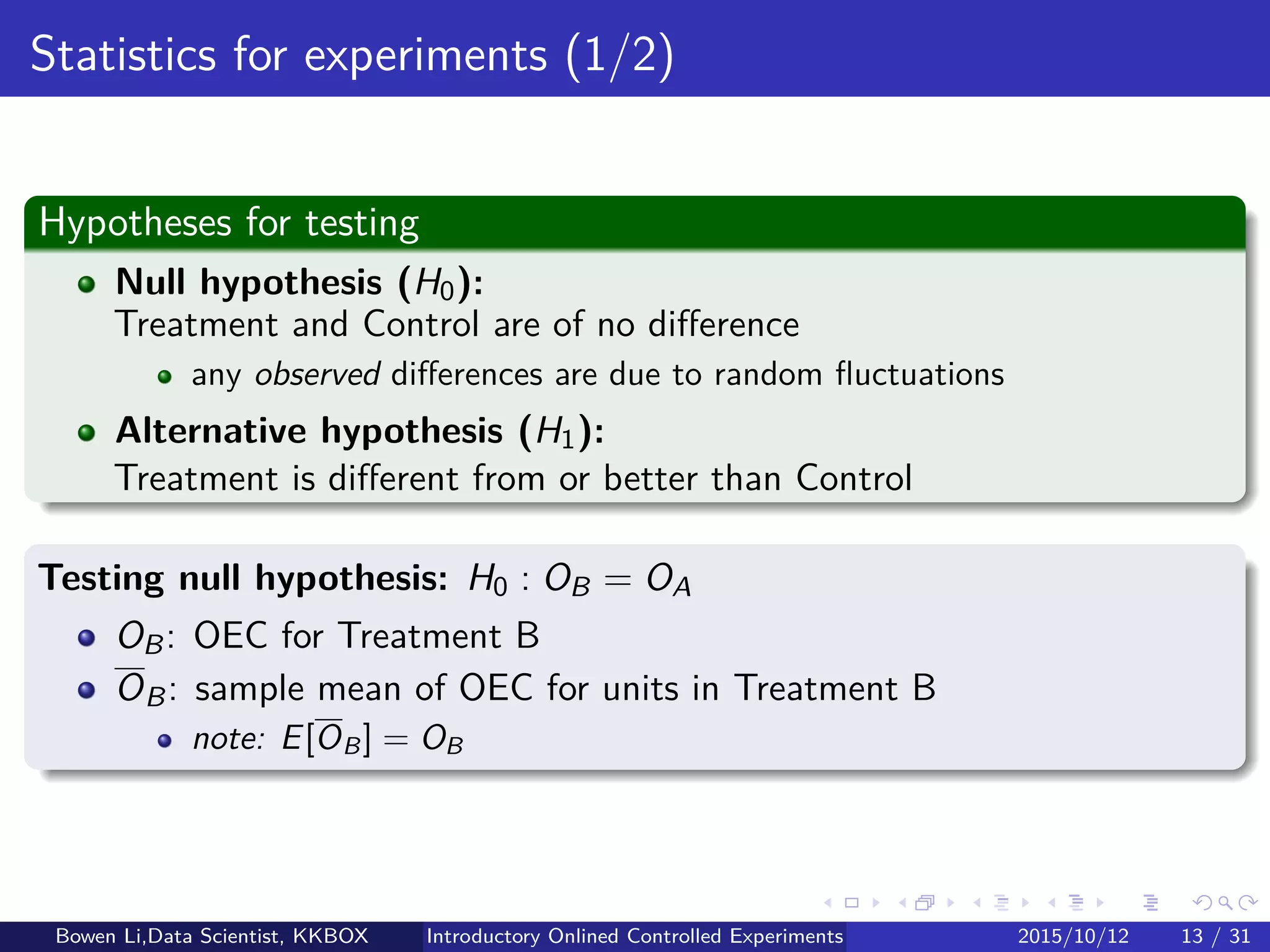

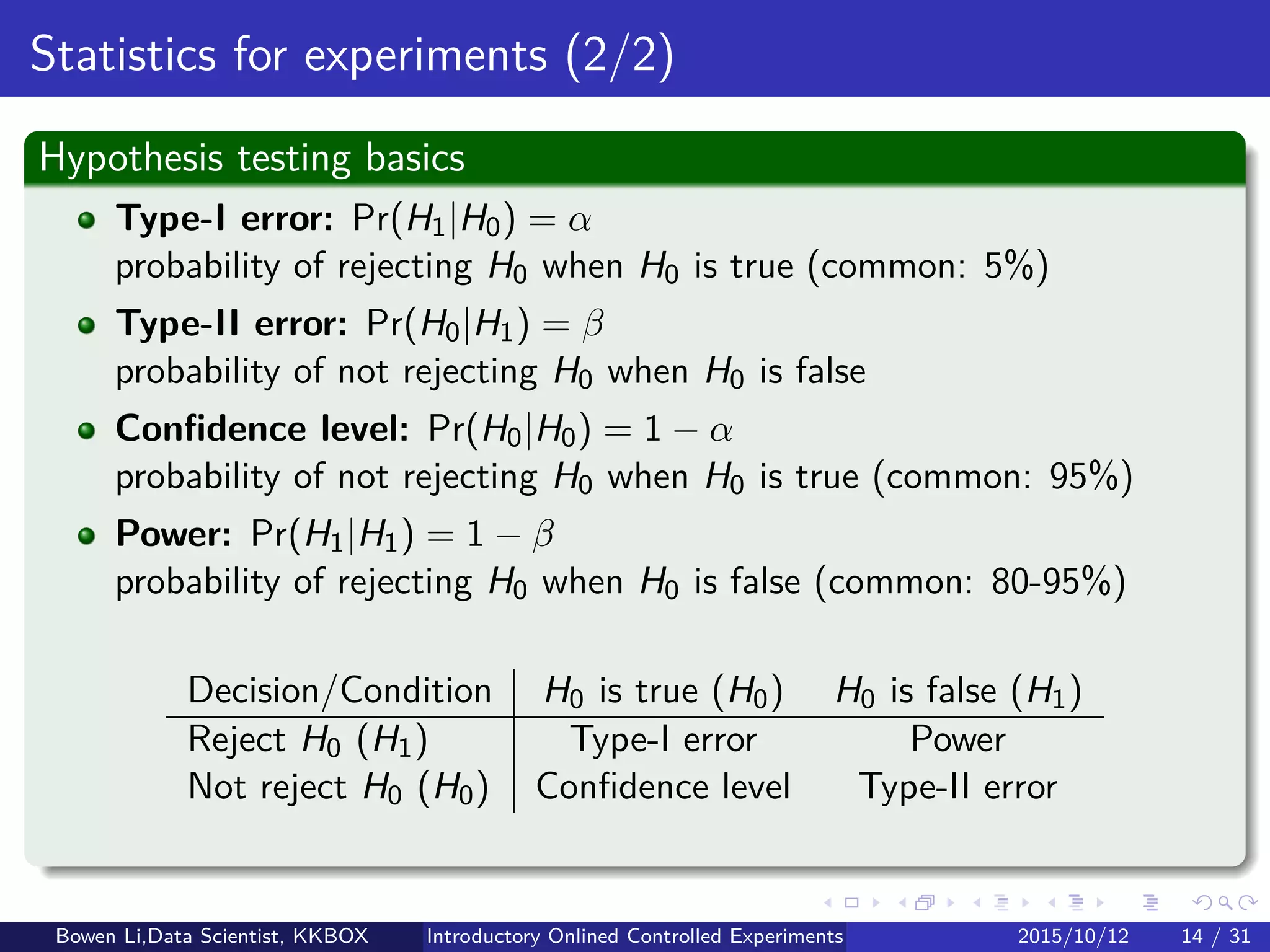

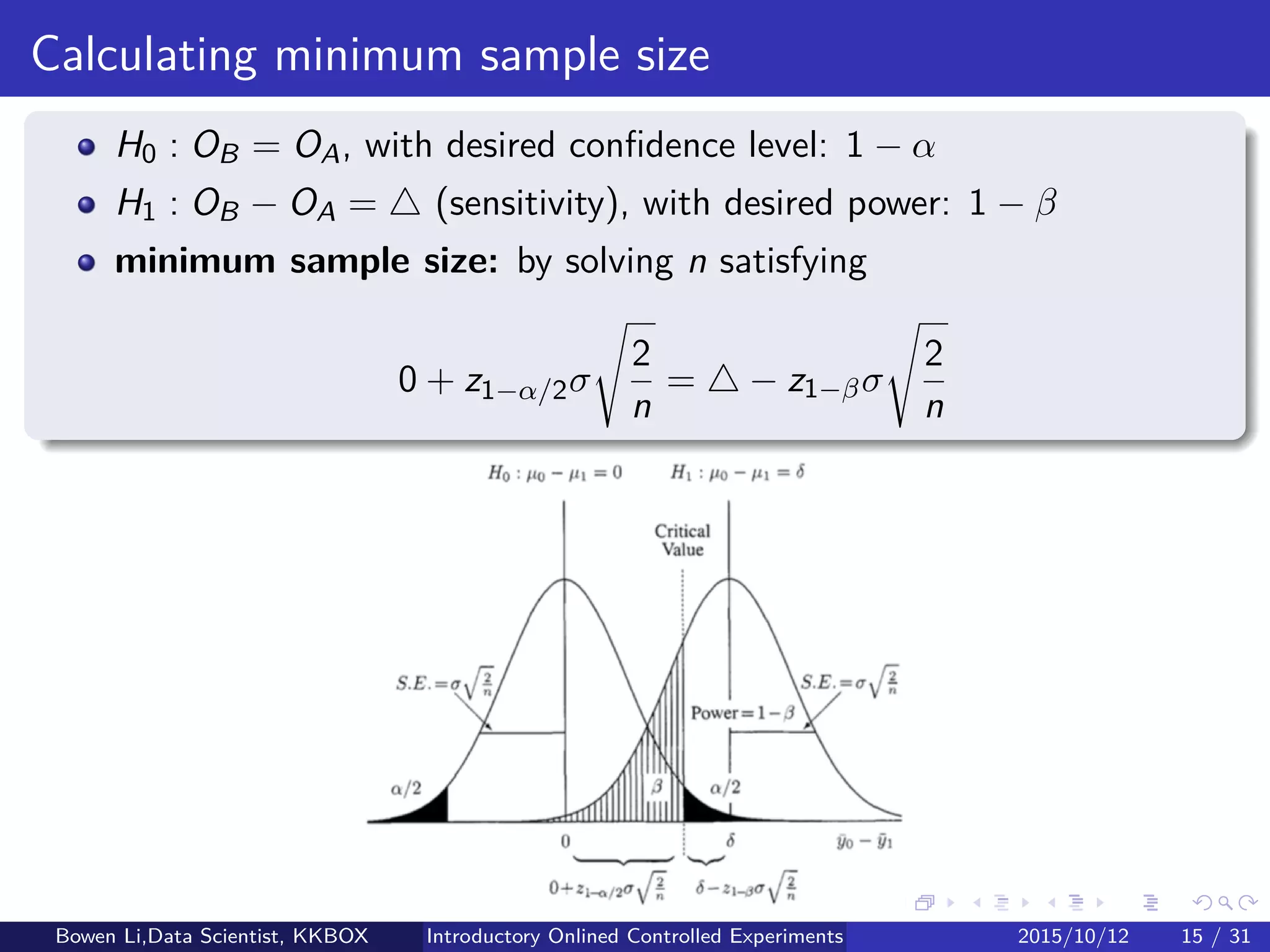

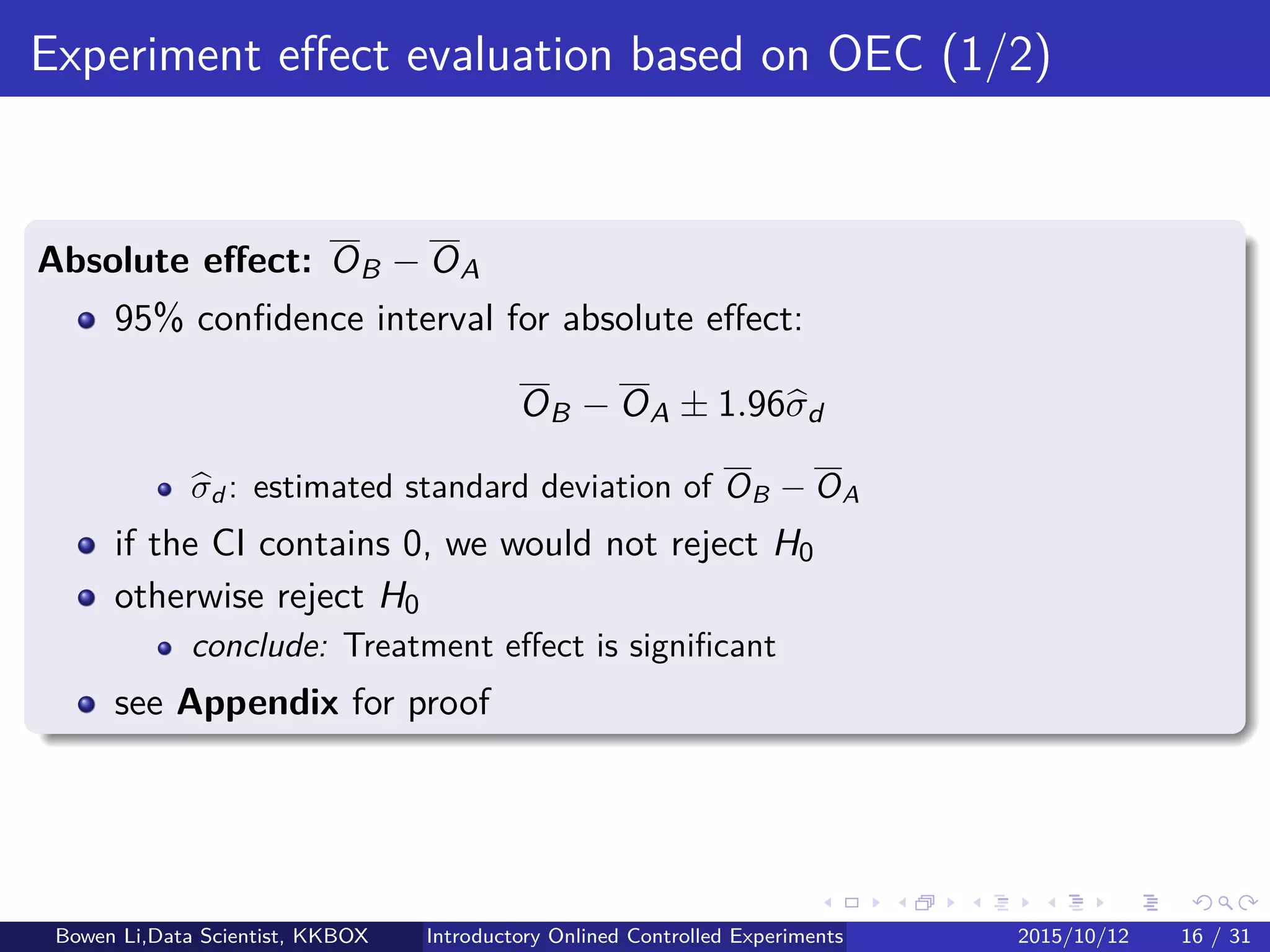

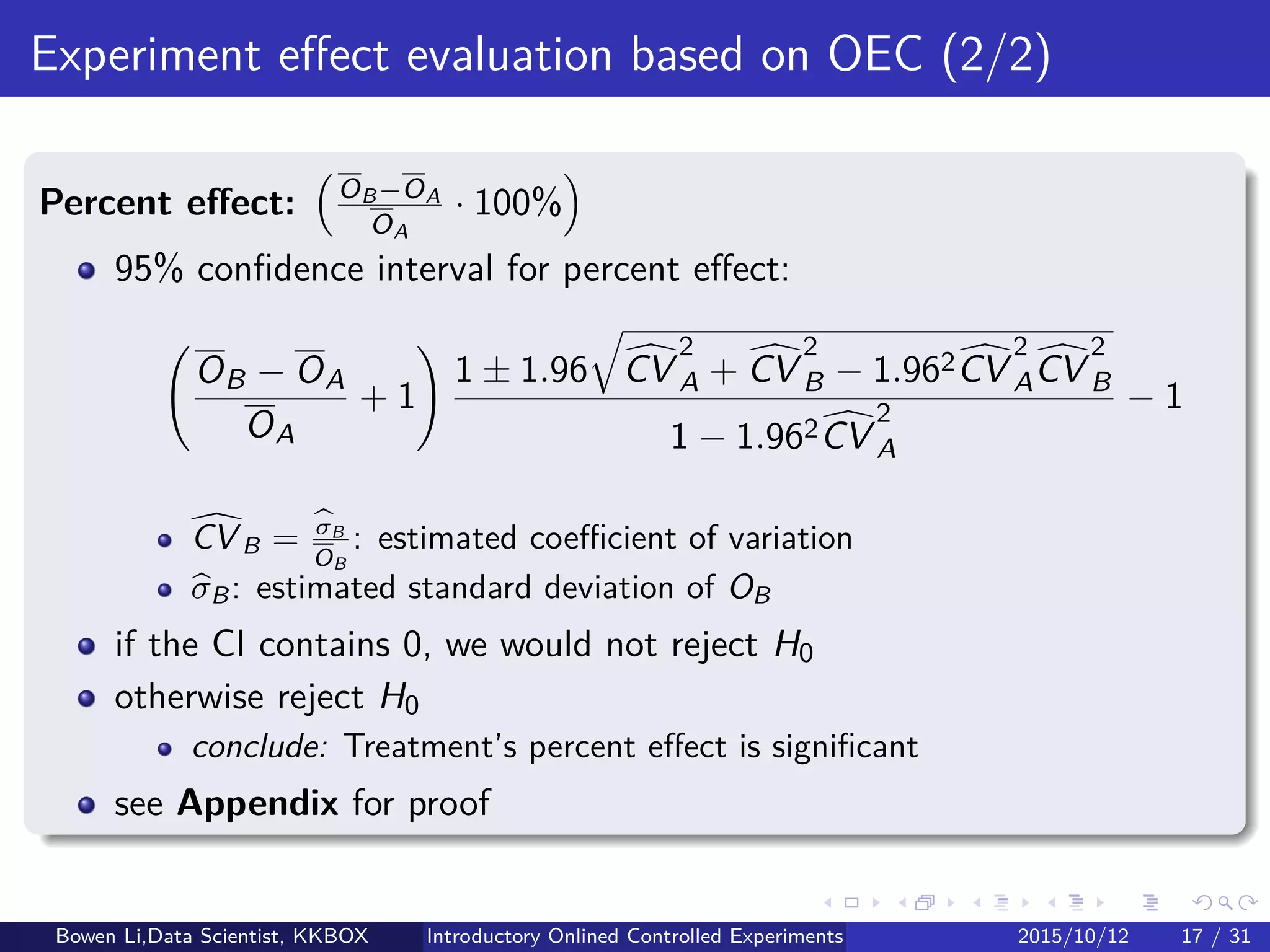

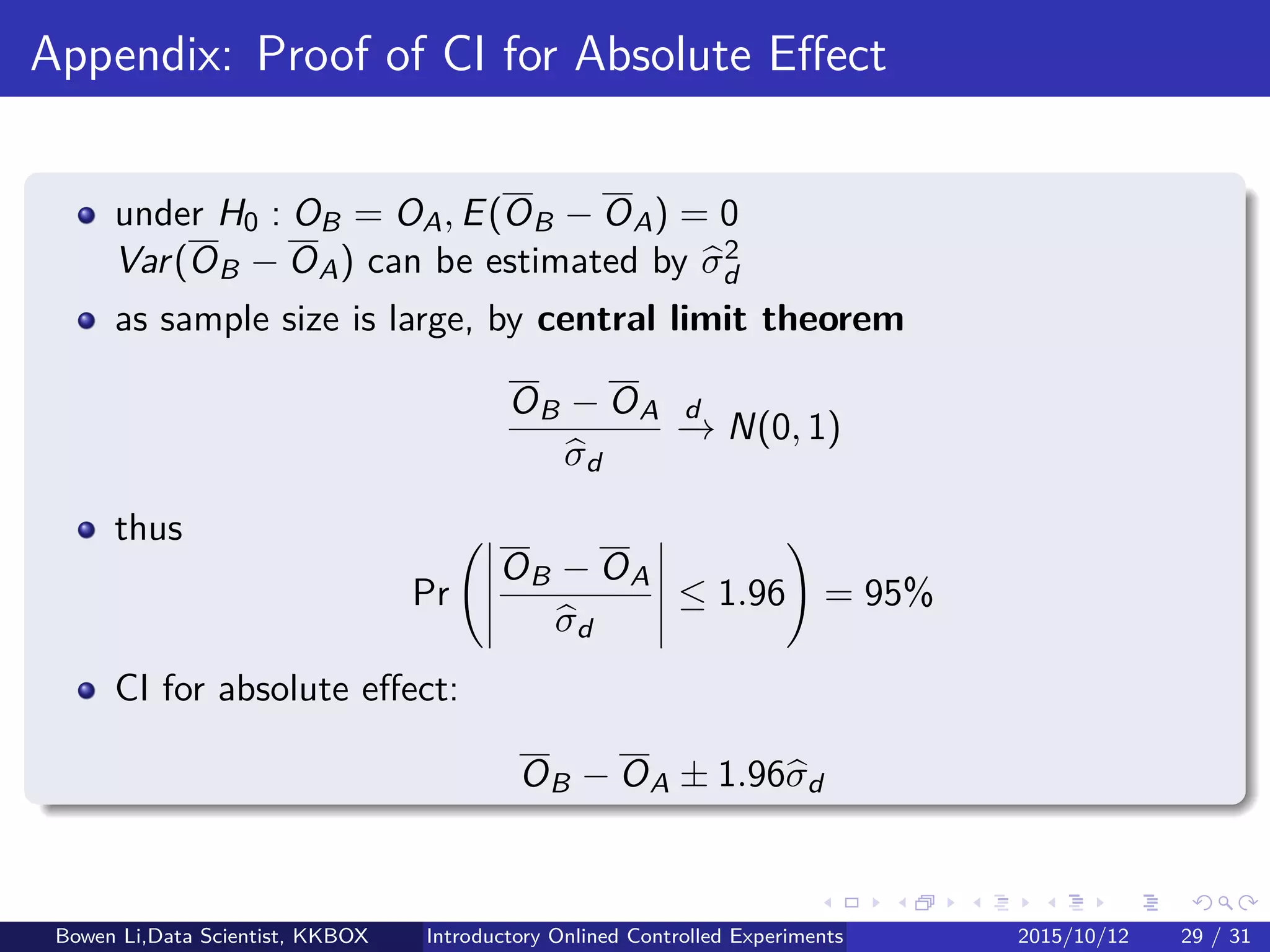

The document introduces online controlled experiments. It outlines the basic procedure for an A/B test: defining metrics and the overall evaluation criterion, calculating the sample size, randomly assigning users to treatment and control, collecting log data, monitoring the experiment online, and analyzing the results. It also covers experiment designs and the statistics used for hypothesis testing, effect estimation, and sample size calculation. The goal is to test hypotheses scientifically, determining whether specific changes improve key metrics and establishing causal relationships.

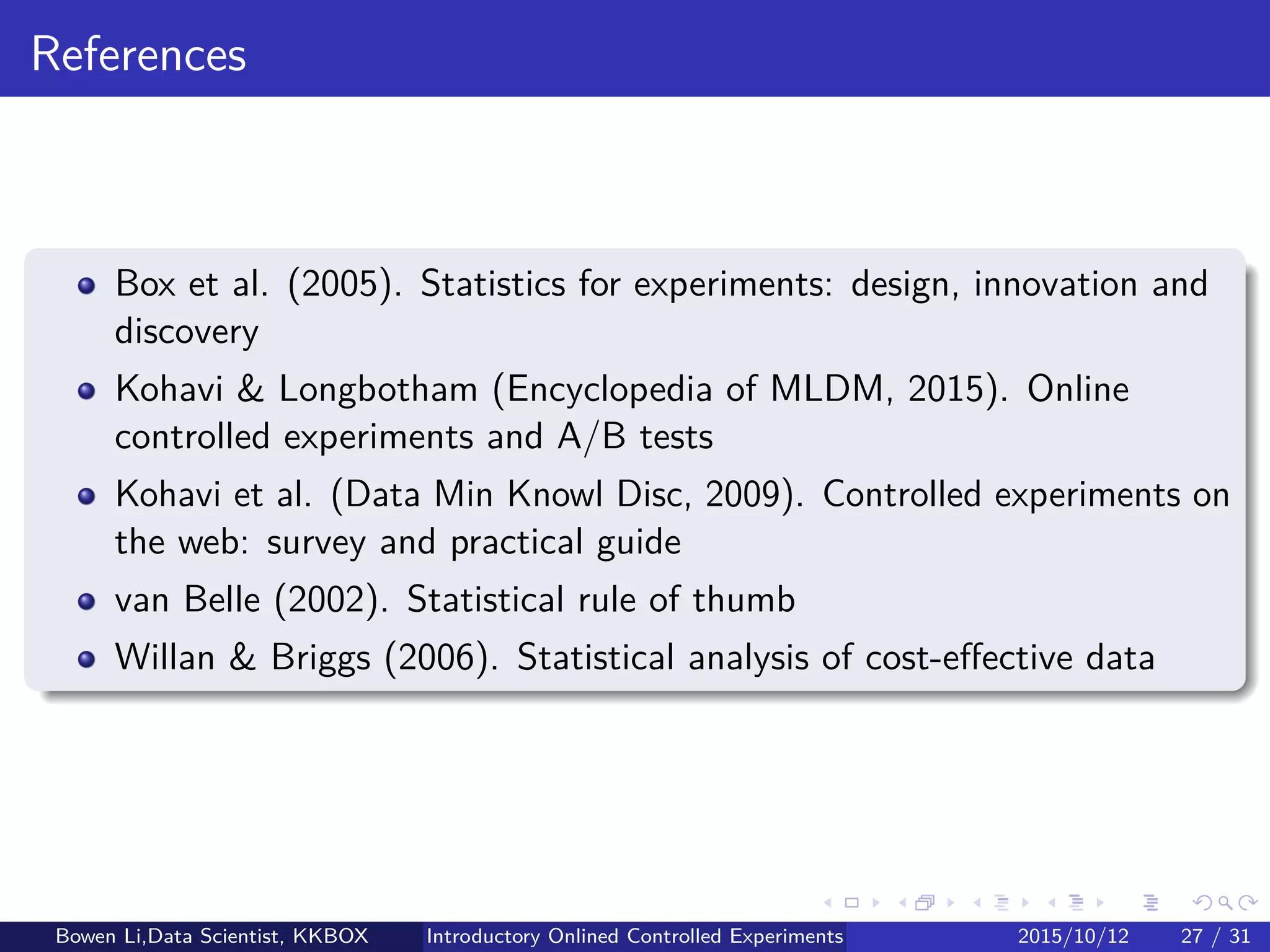

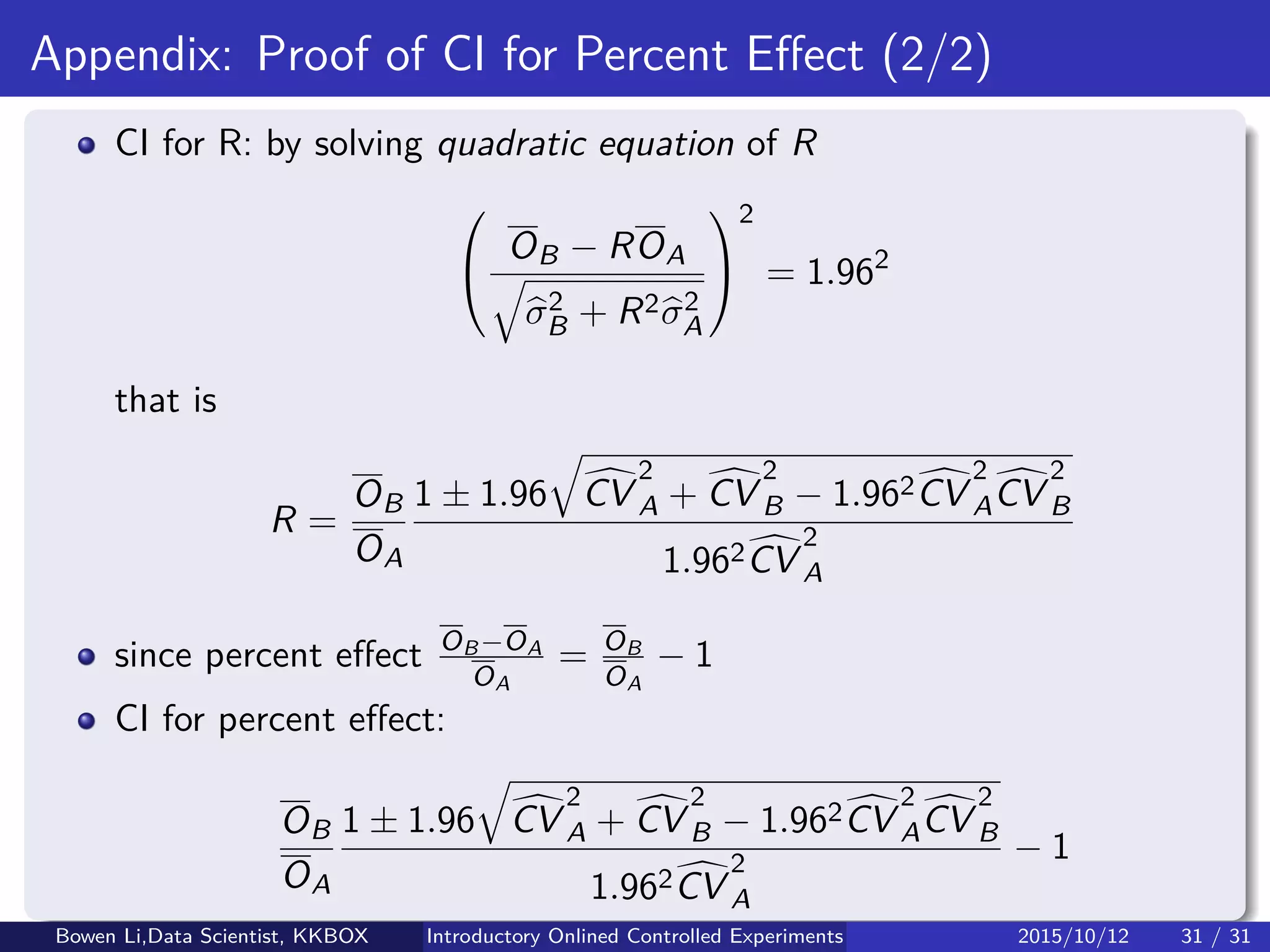

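![Appendix: Derivations of CI for Percent Effect (1/2)](https://image.slidesharecdn.com/introonlinecontrolledexpslides20151012-151013160751-lva1-app6891/75/Introductory-Online-Controlled-Experiments-41-2048.jpg)

Fieller (1954): define $R = O_B / O_A$ and obtain a CI for $R$ based on $O_B - R O_A$.

Apply the Central Limit Theorem:

$$O_B - R O_A \xrightarrow{d} N\left(0, \operatorname{Var}[O_B - R O_A]\right),$$

where, since $\operatorname{Cov}(O_B, O_A) = 0$,

$$\operatorname{Var}[O_B - R O_A] = \sigma_B^2 + R^2 \sigma_A^2,$$

with $\sigma_A^2 = \operatorname{Var}[O_A]$ and $\sigma_B^2 = \operatorname{Var}[O_B]$. Thus

$$\frac{O_B - R O_A}{\sqrt{\sigma_B^2 + R^2 \sigma_A^2}} \xrightarrow{d} N(0, 1)$$

and

$$\Pr\left( \left| \frac{O_B - R O_A}{\sqrt{\sigma_B^2 + R^2 \sigma_A^2}} \right| \le 1.96 \right) = 95\%.$$

Bowen Li, Staff Data Scientist @Vpon. Introductory Online Controlled Experiments, 2016/04/08 (slide 34 of 35).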
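The derivation stops at a probability statement about $O_B - R O_A$. Inverting that statement for $R$ yields the interval. Squaring the inequality gives a quadratic in $R$, and the CI is the range between its two roots.

The Python sketch below rests on several assumptions:

- The groups are independent.
- Plug-in estimates are used for $\sigma_A^2$ and $\sigma_B^2$.
- $z = 1.96$.
- The percent effect is $R - 1$.

The names `fieller_ci` and `percent_effect_ci` are hypothetical helpers, not taken from the deck.

```python
import math
from typing import Optional, Tuple


def fieller_ci(
    mean_a: float,
    mean_b: float,
    var_mean_a: float,
    var_mean_b: float,
    z: float = 1.96,
) -> Optional[Tuple[float, float]]:
    """Fieller-style CI for R = O_B / O_A (hypothetical helper).

    mean_a, mean_b: observed metric values O_A (control) and O_B (treatment).
    var_mean_a, var_mean_b: sigma_A^2 and sigma_B^2, the variances of O_A and
    O_B (assumed plug-in estimates, e.g. sample variance / sample size).
    Assumes independent groups, so Cov(O_B, O_A) = 0.
    """
    # Squaring |O_B - R*O_A| / sqrt(sigma_B^2 + R^2 * sigma_A^2) <= z gives
    #   a*R^2 + b*R + c <= 0 with these coefficients:
    a = mean_a ** 2 - z ** 2 * var_mean_a
    b = -2.0 * mean_a * mean_b
    c = mean_b ** 2 - z ** 2 * var_mean_b
    if a <= 0:
        # O_A is not significantly different from zero: the set of R values
        # is unbounded, so no finite interval exists.
        return None
    # For a > 0 the discriminant is non-negative in exact arithmetic;
    # max() only guards against tiny negative values from rounding.
    root_disc = math.sqrt(max(0.0, b ** 2 - 4.0 * a * c))
    # The parabola opens upward, so the inequality holds between the roots.
    return ((-b - root_disc) / (2.0 * a), (-b + root_disc) / (2.0 * a))


def percent_effect_ci(
    mean_a: float,
    mean_b: float,
    var_mean_a: float,
    var_mean_b: float,
    z: float = 1.96,
) -> Optional[Tuple[float, float]]:
    """CI for the percent effect, assumed here to be R - 1."""
    ci = fieller_ci(mean_a, mean_b, var_mean_a, var_mean_b, z)
    if ci is None:
        return None
    lower, upper = ci
    return (lower - 1.0, upper - 1.0)


if __name__ == "__main__":
    # Made-up illustrative numbers, not data from the deck.
    print(percent_effect_ci(10.0, 11.0, 0.04, 0.04))
```

With a control value of 10, a treatment value of 11, and both variances set to 0.04 (made-up numbers), the percent-effect interval comes out to roughly +4.3% to +16.0% around the point estimate of +10%. The interval is not symmetric around the point estimate, which is characteristic of inverting the ratio this way.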