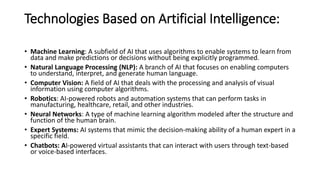

The document provides a comprehensive overview of artificial intelligence (AI), which it defines as the simulation of human intelligence processes by machines. It outlines the scope of AI, including applications across sectors such as healthcare, finance, and e-commerce. It also covers the historical evolution of AI, approaches to AI development, AI tools, potential drawbacks such as bias and job displacement, and future technologies such as reinforcement learning and quantum AI. Finally, it lists resources for further reading and learning about AI.