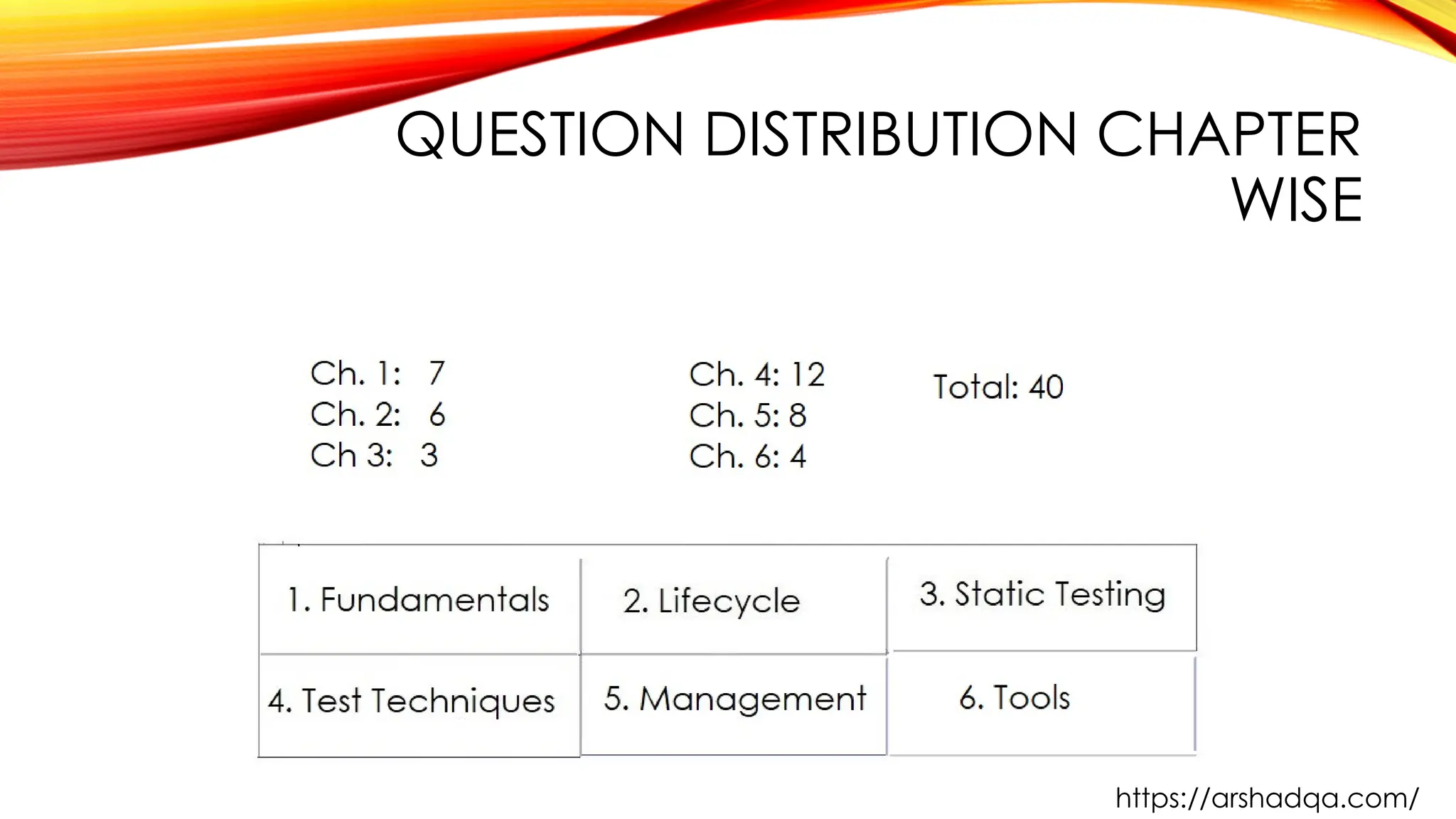

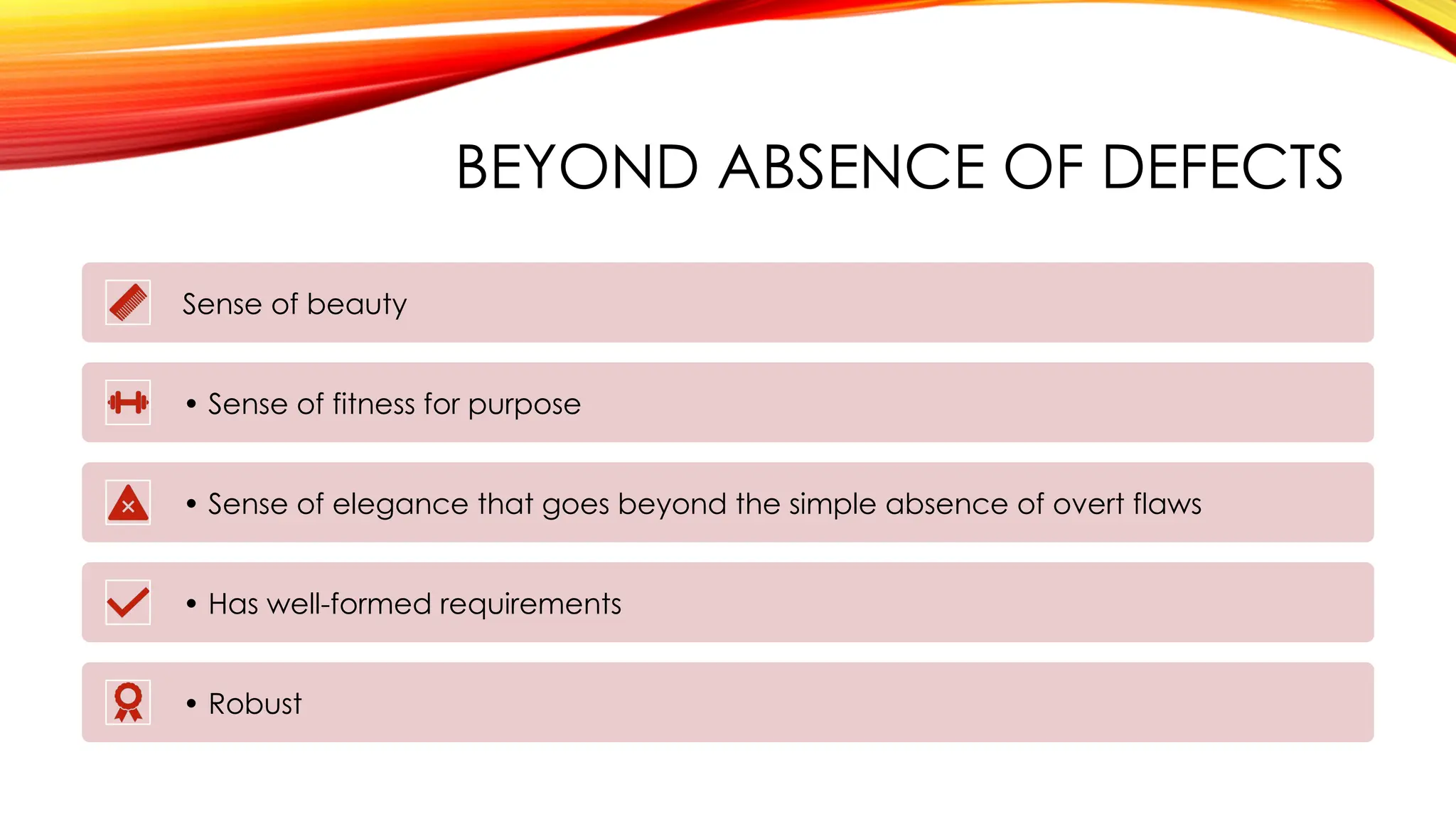

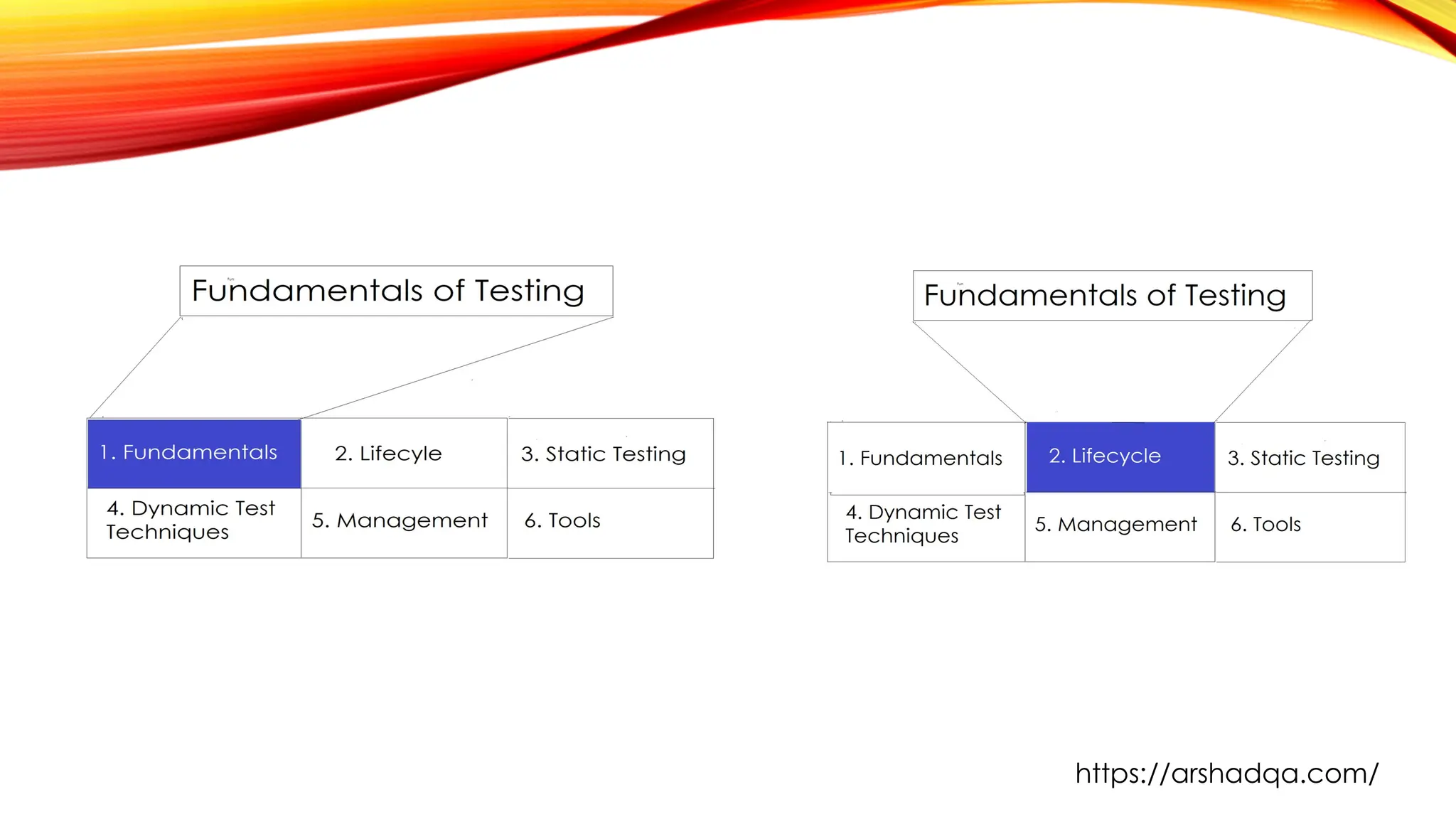

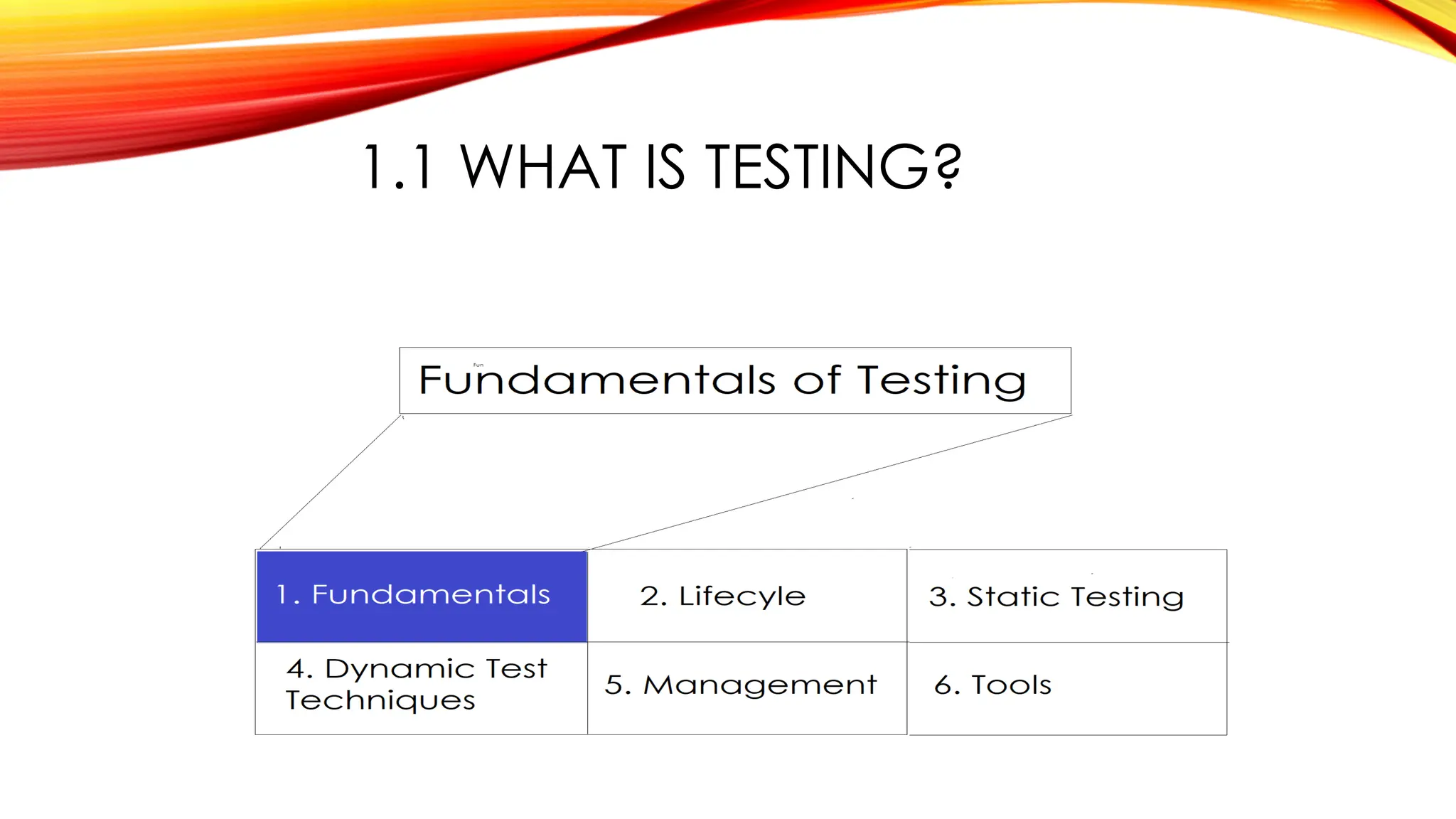

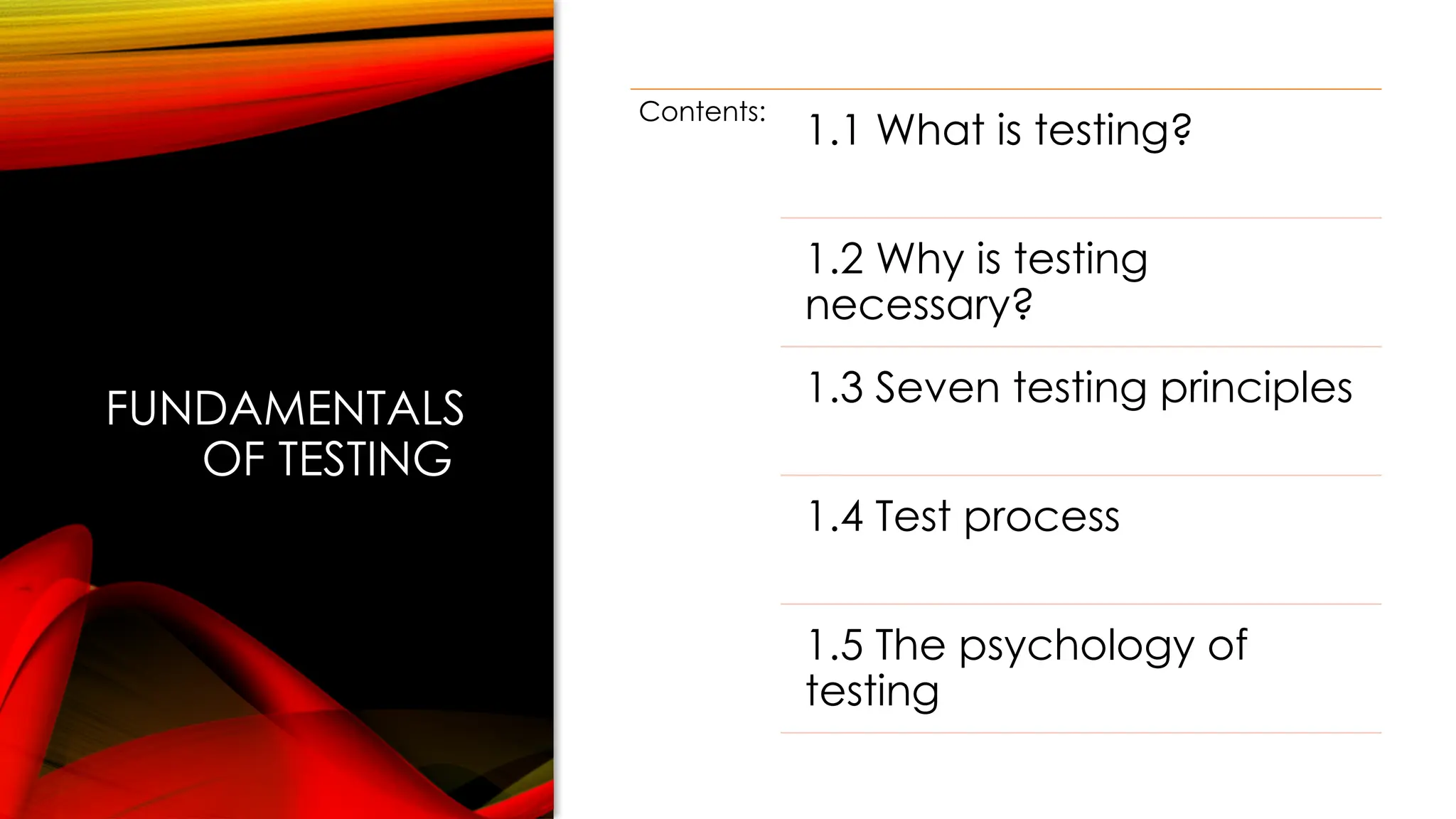

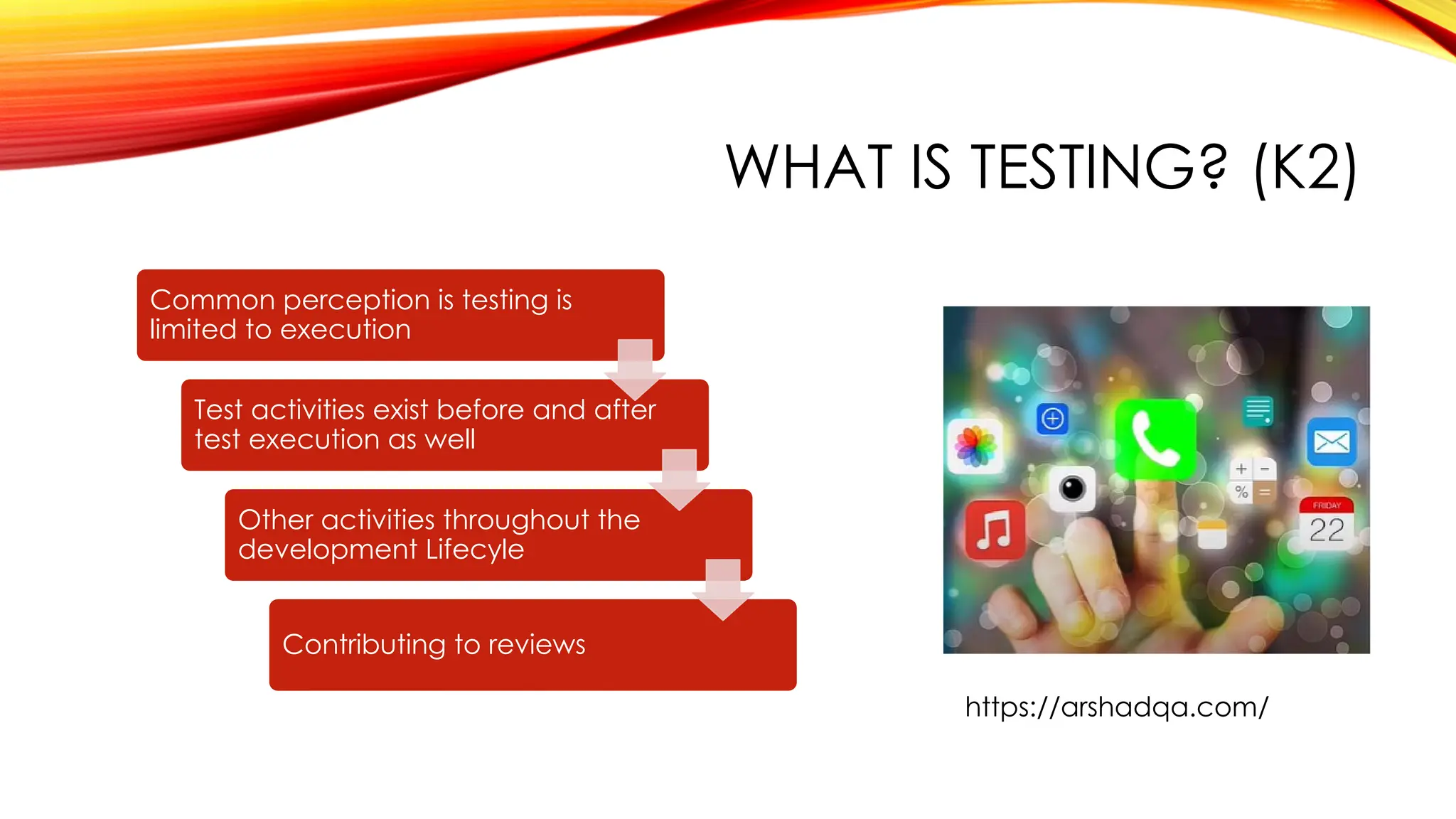

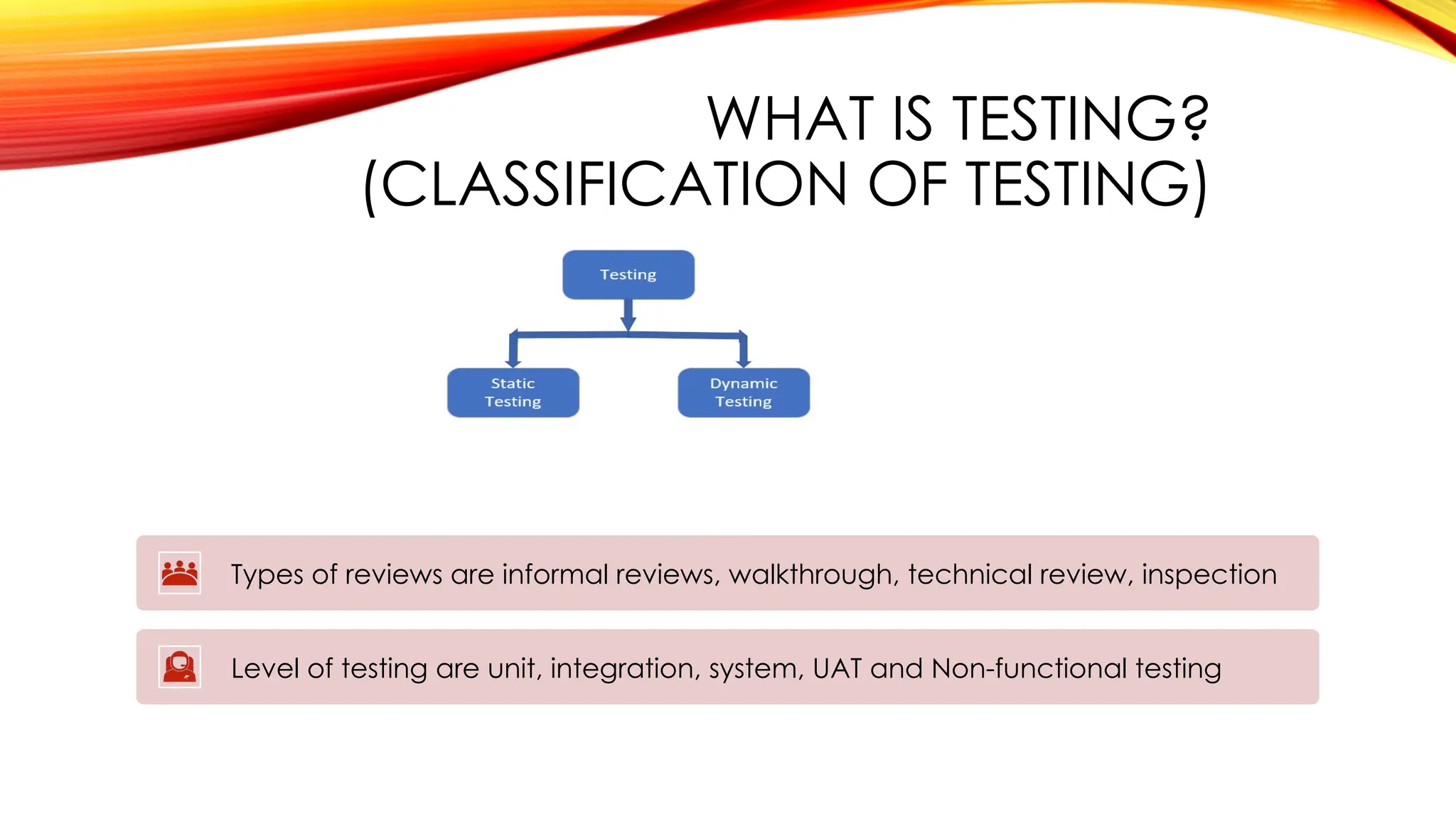

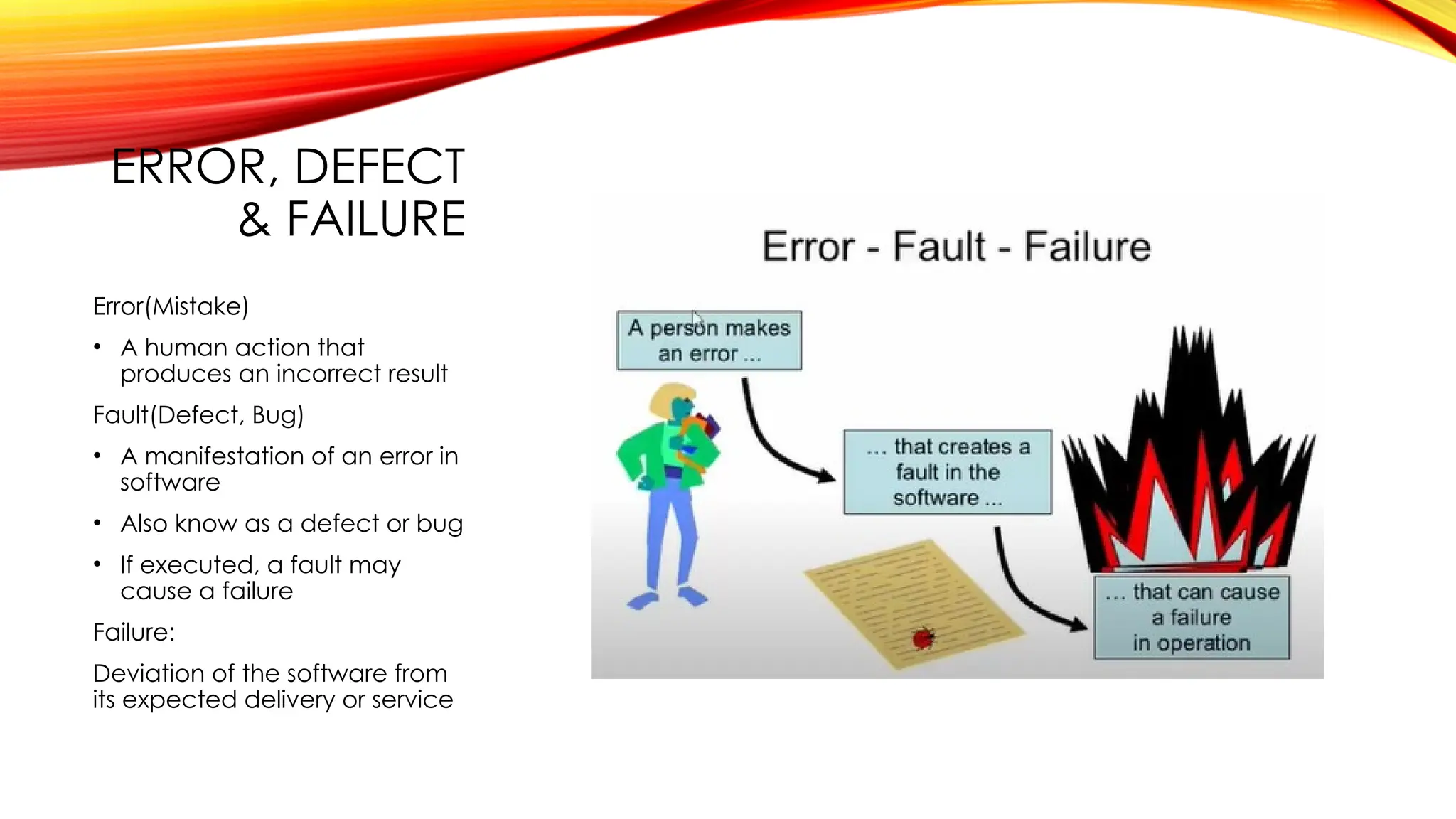

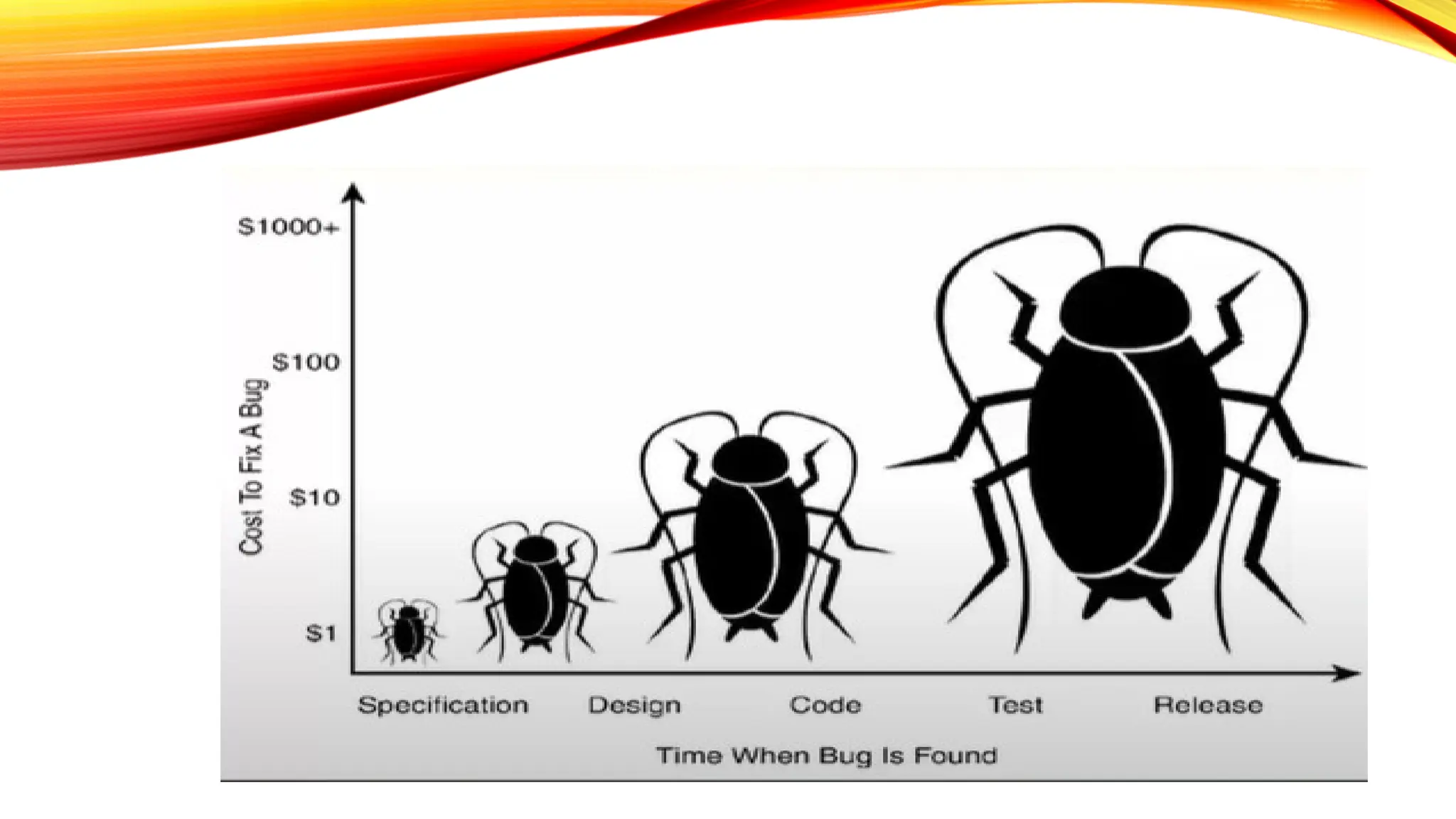

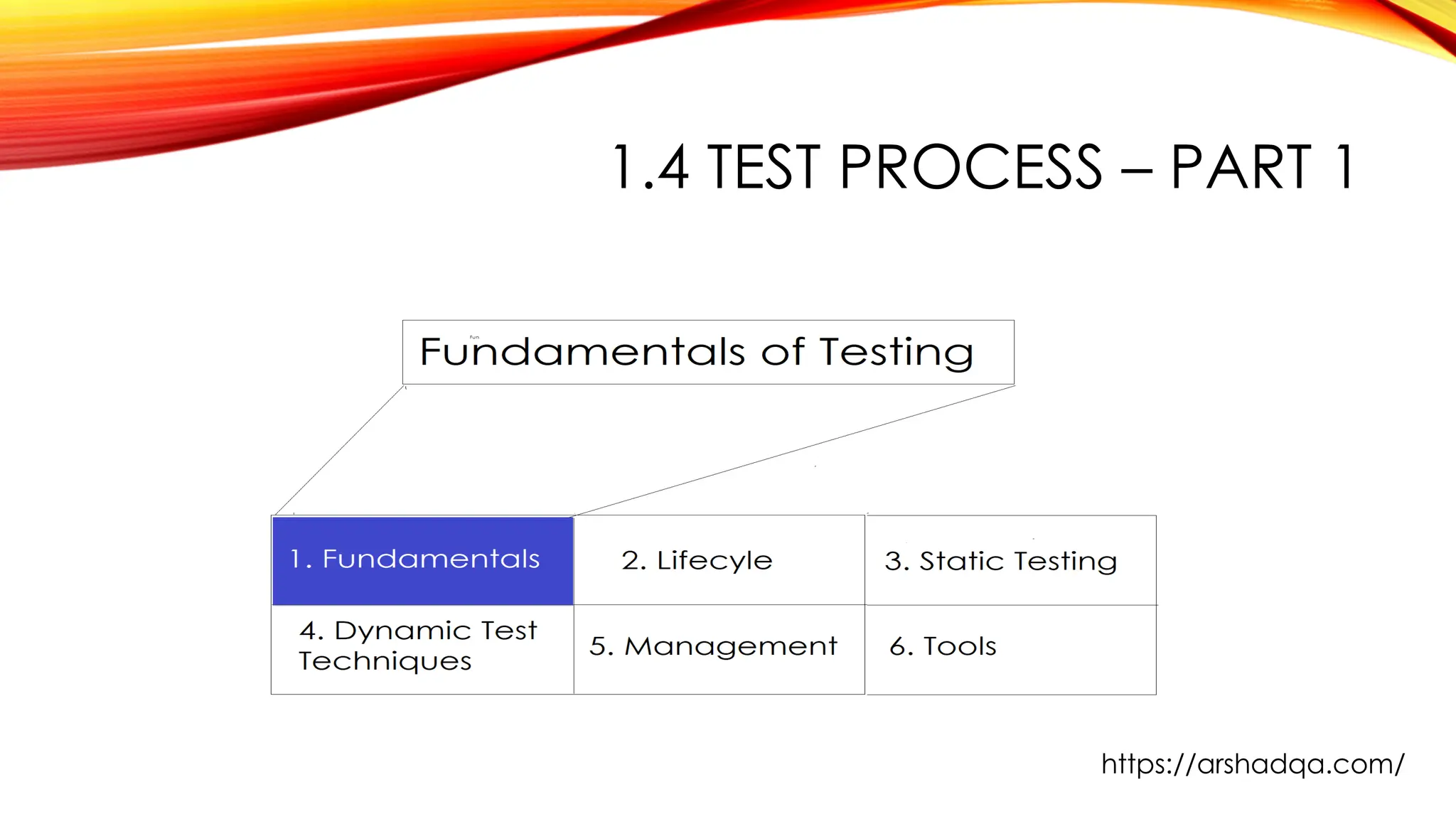

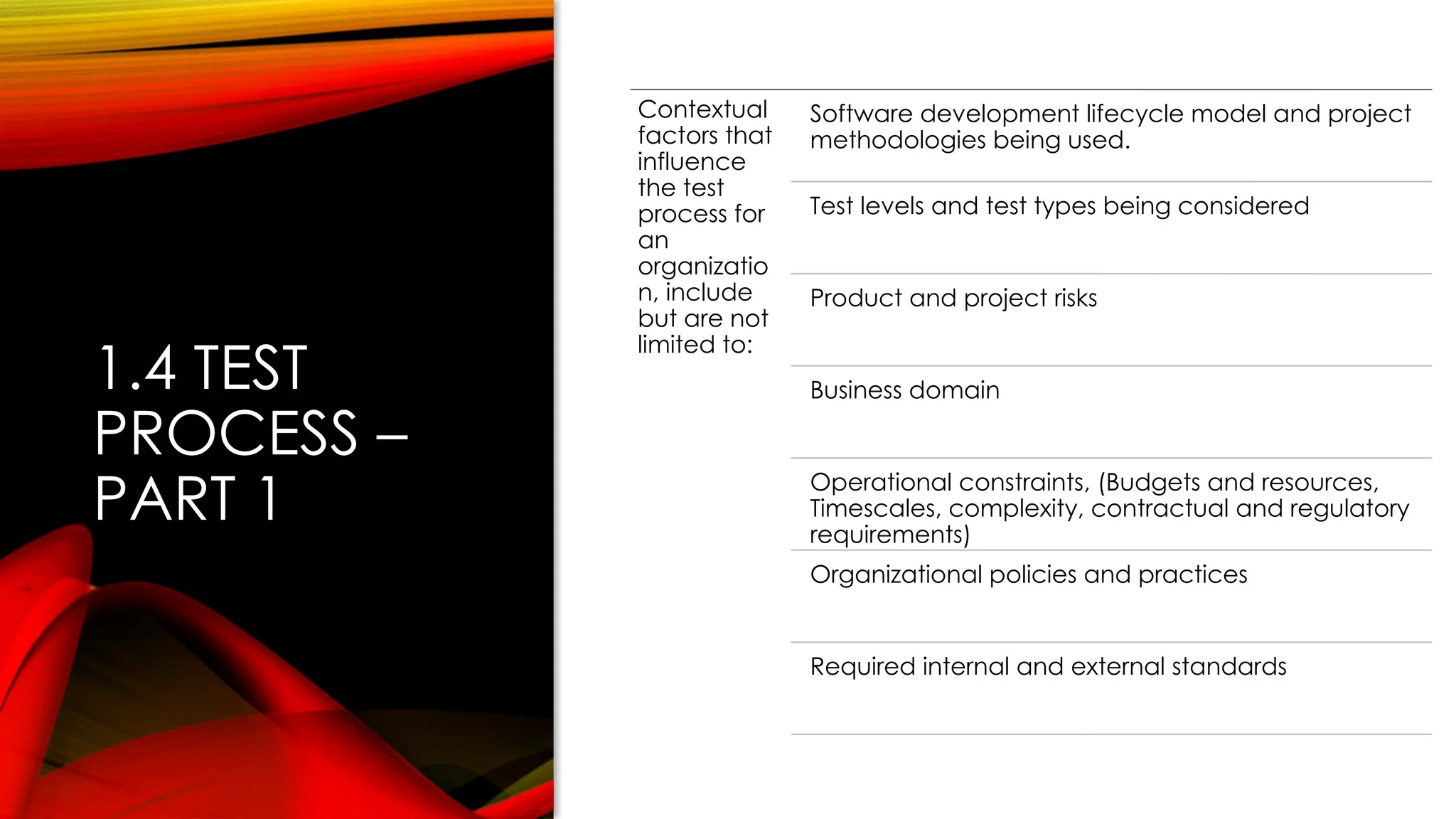

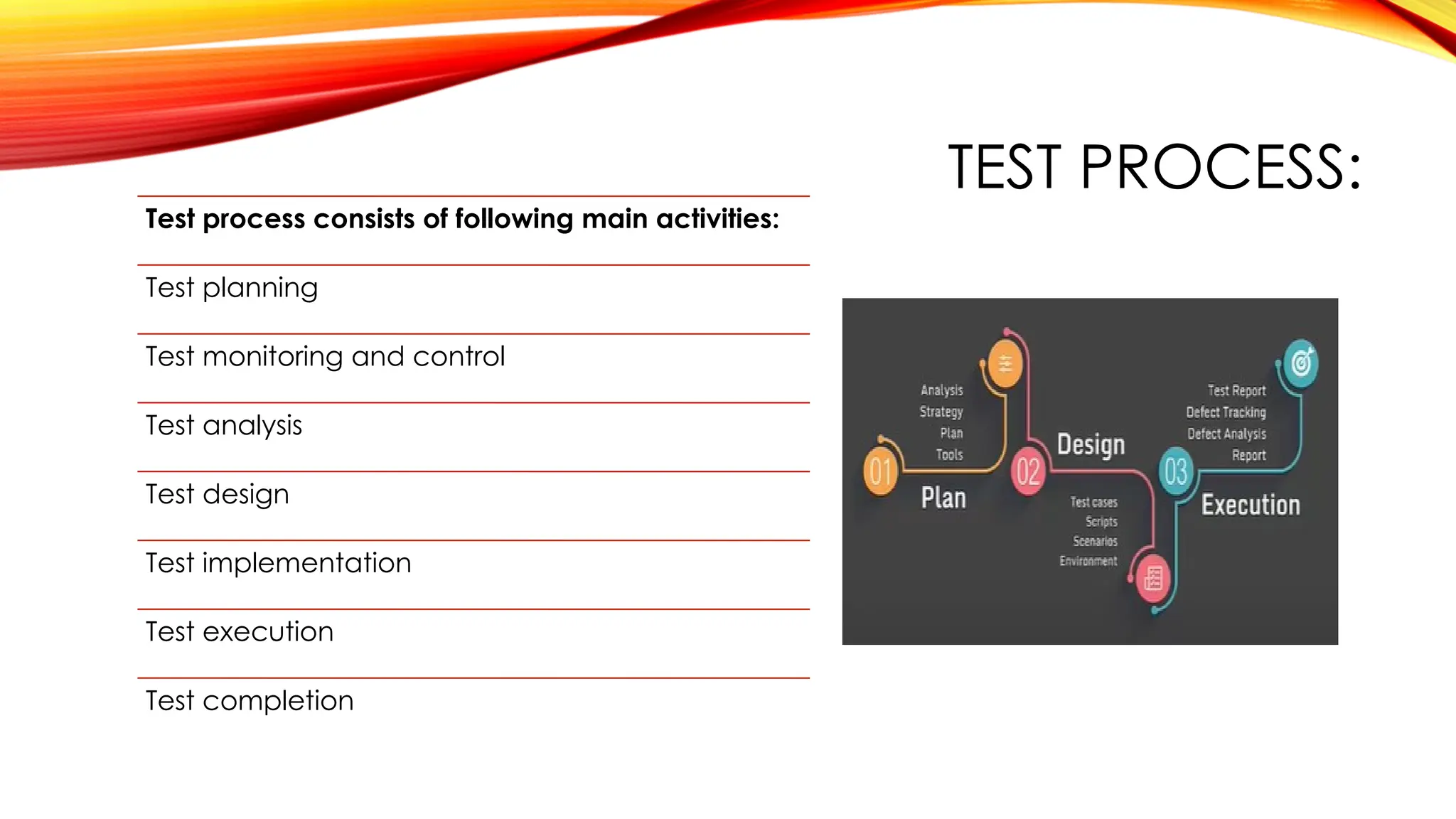

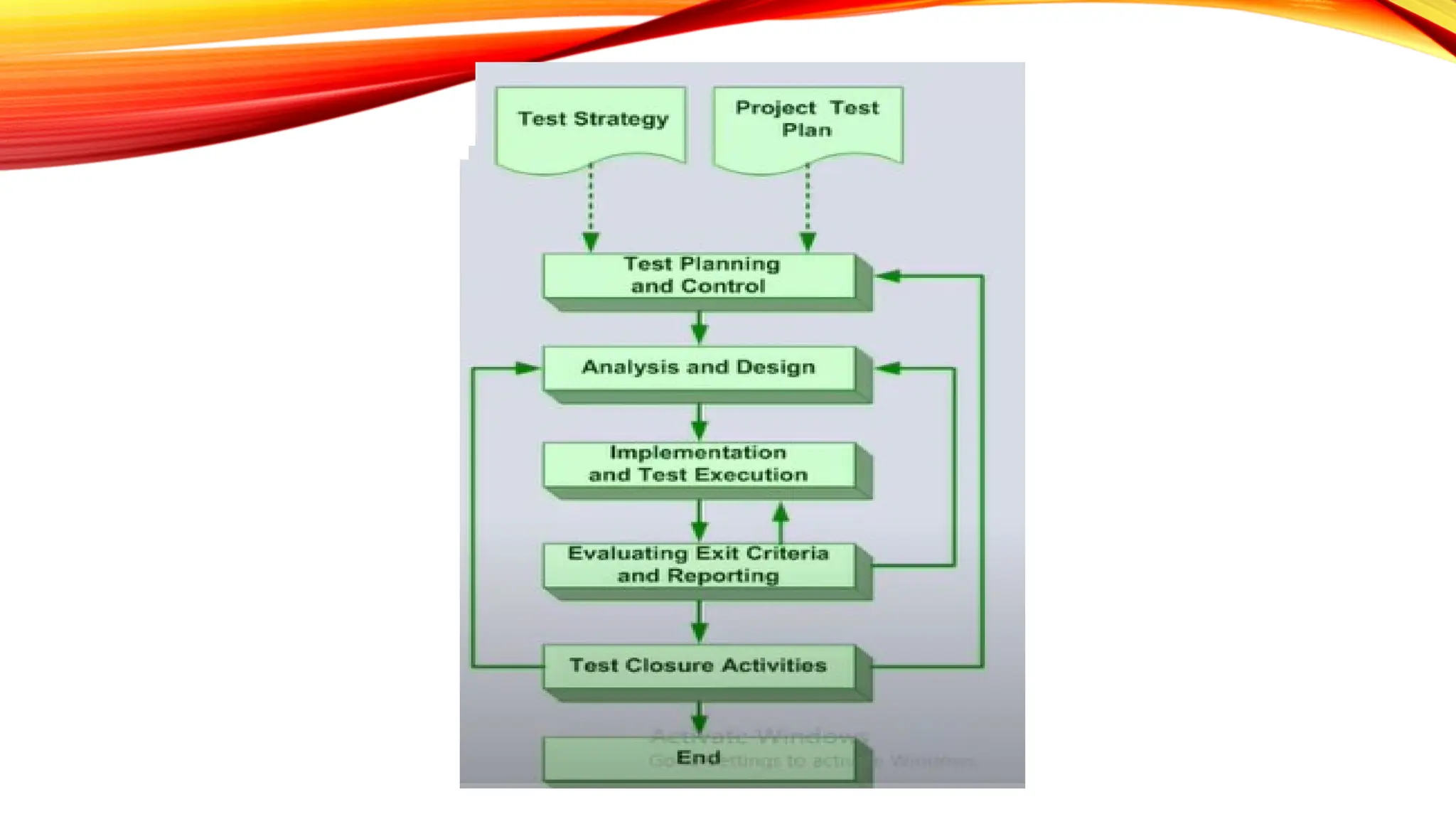

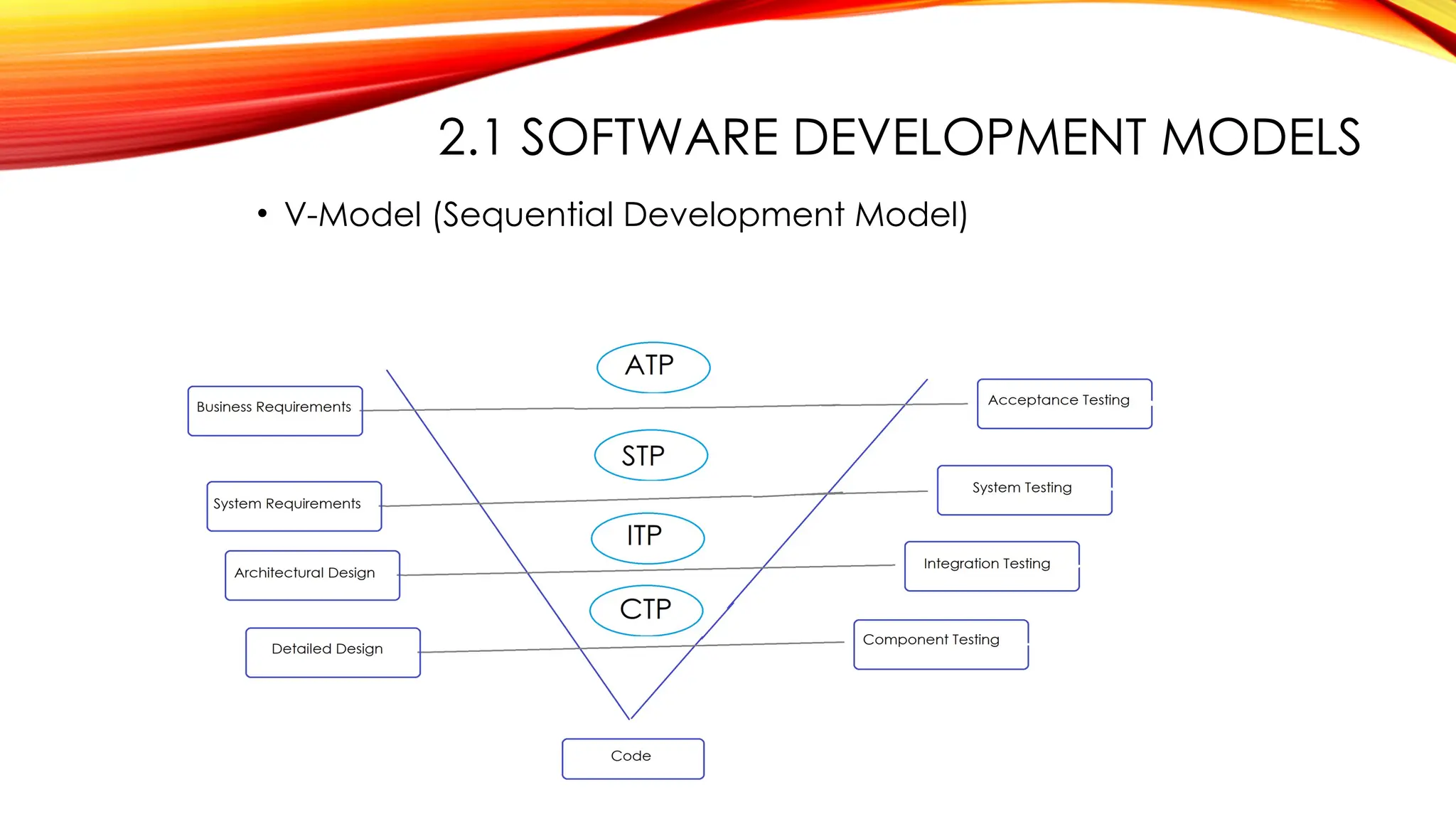

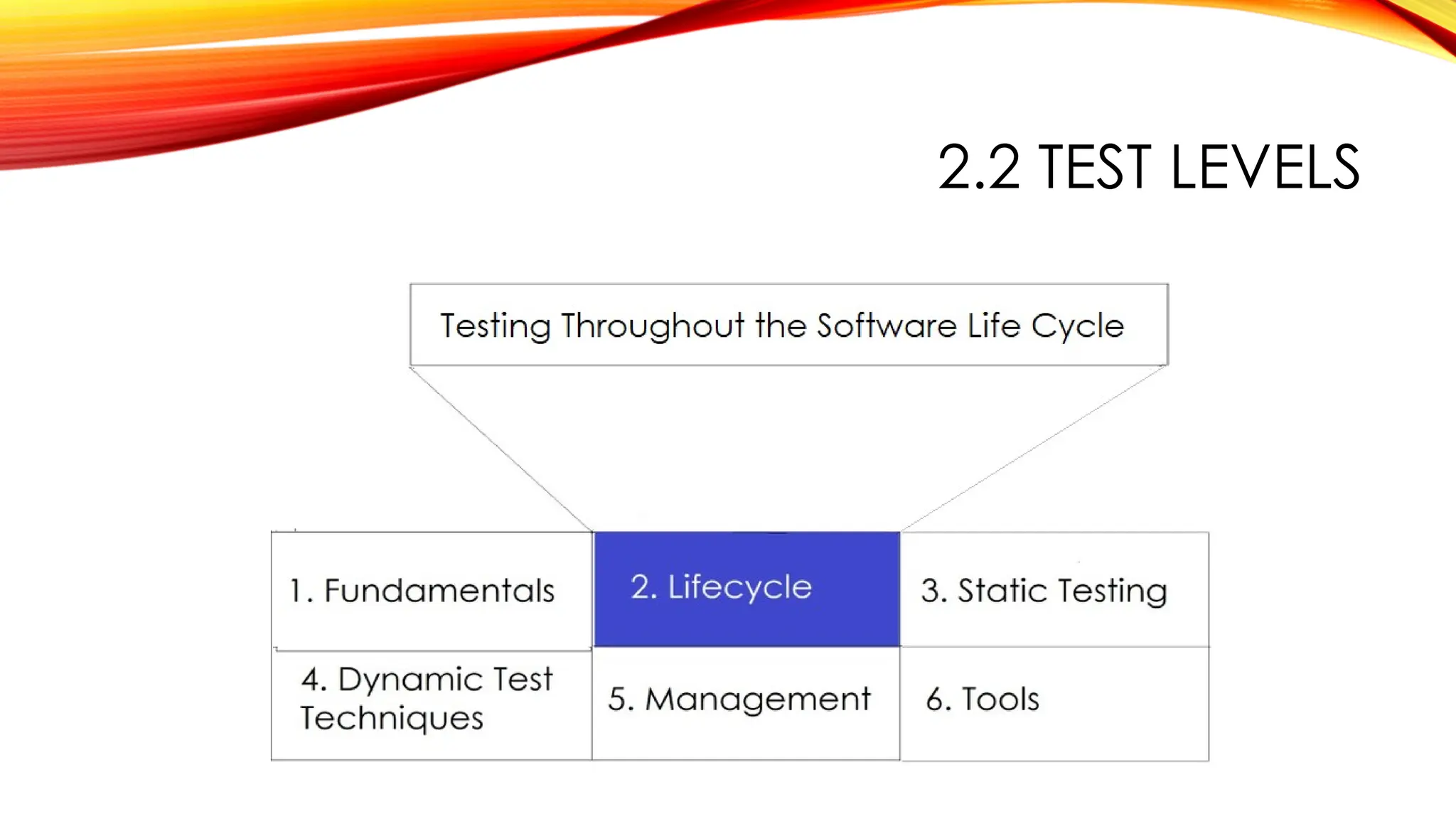

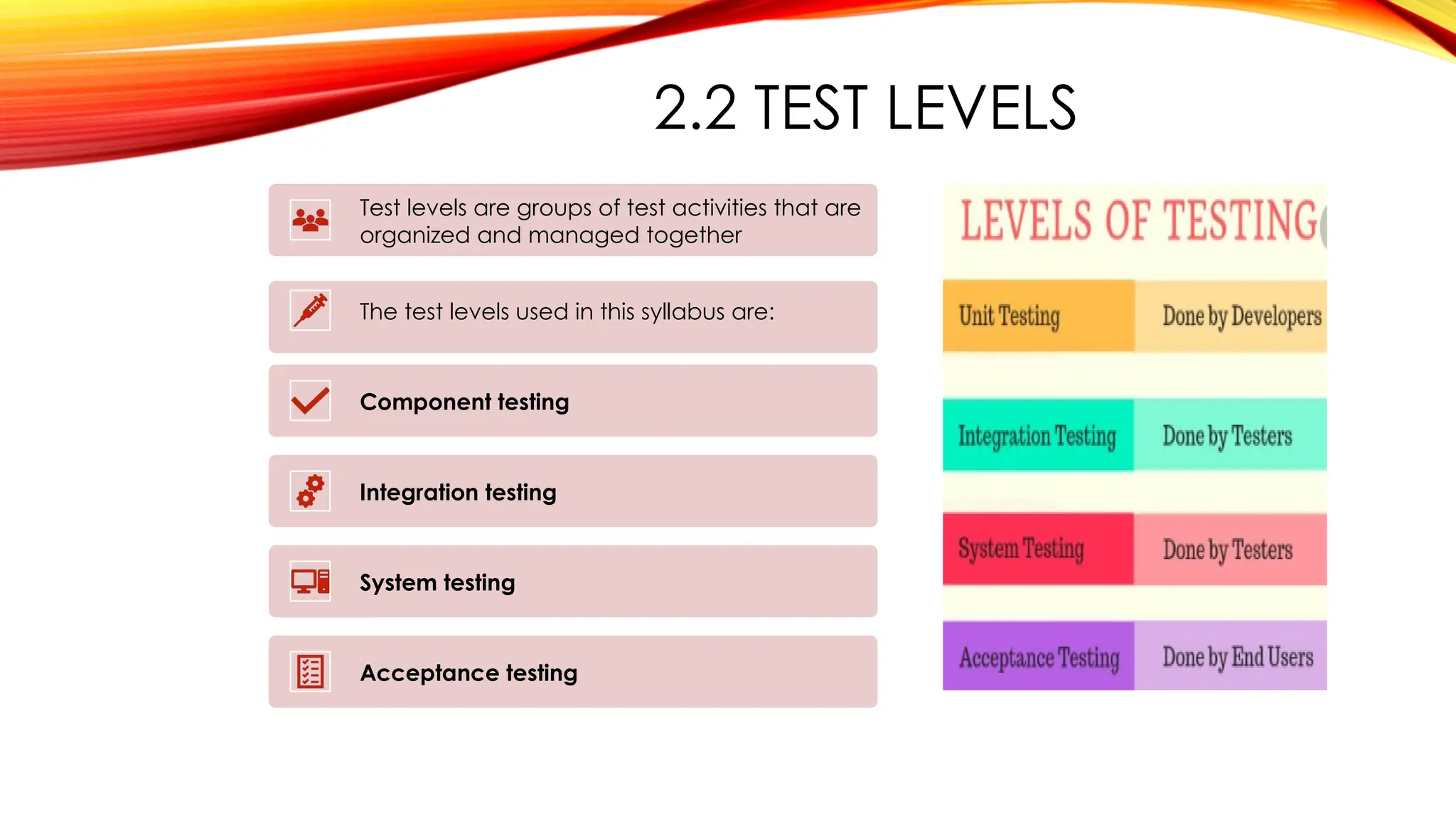

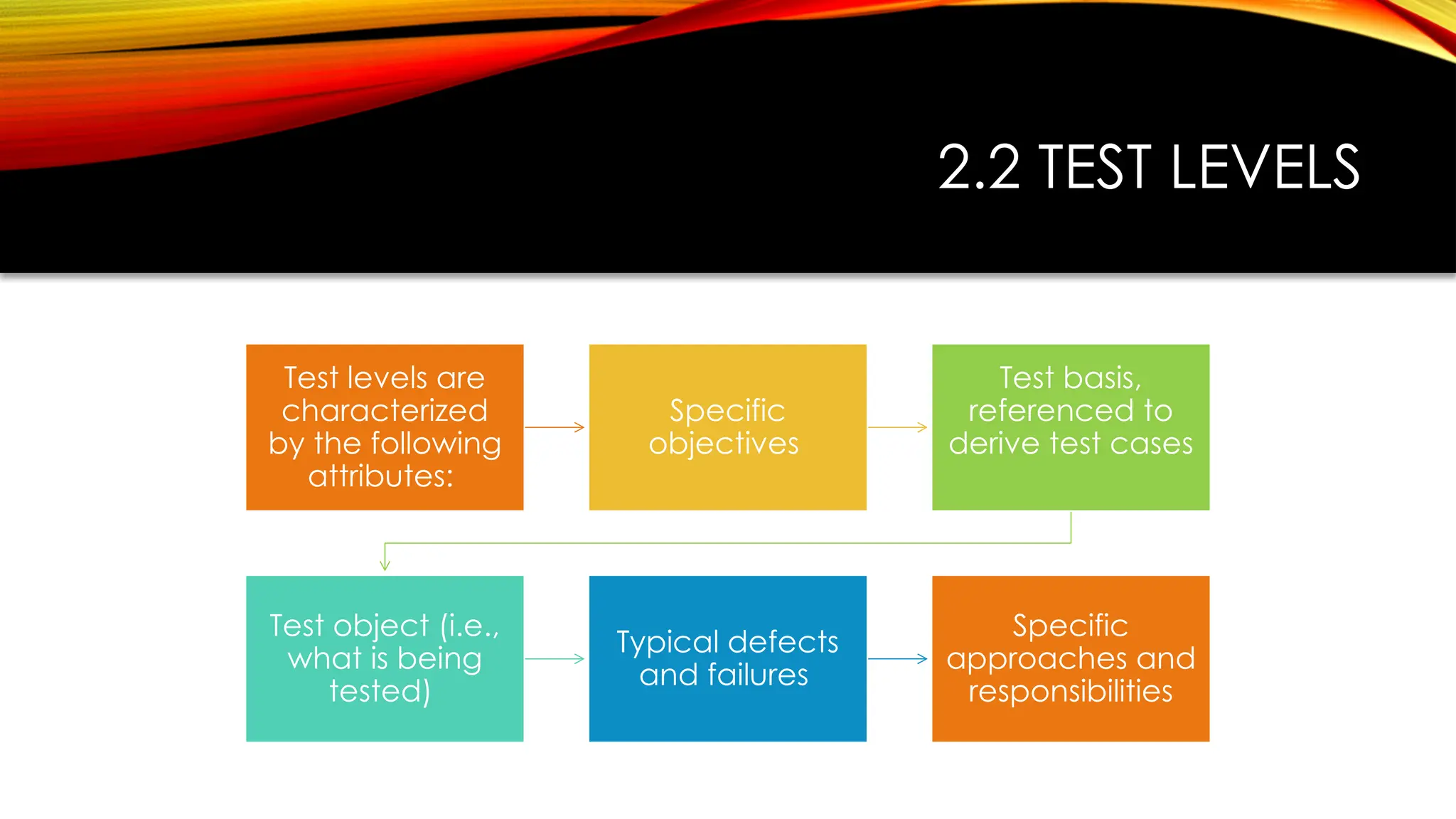

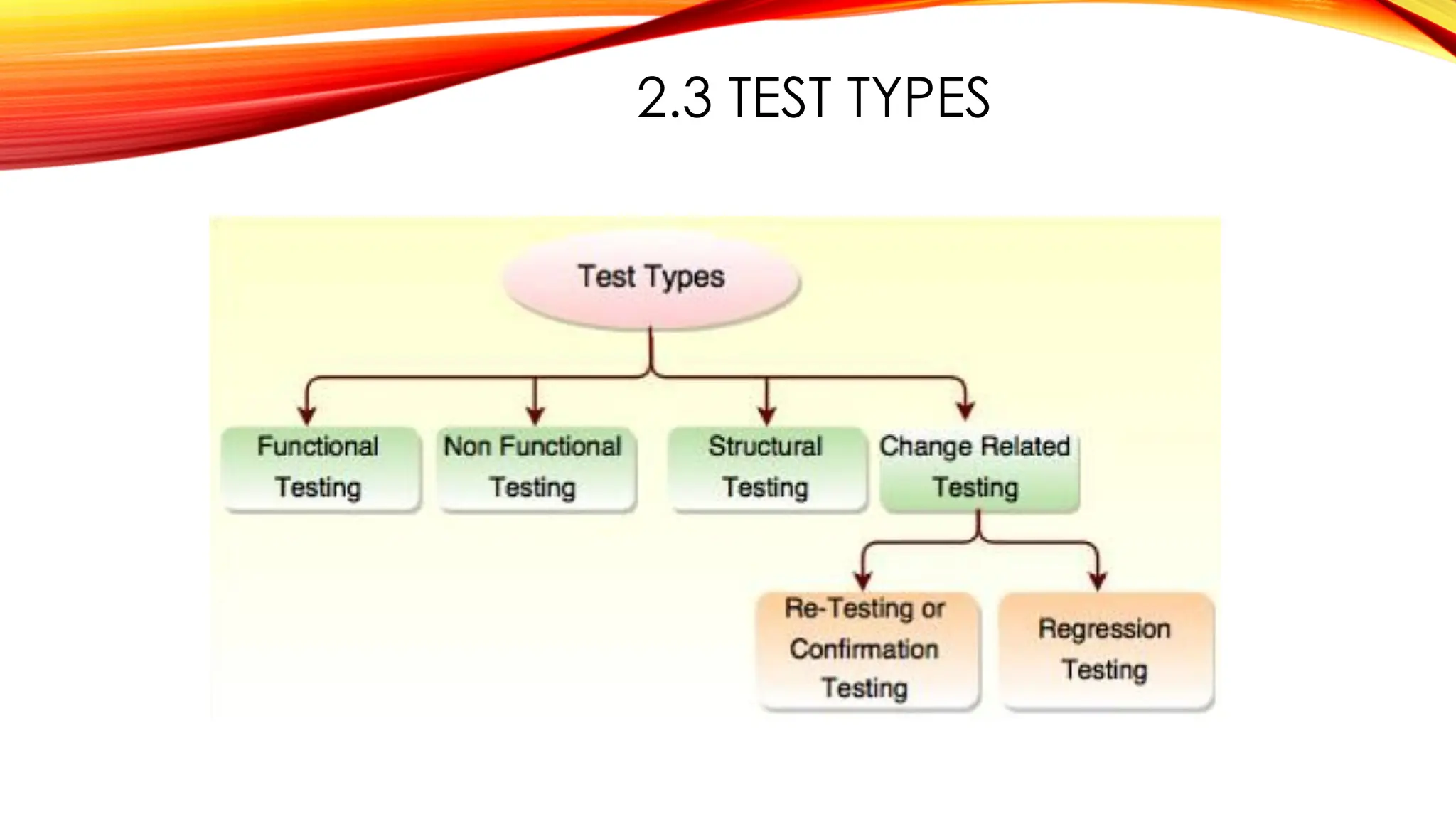

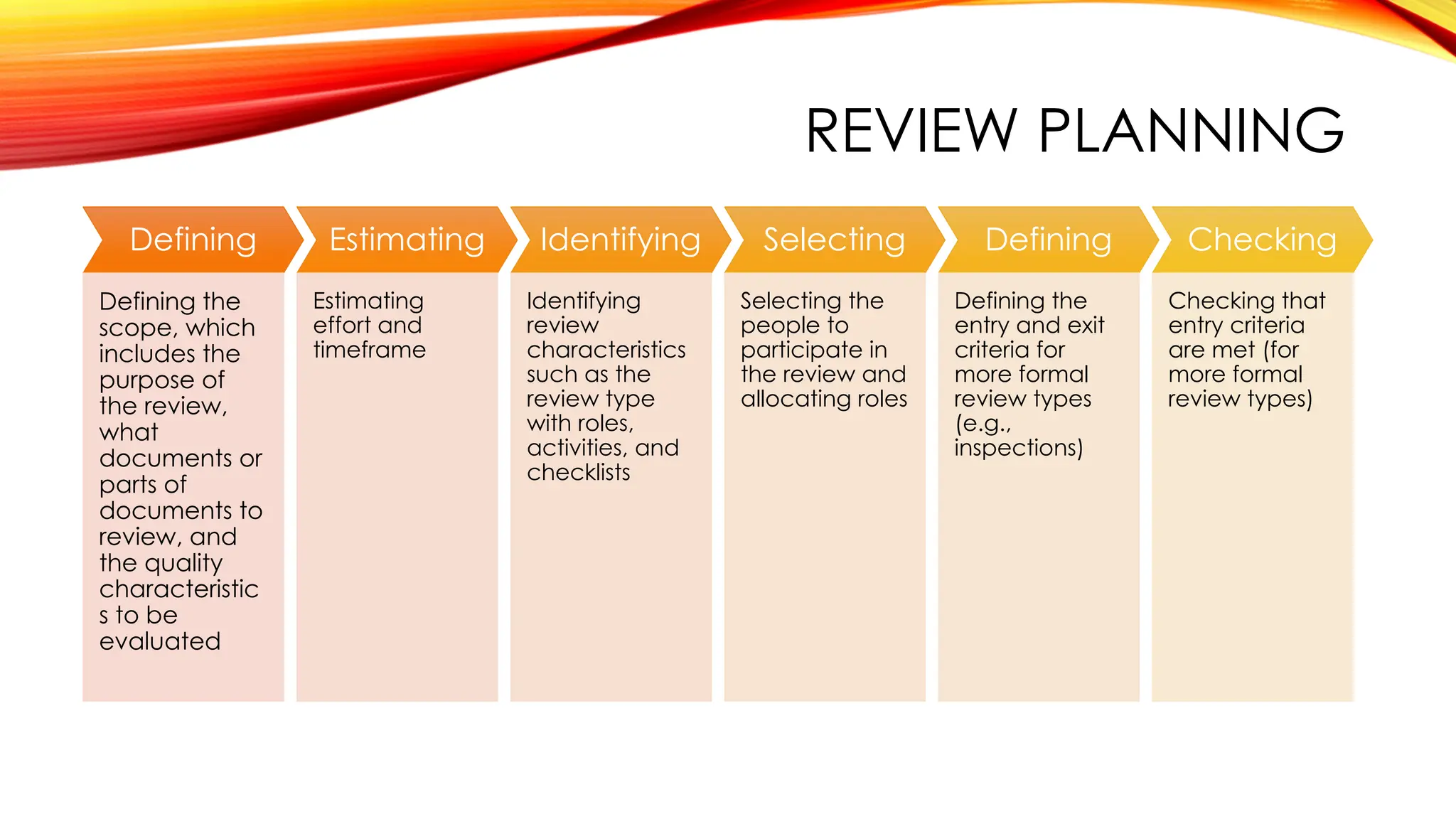

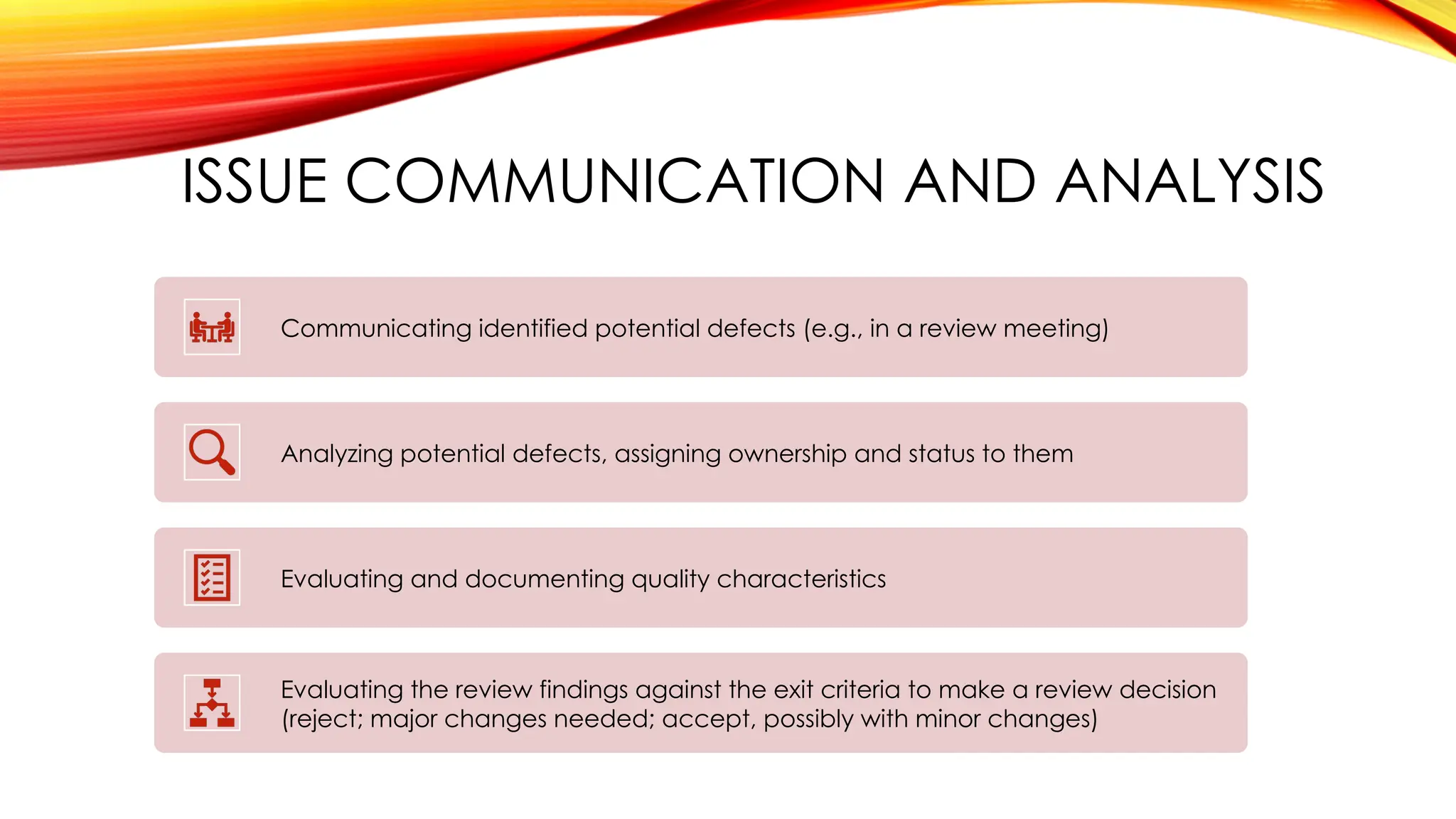

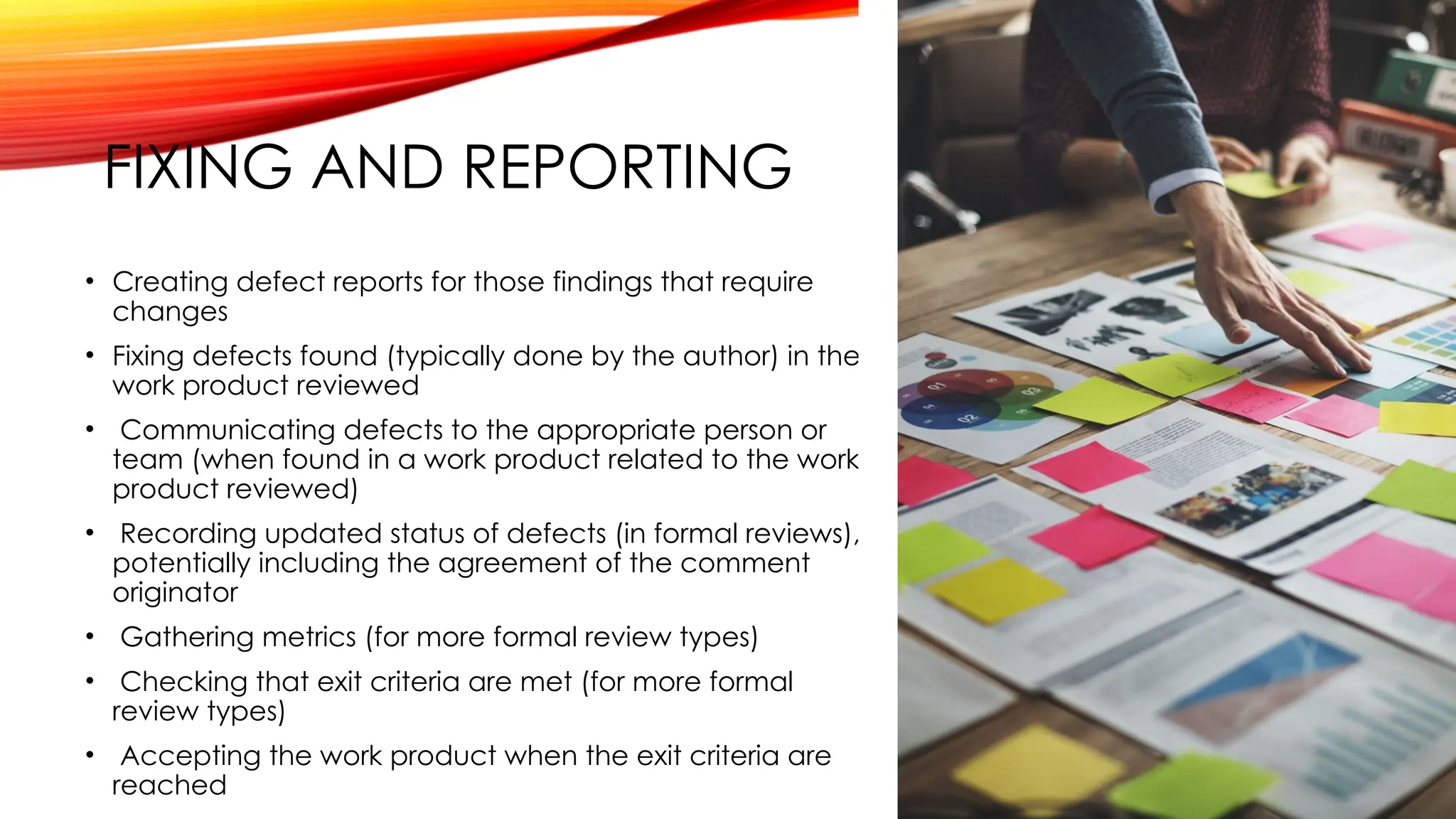

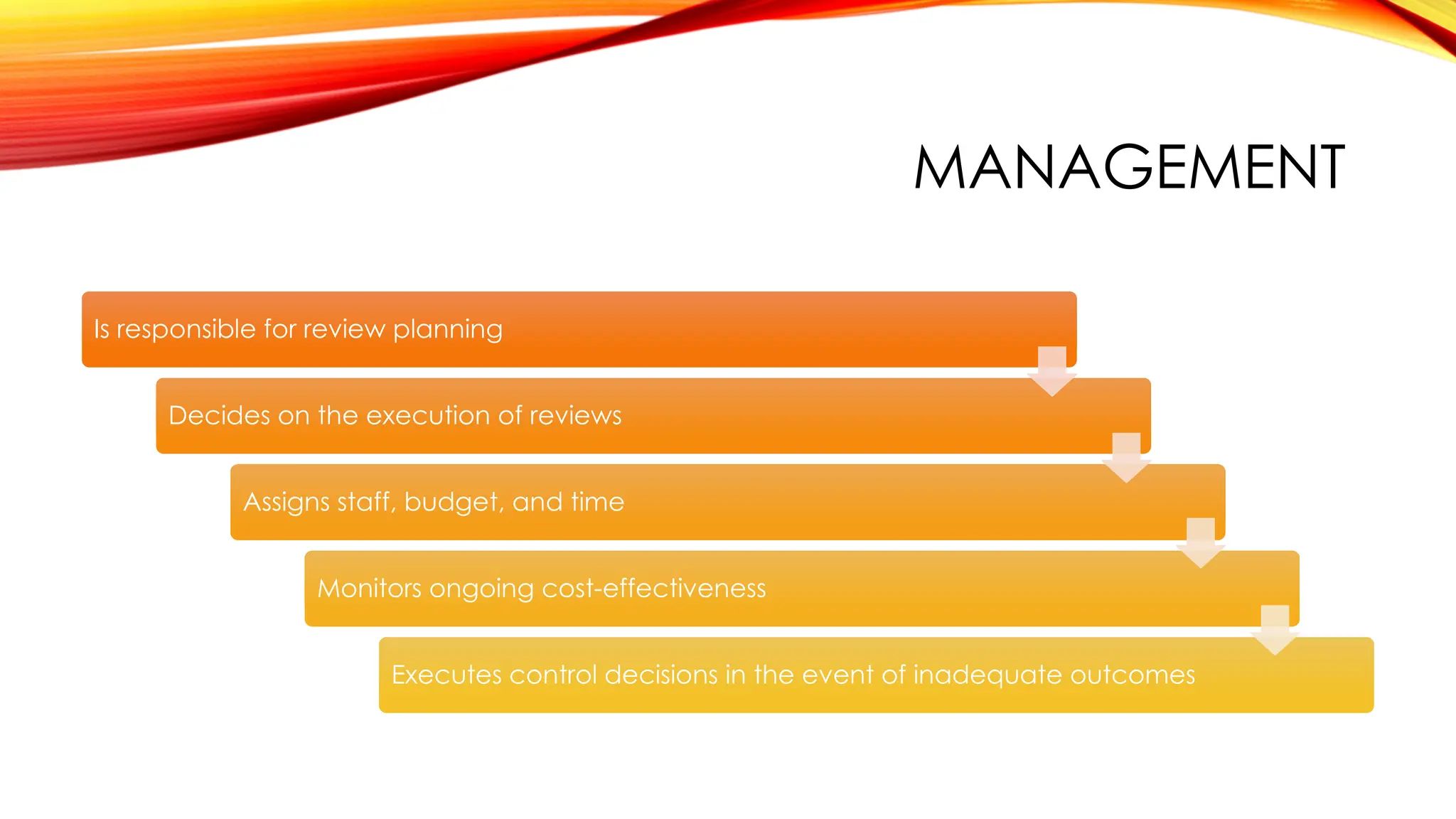

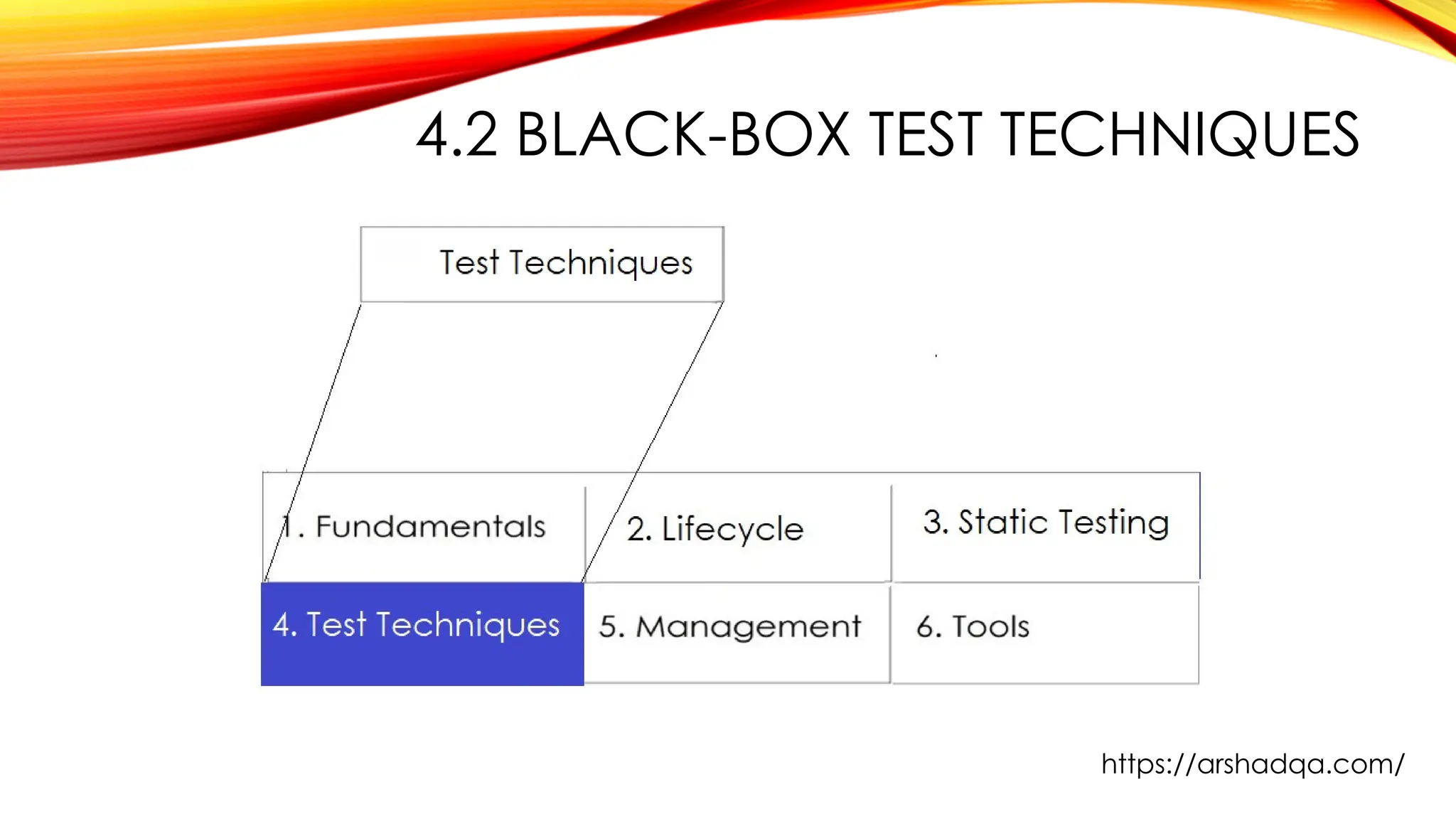

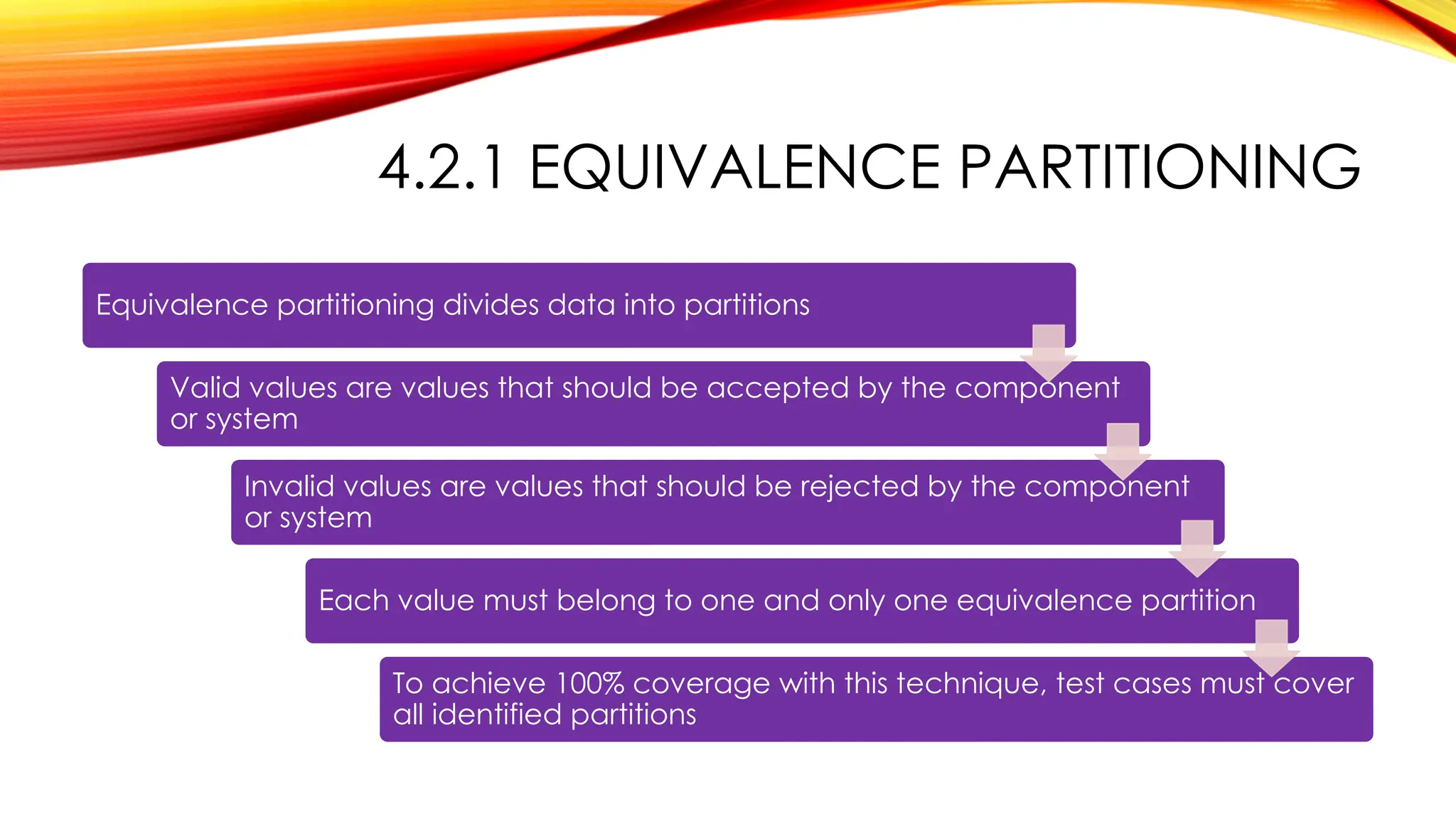

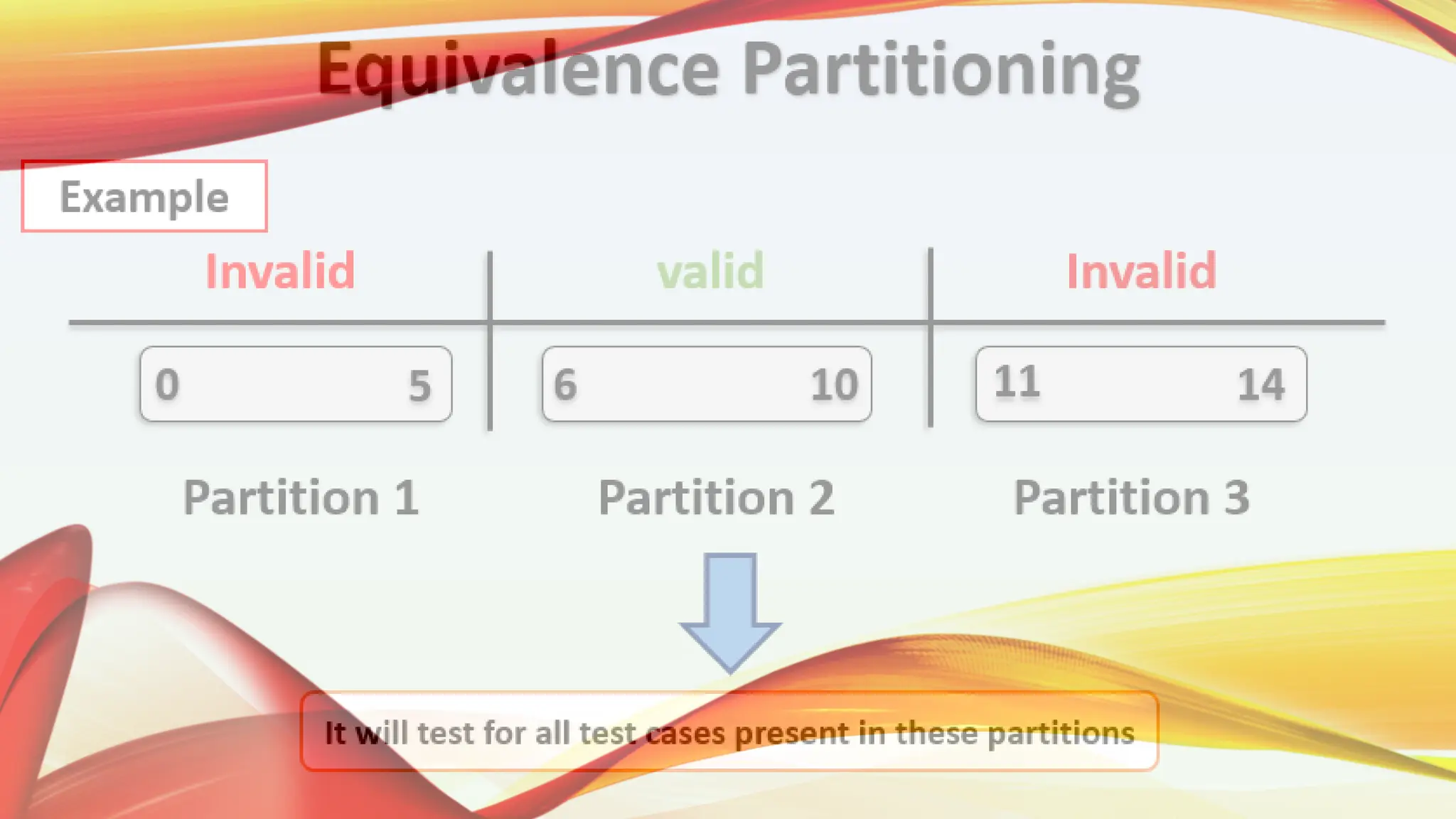

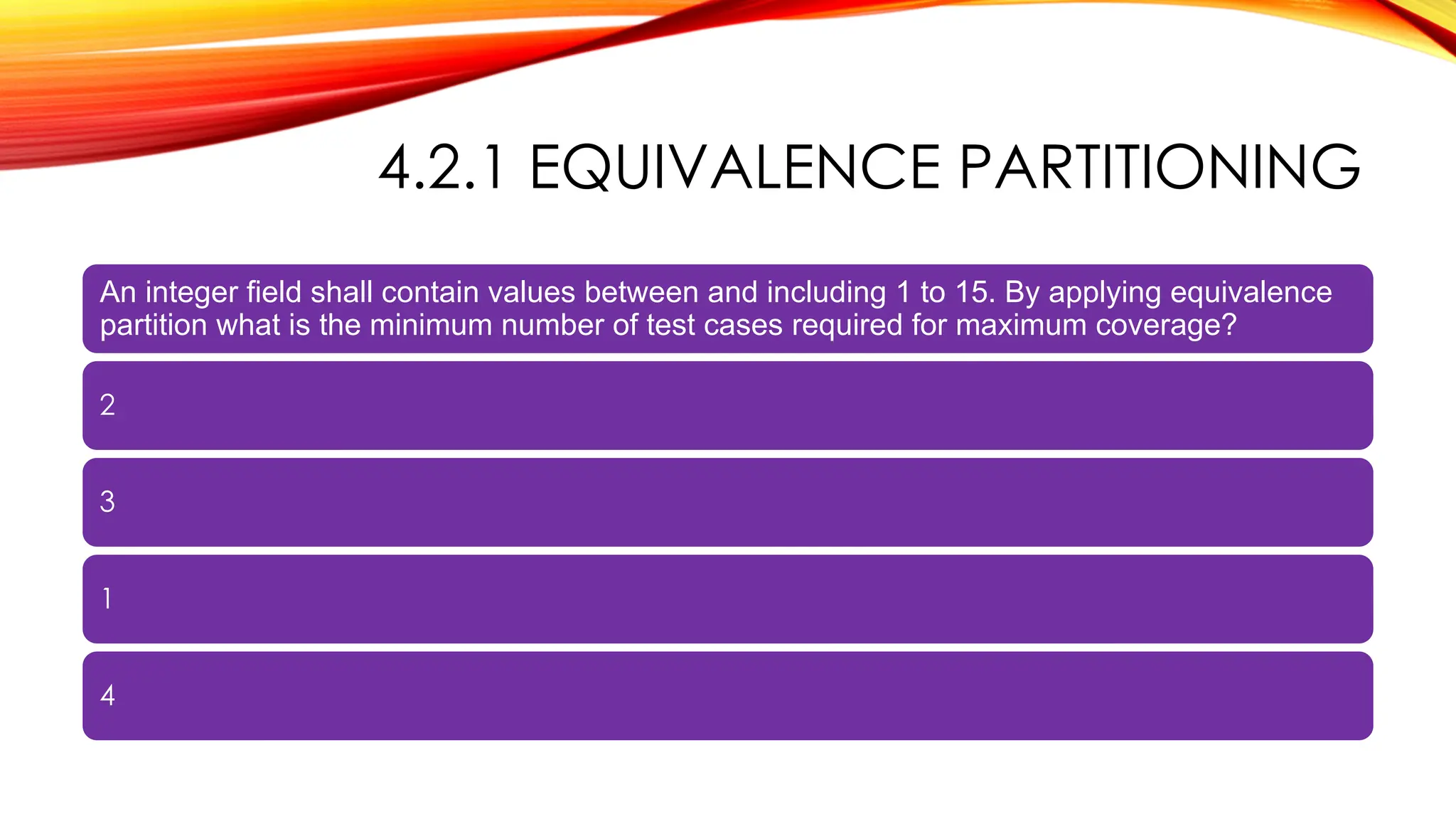

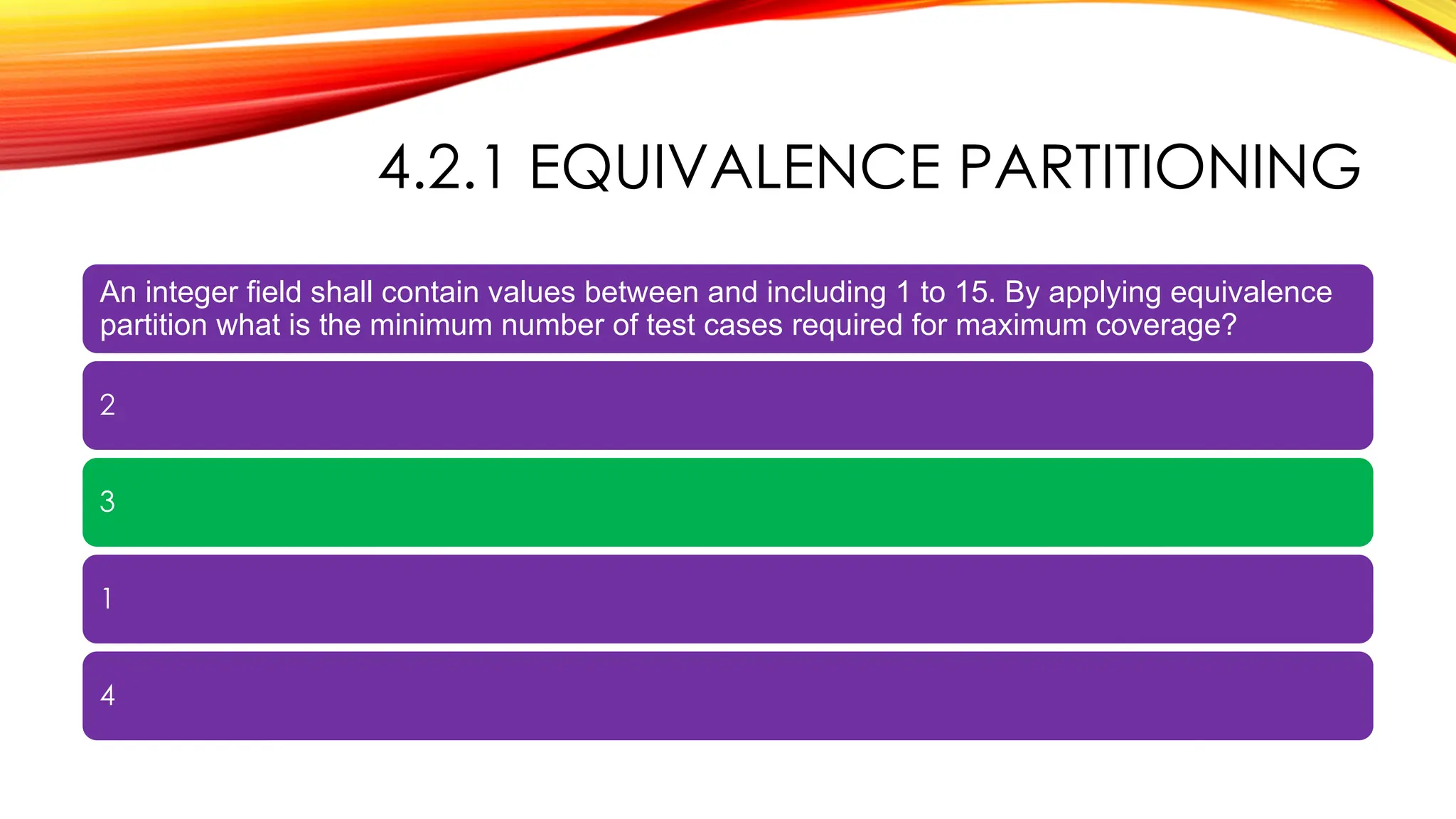

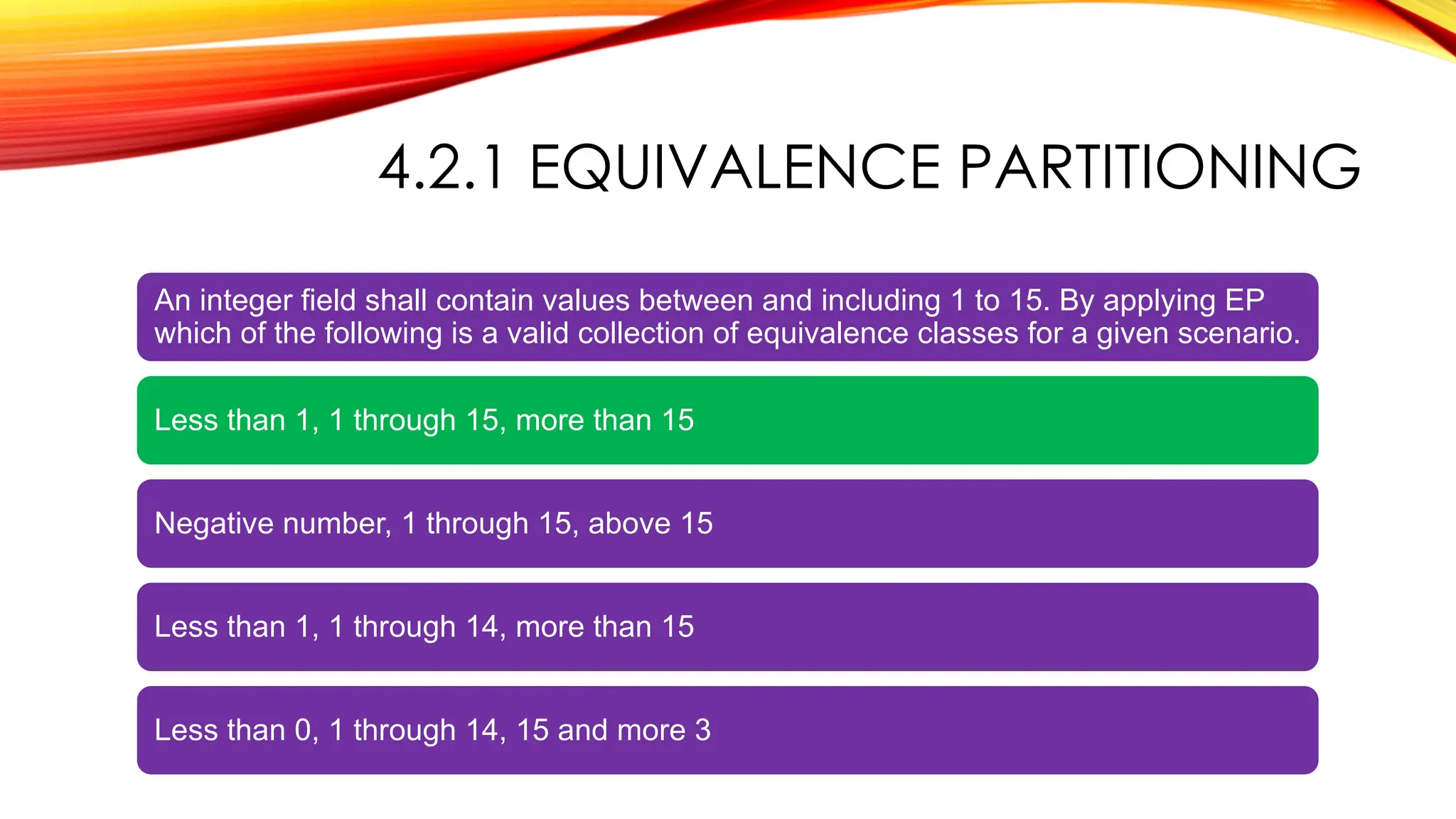

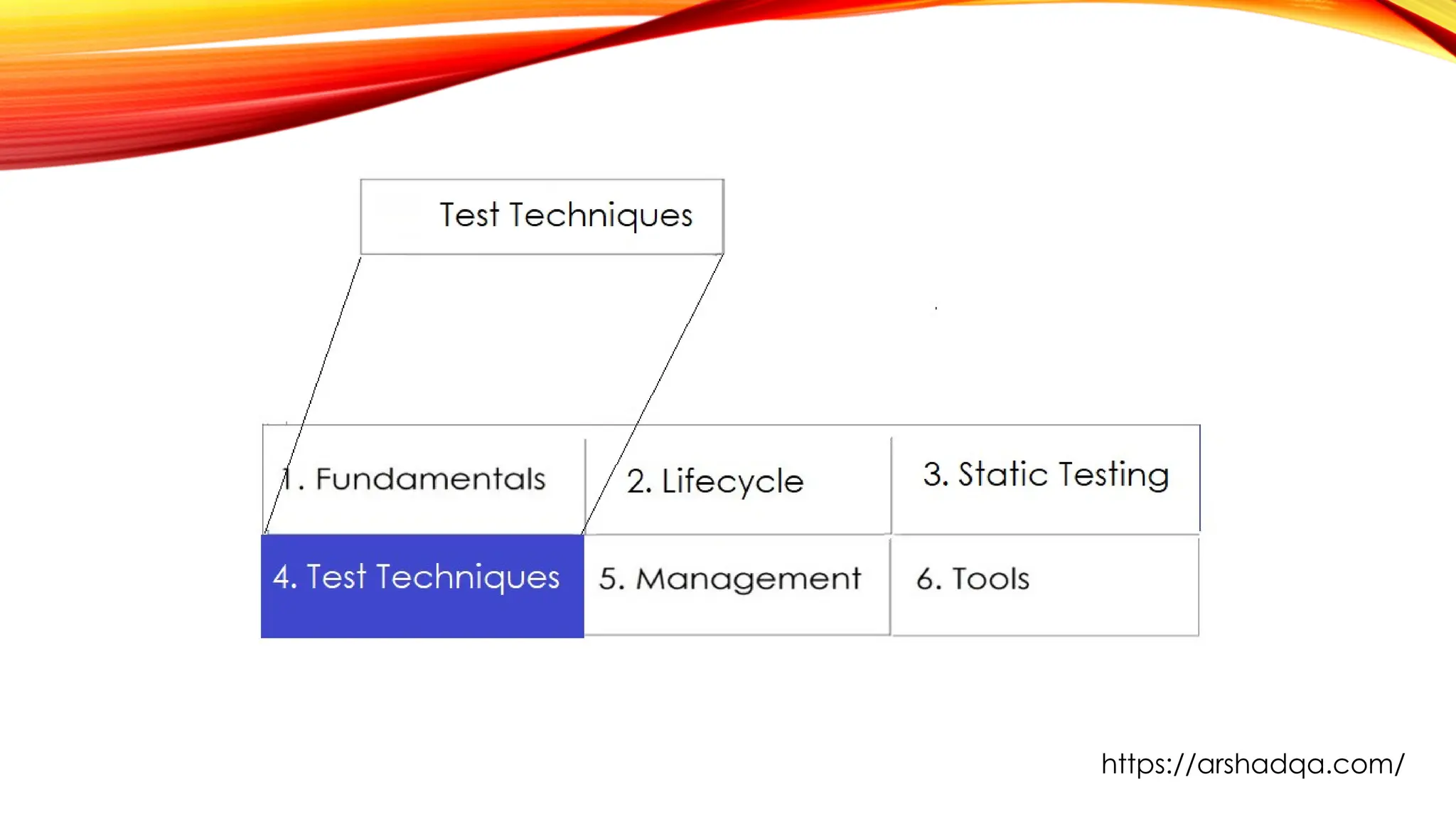

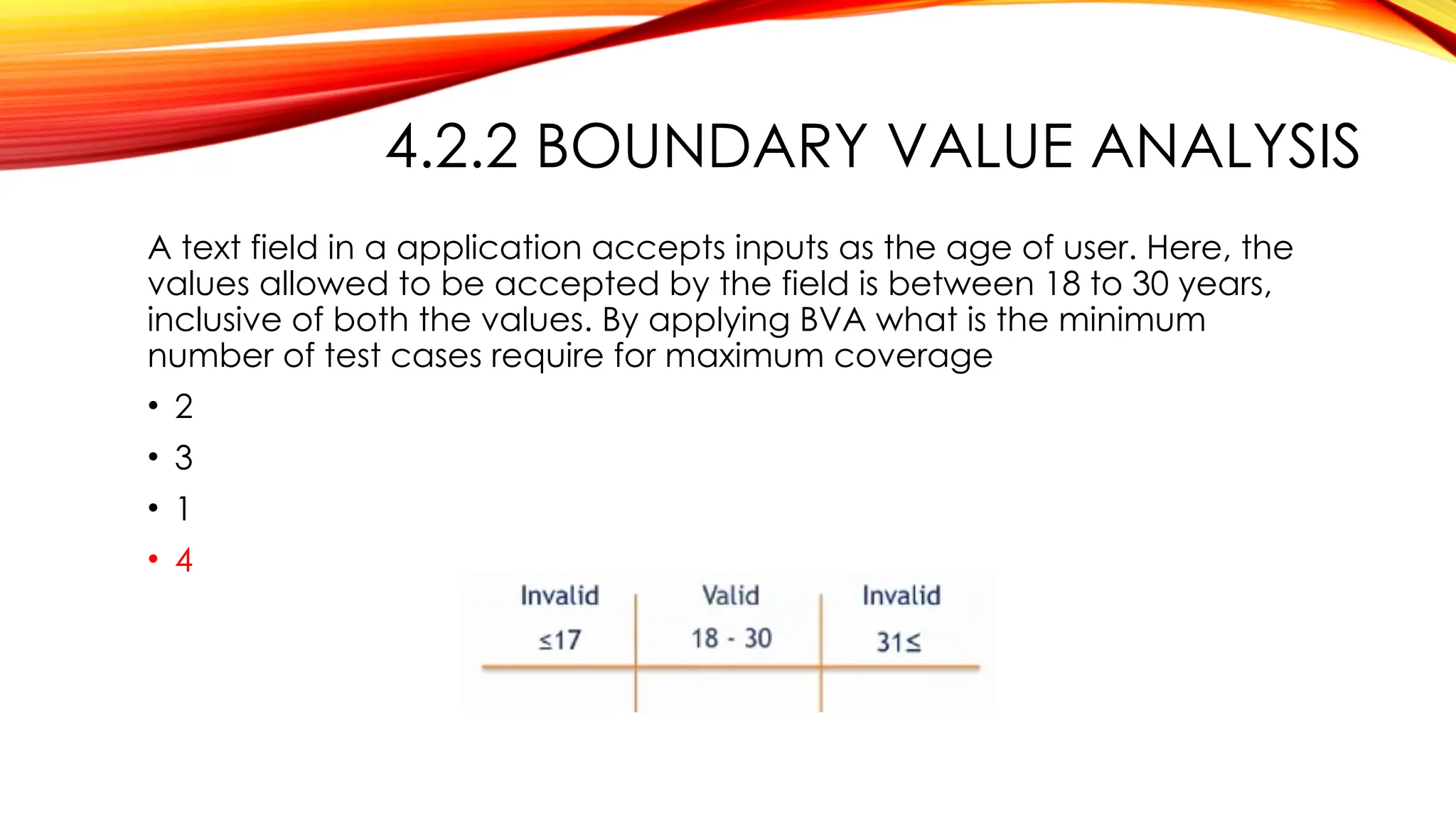

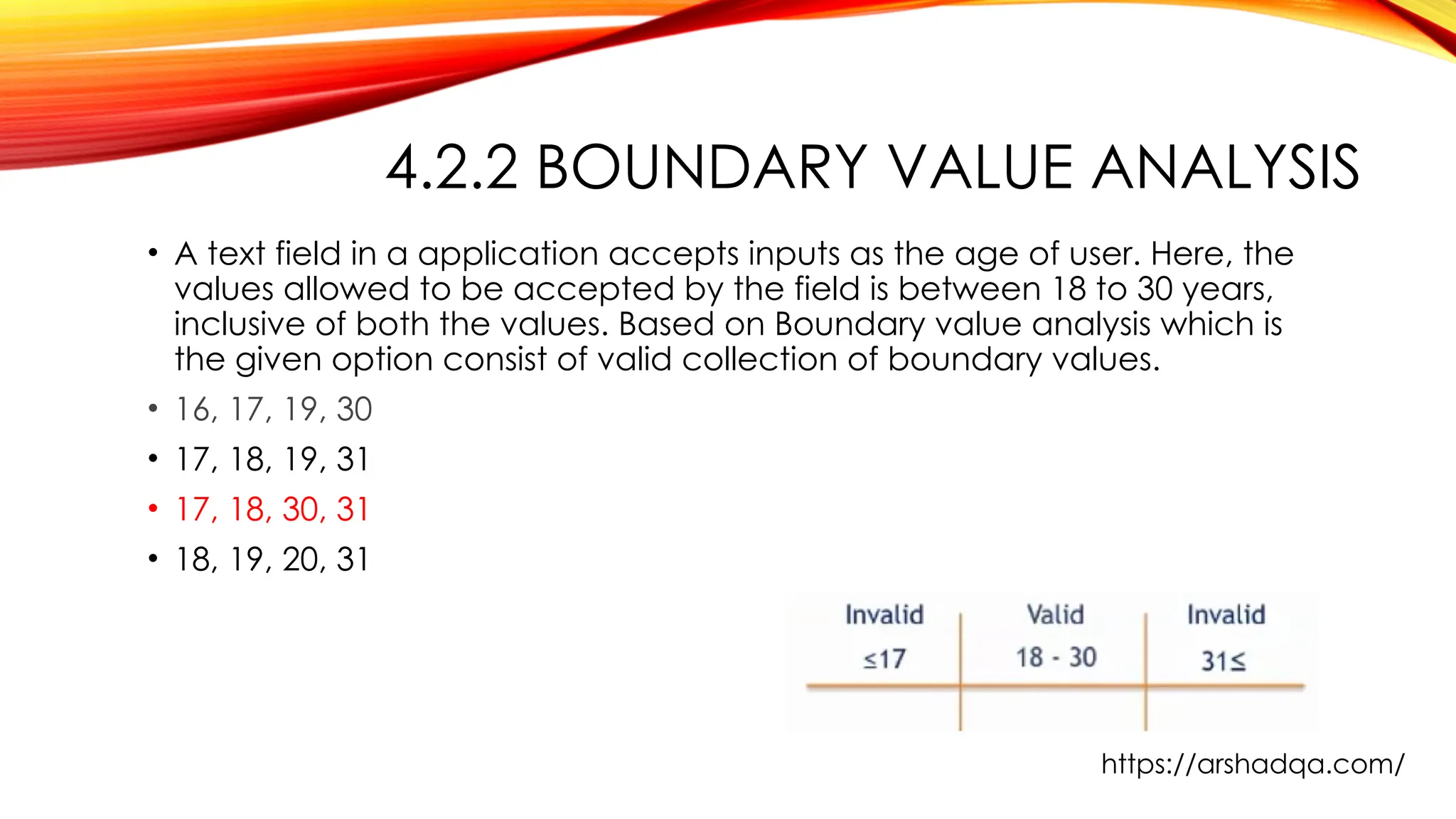

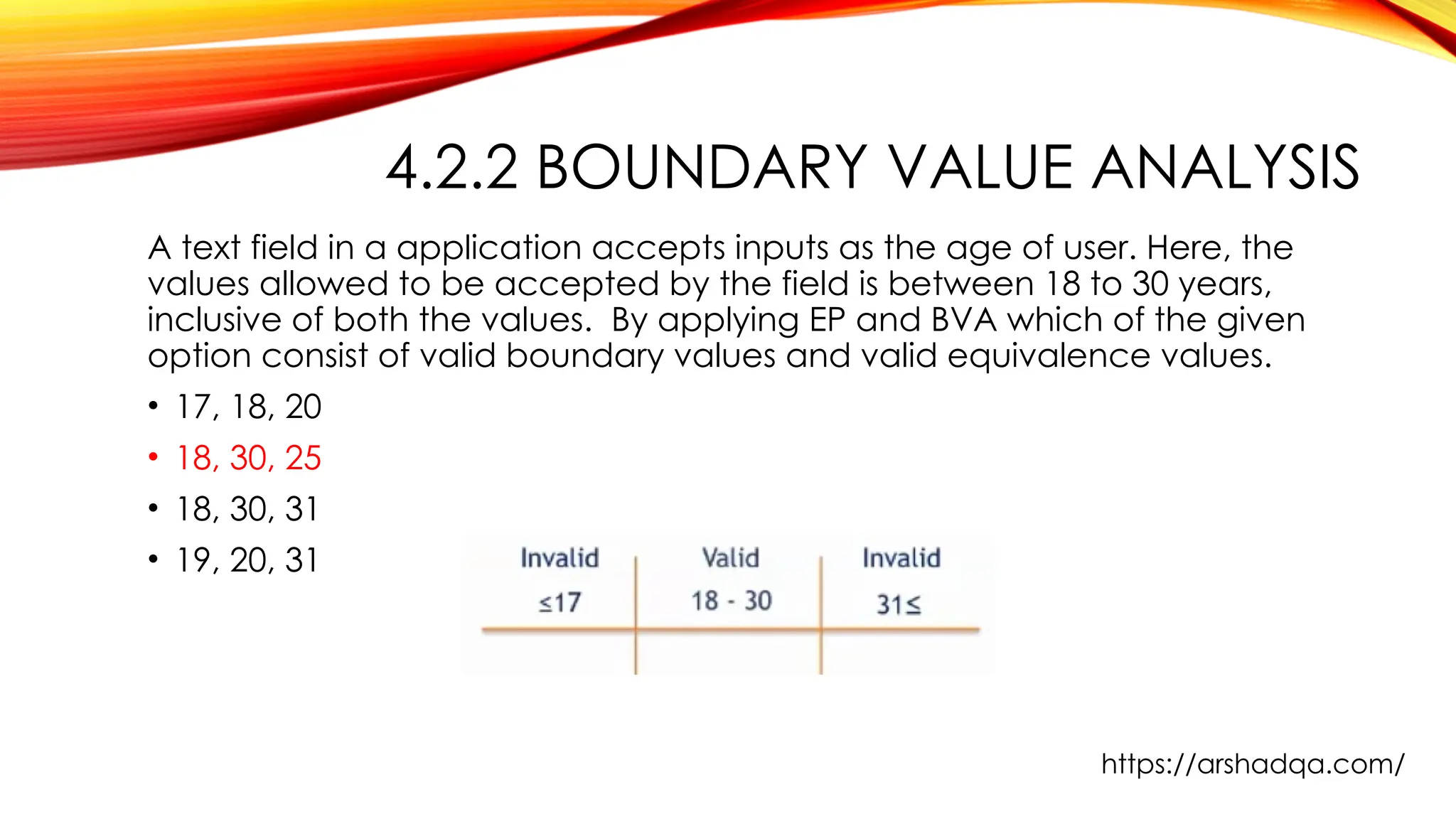

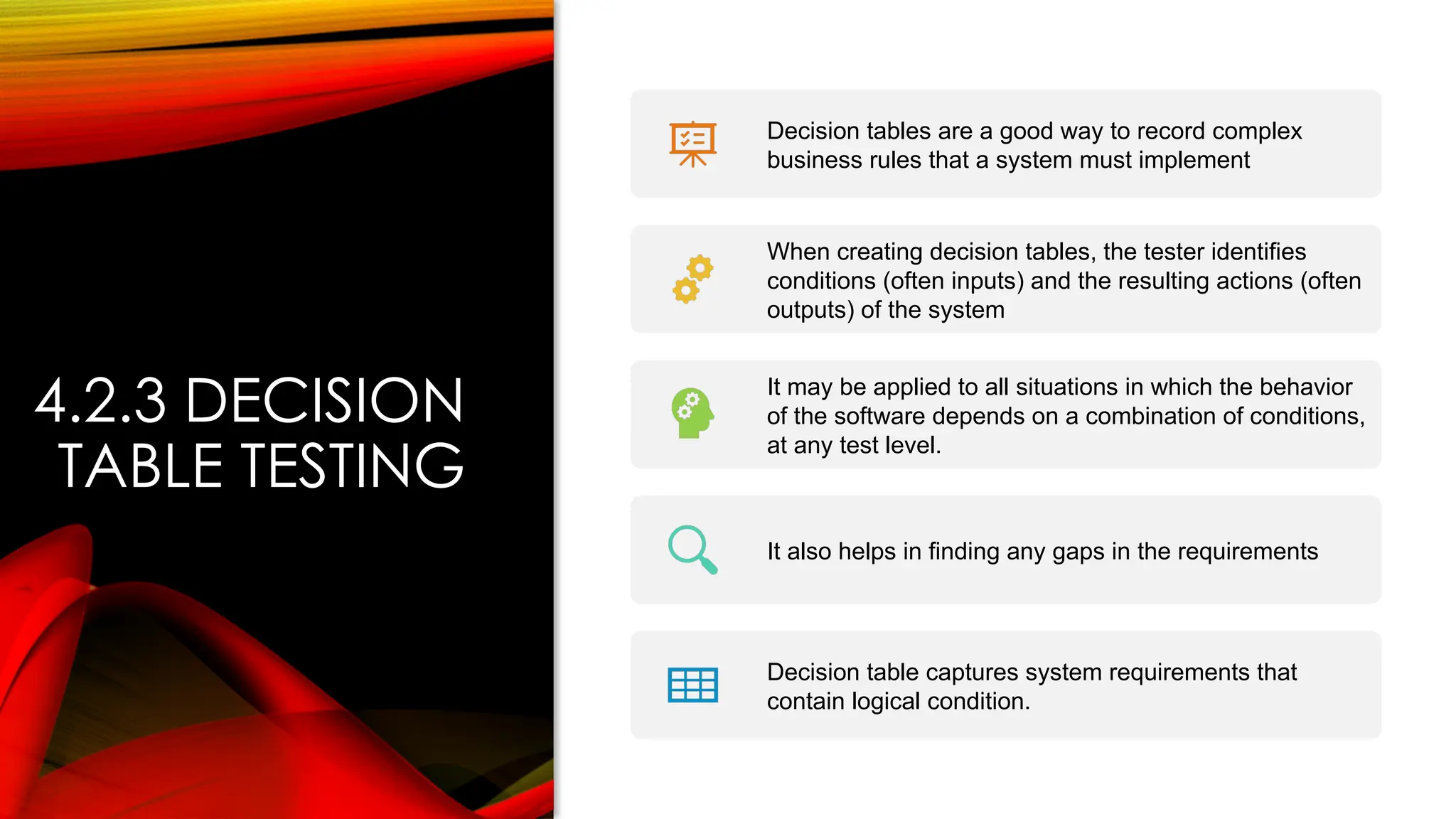

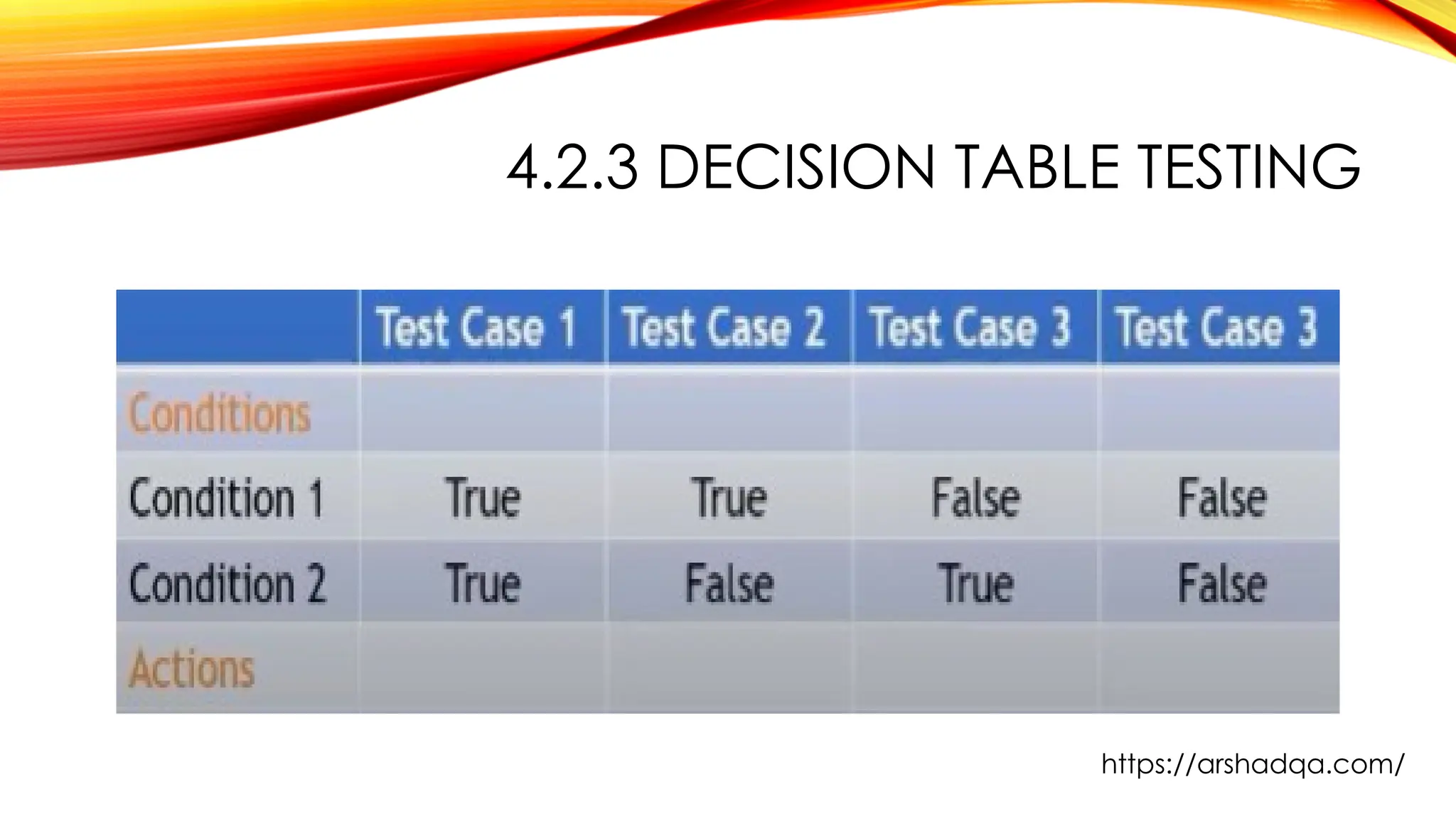

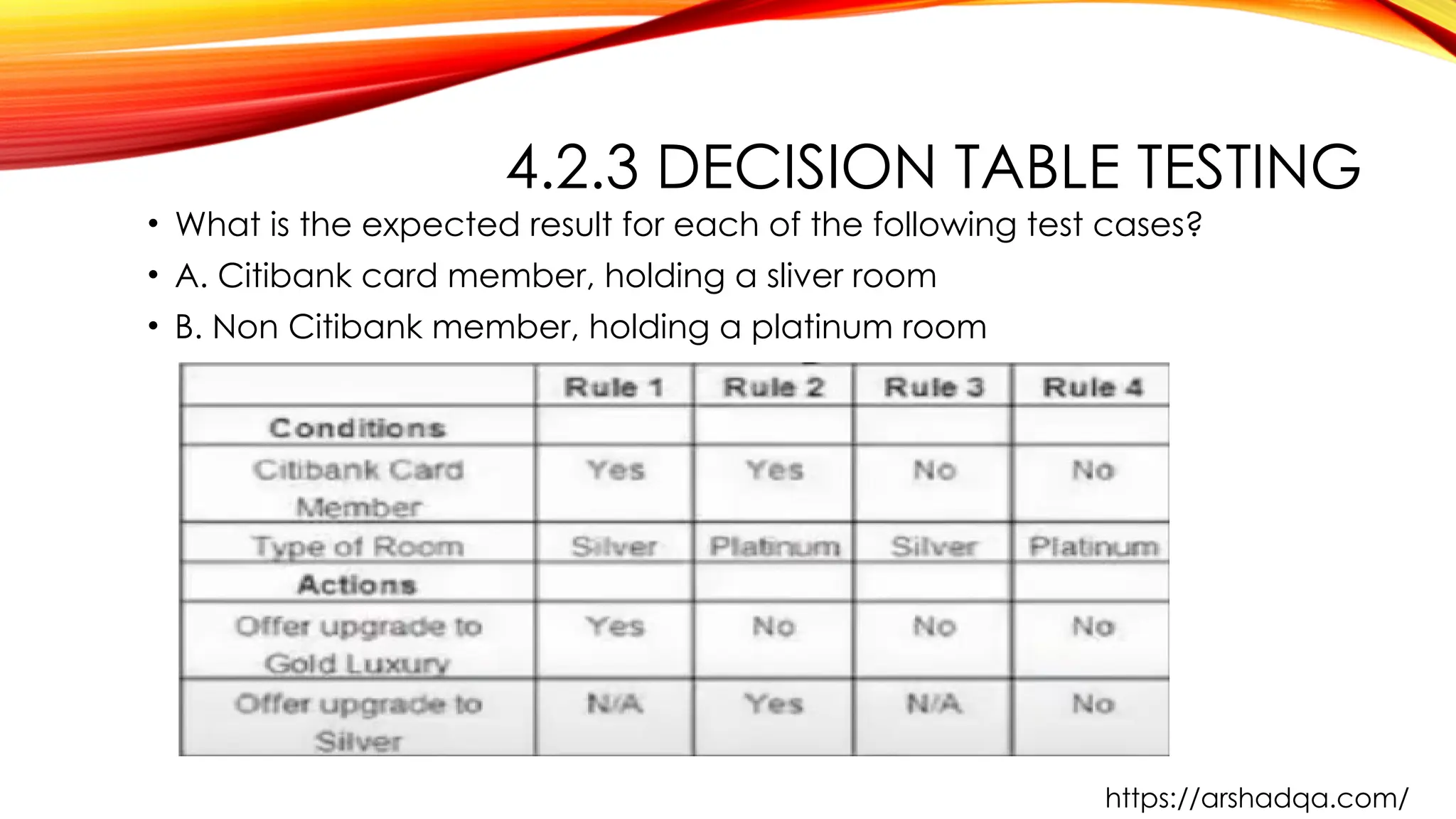

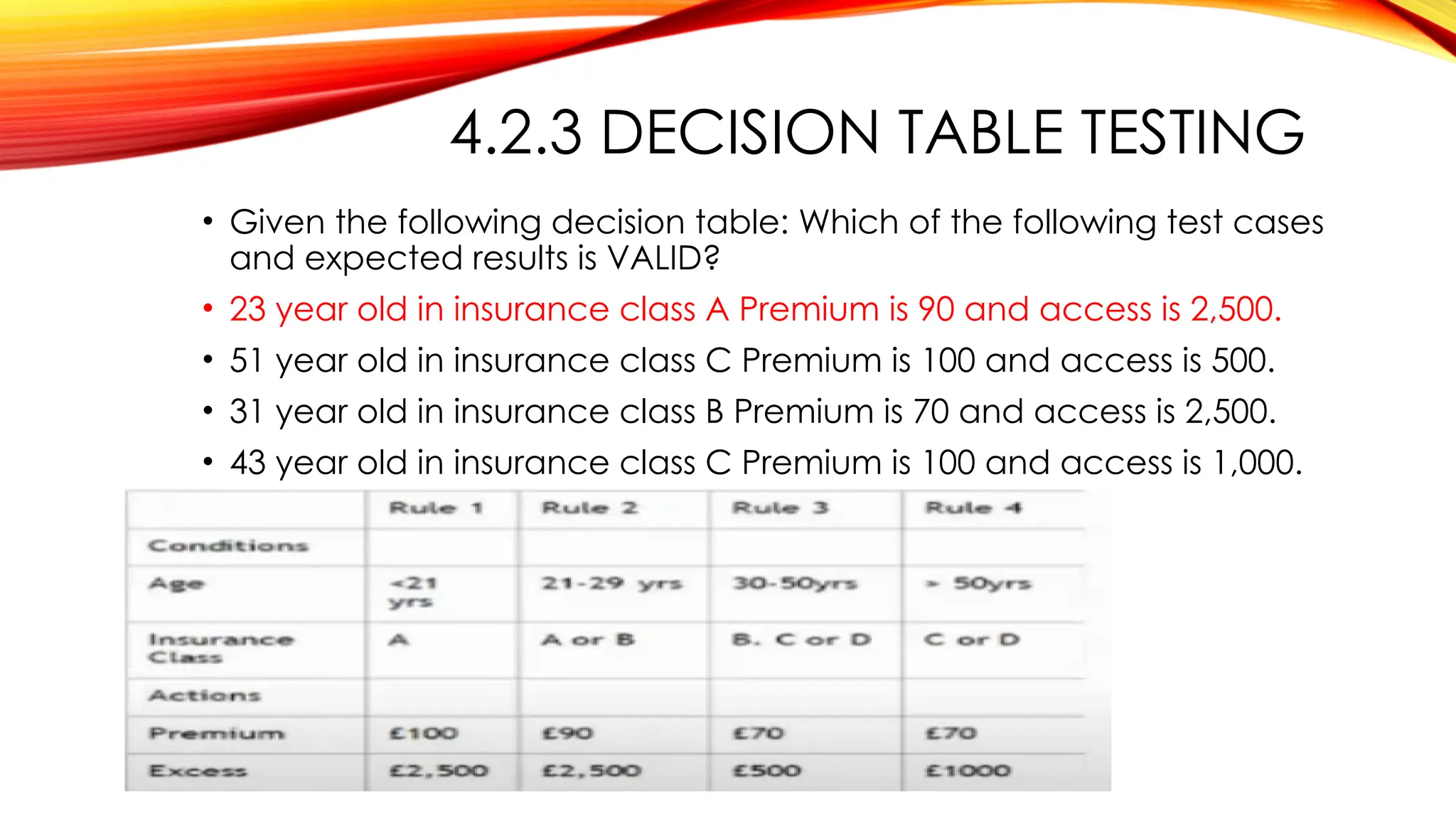

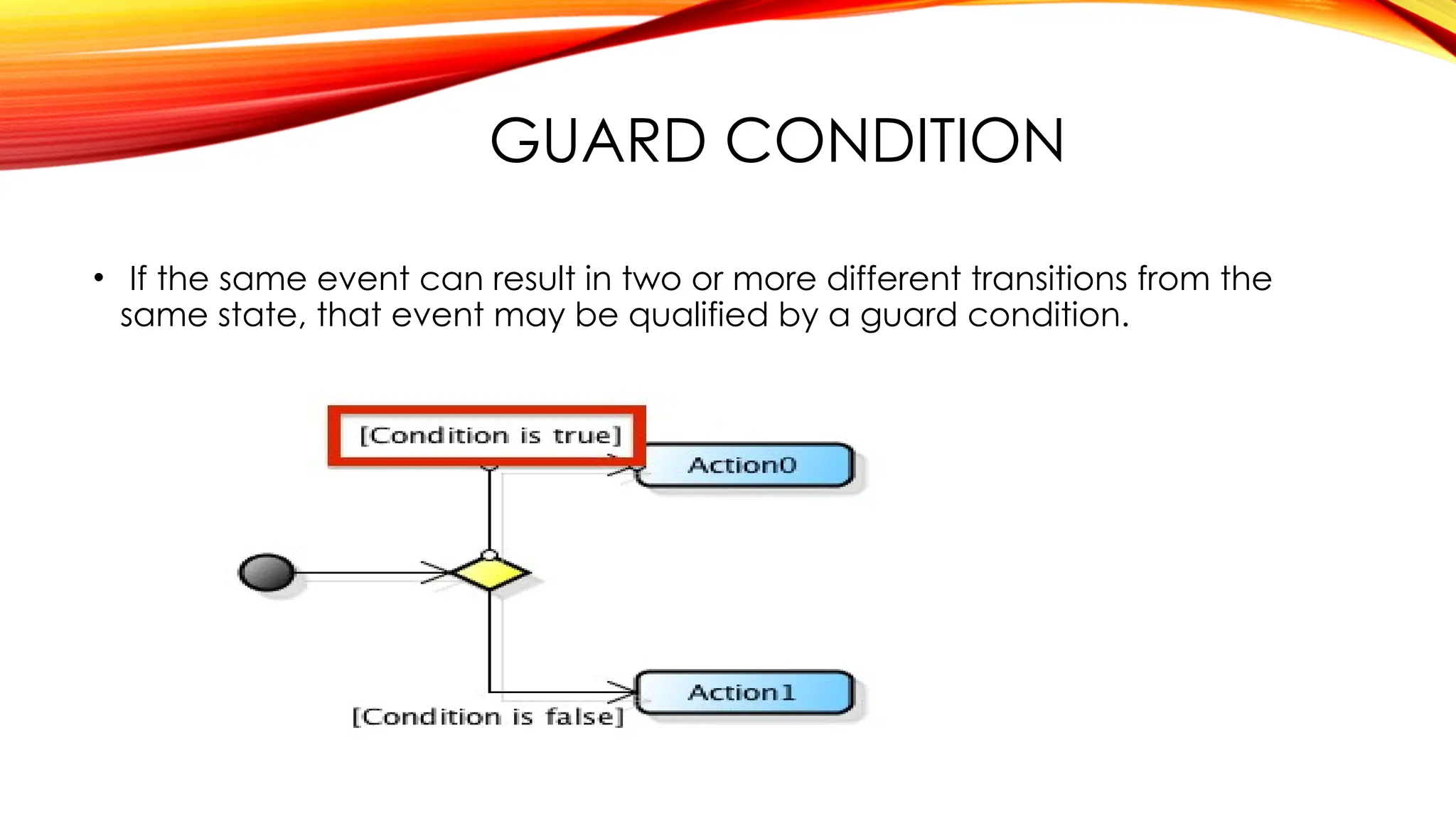

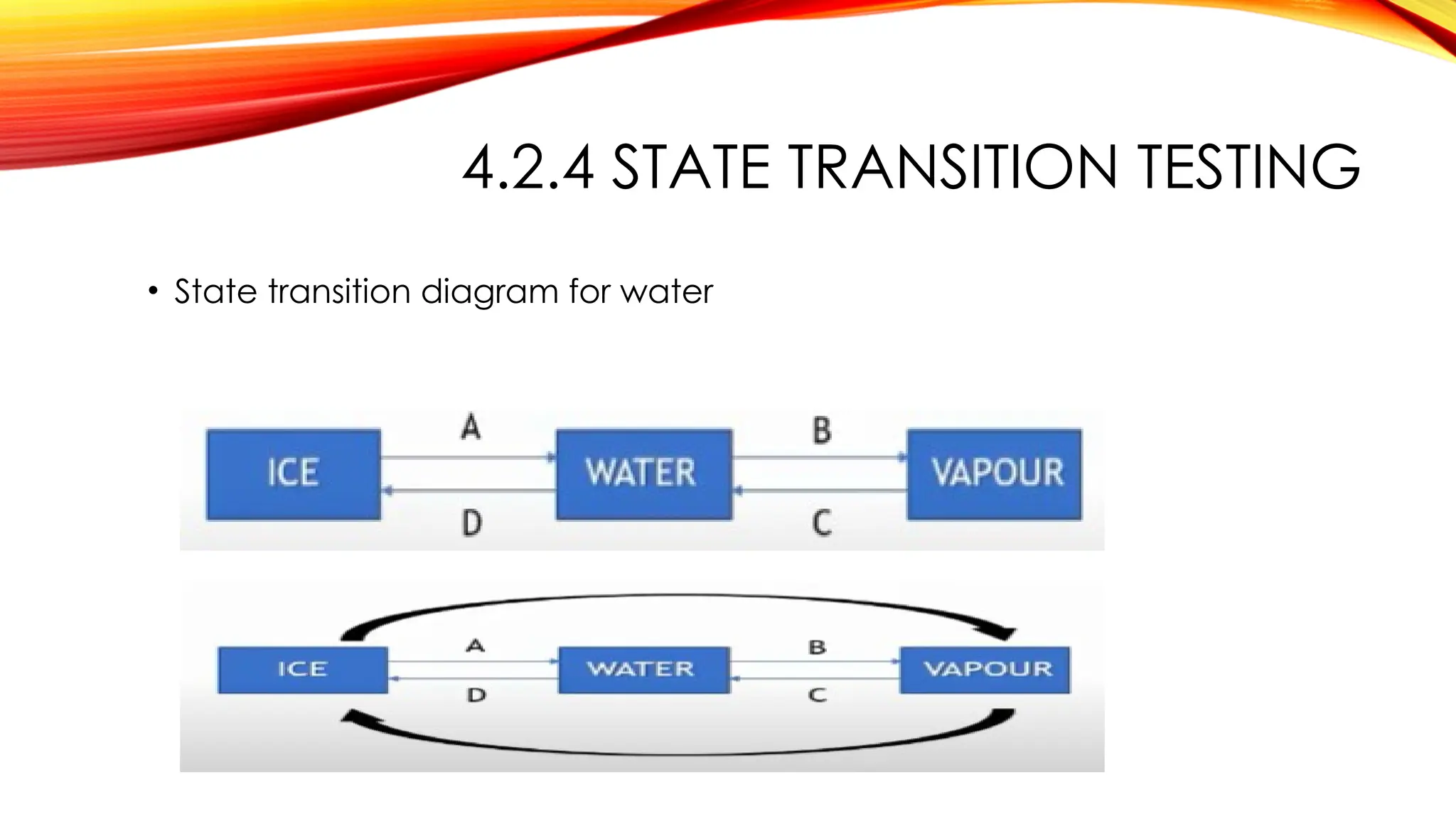

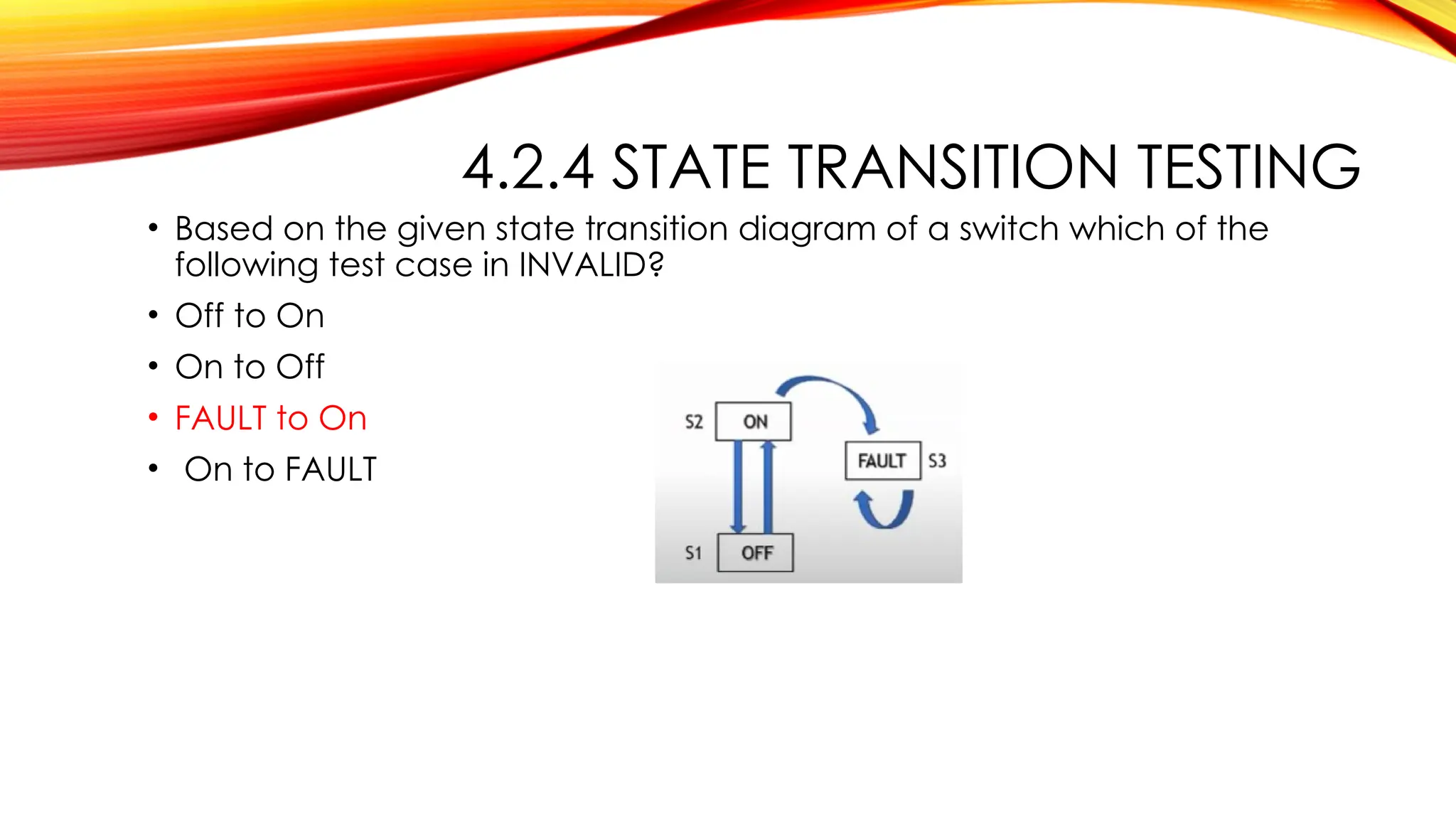

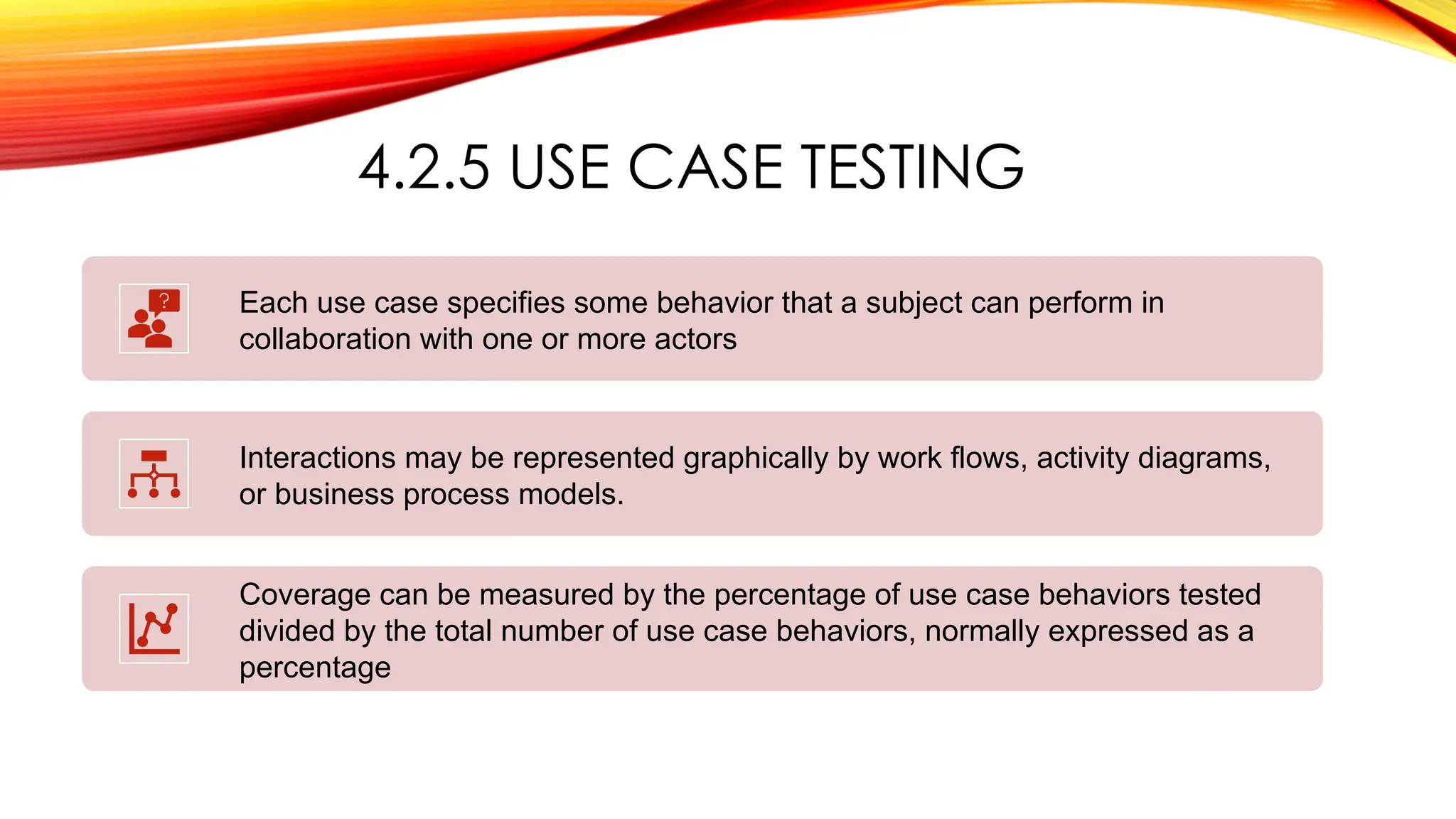

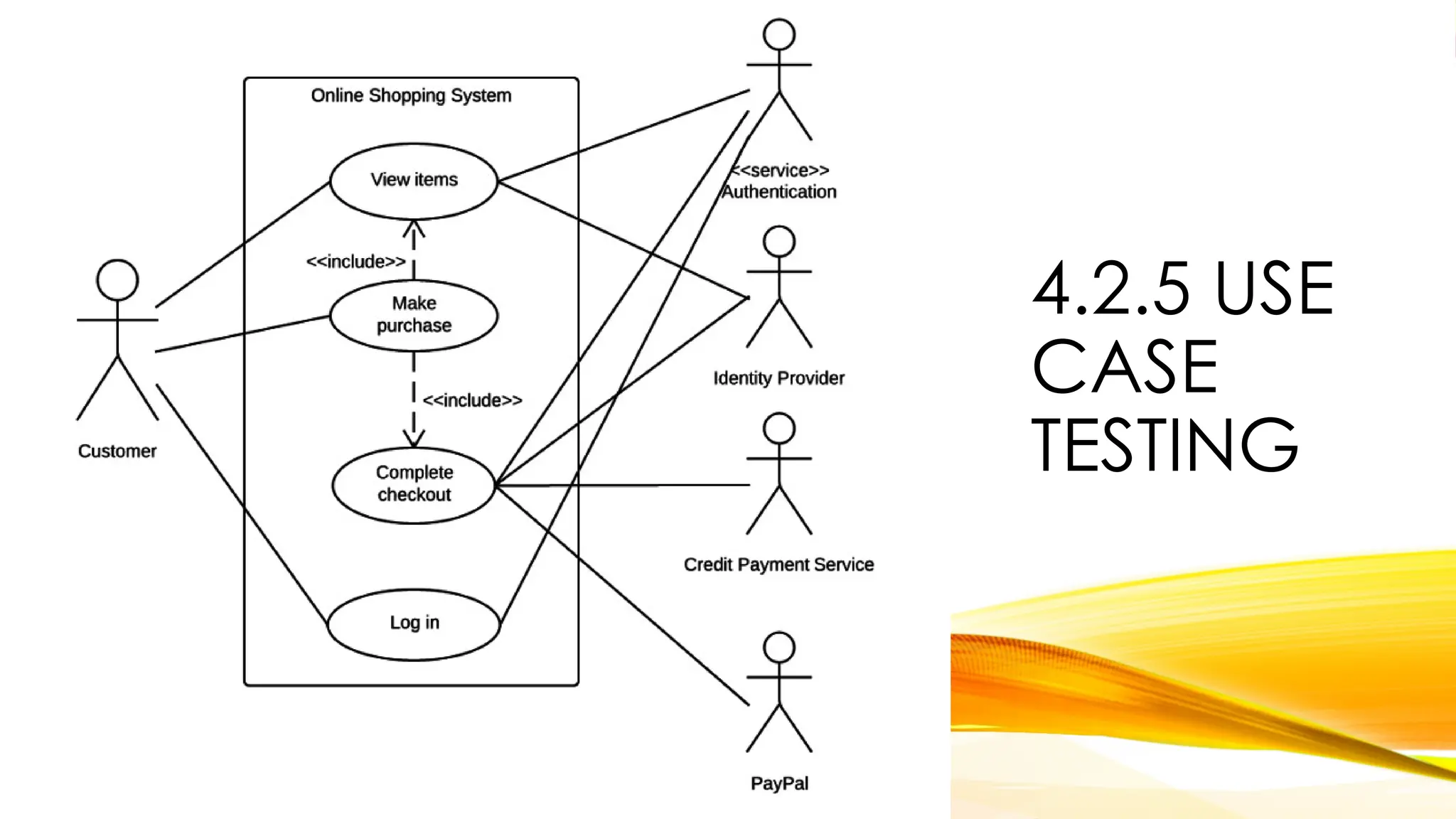

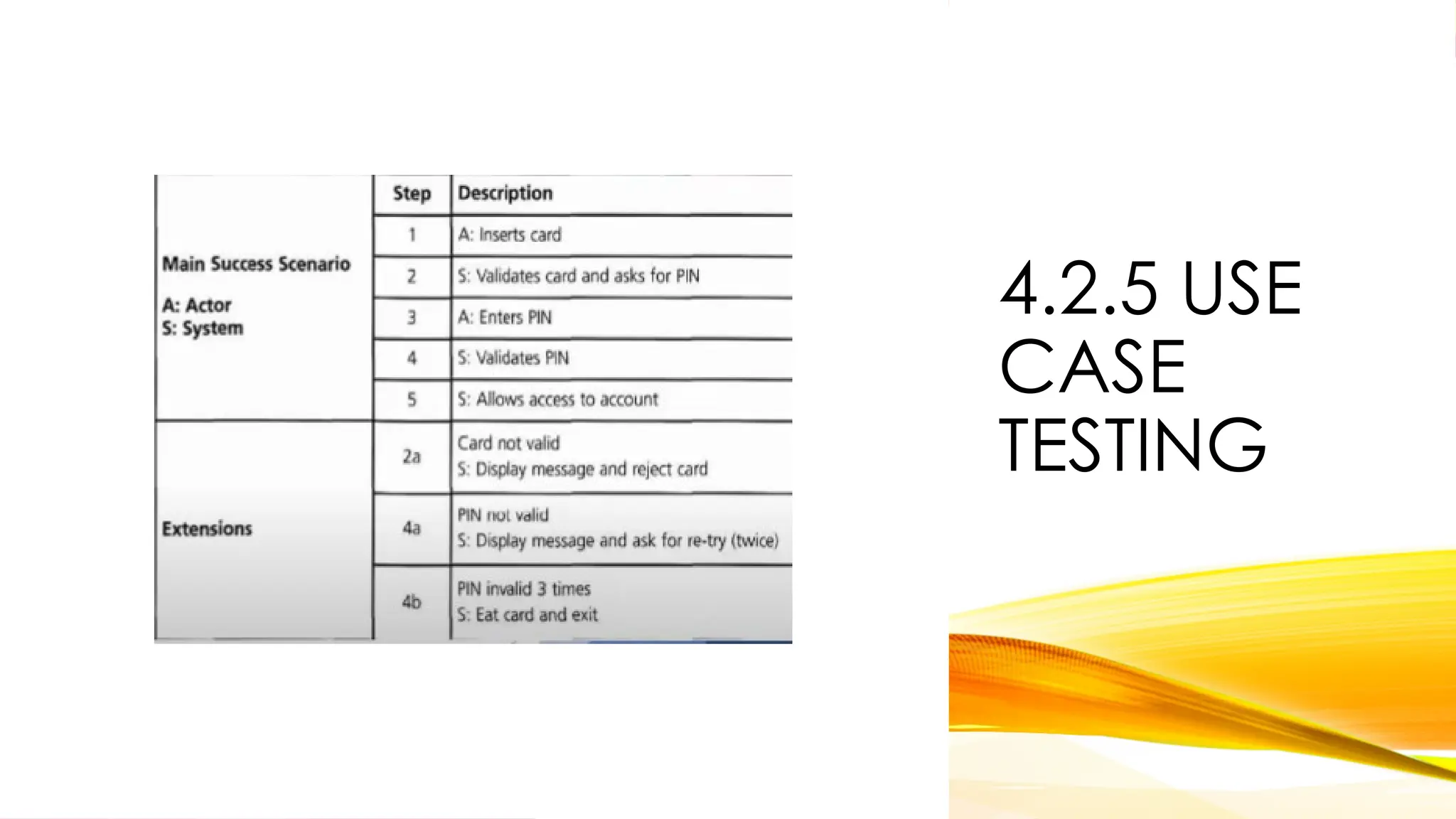

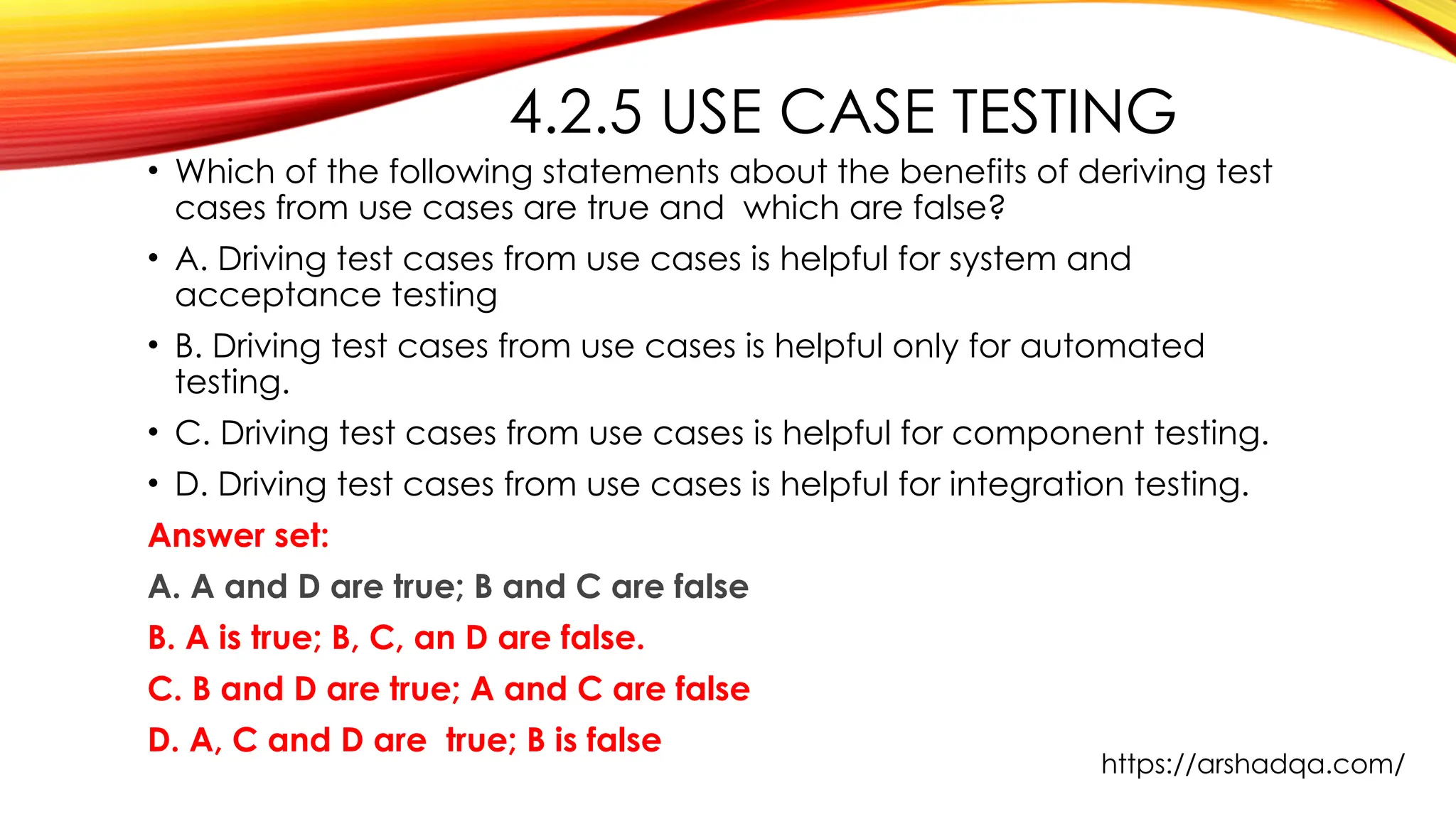

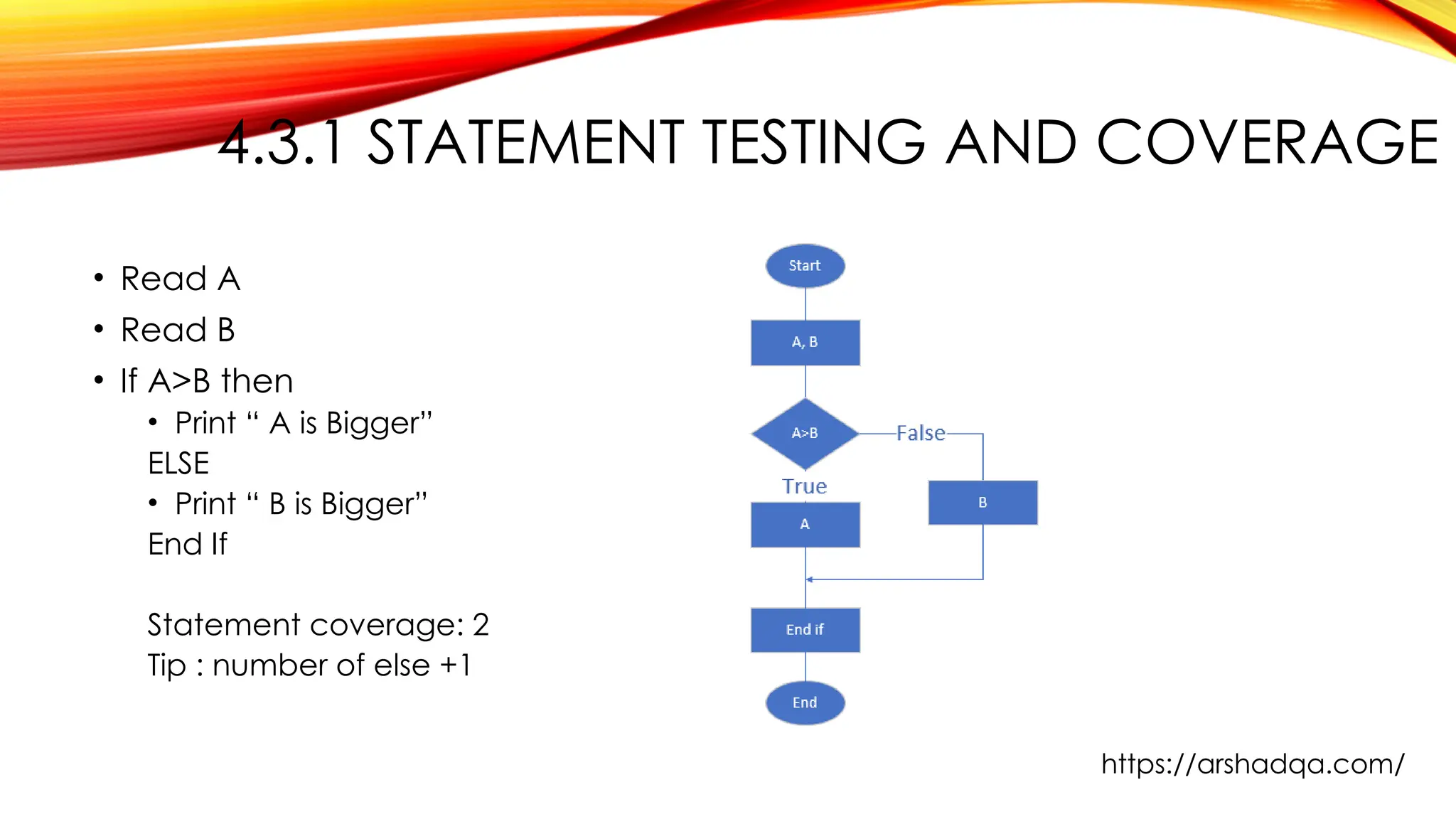

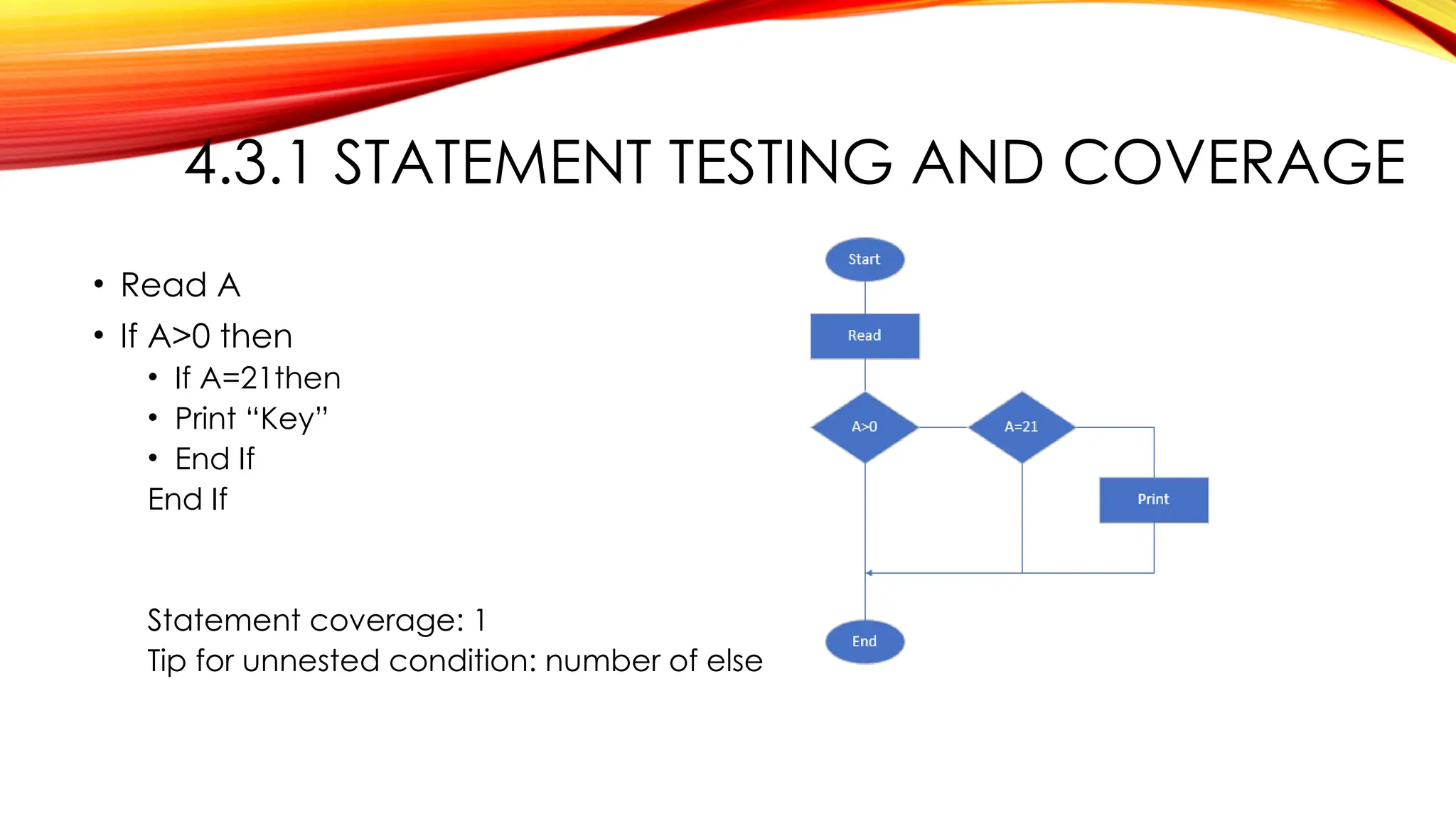

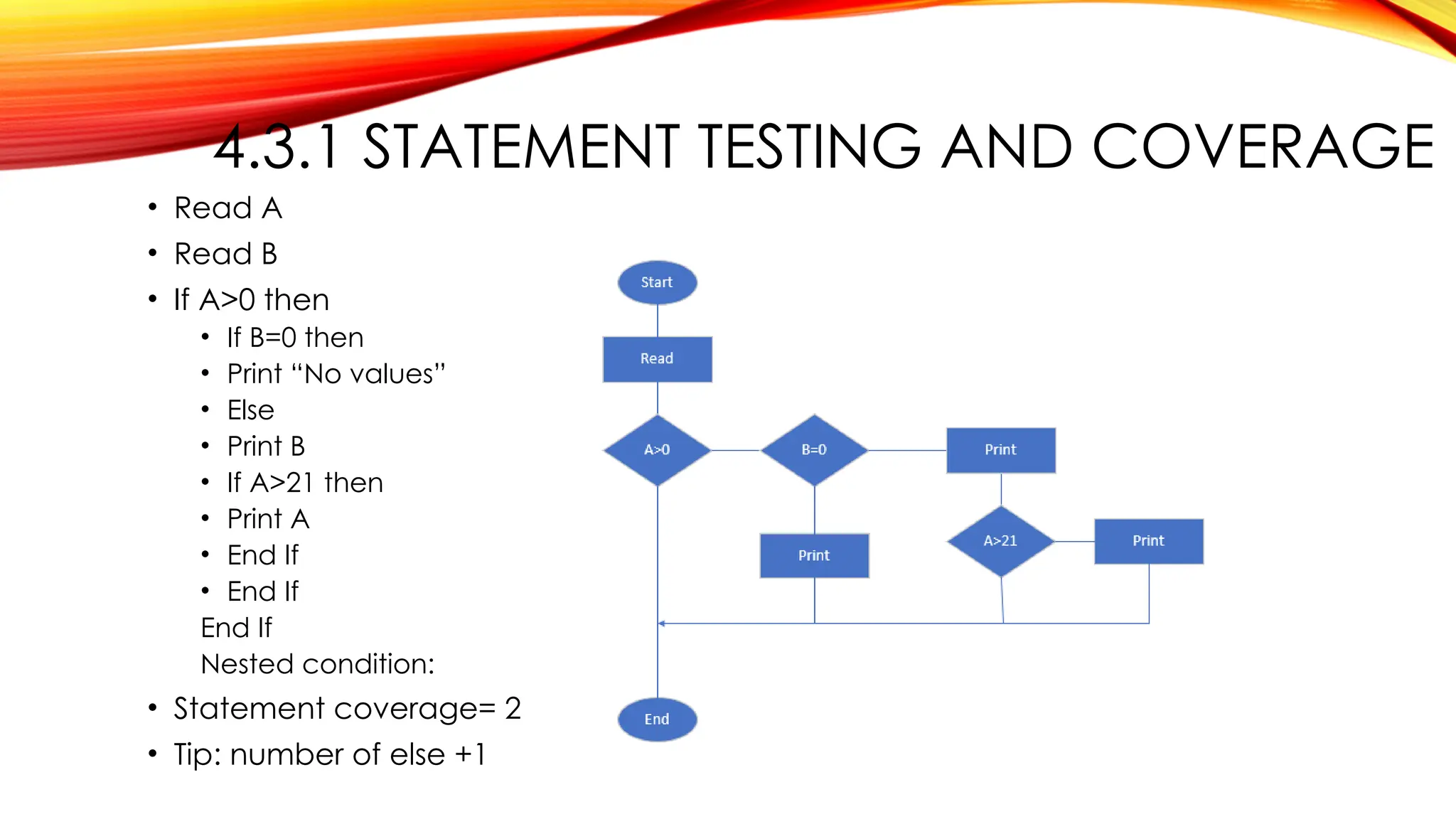

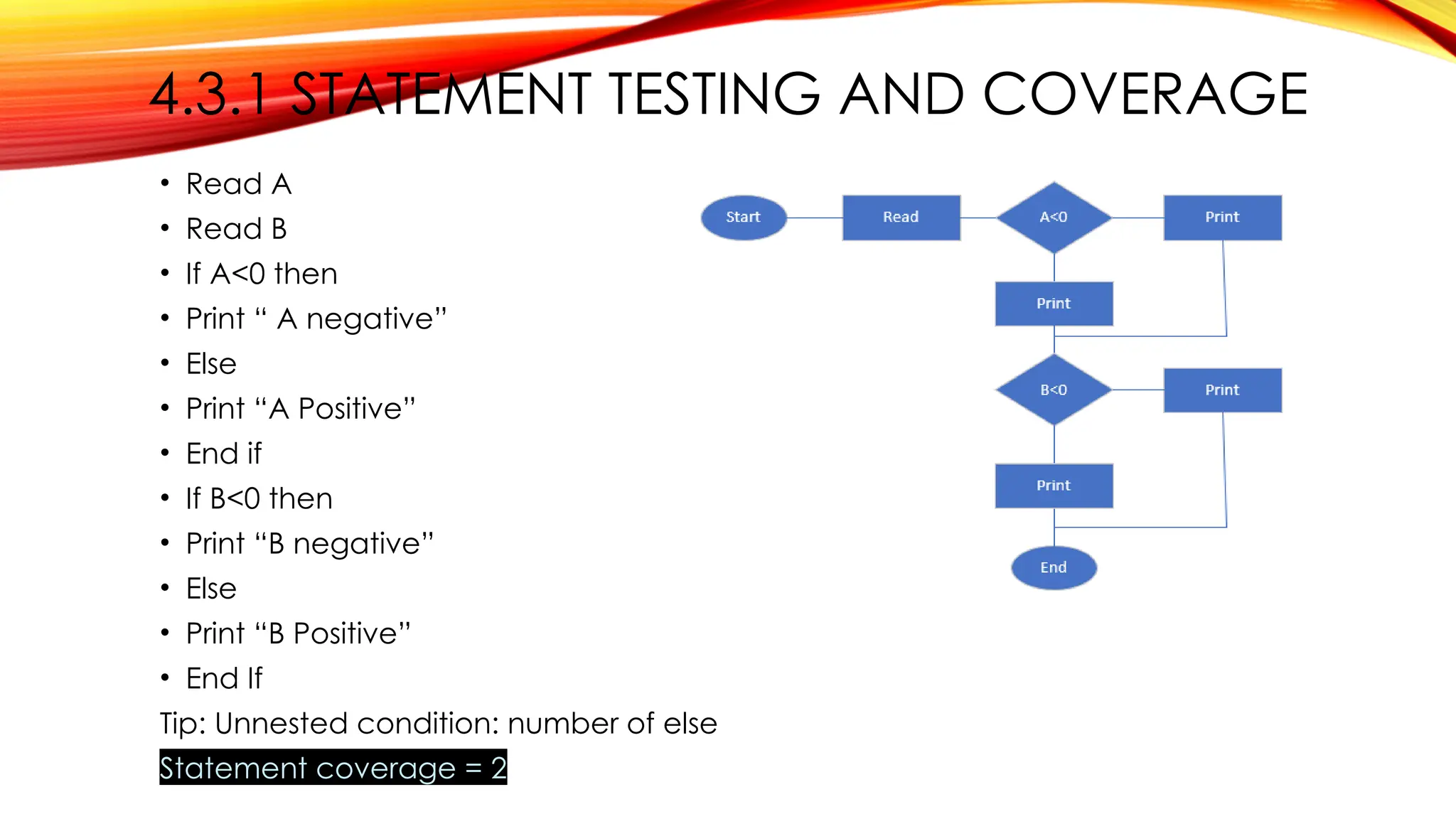

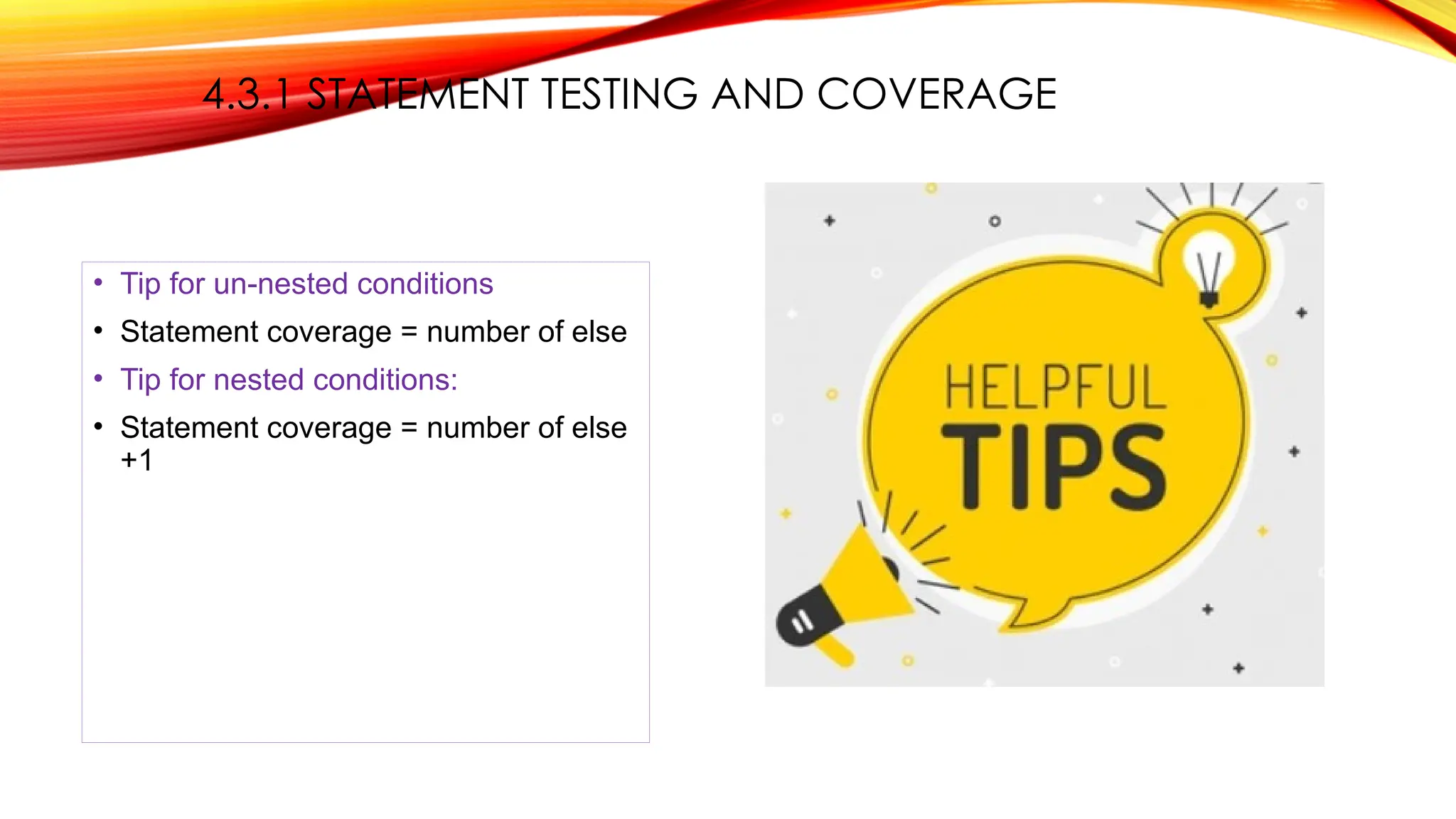

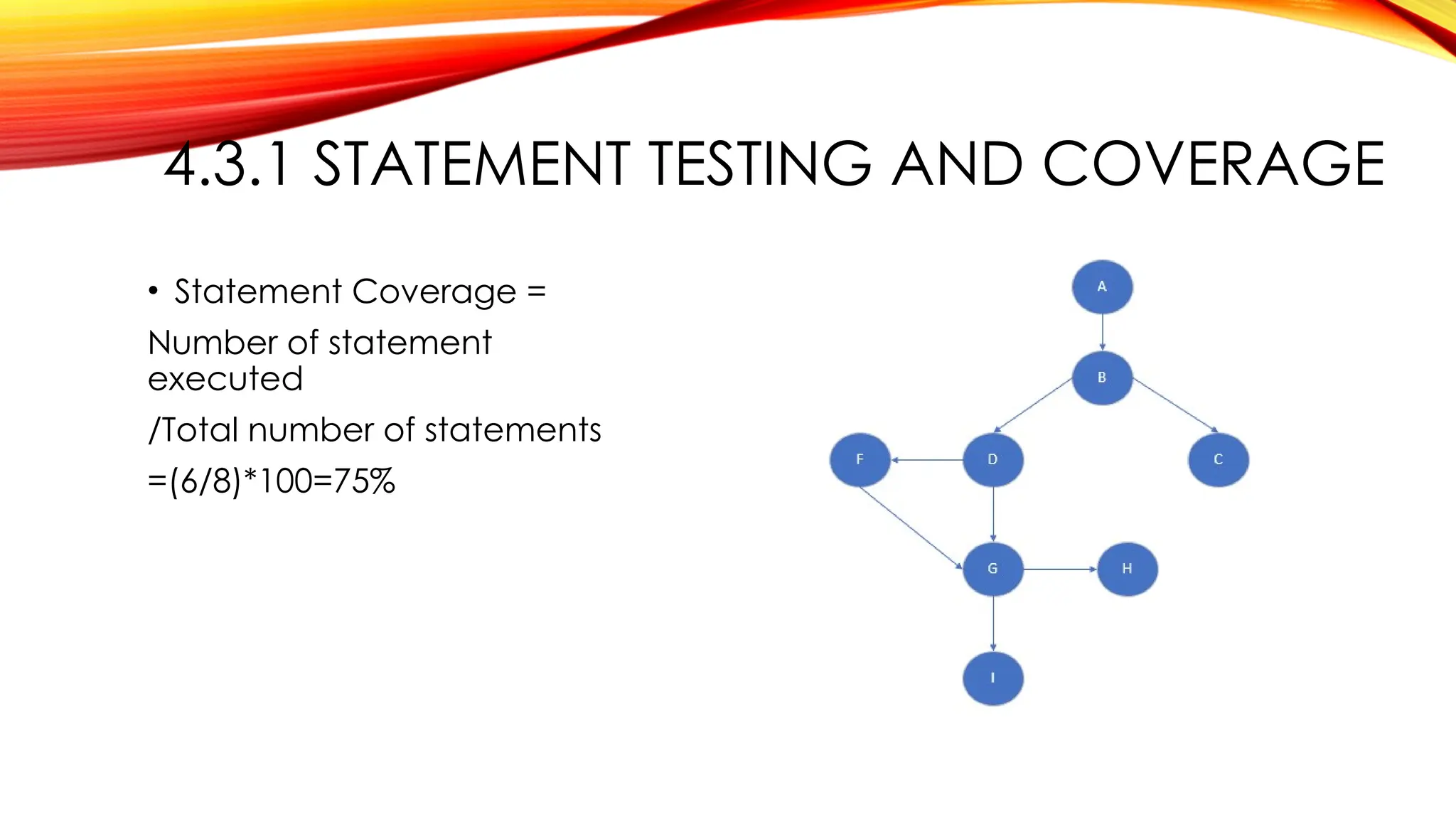

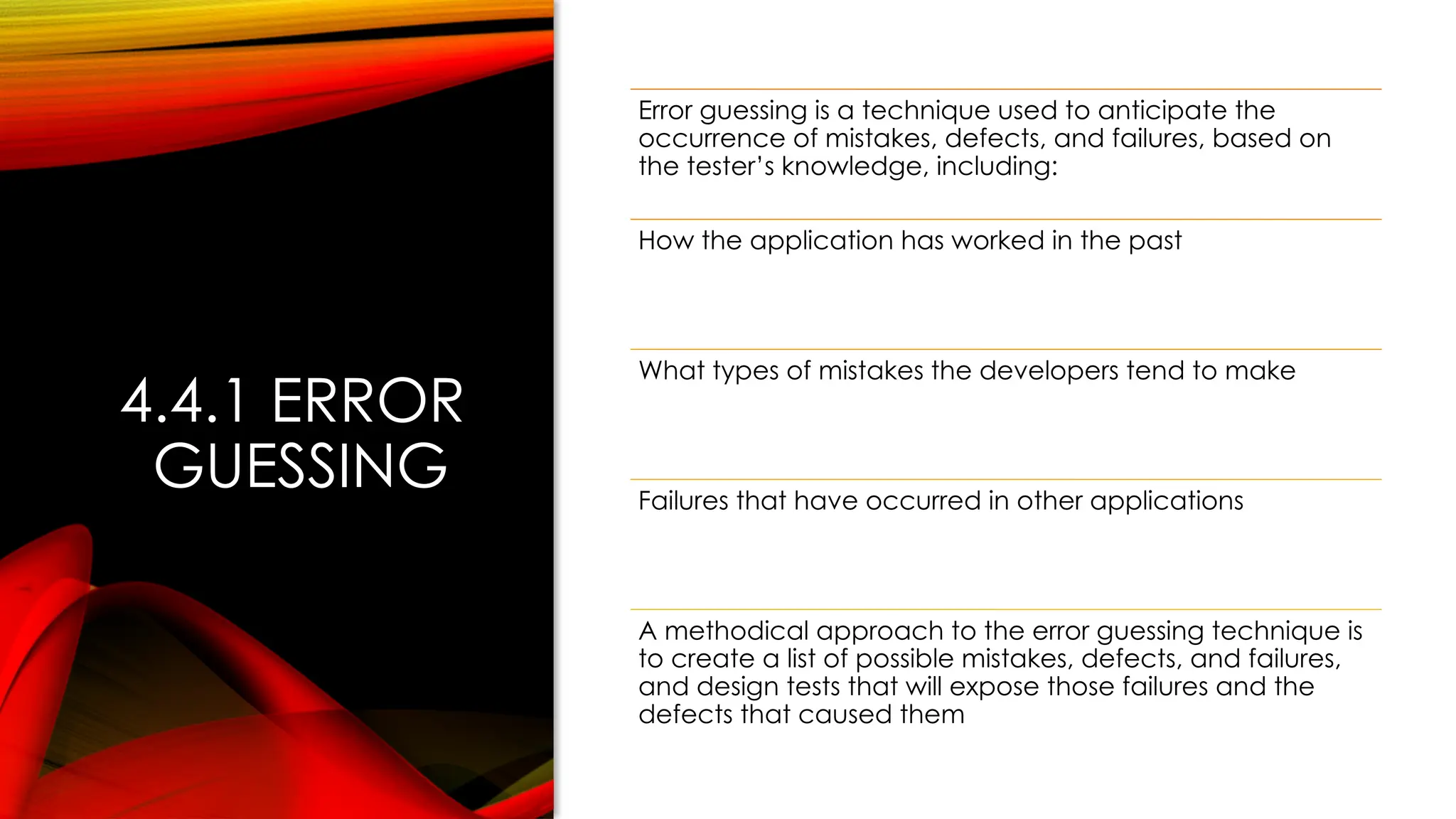

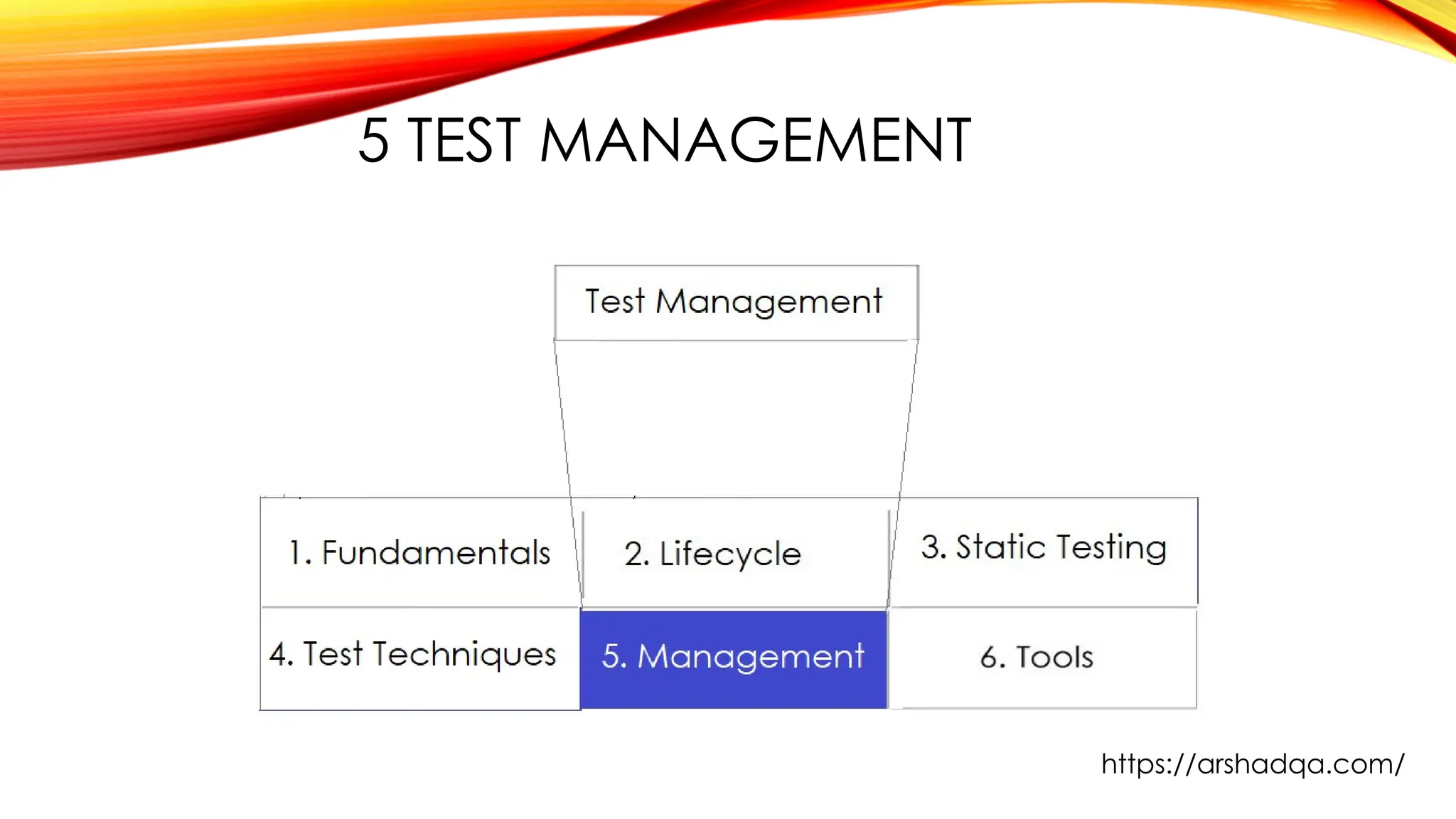

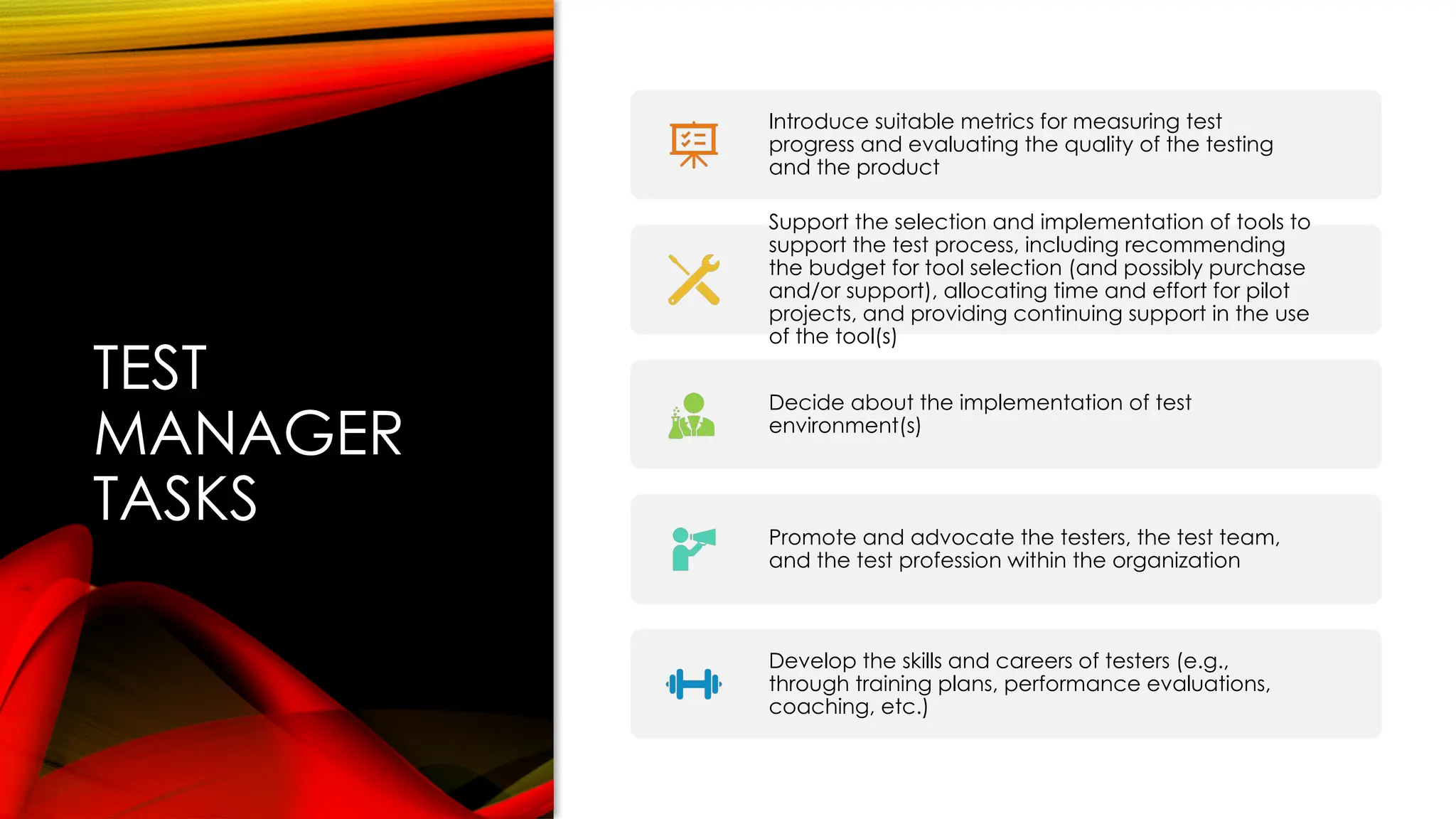

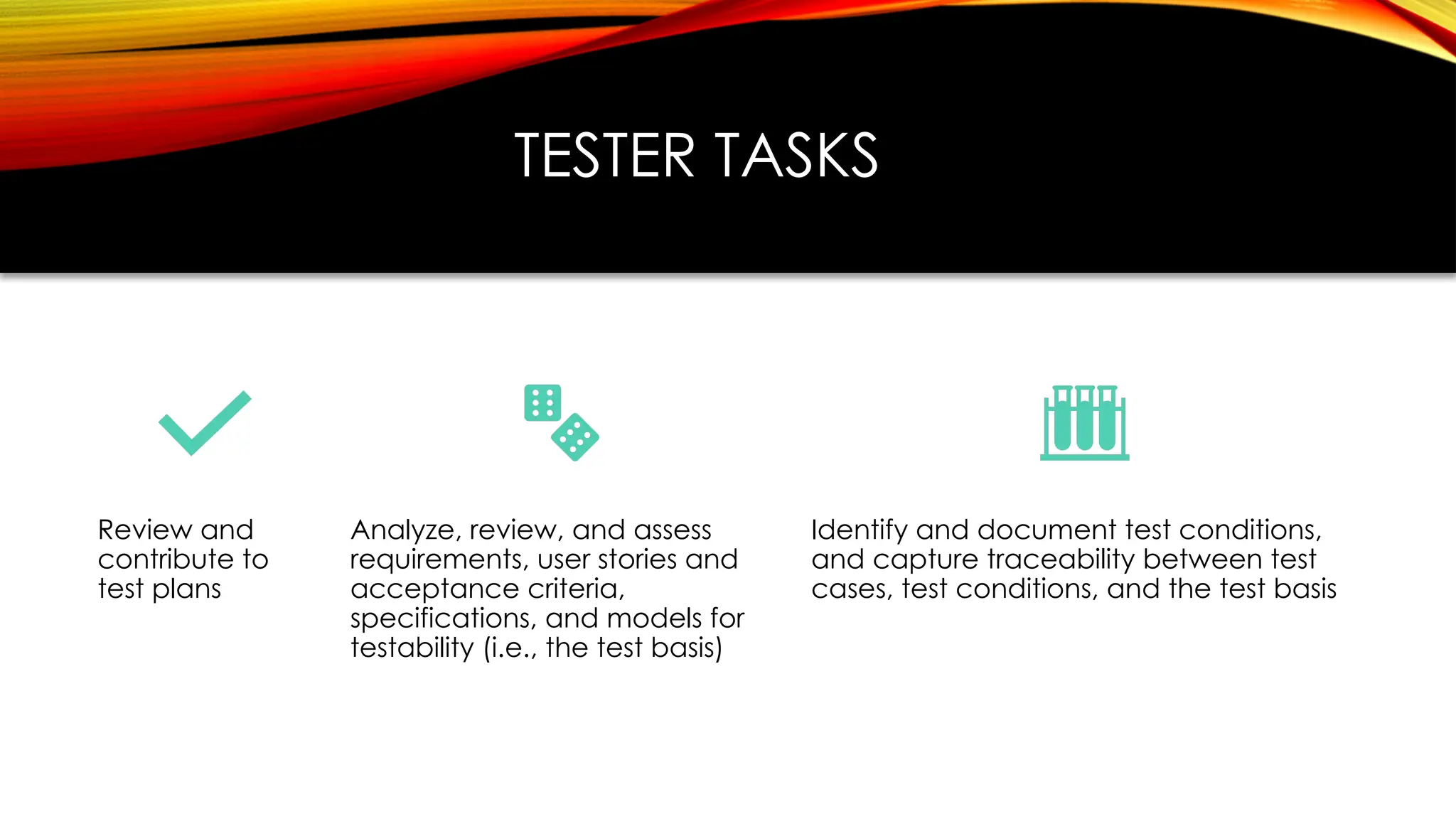

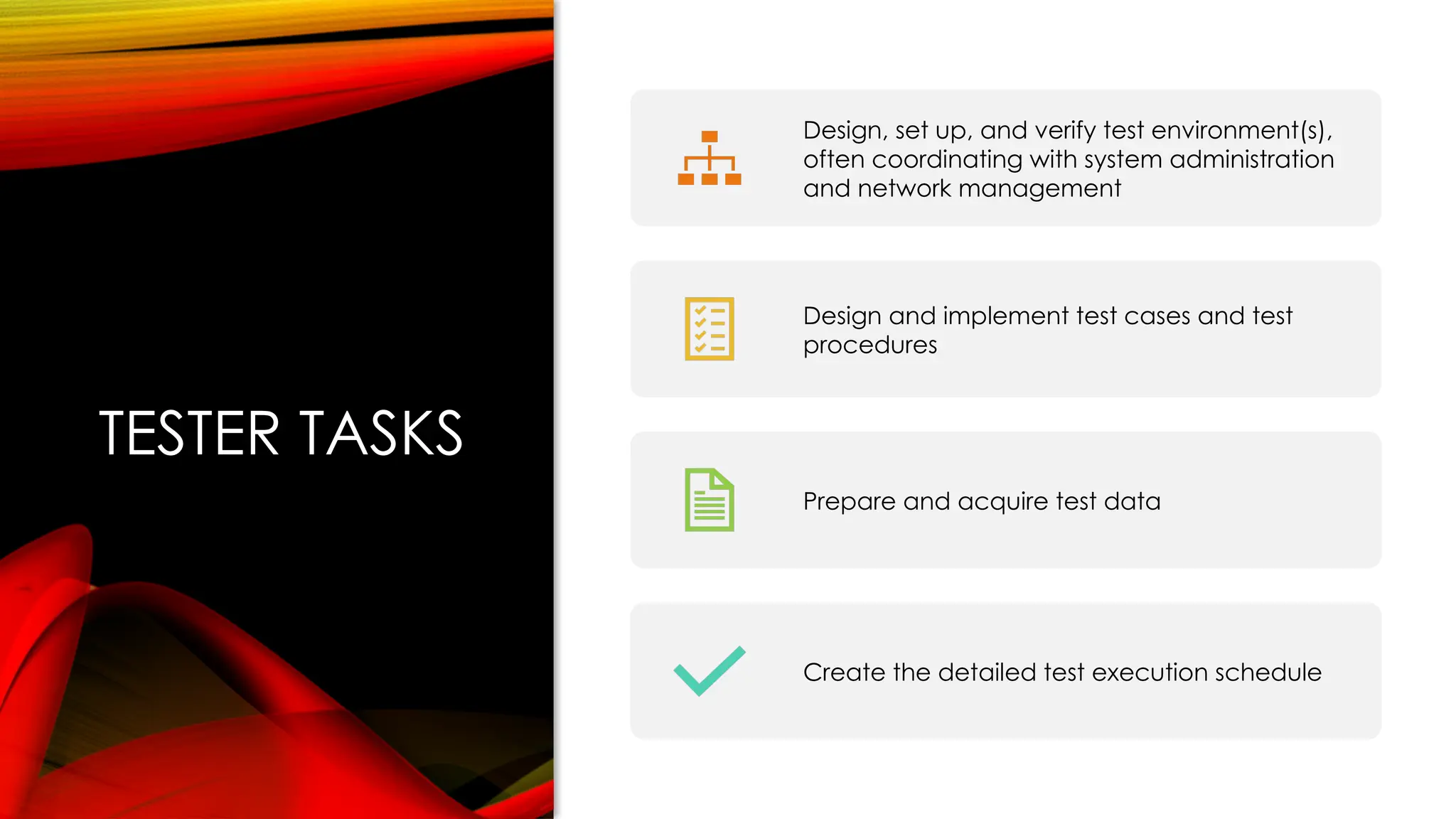

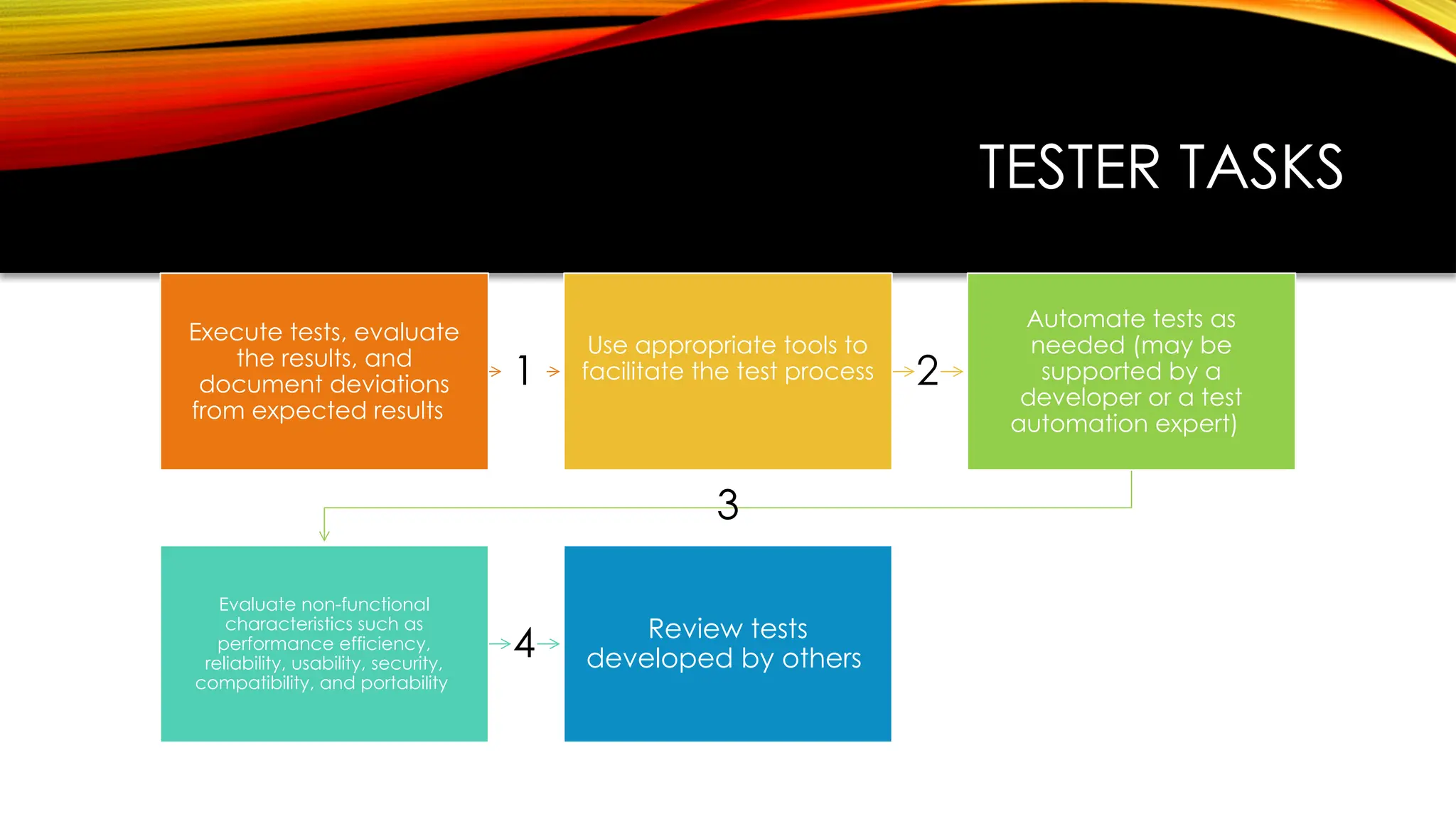

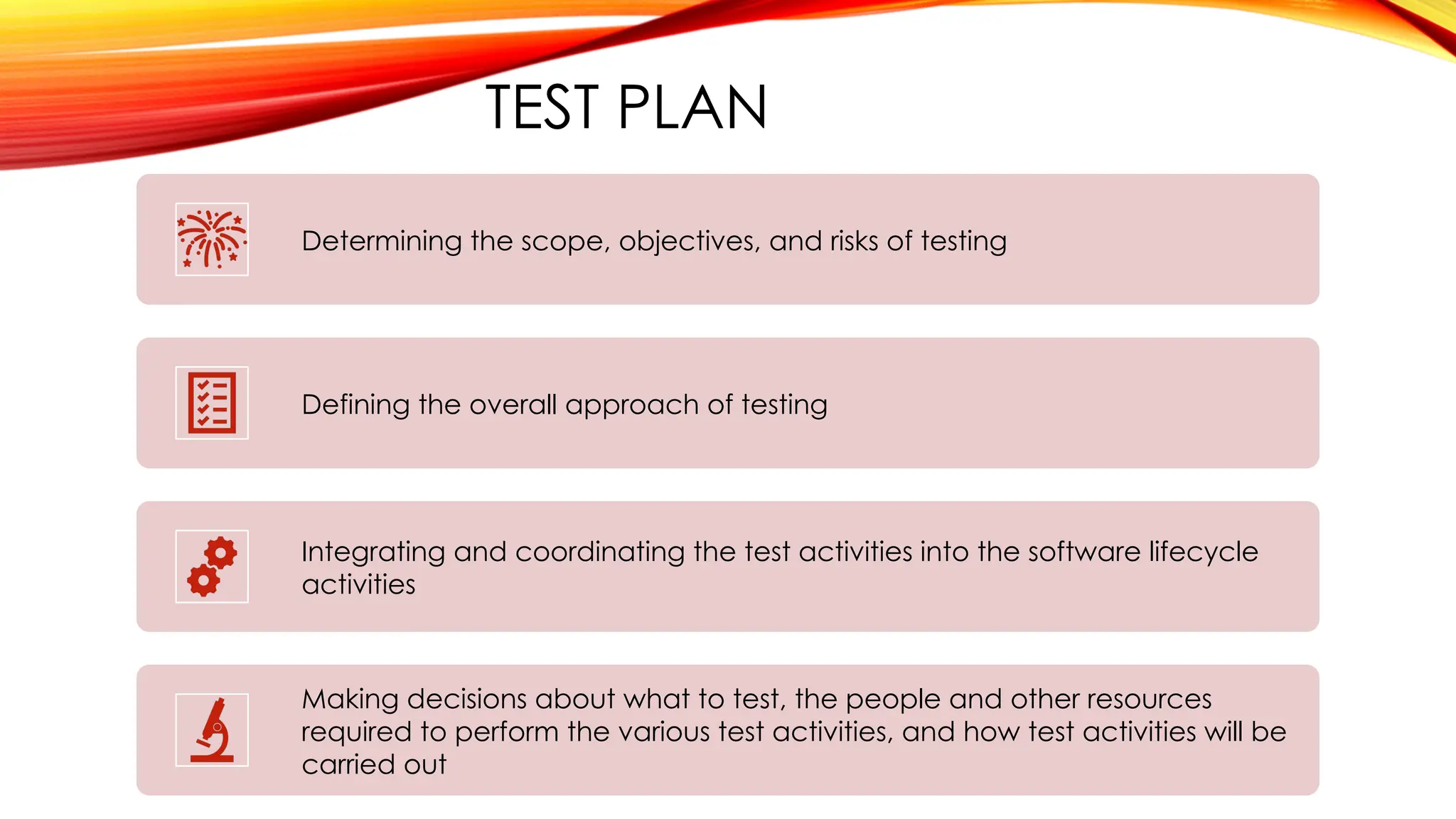

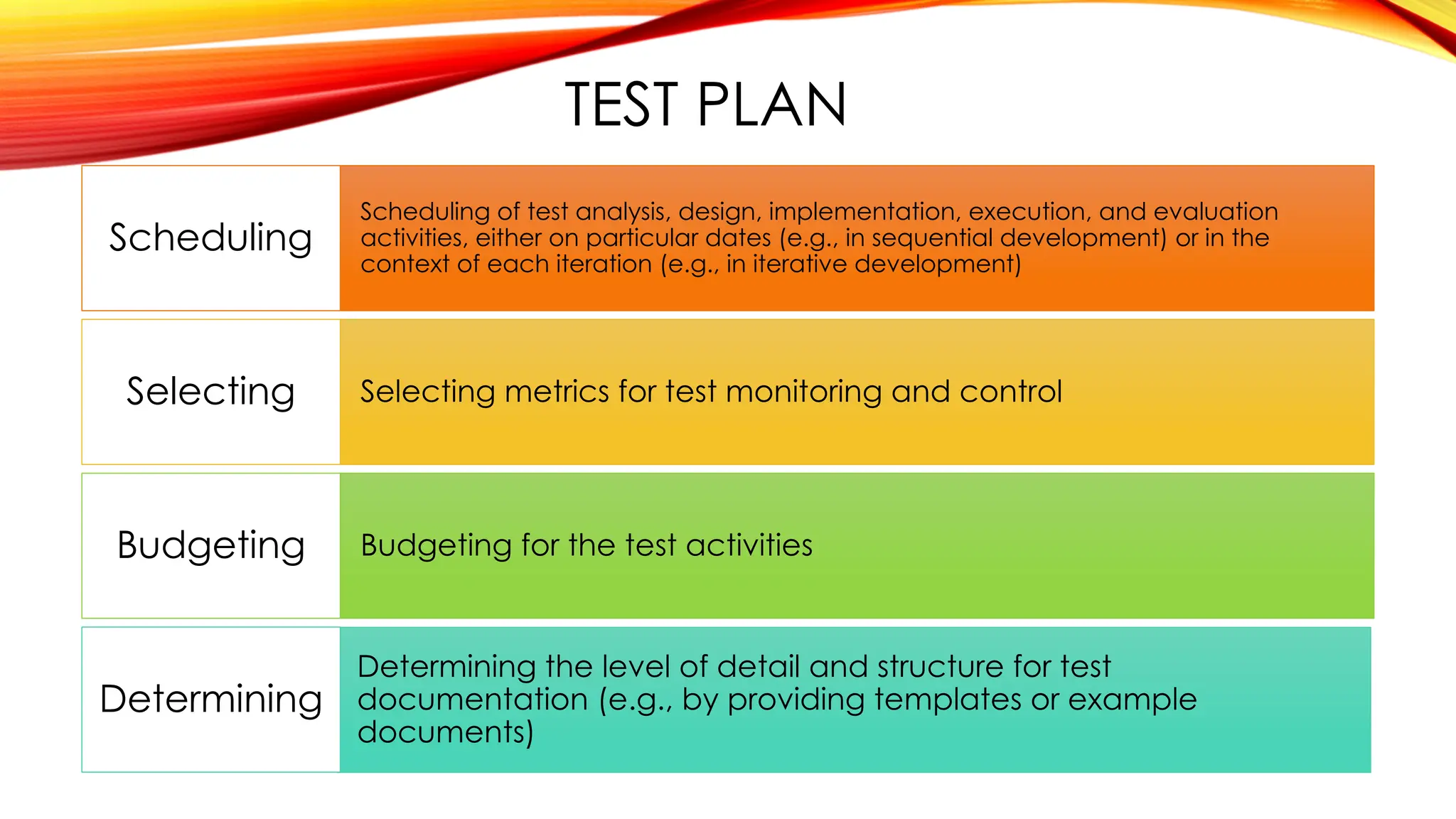

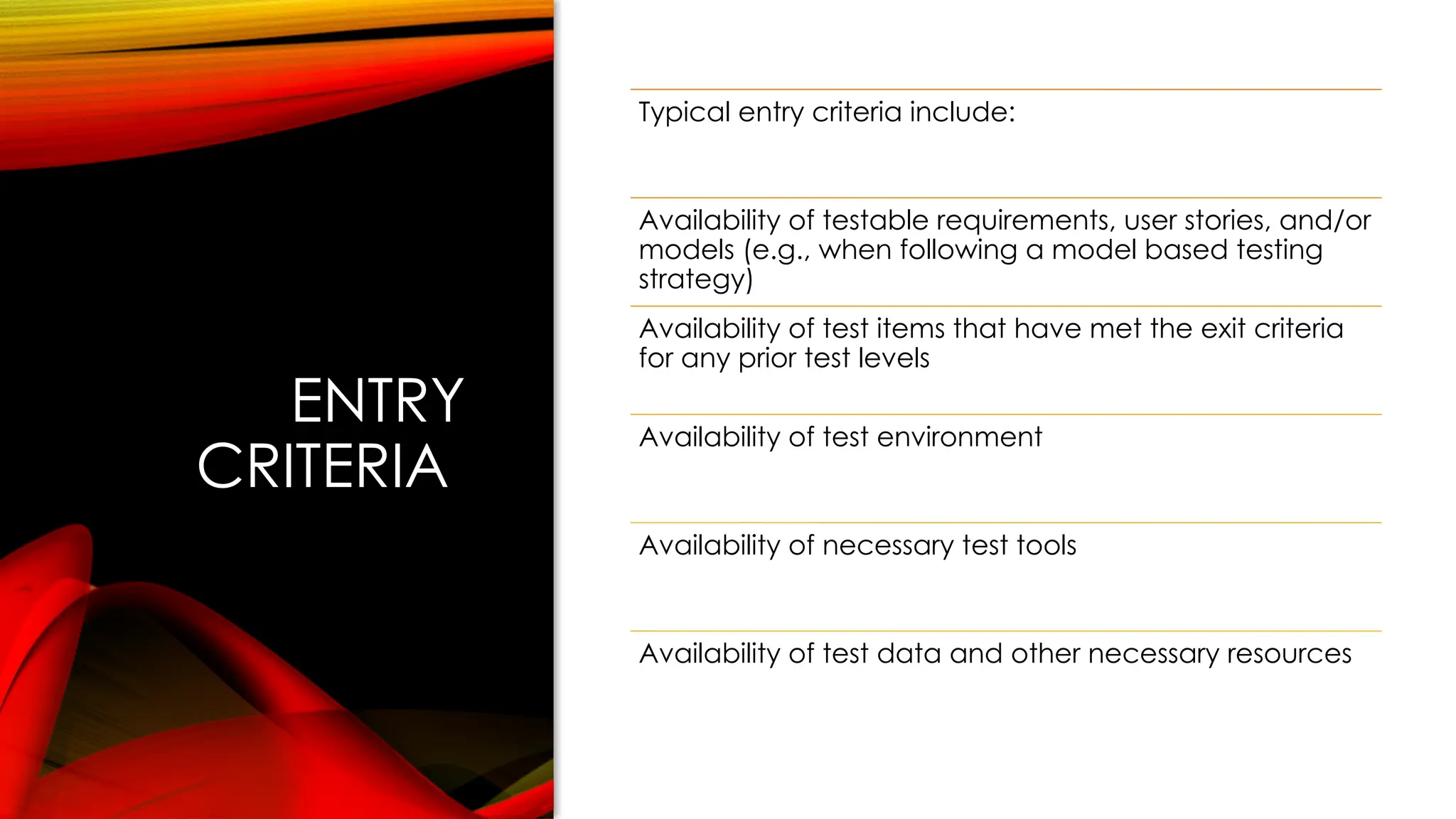

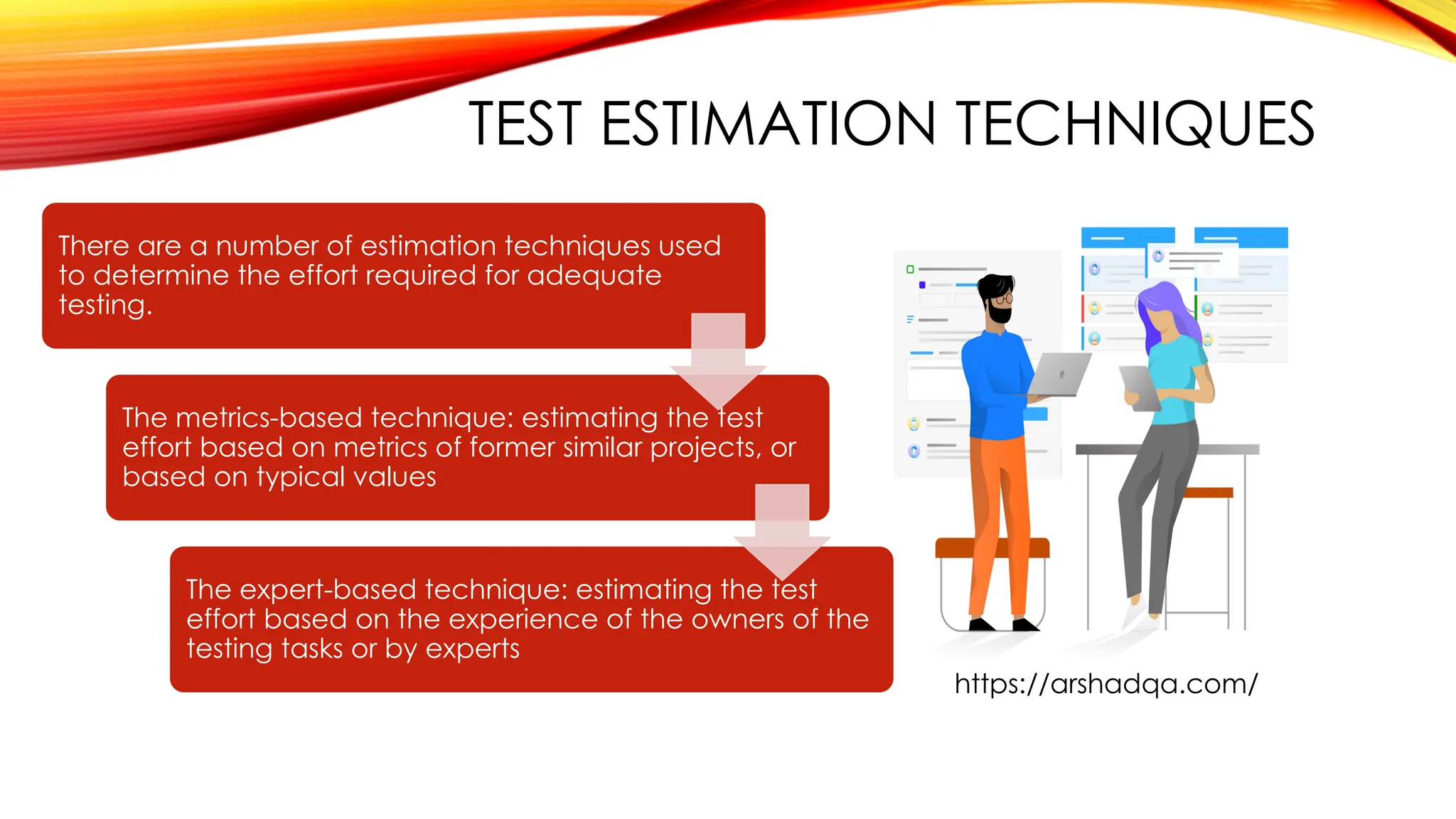

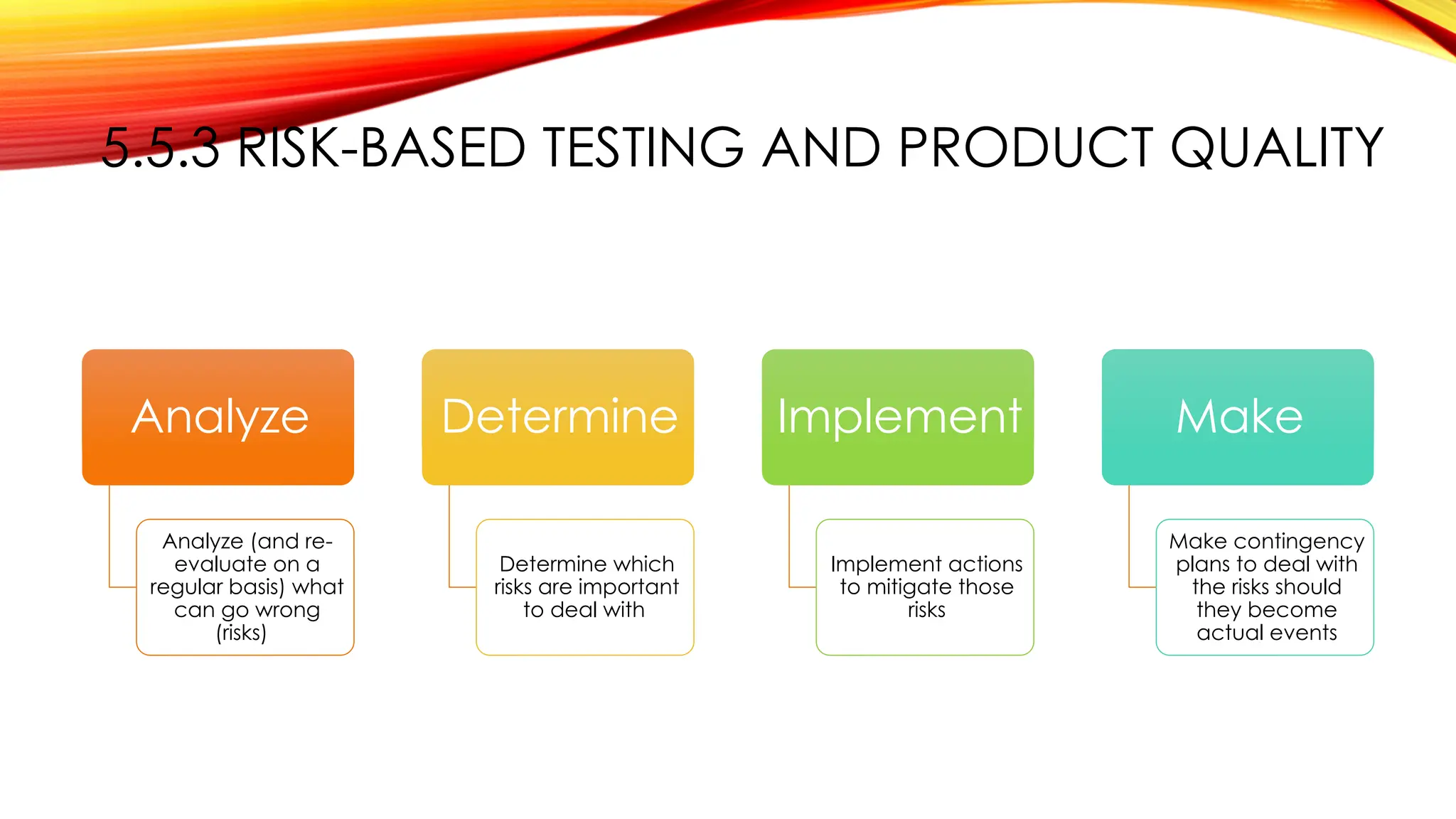

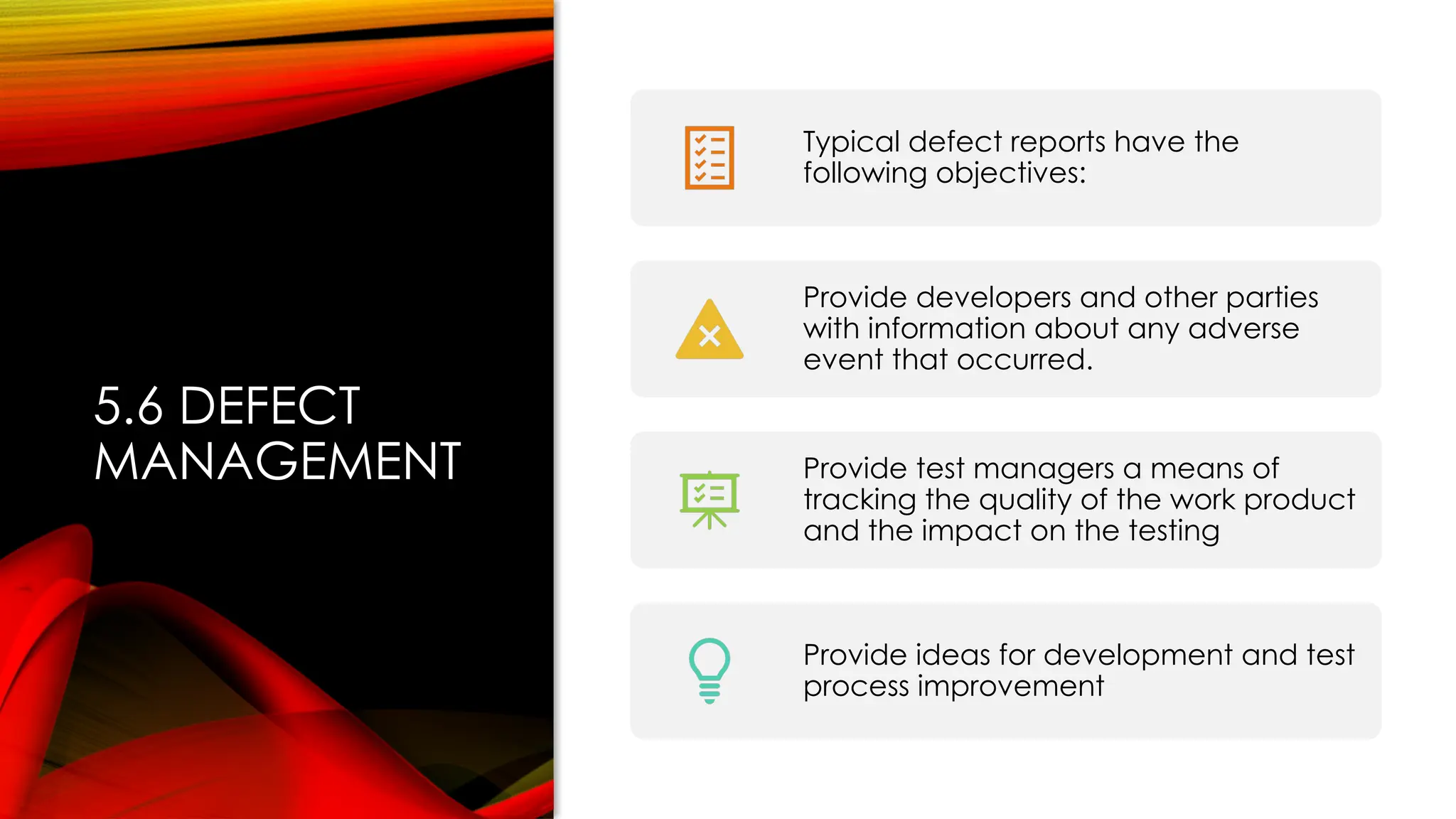

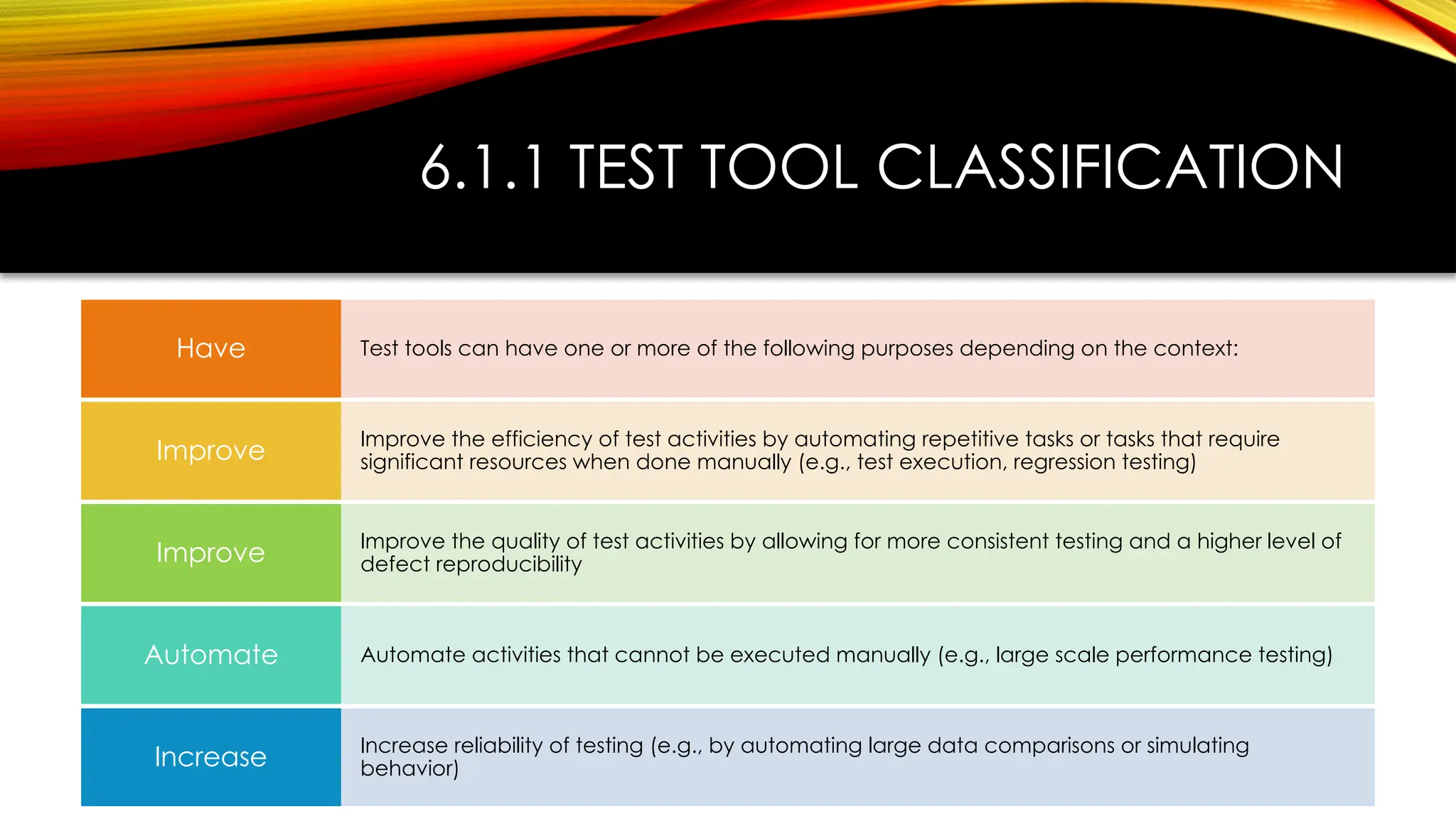

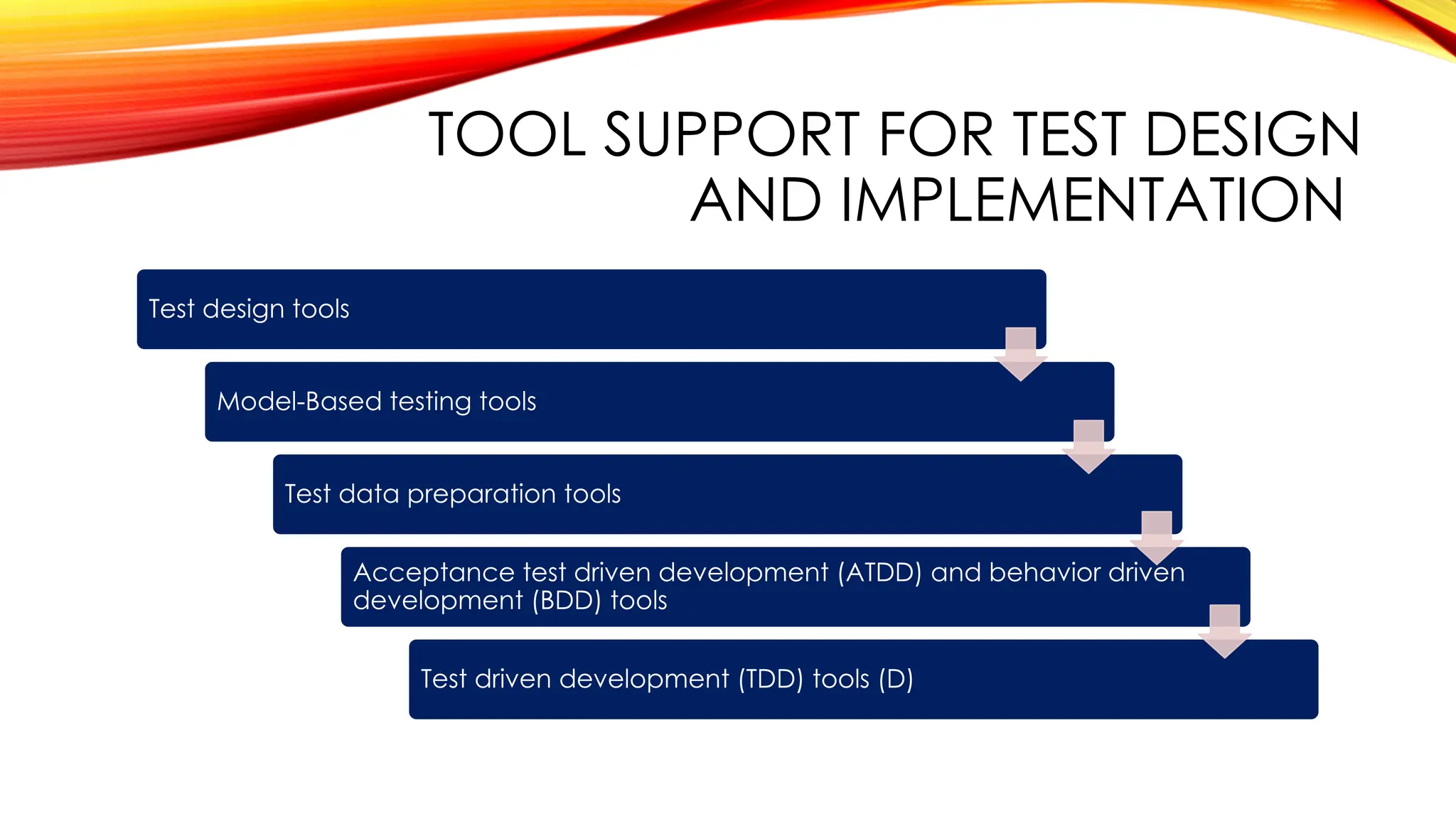

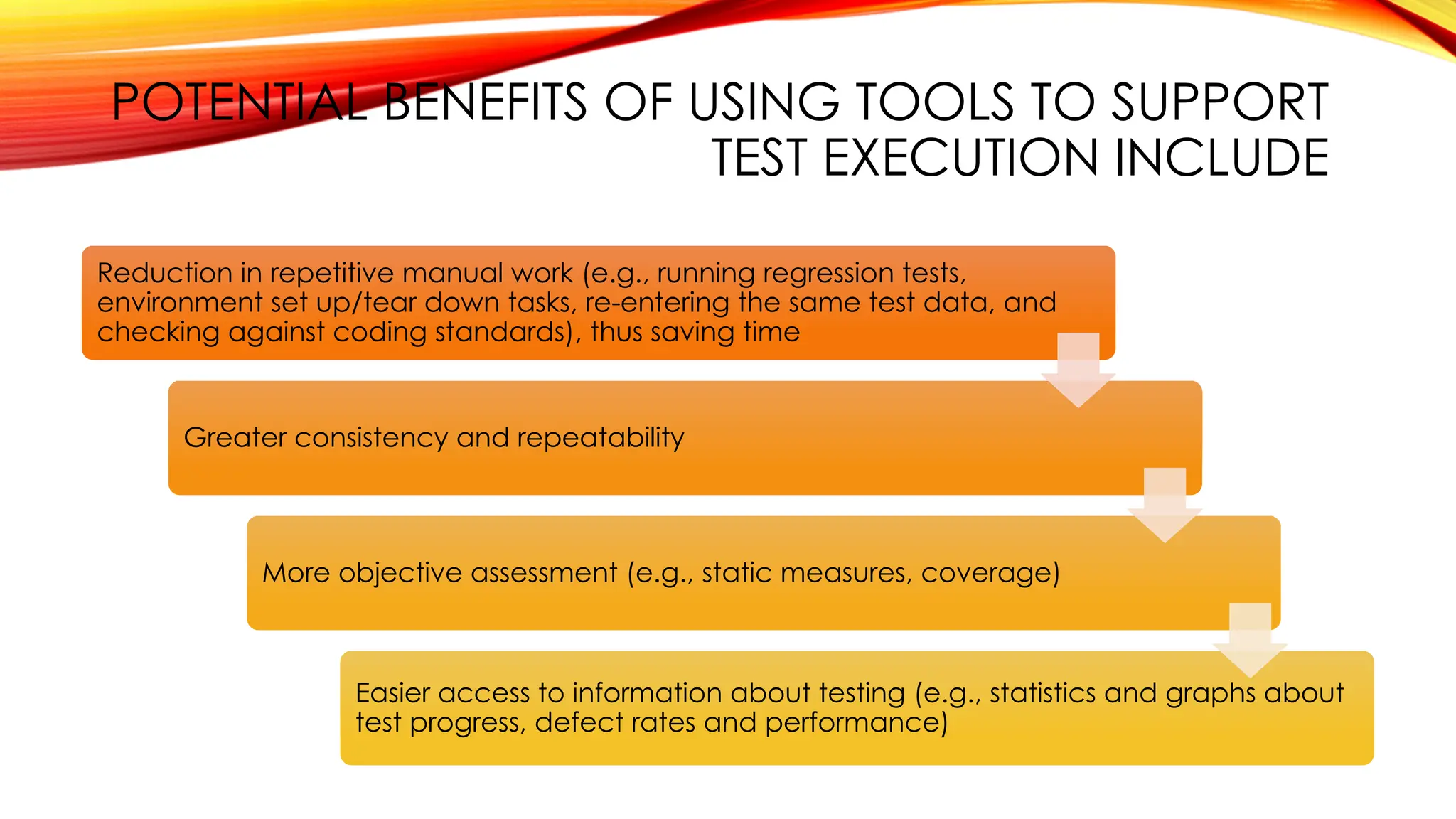

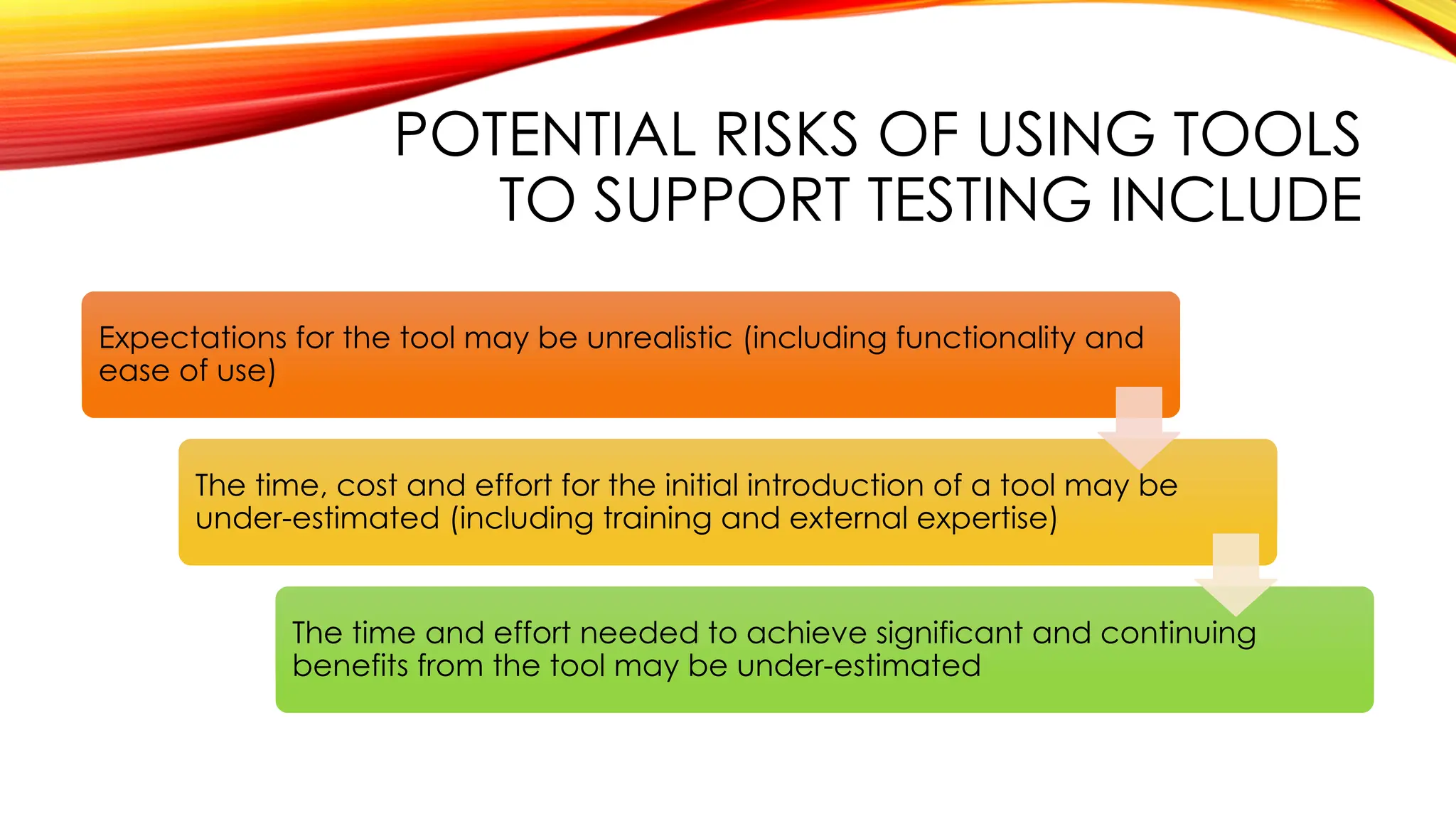

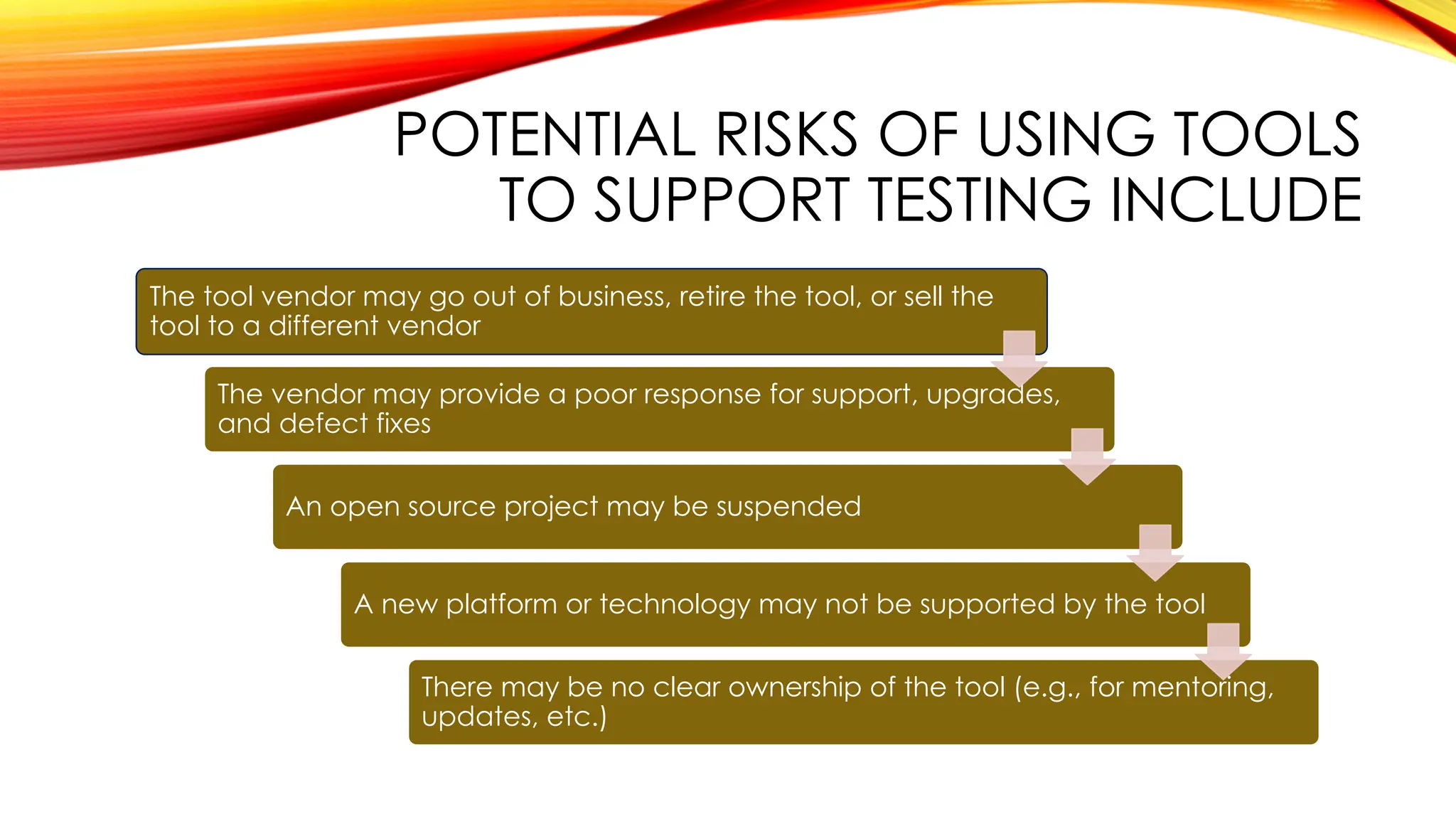

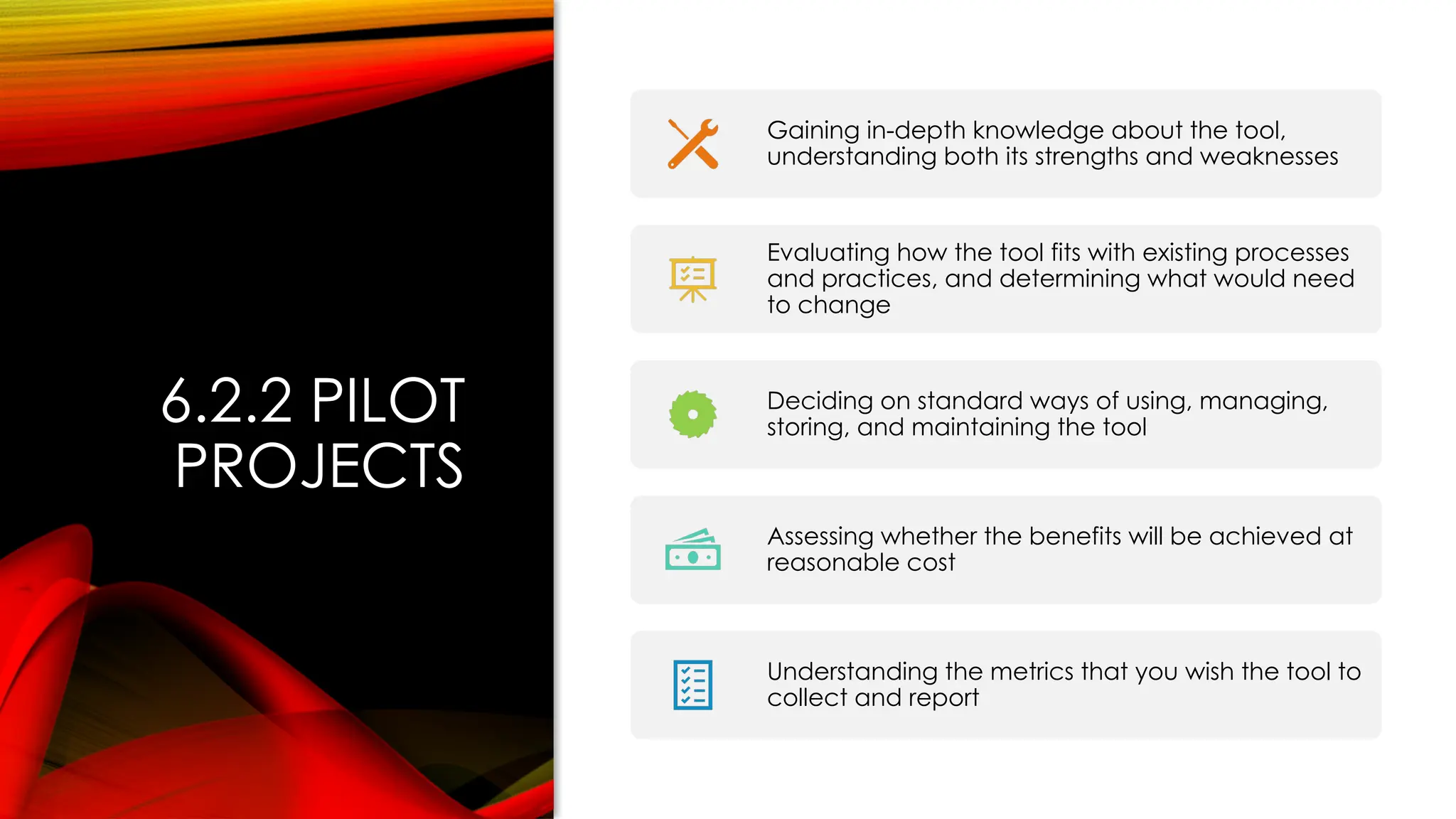

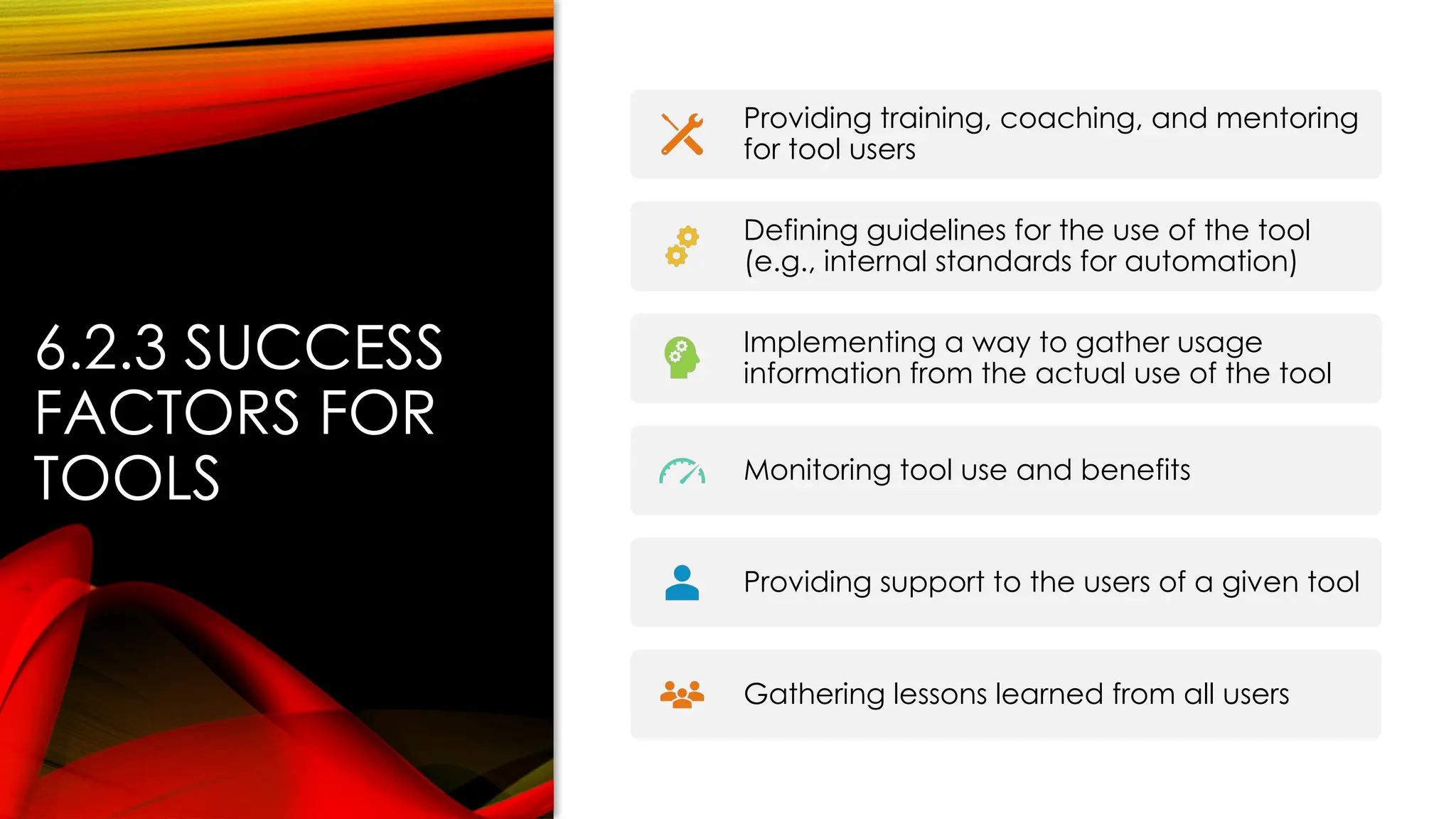

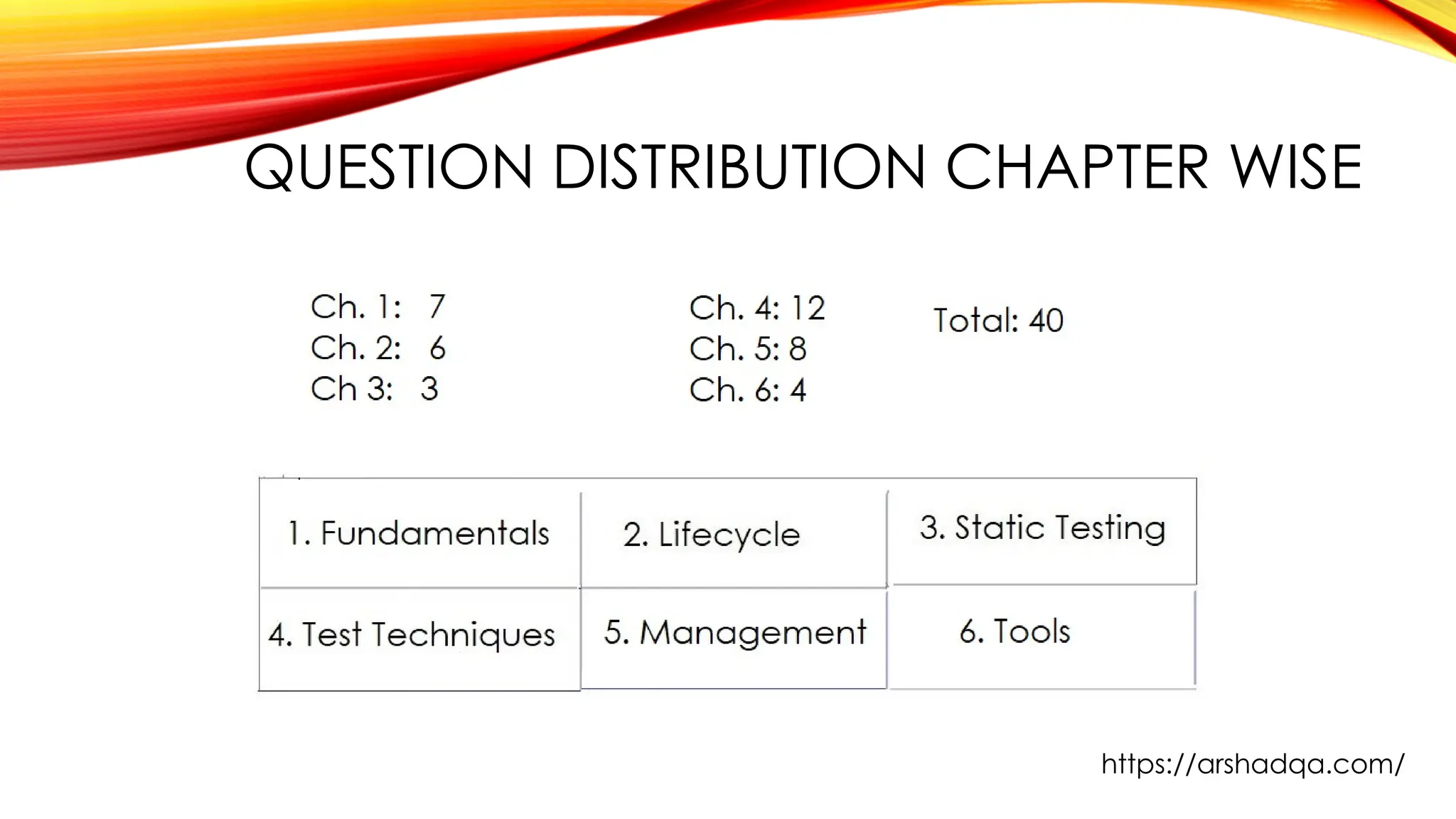

The ISTQB Foundation Level Exam Preparation Course is aimed at people interested in quality assurance who want to pass the ISTQB-FL exam on their first attempt. It offers 16 sample papers and advertises a 100% success guarantee. The document covers software quality and testing fundamentals, including definitions, principles, and processes, and emphasizes involving testers throughout the software development lifecycle. It also discusses psychological factors in testing and how effective communication improves collaboration among team members.