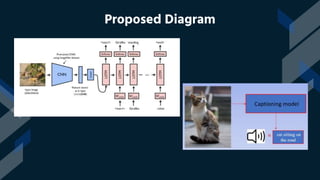

This project introduces an image captioning system that incorporates sound to generate more comprehensive captions. It combines two extensively trained models, one for computer vision and one for natural language processing, and recommends sounds based on the scene shown in the image. The system achieves a top-5 accuracy of 67% and a top-1 accuracy of 53%, and it is the first image captioning system to offer this level of accuracy while also incorporating audio. This has significant implications for helping visually impaired individuals understand visual content more vividly.
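For readers unfamiliar with the two reported metrics, the sketch below shows how top-1 and top-5 style accuracy are commonly computed: a prediction counts as correct when the true label is among the k highest-scoring classes. It assumes this standard definition applies here. The function name `top_k_accuracy`, the toy scores, and the labels are hypothetical illustrations, not data or code from the project.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]],
    labels: Sequence[int],
    k: int,
) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        raise ValueError("need at least one sample")

    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered by descending score; keep only the first k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Hypothetical toy data: 4 samples, 3 classes (not results from the project).
scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.5, 0.4, 0.1],
    [0.2, 0.5, 0.3],
]
labels = [0, 1, 2, 1]

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
print(f"top-1: {top1:.2f}, top-2: {top2:.2f}")
```

Top-k accuracy can never be lower than top-1 accuracy on the same predictions, because every top-1 hit is also a top-k hit. This matches the relationship between the 53% and 67% figures reported above.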