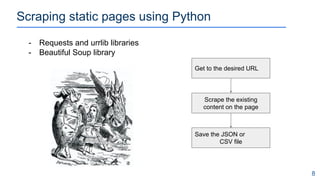

This document provides an overview of techniques for scraping data from websites as an economics student. It begins with an introduction and agenda, then covers the theoretical background of web scraping. It presents several no-coding tricks using browser extensions and Google Spreadsheets. Methods of scraping static pages using Python, Beautiful Soup, and Requests are described. Finally, it discusses scraping dynamic pages using Selenium and considerations for web etiquette.

![Navigating HTML using XPATH

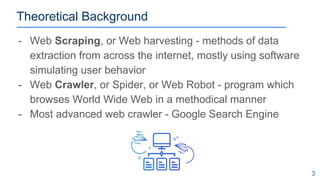

5

/html[@class='js bootstrap-anchors-processed']/body[@class='html not-front not-logged-in one-sidebar sidebar-first

page-traineeships navbar-is-fixed-top']/div[@class='main-container container']/div[@class='row']/div[@class='region

region-content col-sm-9']/section[@id='block-system-main']/div[@class='view view-erasmusintern-traineeships-search

view-id-erasmusintern_traineeships_search view-display-id-page media-list-container

view-dom-id-bfcb6580db560c43fd748355dce05662']/div[@class='view-content']/div[1]/div[@class='node node-traineeship

view-mode-media_list clearfix']/div[@class='row media-list-items']/div[@class='col-md-12']/div[@class='ds-header

inline-header-content']/div[@class='field field-name-title field-type-ds field-label-hidden

pull-left']/div[@class='field-items']/div[@class='field-item even']/h3[@class='dot-title']/a

XPath Query:](https://image.slidesharecdn.com/howtoscrapedataaseconomicsstudent-181214095319/85/How-to-scrape-data-as-economics-student-6-320.jpg)

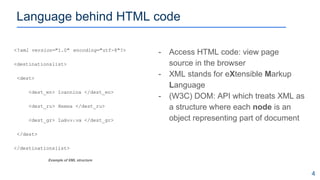

![Navigating HTML using XPATH

6

Key Characters:

/ : starts the root,leads to children`s

node;

// : starts wherever (relative path);

@ : select attributes;

[] : answers question Which one?

[*] : grabs everything

XPATH: “//div[@class='field-item even']/h3[@class='dot-title']/a[1]”

Types of nodes:

- element

- attribute

- text

- namespace

- processing instructions

- comment

- document node](https://image.slidesharecdn.com/howtoscrapedataaseconomicsstudent-181214095319/85/How-to-scrape-data-as-economics-student-7-320.jpg)

![Scraping static pages using BS4 + Requests

9

Python Source Code example of JSON file

import requests

import BeautifulSoup as BS

def save_the_JSON :***

url = requests.get(erasmus_intern_url )

soup = BS(url, "lxml")

scrape_results = soup.find_all("div", class_="field-item even" )

for element in scrape_results :

title = element.find("h3",

class_='dot-title' ).find_next('a').get_text()

save_the_JSON ()

“results”:[

{

“id” : 1

“city” : “Berlin”,

“company” : “UIZ”,

“title” : “Manager”

}

]](https://image.slidesharecdn.com/howtoscrapedataaseconomicsstudent-181214095319/85/How-to-scrape-data-as-economics-student-10-320.jpg)