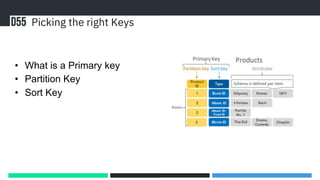

The document discusses best practices and common pitfalls when using DynamoDB, including the importance of single table design and proper key selection. It highlights the need for optimizing data querying and managing data deletion through TTL. The content is aimed at ensuring better application performance and cost-effectiveness in using DynamoDB.