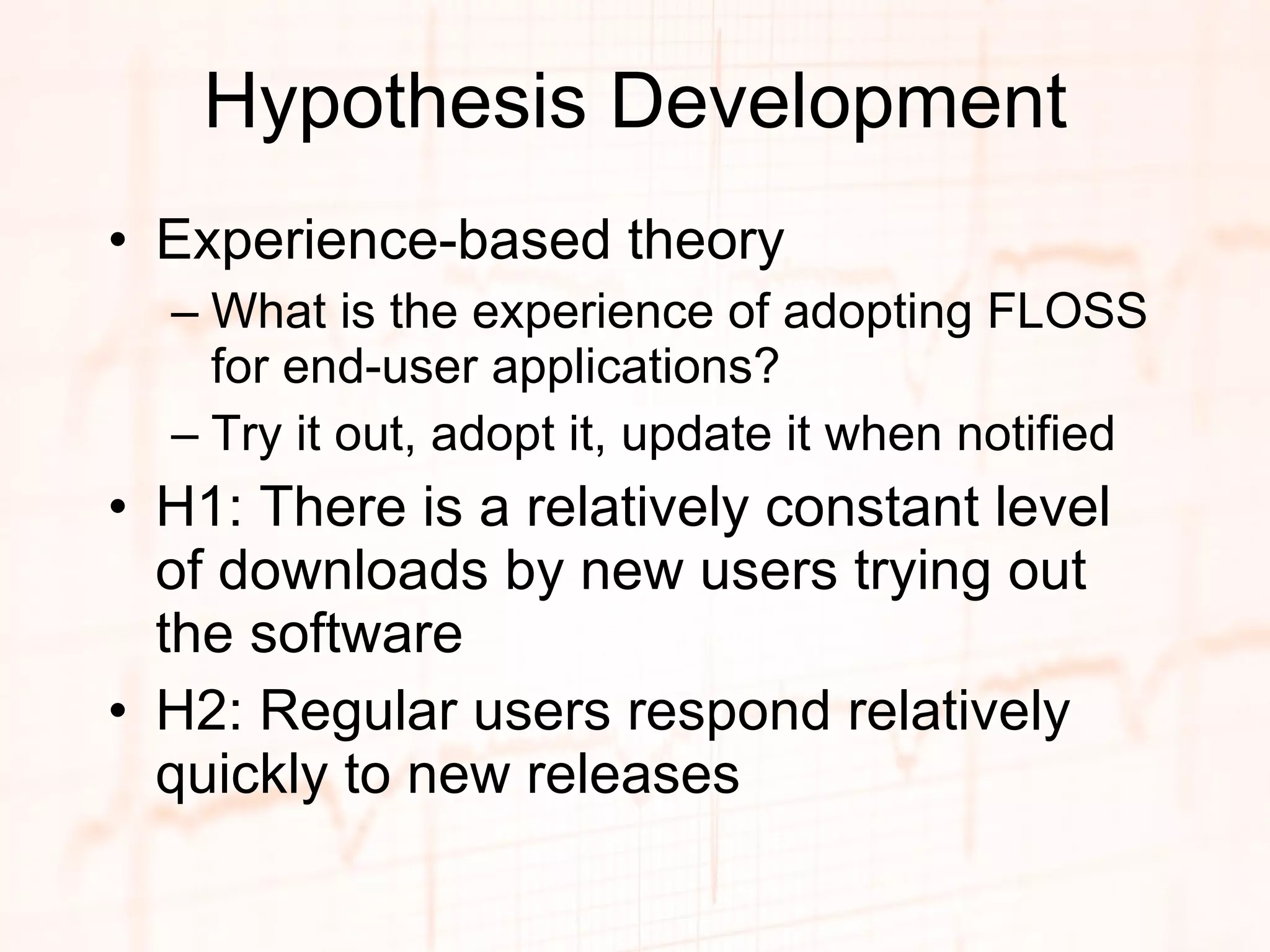

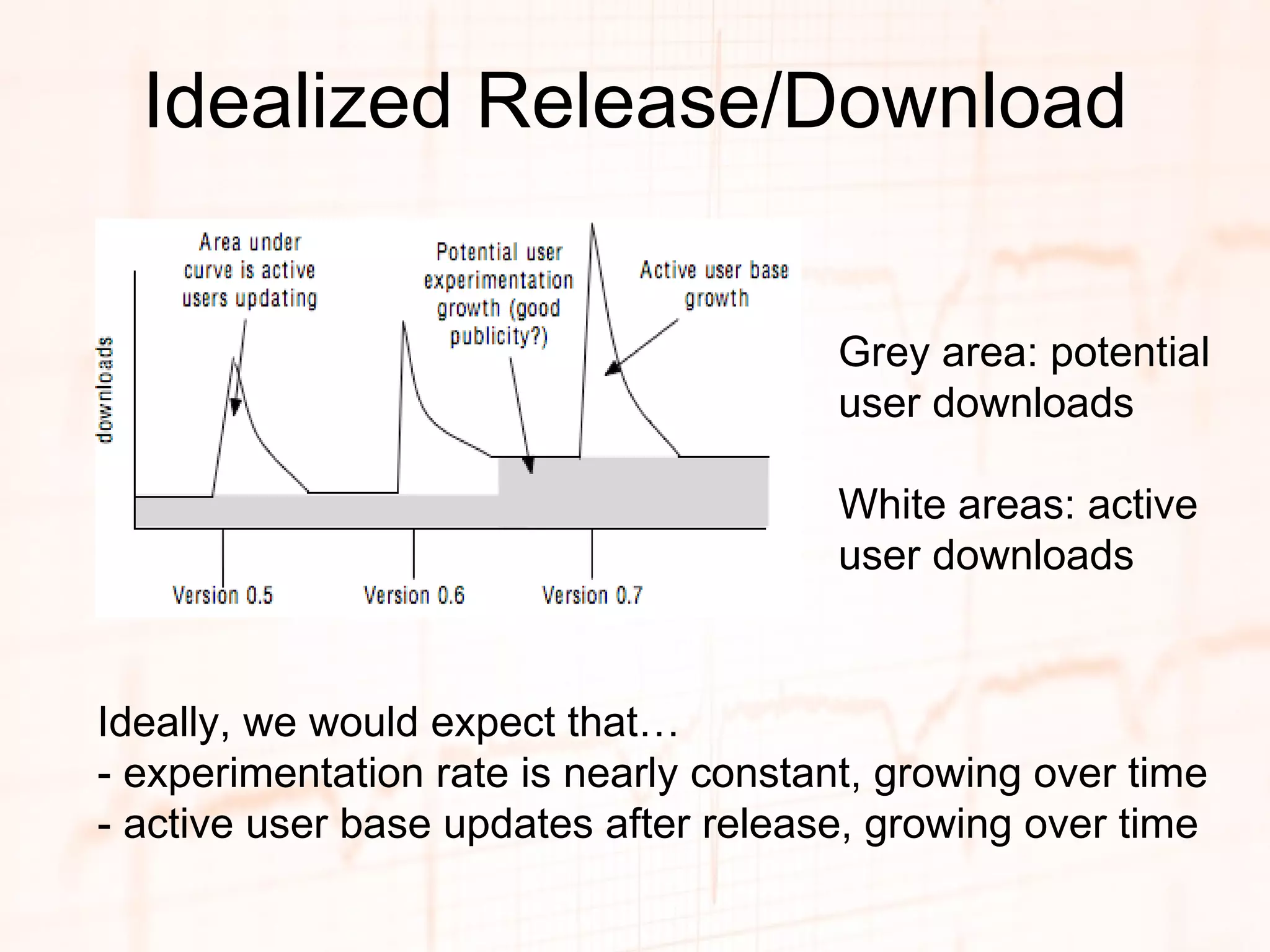

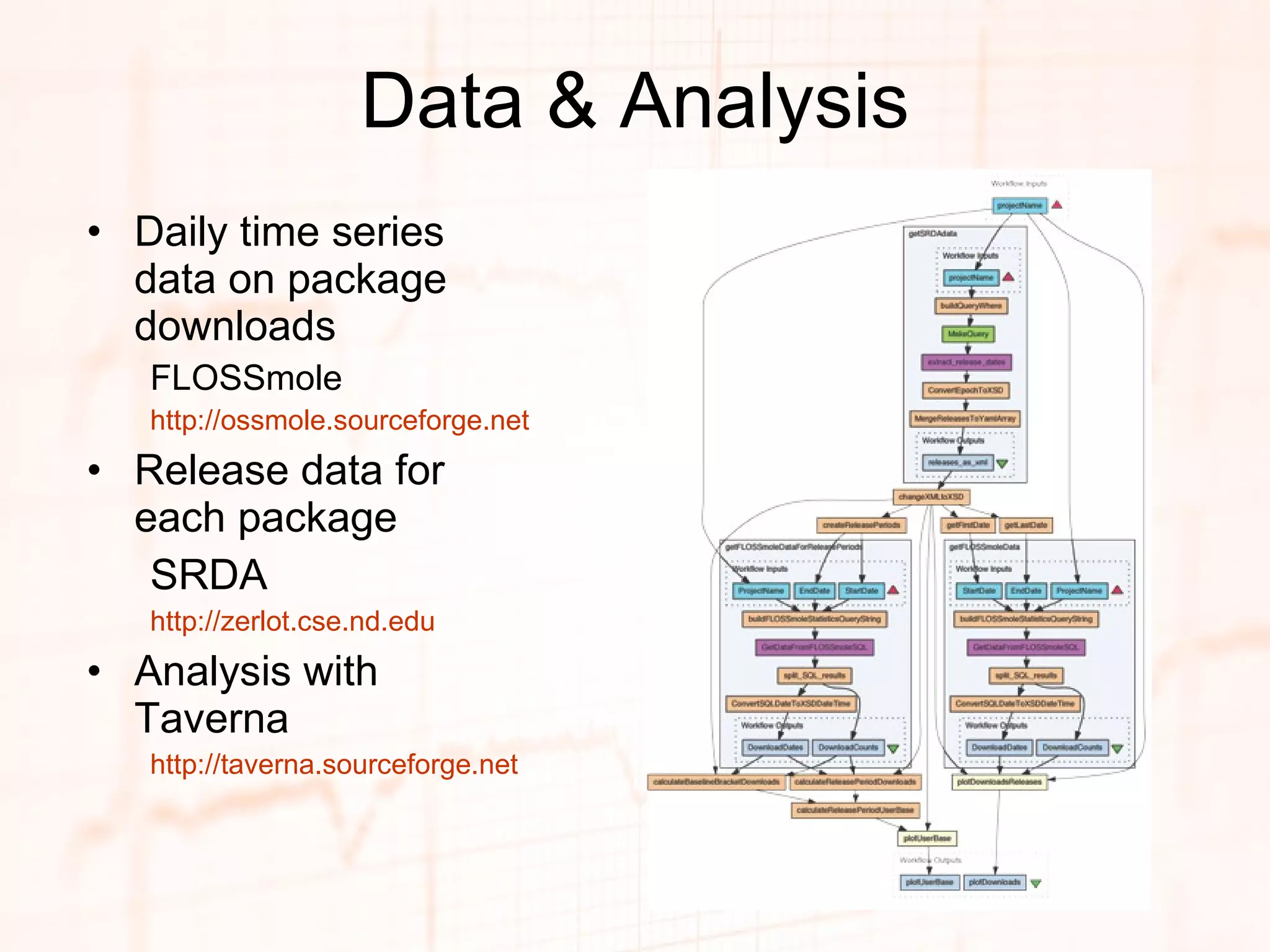

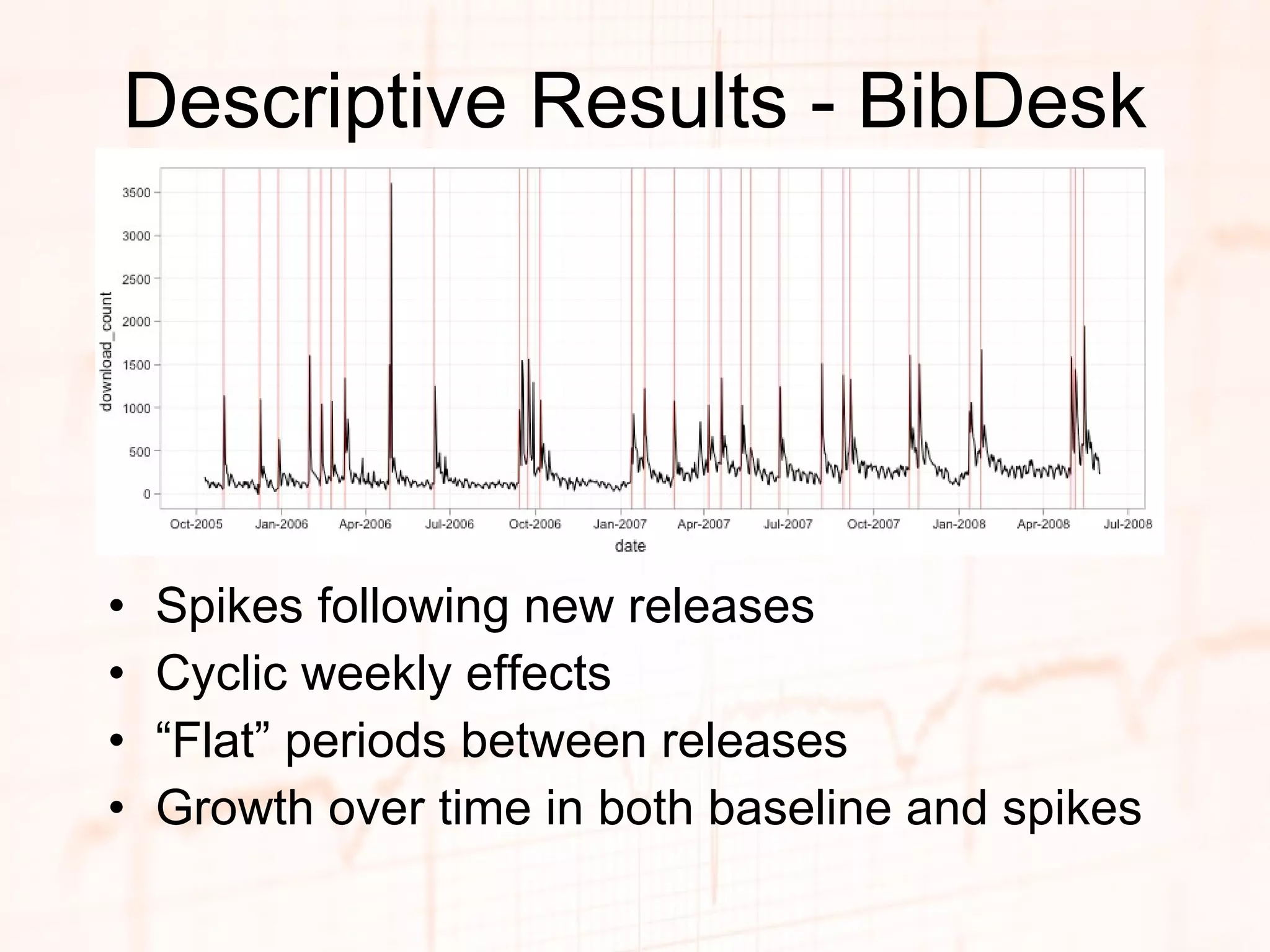

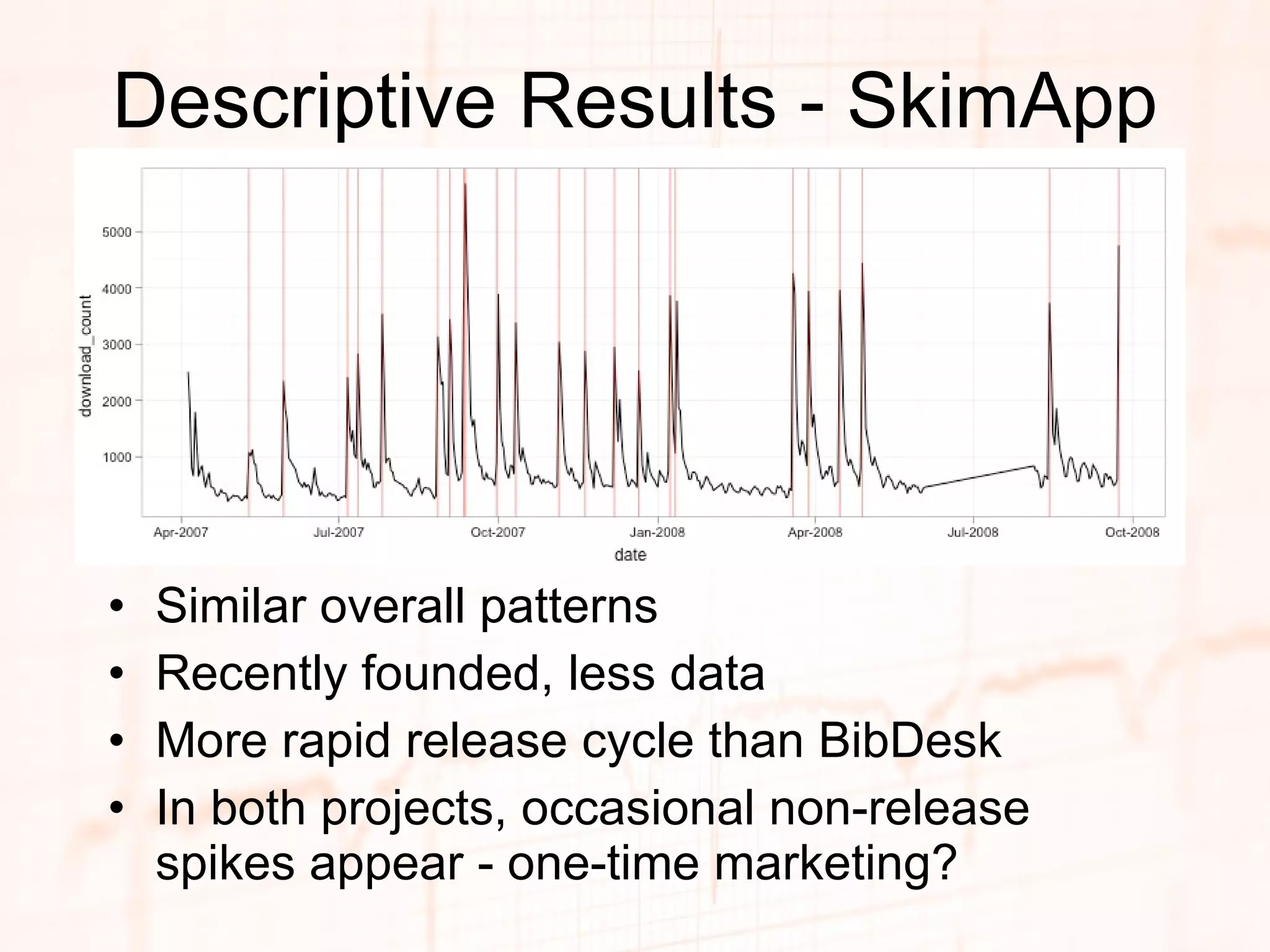

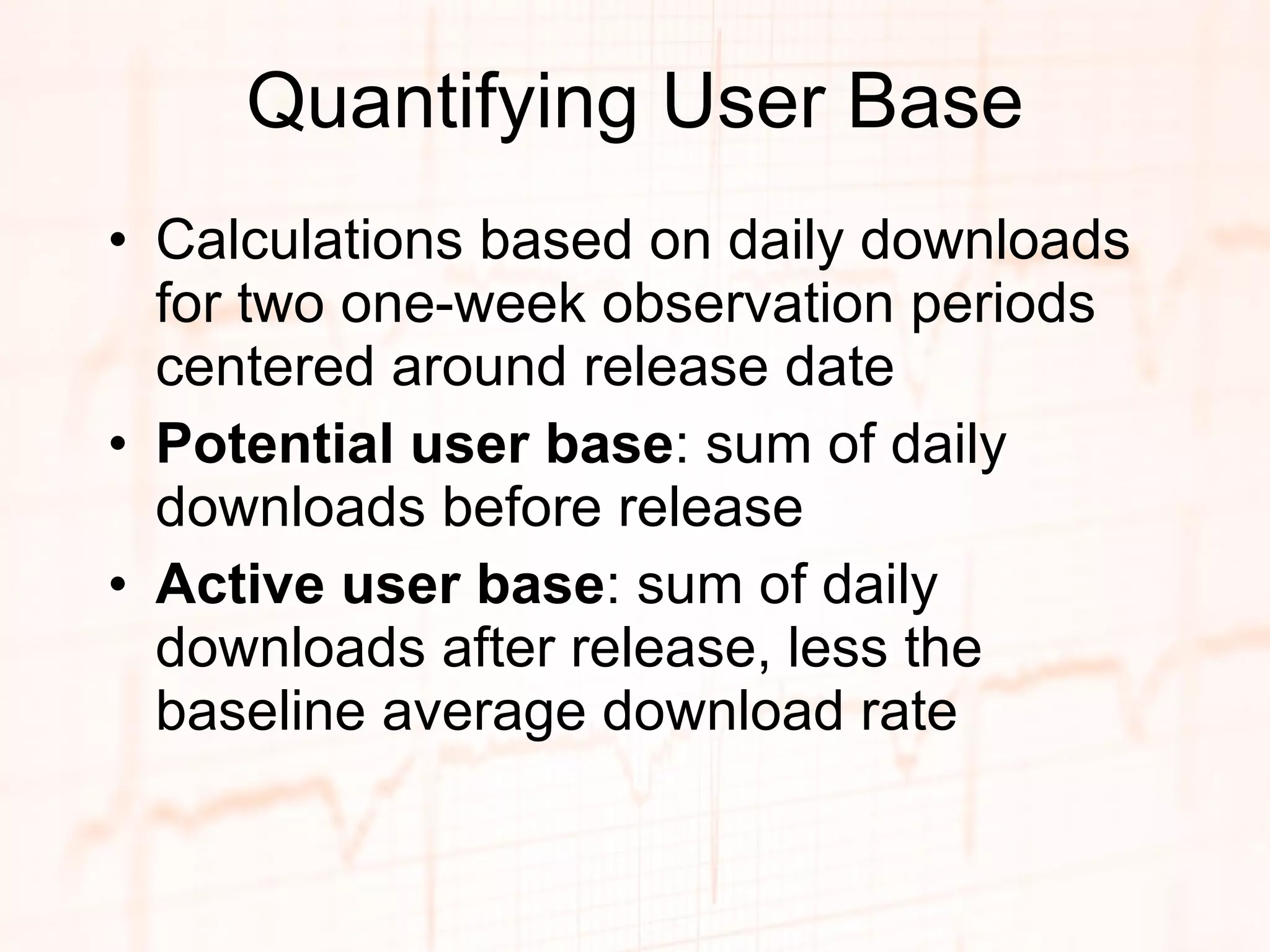

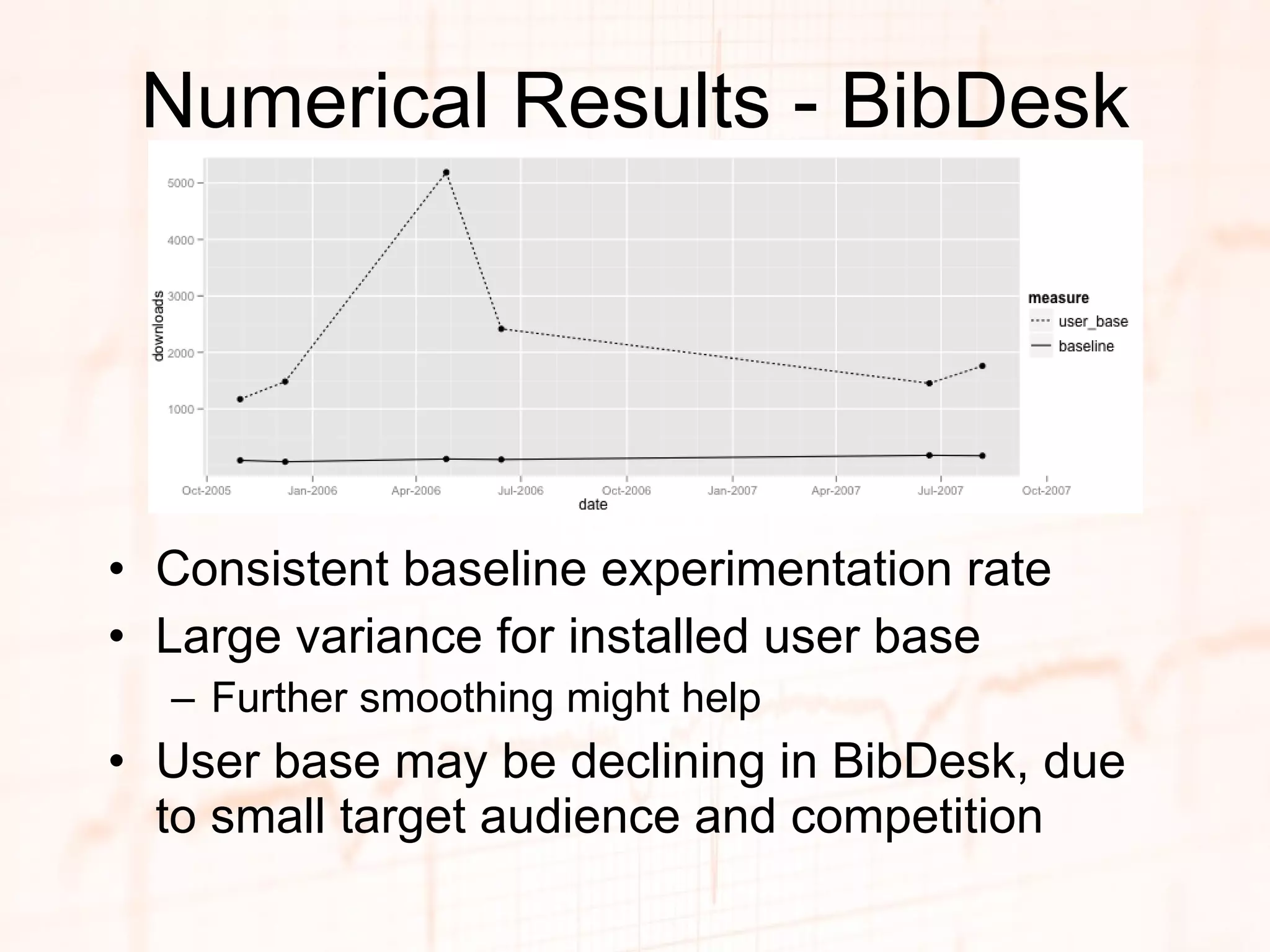

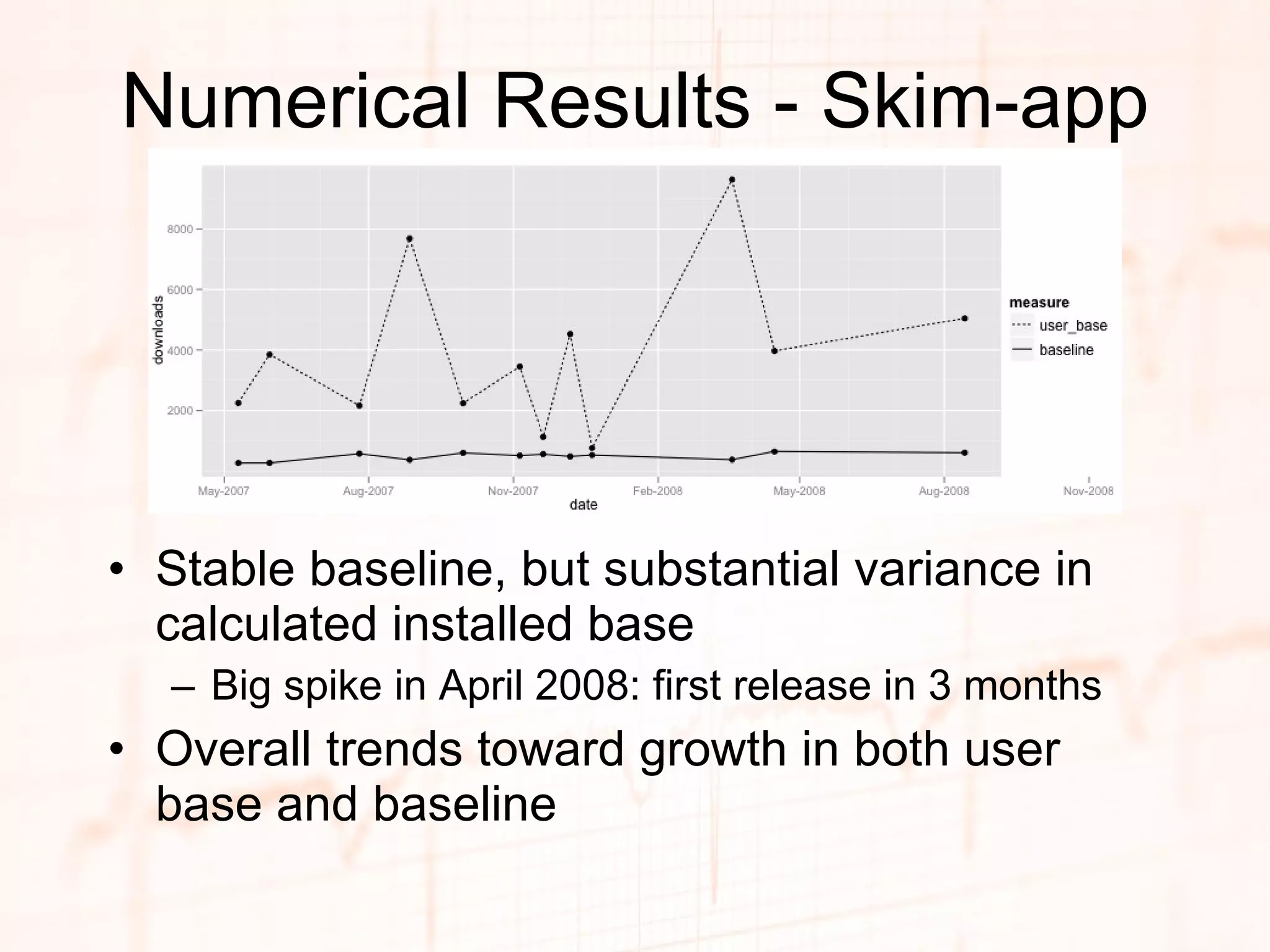

This document proposes algorithms for estimating the active user base and the general level of interest in free/libre and open source software (FLOSS) projects from download counts over time. It analyzes download data from two FLOSS projects and finds that downloads spike after each new release and then taper off, settling to a relatively constant baseline between releases. The authors calculate the potential user base from downloads before a release and the active user base from downloads after it, excluding the baseline. Download data is an imperfect proxy for use, but these measures offer a way to quantify user engagement with a FLOSS project over time.
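The summary above can be illustrated with a minimal sketch. This is not the authors' algorithm, whose details are not given here. It assumes daily download counts in a list, a known release day index, a fixed window of days on each side of the release, and the median of the pre-release window as the baseline. The function name `estimate_release_metrics`, the `window` parameter, and the choice of the median are all hypothetical.

```python
from statistics import median


def estimate_release_metrics(daily_downloads, release_day, window=14):
    """Rough per-release estimates from daily download counts.

    Assumptions (not taken from the source): a fixed window of days on
    each side of the release, and the median of the pre-release window
    as the between-release baseline.
    """
    before = daily_downloads[max(0, release_day - window):release_day]
    after = daily_downloads[release_day:release_day + window]
    if not before or not after:
        raise ValueError("need download data on both sides of the release")

    # Baseline: typical daily downloads when no release is in progress.
    baseline = median(before)

    # Potential user base: downloads in the window before the release.
    potential = sum(before)

    # Active user base: post-release downloads in excess of the baseline.
    active = sum(max(0, d - baseline) for d in after)

    return {
        "baseline": baseline,
        "potential_user_base": potential,
        "active_user_base": active,
    }
```

For example, with five quiet days of 10 downloads followed by a release spike of 50, 30, 20, 10, 10, calling `estimate_release_metrics([10] * 5 + [50, 30, 20, 10, 10], 5, window=5)` yields a baseline of 10, a potential user base of 50, and an active user base of 70. A real analysis would need to choose the window and baseline estimator to suit the project's release cadence.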

![Thanks! Questions? {awiggins|crowston}@syr.edu, [email_address], floss.syr.edu, flosshub.org. Background image derived from photo by Vincent Kaczmarek, http://www.flickr.com/photos/kaczmarekvincent/3263200507/](https://image.slidesharecdn.com/heartbeat-090608184637-phpapp01/75/Heartbeat-Measuring-Active-User-Base-and-Potential-User-Interest-17-2048.jpg)