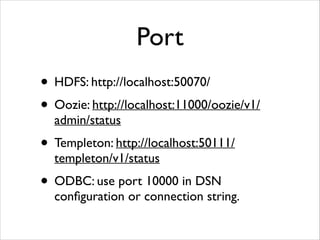

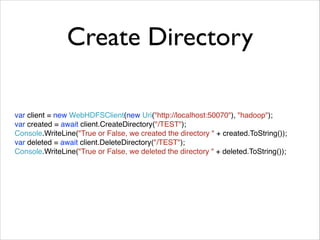

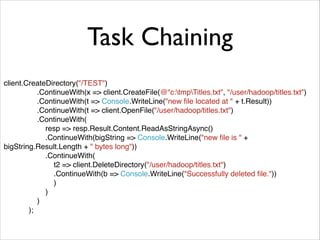

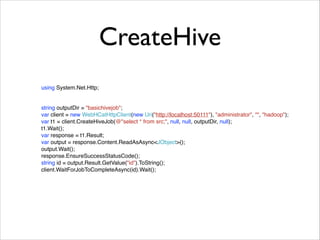

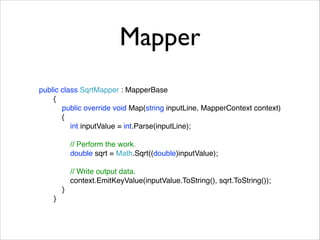

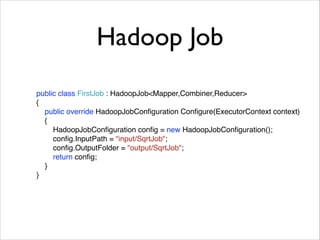

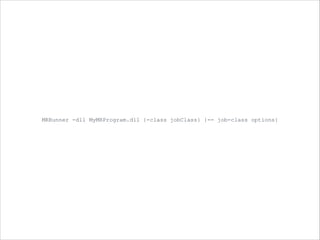

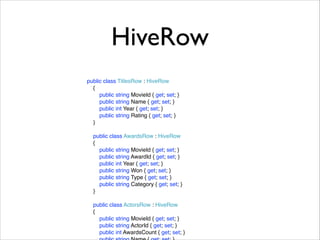

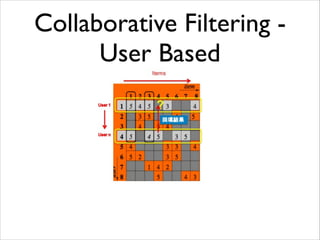

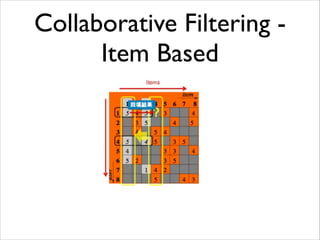

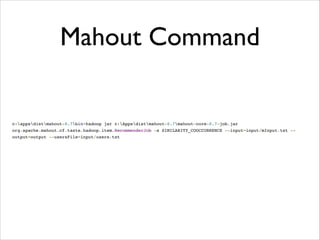

This document covers programming with HDInsight: important port numbers, using WebHDFS and WebHCat to interact with HDFS and Hive, running MapReduce jobs with .NET, and using Mahout for machine learning tasks such as classification, clustering, and collaborative-filtering recommendations.
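WebHDFS and WebHCat are both plain REST interfaces, so most of the work is building the right URL or form body. The sketch below shows that shape and makes no network calls. The WebHDFS pattern `/webhdfs/v1/<path>?op=<OP>` and the WebHCat (Templeton) prefix `/templeton/v1/` with the `user.name`, `execute`, and `statusdir` parameters follow the Apache documentation. Several values are assumptions for illustration only: the host names, the NameNode HTTP port `50070` (the Hadoop 2 default, which may differ on your cluster), the `<cluster>.azurehdinsight.net` gateway form, and the helper function names.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host: str, path: str, op: str, port: int = 50070, **params: str) -> str:
    """Build a WebHDFS REST URL of the form http://<host>:<port>/webhdfs/v1/<path>?op=<OP>.

    The default port 50070 is an assumption (Hadoop 2 NameNode HTTP default).
    """
    if not path.startswith("/"):
        raise ValueError("WebHDFS paths must be absolute")
    # WebHDFS operation names are upper case, e.g. LISTSTATUS, OPEN, MKDIRS.
    query = urlencode({"op": op.upper(), **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


def webhcat_url(cluster: str, resource: str, **params: str) -> str:
    """Build a WebHCat (Templeton) URL through an assumed HDInsight HTTPS gateway."""
    base = f"https://{cluster}.azurehdinsight.net/templeton/v1/{resource}"
    return f"{base}?{urlencode(params)}" if params else base


def hive_job_form(user: str, query: str, statusdir: str) -> str:
    """Form-encode the body for a POST to /templeton/v1/hive (hypothetical helper)."""
    return urlencode({"user.name": user, "execute": query, "statusdir": statusdir})


if __name__ == "__main__":
    # List a directory over WebHDFS (hypothetical host and user).
    print(webhdfs_url("namenode", "/user/demo", "liststatus", **{"user.name": "admin"}))
    # Check that the WebHCat service is up (hypothetical cluster name).
    print(webhcat_url("mycluster", "status", **{"user.name": "admin"}))
    # Body for submitting a Hive query through WebHCat.
    print(hive_job_form("admin", "SHOW TABLES", "/example/status"))
```

Keeping URL construction separate from the HTTP call makes it easy to inspect or log exactly what will be sent, and lets you plug in whatever HTTP client and authentication your cluster requires.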