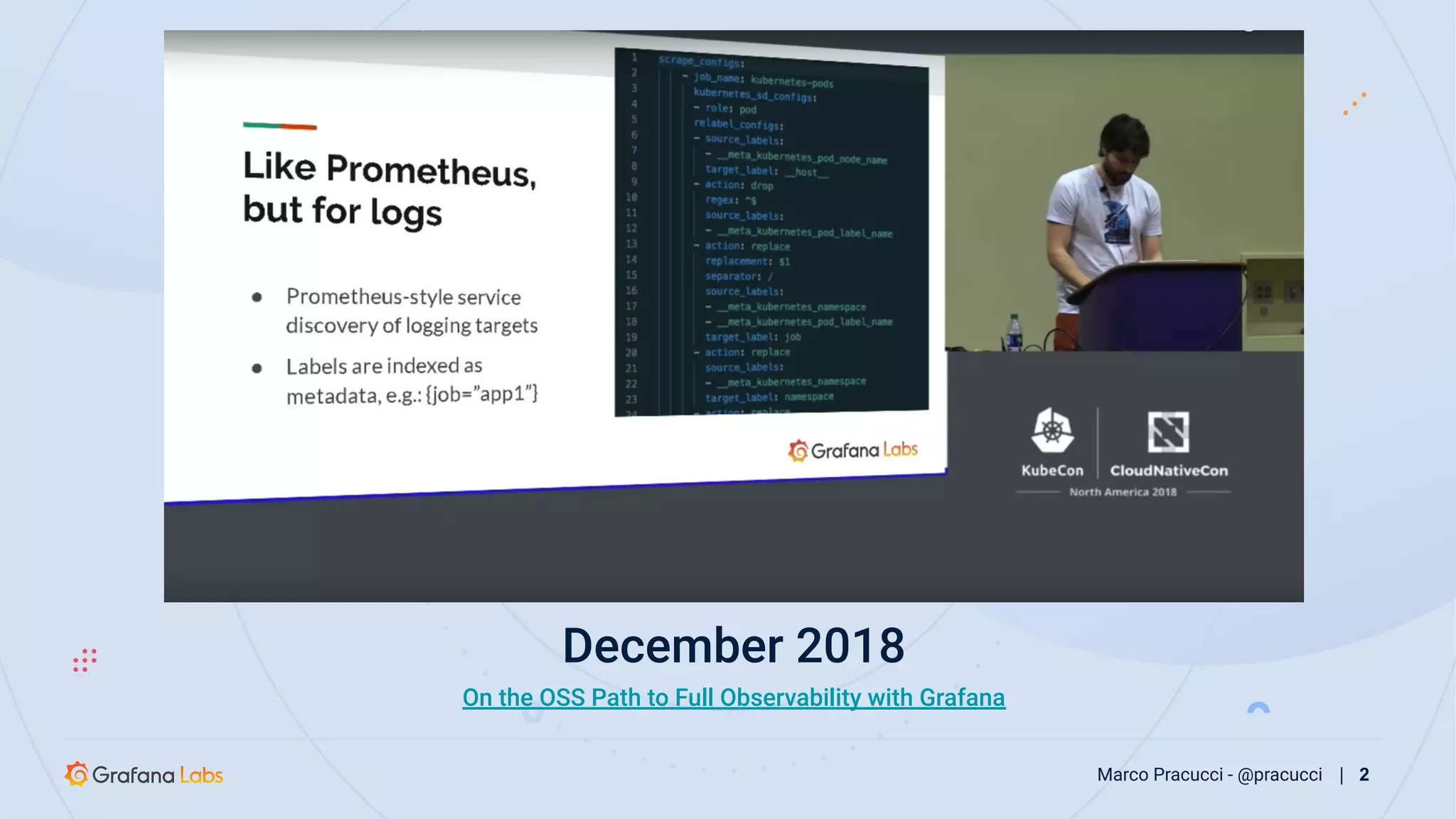

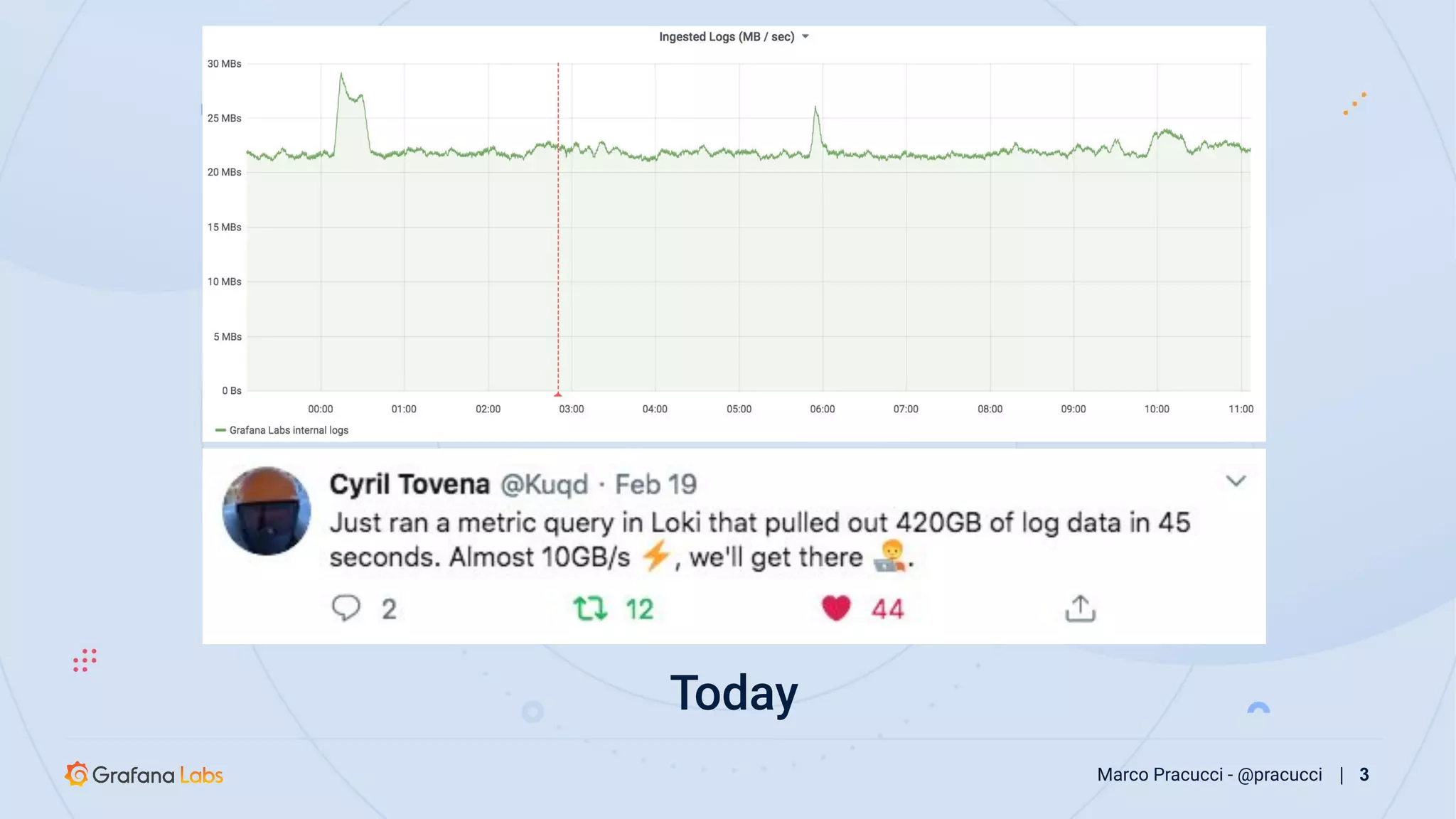

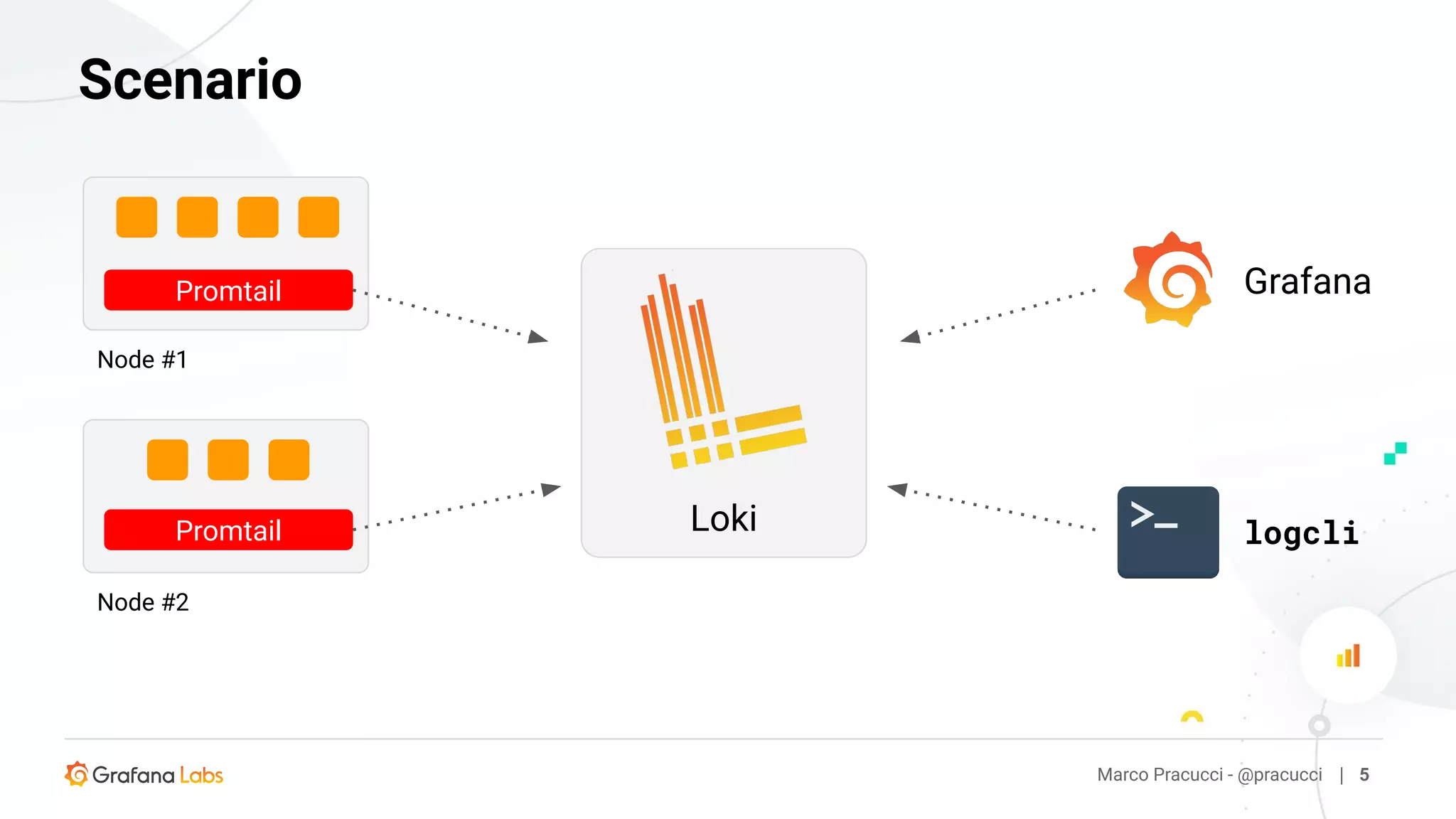

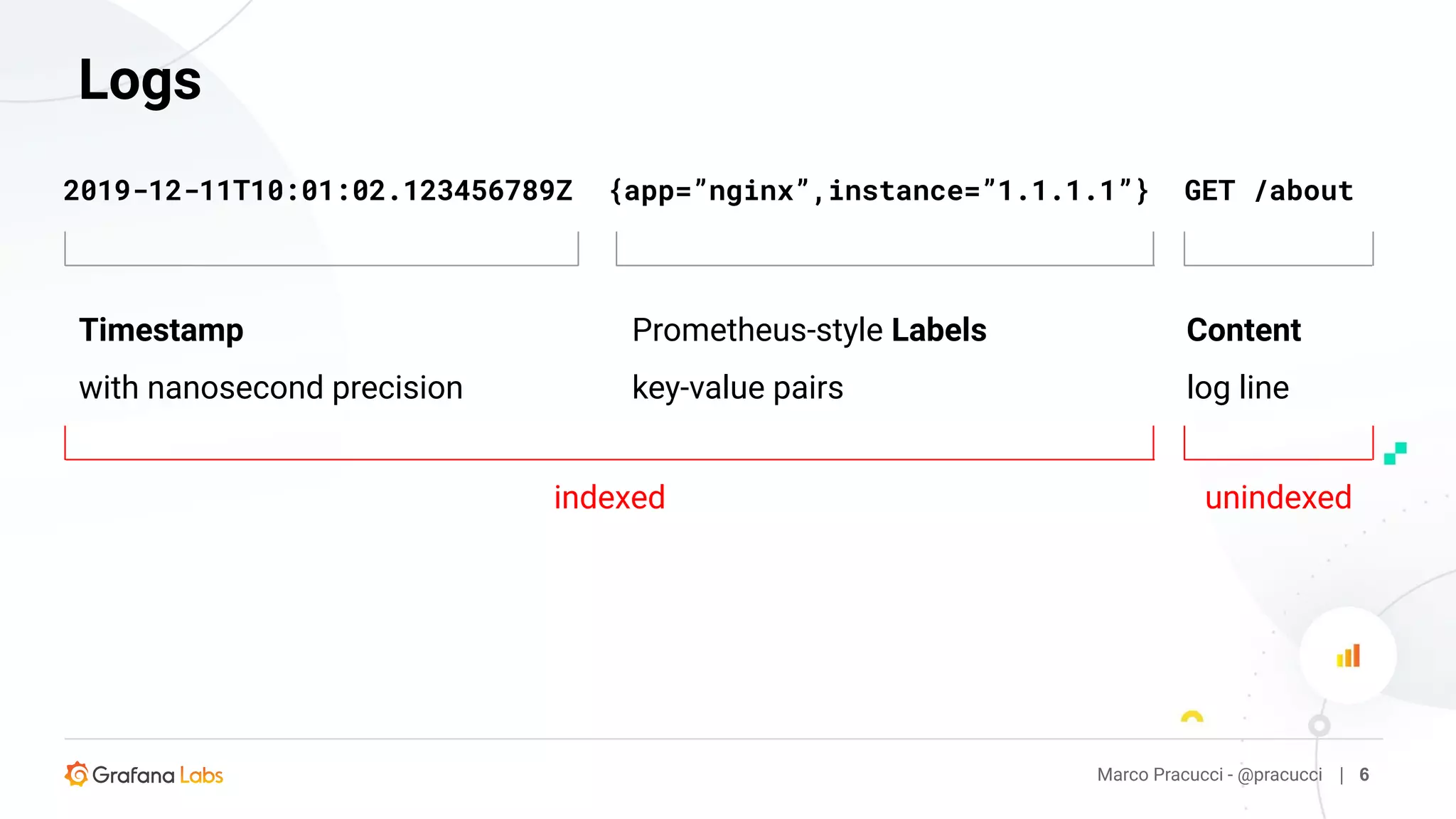

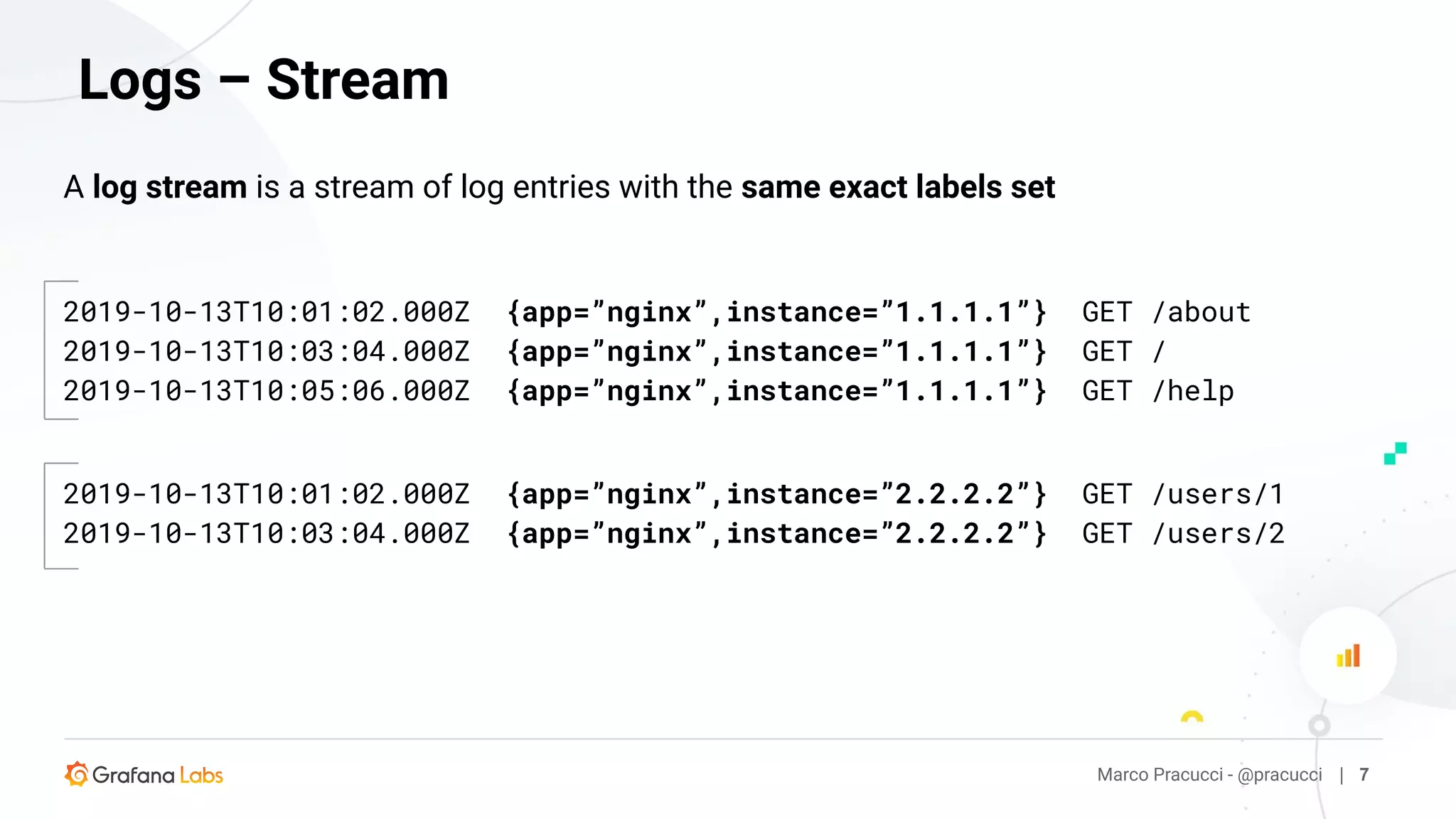

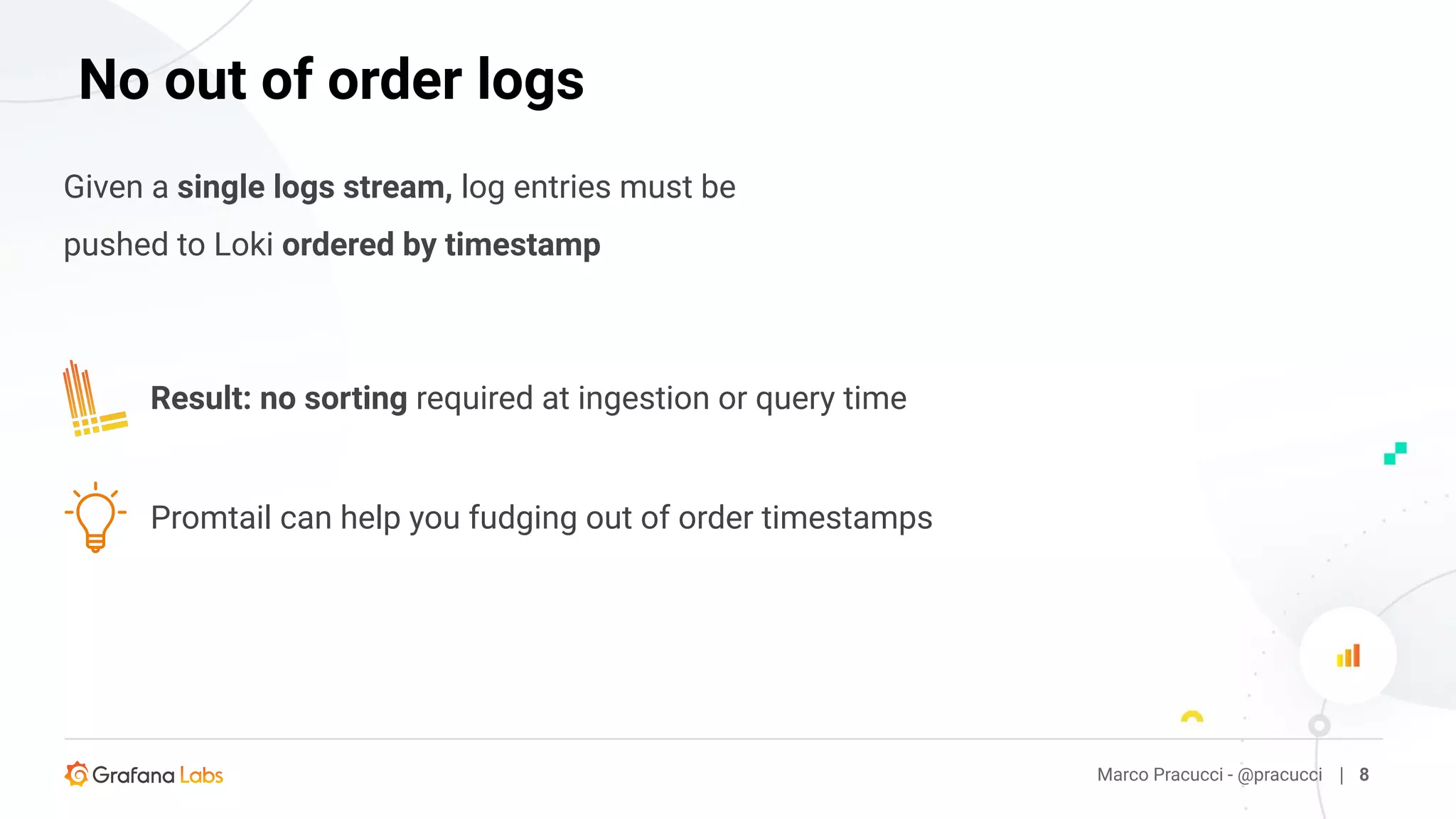

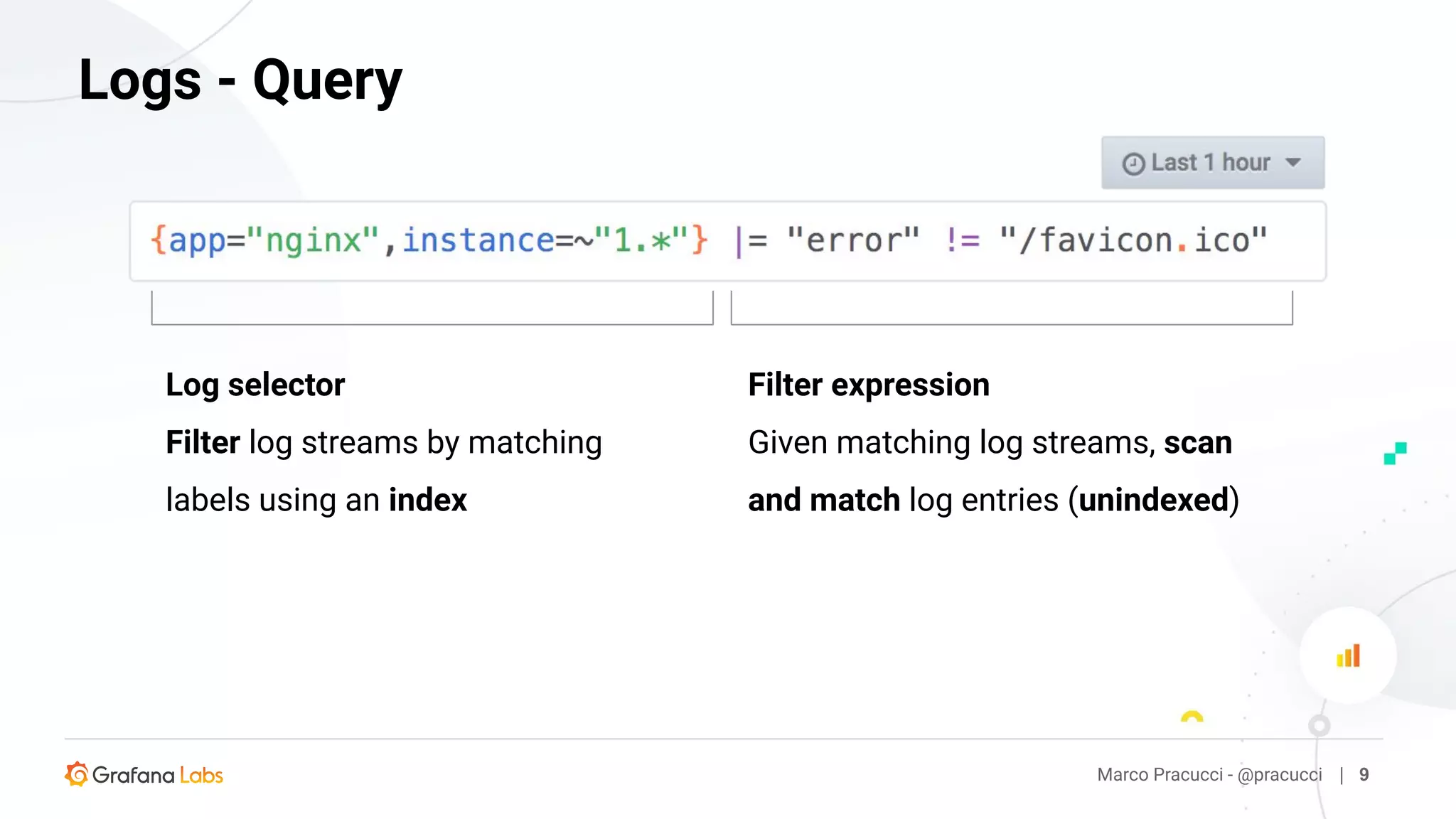

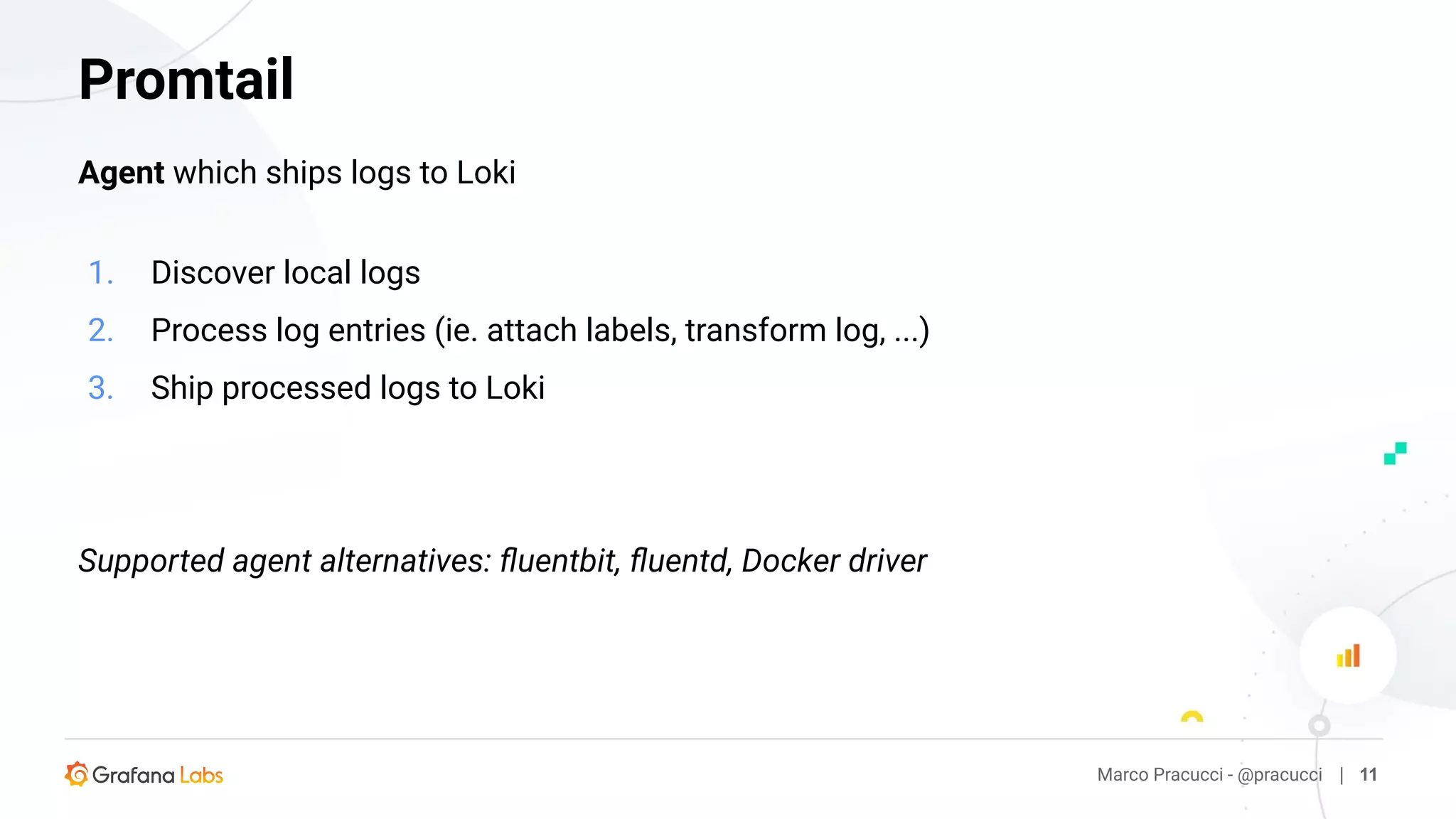

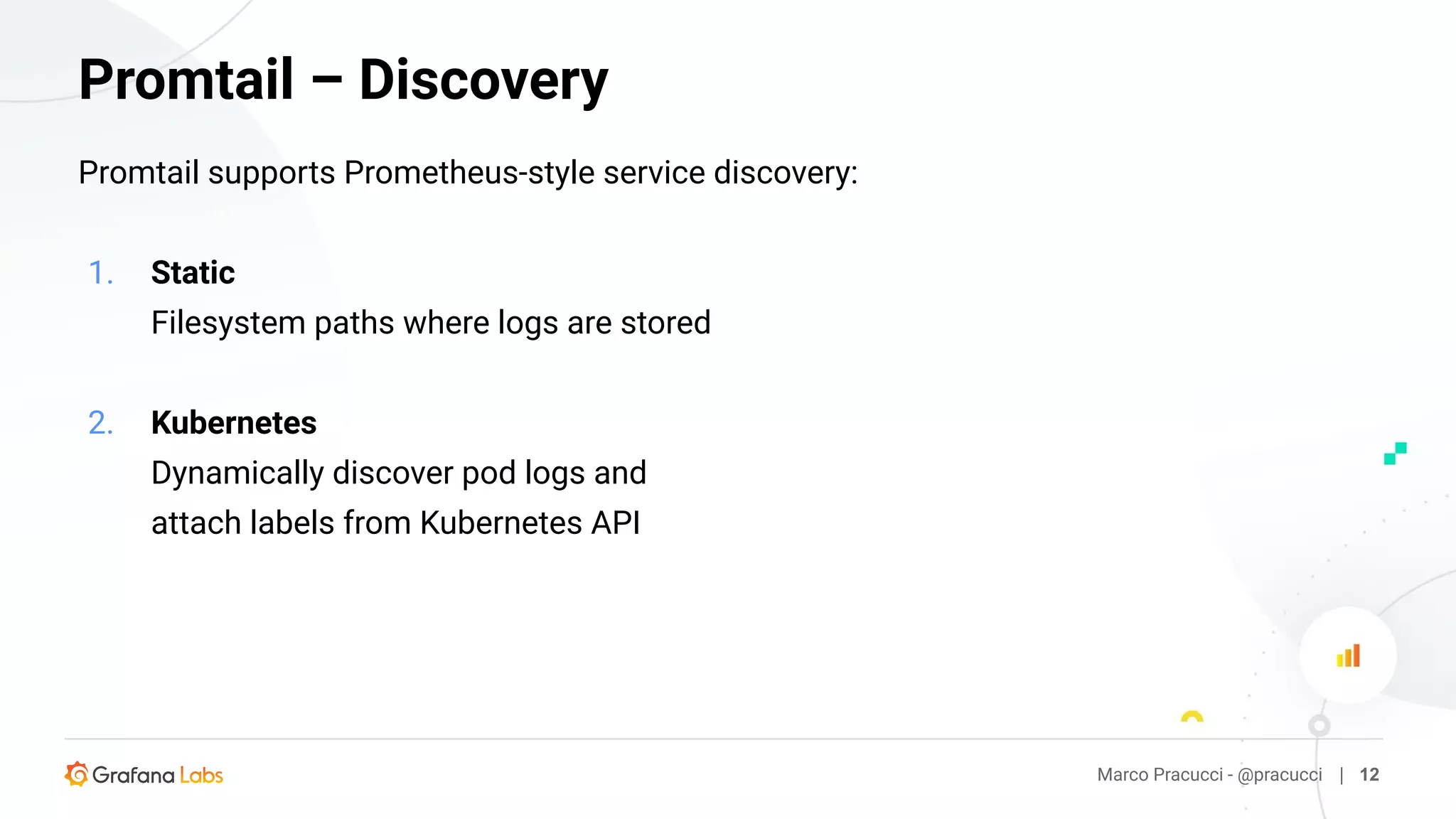

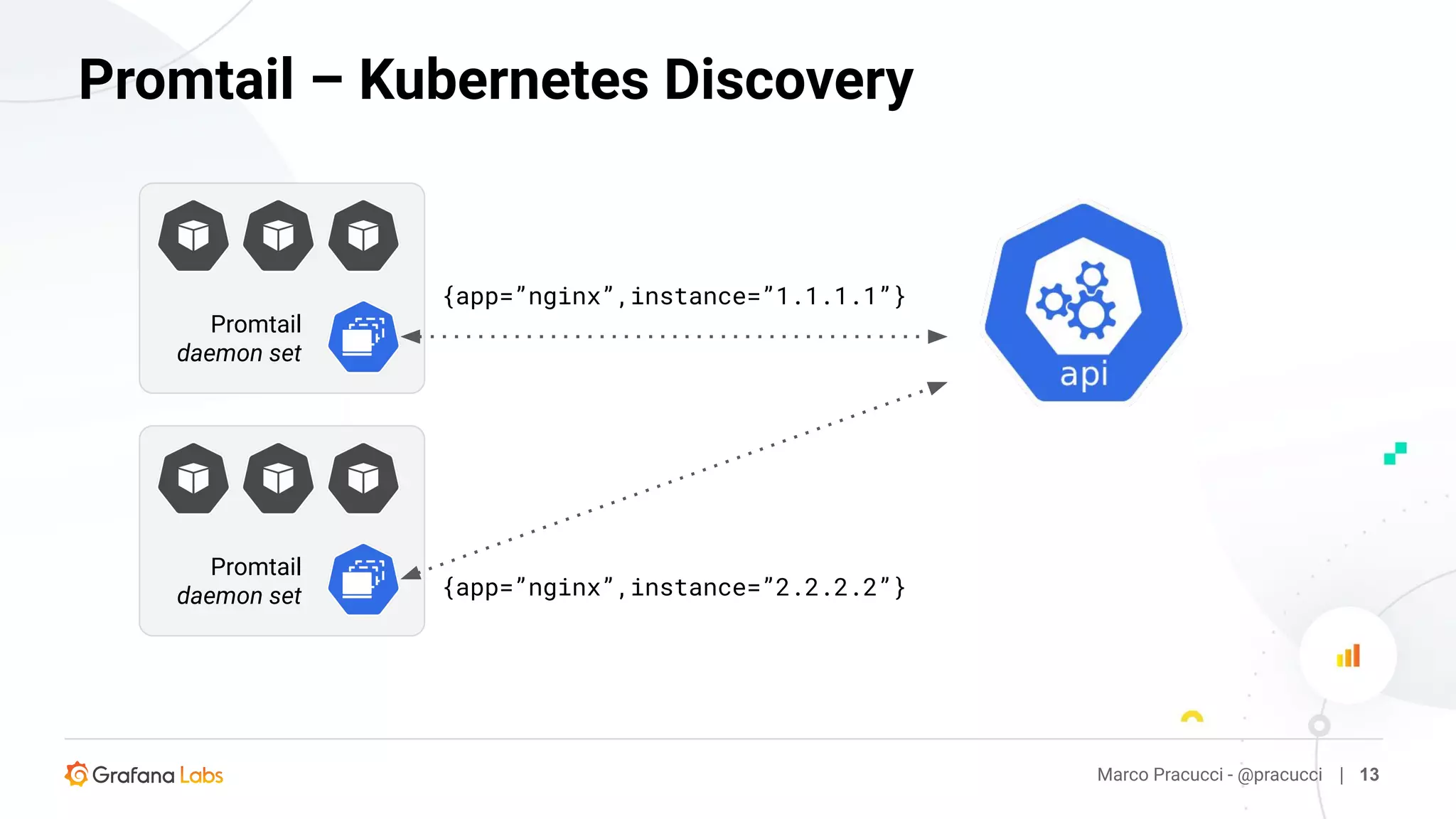

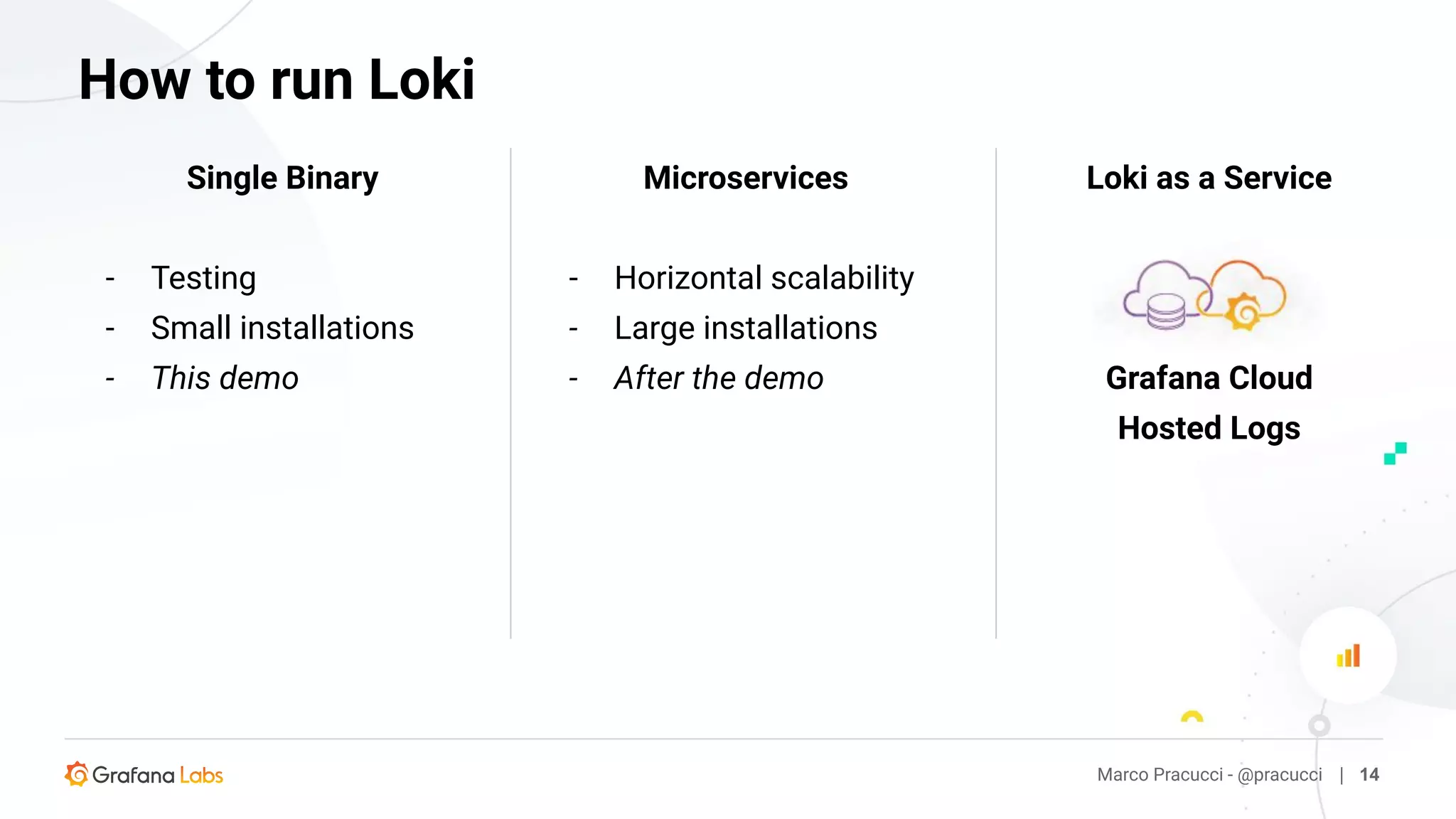

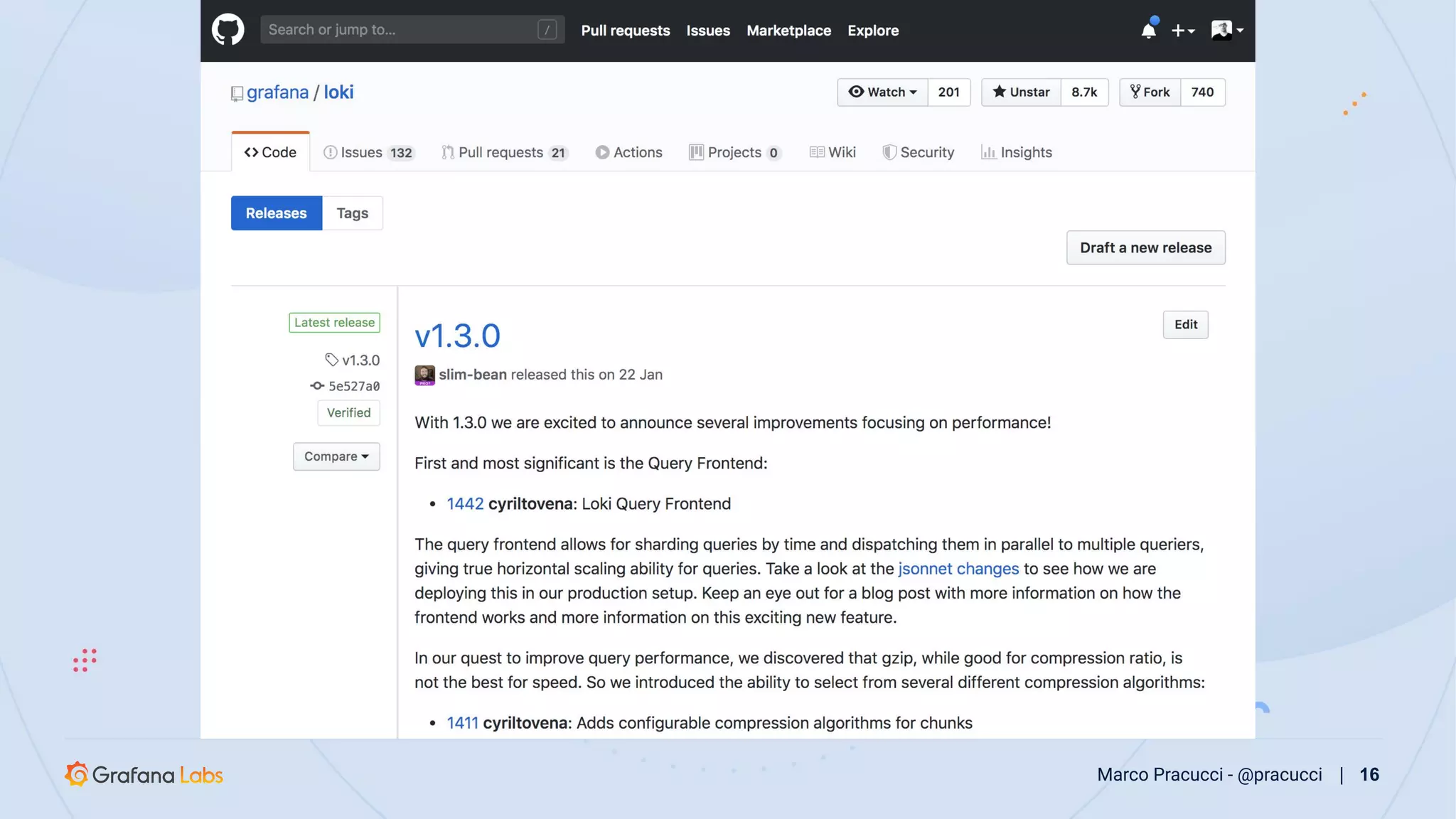

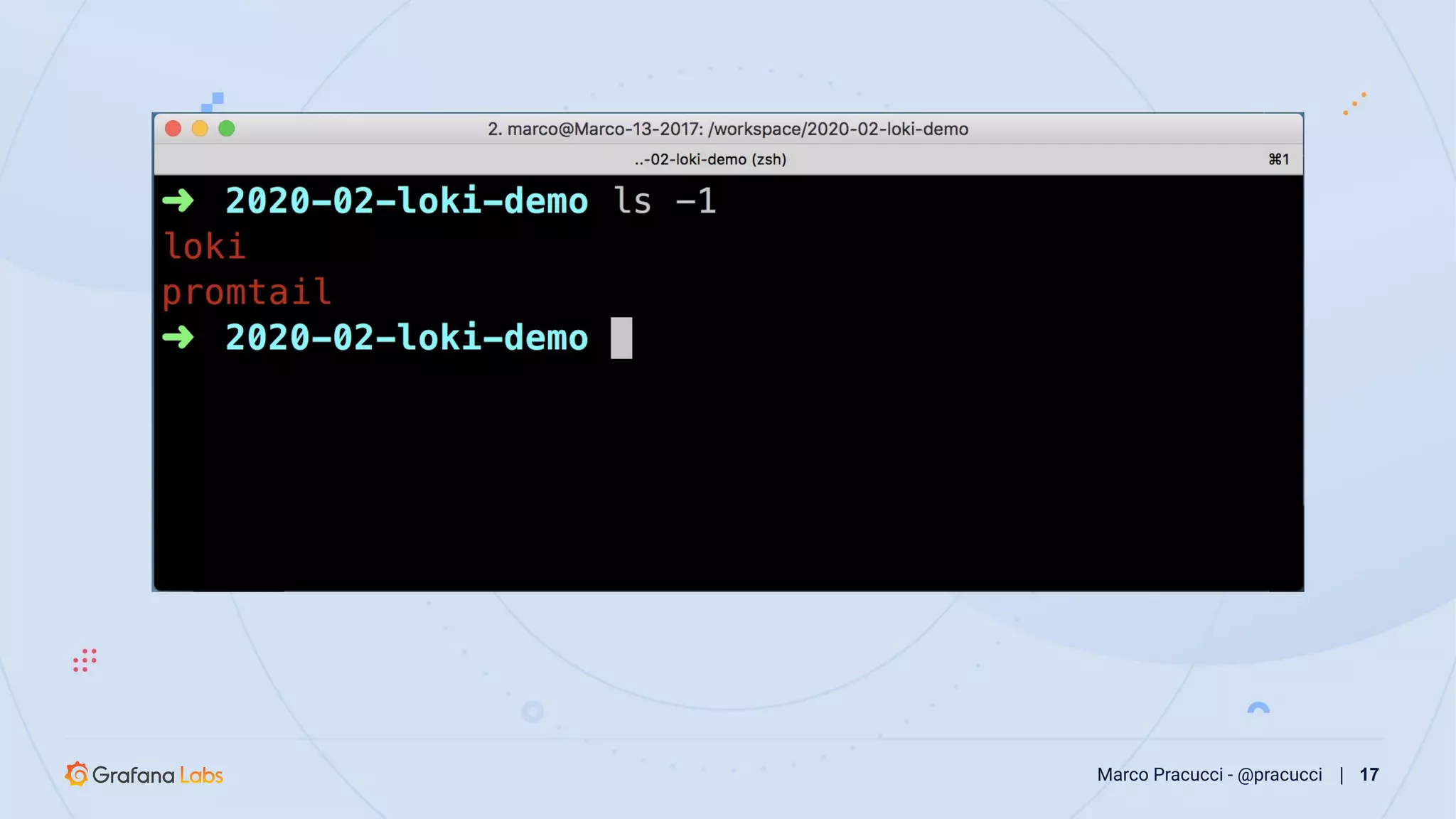

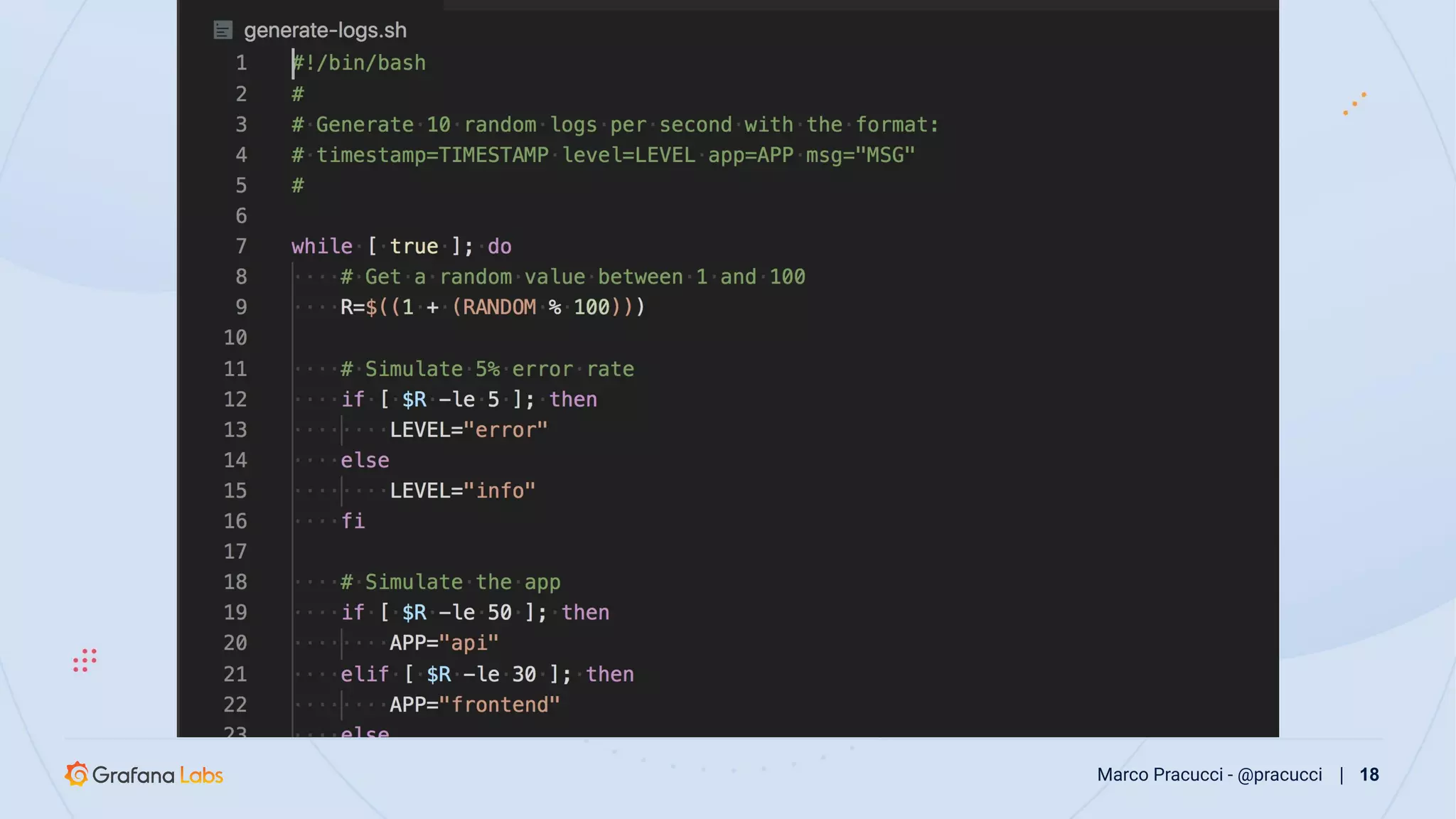

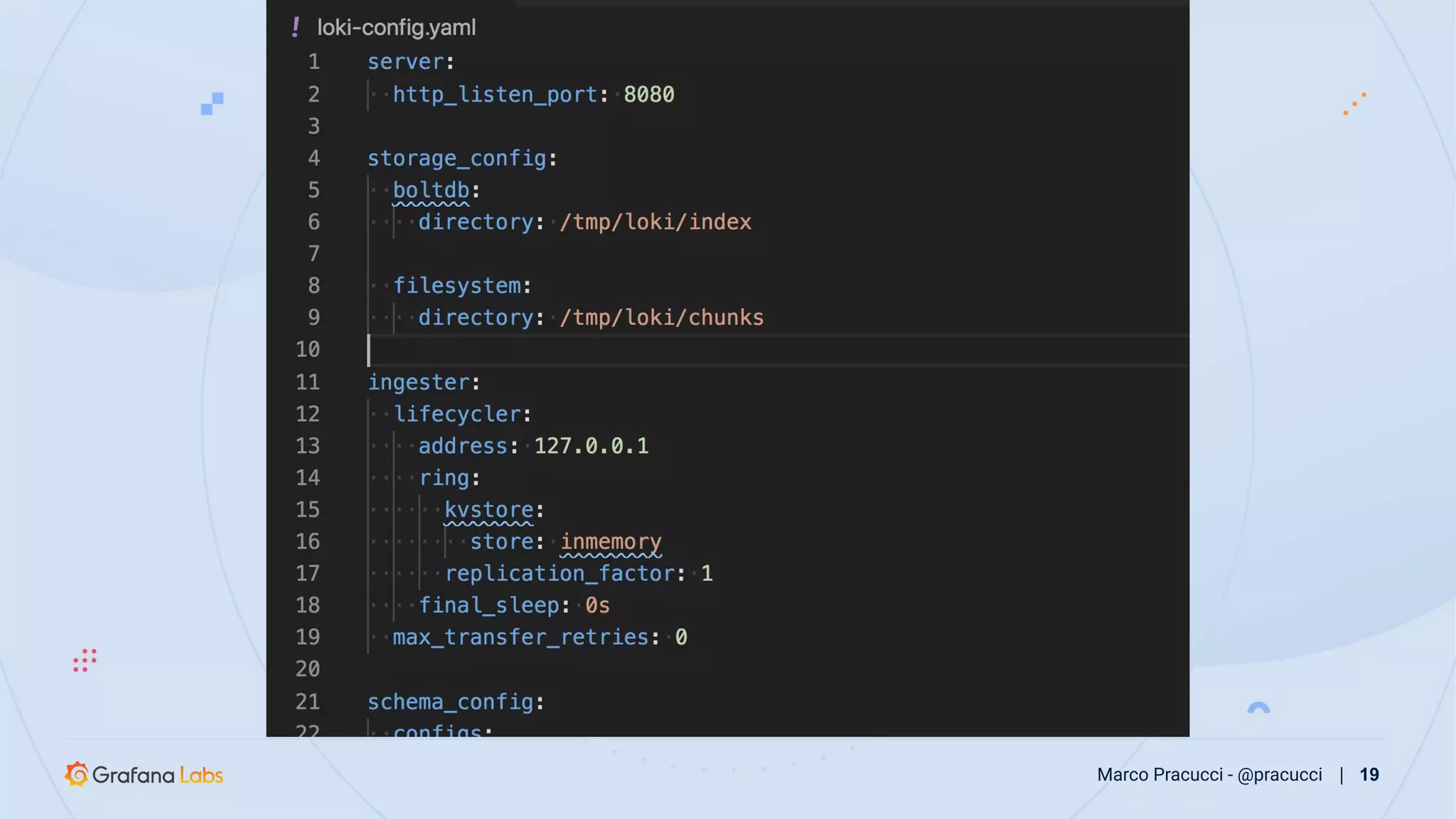

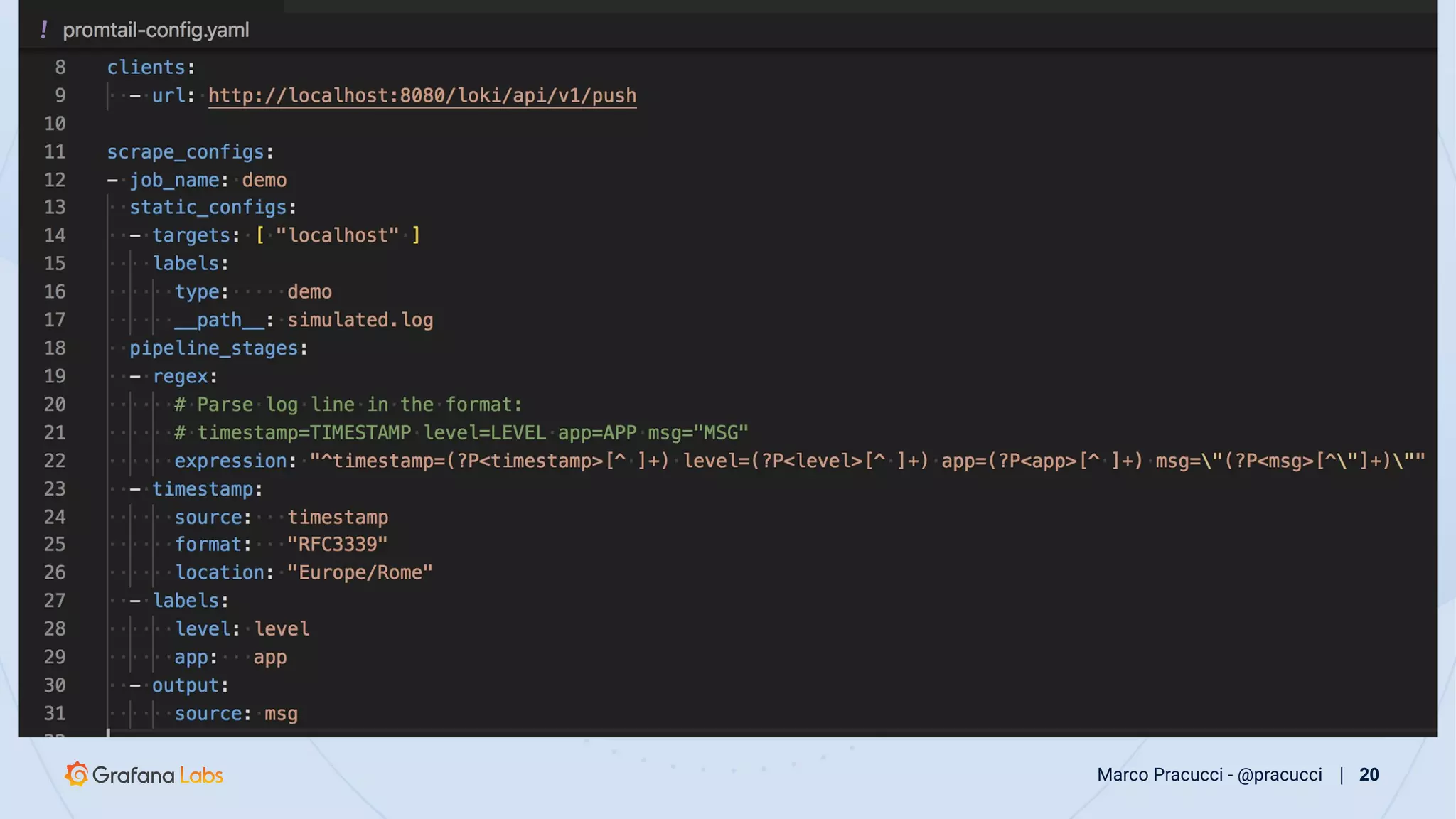

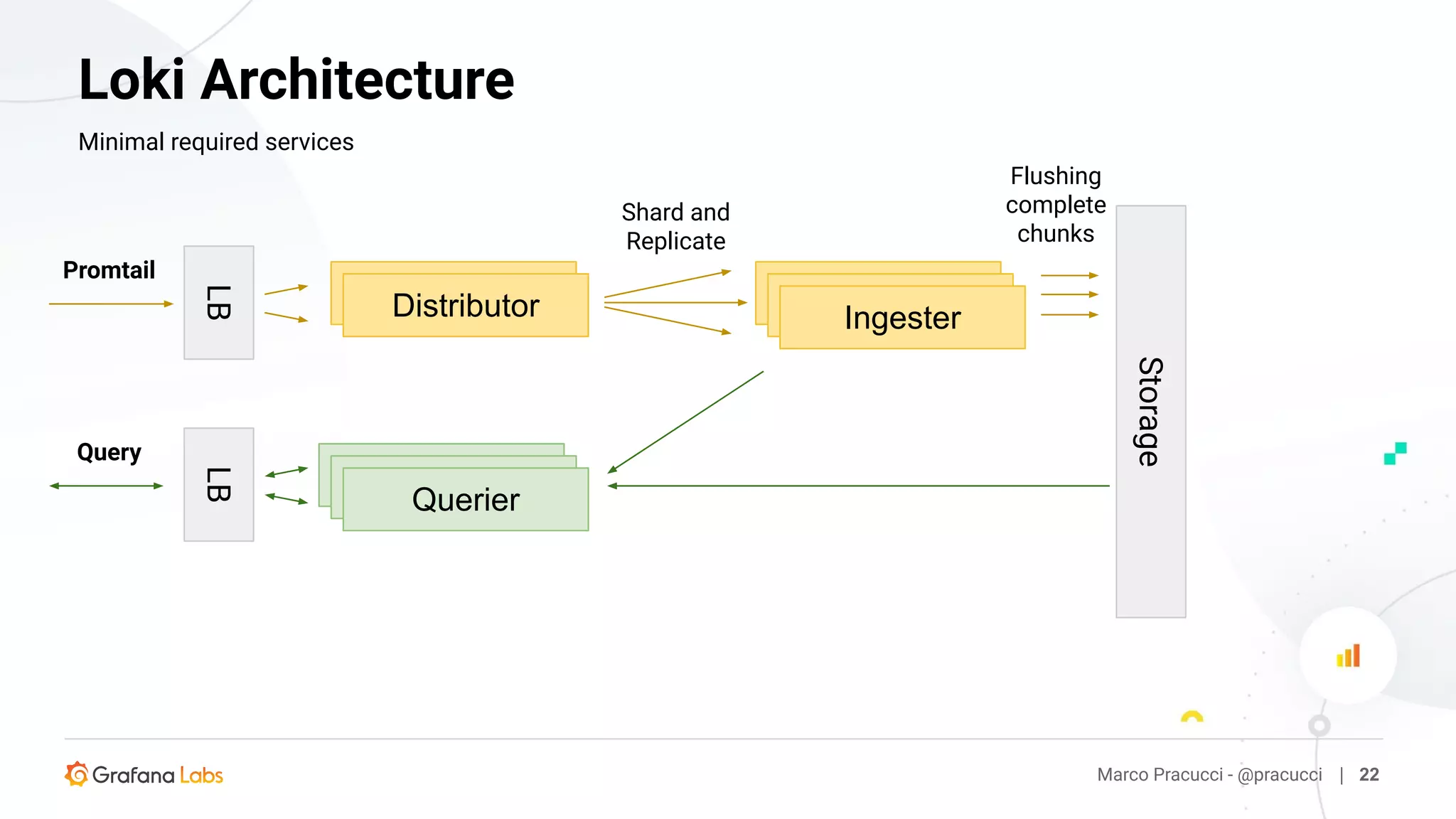

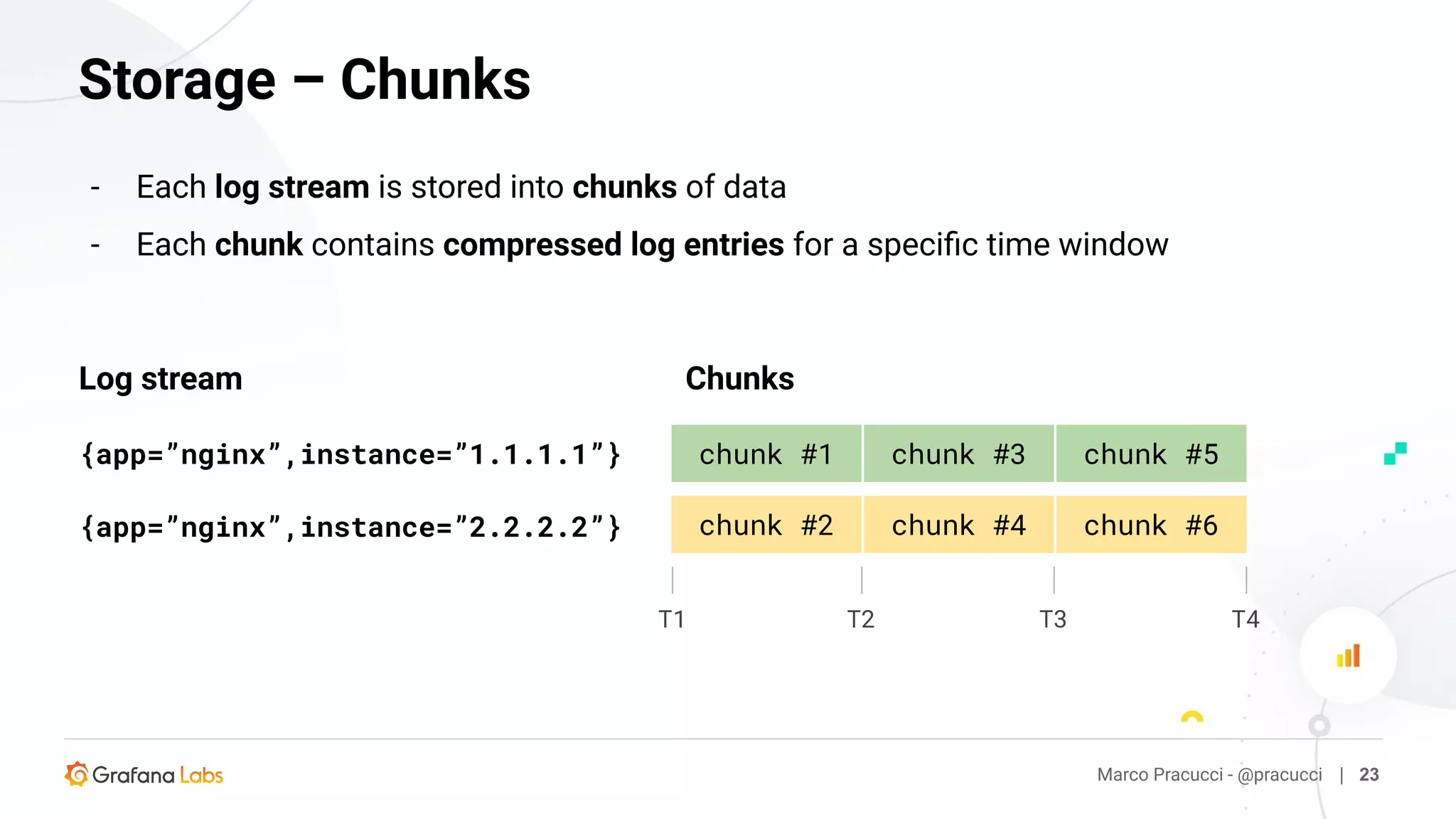

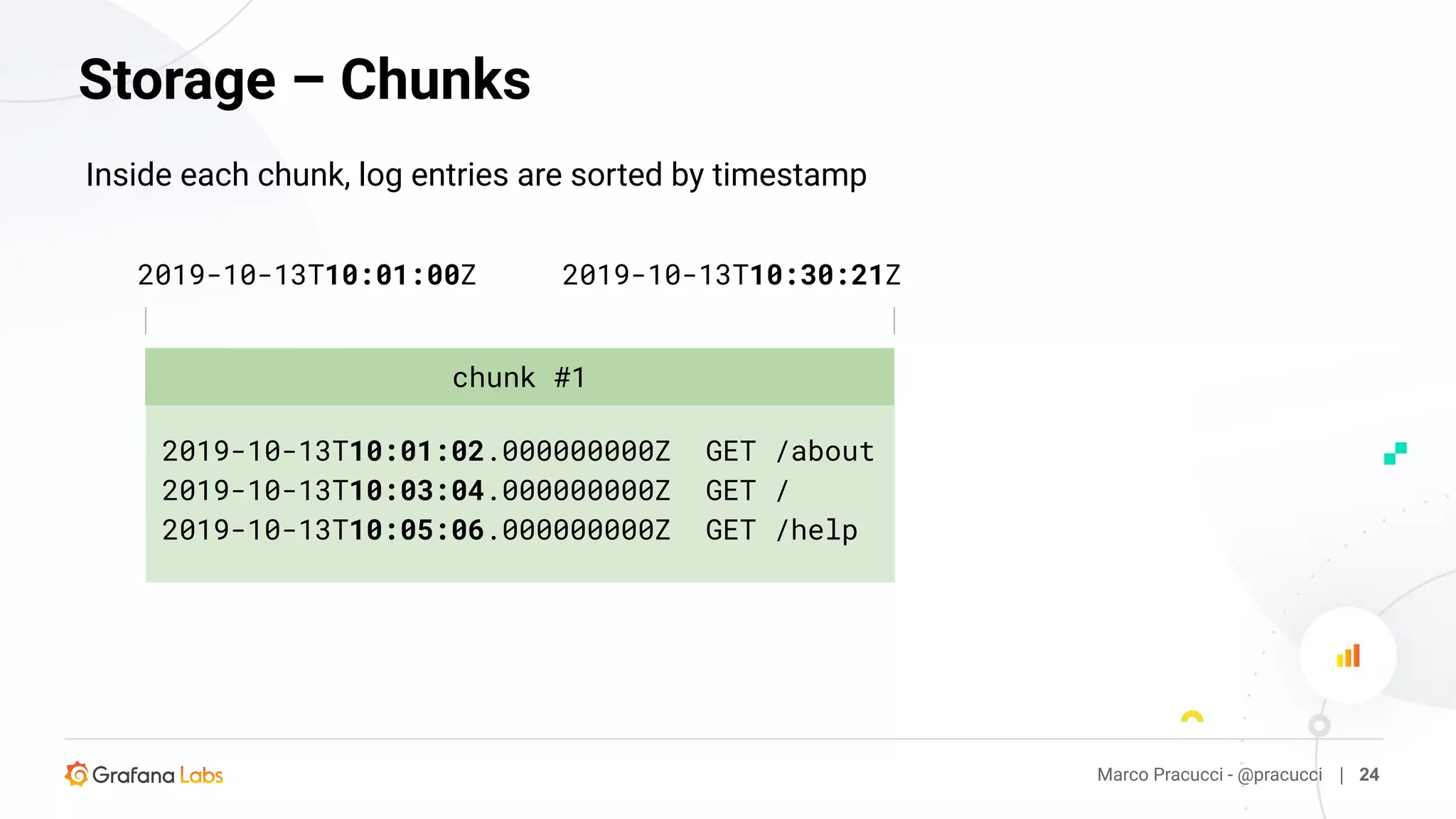

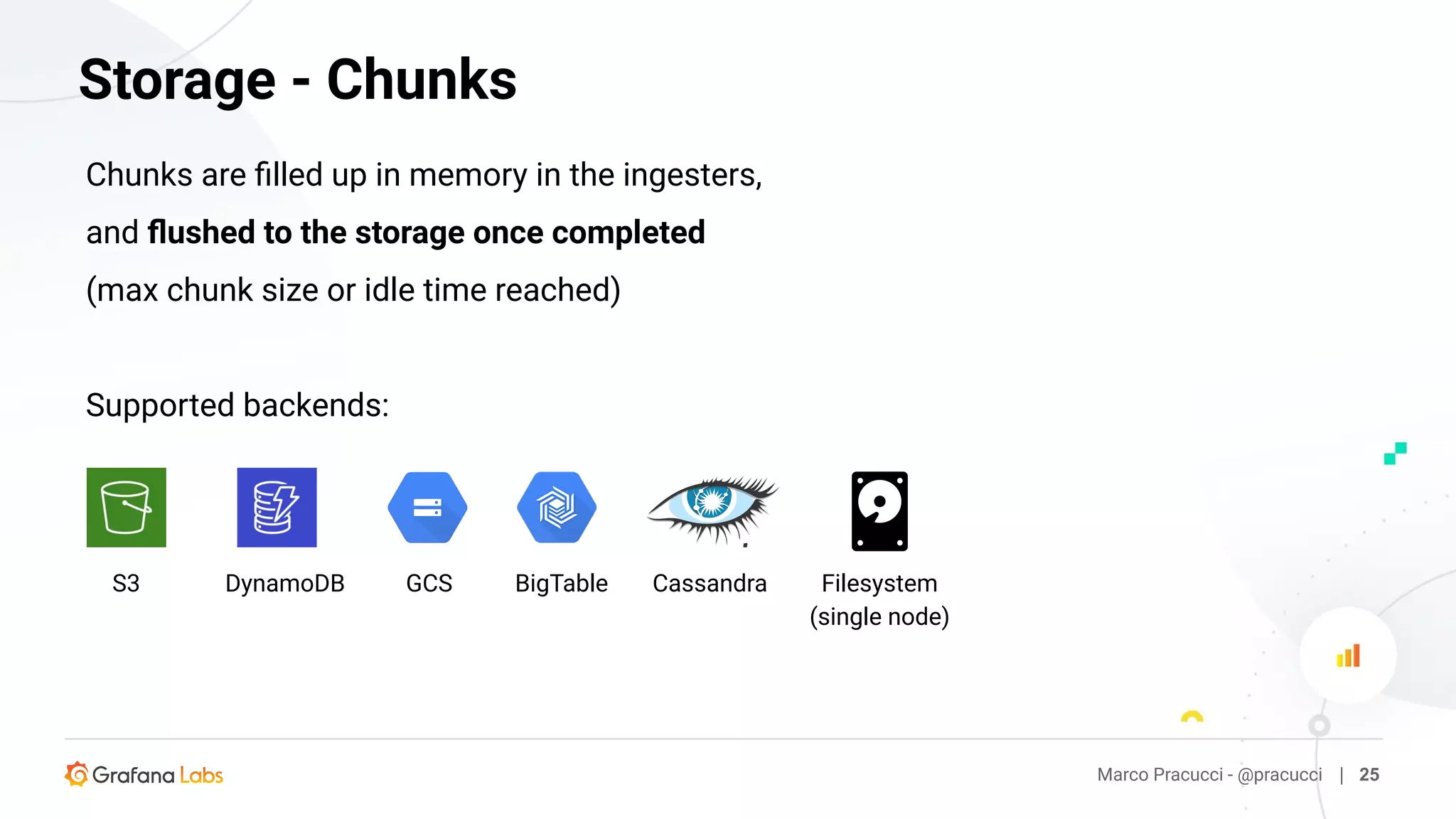

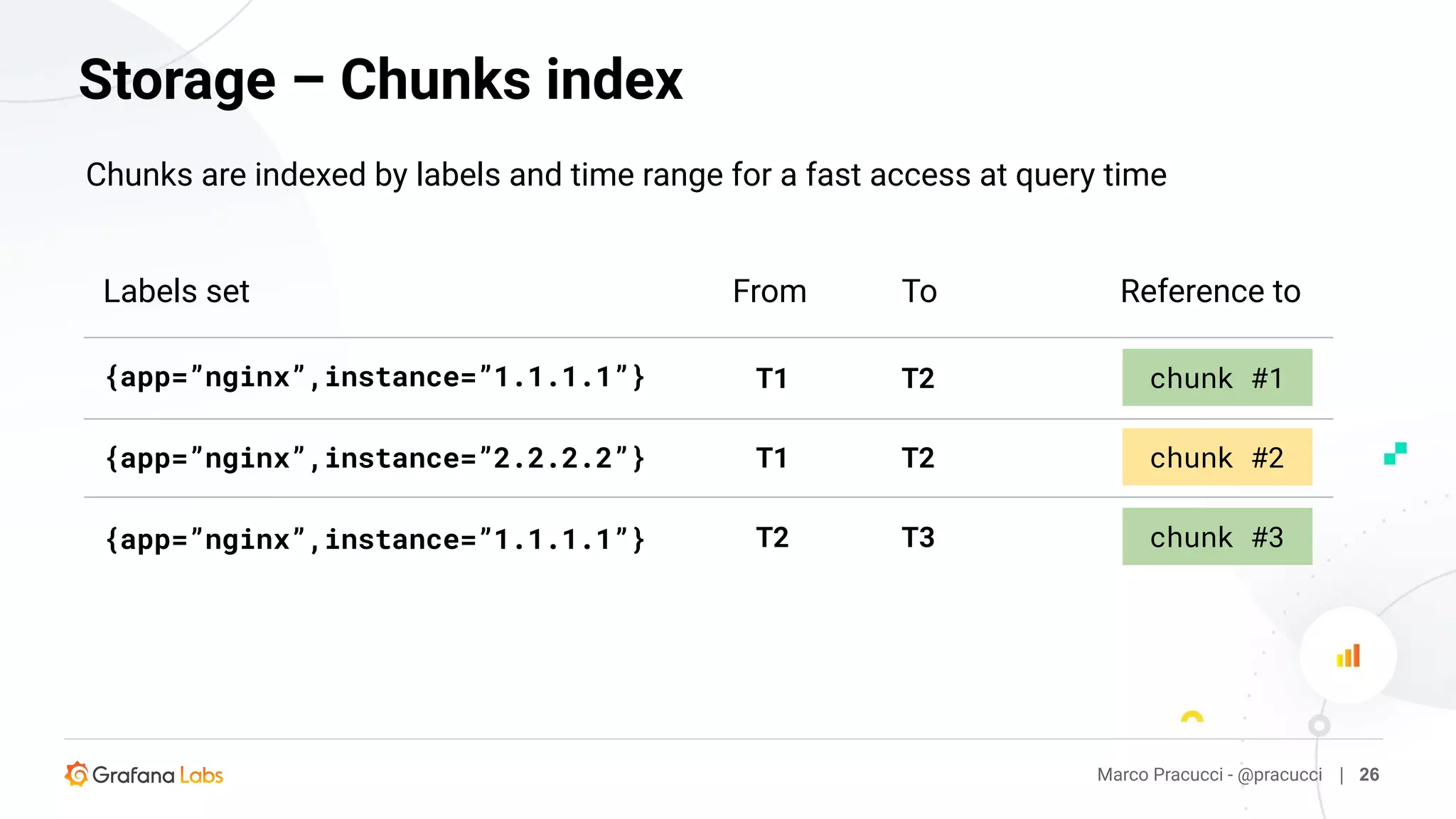

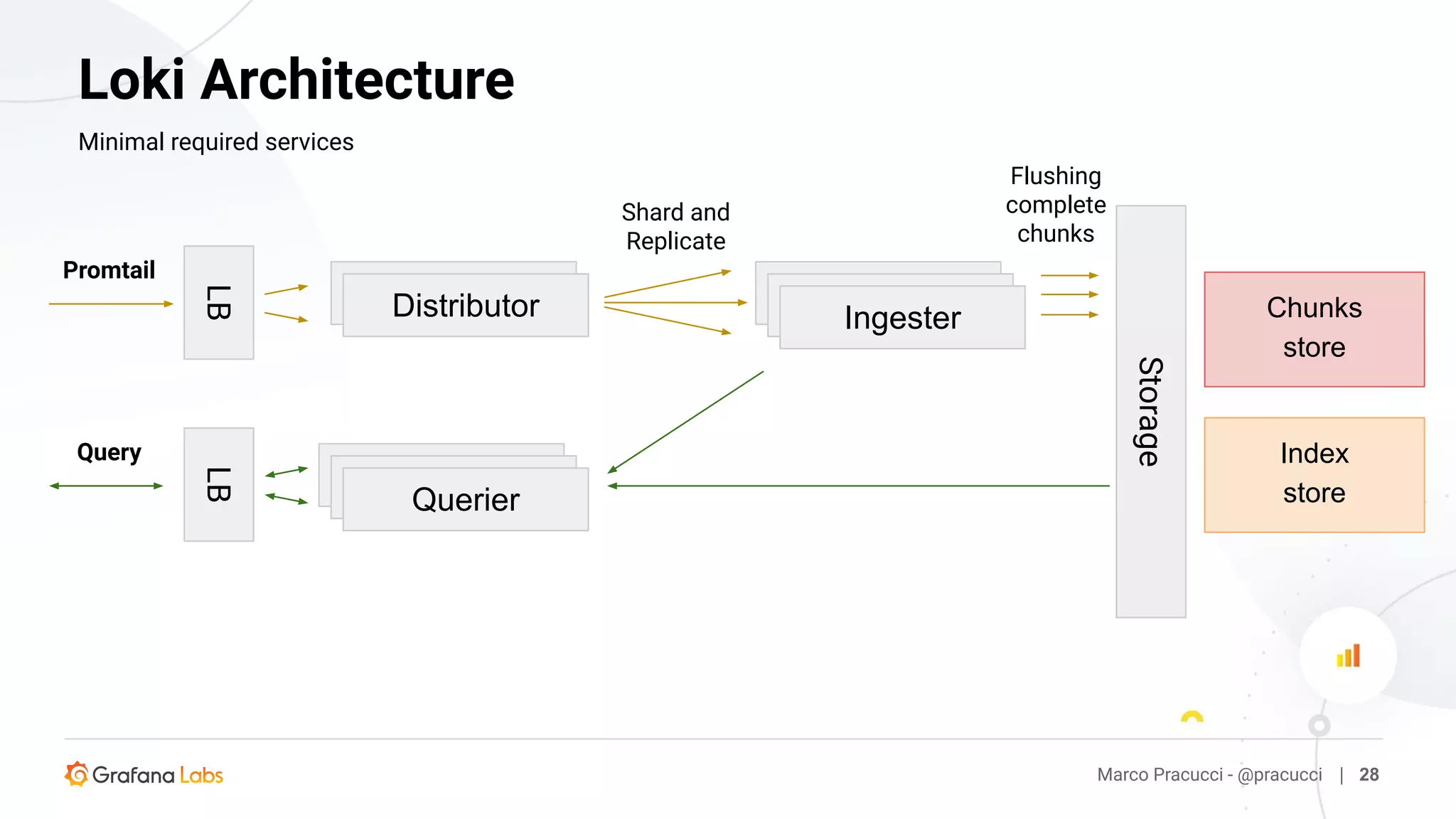

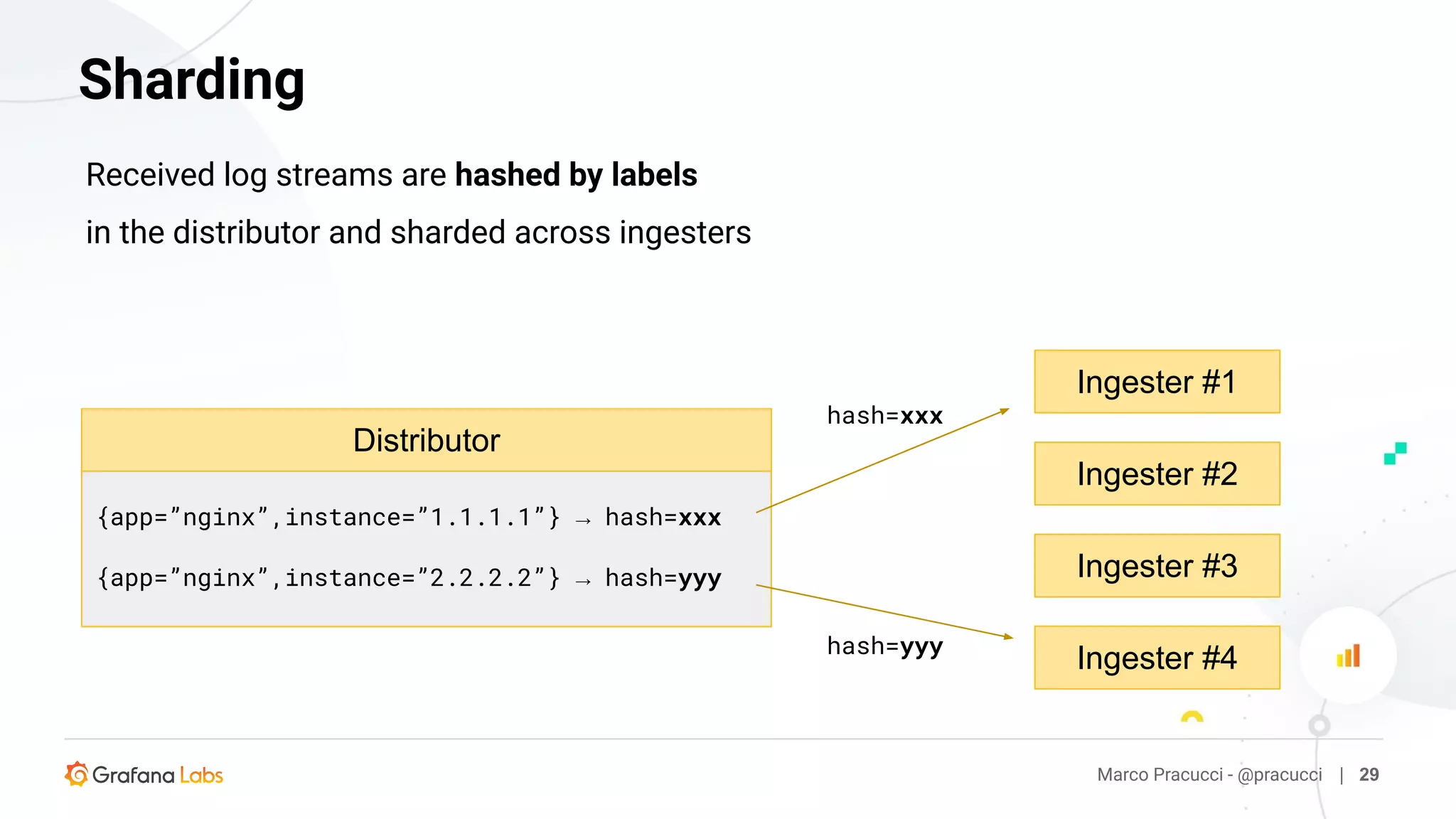

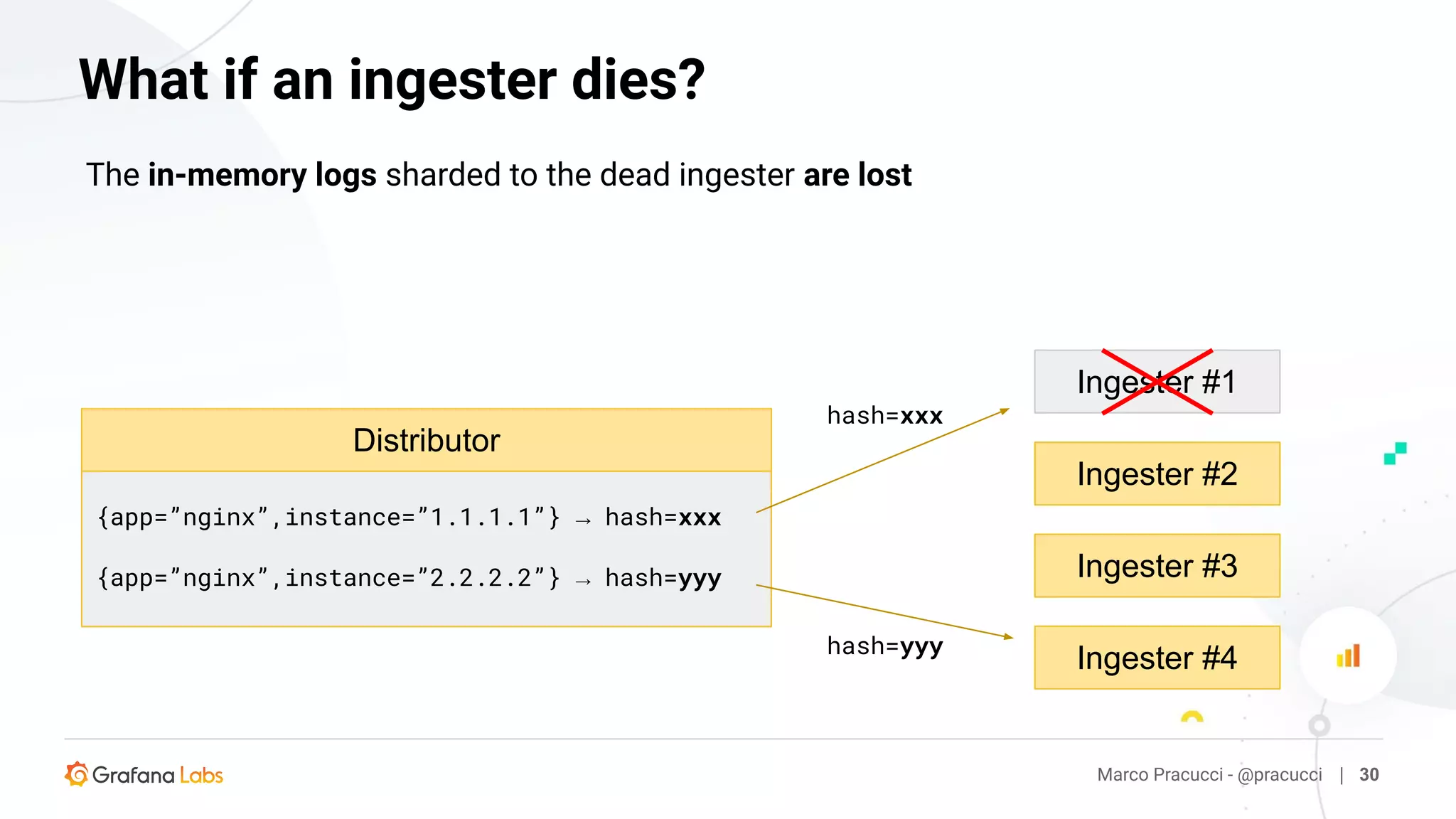

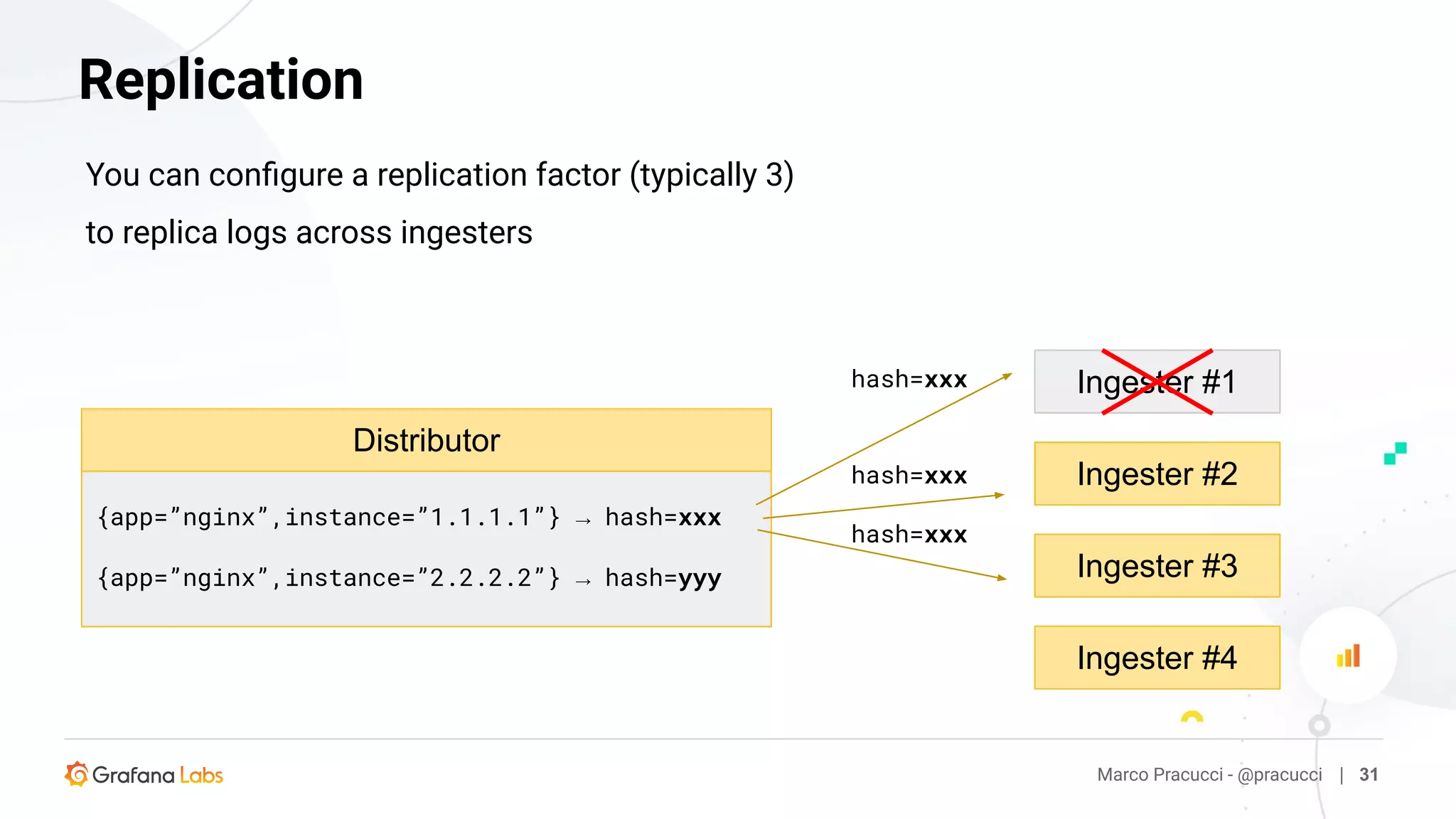

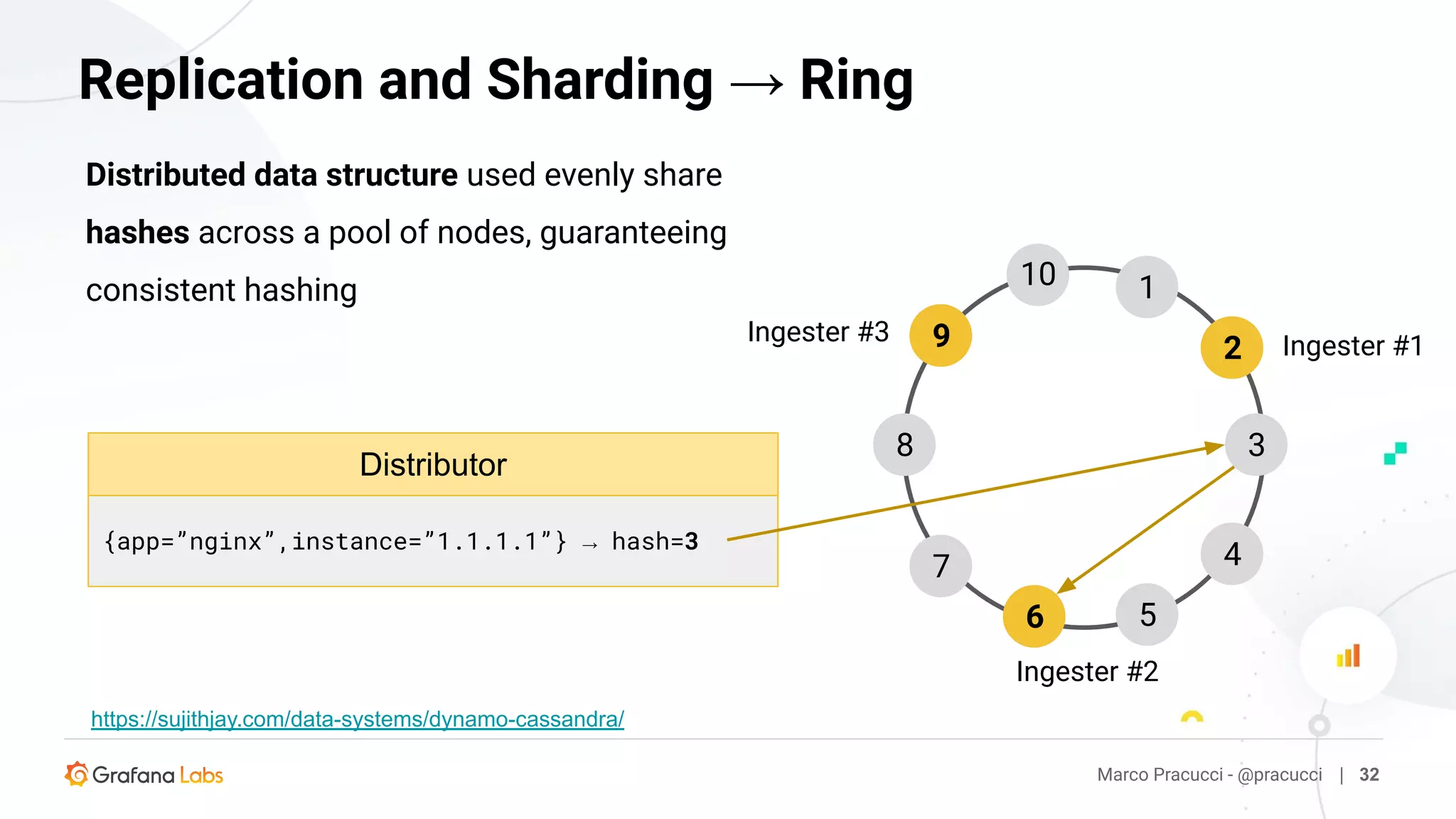

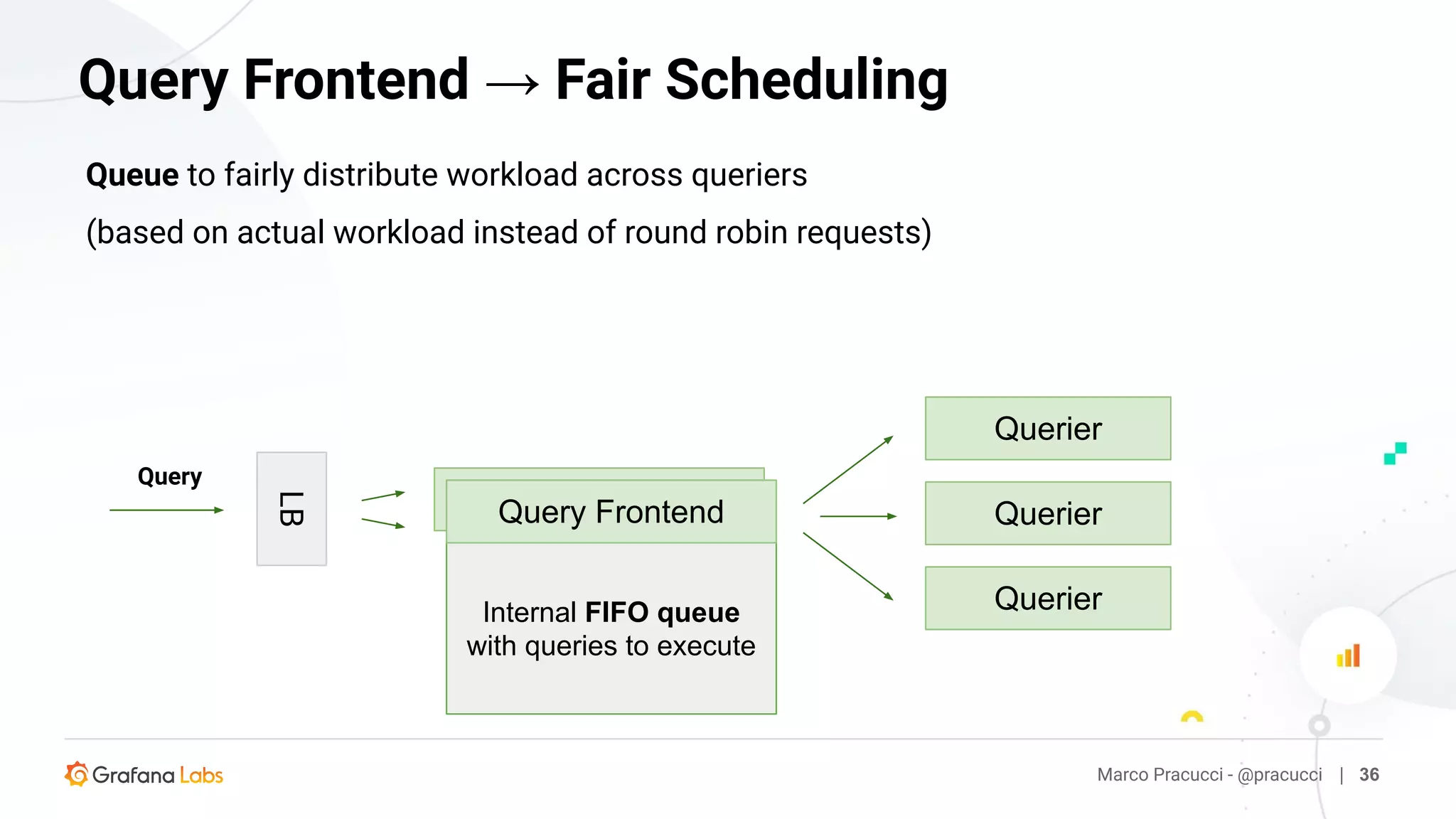

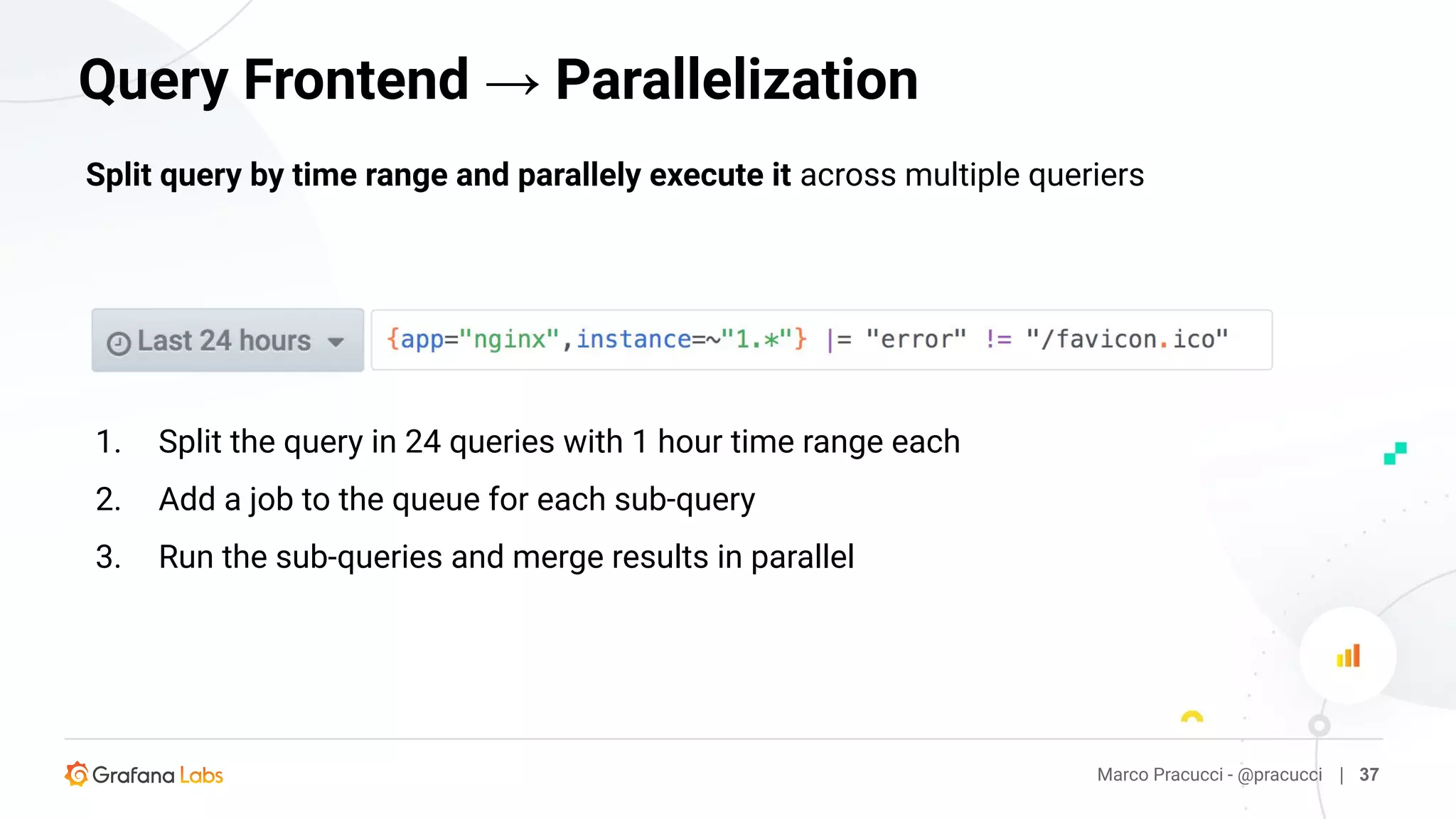

The document discusses Grafana Loki, an open-source log aggregation system built to manage logs efficiently in observability stacks. It details Loki's architecture, including the Promtail agent that ships logs to Loki, and explains how logs are processed, indexed by their labels rather than their full text, and stored in various storage backends. It also covers sharding and replication strategies, and the query mechanism Loki uses to speed up log retrieval.
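In Loki, distributors use a consistent hash ring to decide which ingesters receive each log stream, and they write every stream to several ingesters for redundancy. The sketch below models that idea in a simplified form. It is not Loki's code: the SHA-256-derived 32-bit hash, the 16 tokens per ingester, the `tenant/{labels}` key format, and the names `HashRing`, `stream_key`, and `replicas` are all illustrative assumptions.

```python
import bisect
import hashlib


def token(key: str) -> int:
    """Map a string to a 32-bit position on the ring (illustrative hash choice)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:4], "big")


def stream_key(tenant: str, labels: dict) -> str:
    """Build a hypothetical stream key; sorting labels makes it order-independent."""
    body = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return f"{tenant}/{{{body}}}"


class HashRing:
    def __init__(self, ingesters, tokens_per_ingester=16):
        # Each ingester owns several tokens so streams spread more evenly.
        self._ring = sorted(
            (token(f"{name}-{i}"), name)
            for name in ingesters
            for i in range(tokens_per_ingester)
        )
        self._tokens = [t for t, _ in self._ring]
        self._distinct = len(set(ingesters))

    def replicas(self, key: str, replication_factor: int = 3):
        """Walk clockwise from the key's hash, collecting distinct ingesters."""
        if replication_factor > self._distinct:
            raise ValueError("replication factor exceeds number of ingesters")
        start = bisect.bisect_right(self._tokens, token(key))
        chosen = []
        for offset in range(len(self._ring)):
            _, name = self._ring[(start + offset) % len(self._ring)]
            if name not in chosen:
                chosen.append(name)
                if len(chosen) == replication_factor:
                    break
        return chosen


if __name__ == "__main__":
    ring = HashRing(["ingester-a", "ingester-b", "ingester-c", "ingester-d"])
    key = stream_key("tenant-1", {"app": "api", "env": "prod"})
    print(key, ring.replicas(key))  # three distinct ingesters for this stream
```

Because the key depends only on the tenant and the label set, every log line from the same stream lands on the same replicas, while adding or removing an ingester only moves the streams whose hashes fall near that ingester's tokens.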