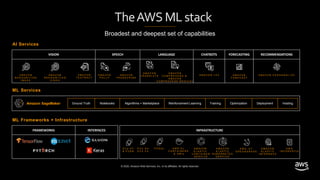

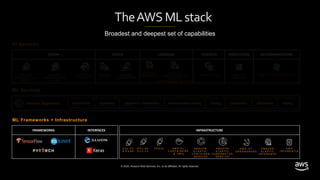

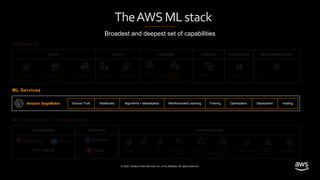

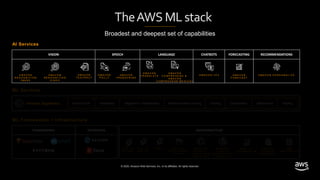

This webinar presentation by Cobus Bernard of Amazon Web Services (AWS) covers getting started with machine learning on AWS. It outlines AWS AI/ML services, including Amazon Polly, Amazon Lex, Amazon Rekognition, and Amazon SageMaker, along with their capabilities. The overarching mission is to empower developers and data scientists with machine learning tools and frameworks.