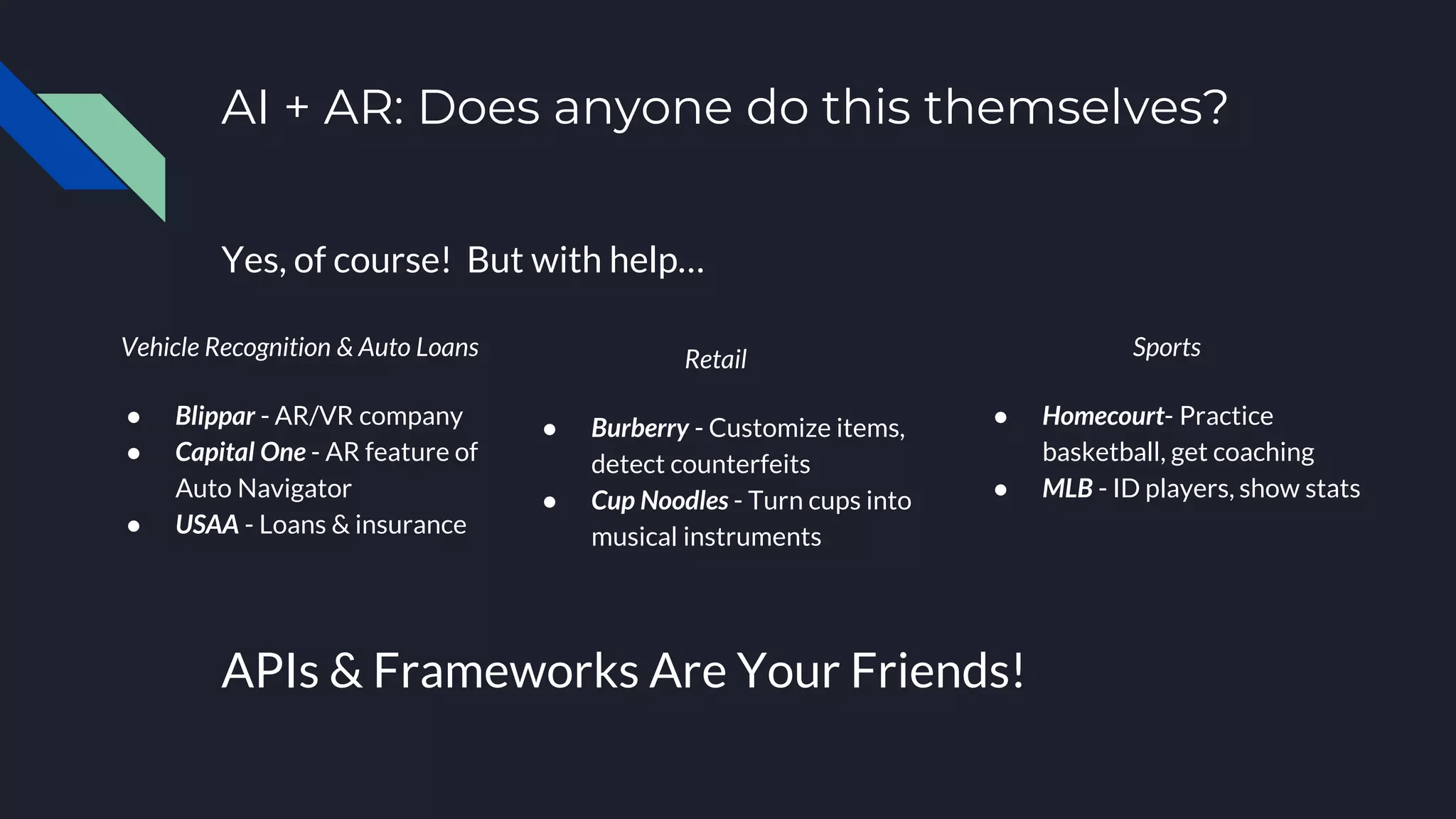

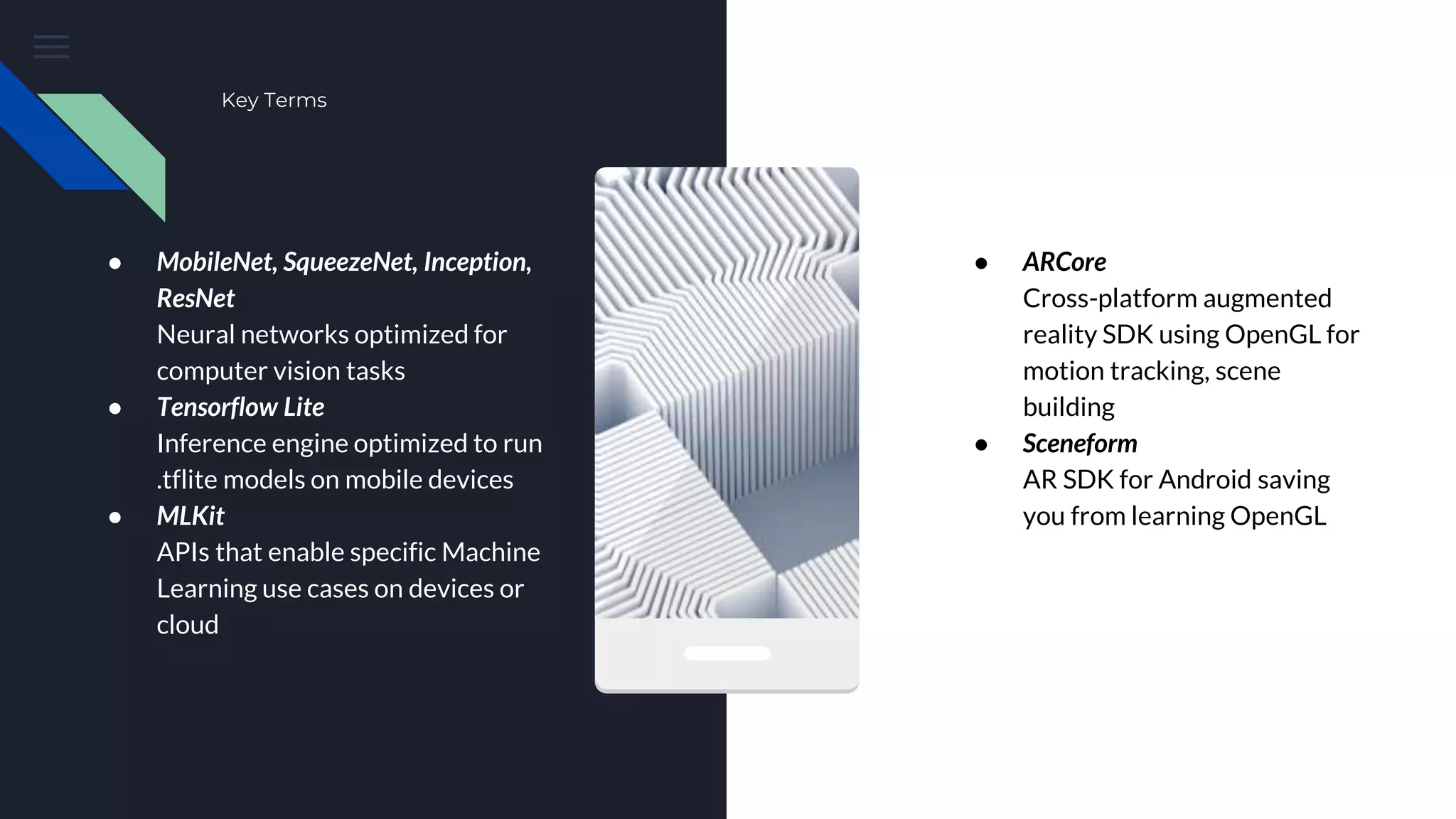

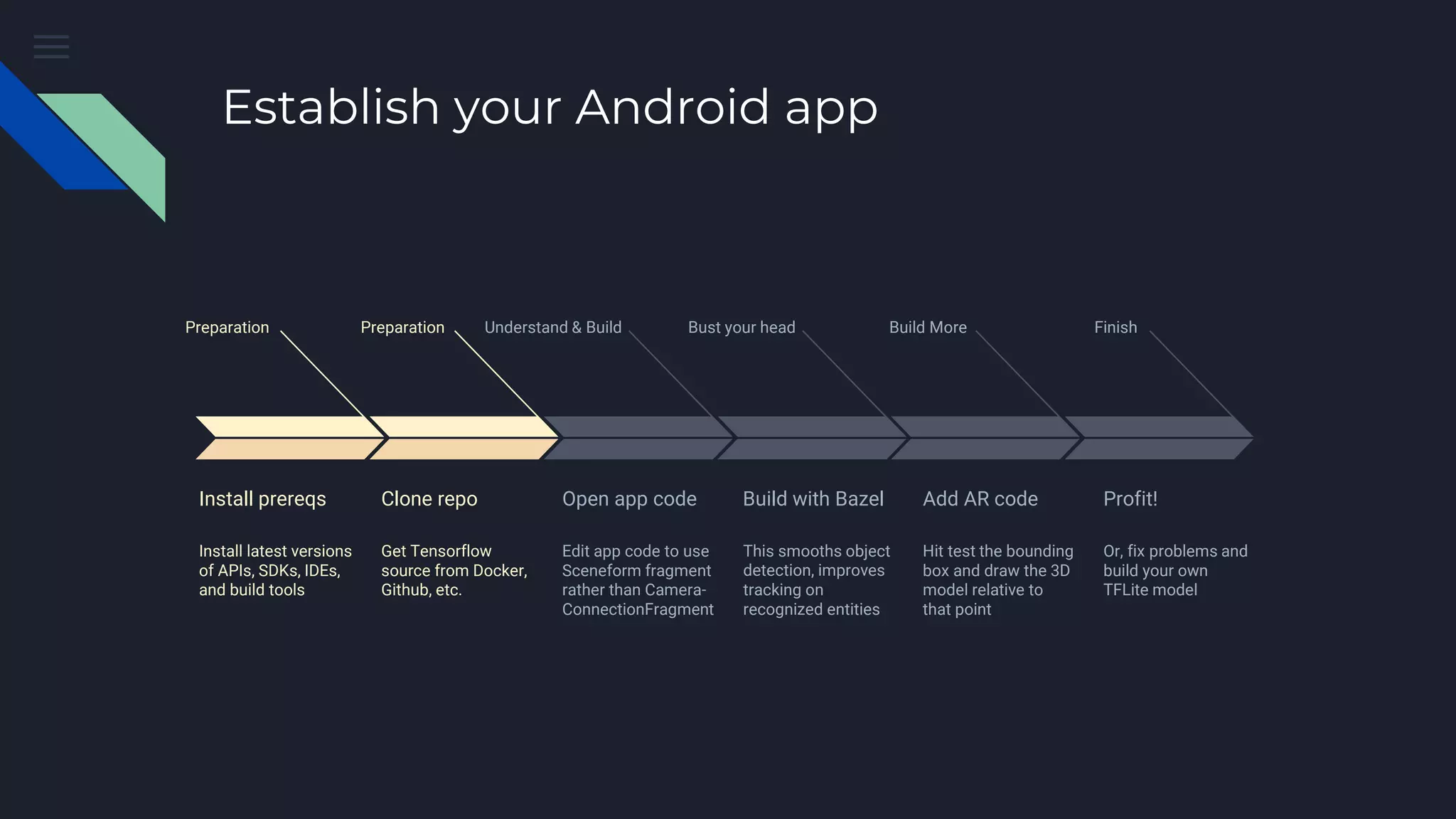

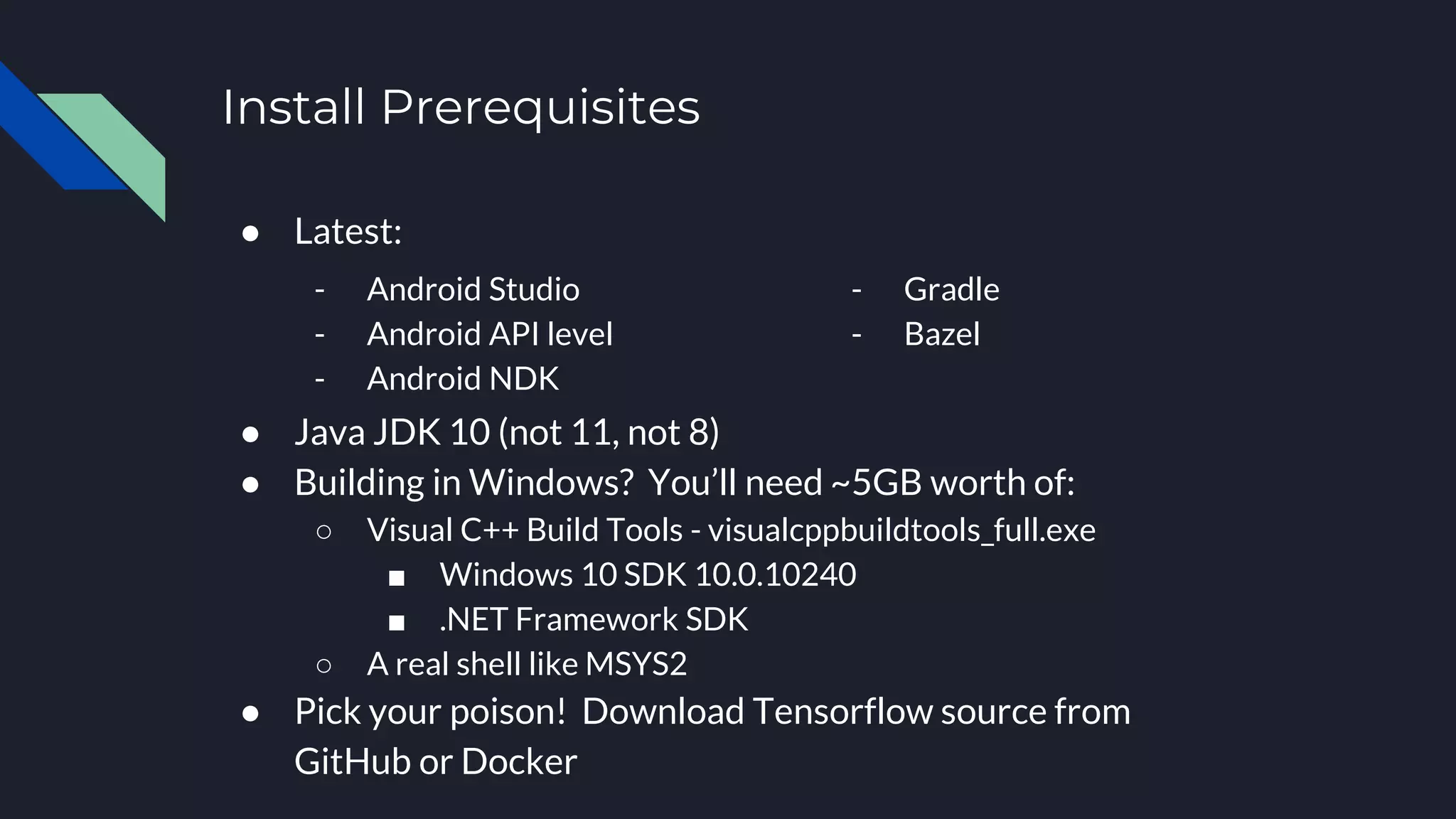

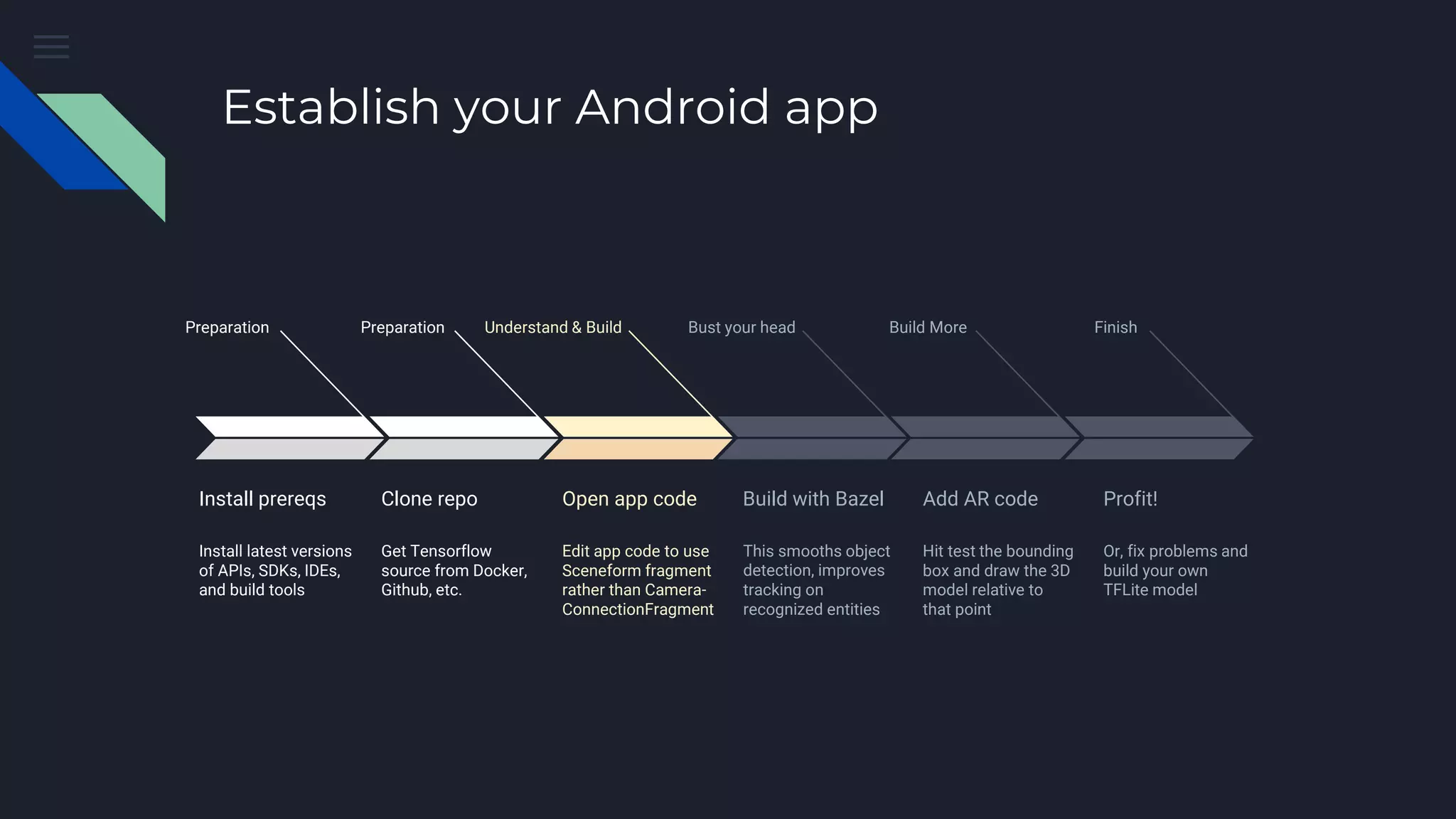

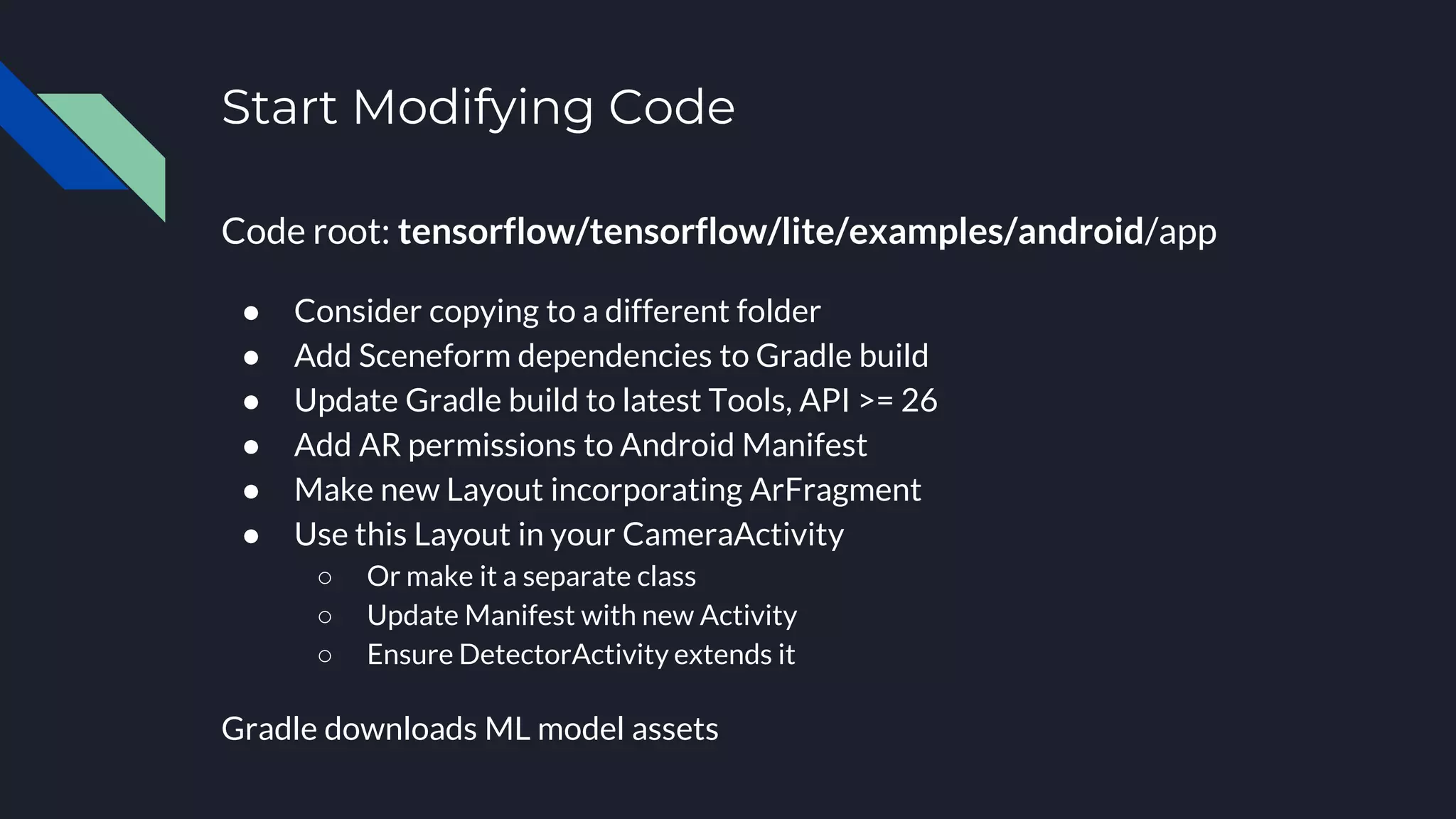

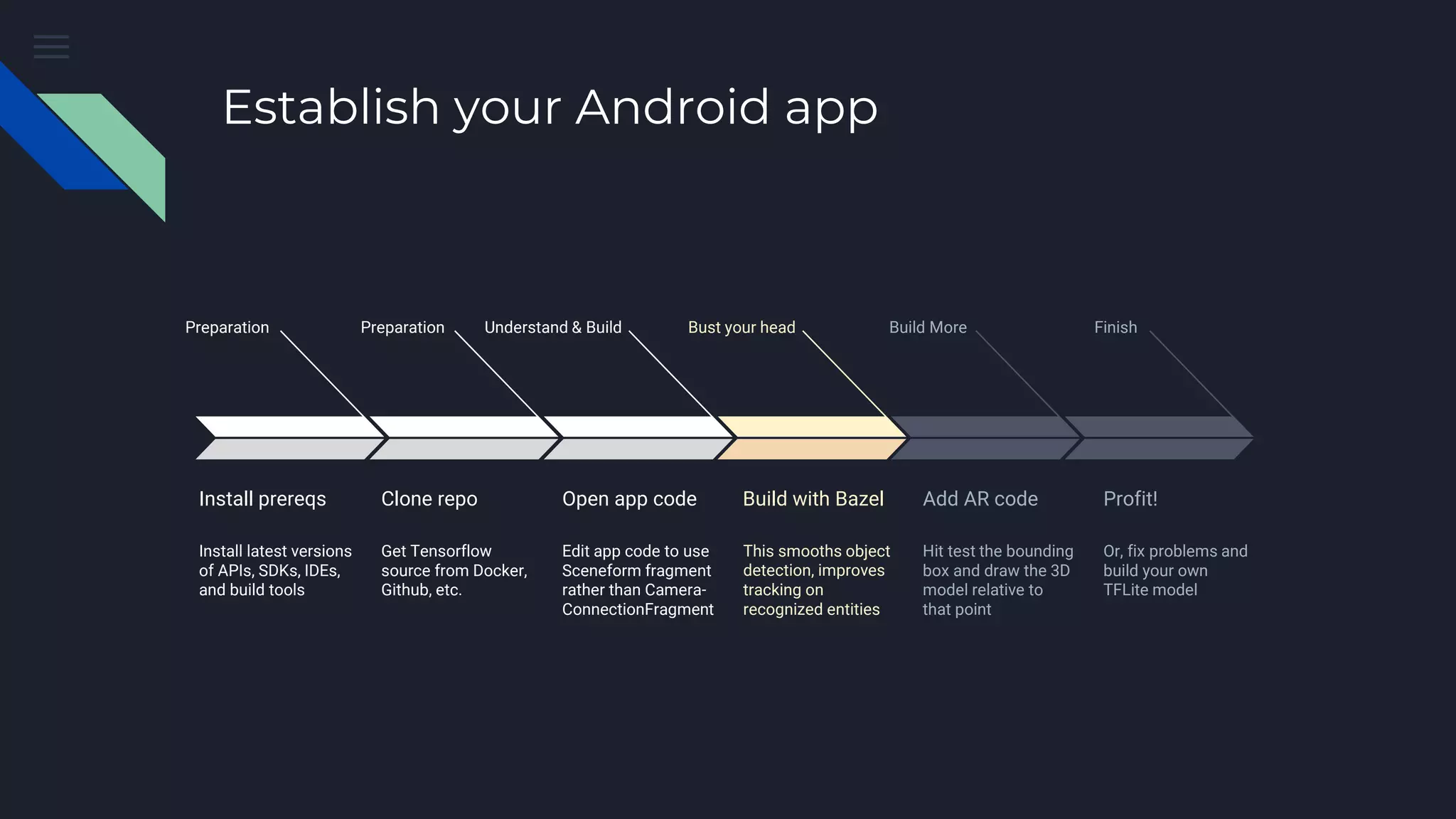

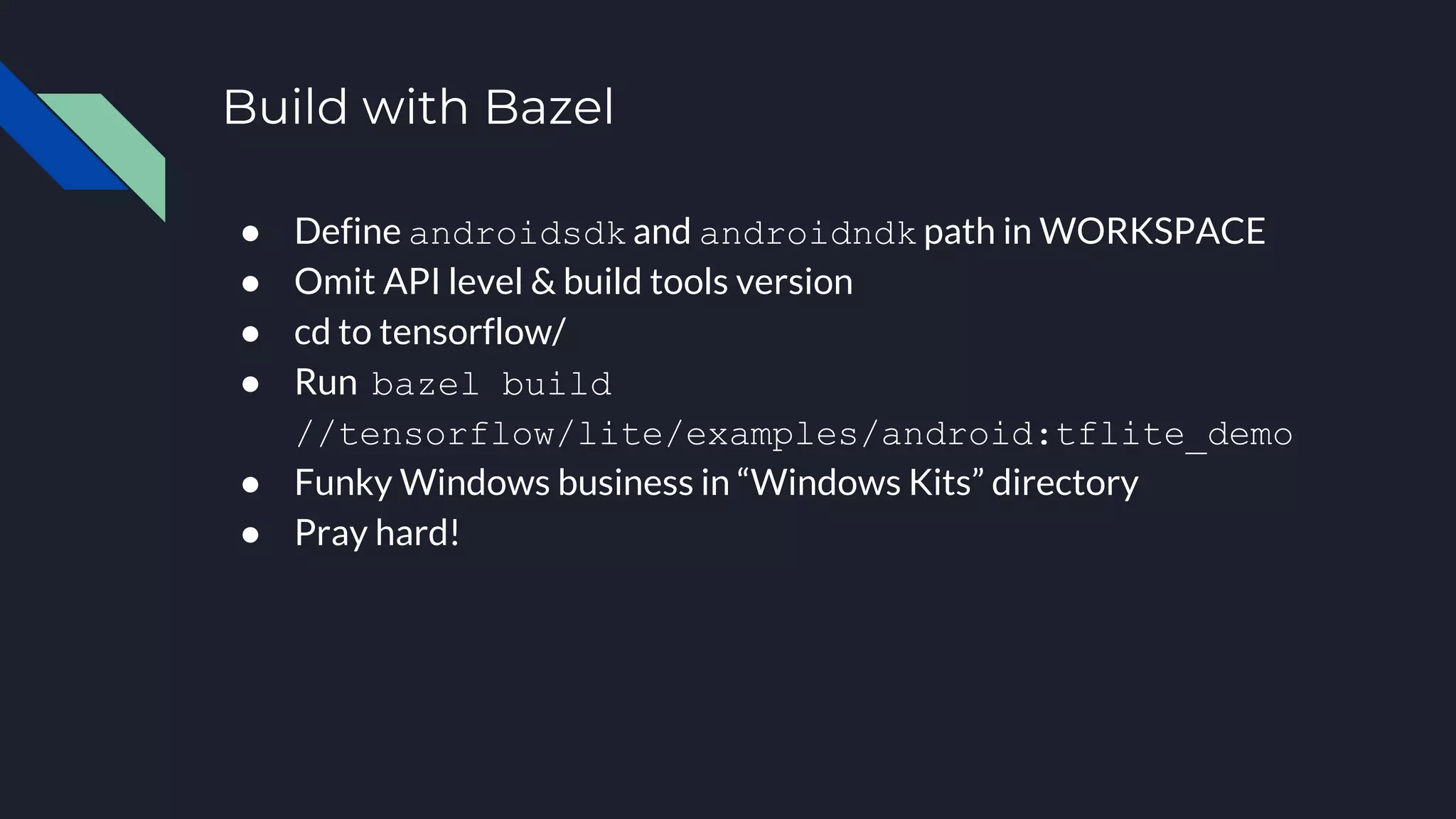

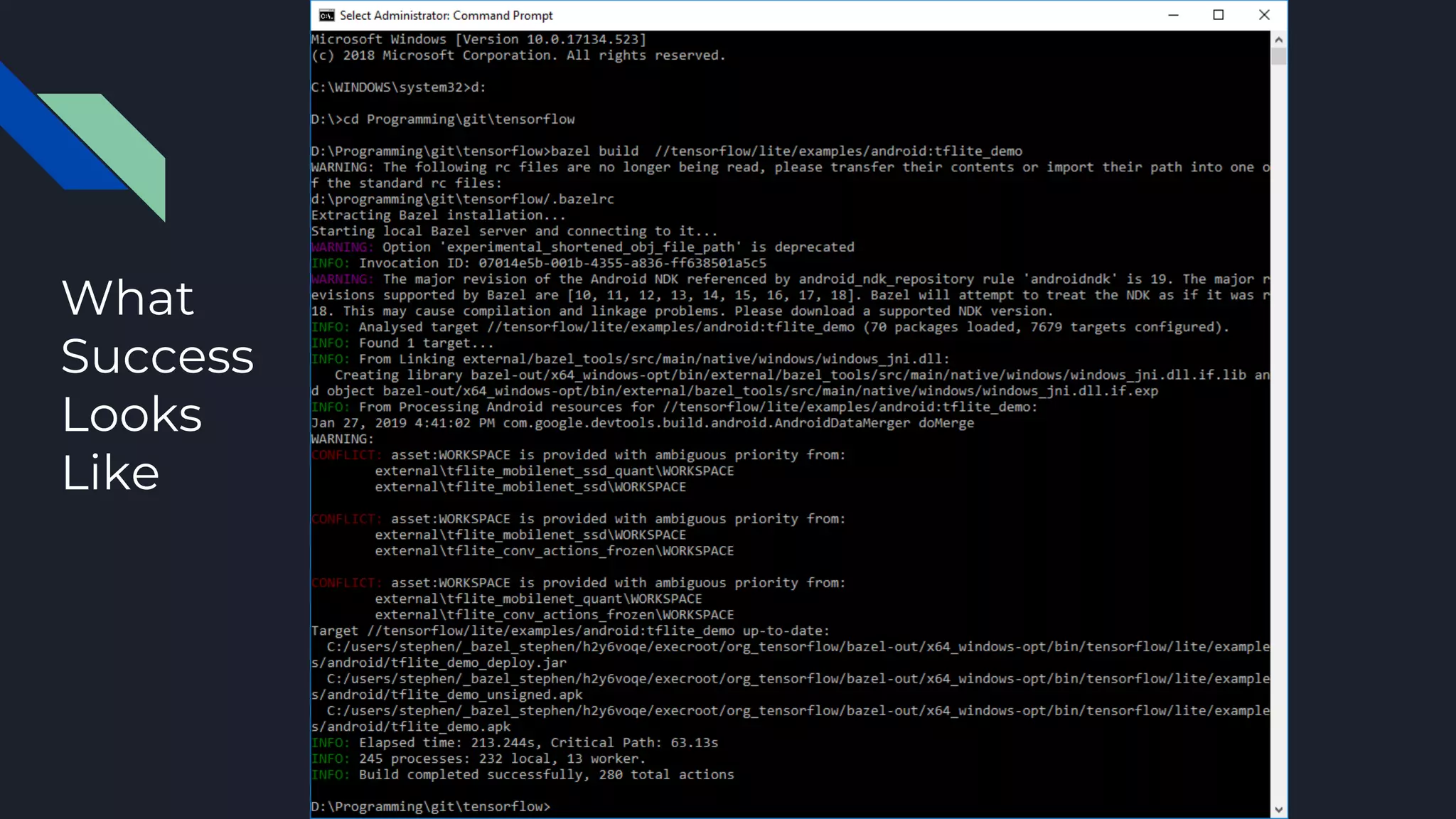

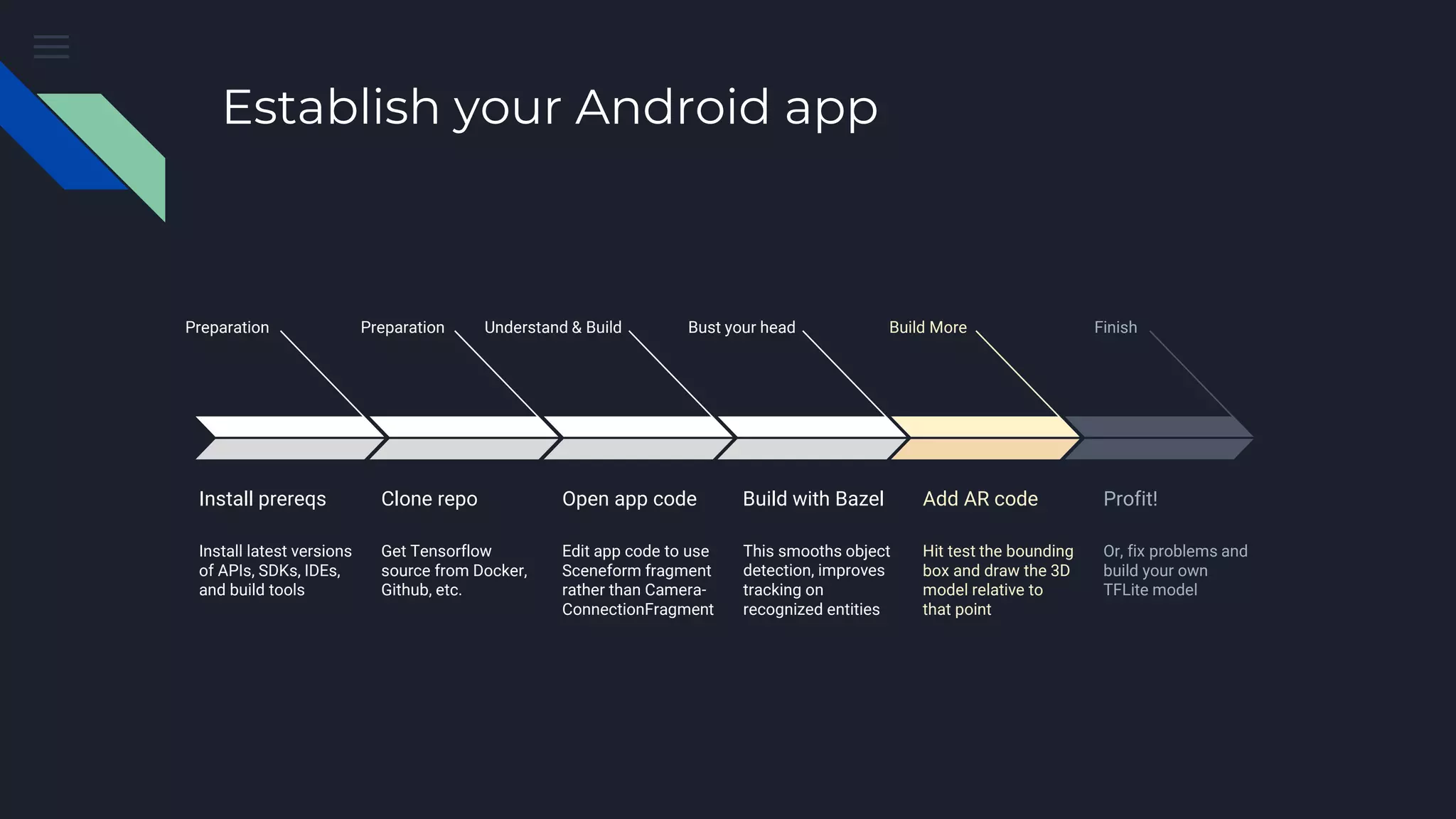

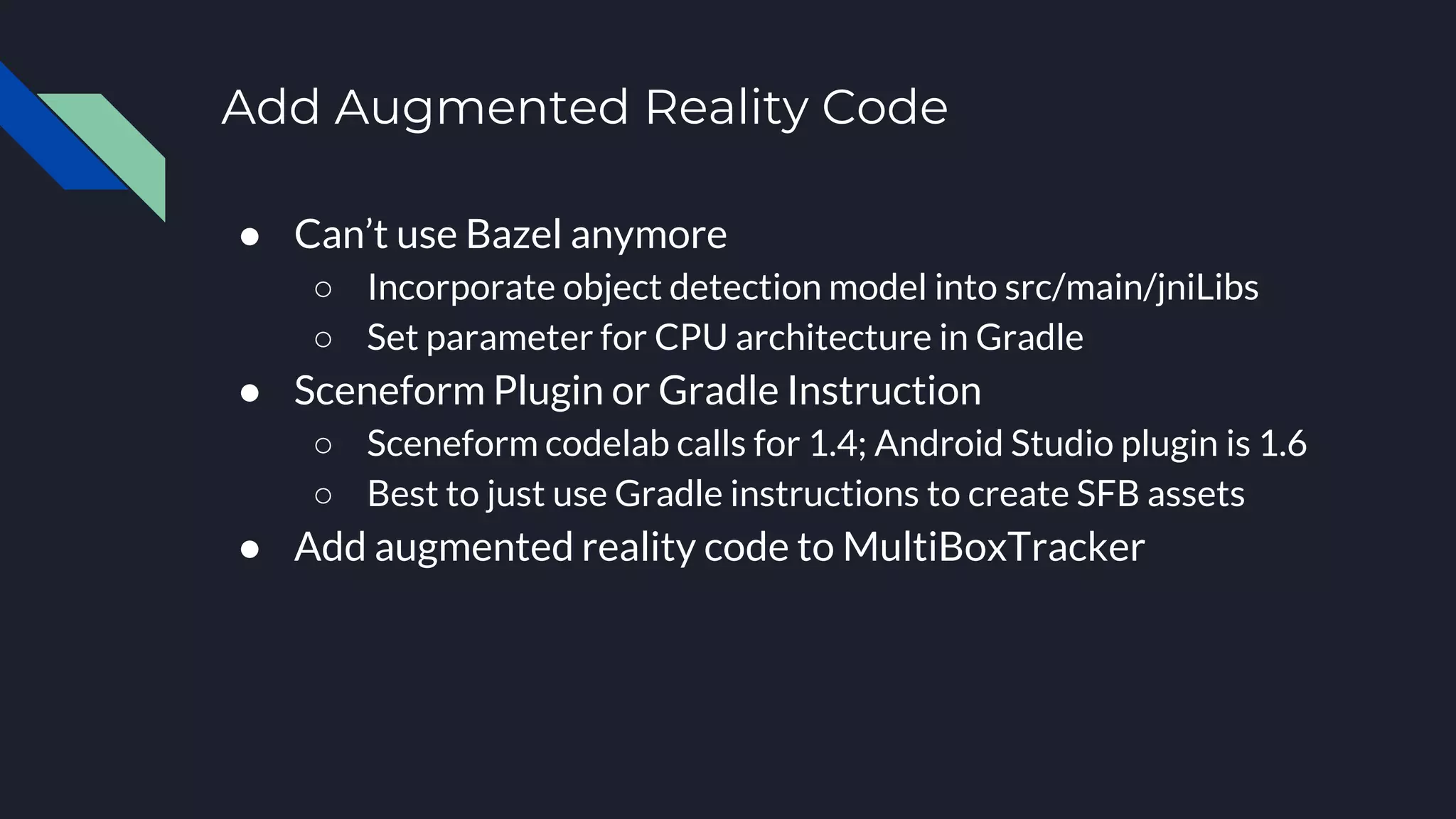

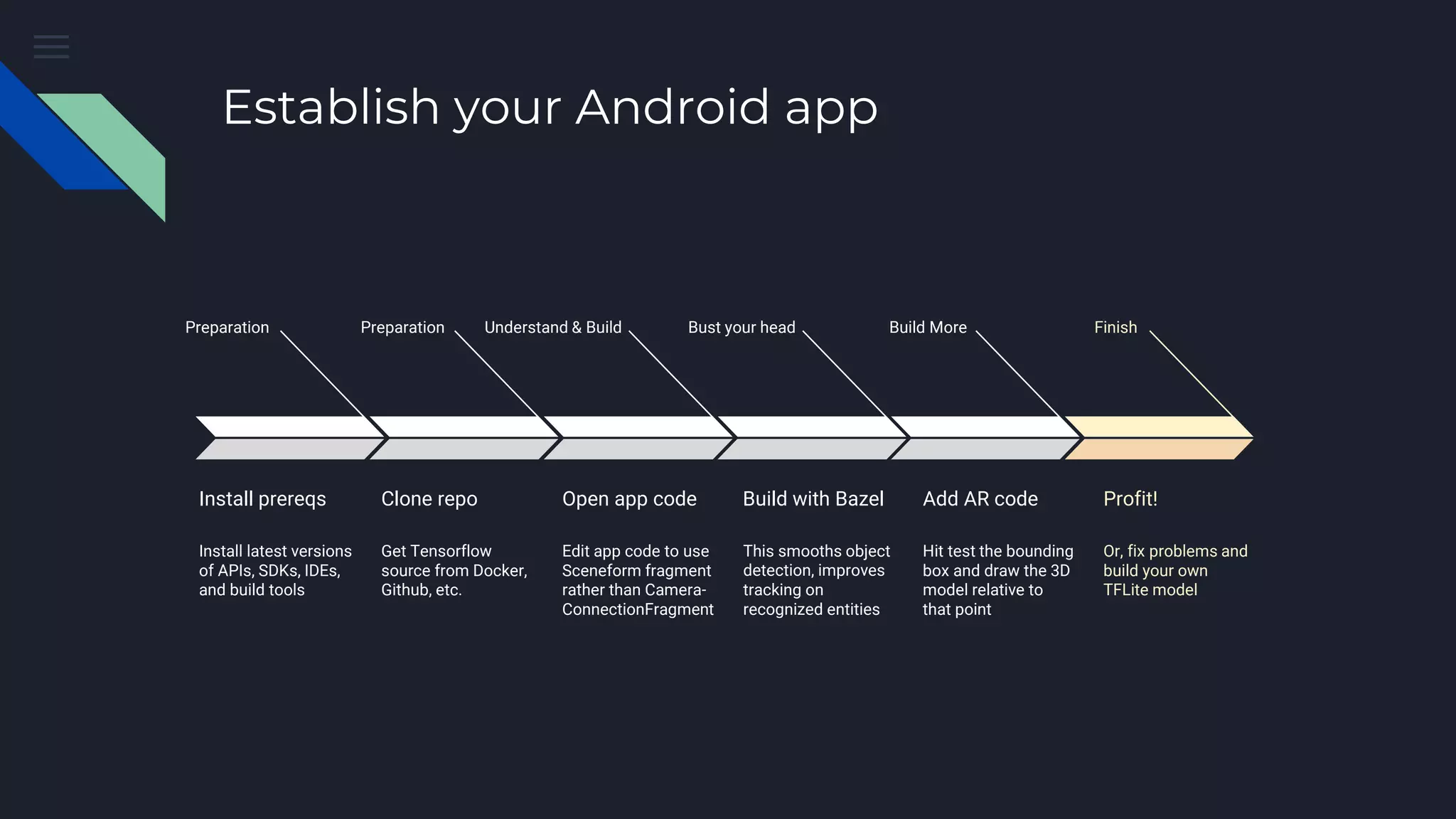

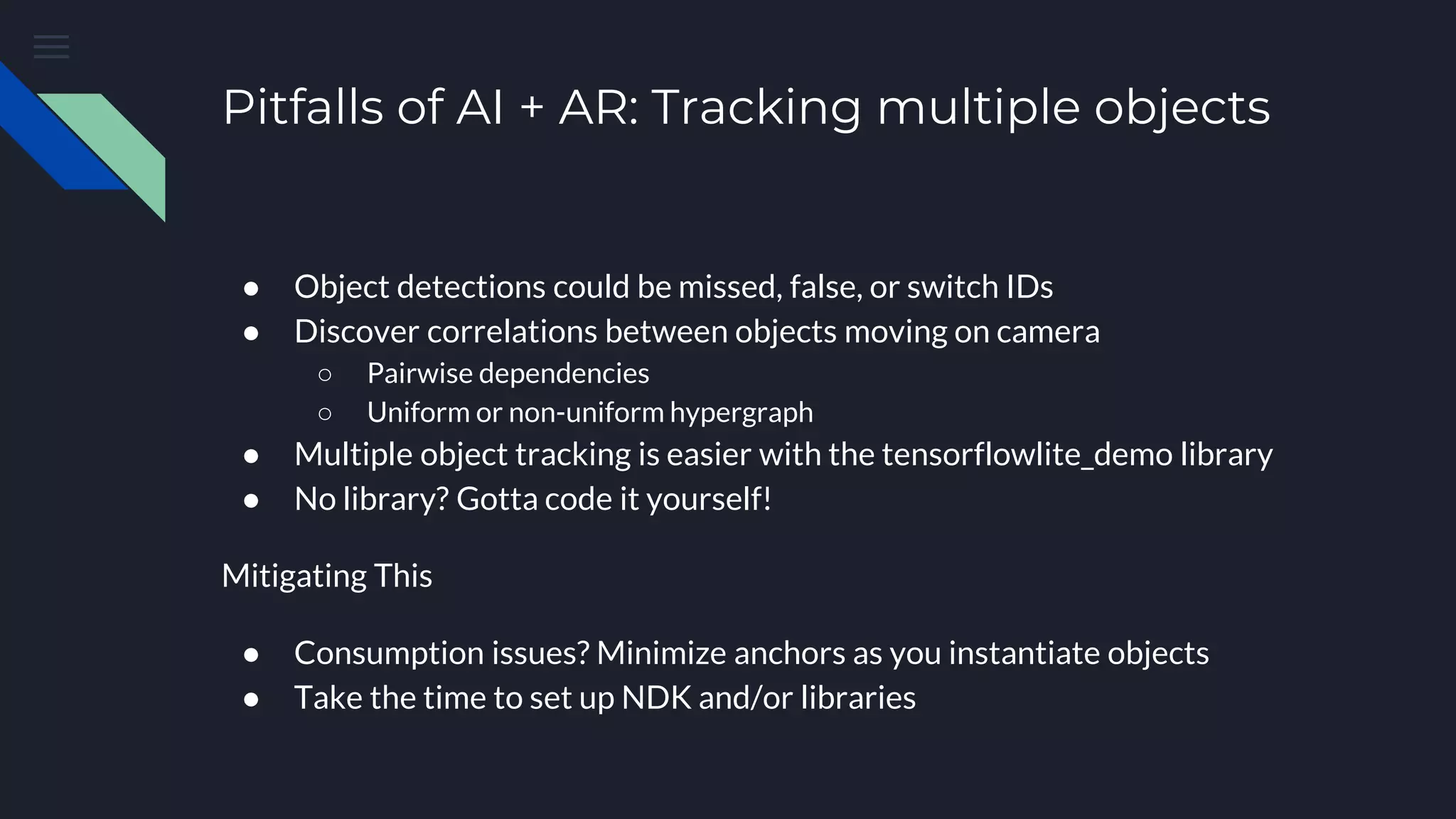

This document is a guide to integrating AI with augmented reality (AR) using TensorFlow and associated tools. It covers preparation, the coding process, and the pitfalls of building AR applications on Android, such as battery drain, object tracking issues, and sensor drift. It stresses careful implementation and testing of models, and it lists resources and references for further learning.