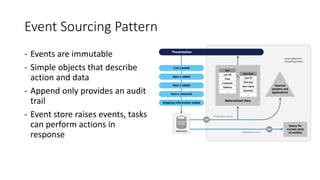

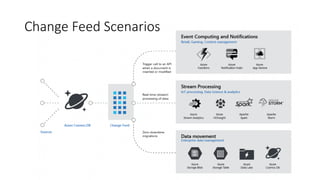

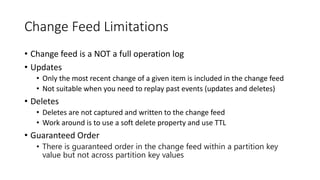

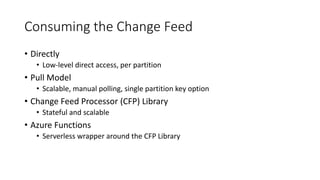

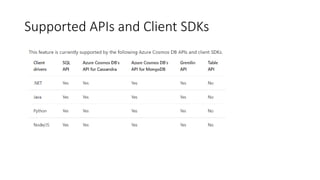

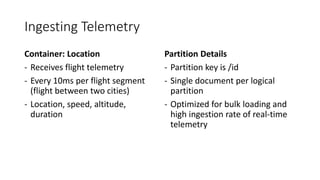

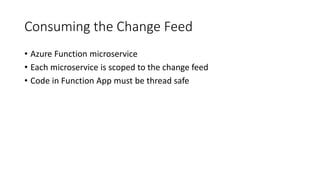

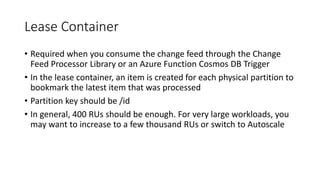

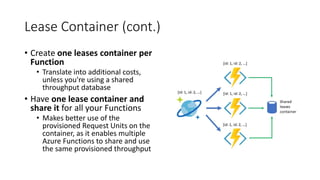

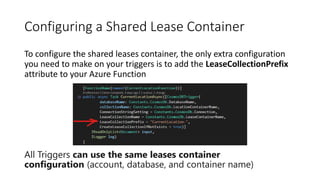

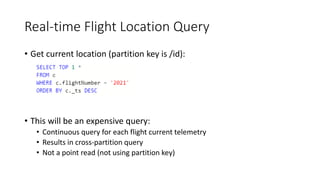

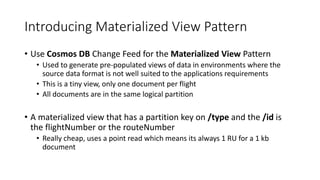

This document summarizes a presentation on event sourcing with the Azure Cosmos DB change feed and Azure Functions. The presentation introduces event sourcing and explains how Cosmos DB can serve as an event store. It describes two ways to consume the Cosmos DB change feed: the change feed processor library and Azure Functions. It also shows how to use the change feed to build materialized views of the data so that common queries stay efficient. The demos cover ingesting telemetry into Cosmos DB, consuming the change feed with Functions, and building materialized views for current location and delivery status.
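The projection idea behind the demos can be illustrated without any Azure dependencies. The sketch below is a minimal, hypothetical in-memory model, not the presenter's code: `EventStore` stands in for a Cosmos DB container used as an append-only event store, `read_changes` mimics reading the change feed from a saved position, and `MaterializedViews` maintains the "current location" and "delivery status" views. The `TelemetryEvent` schema, all class and function names, and the checkpoint-as-integer scheme are assumptions for illustration. In a real deployment, the Azure Functions Cosmos DB trigger or the change feed processor would deliver batches and track progress in a leases container instead.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class TelemetryEvent:
    """Hypothetical telemetry event schema; the presentation's real schema is not shown."""
    delivery_id: str   # acts as the partition key in this sketch
    ts: int            # per-delivery sequence number or timestamp
    lat: float
    lon: float
    status: str        # e.g. "in_transit", "delivered"


class EventStore:
    """In-memory append-only log standing in for a Cosmos DB container used as an event store."""

    def __init__(self) -> None:
        self._log: List[TelemetryEvent] = []

    def append(self, event: TelemetryEvent) -> None:
        # Events are only ever appended, never updated in place.
        self._log.append(event)

    def read_changes(self, checkpoint: int, max_items: int) -> Tuple[List[TelemetryEvent], int]:
        # Mimics reading the change feed from a saved position (hypothetical integer checkpoint).
        batch = self._log[checkpoint:checkpoint + max_items]
        return batch, checkpoint + len(batch)


class MaterializedViews:
    """Read-optimized projections: current location and delivery status per delivery."""

    def __init__(self) -> None:
        self._latest: Dict[str, TelemetryEvent] = {}

    def apply_batch(self, batch: Iterable[TelemetryEvent]) -> None:
        for event in batch:
            current = self._latest.get(event.delivery_id)
            # Skip duplicate or stale events so reprocessing a batch is harmless;
            # change feed consumers should tolerate at-least-once delivery.
            if current is not None and current.ts >= event.ts:
                continue
            self._latest[event.delivery_id] = event

    def location(self, delivery_id: str) -> Optional[Tuple[float, float]]:
        event = self._latest.get(delivery_id)
        return None if event is None else (event.lat, event.lon)

    def by_status(self, status: str) -> List[str]:
        return sorted(d for d, e in self._latest.items() if e.status == status)


def catch_up(store: EventStore, views: MaterializedViews, checkpoint: int, max_items: int = 100) -> int:
    """Drain pending changes in batches and return the new checkpoint to persist."""
    while True:
        batch, next_checkpoint = store.read_changes(checkpoint, max_items)
        if not batch:
            return checkpoint
        views.apply_batch(batch)
        # Advance the checkpoint only after the batch has been applied.
        checkpoint = next_checkpoint
```

The checkpoint advances only after a batch is applied, so a crash mid-batch causes that batch to be replayed rather than lost. The timestamp comparison in `apply_batch` makes replays idempotent, which is why the views stay correct under at-least-once delivery.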