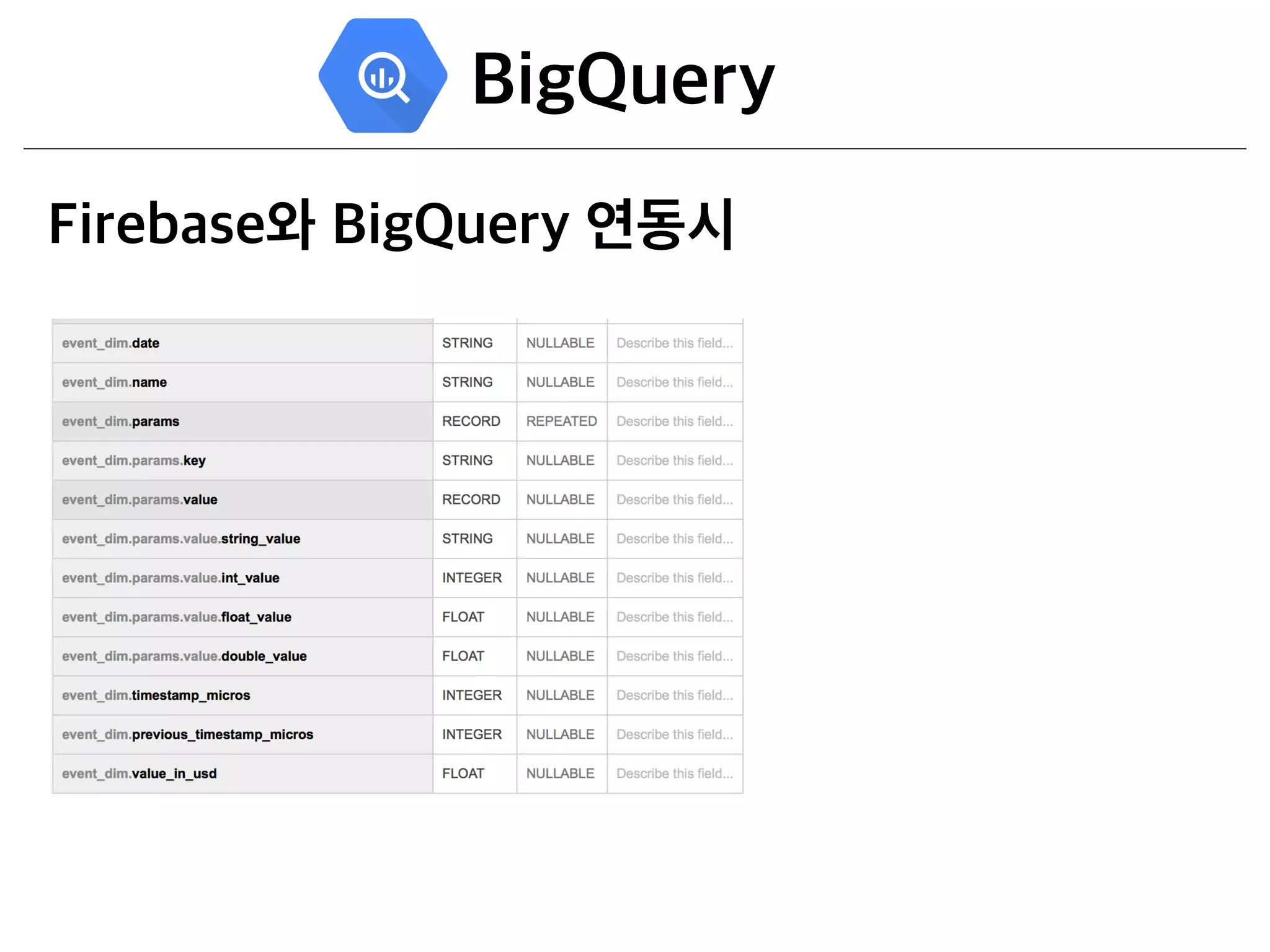

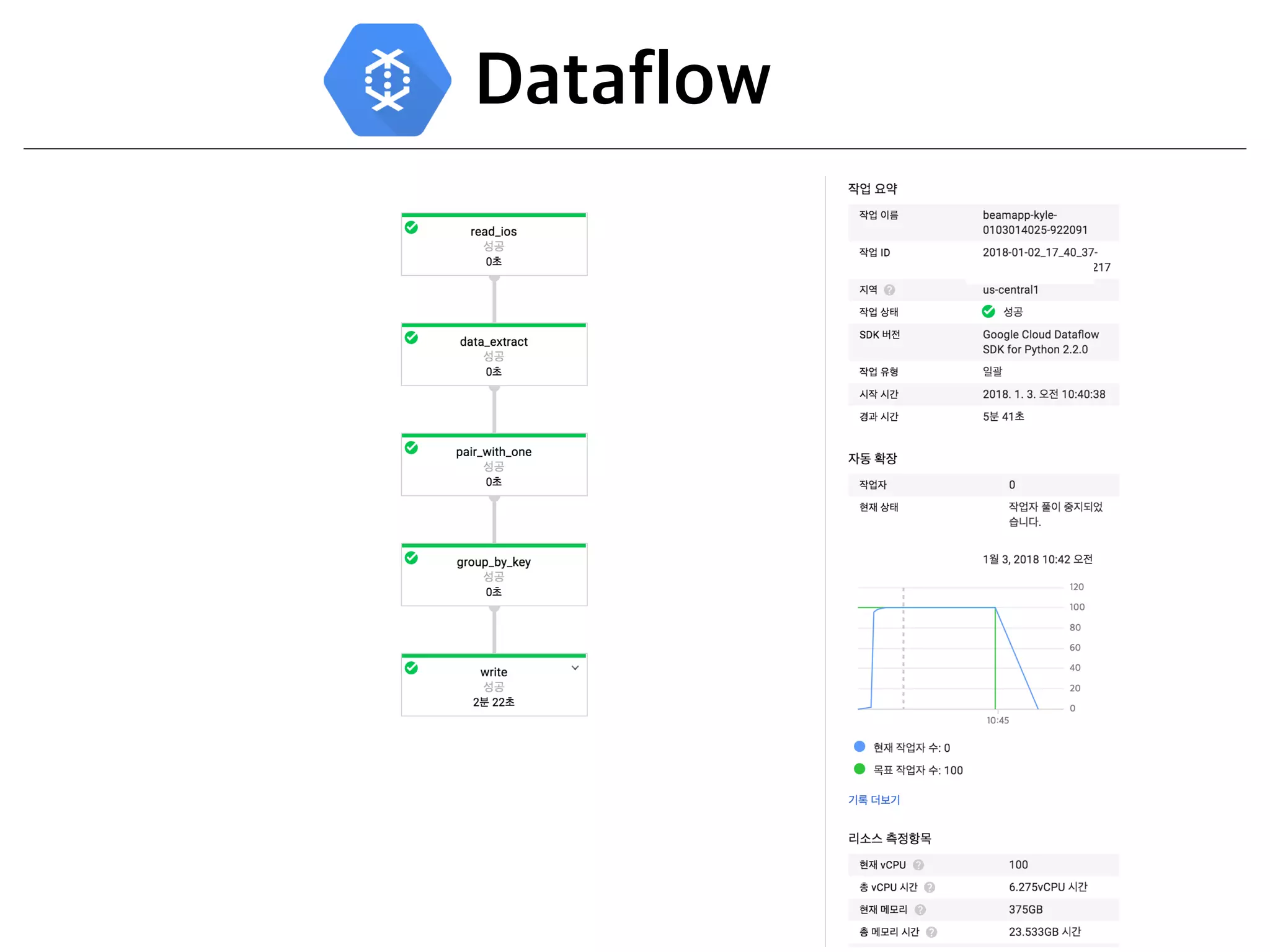

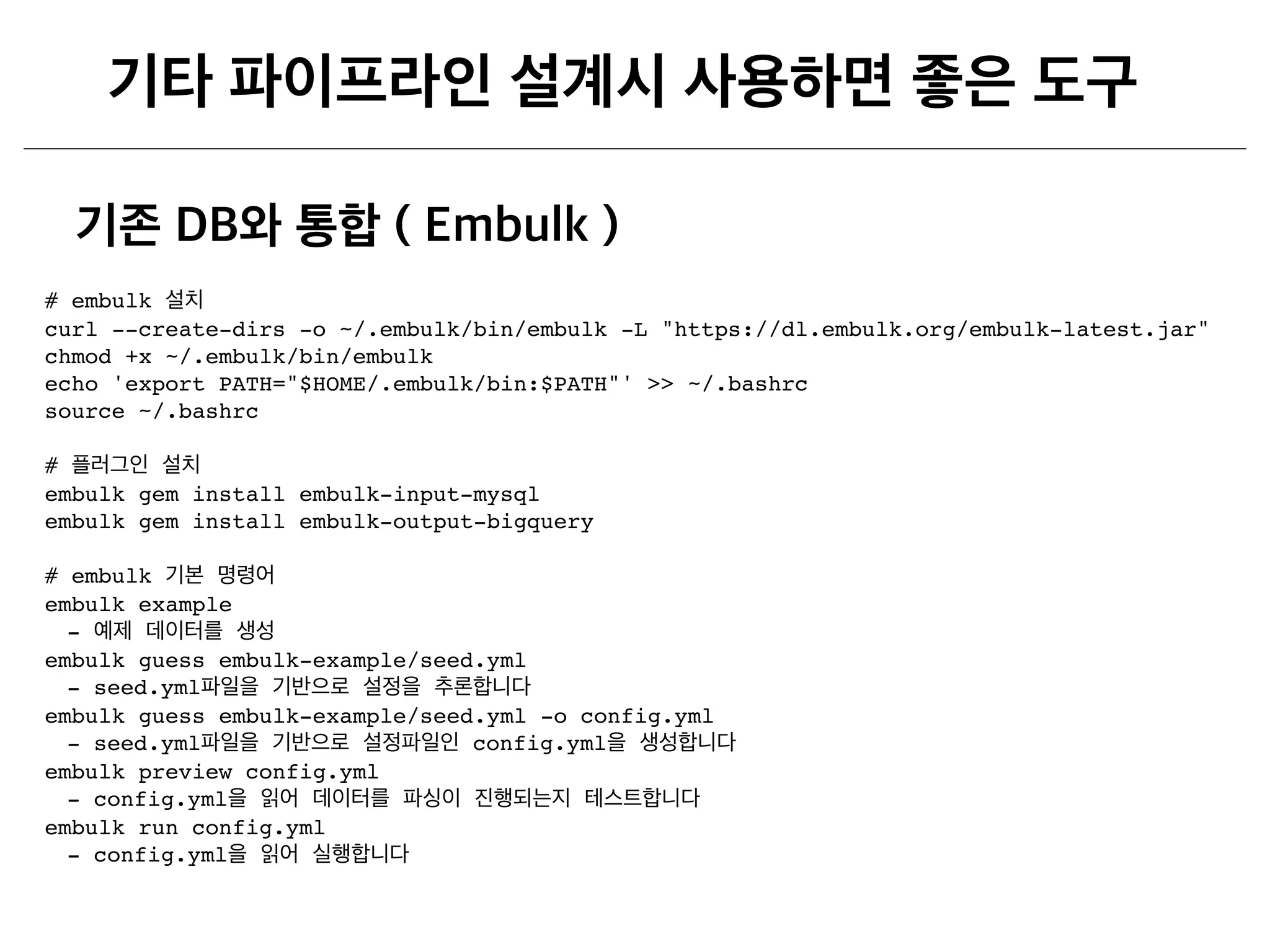

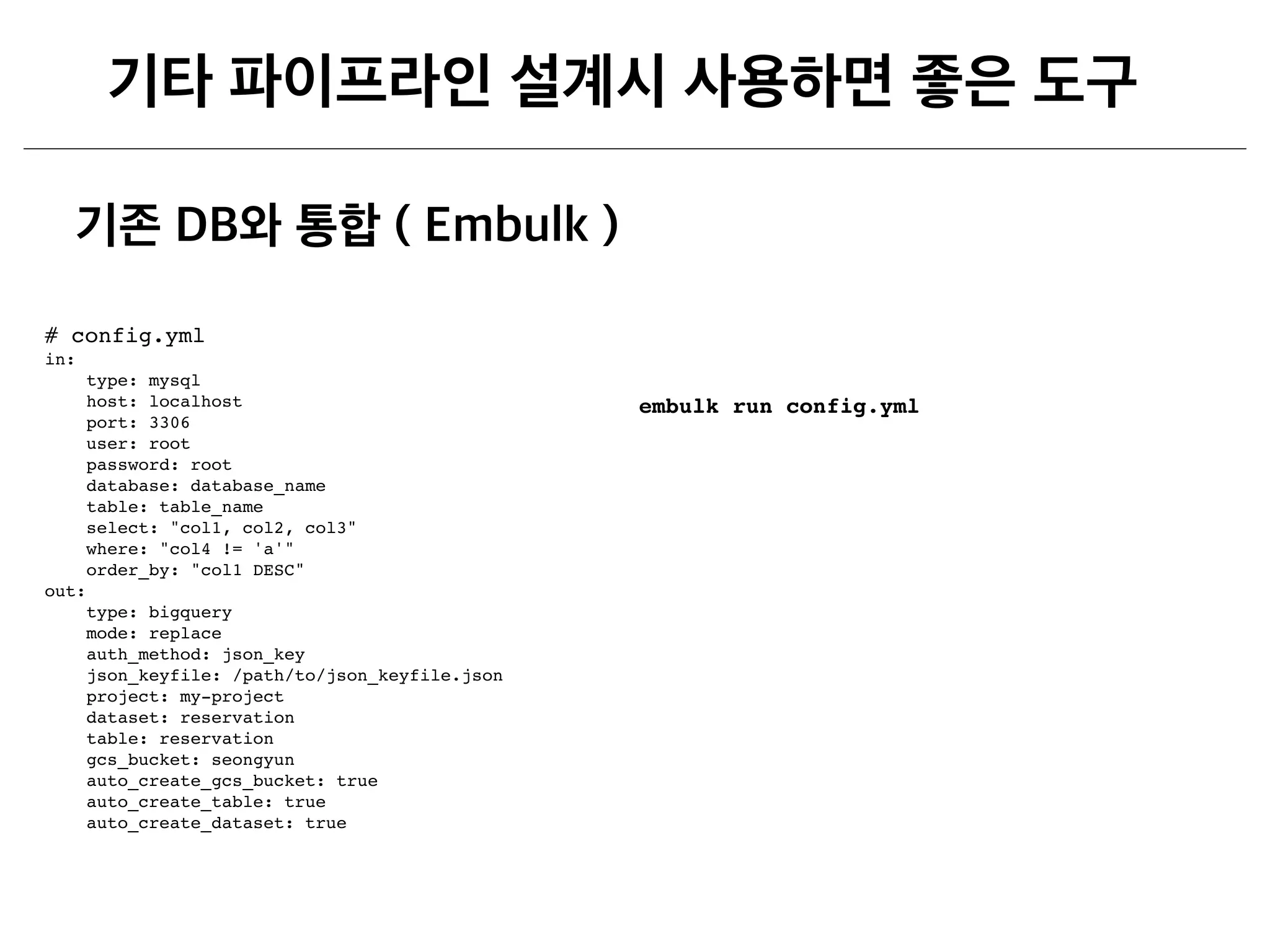

This document provides instructions for installing and using Embulk and Airflow. It explains how to install Embulk and common Embulk plugins, run Embulk tasks to load data from MySQL to BigQuery, and configure an Embulk config file to specify the input, output, and load options. It also explains how to install Airflow, initialize the metadata database, run the Airflow web server, list and test DAGs and tasks, and write a simple DAG with BashOperator tasks to print the date and sleep for demonstration purposes.

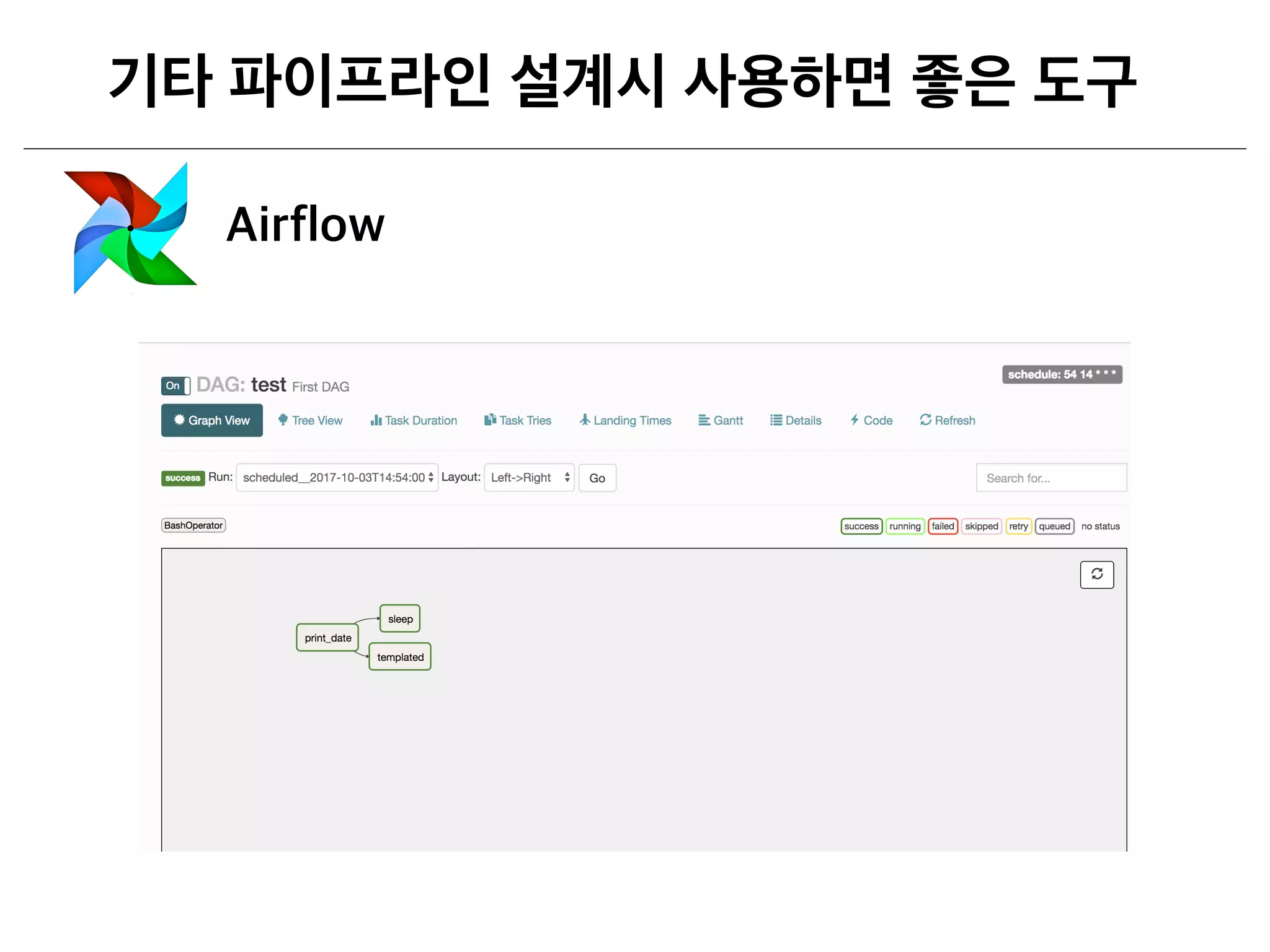

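![Airflow commands](https://image.slidesharecdn.com/feat-180118115120/75/Feat-gcp-GCP-36-2048.jpg)

Airflow commands (these are the Airflow 1.x command names):

- `pip3 install apache-airflow`: install Airflow.
- `airflow initdb`: initialize the metadata database.
- `airflow webserver -p 8080`: start the web server on port 8080, then open `localhost:8080` in a browser.
- `airflow list_dags`: scan the `*.py` files in the `dags` folder of the Airflow installation and list the DAGs found there.
- `airflow list_tasks test`: list the tasks of the DAG named `test`.
- `airflow list_tasks test --tree`: show the tasks of the DAG named `test` as a tree.
- `airflow test [DAG id] [Task id] [date]`: test a single task of a DAG, for example `airflow test test print_date 2017-10-01`.
- `airflow scheduler`: start the scheduler. `airflow test` only tests a task; the scheduler is what actually runs the DAGs.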
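The argument order of `airflow test [DAG id] [Task id] [date]` can be sketched in Python as follows. This is only an illustration: the helper name `build_airflow_test_command` is hypothetical, and the `YYYY-MM-DD` check is an assumption based on the date format used in the example above, not a rule taken from Airflow itself.

```python
import shlex
from datetime import datetime


def build_airflow_test_command(dag_id, task_id, execution_date):
    """Return the argument list for `airflow test [DAG id] [Task id] [date]`.

    Hypothetical helper for illustration; it only assembles the command.
    """
    # Assumption: dates are written as YYYY-MM-DD, as in the example above.
    # strptime raises ValueError if the string does not match.
    datetime.strptime(execution_date, "%Y-%m-%d")
    return ["airflow", "test", dag_id, task_id, execution_date]


cmd = build_airflow_test_command("test", "print_date", "2017-10-01")

# Render the list as a shell command line.
print(" ".join(shlex.quote(part) for part in cmd))
# prints: airflow test test print_date 2017-10-01
```

On a machine where Airflow is installed, the resulting list could be passed to `subprocess.run(cmd)` to run the task test.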

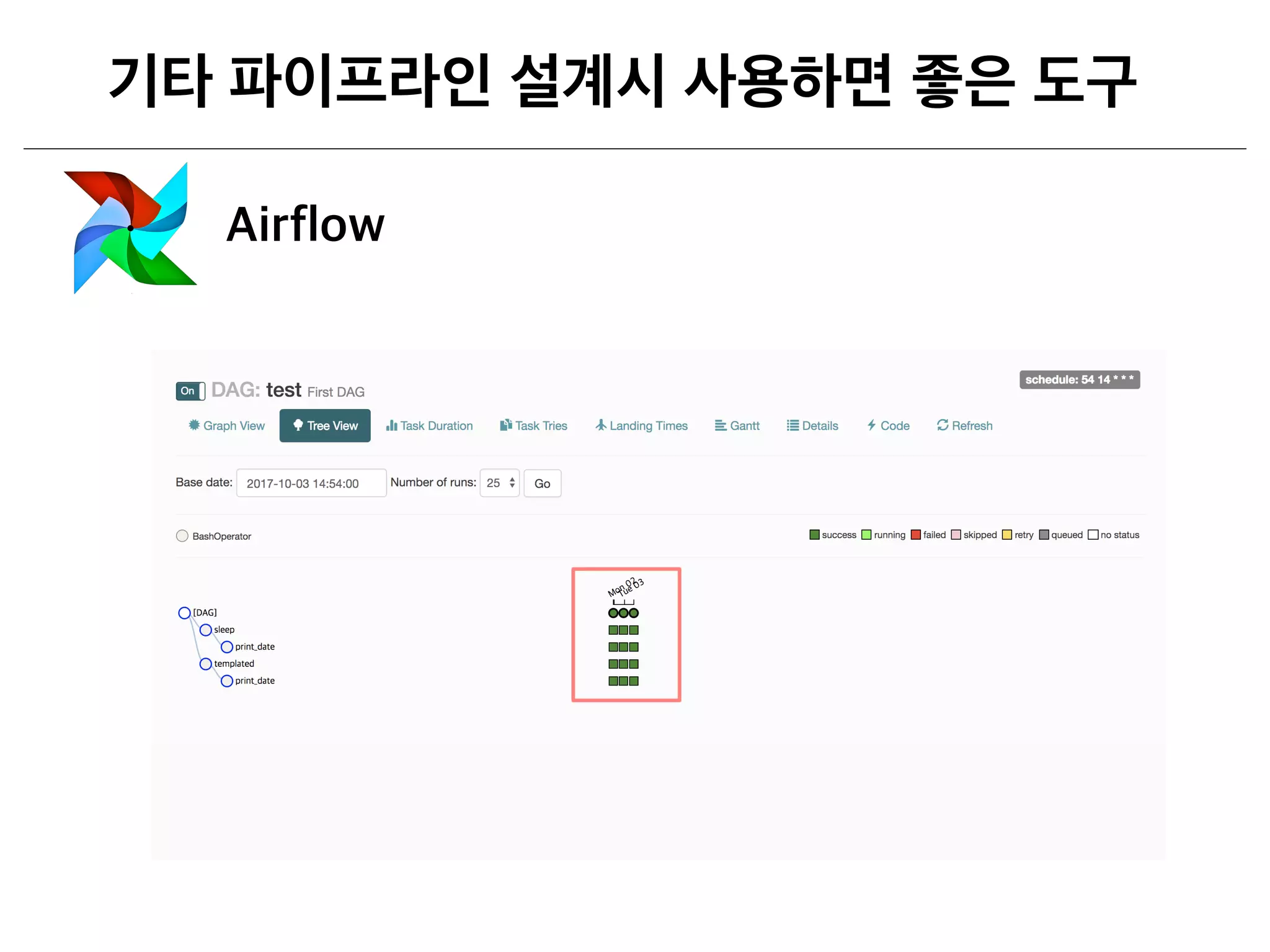

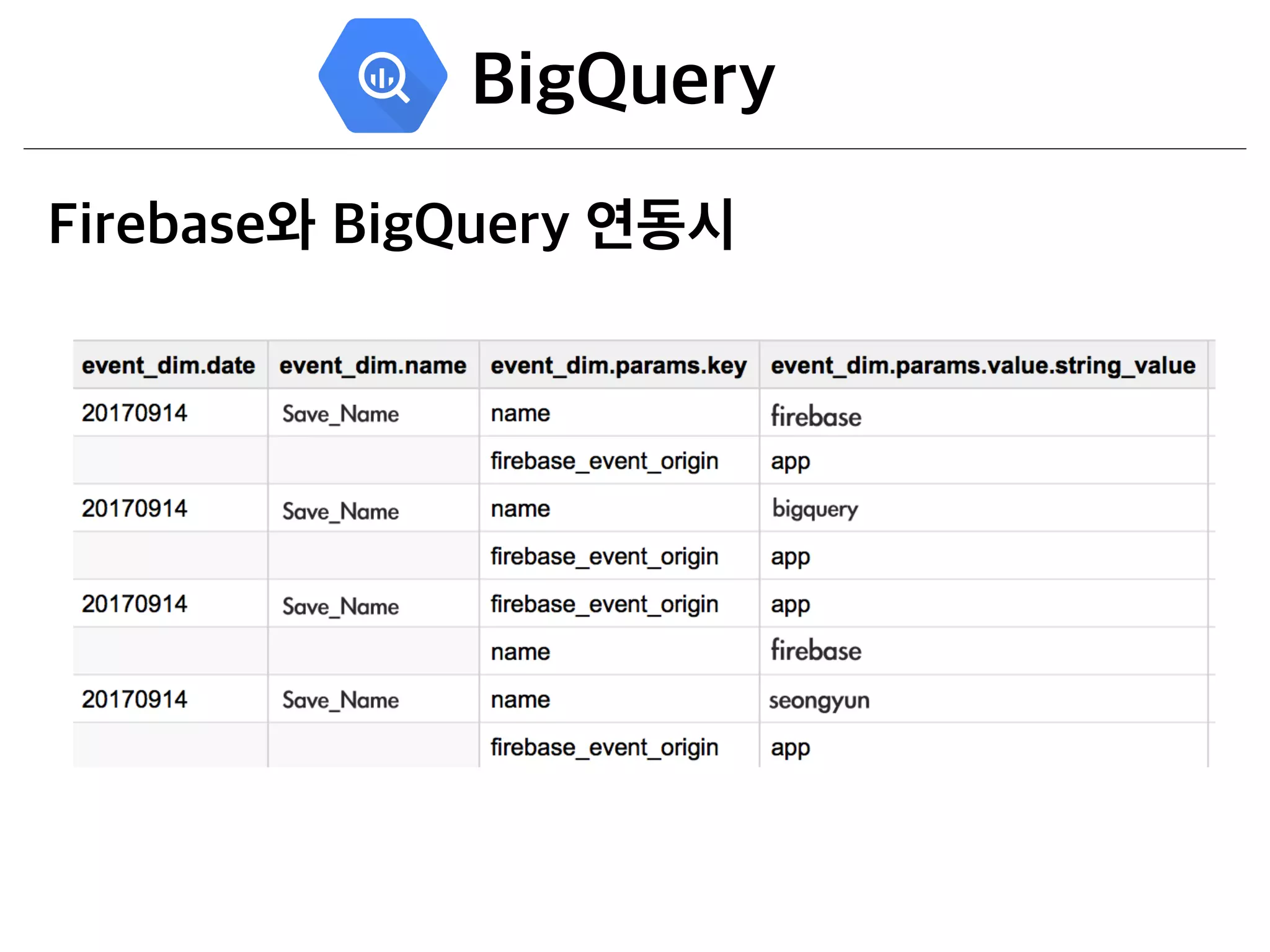

![Example DAG definition](https://image.slidesharecdn.com/feat-180118115120/75/Feat-gcp-GCP-37-2048.jpg)

    from datetime import datetime, timedelta

    from airflow import DAG
    from airflow.operators.bash_operator import BashOperator

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'start_date': datetime(2017, 10, 1),
        'email': ['airflow@airflow.com'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',  # Only celery option
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
    }

    # Define the DAG.
    dag = DAG('test', description='First DAG',
              schedule_interval='55 14 * * *',  # cron: every day at 14:55
              default_args=default_args)

    # BashOperator runs a bash command.
    # task_id must be unique within the DAG.
    # bash_command is the bash command to run (here: date).
    t1 = BashOperator(
        task_id='print_date',
        bash_command='date',
        dag=dag)

    t2 = BashOperator(
        task_id='sleep',
        bash_command='sleep 5',
        retries=3,
        dag=dag)

    # templated_command is not defined on the slide. The definition below
    # is an assumption that follows the Jinja template in the Airflow tutorial.
    templated_command = """
    {% for i in range(5) %}
        echo "{{ ds }}"
        echo "{{ params.my_param }}"
    {% endfor %}
    """

    t3 = BashOperator(
        task_id='templated',
        bash_command=templated_command,
        params={'my_param': 'Parameter I passed in'},
        dag=dag)

    # set_upstream: t2 runs after t1. Equivalent forms:
    # t1.set_downstream(t2)
    # t1 >> t2
    t2.set_upstream(t1)

    t3.set_upstream(t1)
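The three ways of declaring a dependency in the DAG above (`t2.set_upstream(t1)`, `t1.set_downstream(t2)`, `t1 >> t2`) all describe the same edge. A minimal sketch in plain Python, without Airflow, may make that clearer. The `Task` class here is a hypothetical stand-in that only records dependencies; it is not Airflow's operator implementation.

```python
class Task:
    """Hypothetical stand-in for an Airflow operator; tracks only dependencies."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()    # task ids that must finish before this one
        self.downstream = set()  # task ids that run after this one

    def set_upstream(self, other):
        # `other` must finish before `self` starts.
        self.upstream.add(other.task_id)
        other.downstream.add(self.task_id)

    def set_downstream(self, other):
        # `self` must finish before `other` starts.
        other.set_upstream(self)

    def __rshift__(self, other):
        # `a >> b` is shorthand for `a.set_downstream(b)`.
        self.set_downstream(other)
        return other


t1, t2, t3 = Task("print_date"), Task("sleep"), Task("templated")

t2.set_upstream(t1)  # same edge as t1.set_downstream(t2) or t1 >> t2
t1 >> t3             # same edge as t3.set_upstream(t1)

print(sorted(t1.downstream))  # ['sleep', 'templated']
print(sorted(t2.upstream))    # ['print_date']
```

In this graph `print_date` runs first, and `sleep` and `templated` both depend on it but not on each other.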