The document outlines a tutorial on geometric deep learning, focusing on its applications in multimedia, including image classification, synthesis, and video analysis. It covers fundamental concepts such as manifold theory, operations on manifolds, and presents various algorithms and open-source software packages for implementing these techniques. The tutorial also explores advanced uses of geometric deep learning, such as improving out-of-distribution performance and addressing challenges in non-Euclidean spaces.

![A short primer into graph neural networks

• Many relations can be modeled as graphs

• Molecules, genes, social networks, knowledge graphs, …

• Important operators in graph neural networks (GNNs)

• Graph convolution

• Usually aggregates information from

all neighbors of the node

• Graph Laplacian

• Plays the same role on graphs as the

Laplace operator in ℝ^d

• Resources

• See the survey by Wu et al [2]

• See also the blog article [3]

8

Normal convolution on 2D grid

versus graph convolution](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-8-2048.jpg)
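To make the two operators concrete, here is a minimal NumPy sketch. It assumes a small undirected graph stored as a dense adjacency matrix and uses the symmetric-normalized propagation rule of Kipf & Welling's GCN, which is only one of the many graph convolution variants covered in the survey [2]. The function names are illustrative.

```python
import numpy as np


def graph_laplacian(adj: np.ndarray) -> np.ndarray:
    """Combinatorial graph Laplacian L = D - A of an undirected graph."""
    degree = adj.sum(axis=1)
    return np.diag(degree) - adj


def gcn_layer(adj: np.ndarray, features: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One GCN-style graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W).

    Each node's new feature is a degree-normalized sum over itself and all its neighbors.
    """
    a_hat = adj + np.eye(adj.shape[0])            # self-loops keep the node's own features
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ features @ weight, 0.0)


# Path graph 0 - 1 - 2 with one-hot node features and an identity weight matrix
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
lap = graph_laplacian(adj)
out = gcn_layer(adj, np.eye(3), np.eye(3))
print(lap)
print(out.round(3))
```

On a regular 2D grid graph, (Lf)_i = 4 f_i minus the sum over the four neighbors, i.e. the negated five-point finite-difference Laplacian, which is the sense in which L mirrors the Laplace operator in ℝ^d.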

![Manifold operators – Frechet mean

• Frechet mean (intrinsic mean)

• The Frechet mean of the points x1, ..., xn is the point μ ∈ M which minimizes

the sum of the squared distances to all these points

• Formula: $\mu = \arg\min_{p \in M} \sum_{i=1}^{n} d(p, x_i)^2$

• d(p,q) is the geodesic distance

• A weighted Frechet mean (minimizing $\sum_{i=1}^{n} w_i \, d(p, x_i)^2$) can also be defined

• In a Euclidean space, the Frechet mean is identical

to the Euclidean mean (arithmetic mean)

• Usually calculated (approximately)

via an iterative algorithm

13

Frechet mean of three points

on a hyperboloid.

Image courtesy of [SU-36]](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-13-2048.jpg)
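The iterative algorithm can be sketched on the unit sphere, where the exponential and logarithmic maps have closed forms. This is a minimal NumPy sketch of the standard gradient-descent (Karcher) iteration with unit step size, assuming the data points lie well within one hemisphere; it is not the hyperboloid setting of the figure, and the function names are illustrative.

```python
import numpy as np


def sphere_log(p, x):
    """Log map on the unit sphere: tangent vector at p pointing to x, with length d(p, x)."""
    cos_t = np.clip(np.dot(p, x), -1.0, 1.0)
    theta = np.arccos(cos_t)                 # geodesic distance d(p, x)
    v = x - cos_t * p                        # component of x orthogonal to p
    norm_v = np.linalg.norm(v)
    return np.zeros_like(p) if norm_v < 1e-12 else theta * v / norm_v


def sphere_exp(p, v):
    """Exp map on the unit sphere: follow the geodesic from p in direction v for length |v|."""
    norm_v = np.linalg.norm(v)
    if norm_v < 1e-12:
        return p
    return np.cos(norm_v) * p + np.sin(norm_v) * v / norm_v


def frechet_mean_sphere(points, weights=None, n_iter=100, tol=1e-10):
    """(Weighted) Frechet mean via the iteration mu <- exp_mu(sum_i w_i log_mu(x_i))."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    w = np.full(n, 1.0 / n) if weights is None else np.asarray(weights, float) / np.sum(weights)
    mu = w @ points
    mu /= np.linalg.norm(mu)                 # start from the normalized Euclidean mean
    for _ in range(n_iter):
        # Negative Riemannian gradient of 0.5 * sum_i w_i d(mu, x_i)^2
        step = np.sum([wi * sphere_log(mu, xi) for wi, xi in zip(w, points)], axis=0)
        mu = sphere_exp(mu, step)
        if np.linalg.norm(step) < tol:
            break
    return mu


pts = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(frechet_mean_sphere(pts))  # lies at angle pi/6 on the equator
```

The normalized Euclidean mean used as starting point sits at angle atan(1/2) here, so the example also shows that the intrinsic and the Euclidean mean differ on a curved manifold.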

![Manifold operators – convolution

• The convolution operator can be defined on a manifold in several ways

• Via a weighted Frechet mean (see [SU-14], [SU-15], [SU-36])

• Via a weighted sum in the tangent space (see [SU-10])

• Convolution via a weighted sum in the tangent space – procedure

14](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-14-2048.jpg)
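The step-by-step procedure of this slide did not survive extraction. The sketch below shows one common form of tangent-space convolution, assumed here rather than taken from [SU-10]: log-map the neighbors of a center point into its tangent space, combine them with the (learnable) filter weights as a plain weighted sum, and exp-map the result back. The unit sphere is used because both maps have closed forms; `tangent_conv` is a hypothetical name.

```python
import numpy as np


def sphere_log(p, x):
    """Log map on the unit sphere."""
    cos_t = np.clip(np.dot(p, x), -1.0, 1.0)
    v = x - cos_t * p
    n = np.linalg.norm(v)
    return np.zeros_like(p) if n < 1e-12 else np.arccos(cos_t) * v / n


def sphere_exp(p, v):
    """Exp map on the unit sphere."""
    n = np.linalg.norm(v)
    return p if n < 1e-12 else np.cos(n) * p + np.sin(n) * v / n


def tangent_conv(center, neighbors, weights):
    """Hypothetical tangent-space convolution on the unit sphere.

    1. Log-map each neighbor into the tangent space at `center`.
    2. Combine the tangent vectors with the filter `weights` (weighted sum).
    3. Exp-map the combined vector back onto the sphere.
    """
    tangent = np.array([sphere_log(center, x) for x in neighbors])
    combined = np.tensordot(np.asarray(weights, dtype=float), tangent, axes=1)
    return sphere_exp(center, combined)


center = np.array([0.0, 0.0, 1.0])
neighbors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(tangent_conv(center, neighbors, [0.5, 0.5]))
```

With non-negative weights that sum to one, this operation equals one iteration of the weighted Frechet mean computation started at `center`, which shows how closely the two definitions on the slide are related.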

![Manifold operators – other

• There is a variety of other useful operators on manifolds

• Parallel transport of a tangent vector along a curve

• Retraction is a first-order approximation of the exponential map (see [4])

that is faster to compute

• Pullback operator

• Differential operators can also be defined on manifolds

• Intrinsic gradient

• Covariant derivative

• Divergence

• Laplacian

15

Parallel transport](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-15-2048.jpg)
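On the unit sphere, both a retraction and parallel transport along a geodesic have simple closed forms. The sketch below assumes the projection retraction R_p(v) = (p + v)/|p + v| (one common choice; [4] discusses retractions in general), compares it with the exact exponential map, and transports a tangent vector along a great circle. Function names are illustrative.

```python
import numpy as np


def sphere_exp(p, v):
    """Exact exponential map on the unit sphere (needs cos/sin)."""
    n = np.linalg.norm(v)
    return p if n < 1e-12 else np.cos(n) * p + np.sin(n) * v / n


def sphere_retraction(p, v):
    """Projection retraction: step in the ambient space, then renormalize."""
    q = p + v
    return q / np.linalg.norm(q)


def sphere_parallel_transport(p, u, t, w):
    """Transport tangent vector w from p along the geodesic with unit direction u for length t."""
    a = np.dot(u, w)                         # component of w along the direction of travel
    return w + a * ((np.cos(t) - 1.0) * u - np.sin(t) * p)


p = np.array([0.0, 0.0, 1.0])
u = np.array([1.0, 0.0, 0.0])                # unit tangent direction at p
for step in (0.5, 0.1, 0.01):
    gap = np.linalg.norm(sphere_exp(p, step * u) - sphere_retraction(p, step * u))
    print(f"|v| = {step}: |exp - retraction| = {gap:.2e}")

q = sphere_exp(p, 0.7 * u)
w = np.array([0.3, 0.4, 0.0])                # tangent at p, since w . p = 0
w_q = sphere_parallel_transport(p, u, 0.7, w)
print(np.dot(w_q, q), np.linalg.norm(w_q))   # tangent at q, length preserved
```

The gap shrinks faster than |v|, as required of a first-order approximation; for this particular retraction it even shrinks roughly like |v|³.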

![Similarity search & retrieval

• Identifying “hard” training examples

• Iscen et al, CVPR, 2018, ref. [SU-30]

• Useful e.g. for re-training with these

examples (curriculum learning etc.)

• Hard examples are identified

by comparing the distances

(similarities) measured via

• Euclidean distance

• Geodesic distance on the manifold

• Geodesic distance is approximated via

a random walk on the Euclidean

nearest-neighbor graph

19](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-19-2048.jpg)
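The mining idea can be illustrated with a small NumPy sketch. Note the substitution: Iscen et al. use a random walk on the nearest-neighbor graph, whereas this sketch approximates geodesic distances with shortest paths on the symmetric k-NN graph (as in Isomap), for simplicity. Hard positives are taken as manifold neighbors that are not Euclidean neighbors, hard negatives as the reverse; `k`, `m` and the toy data are arbitrary.

```python
import numpy as np


def pairwise_euclidean(x):
    diff = x[:, None, :] - x[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def knn_geodesic(euc, k):
    """Approximate geodesic distances by shortest paths on the symmetric k-NN graph."""
    n = len(euc)
    graph = np.full((n, n), np.inf)
    np.fill_diagonal(graph, 0.0)
    for i in range(n):
        nbrs = np.argsort(euc[i])[1:k + 1]   # skip index 0, which is the point itself
        graph[i, nbrs] = euc[i, nbrs]
    graph = np.minimum(graph, graph.T)       # make the graph undirected
    for m in range(n):                       # Floyd-Warshall all-pairs shortest paths
        graph = np.minimum(graph, graph[:, m:m + 1] + graph[m:m + 1, :])
    return graph


def hard_examples(anchor, euc, geo, m):
    """Hard positives: manifold neighbors that are not Euclidean neighbors; hard negatives: the reverse."""
    euc_nn = set(np.argsort(euc[anchor])[1:m + 1].tolist())
    geo_nn = set(np.argsort(geo[anchor])[1:m + 1].tolist())
    return sorted(geo_nn - euc_nn), sorted(euc_nn - geo_nn)


# 40 samples on an almost closed arc: the two ends are close in R^2 but far along the curve
angles = np.linspace(0.0, 1.8 * np.pi, 40)
x = np.stack([np.cos(angles), np.sin(angles)], axis=1)
euc = pairwise_euclidean(x)
geo = knn_geodesic(euc, k=2)
print(hard_examples(0, euc, geo, m=5))
```

For the first sample, the far end of the arc (index 39) is a Euclidean neighbor but not a manifold neighbor, so it shows up as a hard negative.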

![Similarity search & retrieval

• Robust metric learning with Grassmann manifolds

• Luo et al, AAAI, 2019, ref. [5]

• Traditional methods employ

L2 distance in feature space

• Sensitive to noise, as the

data distribution is usually

not Gaussian

• Method learns a projection from a

high-dimensional to a low-dimensional

Grassmann manifold

• Embedding distance (Harandi et al, [6])

is employed as distance on the

low-dimensional Grassmann manifold

20](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-20-2048.jpg)
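For intuition about distances on a Grassmann manifold, here is a small NumPy sketch. It assumes subspaces are represented by orthonormal bases and that the embedding distance is the projection (chordal) distance induced by the map X ↦ XXᵀ, one of the extrinsic embeddings discussed by Harandi et al. [6]; the learned projection of Luo et al. [5] is not reproduced.

```python
import numpy as np


def orthonormal_basis(a):
    """Orthonormal basis of the column space of a (n x p, full column rank) via QR."""
    q, _ = np.linalg.qr(a)
    return q


def projection_distance(x, y):
    """Projection-embedding distance ||X X^T - Y Y^T||_F / sqrt(2) between span(X) and span(Y)."""
    return np.linalg.norm(x @ x.T - y @ y.T, "fro") / np.sqrt(2.0)


def principal_angles(x, y):
    """Principal angles between two subspaces; their cosines are the singular values of X^T Y."""
    s = np.linalg.svd(x.T @ y, compute_uv=False)
    return np.arccos(np.clip(s, -1.0, 1.0))


rng = np.random.default_rng(0)
x = orthonormal_basis(rng.normal(size=(10, 3)))   # a point on Gr(3, 10)
y = orthonormal_basis(rng.normal(size=(10, 3)))
print(projection_distance(x, y), np.sqrt(np.sum(np.sin(principal_angles(x, y)) ** 2)))
```

Both printed values agree, and because XXᵀ does not depend on which orthonormal basis represents the subspace, the distance is a function of the point on the Grassmann manifold itself.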

![Image classification & object detection

• Manifold mixup

• Verma et al, ICML, 2019, ref. [SU-50]

• Novel regularizer which forces the training to

interpolate between hidden representations

(captured in the intermediate network layers)

• Can be seen as a generalization of input mixup:

the interpolation is done on a randomly chosen layer

• Input mixup always uses layer 0

• Positive effects of manifold mixup

• Flattens the class-specific representation

(lower variance)

• Generates a smoother decision boundary,

compare (a) and (b) in the right figure

21](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-21-2048.jpg)
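A minimal NumPy sketch of a Manifold Mixup forward pass is shown below. It assumes a plain MLP given as a list of (W, b) pairs and samples the mixing layer uniformly over all layers (Verma et al. restrict it to a chosen set of eligible layers); network sizes and alpha are arbitrary. Training would then use a cross-entropy loss against the mixed soft targets.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def manifold_mixup_forward(layers, x, y, rng, alpha=2.0):
    """One Manifold Mixup forward pass for an MLP given as a list of (W, b) pairs.

    A layer index k is drawn at random (k = 0 mixes the raw inputs, i.e. input mixup),
    the batch is mixed with a shuffled copy of itself at that layer, and the one-hot
    targets are mixed with the same coefficient lambda ~ Beta(alpha, alpha).
    """
    k = int(rng.integers(0, len(layers)))    # mixing layer: 0 .. len(layers) - 1
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(len(x))
    h = x
    for w, b in layers[:k]:                  # forward pass up to layer k
        h = relu(h @ w + b)
    h = lam * h + (1.0 - lam) * h[perm]      # interpolate hidden representations
    y_mix = lam * y + (1.0 - lam) * y[perm]
    for i in range(k, len(layers)):          # continue the forward pass
        w, b = layers[i]
        h = h @ w + b
        if i < len(layers) - 1:              # no ReLU on the output logits
            h = relu(h)
    return h, y_mix, k, lam


rng = np.random.default_rng(0)
sizes = [4, 16, 16, 3]                       # input dim, two hidden layers, 3 classes
layers = [(rng.normal(size=(m, n)) * 0.5, np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]
x = rng.normal(size=(8, 4))
y = np.eye(3)[rng.integers(0, 3, size=8)]
logits, y_mix, k, lam = manifold_mixup_forward(layers, x, y, rng)
print(logits.shape, k, round(lam, 3))
```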

![Image classification & object detection

• Transfer CNN to 360° images

• Su et al, 2022, see [SU-50]

• Transfer an existing CNN model

trained on perspective images

to spherical images (from 360° camera)

without any additional annotation/training

• Faster R-CNN => Spherical Faster R-CNN

22](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-22-2048.jpg)

![Image synthesis & enhancement

• Progressive Attentional Manifold Alignment for Arbitrary Style Transfer

• Luo et al, ACCV, 2022, ref. [SU-37]

• Progressively aligns content manifolds to their most related style manifolds

• The relaxed earth mover's distance is used as the alignment cost function

• Afterwards, space-aware interpolation is done in order to increase the

structural similarity of the corresponding manifolds

• Makes it easier for the attention module to match between them

23](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-23-2048.jpg)
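The relaxed earth mover's distance avoids solving a full optimal-transport problem: every feature is matched to its nearest feature in the other set, and the larger of the two one-sided averages is kept. The NumPy sketch below assumes the cosine-distance cost used in the STROTSS style-transfer work of Kolkin et al.; the exact cost in [SU-37] may differ, and the feature sets are random placeholders.

```python
import numpy as np


def cosine_cost(a, b):
    """Pairwise cosine distance C_ij = 1 - cos(a_i, b_j) for feature sets a (n x d) and b (m x d)."""
    a_n = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_n = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a_n @ b_n.T


def relaxed_emd(a, b):
    """Relaxed EMD: maximum of the two one-sided nearest-neighbor matching costs."""
    c = cosine_cost(a, b)
    return max(c.min(axis=1).mean(), c.min(axis=0).mean())


rng = np.random.default_rng(0)
content = rng.normal(size=(50, 8))   # e.g. 50 content feature vectors of dimension 8
style = rng.normal(size=(60, 8))
print(relaxed_emd(content, style), relaxed_emd(content, content))
```

Each one-sided term drops one marginal constraint of the transport problem, so for uniformly weighted sets it is a lower bound on the exact EMD, and so is their maximum.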

![Image synthesis & enhancement

• Image editing by manipulation of the latent space manifolds

• Parihar et al, ACM MM, 2022, ref. [SU-42]

• Performs highly realistic image manipulation with minimal supervision

• Estimates linear directions in the latent space of StyleGAN2 using a few images

• Introduces a novel method for sampling from the style manifold

24](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-24-2048.jpg)

![Video analysis

• Geometry-aware algorithm for skeleton-based human action recognition

• Friji et al, CoRR, 2020, ref. [SU-27]

• Skeleton sequences are modeled as trajectories on

Kendall shape space and fed into a CNN-LSTM network

• Kendall shape space [7] is a quotient manifold which is invariant to

translation, scaling and rotation (as these do not change the shape)

25](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-25-2048.jpg)
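To illustrate these invariances, here is a NumPy sketch of the Riemannian distance on Kendall shape space for landmark configurations: translation is removed by centering, scale by normalizing to unit Frobenius norm, and rotation by an optimal Procrustes alignment. Configurations are assumed to be k × m arrays (k landmarks in m dimensions), and reflections are not allowed. This is an independent sketch, not geomstats code.

```python
import numpy as np


def preshape(x):
    """Remove location and scale: center the landmarks and scale to unit Frobenius norm."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean(axis=0)
    return x / np.linalg.norm(x)


def kendall_distance(x, y):
    """Geodesic distance between the shapes of x and y (k landmarks x m dims), modulo rotation."""
    x, y = preshape(x), preshape(y)
    u, s, vt = np.linalg.svd(x.T @ y)
    # The best rotation maximizes the trace; flip the smallest singular value if a reflection would be needed
    sign = np.sign(np.linalg.det(u @ vt))
    total = s[:-1].sum() + sign * s[-1]
    return np.arccos(np.clip(total, -1.0, 1.0))


tri = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
theta = 0.9
rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
moved = 3.0 * tri @ rot.T + np.array([5.0, -2.0])   # same shape: rotated, scaled, translated
equilateral = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, np.sqrt(3) / 2]])
print(kendall_distance(tri, moved), kendall_distance(tri, equilateral))
```

The first distance is zero up to rounding because only location, scale and rotation changed; the second is positive because the two triangles have genuinely different shapes.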

![Video analysis

• DreamNet: Deep Riemannian manifold network for SPD matrix learning

• Wang et al, ACCV, 2022, Ref. [SU-57]

• Adopts a neural network over the manifold P_n of

symmetric positive definite matrices as the backbone

• Appends a cascade of Riemannian autoencoders to it

in order to enrich the information flow within the network

• Experiments on diverse tasks (emotion recognition, hand action recognition

and human action recognition) demonstrate favourable performance

26](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-26-2048.jpg)
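Networks over P_n are typically assembled from layers that keep their outputs symmetric positive definite. The sketch below shows three such layers in the style of SPDNet (Huang & Van Gool): a bilinear map WᵀXW with a semi-orthogonal W, an eigenvalue rectification, and a matrix-logarithm layer that maps into the flat space of symmetric matrices for a final classifier. This is an illustrative assumption about what such a backbone can look like, not DreamNet's exact architecture, and the Riemannian autoencoders are omitted.

```python
import numpy as np


def bimap(x, w):
    """BiMap layer: X -> W^T X W; with a full-column-rank W the output stays SPD."""
    return w.T @ x @ w


def reeig(x, eps=1e-4):
    """ReEig layer: clamp eigenvalues from below at eps (an SPD analogue of ReLU)."""
    vals, vecs = np.linalg.eigh(x)
    return (vecs * np.maximum(vals, eps)) @ vecs.T


def logeig(x):
    """LogEig layer: matrix logarithm, mapping P_n to the vector space of symmetric matrices."""
    vals, vecs = np.linalg.eigh(x)
    return (vecs * np.log(vals)) @ vecs.T


rng = np.random.default_rng(0)
feats = rng.normal(size=(200, 6))
cov = np.cov(feats, rowvar=False) + 1e-3 * np.eye(6)   # 6 x 6 SPD input, e.g. a feature covariance
w, _ = np.linalg.qr(rng.normal(size=(6, 4)))            # semi-orthogonal 6 x 4 weight
out = logeig(reeig(bimap(cov, w)))
print(out.shape, np.allclose(out, out.T))
```

In SPDNet the weight W is kept semi-orthogonal during training by optimizing it on the Stiefel manifold; here it is simply fixed.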

![3D data processing

• Unsupervised Geometric Disentanglement for Surfaces via CFAN-VAE

• Tatro et al, ICLR, 2021, Ref. [SU-41]

• Novel algorithm for geometric disentanglement

(separating intrinsic and extrinsic geometry) of 3D models

• Surface features are described via a combination

of conformal factors and surface normal vectors

• Conformal factor defines a conformal

(angle-preserving) deformation

• Proposes a convolutional mesh autoencoder

based on these features

• Algorithm achieves state-of-the-art performance

on 3D surface generation, reconstruction & interpolation

27](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-27-2048.jpg)

![3D data processing

• Intrinsic Neural Fields: Learning Functions on Manifolds

• Koestler et al, ECCV, 2022, Ref. [SU-33]

• Introduces intrinsic neural fields, a novel and versatile representation

for neural fields on manifolds

• Based on the eigenfunctions of the Laplace-Beltrami operator

• Intrinsic neural fields can reconstruct high-fidelity textures from images

28](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-28-2048.jpg)
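The eigenfunctions of the Laplace-Beltrami operator act as an intrinsic analogue of the Fourier basis. As a rough stand-in for a mesh, the sketch below discretizes a circle as a cycle graph and uses its graph Laplacian instead of a mesh-based Laplace-Beltrami discretization (such as the cotangent Laplacian); the low-frequency eigenvectors then serve as a per-vertex intrinsic encoding that a small network (not shown) could take as input. Names are illustrative.

```python
import numpy as np


def cycle_graph_laplacian(n):
    """Graph Laplacian of an n-vertex cycle, a discrete stand-in for the Laplacian of a circle."""
    adj = np.zeros((n, n))
    idx = np.arange(n)
    adj[idx, (idx + 1) % n] = 1.0
    adj[(idx + 1) % n, idx] = 1.0
    return np.diag(adj.sum(axis=1)) - adj


def intrinsic_encoding(laplacian, num_eigs):
    """Return the num_eigs lowest-frequency eigenpairs; row i of the eigenvector matrix encodes vertex i."""
    vals, vecs = np.linalg.eigh(laplacian)   # eigenvalues in ascending order
    return vals[:num_eigs], vecs[:, :num_eigs]


n = 64
vals, enc = intrinsic_encoding(cycle_graph_laplacian(n), num_eigs=5)
print(vals.round(4))   # 0, then pairs of equal eigenvalues (sine/cosine modes)
print(enc.shape)       # (64, 5): a 5-dimensional encoding per vertex
```

Because the encoding depends only on the connectivity of the discretized surface, it does not change when the surface is rigidly moved or isometrically bent, which is what makes it intrinsic.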

![Nonlinear dimension reduction

• DIPOLE algorithm

• Wagner et al, 2021, Ref. [SU-56]

• Corrects an initial embedding (e.g. calculated via Isomap) by minimizing

a loss functional with both a local, metric term and a global, topological term

based on persistent homology

• Unlike more ad hoc methods for measuring the

shape of data at multiple scales,

persistent homology is rooted in

algebraic topology and enjoys

strong theoretical foundations

29](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-29-2048.jpg)

![Nonlinear dimension reduction

• SpaceMAP algorithm

• Tao et al, ICML, 2022, Ref. [SU-61]

• Introduces the equivalent extended distance

• Makes it possible to match the

capacity between two spaces

of different dimensionality

• Performs hierarchical manifold

approximation, as real-world data

often has a hierarchical structure

30](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-30-2048.jpg)

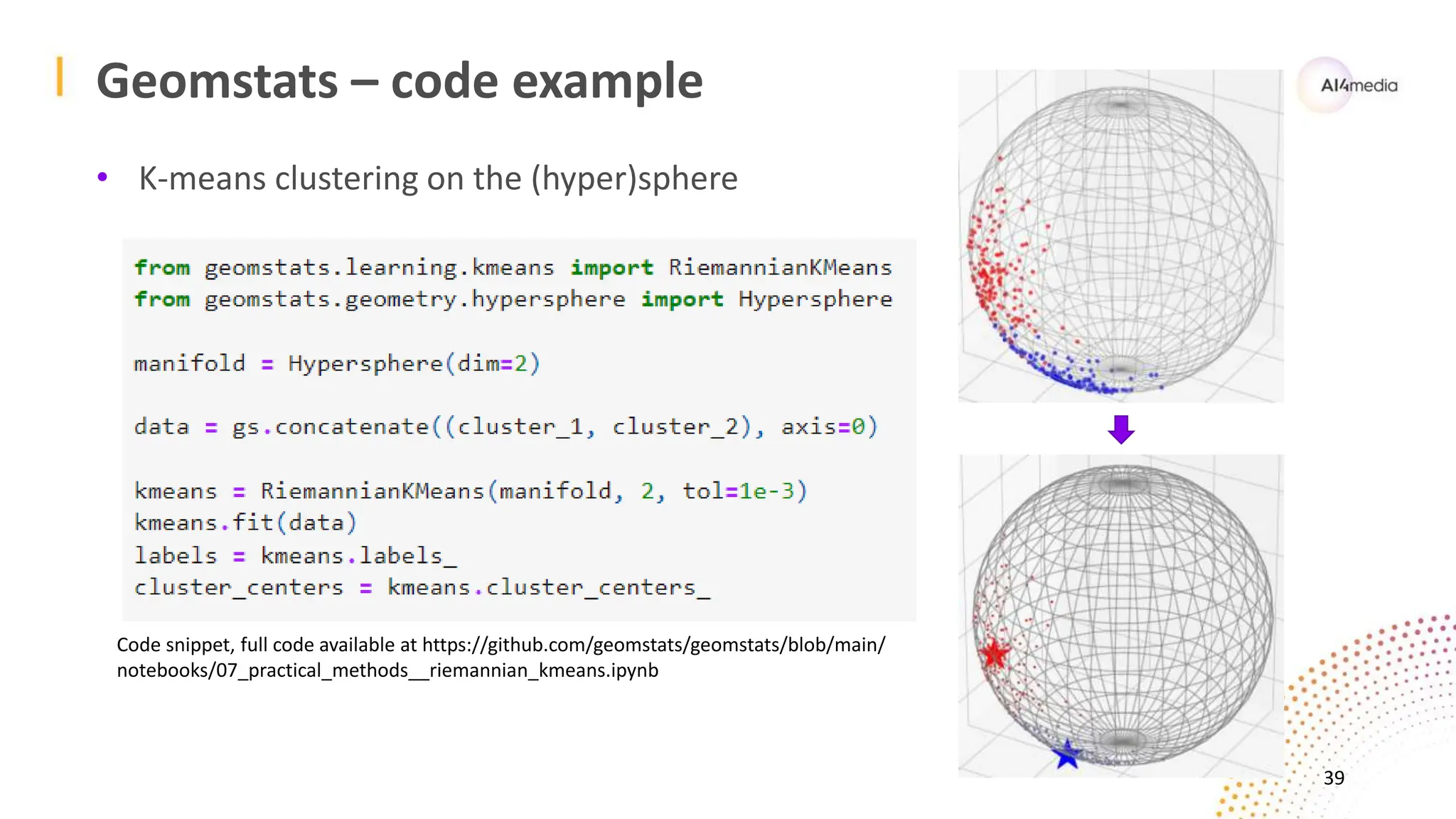

![Geomstats

• Supports roughly the same set of manifolds as geoopt

• Additionally also Kendall shape space [7] and statistical manifolds

• Statistical manifold is a Riemannian manifold where

each point is a probability distribution

(Binomial, Exponential, Normal, Poisson etc.)

• Implements some advanced operators on manifolds

• Levi-Civita connection, Christoffel symbols, Ricci curvature

• Also provides some operators for statistical analysis

• Frechet mean estimator, K-means and PCA

• Also provides methods from information geometry

• Calculates the Fisher information metric for a statistical manifold

• Ref. [8] provides a great introduction to manifolds and the geomstats package

38

Kendall shape space

for triangles](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-38-2048.jpg)
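For a statistical manifold, the Fisher information metric is the expected outer product of the score, g_ij(θ) = E[∂_i log p(x; θ) ∂_j log p(x; θ)]. The NumPy sketch below evaluates this expectation by numerical integration for the univariate normal family parameterized by (μ, σ) and compares it with the known closed form diag(1/σ², 2/σ²). It does not use geomstats; grid size and integration range are arbitrary choices.

```python
import numpy as np


def normal_pdf(x, mu, sigma):
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


def normal_score(x, mu, sigma):
    """Gradient of log N(x; mu, sigma) with respect to (mu, sigma), one row per parameter."""
    z = (x - mu) / sigma
    return np.stack([z / sigma, (z ** 2 - 1.0) / sigma])


def fisher_information_normal(mu, sigma, half_width=12.0, num=20001):
    """Fisher metric g_ij = E[d_i log p * d_j log p], computed by a Riemann sum over a wide grid."""
    x = np.linspace(mu - half_width * sigma, mu + half_width * sigma, num)
    dx = x[1] - x[0]
    score = normal_score(x, mu, sigma)             # shape (2, num)
    weights = normal_pdf(x, mu, sigma) * dx
    return (score * weights) @ score.T


sigma = 0.5
print(fisher_information_normal(mu=1.0, sigma=sigma))
print(np.diag([1.0 / sigma**2, 2.0 / sigma**2]))   # closed form for comparison
```

The resulting metric ds² = (dμ² + 2dσ²)/σ² is, after rescaling μ, a multiple of the hyperbolic half-plane metric, so the normal distributions form a hyperbolic statistical manifold.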

![Introduction & Motivation

• Standard recipe applied in transfer learning

• Finetune a pretrained model on the task-specific dataset

with different hyperparameter settings

• From the finetuned models, pick the one with the highest validation accuracy

• “Model soup” paper [Wortsman et al, 2022]

• Shows that merging multiple finetuned models gives

significantly better performance on datasets with distribution shifts

• The proposed ‘greedy soup’ algorithm averages a greedily selected subset of the finetuned models

• Our proposed “manifold mixing model soup” algorithm extends this idea

• Breaks models into several components (latent space manifolds)

• Does not simply average, but merges the selected models in an

optimal way (mixing coefficients are calculated by invoking an optimizer)

45](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-45-2048.jpg)
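The difference between greedy souping and a component-wise merge can be sketched on plain parameter dictionaries. The greedy loop follows the description of Wortsman et al.: visit models in order of held-out score and keep one only if the averaged soup does not get worse. The component-wise merge is a hypothetical illustration of the manifold-mixing idea in which each parameter group (component) gets its own mixing coefficients; here those coefficients are simply given, whereas the proposed algorithm obtains them with an optimizer. All function names and the toy `evaluate` score are assumptions.

```python
import numpy as np


def average(models, coeffs=None):
    """Weighted average of parameter dictionaries (uniform weights by default)."""
    coeffs = np.full(len(models), 1.0 / len(models)) if coeffs is None else np.asarray(coeffs, float)
    return {name: sum(c * m[name] for c, m in zip(coeffs, models)) for name in models[0]}


def greedy_soup(models, evaluate):
    """Visit models by decreasing held-out score; keep one only if the averaged soup does not get worse."""
    ranked = sorted(models, key=evaluate, reverse=True)
    soup = [ranked[0]]
    best = evaluate(ranked[0])
    for candidate in ranked[1:]:
        score = evaluate(average(soup + [candidate]))
        if score >= best:
            soup.append(candidate)
            best = score
    return average(soup)


def componentwise_mix(models, component_of, coeffs):
    """Hypothetical component-wise merge: coeffs[i, c] weights model i inside component c."""
    coeffs = np.asarray(coeffs, dtype=float)
    return {name: sum(coeffs[i, component_of[name]] * m[name] for i, m in enumerate(models))
            for name in models[0]}


# Toy "finetuned models" with two parameter groups; the toy score prefers parameters near zero
models = [{"backbone": np.array([1.0]), "head": np.array([2.0])},
          {"backbone": np.array([-1.0]), "head": np.array([0.0])},
          {"backbone": np.array([5.0]), "head": np.array([5.0])}]


def evaluate(params):
    return -sum(float(np.abs(v).sum()) for v in params.values())


print(greedy_soup(models, evaluate))
print(componentwise_mix(models, {"backbone": 0, "head": 1}, [[0.5, 0.0], [0.5, 1.0], [0.0, 0.0]]))
```

In this toy run the greedy soup keeps the first two models, while the component-wise merge with suitable per-component coefficients reaches a better toy score, illustrating why separate coefficients per component can help.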

![Related work

• “Model soup” algorithm [Wortsman et al, 2022]

• Proposes two variants of souping:

“uniform soup” and “greedy soup”

• Uniform soup does a simple average

over all finetuned models

• “WiSE-FT” [Wortsman et al, 2022a]

• The fused model is an interpolation of the

base model (zero-shot model)

and the model finetuned on the target task

• “Model Ratatouille” [Rame et al, 2023]

• “Recycles” multiple finetunes

of a base model

46](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-46-2048.jpg)
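Both the uniform soup and WiSE-FT reduce to simple parameter arithmetic, sketched here on dictionaries of NumPy arrays: the uniform soup averages all finetuned models, and WiSE-FT linearly interpolates between the zero-shot weights and the finetuned weights with a mixing coefficient `alpha`. Model Ratatouille's procedure is not reproduced, and the toy parameter values are arbitrary.

```python
import numpy as np


def uniform_soup(models):
    """Uniform soup: plain average of the parameters of all finetuned models."""
    return {name: np.mean([m[name] for m in models], axis=0) for name in models[0]}


def wise_ft(zero_shot, finetuned, alpha):
    """WiSE-FT style weight interpolation: (1 - alpha) * zero-shot + alpha * finetuned."""
    return {name: (1.0 - alpha) * zero_shot[name] + alpha * finetuned[name] for name in zero_shot}


base = {"w": np.array([0.0, 0.0])}
finetunes = [{"w": np.array([1.0, 2.0])}, {"w": np.array([3.0, 0.0])}]
print(uniform_soup(finetunes))
print(wise_ft(base, finetunes[0], alpha=0.5))
```

Setting alpha = 0 recovers the zero-shot model and alpha = 1 the finetuned model.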

![Related work – comparison of different strategies

47

Image courtesy of “Model ratatouille” paper [Rame et al, 2023]](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-47-2048.jpg)

![Experiments & Evaluation – Results

• Measures

• X-Axis is accuracy on original

dataset used for finetuning

• Y-Axis is average accuracy over

datasets with distribution shifts

• Comparison against

• Individual finetuned models

(green markers)

• Greedy soup & uniform soup

from [Wortsman et al, 2022]

(blue & magenta circle)

51](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-51-2048.jpg)

![References

• Note: all references of the form “SU-<index>” correspond to reference number <index> in

my survey paper (see [1]). E.g. reference [SU-8] refers to reference [8] in my survey paper [1].

• [1] H. Fassold, “A survey of manifold learning and its applications for multimedia”, ICVSP, 2023,

Online available at https://arxiv.org/abs/2310.12986

• [2] Wu et al, “A Comprehensive Survey on Graph Neural Networks”, IEEE TNNLS, 2021

• [3] Sanchez-Lengeling et al, “A Gentle Introduction to Graph Neural Networks”, Distill, 2021, https://distill.pub/2021/gnn-intro/

• [4] https://mathoverflow.net/questions/253339/how-to-solve-optimization-problems-on-manifolds

• [5] Luo et al, “Robust Metric Learning on Grassmann Manifolds with Generalization Guarantees”,

AAAI, 2019, https://dl.acm.org/doi/pdf/10.1609/aaai.v33i01.33014480

• [6] Harandi et al, "Extrinsic methods for coding and dictionary learning on grassmann manifolds",

IJCV, 2015, https://arxiv.org/abs/1401.8126

57](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-57-2048.jpg)

![References

• [7] Guigui et al, “Parallel Transport on Kendall Shape Spaces”, GSI, 2021

https://inria.hal.science/hal-03160677/file/parallel_transport_shape.pdf

• [8] Guigui et al, “Introduction to Riemannian Geometry and Geometric Statistics: From Basic

Theory to Implementation with Geomstats”, FTML Journal,

https://inria.hal.science/hal-03766900/document

58](https://image.slidesharecdn.com/fassold-mmasia2023-tutorial-geometricdl-part1-231220093527-b43e90ce/75/Fassold-MMAsia2023-Tutorial-GeometricDL-Part1-pptx-58-2048.jpg)