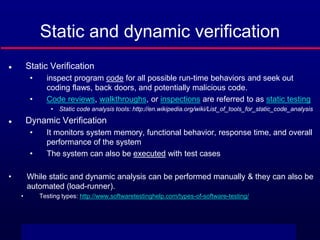

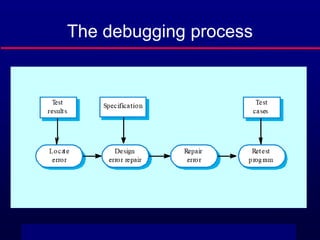

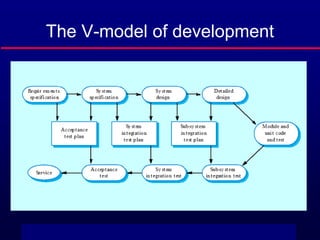

This document summarizes key topics from Chapter 22 of Ian Sommerville's Software Engineering, 7th edition, which covers verification and validation. It discusses the differences between verification and validation, static and dynamic verification methods, testing and debugging processes, the V-model development approach, components of a software test plan, and checklists for inspections. The verification process aims to ensure software is built correctly, while validation ensures the right product is built to meet customer needs. Both are essential across the entire software development lifecycle.

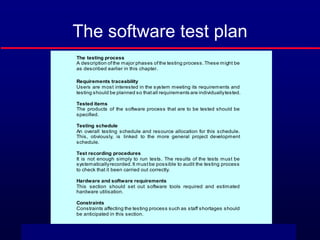

![©Ian Sommerville 2004, Software Engineering, 7th edition, Chapter 22, Slide 10](https://image.slidesharecdn.com/dr-240213180554-4fe43e7f/85/Dr-Jonathan-Software-verification-validation-ppt-10-320.jpg)

The structure of a software test plan

• The testing process.

• Requirements traceability.

• Tested items.

• Testing schedule.

• Test recording procedures (Jing, Bugzilla, etc.).

• Hardware and software requirements.

• Constraints.

We will do usability and functionality testing and write test cases.

• Learning assignment (non-graded)

• My test cases: http://jmp.sh/J0KW3Vz
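The test plan components listed on the slide can be sketched as a small data structure. The example below is a minimal illustration in Python, not a format taken from the slides or from Sommerville's text: the class names `SoftwareTestPlan` and `PlannedTestCase`, the field names, and the sample requirement IDs are all hypothetical. It shows one way requirements traceability could be checked, by mapping each requirement to the test cases that cover it and reporting requirements with no coverage.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PlannedTestCase:
    """One planned test case (hypothetical structure for illustration)."""
    case_id: str
    kind: str                    # e.g. "usability" or "functionality"
    requirement_ids: List[str]   # requirements this case exercises


@dataclass
class SoftwareTestPlan:
    """Fields mirror the test plan components listed on the slide."""
    testing_process: str
    tested_items: List[str]
    schedule: str
    recording_procedure: str
    hw_sw_requirements: List[str]
    constraints: List[str]
    requirements: List[str] = field(default_factory=list)
    test_cases: List[PlannedTestCase] = field(default_factory=list)

    def traceability(self) -> Dict[str, List[str]]:
        """Map each requirement ID to the IDs of the test cases covering it."""
        matrix: Dict[str, List[str]] = {req: [] for req in self.requirements}
        for case in self.test_cases:
            for req in case.requirement_ids:
                # A requirement that is referenced but not listed is added too.
                matrix.setdefault(req, []).append(case.case_id)
        return matrix

    def untested_requirements(self) -> List[str]:
        """Return requirements that no test case covers."""
        return [req for req, cases in self.traceability().items() if not cases]


# Sample data is invented for the example.
plan = SoftwareTestPlan(
    testing_process="Usability and functionality testing",
    tested_items=["login form"],
    schedule="week 3",
    recording_procedure="Bugzilla",
    hw_sw_requirements=["web browser"],
    constraints=["one tester"],
    requirements=["R1", "R2"],
    test_cases=[PlannedTestCase("TC1", "functionality", ["R1"])],
)
print(plan.untested_requirements())  # ['R2']
```

Here `R2` is reported because no planned test case references it, which is the kind of gap a traceability check is meant to expose before testing begins.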