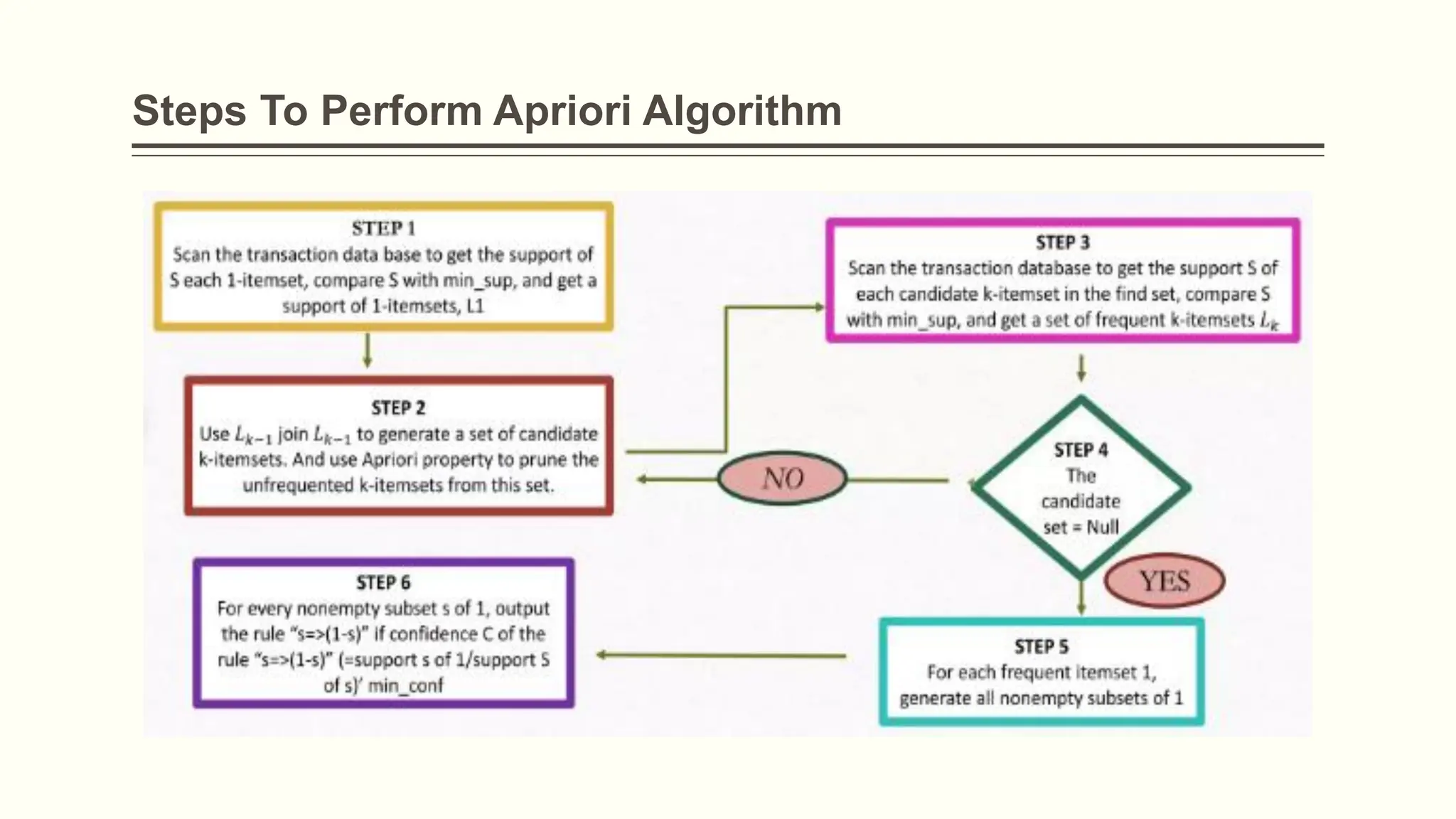

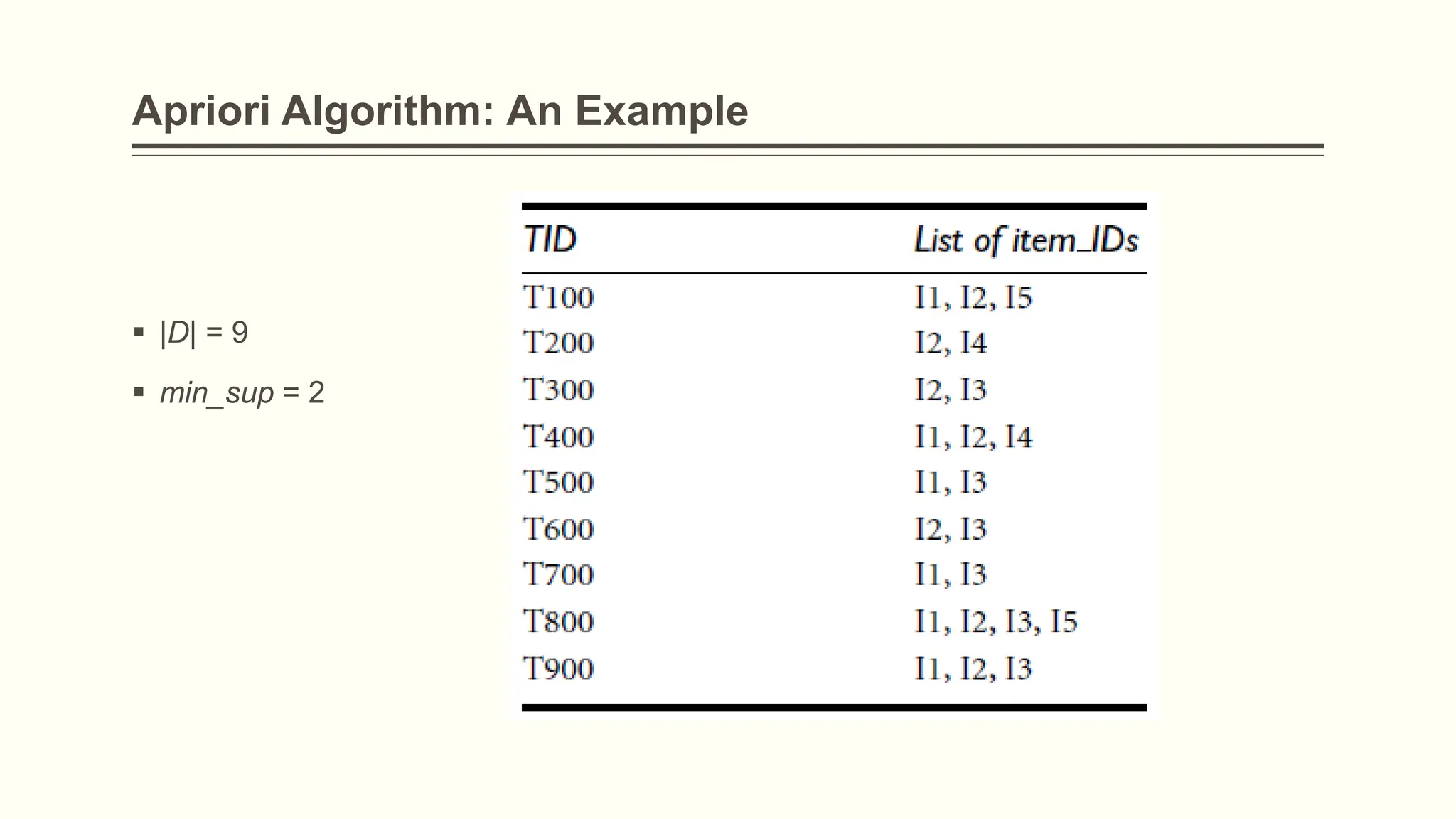

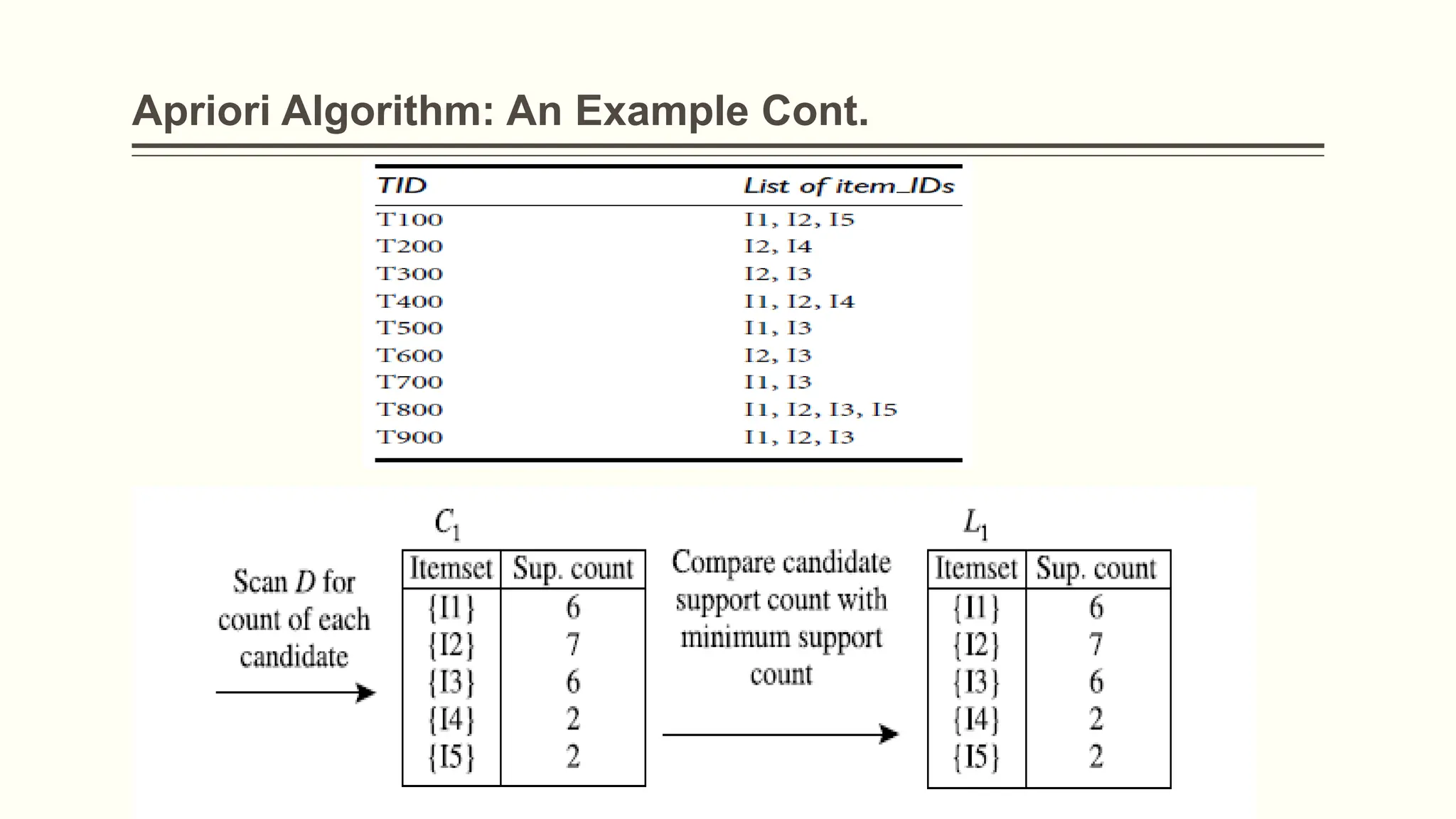

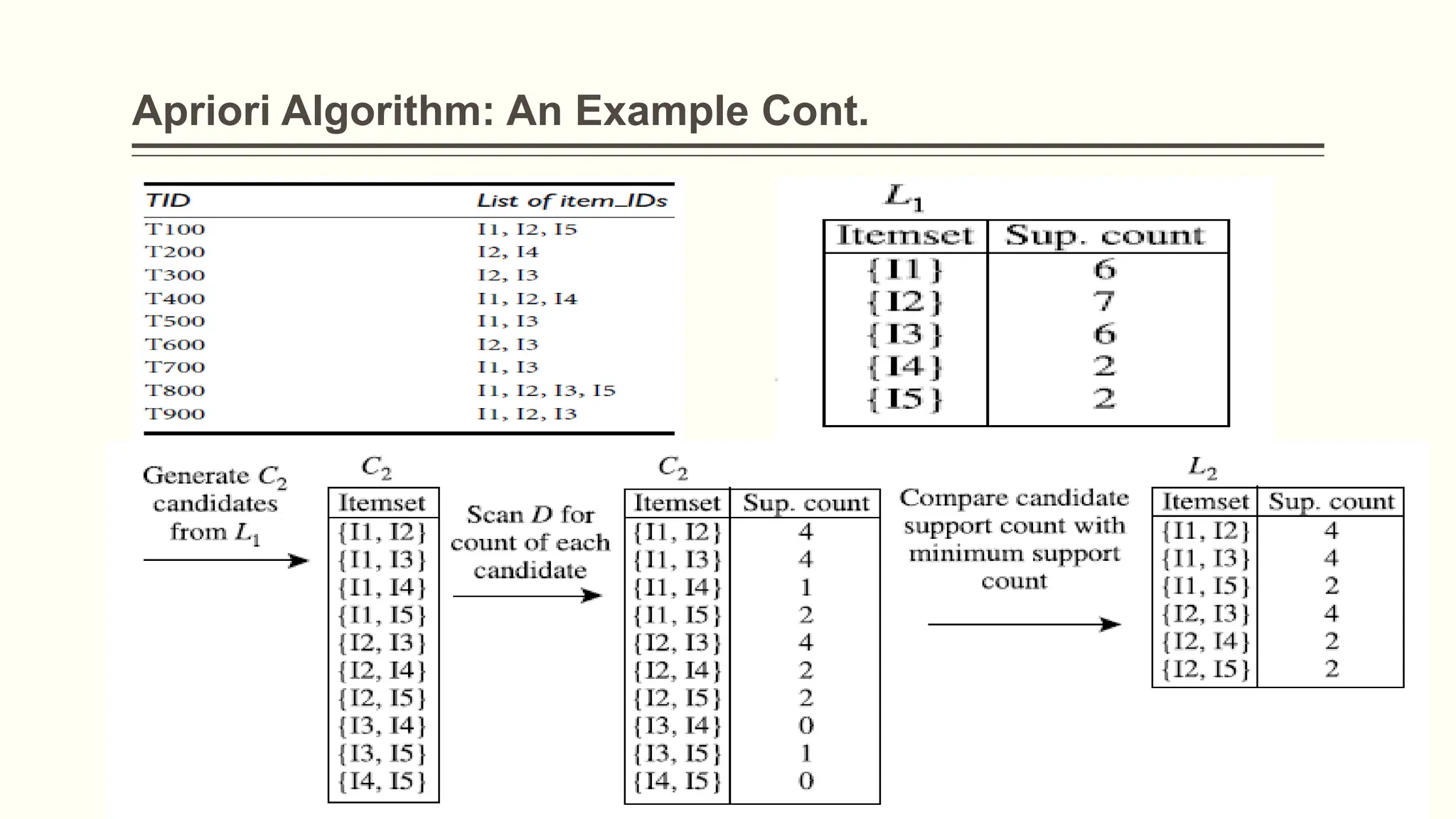

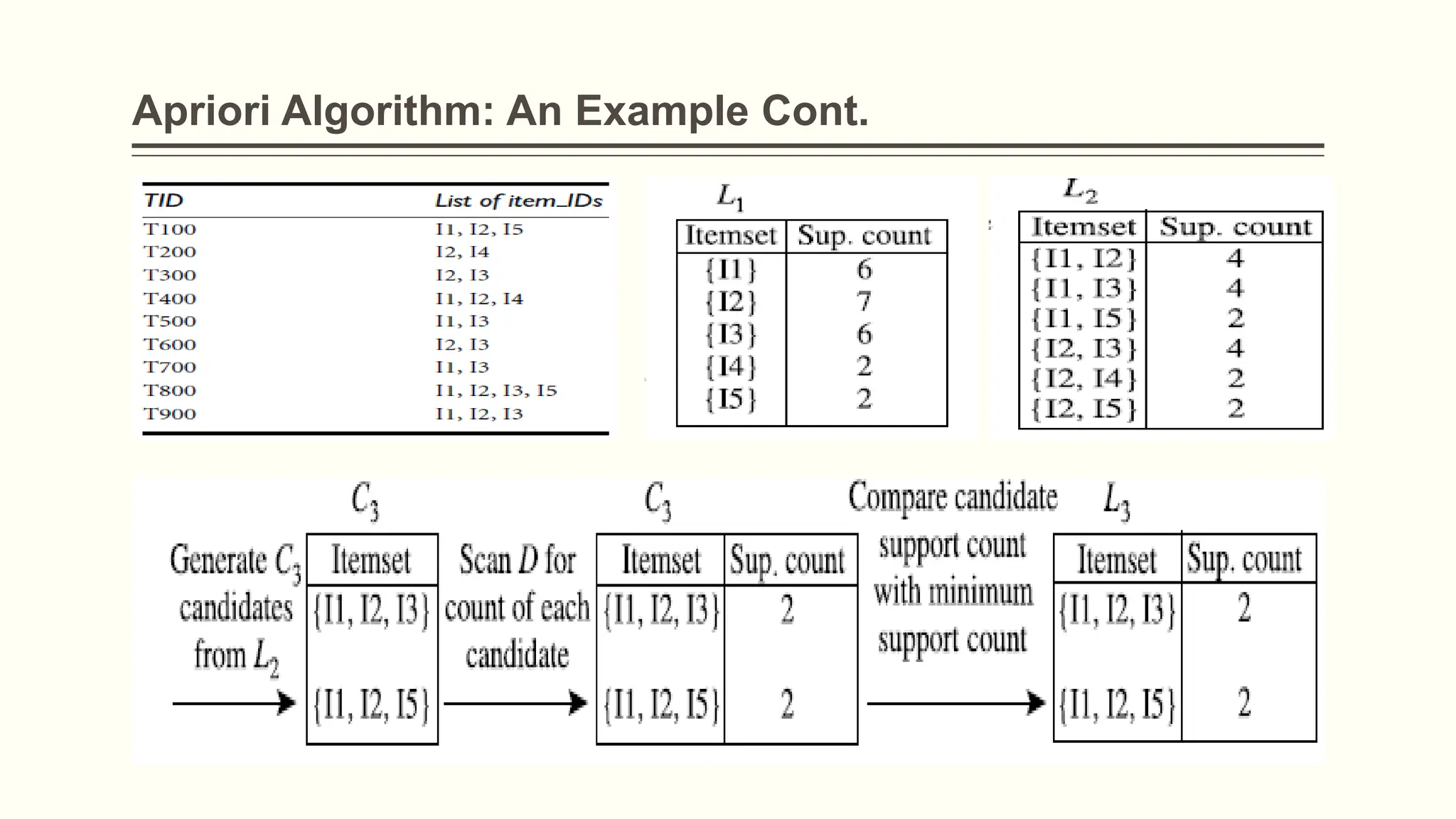

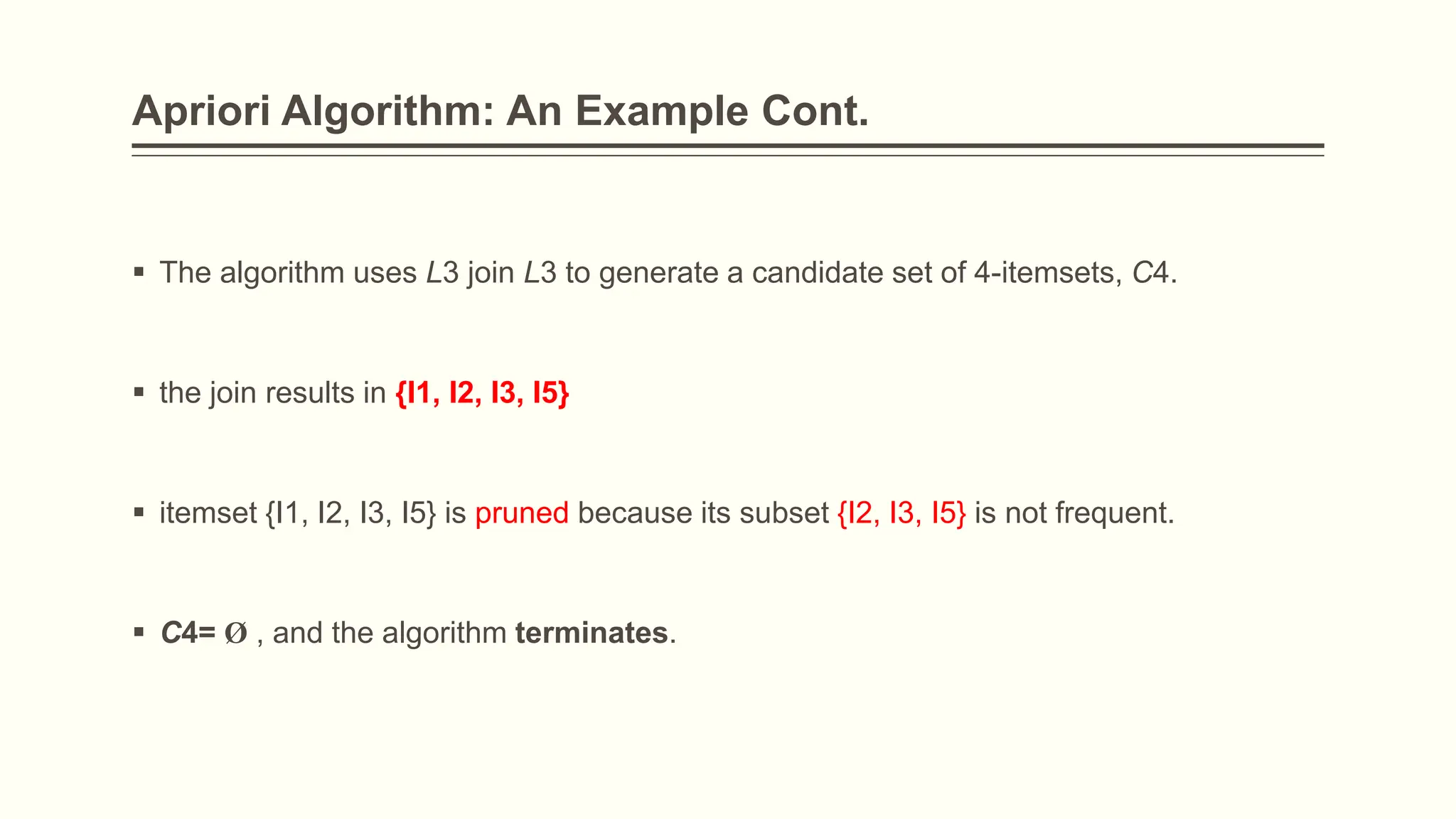

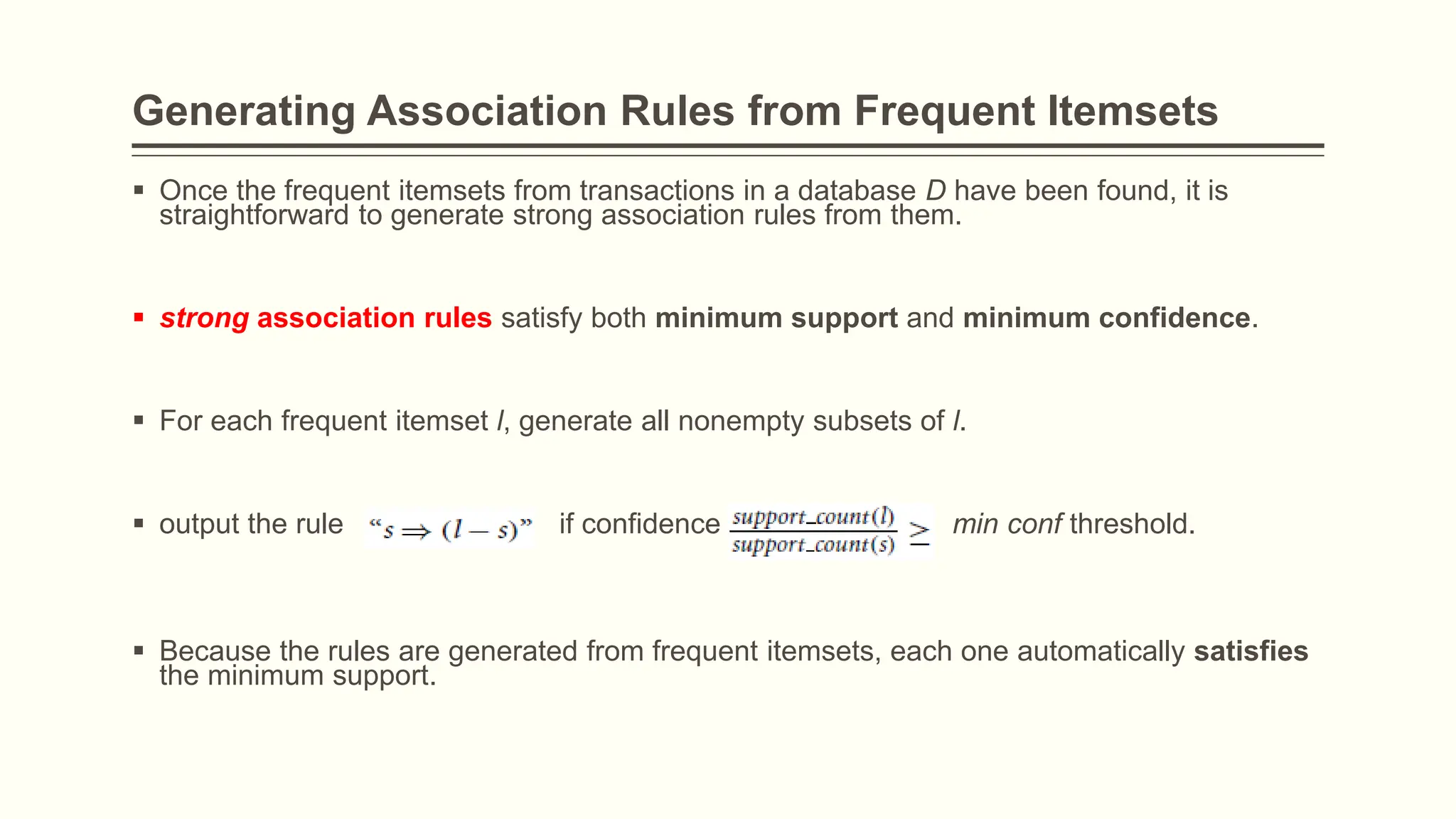

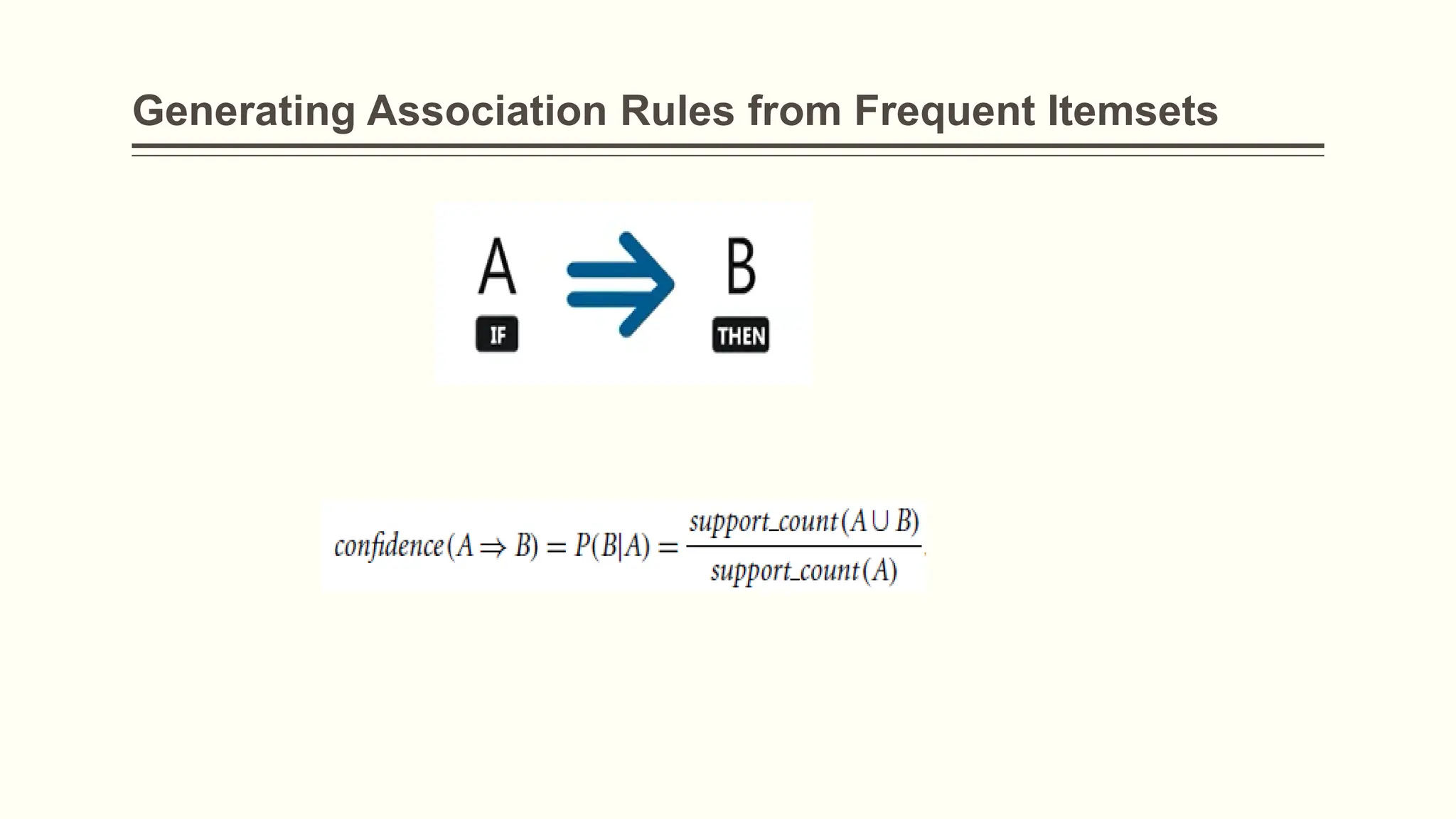

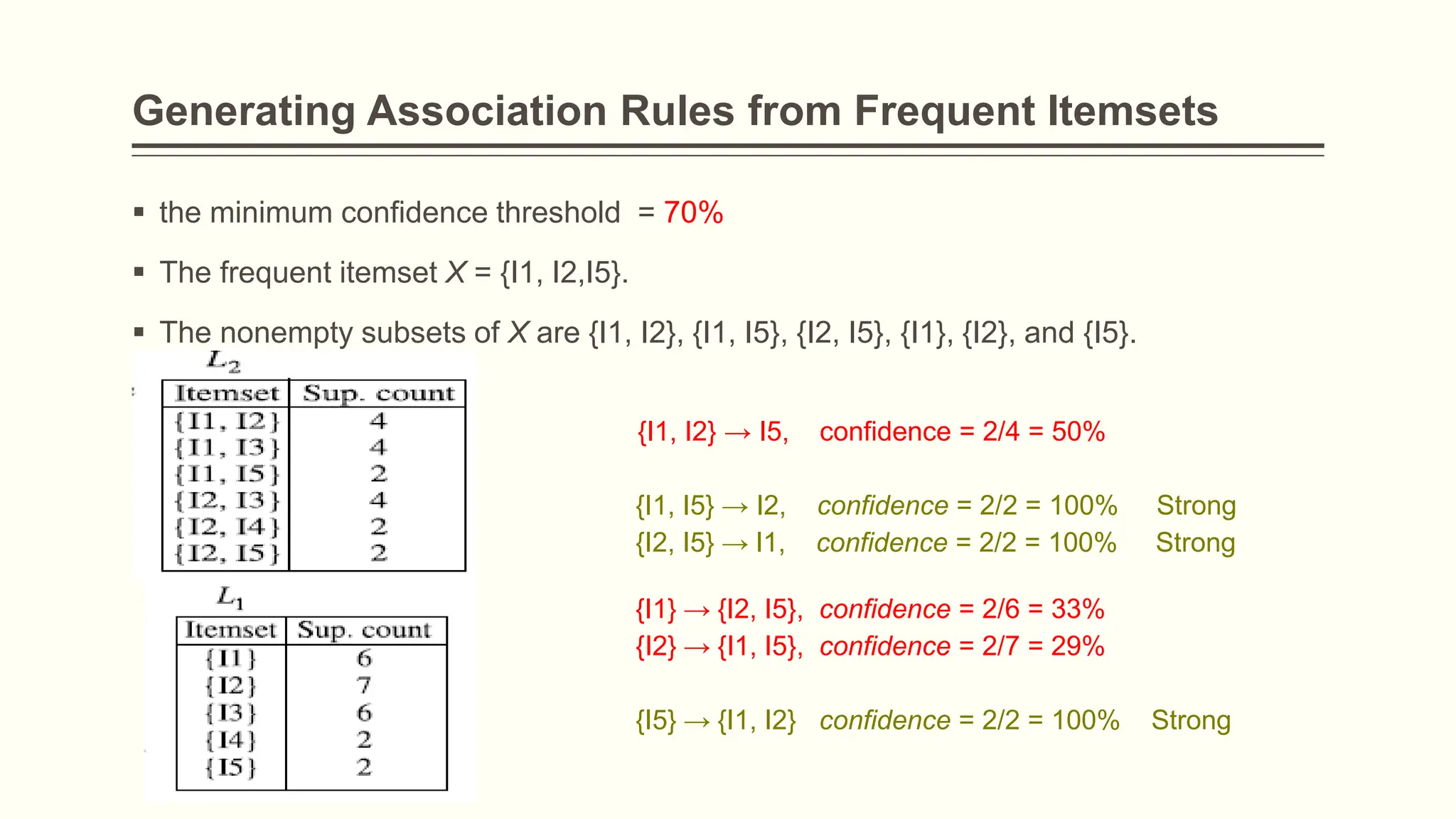

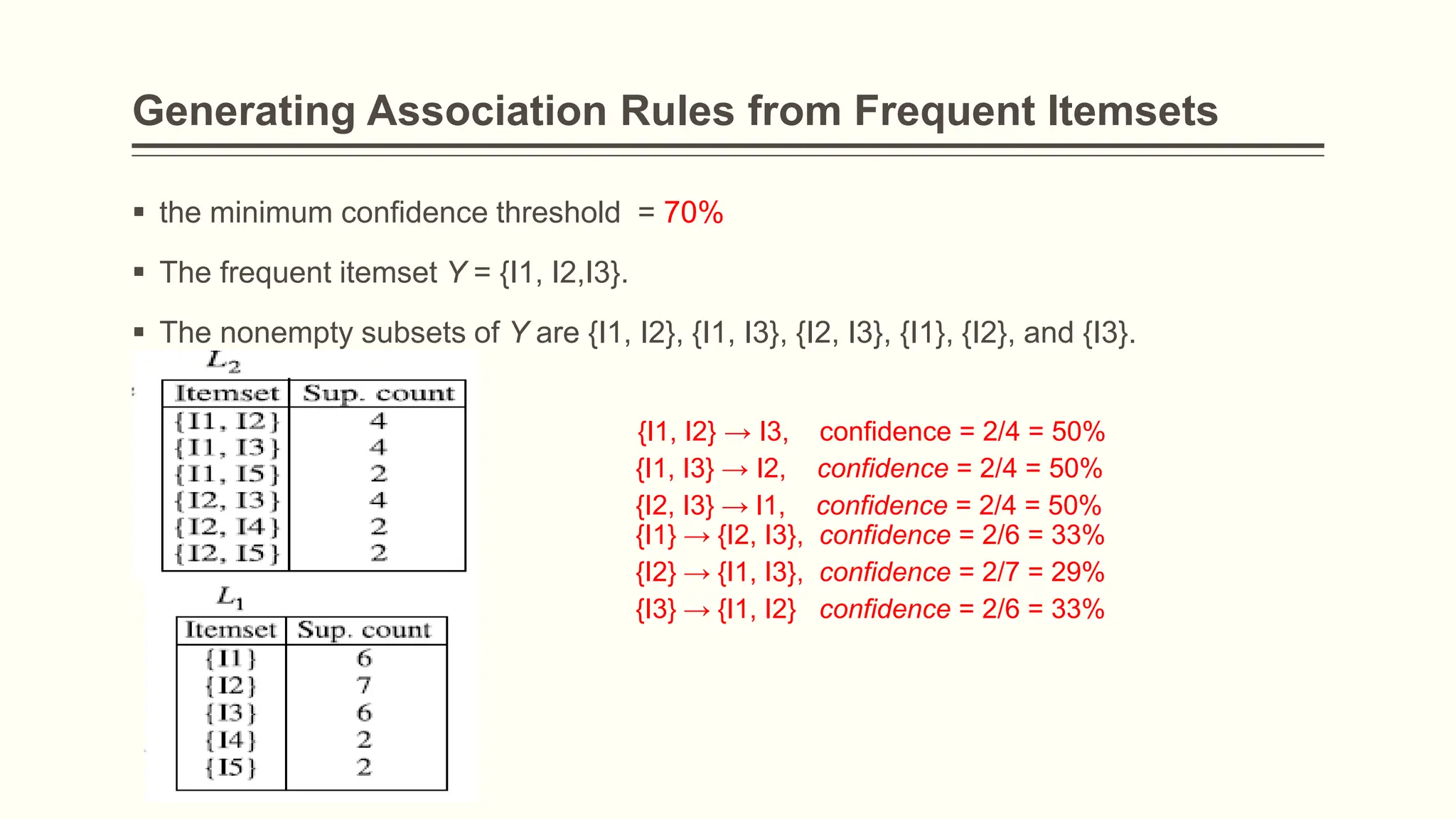

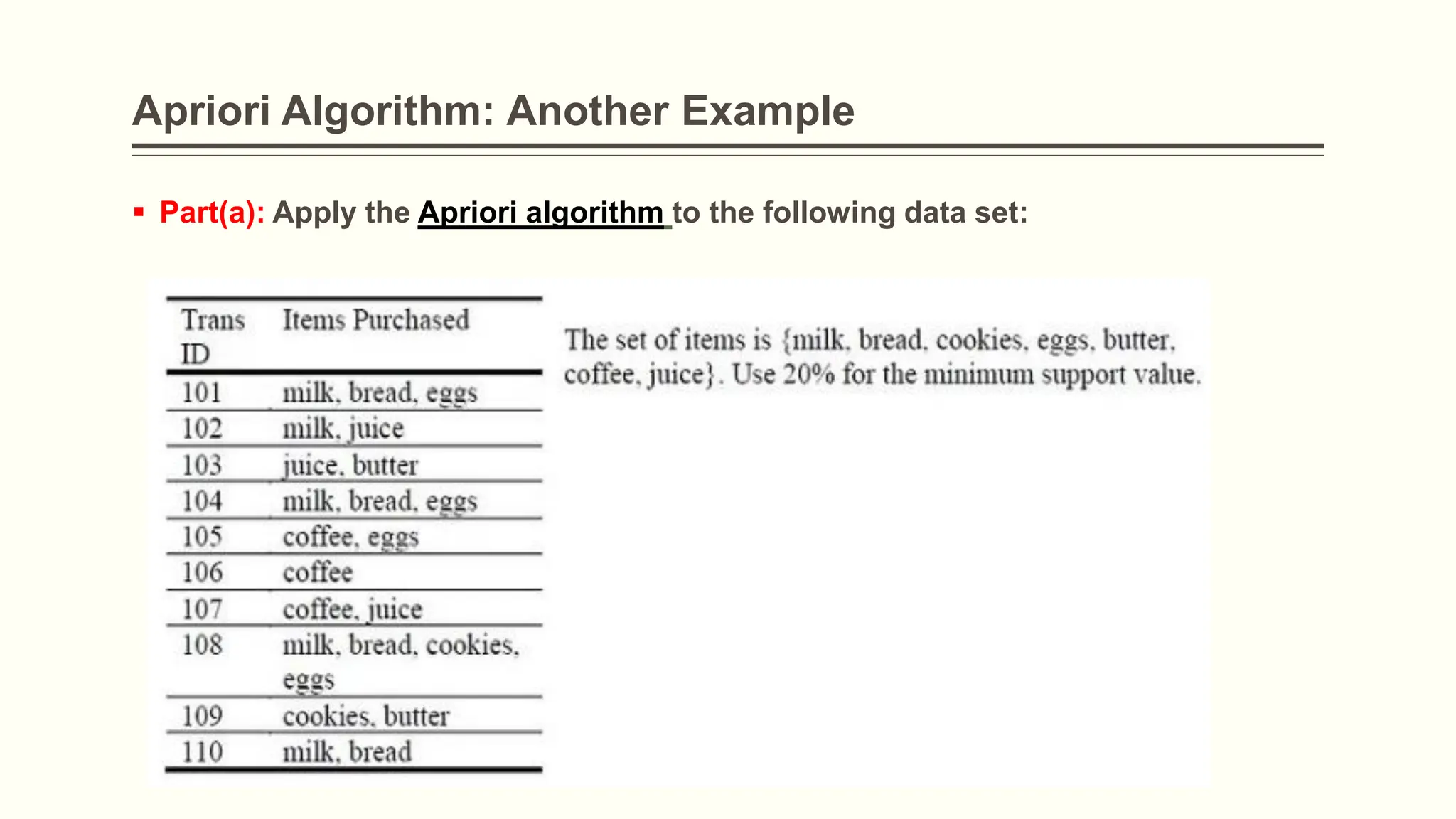

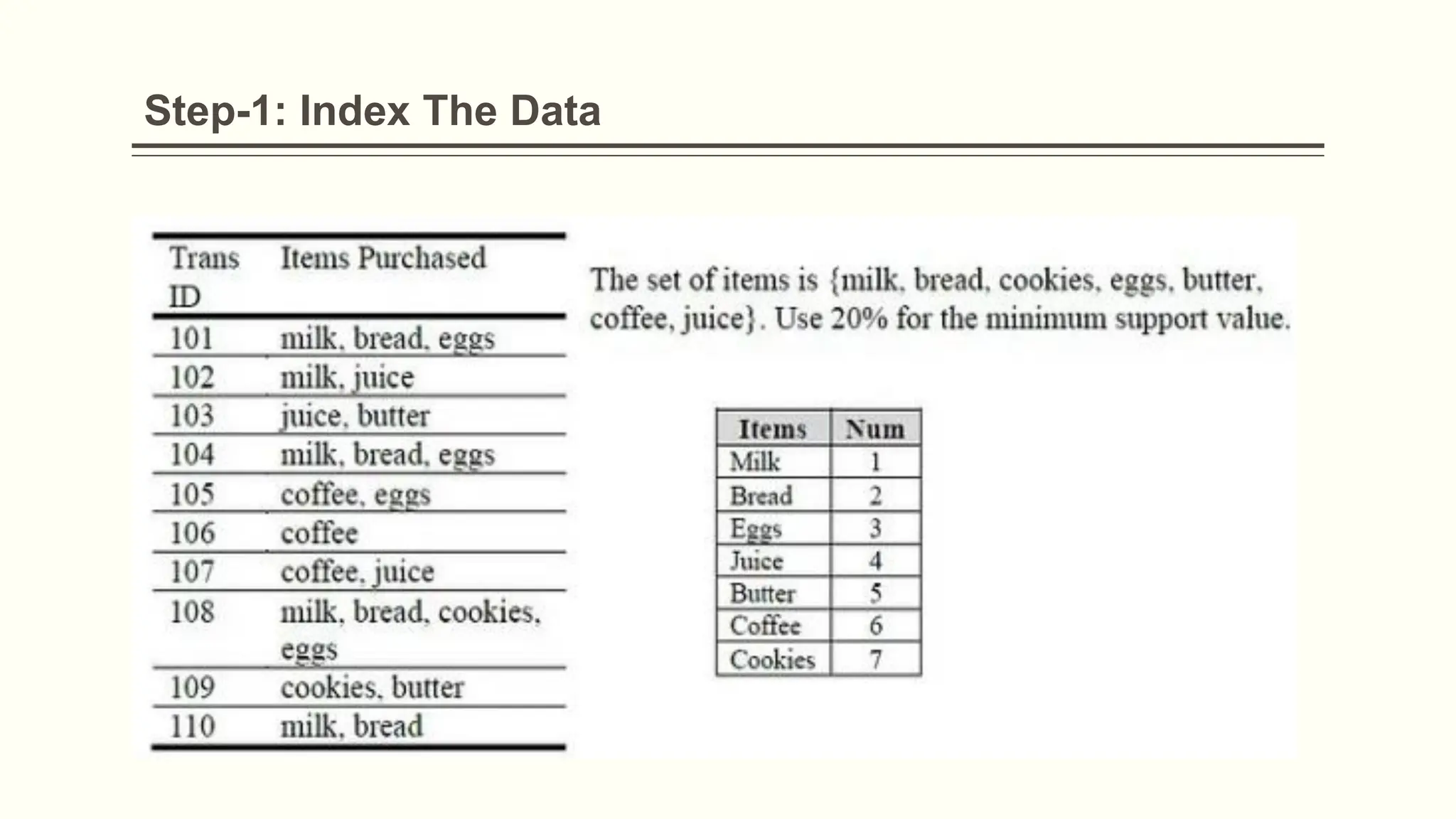

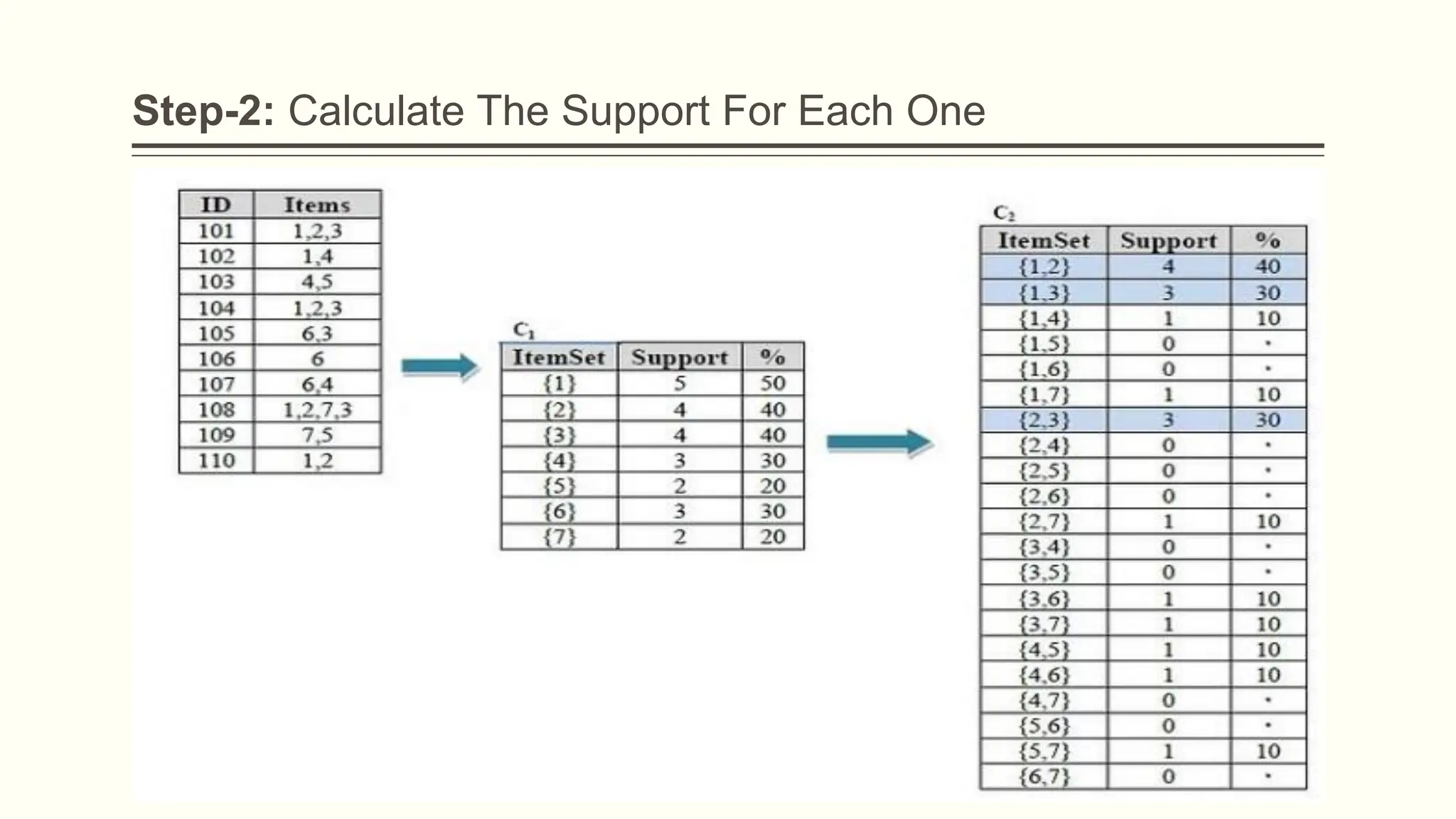

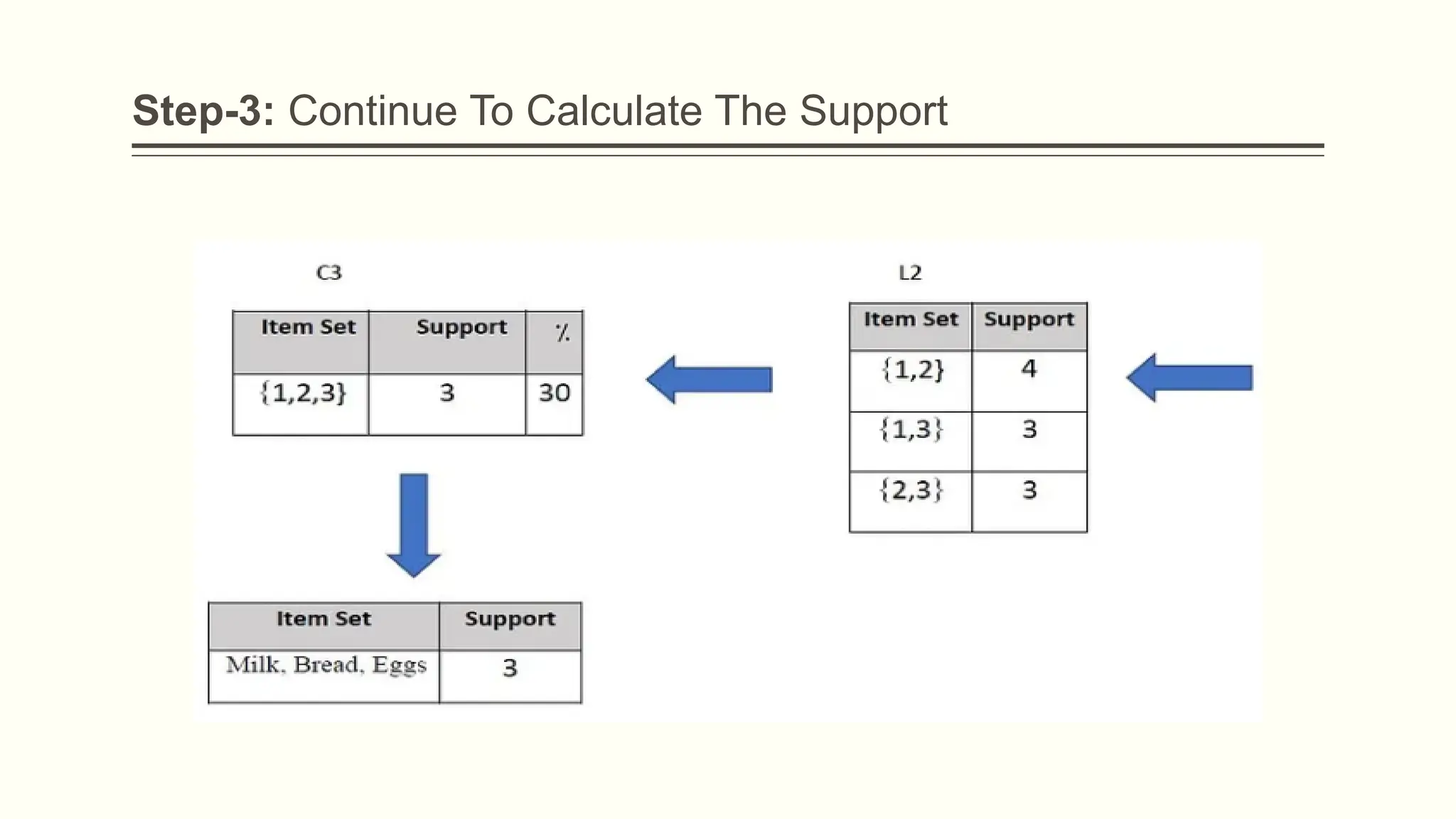

This document covers the Apriori algorithm for mining frequent itemsets. It defines the key concepts, including frequent itemsets (itemsets whose support meets a minimum support threshold) and the Apriori property (every non-empty subset of a frequent itemset must itself be frequent). A first example walks through the steps of the algorithm on a set of transactions and identifies the itemsets that satisfy the minimum support threshold. Association rules are then generated from those frequent itemsets and kept only if they meet a minimum confidence threshold. A second example applies the full Apriori algorithm to a dataset, finding its frequent itemsets and deriving the strong association rules.
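
The two phases summarized above, finding frequent itemsets level by level and then deriving rules from them, can be sketched in a few lines of Python. This is a minimal illustration rather than the slides' own code: the function names `apriori` and `association_rules`, the grocery transactions, and the choice to express minimum support as an absolute count are all assumptions made for the example.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support count} for every frequent itemset.

    min_support is an absolute transaction count (an assumption of this sketch).
    """
    transactions = [frozenset(t) for t in transactions]

    # Level 1: count single items and keep the frequent ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: n for s, n in counts.items() if n >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        prev = set(current)
        # Join step: merge frequent (k-1)-itemsets into size-k candidates.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune step (Apriori property): every (k-1)-subset must be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, k - 1))
        }
        # Scan the transactions to count the surviving candidates.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        current = {s: n for s, n in counts.items() if n >= min_support}
        frequent.update(current)
        k += 1
    return frequent


def association_rules(frequent, min_confidence):
    """Return (antecedent, consequent, confidence) for every strong rule."""
    rules = []
    for itemset, count in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in combinations(itemset, r):
                lhs = frozenset(lhs)
                # confidence(A -> B) = support(A and B) / support(A)
                confidence = count / frequent[lhs]
                if confidence >= min_confidence:
                    rules.append((lhs, itemset - lhs, confidence))
    return rules


# Hypothetical transactions, not taken from the slides.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

frequent = apriori(transactions, min_support=3)
rules = association_rules(frequent, min_confidence=0.8)
for lhs, rhs, confidence in rules:
    print(sorted(lhs), "->", sorted(rhs), f"confidence={confidence:.2f}")
```

With these made-up transactions, four single items and four pairs are frequent at a support count of 3. The only 3-itemset that survives the prune step, {bread, diapers, milk}, appears in just two transactions and is dropped. The one rule that clears a confidence of 0.8 is beer -> diapers, because every transaction containing beer also contains diapers. The lookup `frequent[lhs]` is safe precisely because of the Apriori property: any subset of a frequent itemset was already recorded at an earlier level.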