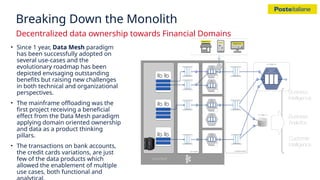

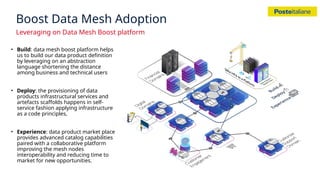

Poste Italiane is undergoing a digital transformation, adopting a data mesh architecture to move beyond its centralized, monolithic data management and to improve efficiency and innovation. The shift decentralizes data ownership, which supports diverse customer journeys across the company's extensive service offerings. The company aims to achieve faster time to market and stronger data product capabilities while fostering a culture of transparency and collaboration.