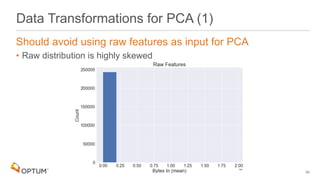

This document describes a proposed approach for ranking cyber threat indicators of compromise (IOCs) matched in enterprise data, based on reconstruction error from principal component analysis (PCA). The approach involves extracting IOCs from threat feeds, matching them to features engineered from proxy logs and other data, decomposing the matched features with PCA, calculating a reconstruction error score to rank the matches, and supplementing the top-ranked matches with contextual details to aid investigation. Future work may include adding an autoencoder model, incorporating analyst feedback, and using additional data sources.
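To make the ranking step concrete, here is a minimal sketch of scoring rows by PCA reconstruction error. It is not the presenters' implementation: the function name `pca_reconstruction_error`, the standardization step, the choice of one retained component, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np


def pca_reconstruction_error(X, n_components):
    """Return the squared reconstruction error of each row of X.

    Assumption: features are standardized before PCA; the source does not
    say how (or whether) the matched features are scaled.
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant columns
    Z = (X - mean) / std

    # Rows of Vt are the principal directions of the standardized data.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    components = Vt[:n_components]

    # Project onto the retained components, then map back to feature space.
    Z_hat = Z @ components.T @ components
    return np.sum((Z - Z_hat) ** 2, axis=1)


# Synthetic stand-in for matched feature rows: most rows follow one linear
# pattern, and row 7 is made to deviate from it.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t, -t]) + 0.01 * rng.normal(size=(200, 3))
X[7] = [4.0, -4.0, 4.0]

errors = pca_reconstruction_error(X, n_components=1)
ranking = np.argsort(errors)[::-1]  # highest reconstruction error first
```

Rows that the retained components reconstruct poorly rise to the top of `ranking`, which is the sense in which reconstruction error can serve as a ranking score for matches.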

![Feature Engineering Implementation (2)](https://image.slidesharecdn.com/read-180531210834/85/Cyber-Threat-Ranking-using-READ-22-320.jpg)

Example function call for feature generation:

    # Calculate feature: stats for in and out fields
    keys = ['dst', 'date']
    aggs = ['min', 'max', 'sum', 'mean']
    in_out_stats = agg_num_columns(keys, columns=['in', 'out'], aggs=aggs)

    # Register the table names
    in_out_stats.registerTempTable('io_stats')

    # Add the table name to the list of tables to be
    # passed to the join function
    tables.append('io_stats')
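The slide calls `agg_num_columns` without showing its definition. As a rough illustration of what such a helper might compute, the sketch below groups plain Python dictionaries by the key fields and applies each aggregate to each numeric column. The name `agg_num_columns_sketch`, the `rows` argument, the `<column>_<aggregate>` output naming, and the sample records are hypothetical; the original appears to operate on Spark tables instead.

```python
from collections import defaultdict
from statistics import mean

# Supported aggregate names mapped to plain Python functions.
AGG_FUNCS = {"min": min, "max": max, "sum": sum, "mean": mean}


def agg_num_columns_sketch(rows, keys, columns, aggs):
    """Group rows by `keys` and aggregate each column with each function.

    Hypothetical stand-in for the slide's agg_num_columns; output fields
    are named '<column>_<aggregate>', which is an assumption.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row)

    result = []
    for key_values, members in groups.items():
        out = dict(zip(keys, key_values))
        for col in columns:
            values = [m[col] for m in members]
            for agg in aggs:
                out[f"{col}_{agg}"] = AGG_FUNCS[agg](values)
        result.append(out)
    return result


# Made-up proxy log records, for illustration only.
rows = [
    {"dst": "a.example", "date": "2018-05-01", "in": 10, "out": 2},
    {"dst": "a.example", "date": "2018-05-01", "in": 30, "out": 4},
    {"dst": "b.example", "date": "2018-05-01", "in": 5, "out": 1},
]

stats = agg_num_columns_sketch(
    rows,
    keys=["dst", "date"],
    columns=["in", "out"],
    aggs=["min", "max", "sum", "mean"],
)
```

Each output record holds one row per (`dst`, `date`) pair with eight derived features, which matches the shape suggested by the `keys`, `columns`, and `aggs` arguments on the slide.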