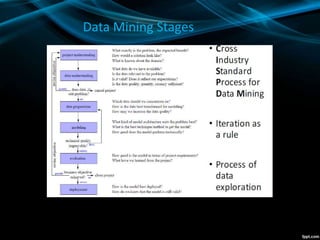

This document summarizes a machine learning project for an insurance company to predict customer purchasing behavior. It discusses:

- The objective is to predict which policy number a customer will purchase, and at what price, using historical customer data.

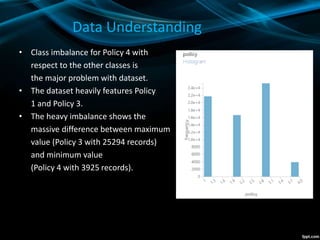

- The datasets include customer session and purchase histories. The classes are imbalanced: some policies have far more records than others.

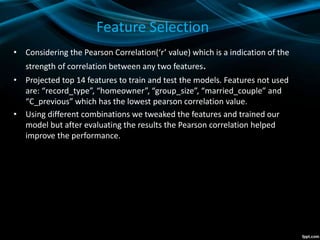
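One quick way to quantify this imbalance is to count records per policy and divide the largest class count by the smallest. The sketch below assumes the purchased policy number is available as a plain list of labels. The function name `class_distribution` and the labels are hypothetical and are not taken from the project's data.

```python
from collections import Counter


def class_distribution(labels):
    """Return (per-class counts, imbalance ratio) for a list of class labels.

    The imbalance ratio is majority count / minority count; 1.0 means balanced.
    """
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must not be empty")
    majority = max(counts.values())
    minority = min(counts.values())
    return dict(counts), majority / minority


# Hypothetical purchased-policy labels, for illustration only.
purchased_policy = ["P1"] * 8 + ["P2"] * 3 + ["P3"] * 1
counts, ratio = class_distribution(purchased_policy)
print(counts)  # {'P1': 8, 'P2': 3, 'P3': 1}
print(ratio)   # 8.0
```

A ratio well above 1 suggests that a classifier trained on the raw data may favour the majority policies. This is the motivation for the oversampling step described below.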

- Data preprocessing included removing duplicates and outliers and normalizing the data. Feature selection used Pearson correlation to identify the most important features.

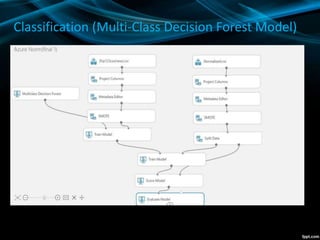

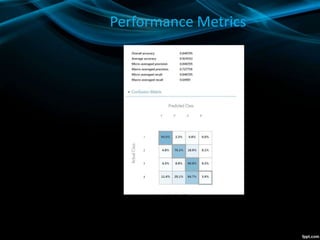
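The preprocessing and feature-selection steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the project's code. The summary does not say how outliers were detected, so the 1.5 * IQR rule is an assumption. "Normalization" is taken to mean min-max scaling to [0, 1]. Pearson-based selection is assumed to rank features by the absolute correlation with the target, using a hypothetical cut-off of 0.3. All function and feature names are hypothetical.

```python
import math
import statistics


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row (rows must be hashable, e.g. tuples)."""
    seen, unique = set(), []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def drop_outliers_iqr(rows, col, k=1.5):
    """Drop rows whose value in column `col` falls outside [Q1 - k*IQR, Q3 + k*IQR] (assumed rule)."""
    q1, _, q3 = statistics.quantiles([r[col] for r in rows], n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in rows if low <= r[col] <= high]


def min_max(values):
    """Rescale a column to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def pearson(x, y):
    """Pearson correlation coefficient r; returns 0.0 if either input has zero variance."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def select_features(features, target, threshold=0.3):
    """Keep features with |r| >= threshold against the target, strongest first (assumed criterion)."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    kept = [name for name, s in scores.items() if s >= threshold]
    return sorted(kept, key=scores.get, reverse=True)


# Hypothetical feature columns and target, for illustration only.
features = {
    "tenure": [1, 2, 3, 4],
    "noise": [1, -1, -1, 1],
    "partial": [1, 3, 2, 4],
}
print(select_features(features, [2, 4, 6, 8]))  # ['tenure', 'partial']
```

Pearson's r only captures linear relationships. A feature with a strong non-linear effect on the target can therefore score low and be dropped by a filter like this one.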

- SMOTE oversampling was used to address the class imbalance in the policy-number classification problem. Two models, a decision forest and a neural network, were evaluated for both classification and regression.

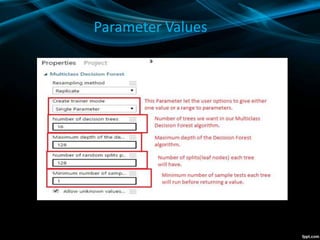

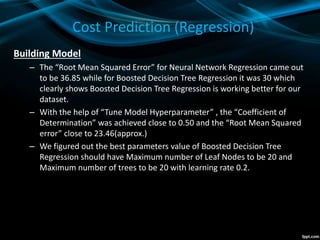

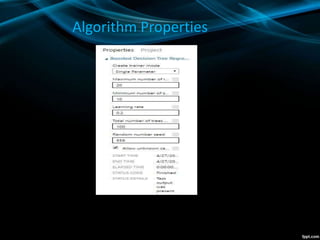

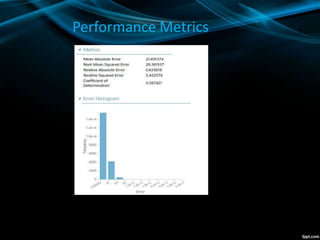
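SMOTE creates synthetic minority-class samples by interpolating between a minority sample and one of its k nearest minority-class neighbours. The pure-Python sketch below illustrates that idea. It is not the implementation the project used, because the summary does not name a library. The function names `smote` and `oversample`, the default `k=5`, and the choice to top every class up to the majority-class count are all assumptions.

```python
import random


def _sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic samples from a list of minority-class feature vectors.

    Each synthetic point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority-class neighbours.
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest neighbours of `base`, excluding itself.
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: _sq_dist(base, minority[j]),
        )[:k]
        nb = minority[rng.choice(neighbours)]
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nb)])
    return synthetic


def oversample(X, y, k=5, seed=0):
    """Append SMOTE samples so every class reaches the majority-class count."""
    by_class = {}
    for xi, yi in zip(X, y):
        by_class.setdefault(yi, []).append(list(xi))
    target = max(len(rows) for rows in by_class.values())
    X_out, y_out = [list(xi) for xi in X], list(y)
    for label, rows in by_class.items():
        need = target - len(rows)
        if need > 0:
            X_out.extend(smote(rows, need, k=k, seed=seed))
            y_out.extend([label] * need)
    return X_out, y_out


# Hypothetical two-feature data: class "B" is the minority.
X = [[0, 0], [1, 0], [0, 1], [1, 1], [5, 5], [6, 5]]
y = ["A", "A", "A", "A", "B", "B"]
X_res, y_res = oversample(X, y, seed=42)
print(y_res.count("B"))  # 4
```

In practice, a maintained implementation such as imbalanced-learn's `SMOTE` would normally be used instead. Whichever implementation is used, oversampling is usually applied to the training split only, so that synthetic points do not leak into the evaluation data.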

- The decision forest model performed best for classification, while boosted decision