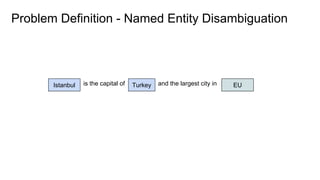

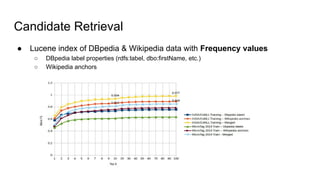

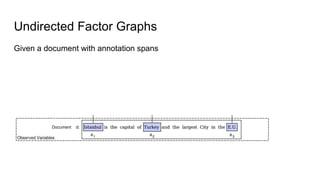

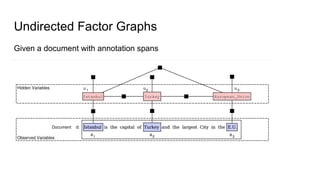

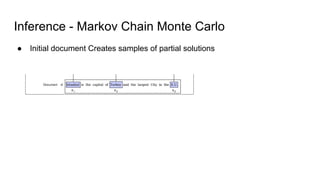

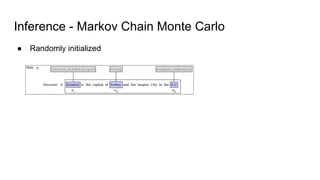

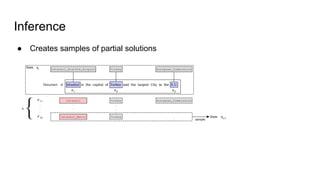

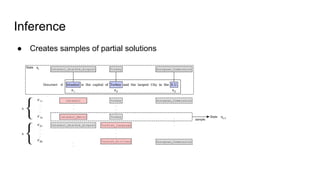

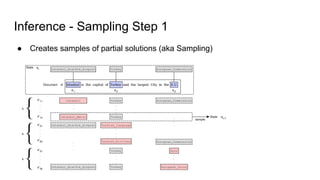

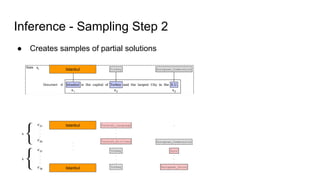

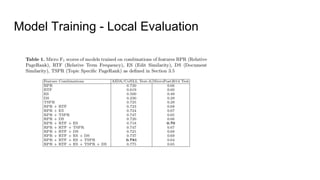

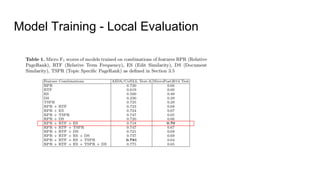

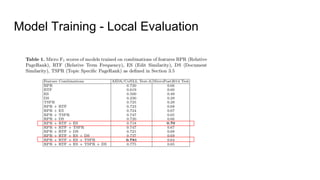

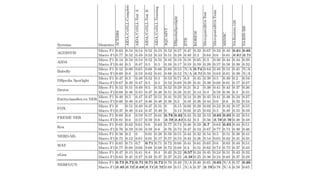

The document presents an approach to collective entity disambiguation that combines textual and graph-based features. An undirected factor graph models the dependencies between entity annotations, with features that include PageRank, term frequency, edit distance, and document similarity. The model is trained on labeled datasets and evaluated against state-of-the-art systems with the GERBIL framework, where it achieves comparable results.
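
To make the idea of collective scoring concrete, here is a minimal sketch in Python. It is not the authors' implementation. It assumes a log-linear score with one unary factor per mention (a PageRank prior plus an edit-distance similarity) and one pairwise factor per pair of chosen entities (a link-based coherence indicator). The candidate data, the `links` field, the weight values, and all function names are hypothetical and chosen only for illustration. The weights are set by hand here, whereas the source describes a trained model. Term frequency and document similarity are left out for brevity, and exhaustive enumeration stands in for proper factor graph inference.

```python
from itertools import combinations, product


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via row-by-row dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def unary_score(mention, cand, weights):
    """Unary factor: popularity prior plus surface-form similarity."""
    label = cand["label"]
    dist = edit_distance(mention.lower(), label.lower())
    similarity = 1.0 - dist / max(len(mention), len(label), 1)
    return weights["pagerank"] * cand["pagerank"] + weights["edit"] * similarity


def pairwise_score(a, b, weights):
    """Pairwise factor: reward two chosen entities that link to each other."""
    linked = a["id"] in b["links"] or b["id"] in a["links"]
    return weights["coherence"] if linked else 0.0


def disambiguate(mentions, candidates, weights):
    """Return the jointly best entity id per mention (brute-force search)."""
    best_ids, best_score = None, float("-inf")
    for assignment in product(*(candidates[m] for m in mentions)):
        score = sum(unary_score(m, c, weights) for m, c in zip(mentions, assignment))
        score += sum(pairwise_score(a, b, weights) for a, b in combinations(assignment, 2))
        if score > best_score:
            best_ids, best_score = [c["id"] for c in assignment], score
    return best_ids


# Hypothetical toy data: PageRank values and links are made up.
mentions = ["Paris", "France"]
candidates = {
    "Paris": [
        {"id": "Paris_Texas", "label": "Paris", "pagerank": 0.6, "links": set()},
        {"id": "Paris_France", "label": "Paris", "pagerank": 0.5, "links": {"France"}},
    ],
    "France": [
        {"id": "France", "label": "France", "pagerank": 0.9, "links": {"Paris_France"}},
        {"id": "France_Gall", "label": "France Gall", "pagerank": 0.2, "links": set()},
    ],
}
weights = {"pagerank": 1.0, "edit": 1.0, "coherence": 1.0}  # hand-set, not learned

joint = disambiguate(mentions, candidates, weights)
local_only = disambiguate(mentions, candidates, {**weights, "coherence": 0.0})
print(joint)       # ['Paris_France', 'France']
print(local_only)  # ['Paris_Texas', 'France']
```

In this toy setup, scoring each mention on its own picks `Paris_Texas` because of its slightly higher made-up prior, while the pairwise factor moves the joint decision to `Paris_France`. Enumerating every assignment grows exponentially with the number of mentions, so a realistic system would likely rely on approximate inference instead.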