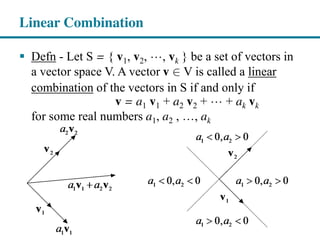

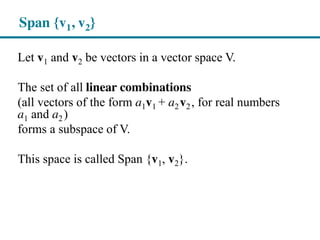

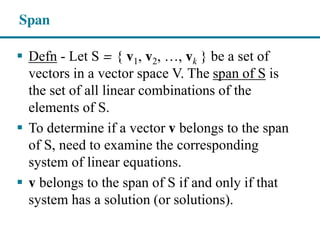

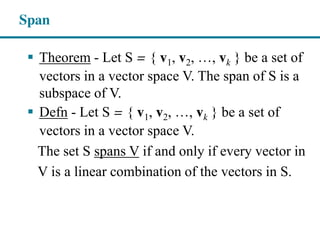

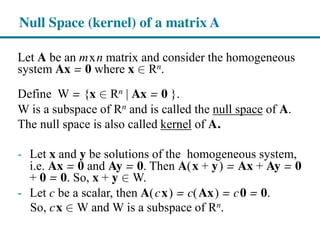

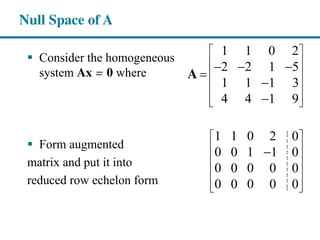

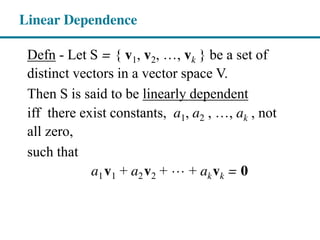

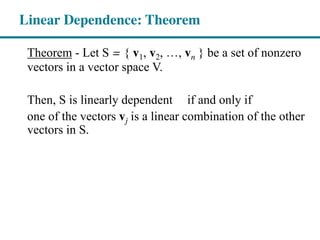

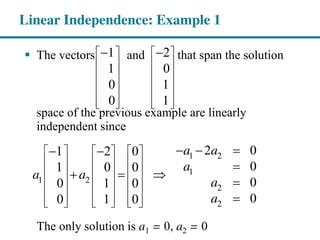

This document discusses linear combinations and linear independence of vectors. A linear combination of vectors v1, …, vk is any vector of the form a1v1 + … + akvk with scalar coefficients ai. A set of vectors is linearly dependent if one of its vectors can be written as a linear combination of the others, and linearly independent otherwise. The span of a set of vectors is the set of all their linear combinations. Spanning and independence are separate properties: a set of exactly n vectors in R^n spans R^n if and only if it is linearly independent, but two independent vectors in R^3, for example, span only a plane. The null space of a matrix A is the set of all vectors x that solve the homogeneous equation Ax = 0. Examples show how to determine whether a set of vectors is linearly dependent or independent.

![Span](https://image.slidesharecdn.com/math211chapter4part2-180103165953/85/Chapter-4-Vector-Spaces-Part-2-Slides-By-Pearson-9-320.jpg)

Are the vectors $w$ and $z$ in $\operatorname{Span}\{v_1, v_2\}$, where $v_1 = [1, 0, 3]$, $v_2 = [-1, 1, -3]$, $w = [1, 2, 3]$, and $z = [2, 3, 4]$?
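To answer the span question for v1 = [1, 0, 3], v2 = [-1, 1, -3], w = [1, 2, 3], and z = [2, 3, 4], one can try to solve a1 v1 + a2 v2 = w (and likewise for z) by row reduction. The sketch below is a minimal illustration, assuming vectors are plain Python lists; the helper name `solve_combination` is hypothetical. It uses `fractions.Fraction` so that the elimination is exact.

```python
from fractions import Fraction


def solve_combination(vectors, target):
    """Return coefficients c with c[0]*vectors[0] + ... + c[k-1]*vectors[k-1] == target,
    or None if target is not in the span.

    Gauss-Jordan elimination on the augmented matrix [v1 ... vk | target],
    with exact Fraction arithmetic. Free variables, if any, are set to 0.
    """
    n, k = len(target), len(vectors)
    # Row i of the augmented matrix: i-th component of each v_j, then target[i].
    rows = [[Fraction(v[i]) for v in vectors] + [Fraction(target[i])] for i in range(n)]
    pivot_cols, r = [], 0
    for c in range(k):
        # Pick a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, n) if rows[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: x_c is a free variable
        rows[r], rows[p] = rows[p], rows[r]
        pivot = rows[r][c]
        rows[r] = [x / pivot for x in rows[r]]
        # Clear column c in every other row.
        for i in range(n):
            if i != r and rows[i][c] != 0:
                f = rows[i][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivot_cols.append(c)
        r += 1
        if r == n:
            break
    # A row [0 ... 0 | nonzero] means the system has no solution.
    if any(all(x == 0 for x in row[:k]) and row[k] != 0 for row in rows):
        return None
    coeffs = [Fraction(0)] * k
    for i, c in enumerate(pivot_cols):
        coeffs[c] = rows[i][k]
    return coeffs


v1, v2 = [1, 0, 3], [-1, 1, -3]
print(solve_combination([v1, v2], [1, 2, 3]))  # [Fraction(3, 1), Fraction(2, 1)]
print(solve_combination([v1, v2], [2, 3, 4]))  # None
```

With these vectors, w = 3v1 + 2v2, so w is in the span. For z, the reduced system contains the row 0 = -2, so z is not in the span.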

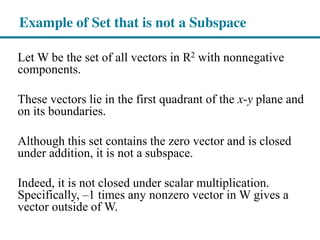

![Example of Set that is not a Subspace](https://image.slidesharecdn.com/math211chapter4part2-180103165953/85/Chapter-4-Vector-Spaces-Part-2-Slides-By-Pearson-11-320.jpg)

The set of all vectors in $\mathbb{R}^3$ of the form $[3t+1, t, -2t]$: the zero vector is not of that form.

The set of all vectors in $\mathbb{R}^2$ of the form $[t, t^2]$: the sum of two such vectors need not have that form. Try $t = 1$ and $t = 2$.
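Both counterexamples can be checked by testing membership directly. In this sketch the helper names `in_first_set` and `in_second_set` are hypothetical; each test works because the parameter t is fixed by one coordinate (t = x[1] for [3t+1, t, -2t], and t = x[0] for [t, t^2]).

```python
def in_first_set(x):
    """True if x == [3t + 1, t, -2t] for some t (t must equal x[1])."""
    t = x[1]
    return list(x) == [3 * t + 1, t, -2 * t]


def in_second_set(x):
    """True if x == [t, t*t] for some t (t must equal x[0])."""
    t = x[0]
    return list(x) == [t, t * t]


# First set: the zero vector would need t = 0, but then the first entry is 1.
print(in_first_set([0, 0, 0]))  # False

# Second set: t = 1 and t = 2 give [1, 1] and [2, 4]; their sum is [3, 5].
p, q = [1, 1], [2, 4]
s = [a + b for a, b in zip(p, q)]
print(in_second_set(p), in_second_set(q), in_second_set(s))  # True True False
```

The specific pair matters: [0, 0] + [1, 1] = [1, 1] does have the form [t, t^2], so one failing pair such as t = 1 and t = 2 is what shows the set is not closed under addition.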

![Linear Dependence: Matrix form](https://image.slidesharecdn.com/math211chapter4part2-180103165953/85/Chapter-4-Vector-Spaces-Part-2-Slides-By-Pearson-17-320.jpg)

Let $S = \{v_1, v_2, \ldots, v_k\}$ be a set of distinct vectors in a vector space $V$. Then $S$ is linearly dependent if and only if the homogeneous system $Ax = 0$ has infinitely many solutions, where $A = [\,v_1 \;\, v_2 \;\, \cdots \;\, v_k\,]$ is the matrix whose columns are the vectors $v_i$, and $x \in \mathbb{R}^k$ has entries $x_i = a_i$ ($i = 1, \ldots, k$), the coefficients of the linear combination $a_1 v_1 + \cdots + a_k v_k$.
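The matrix criterion can be turned into a rank test: Ax = 0 has a nontrivial solution exactly when rank(A) is smaller than the number of columns k. The following is a minimal sketch of that rank test (a standard equivalent of the slide's criterion, not the slide's own wording); `rank` and `is_linearly_dependent` are hypothetical helper names, and the example vectors are illustrative, chosen so that v3 = v1 + v2.

```python
from fractions import Fraction


def rank(columns):
    """Rank of the matrix whose columns are the given vectors (exact arithmetic)."""
    # Row i of A holds the i-th component of every column vector.
    m = [[Fraction(v[i]) for v in columns] for i in range(len(columns[0]))]
    r = 0  # number of pivots found so far
    for c in range(len(columns)):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot in column c: x_c is a free variable
        m[r], m[p] = m[p], m[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break  # every row already has a pivot
    return r


def is_linearly_dependent(vectors):
    """S is dependent iff Ax = 0 has a nontrivial solution, i.e. rank(A) < k."""
    return rank(vectors) < len(vectors)


# Illustrative vectors in R^3, chosen so that v3 = v1 + v2.
v1, v2, v3 = [1, 2, 0], [0, 1, 1], [1, 3, 1]
print(is_linearly_dependent([v1, v2, v3]))  # True
print(is_linearly_dependent([v1, v2]))      # False
```

When rank(A) < k, at least one variable is free, so Ax = 0 has infinitely many solutions, which matches the wording of the criterion.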

![Linear Independence: Matrix form](https://image.slidesharecdn.com/math211chapter4part2-180103165953/85/Chapter-4-Vector-Spaces-Part-2-Slides-By-Pearson-21-320.jpg)

Let $S = \{v_1, v_2, \ldots, v_k\}$ be a set of distinct vectors in a vector space $V$. Then $S$ is linearly independent if and only if the homogeneous system $Ax = 0$ has the unique solution $x = 0$, where $A = [\,v_1 \;\, v_2 \;\, \cdots \;\, v_k\,]$ is the matrix whose columns are the vectors $v_i$, and $x \in \mathbb{R}^k$ has entries $x_i = a_i$ ($i = 1, \ldots, k$), the coefficients of the linear combination $a_1 v_1 + \cdots + a_k v_k$.
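Both matrix-form criteria rest on one standard identity: multiplying $A$ by $x$ forms the linear combination of the columns of $A$ with the entries of $x$ as coefficients.

$$
Ax
= \begin{bmatrix} v_1 & v_2 & \cdots & v_k \end{bmatrix}
  \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_k \end{bmatrix}
= x_1 v_1 + x_2 v_2 + \cdots + x_k v_k .
$$

So $Ax = 0$ is exactly the equation $a_1 v_1 + \cdots + a_k v_k = 0$ with $x_i = a_i$. If some $x \neq 0$ solves it, then so does $cx$ for every scalar $c$; this is why a homogeneous system has either only the trivial solution (independence) or infinitely many solutions (dependence).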

![Linear Independence: Example 2](https://image.slidesharecdn.com/math211chapter4part2-180103165953/85/Chapter-4-Vector-Spaces-Part-2-Slides-By-Pearson-24-320.jpg)

Let $V$ be $\mathbb{R}^4$ and $v_1 = [1, 0, 1, 2]$, $v_2 = [0, 1, 1, 2]$, and $v_3 = [1, 1, 1, 3]$. Determine whether $S = \{v_1, v_2, v_3\}$ is linearly independent or linearly dependent.

Setting $a_1 v_1 + a_2 v_2 + a_3 v_3 = 0$ and comparing components gives the homogeneous system

$$
\begin{aligned}
a_1 + a_3 &= 0 \\
a_2 + a_3 &= 0 \\
a_1 + a_2 + a_3 &= 0 \\
2a_1 + 2a_2 + 3a_3 &= 0
\end{aligned}
$$

Subtracting the second equation from the third gives $a_1 = 0$. The first equation then gives $a_3 = 0$, and the second equation gives $a_2 = 0$. So the vectors are linearly independent.
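An alternative route to the same conclusion uses the matrix-form criterion: row-reduce $A = [\,v_1 \;\, v_2 \;\, v_3\,]$, whose columns are $v_1 = [1, 0, 1, 2]$, $v_2 = [0, 1, 1, 2]$, and $v_3 = [1, 1, 1, 3]$.

$$
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 1 & 1 & 1 \\ 2 & 2 & 3 \end{bmatrix}
\xrightarrow[R_4 - 2R_1]{R_3 - R_1}
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 0 & 1 & 0 \\ 0 & 2 & 1 \end{bmatrix}
\xrightarrow[R_4 - 2R_2]{R_3 - R_2}
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 0 & 0 & -1 \\ 0 & 0 & -1 \end{bmatrix}
\xrightarrow{R_4 - R_3}
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix}
$$

Each of the three columns contains a pivot, so $Ax = 0$ has no free variables and its only solution is $x = 0$, in agreement with the substitution argument.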