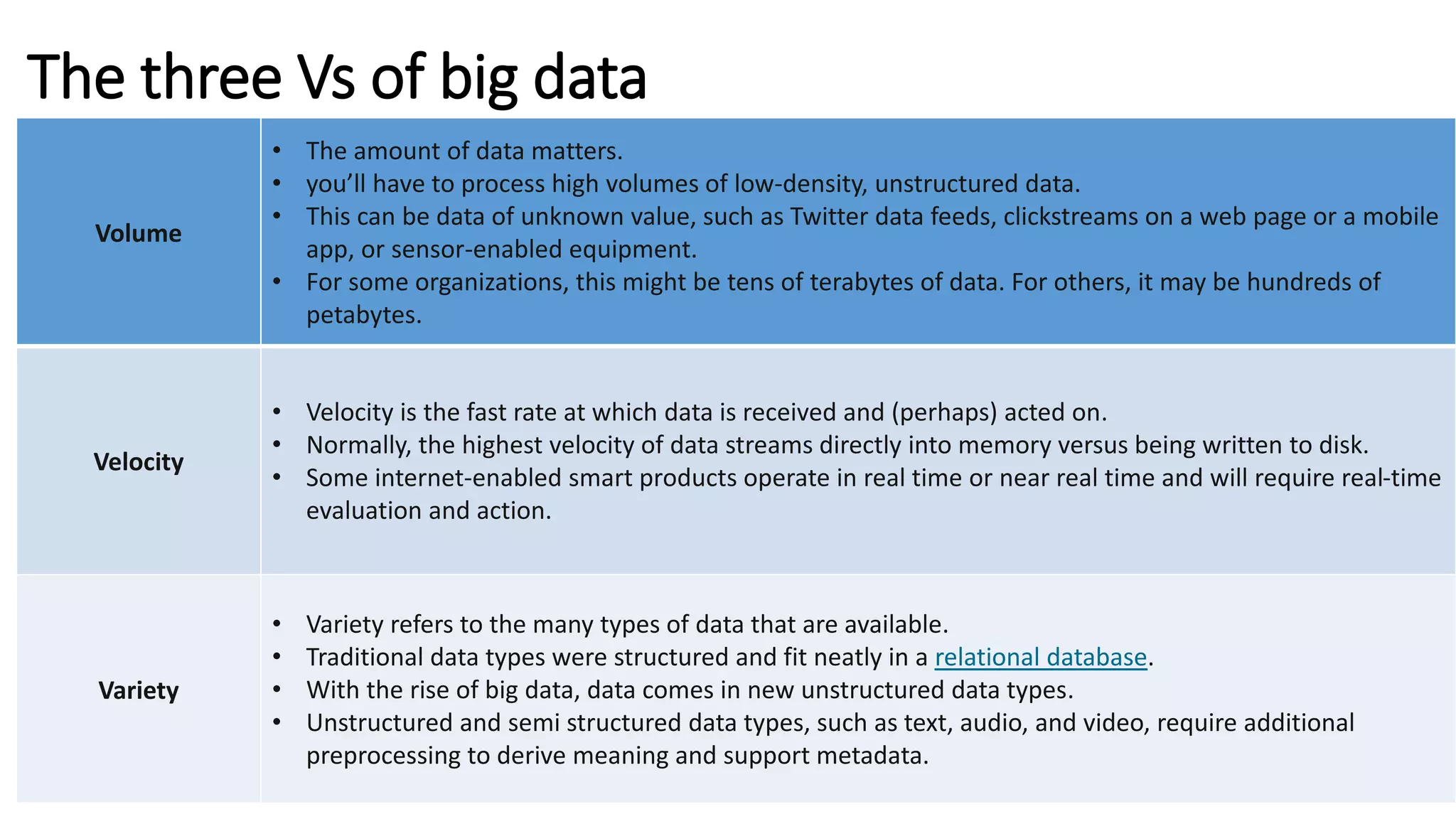

Big data refers to data sets that are too large or complex for traditional data processing applications to handle. It is commonly characterized by three properties: high volume, high velocity (the speed at which data arrives), and high variety (the range of formats, from structured tables to unstructured text and media). Such data is often stored in data lakes and processed with distributed frameworks such as Hadoop and Spark, frequently on cloud platforms. While big data enables new insights and opportunities, it also presents challenges in data management, data integration, and building the skills needed to work with diverse data types and systems.