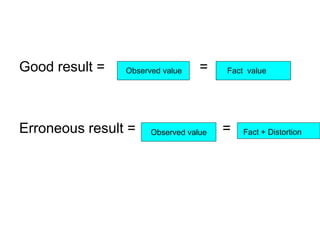

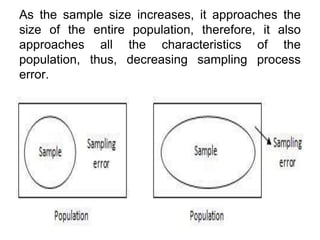

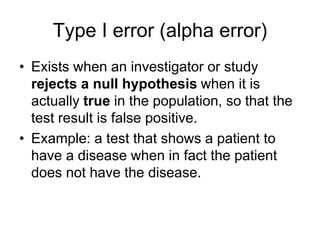

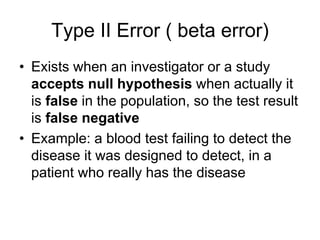

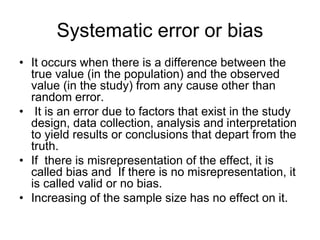

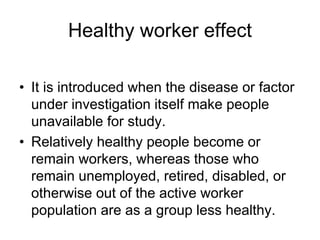

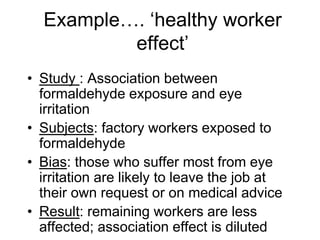

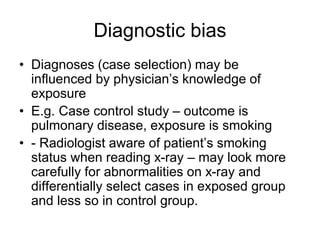

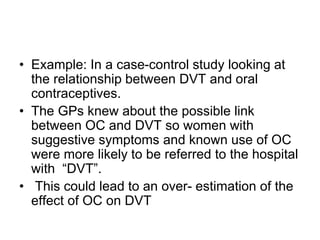

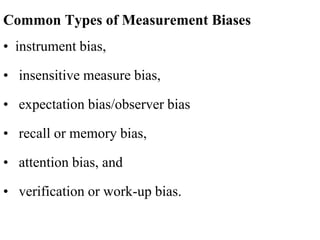

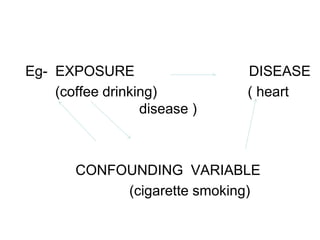

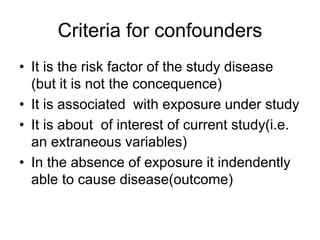

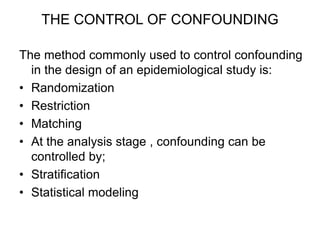

This document discusses the types of error and bias that can occur in epidemiological studies. It defines error as any departure of a study's results from the true state of affairs. There are two basic types of error. Random error arises by chance and makes observed values differ unpredictably from true values. Systematic error, or bias, stems from features of the study design and pushes results consistently away from the truth. The types of bias discussed include selection bias, information bias, and confounding. The document also outlines strategies for controlling bias, such as randomization, restriction, matching, and statistical modeling.
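The difference between random and systematic error can be made concrete with a small simulation. The Python sketch below is illustrative and does not come from the slides. It assumes a hypothetical true mean systolic blood pressure of 120 mmHg. Each measurement is modeled as the true value plus a fixed bias (systematic error) plus Gaussian noise (random error). The function name `estimate_mean` and all numeric values are hypothetical choices made for this example.

```python
import random
import statistics


def estimate_mean(true_value, n, noise_sd, bias, seed):
    """Return the average of n simulated measurements of true_value.

    Each measurement = true_value
                       + bias                       (systematic error: identical every time)
                       + Gaussian noise(0, noise_sd) (random error: varies by chance)
    """
    rng = random.Random(seed)  # seeded generator so runs are reproducible
    measurements = [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(measurements)


# Hypothetical true population mean systolic blood pressure, in mmHg.
TRUE_VALUE = 120.0

for n in (10, 1_000, 100_000):
    unbiased = estimate_mean(TRUE_VALUE, n, noise_sd=15.0, bias=0.0, seed=1)
    biased = estimate_mean(TRUE_VALUE, n, noise_sd=15.0, bias=5.0, seed=1)
    print(f"n={n:>7}: random error only -> {unbiased:6.2f}, with +5 bias -> {biased:6.2f}")
```

As n grows, the unbiased estimate moves closer to 120, while the biased estimate settles near 125. A larger sample shrinks random error but leaves systematic error untouched. That is why bias has to be handled through design and analysis strategies such as those listed above, not by collecting more data.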