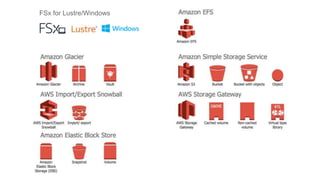

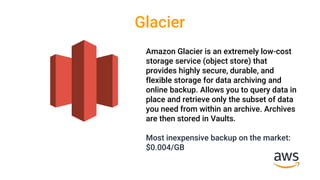

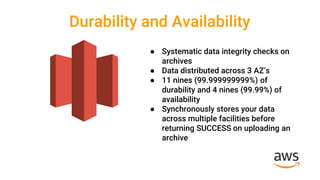

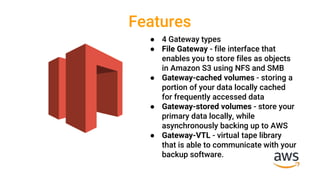

This document summarizes and compares several AWS storage options in terms of key features, durability and availability, scalability and elasticity, security, anti-patterns, and pricing. The services covered are Amazon S3, Amazon S3 Glacier, Amazon EFS, Amazon FSx, Amazon EBS, EC2 instance store volumes, and AWS Storage Gateway. Together they span a range of capabilities, from object storage and low-cost archival storage to block and file storage, each suited to different use cases and data access patterns.